Shape-aware Graph Spectral Learning

Abstract

Spectral Graph Neural Networks (GNNs) are gaining attention for their ability to surpass the limitations of message-passing GNNs. They rely on supervision from downstream tasks to learn spectral filters that capture the graph signal’s useful frequency information. However, some works empirically show that the preferred graph frequency is related to the graph homophily level. This relationship between graph frequency and graphs with homophily/heterophily has not been systematically analyzed and considered in existing spectral GNNs. To mitigate this gap, we conduct theoretical and empirical analyses revealing a positive correlation between low-frequency importance and the homophily ratio, and a negative correlation between high-frequency importance and the homophily ratio. Motivated by this, we propose shape-aware regularization on a Newton Interpolation-based spectral filter that can (i) learn an arbitrary polynomial spectral filter and (ii) incorporate prior knowledge about the desired shape of the corresponding homophily level. Comprehensive experiments demonstrate that NewtonNet can achieve graph spectral filters with desired shapes and superior performance on both homophilous and heterophilous datasets. The code is available at https://github.com/junjie-xu/NewtonNet.

\ul

1 Introduction

Graphs are pervasive in the real world, such as social networks (Wu et al., 2020), biological networks (Fout et al., 2017), and recommender systems (Wu et al., 2022). GNNs (Veličković et al., ; Hamilton et al., 2017) have witnessed great success in various tasks such as node classification (Kipf & Welling, 2017), link prediction (Zhang & Chen, 2018), and graph classification (Zhang et al., 2018). Existing GNNs can be broadly categorized into spatial GNNs and spectral GNNs. Spatial GNNs (Veličković et al., ; Klicpera et al., 2018; Abu-El-Haija et al., 2019) propagate and aggregate information from neighbors. Hence, it learns similar representations for connected neighbors. Spectral GNNs (Bo et al., 2023) learn a filter on the Laplacian matrix. The filter output is the amplitude of each frequency in the graph spectrum, which ultimately determines the learned representations.

In real-world scenarios, different graphs can have different homophily ratios. In homophilous graphs, nodes from the same class tend to be connected; while in heterophilous graphs, nodes from different classes tend to be connected. Several studies (Zhu et al., 2020a; Ma et al., 2022; Xu et al., 2022) have shown that heterophilous graphs pose a challenge for spatial GNNs based on message-passing as they implicitly assume the graph follows homophily assumption. Many works are proposed from the spatial perspective to address the heterophily problem (Zheng et al., 2022). Spectral GNNs (Bo et al., 2023; Levie et al., 2018) with learnable filters have shown great potential in addressing heterophily problems as they can learn spectral filters from the graph, which can alleviate the issue of aggregating noisy information from neighbors of different features/classes.

Recent works (Bo et al., 2021; Zhu et al., 2020a; Liu et al., 2022) have empirically shown that, in general, homophilous graphs contain more low-frequency information in graph spectrum and low-pass filter works well on homophilous graphs; while heterophilous graphs contain more high-frequency information and high-pass filter is preferred. However, they mostly provide empirically analysis without theoretical understanding. In addition, graphs can be in various homophily ratios instead of simply heterophily and homophily, which needs further analysis and understanding. However, there lacks a systematic investigation and theoretical understanding between the homophily ratio of a graph and the frequency that would be beneficial for representation learning of the graph.

To fill the gap, we systematically analyze the impact of amplitudes of each frequency on graphs with different homophily ratios. We observe that low-frequency importance is positively correlated with the homophily ratio, while high-frequency importance is negatively correlated with the homophily ratio. Meanwhile, middle-frequency importance increases and then decreases as the homophily ratio increases. We also provide theoretical analysis to support our observations.

These observations suggest that an effective spectral GNN should be able to learn filters that can adapt to the homophily ratio of the graph, i.e., encouraging more important frequencies and discouraging frequencies with lower importance based on the graph ratio. However, this is a non-trivial task and existing spectral GNNs cannot satisfy the goal as they solely learn on downstream tasks and are not aware of the behaviors of the learned filter. As a result, existing spectral GNNs have the following problems: (i) they cannot adapt to varying homophily ratios, resulting in their inability to encourage and discourage different frequencies; and (ii) when only weak supervision is provided, there are not sufficient labels for the models to learn an effective filter, which degrades the performance.

To address the challenges, we propose a novel framework NewtonNet, which can learn filters that can encourage important frequencies while discourage non-important frequencies based on homophily ratio. Specifically, NewtonNet introduces a set of learnable points of filters supervised by label information, which gives the basic shape of the filter. It then adopts Newton Interpolation (Hildebrand, 1987) on those interpolation points to get the complete filter. As the points are learnable, NewtonNet can approximate arbitrary filters. As those interpolation points determine the shape of the filter, to adapt the filter to the homophily ratio, we design a novel shape-aware regularization on the points to encourage beneficial frequencies and discourage harmful frequencies to achieve an ideal filter shape. Experiments show that NewtonNet outperforms spatial and spectral GNNs on various datasets.

Our main contributions are: (1) We are the first to establish a well-defined relationship between graph frequencies and homophily ratios. We empirically and theoretically show that the more homophilous the graph is, the more beneficial the low-frequency is; while the more heterophilous the graph is, the more beneficial the high-frequency is. (2) We propose a novel framework NewtonNet using Newton Interpolation with shape-aware regularization that can learn better filter encourages beneficial frequency and discourages harmful frequency, resulting in better node representations. (3) Extensive experiments demonstrate the effectiveness of NewtonNet in various settings.

2 Preliminaries

Notations and Definitions. Let be an attributed undirected graph, where is the set of nodes, and is the set of edges. is the node feature matrix, where is the node features of node and is the feature dimension. is the adjacency matrix, where if ; otherwise = 0. is the training set with known class labels , where is the unlabeled node sets. We use to denote the feature transformation function parameterized by and to denote the filter function. is a diagonal matrix with . The normalized graph Laplacian matrix is given by . We use to denote a node pair and to denote a graph signal, which can be considered as a feature vector.

Homophily Ratio measures the ratio of edges connecting nodes with the same label to all the edges, i.e., . Graphs with high homophily and low heterophily have homophily ratios near 1, while those with low homophily and high heterophily have ratios near 0.

Graph Spectral Learning. For an undirected graph, the Laplacian matrix is a positive semidefinite matrix. Its eigendecomposition is , where is the eigenvector matrix and is the diagonal eigenvalue matrix. Given a graph signal , its graph Fourier transform is , and inverse transform is . The graph filtering is given as

| (1) |

where is the spectral filter to be learned. However, eigendecomposition is computationally expensive with cubic time complexity. Thus, a polynomial function is usually adopted to approximate filters to avoid eigendecomposition, i.e., Eq. 1 is reformulated as a polynomial of as

| (2) |

where is polynomial function and are the coefficients of the polynomial.

3 Impact of Frequencies on Graphs with Various Homophily Ratios

In this section, we first provide a theoretical analysis of the influence of different frequencies on graphs with various homophily ratios. We then perform preliminary experiments, which yield consistent results with our theoretical proof. Specifically, we find that low-frequency is beneficial while high-frequency is harmful to homophilous graphs; the opposite is true for heterophilous graphs. Our findings pave us the way for learning better representations based on the homophily ratio.

3.1 Theoretical Analysis of Filter Behaviors

Several studies (Bo et al., 2021; Zhu et al., 2020a) have empirically shown that low-frequency information plays a crucial role in homophilous graphs, while high-frequency information is more important for heterophilous graphs. However, none of them provide theoretical evidence to support this observation. To fill this gap, we theoretically analyze the relationship between homophily ratio and graph frequency. Specifically, we examine two graph filters that exhibit distinct behaviors in different frequency regions and explore their impacts on graphs with varying homophily ratios.

Lemma 3.1.

For a graph with nodes, classes, and nodes for each class, if we randomly connect nodes to form the edge set , the expected homophily ratio is .

The proof is in Appendix A.1. Lemma 3.1 reveals that if the edge set is constructed by random sampling, the expected homophily ratio of the graph is . Hence, for a graph , if , it is more prone to generate homophilous edges than in the random case. If , heterophilous edges are more likely to form.

Theorem 3.2.

Let be an undirected graph. are eigenvalues of its Laplacian matrix . Let and be two spectral filters satisfying the following two conditions: (1) for ; and for , where ; and (2) They have the same norm of output values . For a graph signal , and are the corresponding representations after filters and . Let be the difference between the total distance of connected nodes got by and , where denotes the -th element of . Then we have .

Note that in the theorem, we assume and have the same norm to avoid trivial solutions. The proof of the theorem and the discussion of this assumption is in Appendix A.2. Theorem 3.2 reveals that if has higher amplitude in the high-frequency region and lower amplitude in the low-frequency region compared to , it will result in less similarity in representations of connected nodes. In contrast, increases the representation similarity of connected nodes. As most edges in heterophilous graphs are heterophilous edges, is preferred to increase distances between heterophilously connected nodes. In contrast, homophilous graphs prefer to decrease distances between homophilously connected nodes. Next, we theoretically prove the claim that low-frequency is beneficial for the prediction of homophilous graphs, while high-frequency is beneficial for heterophilous graphs.

Theorem 3.3.

Let be a balanced undirected graph with nodes, classes, and nodes for each class. is the set of possible pairs of nodes from the same class. is the set of possible pairs of nodes from different classes. and are two filters same as Theorem 3.2. Given an arbitrary graph signal , let be the total intra-class distance, be the total inter-class distance, and be the average intra-class distance while be the average inter-class distance. is the difference between average inter-distance and intra-class distance. , , , , and are corresponding values defined similarly on . If , we have: (1) when , ; and (2) when , .

The proof is in Appendix A.3. In Theorem 3.3, shows the discriminative ability of the learned representation, where a large indicates that representations of intra-class nodes are similar, while representations of inter-class nodes are dissimilar. As and are defined as described in Theorem 3.2, we can guarantee . Hence, homophilous graphs with favor ; while heterophilous graphs with favor . This theorem shows that the transition phase of a balanced graph is , where the transition phase is the point whether lower or higher frequencies are more beneficial changes.

Theorem 3.2 and Theorem 3.3 together guide us to the desired filter shape. When , the filter should involve more low-frequency and less high-frequency. When , the filter need to decrease the low-frequency and increase the high-frequency to be more discriminative.

3.2 Empirical Analysis of Varying Homophily Ratios

With the theoretical understanding, in this subsection, we further empirically analyze and verify the influence of high- and low- frequencies on node classification performance on graphs with various homophily ratios, which help us to design better GNN for node classification. As high-frequency and low-frequency are relative concepts, there is no clear division between them. Therefore, to make the granularity of our experiments better and to make our candidate filters more flexible, we divide the graph spectrum into three parts: low-frequency , middle-frequency , and high-frequency . We perform experiments on synthetic datasets generated by contextual stochastic block model (CSBM) (Deshpande et al., 2018), which is a popular model that allows us to create synthetic attributed graphs with controllable homophily ratios (Ma et al., 2022; Chien et al., 2021). A detailed description of CSBM is in Appendix D.1.

To analyze which parts of frequencies in the graph spectrum are beneficial or harmful for graphs with different homophily ratios, we conduct the following experiments. We first generate synthetic graphs with different homophily ratios as . Then for each graph, we conduct eigendecomposition as and divide the eigenvalues into three parts: low-frequency , middle-frequency , and high-frequency , because three-part provides more flexibility of the filter. As shown in Fig. LABEL:fig:mag_imp(a), we vary the output values of the filter, i.e., amplitudes of , and among respectively, which leads to combinations of output values of the filter. Then we get transformed graph Laplacian . For each filter, we use as a convolutional matrix to learn node representation as , where is the feature transformation function implemented by an MLP. In summary, the final node representation is given by . By varying the amplitudes of each part of frequency, we vary how much a certain frequency is included in the representations. For example, the larger the value of is, the more low-frequency information is included in . We then add a Softmax layer on top of to predict the label of each node. For each synthetic graph, we split the nodes into 2.5%/2.5%/95% for train/validation/test. For each filter, we conduct semi-supervised node classification on each synthetic graph and record the classification performance.

Frequency Importance. With the above experiments, to understand which filter works best for which homophily ratio, for each homophily ratio, we first select filters that give top 5% performance among the filters. Let be the set of the best filters for homophily ratio , where means the -th best filter for and is the number of best filters. Then, importance scores of low, middle, and high frequency are given as

| (3) |

The importance score of a certain frequency shows how much that frequency should be involved in the representation to achieve the best performance. The findings in Fig. LABEL:fig:mag_imp(b) reveal two important observations: (i) as the homophily ratio increases, there is a notable increase in the importance of low-frequency, a peak and subsequent decline in the importance of middle-frequency, and a decrease in the importance of high-frequency; (ii) the transition phase occurs around the homophily ratio of as there are two distinct classes, resulting in a significant shift in the importance of frequencies. The frequency importance of graphs generated with other parameters is in Appendix E.3. These observations guide us in designing the spectral filter. For high-homophily graphs, we want to preserve more low-frequency while removing high frequencies. For low-homophily graphs, more high frequencies and fewer low frequencies are desired. For graphs with homophily ratios near the transition phase, we aim the model to learn adaptively.

4 The Proposed Framework NewtonNet

The preceding theoretical and empirical analysis show that the decision to include more or less of a specific frequency is contingent upon the homophily ratio. Hence, it is desirable for the model to learn this ratio and encourage or discourage low, middle, or high-frequency accordingly. However, existing spectral methods either use predefined filters or let the model freely learn a filter solely based on labels, which lacks an efficient mechanism to promote or discourage low, middle, or high-frequency based on homophily ratio, especially when the label is sparse. Therefore, the regularization of spectral filter values poses a significant challenge for existing methods. To address the challenge, we propose a novel framework NewtonNet, which can regularize spectral filter values and encourage or discourage different frequencies according to the homophily ratio. The basic idea of NewtonNet is to introduce some points of the graph filter, where are fixed and are learnable. Fig. 2 shows the case when . These points give the basic shape of the filter, and we adopt Newton Interpolation to approximate the filter function based on these points. As those points are learnable, we can approximate any filters. To incorporate the prior knowledge, we propose a novel shape-aware regularization to regularize those learnable points to adapt to the homophily ratio of the graph. The filter is then used to learn node representation and optimized for node classification. Next, we introduce the details.

4.1 Newton Interpolation and NewtonNet

In order to learn flexible filters and incorporate prior knowledge in Section 3 in the filter, i.e., encouraging or discouraging different frequencies based on the homophily ratio, we first propose to adopt a set of learnable points of the filter. Interpolated points are fixed and distributed equally among low, middle, and high-frequency depending on their values. The corresponding values are learnable and updated in each epoch of training. These learnable points gives the basic shape of a filter. Then we can use interpolation method to approximate a filter function by passing these points such that , where . This gives us two advantages: (i) As determines the basic shape of a filter and is learnable, we can learn arbitrary filter by learning that minimizes the node classification loss; and (ii) As the filter is determined by , it allows us to add regularization on to encourage beneficial and discourage harmful frequencies based on homophily ratio.

Specifically, with these points, we adopt Newton Interpolation (Hildebrand, 1987) to learn the filter, which is a widely-used method for approximating a function by fitting a polynomial on a set of given points. Given points and their values of an unknown function , where are pairwise distinct, can be interpolated based on Newton Interpolation as follows.

Definition 4.1 (Divided Differences).

The divided differences defined on are given recursively as

| (4) |

Lemma 4.2 (Newton Interpolation).

can be interpolated by Newton interpolation as:

| (5) |

where are Newton polynomial basis and are coefficients.

Eq. 5 satisfies and is the power of the polynomial. As mentioned in Eq. 2, we directly apply the spectral filter on Laplacian to get the final representations. Then Eq. 5 is reformulated as , where is the identity matrix.

Following (Chien et al., 2021), to increase the expressive power, the node features are firstly transformed by a transformation layer , then a convolutional matrix learned by NewtonNet is applied to transformed features, which is shown in Fig. 2. Mathematically, NewtonNet can be formulated as:

| (6) |

where is a feature transformation function, and we use a two-layer MLP in this paper. can be any points . We use the equal-spaced points, i.e., . controls different frequencies depending on its value. are learnable filter output values and are randomly initialized. By learning , we are not only able to learn arbitrary filters, but can also apply regularization on ’s to adapt the filter based on homophily ratio, which will be discussed in Section 4.2. With the node representation of node , ’s label distribution probability is predicted as .

4.2 Shape-aware Regularization

As determines the shape of filter , we can control the filter shape by adding constraints on learned function values. As shown in Fig. 2, we slice into three parts

| (7) |

which represent the amplitude of low, middle, and high-frequency, respectively. In this paper, we set so that we have six learnable points and two for each frequency. According to the analysis results in Section 3, as the homophily ratio increases, we design the following regularizations. (i) For low-frequency, the amplitude should decrease, and we discourage low-frequency before the transition phase and encourage it after the transition phase. Thus, we add a loss term as to regularize the amplitudes of low-frequency. When the homophily ratio is smaller than the transition phase , the low-frequency is harmful; therefore, the loss term has a positive coefficient. In contrast, when the homophily ratio is larger than the transition phase, the low-frequency is beneficial, and we give a positive coefficient for the loss term. (ii) For high-frequency, the amplitude should decrease, and we encourage the high-frequency before the transition phase and discourage it after the transition phase. Similarly, the regularization for high-frequency is . (iii) For middle-frequency, we observe that it has the same trend as the low-frequency before the transition phase and has the same trend as the high-frequency after the transition phase in Fig. LABEL:fig:mag_imp (b). Therefore, we have the regularization . These three regularization work together to determine the shape based on homophily ratio . Then the shape-aware regularization can be expressed as

| (8) |

where are scalars to control contribution of the regularizers, respectively. is the learned homophily ratio, calculated and updated according to the labels learned in every epoch. The final objective function of NewtonNet is

| (9) |

where is classification loss on labeled nodes and denotes cross entropy loss. The training algorithm of NewtonNet is in Appendix B.

Compared with other approximation and interpolation methods This Newton Interpolation method has its advantages compared with approximation methods, e.g., ChebNet (Defferrard et al., 2016), GPRGNN (Chien et al., 2021), and BernNet (He et al., 2021b). Both interpolation and approximation are common methods to approximate a polynomial function. However, the interpolation function passes all the given points accurately, while approximation methods minimize the error between the function and given points. We further discuss the difference between interpolation and approximation methods in Appendix C. This difference makes us be able to readily apply shape-aware regularization on learned . However, approximation methods do not pass given points accurately, so we cannot make points learnable to approximate arbitrary functions and apply regularization.

Compared to other interpolation methods like ChebNetII (He et al., 2022), Newton interpolation offers greater flexibility in choosing interpolated points. In Eq. 6, values can be freely selected from the range [0, 2], allowing adaptation to specific graph properties. In contrast, ChebNetII uses fixed Chebyshev points for input values, limiting precision in narrow regions and requiring increased complexity (larger K) to address this limitation. Additionally, ChebNetII can be seen as a special case of NewtonNet when Chebyshev points are used as values (He et al., 2022).

Complexity Analysis NewtonNet exhibits a time complexity of and a space complexity of , where represents the number of edges. Notably, the complexity scales linearly with . A detailed analysis is provided in Appendix B.1.

5 Related Work

Spatial GNNs. Existing GNNs can be categorized into spatial and spectral-GNNs. Spatial GNNs (Kipf & Welling, 2016; Veličković et al., ; Klicpera et al., 2018; Hamilton et al., 2017) adopt message-passing mechanism, which updates a node’s representation by aggregating the message from its neighbors. For example, GCN (Kipf & Welling, 2017) uses a weighted average of neighbors’ representations as the aggregate function. APPNP (Klicpera et al., 2018) first transforms features and then propagates information via personalized PageRank. However, The message-passing GNNs (Gilmer et al., 2017) rely on the homophily and thus fail on heterophilous graphs as they smooth over nodes with different labels and make the representations less discriminative. Many works (Zhu et al., 2020a; Xu et al., 2022; Abu-El-Haija et al., 2019; He et al., 2021a; Wang et al., 2021; Dai et al., 2022; Zhu et al., 2021a) design network structures from a spatial perspective to address this issue. (Zhu et al., 2020a) aggregates information for ego and neighbor representations separately instead of mixing them together. LW-GCN (Dai et al., 2022) proposes the label-wise aggregation strategy to preserve information from heterophilous neighbors. Some other works (Veličković et al., 2019; Zhu et al., 2020b; Xu et al., 2021; Xiao et al., ) also focus on self-supervised learning on graphs.

Spectral GNNs. Spectral GNNs (Bo et al., 2023, 2021; Li et al., 2021; Zhu et al., 2021b) learn a polynomial function of graph Laplacian served as the convolutional matrix. Recently, more works consider the relationship between heterophily and graph frequency. To name a few, Bo et al. (2021) maintains a claim that besides low-frequency signal, the high-frequency signal is also useful for heterophilous graphs. Li et al. (2021) states that high-frequency components contain important information for heterophilous graphs. Zhu et al. (2020a) claims high frequencies contain more information about label distributions than low frequencies. Therefore, learnable spectral GNNs (He et al., 2021b, 2022; Chien et al., 2021; Levie et al., 2018) that can approximate arbitrary filters perform inherently well on heterophily. The methods used to approximate the polynomial function vary among them, such as the Chebyshev polynomial for ChebNet (Defferrard et al., 2016), Bernstein polynomial for BernNet (He et al., 2021b), Jacobi polynomial for JacobiConv (Wang & Zhang, 2022), and Chebyshev interpolation for ChebNetII (He et al., 2022). Other spectral methods design non-polynomial filters or transform the basis (Bo et al., 2023).

6 Experiments

In this section, we conduct experiments to evaluate the effectiveness of the proposed NewtonNet and address the following research questions: RQ1 How effective is NewtonNet on datasets of various domains and sizes with varying degrees of homophily and heterophily? RQ2 How does NewtonNet compare to other baselines under weak supervision? RQ3 To what extent does shape-aware regularization contribute to the performance of NewtonNet? RQ4 Is the learned filter shape consistent with our analysis on homophilous and heterophilous graphs?

6.1 Experimental Setup

We use node classification to evaluate the performance. Here we briefly introduce the dataset, baselines, and settings in the experiments. We give details in Appendix D.

Datasets. To evaluate NewtonNet on graphs with various homophily ratios, we adopt three homophilous datasets (Sen et al., 2008): Cora, Citeseer, Pubmed, and six heterophilous datasets (Pei et al., 2020; Rozemberczki et al., 2021; Traud et al., 2012; Lim et al., 2021), Chameleon, Squirrel, Crocodile, Texas, Cornell, Penn94, Twitch-gamer, and Genius. Details are in Appendix D.2.

Baselines. We compare NewtonNet with representative baselines, including (i) non-topology method: MLP; (ii) spatial methods: GCN (Kipf & Welling, 2017), Mixhop (Abu-El-Haija et al., 2019), APPNP (Klicpera et al., 2018), GloGNN++ (Li et al., 2022); and (iii) spectral methods: ChebNet (Defferrard et al., 2016), GPRGNN (Chien et al., 2021), BernNet (He et al., 2021b), ChebNetII (He et al., 2022), JacobiConv (Wang & Zhang, 2022). Details of methods are in Appendix D.3.

Settings. We adopt the commonly-used split of 60%/20%/20% for train/validation/test sets. For a fair comparison, for each method, we select the best configuration of hyperparameters using the validation set and report the mean accuracy and variance of 10 random splits on the test.

| Cora | Cite. | Pubm. | Cham. | Squi. | Croc. | Texas | Corn. | Penn94 | Gamer | Genius | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| MLP | 73.28±1.9 | 70.95±2.1 | 86.08±0.7 | 48.86±2.3 | 32.27±1.0 | 65.37±1.0 | 75.79±8.4 | 75.79±8.4 | 74.18±0.3 | 65.24±0.2 | 86.80±0.1 |

| GCN | 87.86±2.1 | 75.47±1.0 | 87.00±0.6 | 66.12±3.7 | 54.65±2.7 | 72.95±0.6 | 54.21±10.2 | 54.21±6.5 | 83.23±0.2 | 66.58±0.2 | 80.28±0.0 |

| Mixhop | 87.81±1.7 | 74.32±1.3 | 88.50±0.7 | 64.39±0.6 | 49.95±1.9 | \ul73.63±0.8 | 70.00±8.7 | 73.16±2.6 | 84.09±0.2 | 67.27±0.2 | 88.39±0.4 |

| APPNP | 89.04±1.5 | 77.04±1.4 | 88.84±0.4 | 56.60±1.7 | 37.00±1.5 | 67.42±0.9 | 76.84±4.7 | 80.53±4.7 | 75.91±0.2 | 66.76±0.2 | 87.19±0.2 |

| ChebNet | 88.32±2.0 | 75.47±1.0 | \ul89.62±0.3 | 62.94±2.2 | 43.07±0.7 | 72.01±1.0 | 81.05±3.9 | 82.63±5.7 | 82.63±0.3 | 67.57±0.2 | 86.69±0.2 |

| GPRGNN | 89.20±1.6 | 77.48±1.9 | 89.50±0.4 | 71.15±2.1 | 55.18±1.3 | 69.68±1.0 | \ul86.37±1.1 | 83.16±4.9 | 84.08±0.2 | 64.44±0.3 | 87.41±0.1 |

| BernNet | 89.76±1.6 | \ul77.49±1.4 | 89.47±0.4 | 72.19±1.6 | 55.43±1.1 | 69.70±0.9 | 85.26±6.4 | \ul84.21±8.6 | 83.04±0.1 | 62.90±0.2 | 86.52±0.1 |

| ChebNetII | 88.51±1.5 | 75.83±1.3 | 89.51±0.6 | 69.91±2.3 | 52.83±0.8 | 67.86±1.6 | 84.74±3.1 | 81.58±8.0 | 83.52±0.2 | 62.53±0.2 | 86.49±0.1 |

| JacobiConv | 88.98±0.7 | 75.76±1.9 | 89.55±0.5 | 73.87±1.6 | \ul57.56±1.8 | 67.69±1.1 | 84.17±6.8 | 75.56±6.1 | 83.28±0.1 | \ul67.68±0.2 | 88.03±0.4 |

| GloGNN++ | 88.11±1.8 | 74.68±1.3 | 89.12±0.2 | \ul73.94±1.8 | 56.58±1.7 | 69.25±1.1 | 82.22±4.5 | 81.11±4.4 | 84.94±0.2 | 67.50±0.3 | 89.31±0.1 |

| NewtonNet | \ul89.39±1.4 | 77.87±1.9 | 89.68±0.5 | 74.47±1.5 | 61.58±0.8 | 75.70±0.4 | 87.11±3.8 | 86.58±5.3 | \ul84.56±0.1 | 67.92±0.3 | \ul88.20±0.1 |

6.2 Node Classification Performance on Heterophily and Homophily Graphs

Table 1 shows the results on node classification, where boldface denotes the best results and underline denotes the second-best results. We observe that learnable spectral GNNs outperform other baselines since spectral methods can learn beneficial frequencies for prediction under supervision, while spatial methods and predetermined spectral methods only obtain information from certain frequencies. NewtonNet achieves state-of-the-art performance on eight of nine datasets with both homophily and heterophily, and it achieves the second-best result on Cora. This is because NewtonNet efficiently learns the filter shape through Newton interpolation and shape-aware regularization.

6.3 Performance with Weak Supervision

As we mentioned before, learning filters solely based on the downstream tasks may lead to suboptimal performance, especially when there are rare labels. Thus, this subsection delves into the impact of the training ratio on the performance of various GNNs. We keep the validation and test set ratios fixed at 20%, and vary the training set ratio from to . We select several best-performing baselines and plot their performance on the Chameleon and Squirrel datasets in Fig. LABEL:fig:vary_ratio. From the figure, we observe: (1) When the training ratio is large, spectral methods perform well because they have sufficient labels to learn a filter shape. Conversely, when the training ratio is small, some spectral methods do not perform as well as GCN as there are not enough labels for the models to learn the filter; (2) NewtonNet consistently outperforms all baselines. When the training ratio is large, NewtonNet can filter out harmful frequencies while encouraging beneficial ones, leading to representations of higher quality. When the training ratio is small, the shape-aware regularization of NewtonNet incorporates prior knowledge to provide guidance in learning better filters.

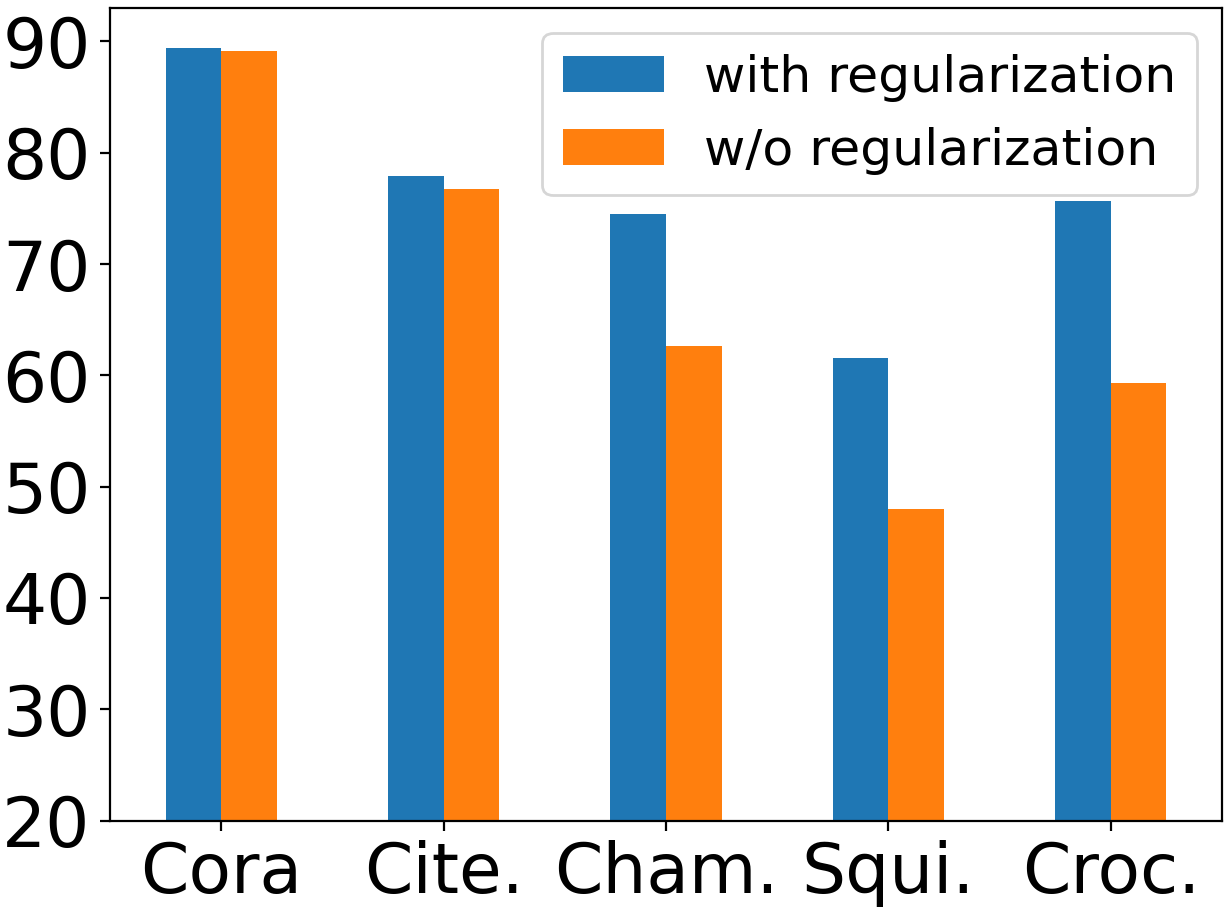

6.4 Ablation Study

Next, we study the effect of shape-aware regularization on node classification performance. We show the best result of NewtonNet with and without shape-aware regularization, where we use the same search space as in Section 6.2. Fig. 4 gives the results on five datasets. From the figure, we can observe that the regularization contributes more to the performance on heterophilous datasets (Chameleon, Squirrel, Crocodile) than on homophilous datasets (Cora, Citeseer). The reason is as follows. Theorem 3.3 and Fig. LABEL:fig:mag_imp(b) show that the importance of frequencies changes significantly near the transition phase, . Cora and Citeseer have homophily ratios of 0.81 and 0.74, while they have 7 and 6 classes, respectively. Since Cora and Citeseer are highly homophilous datasets whose homophily ratios are far from the transition faces, almost only low frequencies are beneficial, and thus the filter shapes are easier to learn. By contrast, the homophily ratios of Chameleon, Squirrel, and Crocodile are 0.24, 0.22, and 0.25, respectively, while they have five classes. Their homophily ratios are around the transition phase; therefore, the model relies more on shape-aware regularization to guide the learning process. We also conduct the hyperparameter analysis for , , , and in Appendix E.1.

6.5 Analysis of Learned Filters

Here we present the learned filters of NewtonNet on Citeseer and Chameleon and compare them with those learned by BernNet and ChebNetII. In Fig. LABEL:fig:learned_on_citeseer_chameleon, we plot the average filters by calculating the average temperatures of 10 splits. On the homophilous graph Citeseer, NewtonNet encourages low-frequency and filters out middle and high-frequency, which is beneficial for the representations of homophilous graphs. In contrast, ChebNetII and BernNet do not incorporate any shape-aware regularization, and their learning processes include middle and high-frequency, harming the representations of homophilous graphs. On the heterophilous dataset Penn94, the filter learned by NewtonNet contains more high-frequency and less-frequency, while harmful frequencies are included in the filters learned by ChebNetII and BernNet. These results reveal that NewtonNet, with its shape-aware regularization, can learn a filter shape with beneficial frequencies while filtering out harmful frequencies. More results of the learned filters can be found in Appendix E.4. We also present the learned homophily ratio in Appendix E.2.

7 Conclusion and Future Work

In this paper, we propose a novel framework NewtonNet for learning spectral filters GNNs. NewtonNet incorporates prior knowledge about the desired shape of spectral filters based on the homophily ratio of the dataset. The empirical and theoretical analysis reveals that low-frequency is positively correlated with the homophily ratio, while high-frequency is negatively correlated. NewtonNet utilizes Newton Interpolation with shape-aware regularization to learn arbitrary polynomial spectral filters that adapt to different homophily levels. Experimental results on real-world datasets demonstrate the effectiveness of the proposed framework. In the paper, we propose one possible regularization for filter and we leave the exploration of other regularizers as future work.

Impact Statements

This paper presents work whose goal is to advance the field of Machine Learning. There are many potential societal consequences of our work, none of which we feel must be specifically highlighted here.

References

- Abu-El-Haija et al. (2019) Abu-El-Haija, S., Perozzi, B., Kapoor, A., Alipourfard, N., Lerman, K., Harutyunyan, H., Ver Steeg, G., and Galstyan, A. Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In ICML, pp. 21–29. PMLR, 2019.

- Blakely et al. (2021) Blakely, D., Lanchantin, J., and Qi, Y. Time and space complexity of graph convolutional networks. Accessed on: Dec, 31, 2021.

- Bo et al. (2021) Bo, D., Wang, X., Shi, C., and Shen, H. Beyond low-frequency information in graph convolutional networks. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, pp. 3950–3957, 2021.

- Bo et al. (2023) Bo, D., Wang, X., Liu, Y., Fang, Y., Li, Y., and Shi, C. A survey on spectral graph neural networks. arXiv preprint arXiv:2302.05631, 2023.

- Chien et al. (2021) Chien, E., Peng, J., Li, P., and Milenkovic, O. Adaptive universal generalized pagerank graph neural network. In ICLR, 2021.

- Dai et al. (2022) Dai, E., Zhou, S., Guo, Z., and Wang, S. Label-wise graph convolutional network for heterophilic graphs. In Learning on Graphs Conference, 2022.

- Defferrard et al. (2016) Defferrard, M., Bresson, X., and Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. NeurIPS, 29, 2016.

- Deshpande et al. (2018) Deshpande, Y., Sen, S., Montanari, A., and Mossel, E. Contextual stochastic block models. Advances in Neural Information Processing Systems, 31, 2018.

- Fout et al. (2017) Fout, A., Byrd, J., Shariat, B., and Ben-Hur, A. Protein interface prediction using graph convolutional networks. Advances in neural information processing systems, 30, 2017.

- Gilmer et al. (2017) Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O., and Dahl, G. E. Neural message passing for quantum chemistry. In International conference on machine learning, pp. 1263–1272. PMLR, 2017.

- Hamilton et al. (2017) Hamilton, W., Ying, Z., and Leskovec, J. Inductive representation learning on large graphs. Advances in neural information processing systems, 30, 2017.

- He et al. (2021a) He, D., Liang, C., Liu, H., Wen, M., Jiao, P., and Feng, Z. Block modeling-guided graph convolutional neural networks. arXiv preprint arXiv:2112.13507, 2021a.

- He et al. (2021b) He, M., Wei, Z., Xu, H., et al. Bernnet: Learning arbitrary graph spectral filters via bernstein approximation. Advances in Neural Information Processing Systems, 34:14239–14251, 2021b.

- He et al. (2022) He, M., Wei, Z., and Wen, J.-R. Convolutional neural networks on graphs with chebyshev approximation, revisited. In NeurIPS, 2022.

- Hildebrand (1987) Hildebrand, F. B. Introduction to numerical analysis. Courier Corporation, 1987.

- Kipf & Welling (2016) Kipf, T. N. and Welling, M. Variational graph auto-encoders. arXiv preprint arXiv:1611.07308, 2016.

- Kipf & Welling (2017) Kipf, T. N. and Welling, M. Semi-supervised classification with graph convolutional networks. In ICLR, 2017.

- Klicpera et al. (2018) Klicpera, J., Bojchevski, A., and Günnemann, S. Predict then propagate: Graph neural networks meet personalized pagerank. arXiv preprint arXiv:1810.05997, 2018.

- Levie et al. (2018) Levie, R., Monti, F., Bresson, X., and Bronstein, M. M. Cayleynets: Graph convolutional neural networks with complex rational spectral filters. IEEE Transactions on Signal Processing, 67(1):97–109, 2018.

- Li et al. (2021) Li, S., Kim, D., and Wang, Q. Beyond low-pass filters: Adaptive feature propagation on graphs. In Machine Learning and Knowledge Discovery in Databases. Research Track: European Conference, ECML PKDD 2021, Bilbao, Spain, September 13–17, 2021, Proceedings, Part II 21, pp. 450–465. Springer, 2021.

- Li et al. (2022) Li, X., Zhu, R., Cheng, Y., Shan, C., Luo, S., Li, D., and Qian, W. Finding global homophily in graph neural networks when meeting heterophily. In International Conference on Machine Learning, pp. 13242–13256. PMLR, 2022.

- Lim & Benson (2021) Lim, D. and Benson, A. R. Expertise and dynamics within crowdsourced musical knowledge curation: A case study of the genius platform. In Proceedings of the International AAAI Conference on Web and Social Media, volume 15, pp. 373–384, 2021.

- Lim et al. (2021) Lim, D., Hohne, F., Li, X., Huang, S. L., Gupta, V., Bhalerao, O., and Lim, S. N. Large scale learning on non-homophilous graphs: New benchmarks and strong simple methods. Advances in Neural Information Processing Systems, 34:20887–20902, 2021.

- Liu et al. (2022) Liu, N., Wang, X., Bo, D., Shi, C., and Pei, J. Revisiting graph contrastive learning from the perspective of graph spectrum. In Oh, A. H., Agarwal, A., Belgrave, D., and Cho, K. (eds.), Advances in Neural Information Processing Systems, 2022. URL https://openreview.net/forum?id=L0U7TUWRt_X.

- Ma et al. (2022) Ma, Y., Liu, X., Shah, N., and Tang, J. Is homophily a necessity for graph neural networks? In ICLR, 2022.

- Pei et al. (2020) Pei, H., Wei, B., Chang, K. C.-C., Lei, Y., and Yang, B. Geom-gcn: Geometric graph convolutional networks. In ICLR, 2020.

- Rozemberczki & Sarkar (2021) Rozemberczki, B. and Sarkar, R. Twitch gamers: a dataset for evaluating proximity preserving and structural role-based node embeddings. arXiv preprint arXiv:2101.03091, 2021.

- Rozemberczki et al. (2021) Rozemberczki, B., Allen, C., and Sarkar, R. Multi-Scale Attributed Node Embedding. Journal of Complex Networks, 9(2), 2021.

- Sen et al. (2008) Sen, P., Namata, G., Bilgic, M., Getoor, L., Galligher, B., and Eliassi-Rad, T. Collective classification in network data. AI magazine, 29(3):93–93, 2008.

- Traud et al. (2012) Traud, A. L., Mucha, P. J., and Porter, M. A. Social structure of facebook networks. Physica A: Statistical Mechanics and its Applications, 391(16):4165–4180, 2012.

- (31) Veličković, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., and Bengio, Y. Graph Attention Networks. International Conference on Learning Representations. accepted as poster.

- Veličković et al. (2019) Veličković, P., Fedus, W., Hamilton, W. L., Liò, P., Bengio, Y., and Hjelm, R. D. Deep Graph Infomax. In International Conference on Learning Representations, 2019.

- Wang et al. (2021) Wang, T., Wang, R., Jin, D., He, D., and Huang, Y. Powerful graph convolutioal networks with adaptive propagation mechanism for homophily and heterophily. arXiv preprint arXiv:2112.13562, 2021.

- Wang & Zhang (2022) Wang, X. and Zhang, M. How powerful are spectral graph neural networks. In International Conference on Machine Learning, pp. 23341–23362. PMLR, 2022.

- Wu et al. (2022) Wu, S., Sun, F., Zhang, W., Xie, X., and Cui, B. Graph neural networks in recommender systems: a survey. ACM Computing Surveys, 55(5):1–37, 2022.

- Wu et al. (2020) Wu, Y., Lian, D., Xu, Y., Wu, L., and Chen, E. Graph convolutional networks with markov random field reasoning for social spammer detection. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 34, pp. 1054–1061, 2020.

- (37) Xiao, T., Chen, Z., Guo, Z., Zhuang, Z., and Wang, S. Decoupled self-supervised learning for graphs. In Advances in Neural Information Processing Systems.

- Xu et al. (2021) Xu, D., Cheng, W., Luo, D., Chen, H., and Zhang, X. Infogcl: Information-aware graph contrastive learning. Advances in Neural Information Processing Systems, 34:30414–30425, 2021.

- Xu et al. (2022) Xu, J., Dai, E., Zhang, X., and Wang, S. HP-GMN: graph memory networks for heterophilous graphs. In IEEE International Conference on Data Mining, ICDM 2022, Orlando, FL, USA, November 28 - Dec. 1, 2022, pp. 1263–1268. IEEE, 2022. doi: 10.1109/ICDM54844.2022.00165.

- Zhang & Chen (2018) Zhang, M. and Chen, Y. Link prediction based on graph neural networks. NeurIPS, 31, 2018.

- Zhang et al. (2018) Zhang, M., Cui, Z., Neumann, M., and Chen, Y. An end-to-end deep learning architecture for graph classification. In AAAI, 2018.

- Zheng et al. (2022) Zheng, X., Liu, Y., Pan, S., Zhang, M., Jin, D., and Yu, P. S. Graph neural networks for graphs with heterophily: A survey. arXiv preprint arXiv:2202.07082, 2022.

- Zhu et al. (2020a) Zhu, J., Yan, Y., Zhao, L., Heimann, M., Akoglu, L., and Koutra, D. Beyond homophily in graph neural networks: Current limitations and effective designs. NeurIPS, 33, 2020a.

- Zhu et al. (2021a) Zhu, J., Rossi, R. A., Rao, A., Mai, T., Lipka, N., Ahmed, N. K., and Koutra, D. Graph neural networks with heterophily. In AAAI, volume 35, pp. 11168–11176, 2021a.

- Zhu et al. (2021b) Zhu, M., Wang, X., Shi, C., Ji, H., and Cui, P. Interpreting and unifying graph neural networks with an optimization framework. In Proceedings of the Web Conference 2021, pp. 1215–1226, 2021b.

- Zhu et al. (2020b) Zhu, Y., Xu, Y., Yu, F., Liu, Q., Wu, S., and Wang, L. Deep graph contrastive representation learning. arXiv preprint arXiv:2006.04131, 2020b.

Appendix A Detailed Proofs

A.1 Proof of Lemma 3.1

Lemma A.1.

For a graph with nodes, classes, and nodes for each class, if we randomly connect nodes to form the edge set , the expected homophily ratio is .

Proof.

We first randomly sample a node from , assuming its label is . Then we sample another node . Because the classes are balanced, we have

| (10) | ||||

Therefore, if each node pair in is sampled randomly, we have

| (11) |

which completes the proof. ∎

A.2 Proof of Theorem 3.2

Theorem A.2.

Let be an undirected graph. are eigenvalues of its Laplacian matrix . Let and be two spectral filters satisfying the following two conditions: (1) for ; and for , where ; and (2) They have the same norm of output values . For a graph signal , and are the corresponding representations after filters and . Let be the difference between the total distance of connected nodes got by and , where denotes the -th element of . Then we have .

Proof.

1. Given the eigendecomposition of Laplacian , because are orthonormal eigenvectors, any unit graph signal can be expresses as the linear combination of the eigenvectors:

| (12) |

where are the coefficients of each eigenvector. Then, we have

| (13) | |||

Note that and are the eigenvalues and eigenvectors of original Laplacian . Moreover, we have

| (14) |

Eq. 14 demonstrates that each element of is determined independently by the product of each eigenvalue and . We have . Furthermore, because is an arbitrary unit graph signal, it can achieve any value with . It’s reasonable for us to assume that ’s are independently identically distributed with mean .

2. For any graph signal , its smoothness is the total distance between the connected nodes, which is given by,

| (15) | ||||

Note that the smoothness score of an eigenvector equals the corresponding eigenvalue:

| (16) |

3. For i.i.d. random variables and any , we have

| (20) | ||||

We are interested in the expected difference between the total distance of connected nodes got by and . Let denote difference between the total distance of connected nodes got by and , i.e.,

| (21) |

Then, the expected difference between the total distance of connected nodes got by and is

| (22) | ||||

4. We assume so that and have the same -norms. We make this assumption to avoid some trivial solutions. For example, if we simply multiply the representation with a constant, the value in Eq. 17 will also be enlarged and reduced, but the discriminative ability is unchanged. Therefore, we have

| (23) | ||||

because for and for . By applying the results in Eq. 23 and Eq. 20, we have

| (24) |

Then we can derive that

| (25) | ||||

which completes our proof. ∎

A.3 Proof of Theorem 3.3

Theorem A.3.

Let be a balanced undirected graph with nodes, classes, and nodes for each class. is the set of possible pairs of nodes from the same class. is the set of possible pairs of nodes from different classes. and are two filters same as Theorem 3.2. Given an arbitrary graph signal , let be the total intra-class distance, be the total inter-class distance, and be the average intra-class distance while be the average inter-class distance. is the difference between average inter-distance and intra-class distance. , , , , and are corresponding values defined similarly on . If , we have: (1) when , ; and (2) when , .

Proof.

In graph , the number of possible homophilous (intra-class) edges (including self-loop) is,

| (26) |

The number of possible heterophilous (inter-class) edges is,

| (27) |

Therefore, we have

| (28) |

| (29) |

| (30) | ||||

denotes the set of edges connecting nodes from the same class (intra-class edges). denotes the set of edges connecting nodes from different classes (inter-class edges). There are edges, homophilous edges, and heterophilous edges. In expectation, each edge has the same difference of the distance of connected nodes got by and , i.e.,

| (31) | ||||

If we solely consider the direct influence of graph convolution on connected nodes, the relationship between and can be expressed as follows:

| (32) |

| (33) | ||||

Because , then we have

(1) when , if , then ; if , then .

(2) when , if , then ; if , then .

∎

Appendix B Training Algorithm of NewtonNet

We show the training algorithm of NewtonNet in Algorithm 1. We first randomly initialize , , and . We update according to the predicted labels of each iteration. We update the learned representations and , and accordingly until convergence or reaching max iteration.

B.1 Complexity Analysis

Time complexity. According to Blakely et al. (2021), the time complexity of GCN is , where is the number of edges, and is the number of layers. One-layer GCN has the formula . is the time complexity of feature transformation, and is the time complexity of neighborhood aggregation. Following GPRGNN, ChebNetII, and BernNet, NewtonNet uses the "first transforms features and then propagates" strategy. According to Eq. 6, the feature transformation part is implemented by an MLP with time . And the propagation part has the time complexity with . In other words, NewtonNet has a time complexity , which is linear to . BernNet’s time complexity is quadratic to . We summarize the time complexity in Table 2.

| Method | Time Complexity | Space Complexity |

|---|---|---|

| MLP | ||

| GCN | ||

| GPRGNN | ||

| ChebNetII | ||

| BernNet | ||

| NewtonNet |

| Method | Chameleon | Pubmed | Penn94 | Genius |

|---|---|---|---|---|

| MLP | 1.909 | 2.283 | 6.119 | 10.474 |

| GCN | 2.891 | 3.169 | 22.043 | 20.159 |

| Mixhop | 3.609 | 4.299 | 19.702 | 27.041 |

| GPRGNN | 4.807 | 4.984 | 10.572 | 12.522 |

| ChebNetII (K=5) | 4.414 | 4.871 | 9.609 | 12.189 |

| ChebNetII (K=10) | 7.352 | 7.447 | 13.661 | 15.346 |

| BernNet (K=5) | 8.029 | 11.730 | 19.719 | 20.168 |

| BernNet (K=10) | 20.490 | 20.869 | 49.592 | 43.524 |

| NewtonNet (K=5) | 6.6135 | 7.075 | 17.387 | 17.571 |

| NewtonNet (K=10) | 12.362 | 13.042 | 25.194 | 30.836 |

To examine our analysis, Table 3 shows the running time of each method. We employ 5 different masks with 2000 epochs and calculate the average time of each epoch. We observe that (1) For the spectral methods, NewtonNet, ChebNetII, and GPRGNN run more quickly than BernNet since their time complexity is linear to while BernNet is quadratic; (2) NewtonNet cost more time than GPRGNN and ChebNetII because it calculates a more complex polynomial; (3) On smaller datasets (Chameleon, Pubmed), GCN runs faster than NewtonNet. On larger datasets (Penn94, Genius), NewtonNet and other spectral methods are much more efficient than GCN. This is because we only transform and propagate the features once, but in GCN, we stack several layers to propagate to more neighbors. In conclusion, NewtonNet is scalable on large datasets.

Space complexity. Similarly, GCN has a space complexity . is for the weight matrix of feature transformation while is for the feature matrix. is caused by the sparse edge matrix. NewtonNet has the space complexity because it pre-calculates in Equation 6. We compare the space complexity in Table 2 and the memory used by each model in Table 4. On smaller datasets, NewtonNet has a similar space consumption with GCN. However, NewtonNet and other spectral methods are more space efficient than GCN on larger datasets because we do not need to stack layers. Therefore, NewtonNet has excellent scalability.

| Method | Chameleon | Pubmed | Penn94 | Genius |

|---|---|---|---|---|

| MLP | 1024 | 1058 | 1862 | 1390 |

| GCN | 1060 | 1114 | 3320 | 2012 |

| Mixhop | 1052 | 1124 | 2102 | 2536 |

| GPRGNN | 1046 | 1060 | 1984 | 1370 |

| ChebNetII | 1046 | 1080 | 1982 | 1474 |

| BernNet | 1046 | 1082 | 2026 | 1544 |

| NewtonNet | 1048 | 1084 | 2292 | 1868 |

Appendix C Approximation vs Interpolation

Approximation and interpolation are two common curve fitting methods (Hildebrand, 1987). Given an unknown continuous function , and its values at known points , we want to use to fit unknown . Approximation methods aim to minimize the error between the original function and estimated function ; while interpolation methods aim to fit the data and make . In other words, the interpolation function passes every known point exactly, but the approximation function finds minimal error among the known points. Fig. LABEL:approxvsinter shows the difference. The property of interpolation allows us to learn the function values directly in our model, which is discussed in Section 4.

Appendix D Experimental Details

D.1 Synthetic Datasets

In this paper, we employ contextual stochastic block model (CSBM) (Deshpande et al., 2018) to generate synthetic datasets. CSBM provides a model to generate synthetic graphs with controllable inter-class and intra-class edge probability. It assumes features of nodes from the same class conform to the same distribution. Assume there are two equal-size classes with nodes for each class. CSBM generates edges and features by:

| (34) |

where is the average node degree, is mean value of Gaussian distribution, is the feature dimension, entries of has independent standard normal distributions, and . We can control homophily ratio by changing , . When , it is a totally heterophilous graph; when , it is a totally homophilous graph. Following (Chien et al., 2021), we adopt in this paper. We vary to generate graphs with different homophily levels. In Fig. LABEL:fig:mag_imp(b), we adopt to generate the synthetic dataset. We vary the number of nodes and number of features to generate different CSBM datasets and show their frequency importance in Fig. LABEL:fig:more_importance.

D.2 Real-world datasets

Citation Networks (Sen et al., 2008): Cora, Citeseer, and PubMed are citation network datasets. Cora consists of seven classes of machine learning papers, while CiteSeer has six. Papers are represented by nodes, while citations between two papers are represented by edges. Each node has features defined by the words that appear in the paper’s abstract. Similarly, PubMed is a collection of abstracts from three types of medical papers.

WebKB (Pei et al., 2020): Cornell, Texas, and Wisconsin are three sub-datasets of WebKB. They are collected from a set of websites of several universities’ CS departments and processed by (Pei et al., 2020). For each dataset, a node represents a web page and an edge represents a hyperlink. Node features are the bag-of-words representation of each web page. We aim to classify the nodes into one of the five classes, student, project, course, staff, and faculty.

Wikipedia Networks (Rozemberczki et al., 2021): Chameleon, Squirrel, and Crocodile are three topics of Wikipedia page-to-page networks. Articles from Wikipedia are represented by nodes, and links between them are represented by edges. Node features indicate the occurrences of specific nouns in the articles. Based on the average monthly traffic of the web page, the nodes are divided into five classes.

Social Networks (Lim et al., 2021): Penn94 (Traud et al., 2012) is a social network of friends among university students on Facebook in 2005. The network consists of nodes representing individual students, each with their reported gender identified. Additional characteristics of the nodes include their major, secondary major/minor, dormitory or house, year of study, and high school attended.

Twitch-gamers (Rozemberczki & Sarkar, 2021) is a network graph of Twitch accounts and their mutual followers. Node attributes include views, creation date, language, and account status. The classification is binary and to predict whether the channel has explicit content.

Genius (Lim & Benson, 2021) is from the genius.com social network, where nodes represent users and edges connect mutual followers. Node attributes include expertise scores, contribution counts, and user roles. Some users are labeled "gone," often indicating spam. Our task is to predict these marked nodes.

| Dataset | Citation | Wikipedia | WebKB | Social | |||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Cora | Cite. | Pubm. | Cham. | Squi. | Croc. | Texas | Corn. | Penn94 | Genius | Gamer | |

| Nodes | 2708 | 3327 | 19717 | 2277 | 5201 | 11,631 | 183 | 183 | 41554 | 421,961 | 168,114 |

| Edges | 5429 | 4732 | 44338 | 36101 | 217,073 | 360040 | 309 | 295 | 1,362,229 | 984,979 | 6,797,557 |

| Attributes | 1433 | 3703 | 500 | 2325 | 2089 | 128 | 1703 | 1703 | 4814 | 12 | 7 |

| Classes | 7 | 6 | 3 | 5 | 5 | 5 | 5 | 5 | 2 | 2 | 2 |

| 0.81 | 0.74 | 0.80 | 0.24 | 0.22 | 0.25 | 0.11 | 0.31 | 0.47 | 0.62 | 0.55 | |

D.3 Baselines

We compare our method with various state-of-the-art methods for both spatial and spectral methods. First, we compare the following spatial methods:

-

•

MLP: Multilayer Perceptron predicts node labels using node attributes only without incorporating graph structure information.

-

•

GCN (Kipf & Welling, 2017): Graph Convolutional Network is one of the most popular MPNNs using 1-hop neighbors in each layer.

-

•

MixHop (Abu-El-Haija et al., 2019): MixHop mixes 1-hop and 2-hop neighbors to learn higher-order information.

-

•

APPNP (Klicpera et al., 2018): APPNP uses the Personalized PageRank algorithm to propagate the prediction results of GNN to increase the propagation range.

-

•

GloGNN++ (Li et al., 2022): GloGNN++ is a method for creating node embeddings by aggregating information from global nodes in a graph using coefficient matrices derived through optimization problems.

We also compare with recent state-of-the-art spectral methods:

-

•

ChebNet (Defferrard et al., 2016): ChebNet uses Chebyshev polynomial to approximate the filter function. It is a more generalized form of GCN.

-

•

GPRGNN (Chien et al., 2021): GPRGNN uses Generalized PageRank to learn weights for combining intermediate results.

-

•

BernNet (He et al., 2021b): ChebNet uses Bernstein polynomial to approximate the filter function. It can learn arbitrary target functions.

-

•

ChebNetII (He et al., 2022): ChebNet uses Chebyshev interpolation to approximate the filter function. It mitigates the Runge phenomenon and ensures the learned filter has a better shape.

-

•

JacobiConv (Wang & Zhang, 2022): JacobiConv uses Jacobi basis to study the expressive power of spectral GNNs.

D.4 Settings

We run all of the experiments with 10 random splits and report the average performance with the standard deviation. For full-supervised learning, we use 60%/20%/20% splits for the train/validation/test set. For a fair comparison, for each method, we select the best configuration of hyperparameters using the validation set and report the mean accuracy and variance of 10 random splits on the test. For NewtonNet, we choose and use a MLP with two layers and 64 hidden units for encoder . We search the learning rate of encoder and propagation among {0.05, 0.01, 0.005}, the weight decay rate among {0, 0.0005}, the dropout rate for encoder and propagation among {0.0, 0.1, 0.3, 0.5, 0.7, 0.9}, and among {0, 1, 3, 5}. For other baselines, we use the original code and optimal hyperparameters from authors if available. Otherwise, we search the hyperparameters within the same search space of NewtonNet.

Appendix E Additional experimental results

E.1 Hyperparameter Analysis

In our hyperparameter sensitivity analysis on the Citeseer and Chameleon datasets, we investigated the effects of varying the values of , , and among{0, 0.01, 0.1, 1, 10, 100}. The accuracy results were plotted in Figure LABEL:fig:sensi. We made the following observations. For the Chameleon dataset, increasing the value of resulted in improved performance, as it effectively discouraged low-frequency components. As for , an initial increase led to performance improvements since it balanced lower and higher frequencies. However, further increases in eventually led to a decline in performance. On the other hand, increasing had a positive effect on performance, as it encouraged the inclusion of more high-frequency components.

Regarding the Citeseer dataset, we found that increasing the values of , , and initially improved performance. However, there was a point where further increases in these regularization terms caused a decrease in performance. This can be attributed to the fact that excessively large regularization terms overshadowed the impact of the cross entropy loss, thus hindering the model’s ability to learn effectively. We also observe that the change of Chameleon is more than that in Citeseer, so heterophilous graphs need more regularization.

We also investigate the sensitivity of the parameter . While keeping the remaining optimal hyperparameters fixed, we explore different values of within the set {2, 5, 8, 11, 14}. The corresponding accuracy results are presented in Fig. LABEL:fig:sensi_K. In both datasets, we observe a pattern of increasing performance followed by a decline. This behavior can be attributed to the choice of . If is set to a small value, the polynomial lacks the necessary power to accurately approximate arbitrary functions. Conversely, if is set to a large value, the polynomial becomes susceptible to the Runge phenomenon (He et al., 2022).

E.2 Learned Homophily Ratio

Table 6 presents the real homophily ratio alongside the learned homophily ratio for each dataset. The close proximity between the learned and real homophily ratios indicates that our model can estimate the homophily ratio accurately so that it can further guide the learning of spectral filter.

E.3 More results of frequency importance

E.4 Learned filters

The learned filters of NewtonNet, BernNet, and ChebNetII for each dataset are illustrated in Fig. LABEL:fig:learned_on_cora to Fig. LABEL:fig:learned_on_penn94. Our observations reveal that NewtonNet exhibits a distinct ability to promote or discourage specific frequencies based on the homophily ratio. In the case of homophilous datasets, NewtonNet emphasizes low-frequency components while suppressing middle and high frequencies. Conversely, for heterophilous datasets, the learned spectral filter of NewtonNet emphasis more on high-frequency components compared to other models.

| Cora | Cite. | Pubm. | Cham. | Squi. | Croc. | Texas | Corn. | Penn. | |

|---|---|---|---|---|---|---|---|---|---|

| Real | 0.81 | 0.74 | 0.80 | 0.24 | 0.22 | 0.25 | 0.11 | 0.20 | 0.47 |

| Learned | 0.83 | 0.79 | 0.83 | 0.27 | 0.23 | 0.28 | 0.12 | 0.33 | 0.51 |