Sequential anomaly detection

under sampling constraints

Abstract

The problem of sequential anomaly detection is considered, where multiple data sources are monitored in real time and the goal is to identify the “anomalous” ones among them, when it is not possible to sample all sources at all times. A detection scheme in this context requires specifying not only when to stop sampling and which sources to identify as anomalous upon stopping, but also which sources to sample at each time instance until stopping. A novel formulation for this problem is proposed, in which the number of anomalous sources is not necessarily known in advance and the number of sampled sources per time instance is not necessarily fixed. Instead, an arbitrary lower bound and an arbitrary upper bound are assumed on the number of anomalous sources, and the fraction of the expected number of samples over the expected time until stopping is required to not exceed an arbitrary, user-specified level. In addition to this sampling constraint, the probabilities of at least one false alarm and at least one missed detection are controlled below user-specified tolerance levels. A general criterion is established for a policy to achieve the minimum expected time until stopping to a first-order asymptotic approximation as the two familywise error rates go to zero. Moreover, the asymptotic optimality is established of a family of policies that sample each source at each time instance with a probability that depends on past observations only through the current estimate of the subset of anomalous sources. This family includes, in particular, a novel policy that requires minimal computation under any setup of the problem.

Index Terms:

Active sensing; Anomaly detection; Asymptotic optimality; Controlled sensing; Sequential design of experiments; Sequential detection; Sequential sampling; Sequential testing.I Introduction

In various engineering and scientific areas data are often collected in real time over multiple streams, and it is of interest to quickly identify those data streams, if any, that exhibit outlying behavior. In brain science, for example, it is desirable to identify groups of cells with large vibration frequency, as this is a symptom for the development of a particular malfunctioning [1]. In fraud prevention security systems in e-commerce, it is desirable to identify transition links with low transition rate, as this may be an indication that a link is tapped [2]. Such applications, among many others, motivate the study of sequential multiple testing problems where the data for the various hypotheses are generated by distinct sources, there are two hypotheses for each data source, and the goal is to identify as quickly as possible the “anomalous” sources, i.e., those in which the alternative hypothesis is correct. In certain works, e.g., [3, 4, 5, 6, 7, 8], it is assumed that all sources are sampled at each time instance, whereas in others, e.g., [9, 10, 11, 12, 13, 14, 15, 16, 17], only a fixed number of sources (typically, only one) can be sampled at each time instance. In the latter case, apart from when to stop sampling and which data sources to identify as anomalous upon stopping, one also needs to specify which sources to sample at every time instance until stopping.

The latter problem, which is often called sequential anomaly detection in the literature, can be viewed as a special case of the sequential multi-hypothesis testing problem with controlled sensing (or observation control), where the goal is to solve a sequential multi-hypothesis testing problem while taking at each time instance an action that influences the distribution of the observations [18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28]. In the anomaly detection case, the action is the selection of the sources to be sampled, whereas the hypotheses correspond to the possible subsets of anomalous sources. Therefore, policies and results in the context of sequential multi-hypothesis testing with controlled sensing are applicable, in principle at least, to the sequential anomaly detection problem. Such a policy was first proposed in [18] in the case of two hypotheses, and subsequently generalized in [20, 21] to the case of an arbitrary, finite number of hypotheses. When applied to the sequential anomaly detection problem, this policy samples each subset of sources of the allowed size at each time instance with a certain probability that depends on the past observations only through the currently estimated subset of anomalous sources.

In general, the implementation of the policy in [20] requires solving, for each subset of anomalous sources, a linear system where the number of equations is equal to the number of sources and the number of unknowns is equal to the number of all subsets of sources of the allowed size. Moreover, its asymptotic optimality has been established only under restrictive assumptions, such as when the following hold simultaneously: it is known a priori that there is only one anomalous source, it is possible to sample only one source at a time, the testing problems are identical, and the sources generate Bernoulli random variables under each hypothesis [21, Appendix A]. To avoid such restrictions, it has been proposed to modify the policy in [20] at an appropriate subsequence of time instances, at which each subset of sources of the allowed size is sampled with the same probability [18, Remark 7],[25]. Such a modified policy was shown in [25] to always be asymptotically optimal, as long as the log-likelihood ratio statistic of each observation has a finite second moment.

A goal of the present work is to show that the unmodified policy in [20] is always asymptotically optimal in the context of the above sequential anomaly detection problem, as long as the log-likelihood ratio statistic of each observation has a finite first moment. However, our main goal in this paper is to propose a more general framework for the problem of sequential anomaly detection with sampling constraints that (i) does not rely on the restrictive assumption that the number of anomalous sources is known in advance, (ii) allows for two distinct error constraints and captures the asymmetry between a false alarm, i.e., falsely identifying a source as anomalous, and a missed detection, i.e., failing to detect an anomalous source, and most importantly, (iii) relaxes the hard sampling constraint that the same number of sources must be sampled at each time instance, and (iv) admits an asymptotically optimal solution that is convenient to implement under any setup of the problem.

To be more specific, in this paper we assume an arbitrary, user-specified lower bound and an arbitrary, user-specified upper bound on the number of anomalous sources. This setup includes the case of no prior information, the case where the number of anomalous sources is known in advance, as well as more realistic cases of prior information, such as when there is only a non-trivial upper bound on the number of anomalous sources. Moreover, we require control of the probabilities of at least one false alarm and at least one missed detection below arbitrary, user-specified levels. Both these features are taken into account in [6] when all sources are observed at all times. Thus, the present paper can be seen as a generalization of [6] to the case that it is not possible to observe all sources at all times. However, instead of demanding that the number of sampled sources per time instance be fixed, as in [9, 10, 11, 14, 12, 13, 15, 16, 17], we only require that the ratio of the expected number of observations over the expected time until stopping not exceed a user-specified level. This leads to a more general formulation for sequential anomaly detection compared to those in [9, 10, 11, 14, 12, 13, 15, 16, 17], which at the same time is not a special case of the sequential multi-hypothesis testing problem with controlled sensing in [18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28]. Thus, while existing policies in the literature are applicable to the proposed setup, this is not the case for the existing universal lower bounds.

Our first main result on the proposed problem is a criterion for a policy (that employs the stopping and decision rules in [6] and satisfies the sampling constraint) to achieve the optimal expected time until stopping to a first-order asymptotic approximation as the two familywise error probabilities go to 0. Indeed, we show that such a policy is asymptotically optimal in the above sense, if it samples each source with a certain minimum long-run frequency that depends on the source itself and the true subset of anomalous sources. Our second main result is that the latter condition holds, simultaneously for every possible scenario regarding the anomalous sources, by policies that sample each source at each time instance with a probability that is not smaller than the above minimum long-run frequency that corresponds to the current estimate of the subset of anomalous sources. This implies the asymptotic optimality of the unmodified policy in [20], as well as of a much simpler policy according to which the sources are sampled at each time instance conditionally independent of one another given the current estimate of the subset of anomalous sources. Indeed, the implementation of the latter policy, unlike that in [20], involves minimal computational and storage requirements under any setup of the problem. Moreover, we present simulation results that suggest that this computational simplicity does not come at the price of performance deterioration (relative to the policy in [20]).

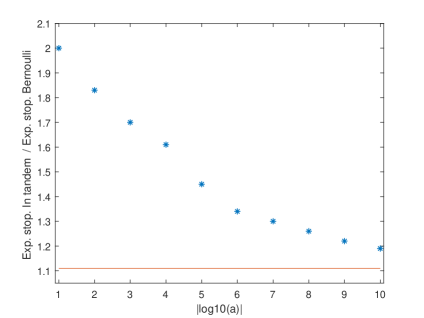

Finally, to illustrate the gains of asymptotic optimality, we consider the straightforward policy in which the sources are sampled in tandem. We compute its asymptotic relative efficiency and we show that it is asymptotically optimal only in a very special setup of the problem. Moreover, our simulation results suggest that, apart from this special setup, its actual performance loss relative to the above asymptotically optimal rules is (much) larger than the one implied by its asymptotic relative efficiency when the target error probabilities are not (very) small.

The remainder of the paper is organized as follows: in Section II we formulate the proposed problem. In Section III we present a family of policies that satisfy the error constraints, and we introduce an auxiliary consistency property.In Section IV we introduce a family of sampling rules on which we focus in this paper. In Section V we present the asymptotic optimality theory of this work. In Section VI we discuss alternative sampling approaches in the literature, and in Section VII we present the results of our simulation studies. In Section VIII we conclude and discuss potential generalizations, as well as directions for further research. The proofs of all main results are presented in Appendices B, C, D, whereas in Appendix A we state and prove two supporting lemmas.

We end this section with some notations we use throughout the paper. We use to indicate the definition of a new quantity and to indicate a duplication of notation. We set and for , we denote by the complement, by the size and by the powerset of a set , by the floor and by the ceiling of a positive number , and by the indicator of an event. We write when , when , and when , where the limit is taken in some sense that will be specified. Moreover, iid stands for independent and identically distributed, and we say that a sequence of positive numbers is summable if and exponentially decaying if there are real numbers such that for every . A property that we use in our proofs is that if is exponentially decaying, so is the sequence , for any .

II Problem formulation

Let be an arbitrary measurable space and let be a probability space that hosts independent sequences of iid -valued random elements, , which are generated by distinct data sources, as well as an independent sequence of iid random vectors, , to be used for randomization purposes. Specifically, each is a vector of independent random variables, uniform in , and each has density , with respect to some -finite measure , that is equal to either or . For every we say that source is “anomalous” if and we assume that the Kullback-Leibler divergences of and are positive and finite, i.e.,

| (1) | ||||

We assume that it is known a priori that there are at least and at most anomalous sources, where and are given, user-specified integers such that , with the understanding that if , then . Thus, the family of all possible subsets of anomalous sources is . In what follows, we denote by the underlying probability measure and by the corresponding expectation when the subset of anomalous sources is , and we simply write and whenever the identity of the subset of anomalous sources is not relevant.

The problem we consider in this work is the identification of the anomalous sources, if any, on the basis of sequentially acquired observations from all sources, when however it is not possible to observe all of them at every sampling instance. Specifically, we have to specify a random time , at which sampling is terminated, and two random sequences, and , so that represents the subset of sources that are sampled at time when , and represents the subset of sources that are identified as anomalous when . The decisions whether to stop or not at each time instance, which sources to sample next in the latter case, and which ones to identify as anomalous in the former, must be based on the already available information. Thus, we say that is a sampling rule if is –measurable for every , where

| (2) | ||||

Moreover, we say that the triplet is a policy if is a sampling rule, and is measurable for every , in which case we refer to as a stopping rule and to as a decision rule. For any sampling rule , we denote by the indicator of whether source is sampled at time , i.e., , and by (resp. ) the number (resp. proportion) of times source is sampled in the first time instances, i.e.,

We say that a policy belongs to class if its probabilities of at least one false alarm and at least one missed detection upon stopping do not exceed and respectively, i.e.,

| (3) | ||||

where are user-specified numbers in , and the ratio of its expected total number of observations over its expected time until stopping does not exceed , i.e.,

| (4) |

where is a user-specified, real number in . Note that, in view of the identity

constraint (4) is clearly satisfied when

| (5) |

This is the case, for example, when at most sources are sampled at each time instance up to stopping, i.e., when for every .

Our main goal in this work is, for any given , to obtain policies that attain the smallest possible expected time until stopping,

| (6) |

simultaneously under every , to a first-order asymptotic approximation as and go to 0. Specifically, when , we allow and to go to 0 at arbitrary rates, but when , we assume that

| (7) |

III A family of policies

In this section we introduce the statistics that we use in this work, a family of policies that satisfy the error constraint (3), as well as an auxiliary consistency property.

III-A Log-likelihood ratio statistics

Let and . We denote by the log-likelihood ratio of versus based on the first time instances when the sampling rule is , i.e.,

| (8) |

and we observe that it admits the following recursion:

| (9) |

where and . Indeed, this follows by and the fact that is -measurable, is independent of and its density under is if and if , and is independent of both and and has the same density under and .

For any we write

and we observe that the above recursion implies that

| (10) | ||||

| (11) |

In particular, for any we have

In what follows, we refer to as the local log-likelihood ratio (LLR) of source at time . We introduce the order statistics of the LLRs at time ,

and we denote by the corresponding indices, i.e.,

Moreover, we denote by the number of positive LLRs at time , i.e.,

and we also set

III-B Stopping and decision rules

We next show that for any sampling rule there is a stopping rule, which we will denote by , and a decision rule, which we will denote by , such that the policy satisfies the error constraint (3). Their forms depend on whether the number of anomalous sources is known in advance or not, i.e., on whether or . Specifically, when , we stop as soon as the largest LLR exceeds the next one by , i.e.,

| (12) |

and we identify as anomalous the sources with the largest LLRs, i.e.,

| (13) |

When the number of anomalous sources is completely unknown ( and ), we stop as soon as the value of every LLR is outside for some , i.e.,

| (14) |

and we identify as anomalous the sources with positive LLRs, i.e.,

| (15) |

When , in general, we combine the stopping rules of the two previous cases and we set

| (16) | ||||

where , and we use the following decision rule:

| (17) |

That is, we identify as anomalous the sources with positive LLRs as long as their number is between and . If this number is larger than (resp. smaller than ), then we declare as anomalous the sources with the (resp. ) largest LLRs.

Proposition III.1

Proof:

When all sources are sampled at all times, this is shown in [6, Theorems 3.1, 3.2]. The same proof applies when for any sampling rule .

∎

In view of the above result, in what follows we assume that the thresholds in are selected according to (18) when and according to (19) when . While this is a rather conservative choice, it will be sufficient for obtaining asymptotically optimal policies. For this reason, in what follows we say that a sampling rule, , that satisfies the sampling constraint (4) with , is asymptotically optimal under , for some , if

as and go to at arbitrary rates when and so that (7) holds when . We simply say that is asymptotically optimal if it is asymptotically optimal under for every . We next introduce a weaker property, which will be useful for establishing asymptotic optimality.

III-C Exponential consistency

For any sampling rule and any subset we denote by the random time starting from which the sources in are the ones estimated as anomalous by , i.e.,

| (20) |

and we say that is exponentially consistent under if is an exponentially decaying sequence. We simply say that is exponentially consistent if it is

exponentially consistent under for every . The following theorem states sufficient conditions for exponential consistency under .

Theorem III.1

Let and let be an arbitrary sampling rule.

-

•

When , is exponentially consistent under if there exists a such that is exponentially decaying for every if and for every if .

-

•

When , is exponentially consistent under if there exists a such that is exponentially decaying either for every or for every .

Proof:

Appendix B.

∎

Remark: Theorem III.1 reveals that when or , it is possible to have exponentially consistency under without sampling at all certain sources. Specifically, when (resp. ) it is not necessary to sample any source in (resp. ). On the other hand, when , it suffices to sample either all sources in or all of them in .

IV Probabilistic sampling rules

In this section we introduce a family of sampling rules and we show how to design them in order to satisfy the sampling constraint (5) and in order to be exponentially consistent. Thus, we say that a sampling rule is probabilistic if there exists a function such that for every , , and we have

| (21) |

i.e., is the probability that is the subset of sampled sources when is the currently estimated subset of anomalous sources. If is a probabilistic sampling rule, then for every and we set

| (22) | ||||

i.e., is the probability that source is sampled when is the currently estimated subset of anomalous sources.

We refer to a probabilistic sampling rule as Bernoulli if for every and we have

| (23) |

i.e., if the sources are sampled at each time instance conditionally independent of one another given the currently estimated subset of anomalous sources. Indeed, such a sampling rule admits a representation of the form

| (24) |

where are iid and uniform in ,

thus, its implementation at each time instance requires the realization of Bernoulli random variables.

The following proposition provides a sufficient condition for a probabilistic sampling rule to satisfy the sampling constraint (5), and consequently (4), for any -stopping time .

Proposition IV.1

Proof:

Finally, we establish sufficient conditions for the exponentially consistency of a probabilistic sampling rule.

Theorem IV.1

Let be a probabilistic sampling rule.

-

•

When , is exponentially consistent if, for every , is positive for every if and every if .

-

•

When , is exponentially consistent if, for every , is positive either for every or for every .

Proof:

Remark: Theorem IV.1 implies that when , a probabilistic sampling rule is exponentially consistent if, whenever the number of estimated anomalous sources is larger than (resp. smaller than ), it samples with positive probability any source that is currently estimated as anomalous (resp. non-anomalous). When , on the other hand,

it suffices to sample with positive probability at any time instance either every source that is currently estimated as anomalous or every source that is currently estimated as non-anomalous.

Remark: Unlike the proof of Proposition IV.1, the proof of Theorem IV.1 is quite challenging and it is one of the main contributions of this work from a technical point of view. It relies on two Lemmas, B.1 and B.2, which we state in Appendix B. The proof becomes much easier if we strengthen the assumption of the theorem and assume that every source be sampled with positive probability at every time instance, i.e., if we assumed that for every . Indeed, in this case Lemma B.2 becomes redundant, the proof simplifies considerably, and it essentially relies on ideas developed in [18]. However, such an assumption would limit considerably the scope of the asymptotic optimality theory we develop in the next section.

V Asymptotic optimality

In this section we present the asymptotic optimality theory of this work and discuss some of its implications. For this, we first need to introduce some additional notation.

V-A Notation

For with we set

| (26) |

and for with we set

| (27) |

That is, is the minimum and the harmonic mean of the Kullback-Leibler information numbers in , whereas is the minimum and the harmonic mean of the Kullback-Leibler information numbers in . Moreover, (resp. ) is a positive real number smaller or equal to the size of (resp. ), with the equality attained when (resp. ) for every in (resp. ).

Moreover, for with we set

| (28) |

thus, is a positive real number that is equal to 1 when for every .

In Theorem V.1 we also introduce for each two quantities,

| (29) |

which will play an important role in the formulation of all results in this section. Although their values depend on , in order to lighten the notation we simply write and unless we want to emphasize their dependence on one or more of these parameters.

V-B A universal asymptotic lower bound

Theorem V.1

Let .

(i) Suppose that . Then, as we have

| (30) |

where if ,

| (31) | ||||

and if ,

| (32) | ||||

(ii) Suppose that and let so that (7) holds.

-

•

If , then

(33) -

•

If , we distinguish two cases:

-

If either or , then

(34) If and , we set and we distinguish two further cases:

-

If either or , then

(35) -

If and , then

(36)

-

-

-

•

If , then we distinguish again two cases:

-

If either or , then

(37) If and , we set and we distinguish two further cases:

-

If either or , then

(38) -

If and , then

(39)

-

-

Proof:

The proof is presented in Appendix C. It follows similar steps as the one in the full sampling case in

[6, Theorem 5.1], with the difference that it uses a version of Doob’s optional sampling theorem in the place of Wald’s identity and requires the solution of a max-min optimization problem, in each of the cases we distinguish, to determine the denominator in the lower bound.

∎

Remark: By the definition of and in Theorem V.1 we can see that

-

•

they both take values in and at least one of them is positive,

-

•

they are both increasing as functions of ,

-

•

at least one of them is equal to 1 when ,

-

•

if (resp. ), then (resp. ).

In the next section we obtain an interpretation of the values of and .

V-C A criterion for asymptotic optimality

Based on the universal asymptotic lower bound of Theorem V.1, we next establish the asymptotic optimality under of a sampling rule that satisfies the sampling constraint and samples each source , when the true subset of anomalous sources is , with a long-run frequency that is not smaller than

| (40) |

Theorem V.2

Let and let be a sampling rule that satisfies (4) with . If for every such that the sequence is summable for every , then is asymptotically optimal under .

Proof:

Remark: Let . By the definition of in (40) it follows that:

-

•

for every , since ,

-

•

(resp. ) for every in (resp. ),

-

•

(resp. ) for every in (resp. ).

Therefore, the above theorem implies that when (resp. ) is equal to , it is not necessary to sample any source in (resp. ) in order to achieve asymptotic optimality under . Moreover, recalling the definitions of and in (26) and (27), we can see that:

| (41) | ||||

These identities reveal that (resp. ) is equal to the average of the minimum limiting sampling frequencies of the sources in (resp. ) required for asymptotic optimality under , multiplied by (resp. ).

V-D Asymptotically optimal probabilistic sampling rules

We next specialize Theorem V.2 to the case of probabilistic sampling rules. Specifically, we obtain a sufficient condition for the asymptotic optimality, simultaneously under every possible scenario, of an arbitrary probabilistic sampling rule, , in terms of the quantities , defined in (22).

Theorem V.3

If is a probabilistic sampling rule and for every it satisfies

| (45) |

then it is exponentially consistent. If also satisfies (25), then it is asymptotically optimal under for every .

Proof:

In the next corollary we show that both conditions of Theorem V.3 are satisfied when the equality holds in (45) for every .

Corollary V.1

If is a probabilistic sampling rule such that for every we have

| (46) |

then is exponentially consistent and asymptotically optimal under for every .

Proof:

While (46) suffices for the asymptotic optimality of a probabilistic sampling rule under , it is not always necessary. In the following proposition we characterize the setups for which the necessity holds.

Proposition V.1

Proof:

For any probabilistic sampling rule that satisfies (25) and (45) we have

| (47) |

where the equality follows by (42).

We argue by contradiction and assume that does not hold. By (42) and (43) it then follows that . This implies that there is a probabilistic sampling rule that satisfies (45) with strict inequality for at least one , and also satisfies (25). Thus, we have reached a contradiction, which completes the proof. ∎

V-E The optimal performance under full sampling

Corollary V.1 implies that the asymptotic lower bound in Theorem V.1 is always achieved, and as a result it is a first-order asymptotic approximation to the optimal expected time until stopping. By this approximation it follows that for any we have

| (48) |

where is defined as follows:

| (49) |

Moreover, by an inspection of the values of and in Theorem V.2 it follows that

| (50) | ||||

The equivalence in (48) implies that if and , then the optimal expected time until stopping under in the case of full sampling can be achieved, to a first-order asymptotic approximation as , without sampling all sources at all times.

Corollary V.2

If and for some , then there is a probabilistic sampling rule such that and while for some .

The next proposition, in conjunction with Proposition V.1, shows that can also be used to characterize the setups for which the equality must hold in (45). In particular, it shows that this is always the case when .

Proposition V.2

For every we have and

Proof:

By the definition of in (26), (27) it follows that

Since also at least one of and is equal to , by the definition of in (49) we conclude that . It remains to prove the equivalence. It suffices to observe that since , are both increasing in , by the definition of in (49) we have . Follows by direct verification. ∎

V-F The Chernoff sampling rule

When is an integer, we will refer to a probabilistic sampling rule as Chernoff if it satisfies the conditions of Theorem V.3 and samples exactly sources per time instance. Indeed, such a sampling rule is implied from [18],[20], [21] when these works are applied to the sequential anomaly detection problem (with a fixed number of sampled sources per time instance). In fact, if the class is restricted to policies that sample exactly sources per time instance, the asymptotic optimality of this rule under is implied by the general results in [20], as long as and are both positive. However, this is not always the case. Indeed, in the simplest formulation of the sequential anomaly detection problem, where it is known a priori that there is only one anomaly (), only one source can be sampled per time instance , and the sources are homogeneous, i.e., , for every , then one of and is 0 for any . In this setup, the asymptotic optimality of a Chernoff rule was shown in [21, Appendix A] if also , are both Bernoulli pmf’s. Our results in this section remove all these restrictions and establish the asymptotic optimality of the Chernoff rule for any values of , and without artificially modifying it at a subsequence of sampling instances, as in [25].

On the other hand, to implement a Chernoff rule one needs to determine a function that satisfies simultaneously the conditions of Theorem V.3 and also

| (51) |

which can be a computationally demanding task unless the problem has a special structure or . This should be compared with the corresponding asymptotically optimal Bernoulli sampling rule, whose implementation does not require essentially any computation under any setup of the problem.

V-G The homogeneous setup

We now specialize the previous results to the case that and for every , where the quantities in (26), (27), (28), (45) simplify as follows:

| (52) | ||||

Suppose first that the number of anomalous sources is known in advance, i.e., . Then, and do not depend on , we denote them simply by and respectively, and present their values in Table I.

From Table I and (49) it follows that for every . Then, from Propositions V.1 and V.2 it follows that if is a probabilistic sampling rule that satisfies the conditions of Theorem V.3, then it samples at each time instance each source that is currently estimated as anomalous (resp. non-anomalous) with probability (resp. ), i.e.,

| (53) |

Moreover, we observe that the first-order asymptotic approximation to the optimal performance is independent of the true subset of anomalous sources. Specifically, for every we have

| (54) |

When the number of anomalous sources is not known a priori, i.e., , we focus on the special case that and , or equivalently, . Then, the values of and , presented in Table II, do not depend on , and the optimal asymptotic performance under takes the following form:

| (55) |

From (48) and (55) we further obtain

| (56) |

Note that, in this setup, if and only if one of the following holds:

Moreover, from Theorem V.2 it follows that,

in each of these three cases, asymptotic optimality is achieved by any sampling rule, not necessarily probabilistic, that satisfies the sampling constraint and samples all sources with the same long-run frequency. This is the content of the following corollary.

Corollary V.3

Let be a sampling rule such that

-

•

the sampling constraint (4) holds with ,

-

•

is summable for every and .

If , , and for every , then is asymptotically optimal under for every with . If also and , then is asymptotically optimal under for every .

The conditions of the previous Corollary are satisfied when is a probabilistic sampling rule with for every and , e.g., when is a Bernoulli sampling rule that samples each source at each time instance with probability , independently of the other sources. Moreover, in the setup of Corollary V.3 it is quite convenient to find and implement a Chernoff rule (Subsection V-F). Indeed, when is an integer, the conditions of Corollary V.3 are satisfied when we take a simple random sample of sources at each time instance, i.e., when

Finally, in Subsection VI-A we will introduce a non-probabilistic sampling rule that satisfies the conditions of Corollary V.3.

| 0 | 0 | ||||

| 0 | 0 |

V-H A heterogeneous example

We end this section by considering a setup where is an even number, , , and

| (57) |

for some . Moreover, we focus on the case that the subset of anomalous sources is of the form . Then, the optimal asymptotic performance under takes the following form:

-

•

when ,

(58) -

•

when ,

(59) -

•

when ,

(60)

From these expressions and (48) we also obtain

| (61) |

and we note that is always strictly smaller than in this setup as long as .

VI Non-probabilstic sampling rules

In this section, we discuss certain non-probabilistic sampling rules.

VI-A Sampling in tandem

Suppose that is an integer and consider the straightforward sampling approach according to which the sources are sampled in tandem, of them at a time. Specifically, sources to are sampled at time , and if , then sources to are sampled at time , whereas if , then sources to and to are sampled at time , etc. In this way, each source is sampled exactly times in an interval of the form , where , to which we will refer as a cycle. This sampling rule satisfies the conditions of Corollary V.3, which means that in the special case that , , and for every , it achieves asymptotic optimality under when , and for every when and .

In general, by the formula for the optimal asymptotic performance under full sampling, which is obtained by the lower bound of Theorem V.1 with , we can see that if sampling is terminated at a time that is a multiple of , the expected number of cycles until stopping is, to a first-order asymptotic approximation, equal to . Since each cycle is of length , the expected time until stopping is, again to a first-order asymptotic approximation, equal to . Thus, the asymptotic relative efficiency of this sampling approach can be defined as follows:

| (62) |

where the limit is taken so that (7) holds when .

VI-B Equalizing empirical and limiting sampling frequencies

We next consider a sampling approach, which has been applied to a general controlled sensing problem [28], as well as to a bandit problem [29], and we show that not only it may not achieve asymptotic optimality in the sequential anomaly detection problem, but it may even lead to a detection procedure that fails to terminate with positive probability.

To be more specific, we consider the homogeneous setup of Subsection V-G with . In this setup, a probabilistic sampling rule that satisfies the conditions of Theorem V.3 samples a source in (resp. ) with probability (resp. ), whenever is the subset of sources currently identified as anomalous. The sampling rule that we consider in this Subsection is not probabilistic, as it requires knowledge of not only the currently estimated subset of anomalous sources, but also of the current empirical sampling frequencies. Specifically, if is the subset of sources currently estimated as anomalous, for every source in (resp. ) it computes the distance between its current empirical sampling frequency and (resp. ), and it samples next a source for which this distance is the maximum. That is, for every and we have

| (67) | ||||

Without loss of generality, we also assume that each source has positive probability to be sampled at the first time instance, i.e.,

| (68) |

Proposition VI.1

Consider the homogeneous setup of Subsection V-G with and let be sampling rule that satisfies (67)-(68). Suppose further that there is only one anomalous source, i.e., that the subset of anomalous source, , is a singleton, and also that . Then, there is an event of positive probability under on which

-

(i)

the same source is sampled at every time instance,

-

(ii)

and if also and , fails to terminate for any selection of its thresholds.

Proof:

If (i) holds, there is an event of positive probability under on which all LLRs but one are always equal to 0. Thus, (ii) follows directly from (i) and the fact that when and , the stopping rule (14) requires that all LLRs be non-zero upon stopping. Therefore, it remains to prove (i). Without loss of generality, we set . The event has positive probability under , by (68), and so does the event

| (69) |

since is an iid sequence with expectation under (see, e.g., [30, Proposition 7.2.1]). Since these two events are also independent, their intersection has positive probability under . Therefore, it suffices to show that only source 1 is sampled on . Indeed, on this event sampling starts from source 1, and as a result the vector of empirical frequencies, at time is . Moreover, the estimated subset of anomalous sources at is , independently of whether or . Since , or equivalently,

for every , by (67) it follows that source is sampled again at time , and as a result the vector of empirical frequencies at time remains . Applying the same reasoning as before, we conclude that source 1 is sampled again at time . The same argument can be repeated indefinitely, and this proves (i).

∎

Remark: When , , each is exponential with rate , and each is exponential with rate , the conditions of the previous proposition are satisfied as long as . Indeed, in this case we have

and as a result .

VI-C Sampling based on the ordering of the LLRs

A different, non-probabilistic sampling approach, which goes back to [18, Remark 5], suggests sampling at each time instance the sources with the smallest, in absolute value, LLRs among those estimated as anomalous/non-anomalous. Such a sampling rule was proposed in [12], in the homogeneous setup of Subsection V-G, under the assumption that the number of anomalous sources is known a priori, i.e., . An extension of this rule in the heterogeneous setup was studied in [13], under the assumption that and . A similar sampling rule, that also has a randomization feature, was proposed in [16] when , as well as in the case of no prior information, i.e., when . For each of them, the criterion of Theorem V.2 can be applied to establish their asymptotic optimality. Its verification, however, is a quite difficult task that we plan to consider in the future.

VII Simulation study

In this section we present the results of a simulation study where we compare two probabilistic sampling rules, Bernoulli (Section IV) and Chernoff (Subsection V-F), between them and against sampling in tandem (Subsection VI-A). Throughout this section, for every we have and , i.e., all observations from source are Gaussian with variance and mean equal to if the source is anomalous and 0 otherwise, and as a result . We consider a homogeneous setup where

| (70) |

as well as a heterogeneous setup where

| (71) |

in which case (57) holds with and . In both setups we set , , , , , , we assume that the subset of anomalous sources is of the form , and consider different values for its size. The two probabilistic sampling rules are designed so that (46) holds for every . As a result, by Corollary V.1 it follows that in both setups they are asymptotically optimal under for every . On the other hand, by Corollary V.3 it follows that sampling in tandem is asymptotically optimal under only in the homogeneous setup (71) and when (since ).

In Figure 1 we plot the expected value of the stopping time that is induced by each of the three sampling rules against the true number of anomalous sources. Specifically, in Figure 1a we consider the homogeneous setup (70) and in Figure 1b the heterogeneous setup (71). In all cases, the thresholds are chosen, via Monte Carlo simulation, so that the familywise error probability of each kind is (approximately) equal to . The Monte Carlo standard error that corresponds to each estimated expected value is approximately . From Figure 1 we can see that the performance implied by the two probabilistic sampling rules is always essentially the same. Sampling in tandem performs significantly worse in all cases apart from the homogeneous setup with , where all three sampling rules lead to essentially the same performance. We note also that, in both setups and for all three sampling rules, the expected time until stopping is much smaller when the number of anomalous sources is equal to either or than when it is between and .

In Figures 2 and 3 we plot the ratio of the expected value of the stopping time induced by sampling in tandem over that induced by the Bernoulli sampling rule against as ranges from to . (We do not present the corresponding results for the Chernoff rule, as they are almost identical). Specifically, in Figure 2 we consider the homogeneous setup (70) when the number of anomalous sources is and , whereas in Figure 3 we consider the heterogeneous setup (71) when the number of anomalous sources is and 6. For each value of , the thresholds are selected according to (19) and each expectation is computed using simulation runs. The standard error for each estimated expectation is approximately , whereas the standard error for each ratio is approximately in the homogeneous setup and in the heterogeneous setup. Moreover, in each case we plot the limiting value of this ratio, which is the limit defined in (62). In the homogeneous case this is given by (65) and is equal to

| (72) |

In the heterogeneous case it is given by (66) and is equal to

| (73) |

From Figures 2 and 3 we can see that, in all cases, the efficiency loss due to sampling in tandem is (much) larger than the one suggested by the corresponding asymptotic relative efficiency when is not (very) small.

VIII Conclusions and extensions

In this paper we propose a novel formulation of the sequential anomaly detection problem with sampling constraints, in which arbitrary, user-specified bounds are assumed on the number of anomalous sources, the probabilities of at least one false alarm and at least one missed detection are controlled below distinct tolerance levels, and the number of sampled sources per time instance is not necessarily fixed. We obtain a general criterion for achieving the optimal expected time until stopping, to a first-order asymptotic approximation as the error probabilities go to 0, as long as the log-likelihood ratio statistic of each observation has a finite first moment. We show that asymptotic optimality is achieved, simultaneously under every possible subset of anomalous sources, for any version of the proposed problem, using the unmodified sampling rule in [20], to which we refer as Chernoff, but also using a novel sampling rule whose implementation requires minimal computations, to which we refer in this work as Bernoulli. Despite their very different computational requirements, these two rules are found in simulation studies to lead to essentially the same performance. On the other hand, it has been shown in simulation studies under various setups [12, 13, 16], that the Chernoff rule leads to significantly worse performance in practice compared to non-probabilistic sampling rules as the ones discussed in Subsection VI-C. The study of sampling rules of this nature in the general sequential anomaly detection framework we propose in this work is an interesting problem that we plan to consider in the future. Another interesting problem is that of establishing stronger than first-order asymptotic optimality, which has been solved in certain special cases of the sequential multi-hypothesis testing problem with controlled sensing [23, 24].

The results of this paper can be shown, using the techniques in [8], to remain valid for a variety of error metrics beyond the familywise error rates that we consider in this work, such as the false discovery rate and the false non-discovery rate. However, this is not the case for the generalized familywise error rates proposed in [31, 32], for which different policies and a different analysis is required. These error metrics have been considered in [4, 5, 7] in the case of full sampling, whereas certain results in the presence of sampling constraints have been presented in [33].

The results in this work can also be generalized in a natural way when the sampling cost varies per source, as in [15], or when the two hypotheses in each source are not completely specified, as it is done for example in [7] in the case of full sampling. Another potential generalization is the removal of the assumption that the acquired observations are conditionally independent of the past given the current sampling choice, as it is done in [27] in a general controlled sensing setup. Finally, another direction of interest is a setup where the focus is on the dependence structure of the sources rather than their marginal distributions, as for example in [34].

IX Acknowledgments

The authors would like to thank Prof. Venugopal Veeravalli for stimulating discussions.

Appendix A

In this Appendix we state and prove two auxiliary lemmas that are used in the proofs of various results of this paper. Specifically, we fix , and for any , and any sampling rule we set

| (74) | ||||

and comparing with (10) we observe that

| (75) |

Lemma A.1

Let be an arbitrary sampling rule, , , , and . Then, the sequences

are exponentially decaying.

Proof:

We prove the result for the third probability, as the proofs for the other ones are similar. To lighten the notation, we suppress the dependence on and we write instead of . By (75), for every we have

which shows that if , then Thus, for every we have

and it suffices to show that the two terms in the upper bound are exponentially decaying. We show this only for the first one, as the proof for the second is similar. For this, we fix and we observe that, for any and , by Markov’s inequality we have

| (76) | ||||

By (74) and the law of iterated expectation it follows that the expectation in the upper bound can be written as follows:

| (77) |

Since is an -measurable Bernoulli random variable and is independent of and has the same distribution as , we also have

where is the cumulant generating function of , i.e.,

Since is convex on , , and

there is an such that , and as a result

Therefore, setting in (76)-(77) we obtain

Repeating the same argument times we conclude that there exists an such that

Since , this completes the proof.

∎

Lemma A.2

Let , , , , and let be an arbitrary sampling rule.

-

(i)

If and is summable, then so is

-

(ii)

If and is summable, then so is

-

(iii)

If and both and are summable, then so is

Proof:

For (i), by the law of total probability we have

The first term in the upper bound is summable by assumption, whereas the second one is summable, as exponentially decaying, by Lemma A.1.

The proof of (ii) is similar and is omitted. Since (iii) follows directly by (i) and (ii) when and are both positive, it suffices to consider the case where only one them is positive. Without loss of generality, we assume that . Then, by the law of total probability again we have

The first term in the upper bound is summable by assumption, whereas the second one is summable, as exponentially decaying, by Lemma A.1.

∎

Appendix B

In this Appendix we prove Theorems III.1 and IV.1, which provide sufficient conditions for the exponential consistency of a sampling rule. In order to lighten the notation, throughout this Appendix we suppress dependence on and we write , , , instead of , , , , , .

Proof:

We prove the result first when . By the definition of the decision rule in (13) it follows that when , there are , , such that , and as a result by the union bound we have

Therefore, to show that is exponentially decaying, it suffices to show that this is the case for for every and . To show this, we fix such and and we note that, by assumption, either or is exponentially decaying for small enough. Without loss of generality, suppose that this is the case for the former. By an application of the law of total probability we then obtain

As mentioned earlier, the second term in the upper bound is, by assumption, exponentially decaying for small enough. By Lemma A.1 it follows that this is also the case for the first one.

Suppose next that . By the definition of the decision rule in (17) it follows that when , there is an such that either for some , or for some . As a result, by the union bound we have

Therefore, to prove that is exponentially decaying, it suffices to show that this is true for and for every and . This can be shown similarly to the case , in view of the fact that the sequences and are, by assumption, both exponentially decaying for small enough and for every and .

The two remaining cases are and . We consider only the former, as the proof for the latter is similar, and assume that . By the definition of the decision rule in (13) it follows that when , there is an such that either for some and , or for some . As a result, by the union bound we have

Since , the sequence is, by assumption, exponentially decaying for every and small enough. Therefore, similarly to the previous cases we can show that each term in the upper bound is exponentially decaying.

∎

In the remainder of this Appendix we prove Theorem IV.1, whose proof relies on two preliminary lemmas. To state those, for every and we denote by the first time instance at which has been estimated as the subset of anomalous sources for at least times since , i.e.

| (78) |

where is an arbitrary constant, which in the proof of Theorem IV.1 will be selected to be small enough.

Lemma B.1

Let and . If , then

and

are both exponentially decaying for all small enough.

Proof:

We prove the two claims together by showing that is exponentially decaying for all small enough, where stands for either or . For any given we set

Then, on the event we have and consequently

where in the last inequality we have used the definition of . Consequently, for every and we obtain

Since , there is an such that for all we have

Therefore, it suffices to show that, for all , the sequence

| (79) |

is exponentially decaying. This follows by the fact that is a uniformly bounded martingale difference, in view of (22), and an application of the maximal Azuma-Hoeffding submartingale inequality [35, Remark 2.3].

∎

Lemma B.2

Let be such that and . If is a sampling rule that satisfies the conditions of Theorem IV.1, then the sequence is exponentially decaying.

Proof:

We consider first the case that , where we only prove the result when , as the proof when is similar. Since , and , there exists a . Since also , the assumptions of Theorem IV.1 imply that . Thus, by Lemma B.1 it follows that

| (80) |

is an exponentially decaying sequence for small enough, and it suffices to show that this is also the case for

| (81) |

Indeed, by the definition of in (78) it follows that on the event we have . Since and , by the definition of the decision rule in (17) it follows that . Therefore,

where the inequality holds because takes values in on the event . Therefore, it remains to show that the sequence

| (82) |

is exponentially decaying for small enough. Indeed, for large enough , implies that

For any given , there exists a so that for all large enough we have

| (83) |

and so that the probability in (82) is bounded by

For small enough, is small enough, and by Lemma A.1 it follows that the latter probability, and consequently (82), is exponentially decaying in .

It remains to prove the lemma when and . In this case there are and , and by the assumptions of Theorem IV.1 it follows that either or . Without loss of generality, we assume that the latter holds. Then, by Lemma B.1 it follows that (80) is exponentially decaying for all small enough, and it suffices to show that this is also the case for (81). Indeed, by the definition of in (78) it follows that on the event we have . Consequently, by the definition of the decision rule in (13) it follows that there is an such that . Therefore, by the union bound we have

where as before the inequality holds because takes values in on the event . Therefore, it remains to show that the sequence

is exponentially decaying for small enough. As before, this follows by an application of Lemma A.1. ∎

Proof:

Fix . By Theorem III.1 it suffices to show that, for all small enough, is an exponentially decaying sequence

-

•

for every , if , and for every , if , when ,

-

•

either for every or for every , when .

In order to do so, we select the positive constant in (78) to be smaller than . Then, for every there is at least one for which . As a result, for every and , by the union bound we have

Suppose first that . Then, it suffices to show that, for every and all small enough,

| (84) |

is exponentially decaying for every when and for every when . We only consider the former case, as the proof for the latter is similar. Thus, suppose that and let .

- •

- •

Suppose now that . Then, it suffices to show that (84) is exponentially decaying for every and all small enough, either for every or for every .

Appendix C

In this Appendix we fix and prove the universal asymptotic lower bound of Theorem V.2. The proof relies on two lemmas, for the statement of which we need to introduce the following function:

where , i.e., the Kullback-Leibler divergence between a Bernoulli distribution with parameter and one with parameter . Moreover, we set .

The first lemma states a non-asymptotic, information-theoretic inequality that generalizes the one used in Wald’s universal lower bound in the problem of testing two simple hypotheses [36, p. 156].

Lemma C.1

Let and let be a policy that satisfies the error constraint (3) and . Then, for any such that we have

| (85) |

Proof:

The proof is identical to that in the full sampling case in [6, Theorem 5.1], and can be obtained by an application of the data processing inequality for Kullback-Leibler divergences (see, e.g., [37, Lemma 3.2.1]). Indeed, the left-hand side is the Kullback-Leibler divergence between and given the available information up to time , when the sampling rule is utilized, whereas the right hand side is obtained by considering the Kullback-Leibler divergence between and based on a single event of . ∎

We next make use of the previous inequality to establish lower bounds on the expected number of samples taken from each source until stopping.

Lemma C.2

Let and let be a policy that satisfies the error constraint (3) and .

-

(i)

If , then

(86) -

(ii)

If , then

(87) -

(iii)

If either or , then

(88)

Proof:

We recall the sequence defined in (74), and note that it is a zero-mean, -martingale under . Moreover, by the finiteness of the Kullback-Leibler divergences in (1) we have:

Since also is an -stopping time such that , by the Optional Sampling Theorem [38, pg. 251] we obtain:

| (89) |

(i) If , there is a such that . By representation (11) and decomposition (75) it follows that the log-likelihood ratio process in (9) takes the form

By (85) and (89) we then obtain

Since this inequality holds for every , this completes the proof.

(ii) The proof is similar to (i) and is omitted.

For the proof of Theorem V.1 we introduce the following notation:

| (90) | ||||

Moreover, we observe that as we have

| (91) | ||||

| (92) |

Proof:

(i) Let such that and such that . By Lemma C.2(iii) it then follows that

and by constraint (4) we conclude that

Since the lower bound is independent of the policy , we have

Comparing with (30) and recalling (91), we can see that it suffices to show that with and as in (31)-(32). Indeed, the maximum in is achieved by ’s of the form

| (93) | ||||

for such that the constraint in is satisfied, i.e.,

| (94) |

and as a result,

This maximum is achieved by such that

, in particular by and equal to and as in (31)-(32), which completes the proof.

(ii) Suppose first that . As before, let such that and such that . Then, by Lemma C.2(i) and Lemma C.2(ii) we obtain:

and by constraint (4) we conclude that

| (95) | ||||

Since the lower bound does not depend on the policy we have

Comparing with (33) and recalling (91) we can see that it suffices to show that

as according to (7), with and as in (33). The equality follows directly from the values of and in (35). Moreover, the maximum in is achieved by of the form (93) that satisfy (94). Therefore:

and this maximum is achieved for and such that the two terms in the minimum are equal. As a result, we obtain

where and are equal to and in (33), with replaced by

. As and go to according to (7), we have (recall (92)), and consequently with as in (35).

Finally, we consider the case and omit the proof when , as it is similar. When either or , we have to show (34). Indeed, working as before, using Lemma C.2(i), we obtain

Comparing with (34), and recalling (91), we can see that it suffices to show that with as in (34). Indeed, the maximum in is achieved by of the form (93) with and such that (94) is satisfied, i.e.,

which shows that with as in (34) and completes the proof in this case.

It remains to establish the asymptotic lower bound when and , in which case we have to show (35)-(36). The asymptotic equivalence in (35) can be shown by direct evaluation, therefore it suffices to show only the asymptotic lower bound in this case.

Working as before, using Lemma C.2(i) and Lemma C.2(iii), for any such that we obtain

| (96) | ||||

The latter maximum is achieved by of the form (93) that satisfy (94), thus,

-

•

If or , the maximum in is achieved when the two terms in the minimum are equal. As a result, we have

(97) with and equal to and as in (35), but with replaced by .

- •

Therefore, letting and go to 0 in (96) according to (7), and recalling (91)-(92), proves the asymptotic lower bounds in both (35) and (36).

∎

Appendix D

In this Appendix we prove Theorems V.2 and V.3, which provide sufficient conditions for asymptotic optimality. In both proofs we recall that: for every in (resp. ) when (resp. ),

, when , and

when .

Proof:

We prove the theorem first when , where and go to 0 at arbitrary rates. By the asymptotic lower bound (30) in Theorem V.1 it follows that in this case it suffices to show

| (98) |

Then, for any small enough and we set

| (99) |

and observe that

| (100) |

For any , by the definition of in (12) it follows that on the event there are and such that , and as a result

Moreover, for any and we have

| (101) |

and consequently for every the series in (100) is bounded by

| (102) |

By the assumption of the theorem and an application of Lemma A.2(iii) with equal to (resp. ) when (resp. ) and equal to (resp. ) when (resp. ), it follows that the series in (102) converges. Thus, letting first and then in (100) proves that as we have

| (103) |

In view of (40) and the selection of threshold according to (18), this proves (98).

We next consider the case , where we let so that (7) holds for some . We prove the result when , as the proof when is similar. Thus, in what follows, , and as a result and for every . By the universal asymptotic lower bounds (33) and (34) it follows that when either or , it suffices to show that

| (104) |

On the other hand, by the universal asymptotic lower bounds (35) and (36) it follows that when , it suffices to show that

| (105) |

When in particular , the maximum is attained strictly by the first term, when , and , the maximum is attained strictly by the second term, whereas in all other cases the two terms are equal to a first-order asymptotic approximation.

We start by proving (104) when . In this case we also have , and consequently for every . Then, for small enough and we set

| (106) |

and observe that

| (107) |

By the definition of in (16) it follows that, for any , on the event there is either a such that , or an such that . As a result, by the union bound we obtain

Moreover, for any and any , we have and , which implies that the series in (107) is bounded by

| (108) | ||||

By the assumption of the theorem and an application of Lemma A.2(i) with and of Lemma A.2(ii) with it follows that (108) converges. Thus, letting first and then in (107) proves that as

In view of (40) and the selection of thresholds according to (19), this implies that

| (109) |

and proves (104).

The proof when , in which case , is similar, with the difference that we use

| (110) |

in the place of , and apply only Lemma A.2(i).

It remains to show that (105) holds when , in which case is not always positive. We recall the definitions of and in (99) and (110) and observe that for any small enough and we have

| (111) |

By the definition of in (16) it follows that, for any , on the event there are either and such that or such that , and as a result

Following similar steps as in the previous cases, applying in particular Lemma A.2(ii) with and Lemma A.2(iii) with and equal to (resp. ) when (resp. ), we conclude that as

In view of (40) and the selection of thresholds according to (19), this proves

(105).

∎

Proof:

Fix and a probabilistic sampling rule that satisfies (45). Since for every in (resp. ) when (resp. ), , when , and when , the exponentially consistency of under follows by an application of Theorem IV.1. To establish its asymptotic optimality, by Theorem V.2 it follows that it suffices to show that is an exponentially decaying sequence for every and such that . Fix such and . Then, there is an such that

| (112) |

By the definition of a probabilistic rule (recall (21)), is conditionally independent of given and its conditional distribution, , does not depend on . Thus, by [39, Prop. 6.13] there is a measurable function , which does not depend on , such that

where is a sequence of iid random variables, uniformly distributed in . Consequently, there is a measurable function such that

| (113) |

Then, for every we have

and as a result

| (114) | ||||

From (22) and (113) it follows that is a sequence of iid Bernoulli random variables with parameter , whereas by (45) and (112) it follows that . Therefore, by the Chernoff bound we conclude that the first term in the upper bound in (114) is exponentially decaying. The second term is bounded as follows

| (115) |

where the first inequality follows from the triangle inequality and the second by an application of the total probability rule on the event , in view of the fact that

By the exponentially consistency of , the upper bound in (D) is exponentially decaying, which means that the second term in the upper bound in (114) is also exponentially decaying, and this completes the proof. ∎

References

- [1] J. Stiles and T. Jernigan, “The basics of brain development,” Neuropsychology review, vol. 20, pp. 327–48, 11 2010.

- [2] R. J. Bolton and D. J. Hand, “Statistical Fraud Detection: A Review,” Statistical Science, vol. 17, no. 3, pp. 235 – 255, 2002. [Online]. Available: https://doi.org/10.1214/ss/1042727940

- [3] S. K. De and M. Baron, “Sequential bonferroni methods for multiple hypothesis testing with strong control of family-wise error rates i and ii,” Sequential Analysis, vol. 31, no. 2, pp. 238–262, 2012.

- [4] ——, “Step-up and step-down methods for testing multiple hypotheses in sequential experiments,” Journal of Statistical Planning and Inference, vol. 142, no. 7, pp. 2059–2070, 2012.

- [5] J. Bartroff and J. Song, “Sequential tests of multiple hypotheses controlling type i and ii familywise error rates,” Journal of statistical planning and inference, vol. 153, pp. 100–114, 2014.

- [6] Y. Song and G. Fellouris, “Asymptotically optimal, sequential, multiple testing procedures with prior information on the number of signals,” Electronic Journal of Statistics, vol. 11, 03 2016.

- [7] ——, “Sequential multiple testing with generalized error control: An asymptotic optimality theory,” The Annals of Statistics, vol. 47, no. 3, pp. 1776 – 1803, 2019. [Online]. Available: https://doi.org/10.1214/18-AOS1737

- [8] J. Bartroff and J. Song, “Sequential tests of multiple hypotheses controlling false discovery and nondiscovery rates,” Sequential Analysis, vol. 39, no. 1, pp. 65–91, 2020. [Online]. Available: https://doi.org/10.1080/07474946.2020.1726686

- [9] K. S. Zigangirov, “On a problem in optimal scanning,” Theory of Probability & Its Applications, vol. 11, no. 2, pp. 294–298, 1966. [Online]. Available: https://doi.org/10.1137/1111025

- [10] E. M. Klimko and J. Yackel, “Optimal search strategies for wienér processes,” Stochastic Processes and their Applications, vol. 3, pp. 19–33, 1975.

- [11] V. Dragalin, “A simple and effective scanning rule for a multi-channel system,” Metrika, vol. 43, pp. 165–182, 02 1996.

- [12] K. Cohen and Q. Zhao, “Asymptotically optimal anomaly detection via sequential testing,” IEEE Transactions on Signal Processing, vol. 63, no. 11, pp. 2929–2941, 2015.

- [13] B. Huang, K. Cohen, and Q. Zhao, “Active anomaly detection in heterogeneous processes,” IEEE Transactions on Information Theory, vol. 65, no. 4, pp. 2284–2301, 2019.

- [14] N. K. Vaidhiyan and R. Sundaresan, “Learning to detect an oddball target,” IEEE Transactions on Information Theory, vol. 64, no. 2, pp. 831–852, 2018.

- [15] A. Gurevich, K. Cohen, and Q. Zhao, “Sequential anomaly detection under a nonlinear system cost,” IEEE Transactions on Signal Processing, vol. 67, no. 14, pp. 3689–3703, 2019.

- [16] A. Tsopelakos, G. Fellouris, and V. V. Veeravalli, “Sequential anomaly detection with observation control,” in 2019 IEEE International Symposium on Information Theory (ISIT), 2019, pp. 2389–2393.

- [17] B. Hemo, T. Gafni, K. Cohen, and Q. Zhao, “Searching for anomalies over composite hypotheses,” IEEE Transactions on Signal Processing, vol. 68, pp. 1181–1196, 2020.

- [18] H. Chernoff, “Sequential design of experiments,” Ann. Math. Statist., vol. 30, no. 3, pp. 755–770, 09 1959. [Online]. Available: http://dx.doi.org/10.1214/aoms/1177706205

- [19] A. E. Albert, “The Sequential Design of Experiments for Infinitely Many States of Nature,” The Annals of Mathematical Statistics, vol. 32, no. 3, pp. 774 – 799, 1961. [Online]. Available: https://doi.org/10.1214/aoms/1177704973

- [20] S. A. Bessler, “Theory and applications of the sequential design of experiments, k-actions and infinitely many experiments, part i theory.” Department of Statistics, Stanford University, Technical Report 55, 1960.

- [21] ——, “Theory and applications of the sequential design of experiments, k-actions and infinitely many experiments, part ii applications.” Department of Statistics, Stanford University, Technical Report 56, 1960.

- [22] J. Kiefer and J. Sacks, “Asymptotically Optimum Sequential Inference and Design,” The Annals of Mathematical Statistics, vol. 34, no. 3, pp. 705 – 750, 1963. [Online]. Available: https://doi.org/10.1214/aoms/1177704000

- [23] R. Keener, “Second Order Efficiency in the Sequential Design of Experiments,” The Annals of Statistics, vol. 12, no. 2, pp. 510 – 532, 1984. [Online]. Available: https://doi.org/10.1214/aos/1176346503

- [24] S. P. Lalley and G. Lorden, “A Control Problem Arising in the Sequential Design of Experiments,” The Annals of Probability, vol. 14, no. 1, pp. 136 – 172, 1986. [Online]. Available: https://doi.org/10.1214/aop/1176992620

- [25] S. Nitinawarat, G. K. Atia, and V. V. Veeravalli, “Controlled sensing for multihypothesis testing,” IEEE Transactions on Automatic Control, vol. 58, no. 10, pp. 2451–2464, 2013.

- [26] M. Naghshvar and T. Javidi, “Active sequential hypothesis testing,” The Annals of Statistics, vol. 41, no. 6, pp. 2703–2738, 2013.

- [27] S. Nitinawarat and V. V. Veeravalli, “Controlled sensing for sequential multihypothesis testing with controlled markovian observations and non-uniform control cost,” Sequential Analysis, vol. 34, no. 1, pp. 1–24, 2015. [Online]. Available: https://doi.org/10.1080/07474946.2014.961864

- [28] A. Deshmukh, V. V. Veeravalli, and S. Bhashyam, “Sequential controlled sensing for composite multihypothesis testing,” Sequential Analysis, vol. 40, no. 2, pp. 259–289, 2021. [Online]. Available: https://doi.org/10.1080/07474946.2021.1912525

- [29] A. Garivier and E. Kaufmann, “Optimal best arm identification with fixed confidence,” in Conference on Learning Theory. PMLR, 2016, pp. 998–1027.

- [30] S. I. Resnick, Adventures in stochastic processes. Springer Science & Business Media, 1992.

- [31] G. Hommel and T. Hoffmann, “Controlled uncertainty,” in Multiple Hypothesenprüfung/Multiple Hypotheses Testing. Springer, 1988, pp. 154–161.

- [32] E. L. Lehmann and J. P. Romano, “Generalizations of the familywise error rate,” Ann. Statist., vol. 33, no. 3, pp. 1138–1154, 06 2005. [Online]. Available: http://dx.doi.org/10.1214/009053605000000084

- [33] A. Tsopelakos and G. Fellouris, “Sequential anomaly detection with observation control under a generalized error metric,” in 2020 IEEE International Symposium on Information Theory (ISIT), 2020, pp. 1165–1170.

- [34] J. Heydari, A. Tajer, and H. V. Poor, “Quickest linear search over correlated sequences,” IEEE Transactions on Information Theory, vol. 62, no. 10, pp. 5786–5808, 2016.

- [35] M. Raginsky and I. Sason, “Concentration of measure inequalities in information theory, communications, and coding,” Foundations and Trends® in Communications and Information Theory, vol. 10, no. 1-2, pp. 1–246, 2013. [Online]. Available: http://dx.doi.org/10.1561/0100000064

- [36] A. Wald, “Sequential tests of statistical hypotheses,” The Annals of Mathematical Statistics, vol. 16, no. 2, pp. 117–186, 1945.

- [37] A. Tartakovsky, I. Nikiforov, and M. Basseville, Sequential analysis: Hypothesis testing and changepoint detection. CRC press, 2015.

- [38] Y. Chow and H. Teicher, Probability Theory: Independence, Interchangeability, Martingales, ser. Springer Texts in Statistics. Springer New York, 2012. [Online]. Available: https://books.google.com/books?id=213dBwAAQBAJ

- [39] O. Kallenberg, Foundations of Modern Probability, ser. Probability and Its Applications. Springer New York, 2002. [Online]. Available: https://books.google.com/books?id=TBgFslMy8V4C