Sensor Fault Detection and Isolation via Networked Estimation: Full-Rank Dynamical Systems

Abstract

This paper considers the problem of simultaneous sensor fault detection, isolation, and networked estimation of linear full-rank dynamical systems. The proposed networked estimator is a variant of the single time-scale protocol and is based on (i) consensus on a-priori estimates and (ii) measurement innovation. The necessary connectivity condition on the sensor network and the stabilizing block-diagonal gain matrix are derived based on our previous works. Considering additive faults in the presence of system and measurement noise, the estimation error at sensors is derived and proper residuals are defined for fault detection. Unlike many works in the literature, no simplifying upper-bound condition on the noise is considered and we assume Gaussian system/measurement noise. A probabilistic threshold is then defined for fault detection based on the estimation error covariance norm. Finally, a graph-theoretic sensor replacement scenario is proposed to recover possible loss of networked observability due to removing the faulty sensor. We examine the proposed fault detection and isolation scheme on an illustrative academic example to verify the results and compare it with related literature.

Keywords: Fault Detection and Isolation, Observability, Networked Estimation, System Digraph, Residual

I Introduction

Fault Detection and Isolation (FDI) is an emerging topic in the recent control and signal-processing literature [1]. In general, a fault is characterized as an unpermitted deviation or malfunction of (at least) one of the system parameters/properties from its standard condition, which may take place at the plant, sensor, or actuator. Fault Detection, Isolation, and Reconfiguration (FDIR) is therefore a methodology to ensure acceptable system operation and to compensate for the occurrence of faults detected by the FDI module [1]. Fault diagnosis schemes range from centralized approaches [2, 3, 4] to more recent distributed methods [5, 6, 7, 8, 9, 10, 11]. Indeed, with recent technological trends, many practical systems of interest are either large-scale or physically distributed, and thus distributed or networked FDI strategies are required. Toward this goal, this paper investigates the problem of simultaneous sensor fault detection, isolation, and networked estimation of dynamical systems.

Fault detection and isolation in networked dynamical systems has been extensively studied in the literature. In [6], an FDI strategy is proposed for a distributed heterogeneous multi-agent system, and in [7], fault detection of a group of interconnected noise-free individual subsystems is considered such that each subsystem is able to detect faults in its neighborhood. In [8], an FDI problem for an interconnected double-integrator system under unknown system faults and fault-free measurements is investigated such that almost all faults, except the faults with zero dynamics, can be detected. Similarly, in [9], a faulty second-order dynamical system (representing a heterogeneous multi-agent system) is considered with bounded disturbance and fault-free measurements, and a distributed observer-based FDI strategy is proposed. The case of homogeneous multi-agent linear systems under upper-bounded system noise is considered in [10], where a distributed observer-based FDI scheme based on fault-free measurements is developed to detect faults at agents. FDI for noise-free faulty systems monitored by a multi-agent network communicating under an event-triggered framework is considered in [11]. It should be noted that most of these works focus mainly on fault detection and do not consider state estimation of the underlying dynamical system.

The other related trend in the literature is attack detection and resilient/secure estimation, both centralized [12, 13, 14, 15, 16, 17, 18] and distributed [19, 20, 21, 22, 23, 24, 25, 26, 27]. Distributed estimation strategies are more prominent in recent years due to emergence of large-scale applications. In [19], a distributed observer is proposed to detect admissible biasing attacks over distributed estimation networks by introducing an auxiliary input tracking model. In [20], both false-data injection attack at the system/process and jamming attack at the communication network of sensors are considered and in [21], a distributed gradient descent protocol is adopted to optimize the norm of the covariance of the measurement updates as the cost function. In [22], a distributed method is proposed to estimate the system state over a k-regular sensor network under attacked noise-free measurements and in [23], a distributed resilient estimation scheme is proposed where the time-scale of the sensors (as estimators) needs to be faster than the system dynamics.

Secure distributed estimation of cyber-physical systems composed of interconnected subsystems, each monitored by a sensor under possible unidentifiable attack, is discussed in [24]. In [25], secure distributed estimators for noise-free systems and measurements are proposed over dynamic sensor networks subject to communication loss. Other relevant works include secure estimation based on reachability analysis in [26] and static parameter estimation in [27]. Note that most of these works propose a resilient estimation protocol under sensor attack, where no attack detection and isolation scheme is considered. In general, distributed fault/attack detection finds application in cases where a centralized architecture is not possible or not desirable, as in smart-grid monitoring [28], large-scale wind-farms [29], and networked unmanned vehicles [30].

In this paper, a quantitative model-based method is adopted, as in the observer-based methods, in which an explicit mathematical model and control-theoretic tools are used to generate residuals for sensor fault detection. The proposed observer/estimator in this paper is distributed/networked, which finds application in large-scale architectures. Similar networked estimators are proposed in the literature [31, 32, 33, 34, 35, 36, 37]. A single time-scale networked estimator is developed to track the global state of the dynamical system over a distributed network of sensors, each taking local measurements with partial observability. Unlike some literature [33, 34, 32], it is not assumed that the system is observable in the neighborhood of each sensor, i.e., the system is not necessarily observable from the measurements directly shared with the sensor. This significantly reduces the connectivity requirements on the sensor network, and therefore reduces the network communication costs. Similar to [35, 36, 37], it is assumed that the underlying dynamical system is full-rank, and conditions for networked observability are developed.

The design procedure in this paper is based on structured system theory, previously developed in [38, 39]. Structural (or generic) analysis is widely used in system theory, namely in fault detection [40, 41] and in distributed observability characterization [42]. Using structural analysis, the structure of the sensor network and local estimator gain can be designed to satisfy distributed observability. However, in this paper possible additive faults in sensors are considered and by mathematically deriving the estimation error and sensor residuals, probabilistic thresholds on the residuals are obtained based on error covariance, which leads to the fault detection and isolation logic. Finally, after detecting and isolating the faulty sensor, a graph-theoretic method is proposed to replace the faulty sensor with an observationally equivalent state measurement to compensate the loss of observability. To summarize, the main contributions of this paper as compared with related literature are as follows:

• Considering system/process and measurement noise is a challenge in FDI strategies. This is mainly due to the fact that it is generally hard to distinguish between the presence of faults and system/measurement noise, as both fault and noise terms may affect the sensor residuals. In this direction, some works in the literature [7, 18, 11, 25] assume that the system and/or sensor measurements are noise-free, which is a simplifying assumption. In this paper, we consider both system and measurement noise and propose a probability-based threshold design on the residuals to overcome this challenge.

• Following the above comment, many works in the FDI and attack detection literature [43, 12, 13, 44, 45, 10] assume that the noise variable is upper-bounded, i.e., the noise term takes values only in a limited range rather than, for example, being drawn from a Gaussian distribution. This simplifying assumption helps to design deterministic thresholds on the residuals. In this paper, no such assumption is made, and the noise is assumed to be a Gaussian random variable with no upper-bound, which is more realistic as compared to the mentioned references.

• In this paper, a general LTI system is considered, which generalizes the multi-agent systems or interconnected systems each possessing separate dynamics considered in [6, 7, 10], and the double-integrator system in [8, 9]. Furthermore, the sensor network is considered as a Strongly-Connected (SC) graph, as compared to the restrictive regularity condition considered in [22].

The rest of this paper is organized as follows. In Section II, the general framework and the problem statement are presented. Section III provides the networked estimator scenario and develops the condition for error stability. In Section IV, the algorithm for block-diagonal gain design at sensors is provided. Section V develops the sensor fault detection and isolation logic, and in Section VI, a graph-theoretic method for observability compensation is discussed. Section VII gives a simulation example to illustrate the results. Finally, Section VIII concludes the paper.

II The Framework

We consider noisy discrete-time linear systems of the form,

$$x_{k+1} = A x_k + v_k, \tag{1}$$

where $x_k \in \mathbb{R}^n$ is the system state and $v_k \in \mathbb{R}^n$ is the system noise at time-step $k$. In this paper, the underlying system matrix $A$ is considered to be full-rank; examples of such full-rank systems are self-damped dynamical systems [48]. It should be noted that the LTI system (1) can also be obtained by the discretization of a continuous-time LTI system, based on the Euler or Tustin discretization methods discussed in [48], and both of these methods result in a full-rank discrete-time LTI system. Moreover, the full-rank condition can be inherent to the system dynamics, such as in the Nearly-Constant-Velocity (NCV) model for target dynamics in distributed tracking scenarios [49]. In [49], the target is modeled as a discrete-time dynamical system whose associated matrix is full-rank due to its non-zero diagonal entries. The system full-rank condition is also considered in the networked estimation literature, as in [35, 36, 37].

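The noise/fault-corrupted measurements of the system are taken by $N$ sensors,

$$y^i_k = H_i x_k + r^i_k + f^i_k, \quad i = 1, \dots, N, \tag{17}$$

which can be written as the global measurement equation as follows:

$$y_k = H x_k + r_k + f_k, \tag{18}$$

where $H = [H_1^\top, \dots, H_N^\top]^\top$, $r_k$ is the measurement noise, and $f_k$ represents the sensor fault vector. It is assumed that $v_k$ and $r_k$ are zero-mean Gaussian white noise, i.e., $v_k \sim \mathcal{N}(0, V)$ for all $k$, and $\mathbb{E}[v_k v_j^\top] = 0$ for all $k \neq j$, and similarly for the measurement noise $r_k \sim \mathcal{N}(0, R)$. Further, without loss of generality, it is assumed that each sensor measures one of the system states.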

The general problem in this paper is to design a stable networked estimation protocol in the absence of faults and then a fault detection and isolation logic to detect possible faults in sensors. Given the system and measurements as in (1) and (18), a group of sensors is considered, each equipped with communication and computation capabilities to measure a state of the dynamical system and to process the measured data together with the information received from the other sensors in order to estimate the global state of the dynamical system.

Note that unlike many works in the literature [33, 34, 32], no assumption is made on the observability of the system in the direct neighborhood of each sensor, which implies that only minimal connectivity of the communication network of sensors is required. Each sensor adopts a single time-scale networked estimation protocol and, by proper design of the communication network structure and the feedback gain matrix, each sensor tracks the system state with bounded steady-state estimation error in the fault-free case. In case of fault occurrence at any sensor, i.e., a nonzero additive fault term in its measurement, a residual-based fault detection and isolation logic is proposed to detect the faulty sensor by comparing the sensor residuals with pre-specified thresholds. Note that unlike many works in the literature [43, 12, 13, 44, 45], it is not assumed that the system noise and/or measurement noise are upper-bounded. Finally, after detecting the faulty sensor, one may compensate for possible loss of observability in the distributed system by introducing a new sensor measurement replacing the faulty sensor.
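The setup of this section can be illustrated with a minimal simulation sketch. It assumes the system has the additive-noise linear form described for (1), that each sensor reads a single state with unit measurement entry, and that the fault enters additively as in (18). The function names `simulate` and `mat_vec`, the scalar noise levels `sigma_v` and `sigma_r`, and the `fault(k, i)` callback are hypothetical names chosen for illustration, not part of the paper.

```python
import random

def mat_vec(M, x):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def simulate(A, measured, x0, steps, sigma_v, sigma_r, fault=None, seed=0):
    """Simulate a noisy linear system with one scalar measurement per sensor.

    Assumptions (illustrative only): measured[i] is the index of the state
    read by sensor i with unit measurement entry; fault(k, i) returns the
    additive fault at sensor i and time-step k (zero by default).
    """
    rng = random.Random(seed)
    fault = fault or (lambda k, i: 0.0)
    x, states, outputs = list(x0), [], []
    for k in range(steps):
        # Measurement: measured state + Gaussian noise + additive fault
        y = [x[s] + rng.gauss(0.0, sigma_r) + fault(k, i)
             for i, s in enumerate(measured)]
        states.append(list(x))
        outputs.append(y)
        # State update: linear dynamics + Gaussian process noise
        x = [xi + rng.gauss(0.0, sigma_v) for xi in mat_vec(A, x)]
    return states, outputs
```

For instance, `simulate(A, [0, 1], x0, 50, 0.1, 0.1, fault=lambda k, i: 5.0 if (i == 1 and k >= 20) else 0.0)` would inject a constant bias at the second sensor from time-step 20 onward; the magnitudes here are arbitrary and not taken from the paper's simulation.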

III Networked Estimation Protocol

In this section, we present our main tool for diagnosis, a single time-scale networked estimation protocol, and analyze its error stability criteria. The networked estimator is based on collaborative consensus on the a-priori estimates at sensors and consists of two steps as follows,

$$\hat{x}^i_{k|k-1} = \sum_{j \in \mathcal{N}(i)} w_{ij} A \hat{x}^j_{k-1|k-1}, \tag{19}$$

$$\hat{x}^i_{k|k} = \hat{x}^i_{k|k-1} + K_i H_i^\top \left( y^i_k - H_i \hat{x}^i_{k|k-1} \right), \tag{20}$$

where $\hat{x}^i_{k|k-1}$ is the estimate of state $x_k$ at sensor $i$ given all the measurements up to time $k-1$, $\hat{x}^i_{k|k}$ is the estimated state given all the measurements up to time $k$, the matrix $W = [w_{ij}]$ is a stochastic matrix for consensus on a-priori estimates, and $K_i$ is the gain matrix at sensor $i$. Note that the row-stochastic condition on $W$ is a necessary condition for (consensus) averaging of a-priori estimates. Recall that a matrix is row-stochastic if the entries in each row sum to $1$, i.e., $\sum_{j=1}^{N} w_{ij} = 1$. Further, $\mathcal{N}(i)$ defines the neighborhood of sensor $i$ over which the sensor shares a-priori estimates with the neighboring sensors, and this neighborhood follows the structure of matrix $W$. In fact, $\mathcal{N}(i) = \{ j \mid w_{ij} \neq 0 \}$, where $j \in \mathcal{N}(i)$ implies that sensor $j$ sends its information to $i$, and $\mathcal{N}(i)$ also includes the sensor $i$ itself, i.e., all the diagonal entries of the matrix $W$ are non-zero. This is due to the fact that each sensor $i$ uses its own estimate at the previous time, $\hat{x}^i_{k-1|k-1}$, to calculate its a-priori estimate $\hat{x}^i_{k|k-1}$.

It should be noted that the protocol (19)-(20) differs from our previous works [38, 39] in two aspects: (i) the protocol in [38, 39] has one more consensus step on the measurement fusion, in which the neighboring measurements are shared over a different (hub-based) network, while the protocol (19)-(20) only includes one step of averaging on a-priori estimates. Therefore, the network connectivity condition in this paper is more relaxed as compared to [38, 39]; (ii) the distributed approach in [38, 39] is fault-free, i.e., the sensor measurements are not accompanied by additive faults, and consequently the error dynamics analysis is different from this work.

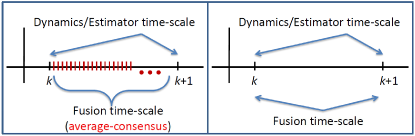

Note that the estimator (19)-(20) is single time-scale, as opposed to the multi time-scale networked estimation given in [50, 23], where many steps of averaging (or consensus) are performed between every two successive time-steps of the system dynamics (see Fig. 1).

It is known that the single time-scale approach is preferable to the multi time-scale method, since the latter requires a large number of information exchanges over the sensor network between every two successive time-steps of the system dynamics. This implies that the communication time-scale needs to be much faster than the dynamics, which is not desirable and may not even be feasible for many large-scale real-time systems. To elaborate, note that in protocol (19)-(20) only one step of averaging on a-priori estimates is done between two successive time-steps, while the protocol in [50] requires many steps of consensus between the same two time-steps. In multi time-scale estimation, the connectivity requirement on the sensor network is more relaxed due to more information exchanges among the sensors, while in the single time-scale method the sensor network requires more connectivity, particularly for rank-deficient dynamical systems. In fact, it is proved that an SC network may not guarantee error stability for rank-deficient dynamical systems and a certain hub-based network design is necessary; see [38, 39] for details.
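As a rough illustration of a single time-scale update, the sketch below performs one round of consensus on a-priori estimates followed by one measurement-innovation correction at every sensor. It assumes the two-step structure described for (19)-(20), a row-stochastic weight matrix `W`, and one measured state per sensor with unit measurement entry, so that the gain acts through a single column of the local gain matrix. The function and argument names are hypothetical.

```python
def mat_vec(M, x):
    """Multiply a dense matrix (list of rows) by a vector."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def estimator_step(A, W, measured, K, prev_est, y):
    """One single time-scale step at all sensors (illustrative sketch).

    prev_est[j] : estimate held by sensor j at the previous time-step
    W           : row-stochastic consensus weights (W[i][j] != 0 for neighbors)
    measured[i] : index of the state measured by sensor i (unit entry assumed)
    K[i]        : n-by-n local gain matrix of sensor i
    y[i]        : scalar measurement of sensor i at the current time-step
    """
    N, n = len(prev_est), len(A)
    propagated = [mat_vec(A, xj) for xj in prev_est]
    new_est = []
    for i in range(N):
        # A-priori estimate: weighted average of neighbors' propagated estimates
        prior = [sum(W[i][j] * propagated[j][s] for j in range(N))
                 for s in range(n)]
        # Innovation: local measurement minus predicted measurement
        innovation = y[i] - prior[measured[i]]
        # With a unit measurement row, the gain acts via column measured[i]
        new_est.append([prior[s] + K[i][s][measured[i]] * innovation
                        for s in range(n)])
    return new_est
```

Only one exchange of estimates per system time-step is needed here, which is the property that distinguishes this scheme from multi time-scale protocols.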

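Define the error at time-step $k$ at sensor $i$ as,

$$e^i_k = x_k - \hat{x}^i_{k|k}. \tag{21}$$

By substituting (1), (17), and (19)-(20) in the above, it follows that:

$$e^i_k = x_k - \sum_{j \in \mathcal{N}(i)} w_{ij} A \hat{x}^j_{k-1|k-1} - K_i H_i^\top \Big( H_i x_k + r^i_k + f^i_k - H_i \sum_{j \in \mathcal{N}(i)} w_{ij} A \hat{x}^j_{k-1|k-1} \Big).$$

Recall that the row-stochastic condition of $W$ implies that $\sum_{j=1}^{N} w_{ij} = 1$. Note that for $j \in \mathcal{N}(i)$, $w_{ij} \neq 0$, and for $j \notin \mathcal{N}(i)$, $w_{ij} = 0$. Therefore, the row-stochastic condition can be re-written as $\sum_{j \in \mathcal{N}(i)} w_{ij} = 1$. Based on this fact, $x_k = A x_{k-1} + v_{k-1} = \sum_{j \in \mathcal{N}(i)} w_{ij} A x_{k-1} + v_{k-1}$, and it follows that (see [39] for similar analysis):

$$e^i_k = \sum_{j \in \mathcal{N}(i)} w_{ij} A e^j_{k-1} + v_{k-1} - K_i H_i^\top \Big( H_i \sum_{j \in \mathcal{N}(i)} w_{ij} A e^j_{k-1} + H_i v_{k-1} + r^i_k + f^i_k \Big),$$

and consequently:

$$e^i_k = \sum_{j \in \mathcal{N}(i)} w_{ij} A e^j_{k-1} - K_i H_i^\top H_i \sum_{j \in \mathcal{N}(i)} w_{ij} A e^j_{k-1} + q^i_k,$$

where $q^i_k$ collects the noise terms and fault term as follows:

$$q^i_k = v_{k-1} - K_i \left( H_i^\top H_i v_{k-1} + H_i^\top r^i_k + H_i^\top f^i_k \right). \tag{22}$$

The collective error at the group of sensors is defined as the concatenation of errors at all sensors, i.e., $e_k = [(e^1_k)^\top, \dots, (e^N_k)^\top]^\top$. For the collective error, it follows that:

$$e_k = \hat{A} e_{k-1} + q_k, \tag{23}$$

where $\hat{A} = W \otimes A - K D_H (W \otimes A)$ with $K = \mathrm{blockdiag}[K_i]$ and $D_H = \mathrm{blockdiag}[H_i^\top H_i]$. The collective noise vector is given as:

$$q_k = \mathbf{1}_N \otimes v_{k-1} - K \left( D_H (\mathbf{1}_N \otimes v_{k-1}) + \bar{D}_H r_k + \bar{D}_H f_k \right), \tag{24}$$

where $\mathbf{1}_N$ is the vector of 1s of size $N$ and $\bar{D}_H = \mathrm{blockdiag}[H_i^\top]$.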

To stabilize the error dynamics (23), it is necessary that the pair $(W \otimes A, D_H)$ be observable [51]. Note that the observability analysis of the Kronecker product of matrices (and the associated composite graph) is discussed in detail in [52], where it is proved that for observability of $(W \otimes A, D_H)$, the matrix $W$ needs to be irreducible. This implies that the sensor network needs to be Strongly-Connected (SC). In fact, this is also discussed in [38], and since the protocol (19)-(20) is a variant of the protocol in [38], the same analysis can be adopted here to prove the irreducibility of the $W$ matrix. Therefore, any irreducible matrix (associated with an SC network) with non-zero diagonal entries that satisfies the row-stochastic condition can serve as the $W$ matrix. The SC connectivity of the sensor network is also considered in similar networked estimation literature; see for example [35, 36, 37]. Note that $(W \otimes A, D_H)$-observability is also referred to as the networked observability (or distributed observability) condition. As explained in the next section, with $(W \otimes A, D_H)$-observability satisfied, the block-diagonal gain matrix $K$ can be designed using Linear-Matrix-Inequalities (LMI) such that the closed-loop matrix $\hat{A}$ in (23) is a Schur matrix [38, 53, 54], i.e., $\rho(\hat{A}) < 1$, where $\rho(\cdot)$ defines the spectral radius of a matrix.
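The structural requirements on the consensus matrix stated above (row-stochastic, non-zero diagonal, irreducible) can be checked with a short sketch. It tests irreducibility through strong connectivity of the graph induced by the non-zero entries, using reachability from one node in both the graph and its reverse. The function names are hypothetical, and non-negativity of the weights is an added assumption of this sketch.

```python
def is_row_stochastic(W, tol=1e-9):
    """Each row sums to 1 and all weights are non-negative (assumed here)."""
    return all(abs(sum(row) - 1.0) <= tol and all(w >= 0 for w in row)
               for row in W)

def is_strongly_connected(W):
    """Strong connectivity of the graph with a link wherever W is non-zero."""
    N = len(W)

    def reaches_all(linked):
        seen, stack = {0}, [0]
        while stack:
            u = stack.pop()
            for v in range(N):
                if linked(u, v) and v not in seen:
                    seen.add(v)
                    stack.append(v)
        return len(seen) == N

    # Node 0 must reach every node in the graph and in the reversed graph
    return (reaches_all(lambda u, v: W[u][v] != 0)
            and reaches_all(lambda u, v: W[v][u] != 0))

def is_valid_consensus_matrix(W):
    """Row-stochastic, non-zero diagonal, and irreducible (SC network)."""
    has_self_loops = all(W[i][i] != 0 for i in range(len(W)))
    return is_row_stochastic(W) and has_self_loops and is_strongly_connected(W)
```

A directed cycle with self-loops, for example `[[0.5, 0.5, 0], [0, 0.5, 0.5], [0.5, 0, 0.5]]`, passes this check, whereas a directed chain with no return path does not.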

IV Design of Block-Diagonal Feedback Gain based on LMI Approach

In this section, we discuss the methodology for computation of a block-diagonal estimator gain . Note that, the observability of in the distributed estimator (19)-(20) guarantees the existence of a full matrix , such that . However, for having a distributed approach, the gain matrix is required to be block-diagonal. Such is known to be the solution of an LMI as follows:

| (25) |

where and with denoting positive definiteness. Note that the left-hand-side of the above equation is nonlinear in ; however its equivalent solution is proposed in the literature [55, 56] as the right-hand-side in (25) with . Now, having the above LMI to be linear in , we note that the constraint is a non-convex constraint. However, this constraint can be approximated with a linear function of the matrices , satisfy as the optimal point of the following optimization problem [54]:

| (26) | ||||

| s.t. | ||||

with . Overall, the optimization is summarized as follows; with observability, the gain matrix is the solution to the following:

| (27) | ||||

| s.t. | ||||

It should be mentioned that a solution of the second LMI is equivalent to , which results in an optimal value for minimum trace as . Furthermore, can be replaced with the linear approximation [54], and the iterative Algorithm 1 can be used to minimize this problem under given constraints.

In [54], it is shown that is non-increasing and converges to . In this regard, a stopping criterion of the algorithm is established as reaching within of the trace objective. Interested readers may refer to [53, 55, 56, 54, 57] for more details. It should be noted that this algorithm (and in general similar cone-complementarity algorithms) are of polynomial-order complexity, see [58, 59].

V Sensor Fault Detection and Isolation

In this section, we present our results on the development of sensor fault detection and isolation scheme for the considered system. Following the terminology in [60], given the estimated state , define the estimated output at sensor as . Note that for fault-free case is steady-state stable and bounded by proper design of and matrices. Thus, define the residual signal at sensor at time-step as,

| (28) |

where is the th hyper-row of the matrix defined as the block of rows from row to row . Moreover, can be written as:

| (29) |

Assume that sensor is faulty at some time-interval, i.e. for . Note that, considering (28) and (29), the estimation error is now affected by the sensor fault. In this case for the residual at sensor , the term and in (28) and (29), while for other sensors . Note that among many possible choices for the gain matrix , it can be designed such that the term is large enough to make more affected by the fault . Thus, although may also appear in and , since the term in (28) remains small and negligible as compared to larger values of and . Therefore, by Schur stability of matrix and proper choice of the gain matrix , the residual at faulty sensor is more affected while for the other sensors , the residuals are less affected since . The fault detection and isolation logic is therefore as follows: if the residual at sensor exceeds a predefined threshold then sensor is faulty. Thus, we need to define the thresholds on residuals for fault detection and isolation. In this direction, considering Gaussian noise for system dynamics and for measurement noise, first the variance of the estimation error is obtained and consequently one can define a threshold such that to detect faults whenever the residual exceeds this threshold.

Let and . Then, it follows that:

| (30) |

Recall that , in the steady-state we have,

Let , then using the result of [61] it can be proved that,

| (31) |

On the other hand, for the fault-free case,

| (32) |

where is by matrix of s. Then,

| (33) |

The -norm of is upper-bounded by,

| (34) |

where . Let , , and . Then, from (31) and scaling the error covariance by (number of sensors),

| (35) |

In fact, equation (35) gives an upper-bound on the variance of estimation error at each sensor . Following the Gaussianity of estimation error, one can claim that with probability more than the estimation error lies within . It can be proved that in the steady state, the fault-free estimation error is unbiased, i.e. [61]. Therefore, one can claim that with probability more than we have where the constant is the absolute value of the measurement vector (with the assumption of having one state measurement by every sensor, is the absolute value of the nonzero entry of ). Similarly, with probability more than , the residual lies below , i.e. , and with probability more than we have , etc. Therefore, in the presence of possible sensor faults, one can detect and isolate sensor faults by comparing thresholds with the residuals based on these probabilities. In other words, considering as threshold, one can claim fault detection with probability whenever the residual goes over this threshold. In this case, the probability of false alarm is less than . Similarly, stronger thresholds for fault detection and isolation can be defined as , , etc.
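The probability-based threshold logic can be sketched as follows. For a zero-mean Gaussian variable, the probability of lying within `k` standard deviations is `erf(k / sqrt(2))`, which gives roughly 68%, 95%, and 99.7% for `k = 1, 2, 3`. The sketch assumes that a bound on the residual standard deviation (called `sigma_bound` here, a hypothetical name standing in for the bound obtained from (35) scaled by the measurement entry) is already available; it does not reproduce the paper's exact threshold expressions.

```python
import math

def gaussian_coverage(k):
    """P(|z| <= k * sigma) for a zero-mean Gaussian variable z."""
    return math.erf(k / math.sqrt(2.0))

def detect_faults(residuals, sigma_bound, k=2.0):
    """Flag sensors whose residual magnitude exceeds k * sigma_bound.

    residuals[i] is the scalar residual of sensor i at the current time-step.
    sigma_bound is an assumed upper bound on the fault-free residual standard
    deviation. Returns the flagged sensor indices, the threshold, and an
    upper estimate of the false-alarm probability under the Gaussian model.
    """
    threshold = k * sigma_bound
    flagged = [i for i, r in enumerate(residuals) if abs(r) > threshold]
    false_alarm = 1.0 - gaussian_coverage(k)
    return flagged, threshold, false_alarm
```

Choosing a larger `k` lowers the false-alarm probability at the cost of missing smaller faults, which mirrors the trade-off between the weaker and stronger thresholds discussed above.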

VI Sensor Replacement for Observability Recovery

The measurement of faulty sensors may be compensated in terms of observability recovery, and in this direction the concept of observational equivalence is relevant [46, 47]. The faulty sensor measurement can be replaced by a measurement of an equivalent state to recover the loss of observability. Note that in this paper, to avoid the trivial case, it is assumed that the fault is inherent to the measurement of the specific state measured by the faulty sensor. This could be due to, for example, the environmental condition at the sensor location resulting in the fault/anomaly at the sensor.

Extending the results of [62] to dual case of network observability, the set of necessary sensor measurements can be found over digraph representation of the dynamical system. Note that the system digraph, denoted by , is defined as the graph associated with the system matrix , or the structured system matrix representing the zero-nonzero pattern of . In every state is represented by a node and every non-zero entry (or ) is represented by a link from node to node . It is known that many generic properties of the system, including observability and controllability, can be defined over this graph [63]. As an example, consider the following structured system matrix,

| (48) |

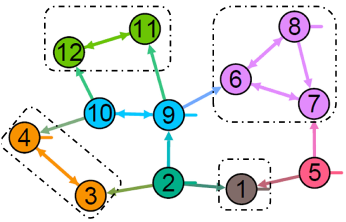

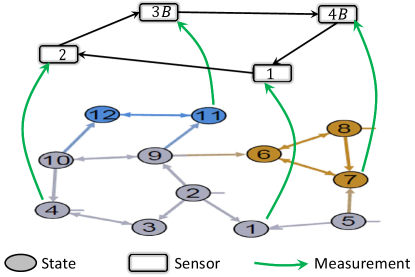

where represents a non-zero entry and as an example a non-zero entry in implies a link from node to node in . The system digraph associated to (48) is shown in Fig. 2.

In the system digraph, , a Strongly-Connected-Component (SCC) denoted by is defined as a subgraph in which there is a path from every node to every other node. Among the SCCs, define a parent SCC, denoted by , as the SCC with no outgoing link to other SCCs. In Fig. 2, the SCCs and parent SCCs associated with the structured system matrix (48) are shown. As it can be seen from this figure, the SCC may only include a single node.

It can be proved that the measurement of at least one node in every parent SCC is necessary for system observability [62], while all state nodes in the same parent SCC are observationally equivalent. This implies that the measurements of all nodes in the parent SCC equivalently recover the observability of the system digraph, .

In this direction, our proposed logic for sensor replacement is as follows: if the faulty sensor measures a state in a parent SCC, one can compensate for the loss of observability by adding a new sensor measurement of another state node in the same parent SCC. Otherwise, if the faulty sensor measures a state in no parent SCC, the faulty sensor can be removed with no effect on the estimation performance of the other sensors. For example, in Fig. 2 if the measurement of state is faulty and is removed, measurement of either states or may recover the loss of observability. Note that if the faulty sensor does not measure a necessary system state (a state in a parent SCC), its removal has no effect on distributed observability, and only the communication network needs to be restructured to satisfy the strong connectivity of the sensor network as mentioned in Section III. For example, in Fig. 2 a faulty sensor measuring any state in can be removed without loss of system observability. It should be noted that if the parent SCC includes a single node (also referred to as a parent node), there is no replacement for the faulty measurement. This implies that the parent nodes are more vulnerable to attacks and faults in terms of recovering system observability. For example, this is the case for the measurement of state in Fig. 2.
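The replacement logic above can be sketched with a standard SCC decomposition. The code below uses Kosaraju's two-pass depth-first search (one possible DFS-based choice, not necessarily the one used by the authors), identifies parent SCCs as those with no outgoing link to another SCC, and returns the observationally equivalent candidates for a faulty measurement. The graph is passed as explicit `(u, v)` pairs meaning a link from `u` to `v`; function names and the example graph are hypothetical.

```python
def strongly_connected_components(nodes, edges):
    """Kosaraju's algorithm; returns a map node -> SCC representative."""
    fwd = {u: [] for u in nodes}
    rev = {u: [] for u in nodes}
    for u, v in edges:
        fwd[u].append(v)
        rev[v].append(u)
    order, seen = [], set()
    for root in nodes:  # first pass: record DFS finishing order
        if root in seen:
            continue
        seen.add(root)
        stack = [(root, iter(fwd[root]))]
        while stack:
            u, it = stack[-1]
            nxt = next((v for v in it if v not in seen), None)
            if nxt is None:
                order.append(u)
                stack.pop()
            else:
                seen.add(nxt)
                stack.append((nxt, iter(fwd[nxt])))
    comp = {}
    for root in reversed(order):  # second pass on the reversed graph
        if root in comp:
            continue
        comp[root] = root
        stack = [root]
        while stack:
            u = stack.pop()
            for v in rev[u]:
                if v not in comp:
                    comp[v] = root
                    stack.append(v)
    return comp

def parent_sccs(nodes, edges):
    """SCCs with no outgoing link to any other SCC."""
    comp = strongly_connected_components(nodes, edges)
    has_exit = {comp[u] for u, v in edges if comp[u] != comp[v]}
    groups = {}
    for u in nodes:
        groups.setdefault(comp[u], set()).add(u)
    return [m for root, m in groups.items() if root not in has_exit]

def replacement_candidates(nodes, edges, faulty_state):
    """States that can replace a faulty measurement, per the logic above.

    Returns a set of equivalent states (empty for a single-node parent SCC),
    or None if the faulty state lies in no parent SCC.
    """
    for members in parent_sccs(nodes, edges):
        if faulty_state in members:
            return members - {faulty_state}
    return None
```

On a toy digraph with links 1↔2, 2→3, 3↔4, and 1→5, the parent SCCs are {3, 4} and {5}: a faulty measurement of state 3 can be replaced by state 4, state 5 is a parent node with no replacement, and a sensor on state 1 is not necessary for observability.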

VII Simulations

VII-A An Illustrative Example

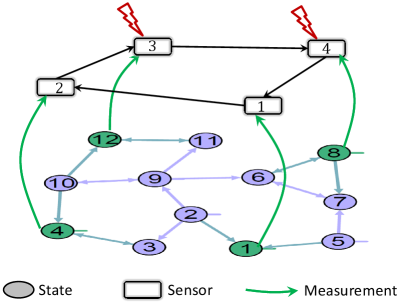

Consider a linear dynamical system with the structure given by the system digraph in Fig. 3.

The full-rank system of states with associated structured matrix (48) is considered to be tracked by a network of sensors. The non-zero entries of are chosen such that implying an unstable system dynamics. The system and measurement noise are . The measured states are represented by green nodes. Given the set of measurements as in Fig. 3, it can be checked that one state node in every parent SCC , , , is measured and therefore the pair is observable. The sensors estimate the system state over time using the networked estimator (19)-(20). The sensors share their a-priori estimates over the SC communication network (or sensor network) shown in Fig. 3. This network represents the structure of matrix while the entries of are chosen randomly such that the stochasticity of is satisfied. Having to be irreducible, for the networked system the conditions for networked observability (or distributed observability) are hold [42]. Therefore, one can design the proper gain matrix using the LMI procedure described in Section IV. Applying this block-diagonal matrix, and therefore all sensor errors are stable.

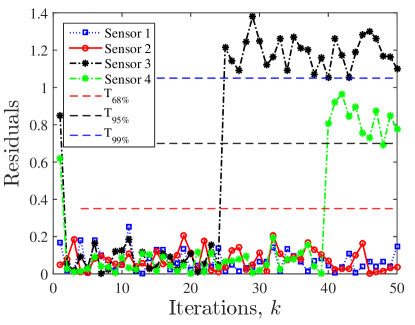

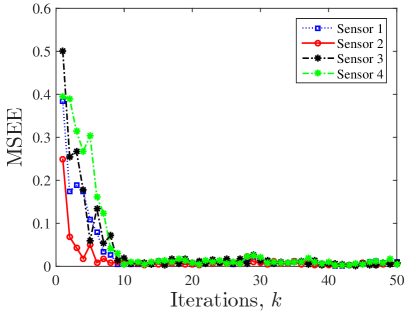

Next, it is assumed that the sensor is faulty after time-step and sensor is faulty after time-step , i.e. , . The residuals corresponding to this fault scenario are shown in Fig. 4.

As shown in this figure, the faulty sensors can be detected and isolated once the residuals corresponding to the faulty sensor exceed the thresholds. For this simulation, we have , , , , and . Further, the non-zero entries of the measurement matrix are considered to be equal to , and therefore and . Then, using equation (35), it follows that and the thresholds are,

| (49) |

Based on these thresholds, one can claim the detection of fault at sensor with probability more than and fault at sensor with probability more than . The probability of false alarms are less than and less than , respectively.

Next, one can compensate for measurement of the faulty sensors by replacing observationally equivalent state measurements from and . In Fig. 5, the set of states which are observationally equivalent to state (measured by sensor ) and to state (measured by sensor ) are, respectively, shown in blue and brown colors.

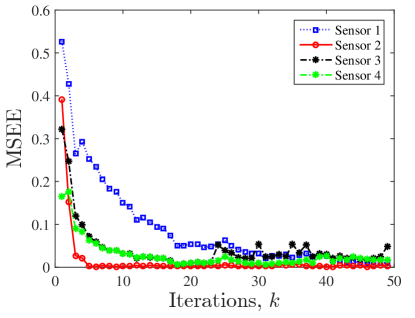

Replacing the faulty sensors and with sensors and measuring observationally equivalent states and , the loss of observability for estimation procedure is recovered. Note that we assume communication links for sensors and to be the same as sensor and . The Mean-Squared-Estimation-Error (MSEE) at all sensors in the compensated framework is shown in Fig. 6. As it is clear the MSEE is bounded steady-state stable at all sensors.

As compared to the simulations in [3, 4, 19], where the underlying dynamical system is stable, the example in this section demonstrates distributed estimation and fault detection for an unstable system. As compared to [43, 12, 13, 44, 45, 10], in this example the noise follows a Gaussian distribution and no bound on the noise is assumed. Moreover, in [7, 18, 11, 25], no system and/or measurement noise is considered. As compared to the distributed estimation in [33, 34, 32], which requires a complete all-to-all network of sensors (i.e., for this example every sensor is directly connected to all other sensors), our networked estimator only requires an SC sensor network. Among the related literature, we provide a comparison with a recent work in the next subsection.

VII-B A Comparison Study

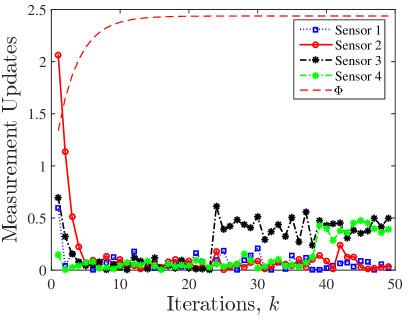

Here, our proposed sensor fault detection, isolation, and distributed estimation protocol is compared with the recent work [23]. In [23], a multi time-scale resilient distributed estimation strategy is proposed along with attack/fault detection. The reason for selecting this work for comparison is that its assumptions and framework is similar to our proposed strategy in the following aspects: (i) it considers the underlying system dynamics to be unstable; (ii) the estimation and attack detection scheme is not centralized but distributed over a sensor network; (iii) it assumes a connected undirected network of sensors, while similarly we assume a SC network; and (iv) an additive term is considered in the presence of noise on the sensors representing possible biasing attacks/faults at sensors similar to our assumption. Note that in [23], each sensor performs steps of (consensus) averaging over the sensor network between every two steps and of system dynamics and it is claimed that the proposed protocol is resilient to sensor faults of certain magnitude, while it is capable of detecting and isolating the attacked sensors in certain conditions. They particularly state that under certain conditions the attacked sensor can be detected while some other attacks cannot be detected (remain stealthy), and therefore two sets of detectable attacking set (DAS) and undetectable (or stealthy) attacking set (UAS) are defined. The proposed strategy in [23] is simulated over the same dynamic system and sensor network in Fig. 3 and all the conditions including initial state values, noise values, etc are considered similar to the previous subsection, while the sensor network is reconsidered as an undirected cycle . The parameters for the distributed estimation protocol are as in Table I.

Under these parameters, the MSEE of the sensors is shown in Fig. 7. Note that in this simulation, every sensor performs multiple steps of consensus on the estimates of neighboring sensors, as compared to the single consensus step in protocol (19)-(20). This requires a processing setup that is faster than our proposed networked estimation setup by a factor equal to the number of consensus steps.
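To make the time-scale difference concrete, the following minimal sketch runs repeated averaging of scalar estimates on an undirected cycle. The equal weights of 1/3, the function names, and the sample values are illustrative assumptions; this is neither the fusion rule of [23] nor protocol (19)-(20). It only illustrates that every additional consensus step costs one more round of communication per step of the system dynamics.

```python
def consensus_step(values):
    """One averaging round on an undirected cycle (self plus two neighbors).

    The equal 1/3 weights are an assumption made for illustration only.
    """
    n = len(values)
    return [(values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3.0
            for i in range(n)]


def run_consensus(values, steps):
    """Apply `steps` averaging rounds; each round is one communication exchange."""
    for _ in range(steps):
        values = consensus_step(values)
    return values


def spread(values):
    """Disagreement among sensors: largest minus smallest estimate."""
    return max(values) - min(values)


# Hypothetical a-priori estimates held by four sensors on a cycle.
local_estimates = [1.0, 4.0, 2.0, 7.0]

single = run_consensus(local_estimates, 1)  # single time-scale: one round
multi = run_consensus(local_estimates, 5)   # multi time-scale: five rounds
```

Under these assumed weights the network average is preserved at every round, and the disagreement among sensors shrinks with each additional round, at the price of proportionally more communication between two steps of the dynamics.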

The sensor attack detection logic in [23] is as follows: if the measurement update at a sensor is larger than a pre-defined threshold, then the attack at that sensor is detected; otherwise, the sensor is either attack-free or the attack remains undetected (stealthy). Running the attack detection proposed in [23] with the parameters in Table I, the measurement updates and the threshold are shown in Fig. 8. As is clear from this figure, the attacks/faults at both faulty sensors are not large enough to be detected, and both remain stealthy. However, as shown in Fig. 4, our proposed approach can detect and isolate both faults.
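The threshold test described above can be sketched as follows. The function name, the dictionary layout, and all numerical values are hypothetical and chosen only for illustration; they are not taken from [23] or from Table I.

```python
def detect_attacks(update_magnitudes, threshold):
    """Return the sensors whose measurement-update magnitude exceeds the threshold.

    update_magnitudes: dict mapping a sensor id to the magnitude of its
    measurement update (hypothetical layout).
    """
    return sorted(s for s, m in update_magnitudes.items() if m > threshold)


# Hypothetical update magnitudes at four sensors.
updates = {1: 0.8, 2: 3.1, 3: 1.2, 4: 0.4}

flagged = detect_attacks(updates, threshold=2.5)
# Sensors below the threshold are NOT certified as healthy: they are either
# attack-free or carry an attack too small to detect (stealthy).
unflagged = sorted(set(updates) - set(flagged))
```

The sketch makes the limitation explicit: a sensor that stays below the threshold cannot be distinguished from a healthy one, which is the stealthy case discussed above.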

VIII Concluding Remarks

In this paper, a fault detection and isolation scenario for a networked estimator is presented by defining sensor residuals and probability-based thresholds to detect and isolate sensor faults. The thresholds are defined based on the upper-bound on the norm of the estimation error covariance. It should be emphasized that the FDI and distributed estimation strategy in this paper has polynomial-order (P-order) computational complexity. In fact, the protocol (19)-(20), as a variant of the protocol in [39], is of P-order complexity, and according to [58, 59], Algorithm 1 and similar cone-complementarity algorithms are of P-order complexity, implying that the design of the block-diagonal gain matrix in Section IV is of P-order complexity. For the fault detection and mitigation strategy, the decomposition of the system digraph into SCCs, determining their partial order, and finding parent SCCs are based on the Depth-First-Search (DFS) algorithm [64], which runs in polynomial time. The threshold design in (35), based on the 2-norm of the covariance, is likewise of polynomial complexity. Therefore, the overall strategy in this paper is of P-order complexity and hence scalable to large-scale applications. Note that although the simulation in Section VII is given for a small-scale example system, the P-order complexity supports the scalability of the proposed scheme to large-scale systems.
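As a rough illustration of the DFS-based graph step, the sketch below decomposes a small digraph into SCCs with Kosaraju's two-pass depth-first search, one of several DFS-based options described in [64] and not necessarily the one used here, and then lists the parent SCCs. Two assumptions are made and should be checked against the definitions earlier in the paper: a link `(u, v)` points from node `u` to node `v`, and an SCC is called a parent when it has no outgoing links to other SCCs. All names and the example digraph are hypothetical.

```python
from collections import defaultdict


def strongly_connected_components(nodes, edges):
    """Kosaraju's algorithm: two depth-first passes over the digraph."""
    succ, pred = defaultdict(list), defaultdict(list)
    for u, v in edges:
        succ[u].append(v)
        pred[v].append(u)

    visited, order = set(), []

    def dfs_finish(u):
        visited.add(u)
        for v in succ[u]:
            if v not in visited:
                dfs_finish(v)
        order.append(u)  # u is recorded after all nodes reachable from it

    for u in nodes:
        if u not in visited:
            dfs_finish(u)

    component = {}

    def dfs_assign(u, label):
        component[u] = label
        for v in pred[u]:  # second pass follows reversed links
            if v not in component:
                dfs_assign(v, label)

    label = 0
    for u in reversed(order):
        if u not in component:
            dfs_assign(u, label)
            label += 1
    return component  # maps each node to the label of its SCC


def parent_sccs(nodes, edges):
    """SCCs with no outgoing links to other SCCs (assumed 'parent' convention)."""
    component = strongly_connected_components(nodes, edges)
    has_outgoing = {component[u] for u, v in edges
                    if component[u] != component[v]}
    groups = defaultdict(set)
    for u in nodes:
        groups[component[u]].add(u)
    return [members for lbl, members in sorted(groups.items())
            if lbl not in has_outgoing]


# Hypothetical 5-node digraph with SCCs {1, 2}, {3, 4}, and {5}.
example_nodes = [1, 2, 3, 4, 5]
example_edges = [(1, 2), (2, 1), (2, 3), (3, 4), (4, 3), (5, 4)]
parents = parent_sccs(example_nodes, example_edges)
```

Both passes visit every node and link once, so the running time grows linearly with the size of the digraph. The recursive form is kept for readability; a very large digraph would need an iterative DFS to stay within Python's recursion limit. If the paper's convention for parent SCCs is the opposite (no incoming links), the membership test in `parent_sccs` should be flipped accordingly.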

It is worth noting that the fault detection and isolation can be improved by defining tighter upper-bounds on the residuals. This can be done by designing the gain matrix to reduce the terms that enter these bounds. An LMI-based approach that optimizes the gain under such conditions is a direction of our future research. A promising topic of future interest is the optimal design of the communication network (the structure of the network matrix) and the cost-optimal selection of measurements for observability recovery, to reduce the estimation costs at the sensor network. This may include reducing both the sensor embedding costs and the communication costs in sensor networks. As another direction of future research, we are currently working to extend this fault detection and isolation, as well as the fault compensation scenario, to general rank-deficient systems. In this direction, the networked estimation protocol needs to be updated to consider measurement sharing over the sensor network.

Acknowledgement

We would like to thank Prof. Usman A. Khan from Tufts University for his helpful comments and suggestions on this paper. We also thank the authors of reference [23] for providing their paper and related files.

References

- [1] I. Hwang, S. Kim, Y. Kim, and C. E. Seah, “A survey of fault detection, isolation, and reconfiguration methods,” IEEE Transactions on Control Systems Technology, vol. 18, no. 3, pp. 636–653, 2009.

- [2] M. Davoodi, N. Meskin, and K. Khorasani, “Simultaneous fault detection, isolation and control tracking design using a single observer-based module,” in American Control Conference. IEEE, 2014, pp. 3047–3052.

- [3] S. Jee, H. J. Lee, and Y. H. Joo, “$H_-/H_\infty$ sensor fault detection and isolation in linear time-invariant systems,” International Journal of Control, Automation and Systems, vol. 10, no. 4, pp. 841–848, 2012.

- [4] Z. Li and I. M. Jaimoukha, “Observer-based fault detection and isolation filter design for linear time-invariant systems,” International Journal of Control, vol. 82, no. 1, pp. 171–182, 2009.

- [5] R. M. G. Ferrari, T. Parisini, and M. M. Polycarpou, “Distributed fault detection and isolation of large-scale discrete-time nonlinear systems: An adaptive approximation approach,” IEEE Transactions on Automatic Control, vol. 57, no. 2, pp. 275–290, 2011.

- [6] M. Davoodi, K. Khorasani, H. A. Talebi, and H. R. Momeni, “Distributed fault detection and isolation filter design for a network of heterogeneous multiagent systems,” IEEE Transactions on Control Systems Technology, vol. 22, no. 3, pp. 1061–1069, 2013.

- [7] A. Teixeira, I. Shames, H. Sandberg, and K. H. Johansson, “Distributed fault detection and isolation resilient to network model uncertainties,” IEEE transactions on cybernetics, vol. 44, no. 11, pp. 2024–2037, 2014.

- [8] I. Shames, A. Teixeira, H. Sandberg, and K. H. Johansson, “Distributed fault detection for interconnected second-order systems,” Automatica, vol. 47, no. 12, pp. 2757–2764, 2011.

- [9] Y. Quan, W. Chen, Z. Wu, and L. Peng, “Observer-based distributed fault detection and isolation for heterogeneous discrete-time multi-agent systems with disturbances,” IEEE Access, vol. 4, pp. 4652–4658, 2016.

- [10] A. Marino, F. Pierri, and F. Arrichiello, “Distributed fault detection isolation and accommodation for homogeneous networked discrete-time linear systems,” IEEE Transactions on Automatic Control, vol. 62, no. 9, pp. 4840–4847, 2017.

- [11] Y. Li, H. Fang, J. Chen, and C. Shang, “Distributed fault detection and isolation for multi-agent systems using relative information,” in American Control Conference (ACC). IEEE, 2016, pp. 5939–5944.

- [12] M. S. Chong, M. Wakaiki, and J. P. Hespanha, “Observability of linear systems under adversarial attacks,” in American Control Conference (ACC). IEEE, 2015, pp. 2439–2444.

- [13] C. Lee, H. Shim, and Y. Eun, “Secure and robust state estimation under sensor attacks, measurement noises, and process disturbances: Observer-based combinatorial approach,” in European Control Conference (ECC). IEEE, 2015, pp. 1872–1877.

- [14] X. Ren, Y. Mo, J. Chen, and K. H. Johansson, “Secure state estimation with Byzantine sensors: A probabilistic approach,” IEEE Transactions on Automatic Control, 2020.

- [15] M. Pajic, I. Lee, and G. J. Pappas, “Attack-resilient state estimation for noisy dynamical systems,” IEEE Transactions on Control of Network Systems, vol. 4, no. 1, pp. 82–92, 2016.

- [16] Y. Shoukry, P. Nuzzo, A. Puggelli, A. L. Sangiovanni-Vincentelli, S. A. Seshia, and P. Tabuada, “Secure state estimation for cyber-physical systems under sensor attacks: A satisfiability modulo theory approach,” IEEE Transactions on Automatic Control, vol. 62, no. 10, pp. 4917–4932, 2017.

- [17] M. Pajic, J. Weimer, N. Bezzo, P. Tabuada, O. Sokolsky, I. Lee, and G. J. Pappas, “Robustness of attack-resilient state estimators,” in ACM/IEEE International Conference on Cyber-Physical Systems (ICCPS). IEEE, 2014, pp. 163–174.

- [18] Y. Chen, S. Kar, and J. M. F. Moura, “Dynamic attack detection in cyber-physical systems with side initial state information,” IEEE Transactions on Automatic Control, vol. 62, no. 9, pp. 4618–4624, 2016.

- [19] M. Deghat, V. Ugrinovskii, I. Shames, and C. Langbort, “Detection and mitigation of biasing attacks on distributed estimation networks,” Automatica, vol. 99, pp. 369–381, 2019.

- [20] Y. Guan and X. Ge, “Distributed attack detection and secure estimation of networked cyber-physical systems against false data injection attacks and jamming attacks,” IEEE Transactions on Signal and Information Processing over Networks, vol. 4, no. 1, pp. 48–59, 2017.

- [21] L. Su and S. Shahrampour, “Finite-time guarantees for Byzantine-resilient distributed state estimation with noisy measurements,” IEEE Transactions on Automatic Control, 2019.

- [22] L. An and G. Yang, “Distributed secure state estimation for cyber-physical systems under sensor attacks,” Automatica, vol. 107, pp. 526–538, 2019.

- [23] X. He, X. Ren, H. Sandberg, and K. H. Johansson, “How to secure distributed filters under sensor attacks?” arXiv preprint arXiv:2004.05409, 2020.

- [24] W. Ao, Y. Song, and C. Wen, “Distributed secure state estimation and control for CPSs under sensor attacks,” IEEE Transactions on Cybernetics, vol. 50, no. 1, pp. 259–269, 2018.

- [25] A. Mitra and S. Sundaram, “Secure distributed state estimation of an LTI system over time-varying networks and analog erasure channels,” in American Control Conference. IEEE, 2018, pp. 6578–6583.

- [26] A. Alanwar, H. Said, and M. Althoff, “Distributed secure state estimation using diffusion Kalman filters and reachability analysis,” in IEEE 58th Conference on Decision and Control (CDC). IEEE, 2019, pp. 4133–4139.

- [27] Y. Chen, S. Kar, and J. M. F. Moura, “Resilient distributed estimation through adversary detection,” IEEE Transactions on Signal Processing, vol. 66, no. 9, pp. 2455–2469, 2018.

- [28] K. Manandhar, X. Cao, F. Hu, and Y. Liu, “Detection of faults and attacks including false data injection attack in smart grid using Kalman filter,” IEEE Transactions on Control of Network Systems, vol. 1, no. 4, pp. 370–379, 2014.

- [29] X. Wei and M. Verhaegen, “Fault detection of large scale wind turbine systems: A mixed $H_\infty/H_-$ index observer approach,” in 16th Mediterranean Conference on Control and Automation. IEEE, 2008, pp. 1675–1680.

- [30] N. Meskin and K. Khorasani, Fault detection and isolation: Multi-vehicle unmanned systems. Springer Science & Business Media, 2011.

- [31] F. Garin and L. Schenato, “A survey on distributed estimation and control applications using linear consensus algorithms,” in Networked control systems. Springer, 2010, pp. 75–107.

- [32] S. M. Azizi and K. Khorasani, “Networked estimation of relative sensing multiagent systems using reconfigurable sets of subobservers,” IEEE Transactions on Control Systems Technology, vol. 22, no. 6, pp. 2188–2204, 2014.

- [33] T. Boukhobza, F. Hamelin, S. Martinez-Martinez, and D. Sauter, “Structural analysis of the partial state and input observability for structured linear systems: Application to distributed systems,” European Journal of Control, vol. 15, no. 5, pp. 503–516, Oct. 2009.

- [34] S. Kar, J. M. F. Moura, and K. Ramanan, “Distributed parameter estimation in sensor networks: Nonlinear observation models and imperfect communication,” IEEE Transactions on Information Theory, vol. 58, no. 6, pp. 3575–3605, 2012.

- [35] S. Tu and A. Sayed, “Diffusion strategies outperform consensus strategies for distributed estimation over adaptive networks,” IEEE Transactions on Signal Processing, vol. 60, no. 12, pp. 6217–6234, 2012.

- [36] G. Battistelli, L. Chisci, G. Mugnai, A. Farina, and A. Graziano, “Consensus-based algorithms for distributed filtering,” in 51st IEEE Conference on Decision and Control, 2012, pp. 794–799.

- [37] S. Park and N. Martins, “Necessary and sufficient conditions for the stabilizability of a class of LTI distributed observers,” in 51st IEEE Conference on Decision and Control, 2012, pp. 7431–7436.

- [38] M. Doostmohammadian and U. Khan, “On the genericity properties in distributed estimation: Topology design and sensor placement,” IEEE Journal of Selected Topics in Signal Processing, vol. 7, no. 2, pp. 195–204, 2013.

- [39] M. Doostmohammadian, H. R. Rabiee, and U. A. Khan, “Cyber-social systems: modeling, inference, and optimal design,” IEEE Systems Journal, vol. 14, no. 1, pp. 73–83, 2020.

- [40] C. Commault, J. Dion, and S. Y. Agha, “Structural analysis for the sensor location problem in fault detection and isolation,” Automatica, vol. 44, no. 8, pp. 2074–2080, 2008.

- [41] C. Commault and J. Dion, “Sensor location for diagnosis in linear systems: A structural analysis,” IEEE Transactions on Automatic Control, vol. 52, no. 2, pp. 155–169, 2007.

- [42] M. Doostmohammadian and U. A. Khan, “On the characterization of distributed observability from first principles,” in 2nd IEEE Global Conference on Signal and Information Processing, 2014, pp. 914–917.

- [43] M. Pajic, P. Tabuada, I. Lee, and G. J. Pappas, “Attack-resilient state estimation in the presence of noise,” in 54th IEEE Conference on Decision and Control (CDC). IEEE, 2015, pp. 5827–5832.

- [44] A. R. Kodakkadan, M. Pourasghar, V. Puig, S. Olaru, C. Ocampo-Martinez, and V. Reppa, “Observer-based sensor fault detectability: About robust positive invariance approach and residual sensitivity,” IFAC-PapersOnLine, vol. 50, no. 1, pp. 5041–5046, 2017.

- [45] S. Kar and J. M. F. Moura, “Gossip and distributed Kalman filtering: Weak consensus under weak detectability,” IEEE Transactions on Signal Processing, vol. 59, no. 4, pp. 1766–1784, April 2011.

- [46] M. Doostmohammadian and U. A. Khan, “Measurement partitioning and observational equivalence in state estimation,” in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2016, pp. 4855–4859.

- [47] M. Doostmohammadian, H. R. Rabiee, H. Zarrabi, and U. A. Khan, “Distributed estimation recovery under sensor failure,” IEEE Signal Processing Letters, vol. 24, no. 10, pp. 1532–1536, 2017.

- [48] M. Doostmohammadian and U. Khan, “On the complexity of minimum-cost networked estimation of self-damped dynamical systems,” IEEE Transactions on Network Science and Engineering, 2019.

- [49] O. Ennasr and X. Tan, “Distributed localization of a moving target: Structural observability-based convergence analysis,” in American Control Conference. IEEE, 2018, pp. 2897–2903.

- [50] R. Olfati-Saber, “Distributed Kalman filters with embedded consensus filters,” in 44th IEEE Conference on Decision and Control, Seville, Spain, Dec. 2005, pp. 8179–8184.

- [51] J. Bay, Fundamentals of linear state space systems. McGraw-Hill, 1999.

- [52] M. Doostmohammadian and U. A. Khan, “Minimal sufficient conditions for structural observability/controllability of composite networks via Kronecker product,” IEEE Transactions on Signal and Information Processing over Networks, vol. 6, pp. 78–87, 2020.

- [53] U. A. Khan and A. Jadbabaie, “Coordinated networked estimation strategies using structured systems theory,” in 49th IEEE Conference on Decision and Control, Orlando, FL, Dec. 2011, pp. 2112–2117.

- [54] L. E. Ghaoui, F. Oustry, and M. A. Rami, “A cone complementarity linearization algorithm for static output-feedback and related problems,” IEEE Transactions on Automatic Control, vol. 42, no. 8, pp. 1171–1176, 1997.

- [55] O. L. Mangasarian and J. S. Pang, “The extended linear complementarity problem,” SIAM Journal on Matrix Analysis and Applications, vol. 2, pp. 359–368, Jan. 1995.

- [56] M. Pajic, S. Sundaram, J. Le Ny, G. Pappas, and R. Mangharam, “The wireless control network: Synthesis and robustness,” in 49th Conference on Decision and Control, Orlando, FL, 2010, pp. 7576–7581.

- [57] A. I. Zečević and D. D. Šiljak, “Control design with arbitrary information structure constraints,” Automatica, vol. 44, no. 10, pp. 2642–2647, 2008.

- [58] Y. Ye, “A fully polynomial-time approximation algorithm for computing a stationary point of the general linear complementarity problem,” Mathematics of Operations Research, vol. 18, no. 2, pp. 334–345, 1993.

- [59] Y. Nesterov and A. Nemirovskii, Interior-point polynomial algorithms in convex programming. SIAM, 1994.

- [60] S. Sundaram, “Fault-tolerant and secure control systems,” University of Waterloo, Lecture Notes, 2012.

- [61] U. A. Khan and A. Jadbabaie, “Collaborative scalar-gain estimators for potentially unstable social dynamics with limited communication,” Automatica, vol. 50, no. 7, pp. 1909–1914, 2014.

- [62] M. Doostmohammadian, “Minimal driver nodes for structural controllability of large-scale dynamical systems: Node classification,” IEEE Systems Journal, 2019.

- [63] C. Lin, “Structural controllability,” IEEE Transactions on Automatic Control, vol. 19, no. 3, pp. 201–208, Jun. 1974.

- [64] T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein, Introduction to Algorithms. MIT Press, 2009.