Sensor-based Multi-agent Coverage Control with Spatial Separation in Unstructured Environments

Abstract

Multi-robot systems have increasingly become instrumental in tackling coverage problems. However, the challenge of optimizing task efficiency without compromising task success still persists, particularly in expansive, unstructured scenarios with dense obstacles. This paper presents an innovative, decentralized Voronoi-based coverage control approach to reactively navigate these complexities while guaranteeing safety. This approach leverages the active sensing capabilities of multi-robot systems to supplement GIS (Geographic Information System), offering a more comprehensive and real-time understanding of environments like post-disaster. Based on point cloud data, which is inherently non-convex and unstructured, this method efficiently generates collision-free Voronoi regions using only local sensing information through spatial decomposition and spherical mirroring techniques. Then, deadlock-aware guided map integrated with a gradient-optimized, centroid Voronoi-based coverage control policy, is constructed to improve efficiency by avoiding exhaustive searches and local sensing pitfalls. The effectiveness of our algorithm has been validated through extensive numerical simulations in high-fidelity environments, demonstrating significant improvements in task success rate, coverage ratio, and task execution time compared with others.

I Introduction

Multi-robot systems are extensively employed in coverage tasks across diverse applications, including gas leak detection, pollution source identification, and search and rescue operations [1, 2]. These systems can efficiently locate Targets of Interest (ToI) in unknown and unstructured environments through interactive data collection. Building on their capabilities, integration with Geographic Information Systems (GIS) offers new opportunities for improving efficiency and accuracy of such operations [3].

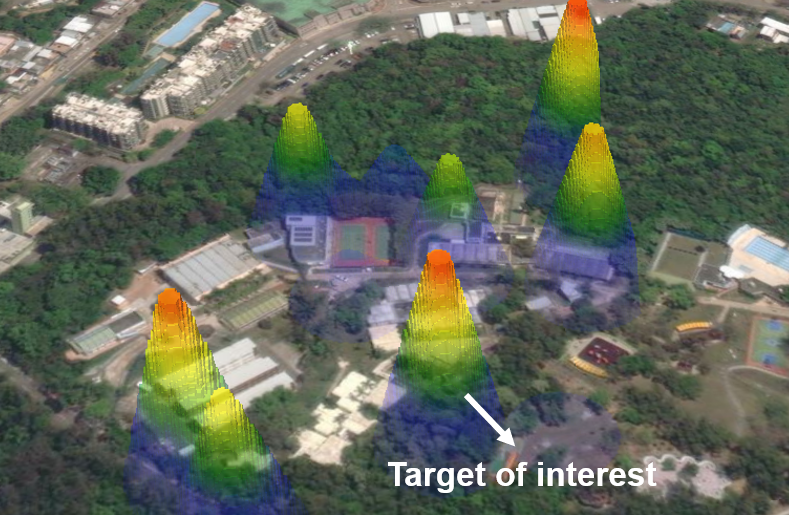

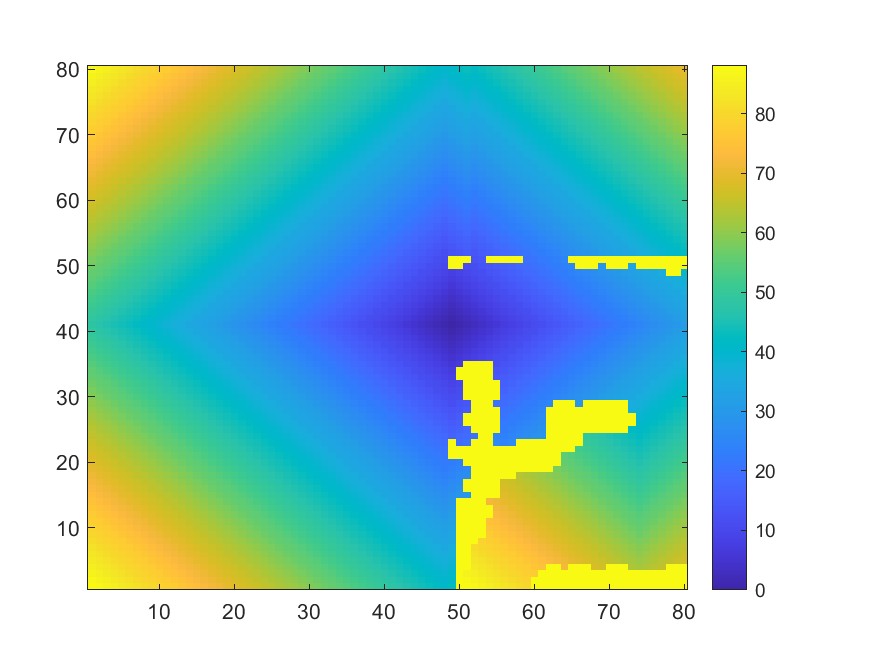

While GIS offers extensive pre-collected data, multi-robot information sharing, and advanced analysis features like heat maps as shown in Fig. 1, its limitations are evident in uncertain situations such as post-disaster rescue efforts. In these contexts, the active sensing capabilities of multi-robot systems can serve as real-time supplements to GIS, providing a more comprehensive and up-to-date understanding of the environment.

Despite the unprecedented advancements in this field, the challenge of searching ToI in unknown environments persists, particularly in balancing efficiency with successful task execution. Some search-based approaches resort to exhaustive exploration of the surrounding environment to identify feasible paths [4]. However, this approach compromises efficiency and necessitates complete environmental traversal. Such inefficiency has critical implications for timely hazard avoidance or life-saving actions, especially given the battery limitations in large-scale environments.

On the other hand, some reactive approaches compute search policies that focus on the immediate rewards associated with ToI, thereby enhancing efficiency through the local sensing range. These methods, rigorously examined in the context of Centroidal Voronoi Tessellation (CVT) in multi-robot coverage problems [2, 5, 6, 7] offer numerous advantages, including asynchronous operation, distributed execution, adaptability, and verifiable asymptotic correctness [8]. However, they are susceptible to local minima due to short-sighted decisions, which can lead to the failure to complete the task. Furthermore, the majority of existing methods are confined to two-dimensional, well-structured environments [9]. They fall short in accounting for unstructured settings, including raw sensor data, where robots are often trapped in the presence of non-convex obstacles when attempting to expedite their greedy coverage policy [10, 11, 12].

In this paper, we introduce a novel decentralized Voronoi-based active coverage policy for unknown and unstructured environments with guaranteed safety. This research is driven by scenarios where robots aim to balance coverage efficiency and success rate while adhering to safety constraints. We aim to develop a cooperative control policy that enables individual robots to make intelligent decisions while enhancing overall team efficiency. Firstly, we utilize realistic point cloud data as input, characterized by its highly non-convex and unstructured nature. By integrating spatial decomposition and spherical mirroring techniques, collision-free Voronoi regions are efficiently generated using only local sensing and position information from other robots. Secondly, explored information is employed to construct a deadlock-aware guided map to refine subsequent processes for optimal decision-making. This map is integrated with centroid Voronoi based coverage control policy to improve efficiency by avoiding exhaustive searches and local sensing pitfalls. Thirdly, the effectiveness of our proposed method has been extensively validated through numerical simulations in high-fidelity large-scale environments, including cluttered, thin-structural, and narrow settings. Compared with other Voronoi-based methods, the approach has significantly improved the successful coverage ratio and time.

To the best of our knowledge, we are the first to propose a Voronoi-based coverage algorithm that mitigates the issue of local minima trapping in general unstructured obstacle environments supported by convergence guarantees.

II Related Work

This work integrates contributions related to its functionality, building upon collision avoidance methods and our previous research on coverage control [12].

A crucial consideration in collision avoidance is the formulation of obstacles derived from point cloud data. Most existing methods rely on using high resources to maintain a cost map, such as the Euclidean Distance Transform (EDT) map, to calculate the minimum distance to obstacles for optimization [13, 14, 15], which may suffer from time-consuming computations and redundant processes [16]. Alternatively, some strategies depend on down-sampling and spatial partitioning using convex clusters, [17]. Unfortunately, such techniques may sacrifice the free space or lead to unsafe areas [18]. Instead, our approach directly generates safe Voronoi cells on a voxel-based environment representation, inspired by the concept presented in [19, 20]. The advantages lie in threefold: 1) It operates exclusively on point cloud data, eliminating the need for a post-processed map and ensuring the preservation of raw sensor measurement fidelity; 2) It does not assume the convexity or structure of the environment, making it particularly suitable for real-world sensors influenced by noise and adaptable to a range of scenarios; 3) The strategy requires only the sensing of other robots’ positions, which simplifies communication requirements and bolsters system robustness.

Another challenge in active coverage control design arises from the complexities of raw sensor measurements and unstructured environments, which often lead robots to be trapped in local minima. This issue is commonly encountered in coverage methods that rely on CVT, a form of non-convex optimization [10, 11, 12]. While there are some techniques like random restarts [21] and iterative refinement [22] address this issue, it has not been fully solved. In this work, we introduce a deadlock-aware guided map that incorporates a move-to-centroid policy and dynamically updates feasible directions as new obstacles are encountered. The gradient of this map effectively guides the robot away from obstacles and pitfalls while simultaneously directing it toward the target, thereby preventing entrapment in local minima.

III Preliminaries

Consider a team of robots localized at at time in a workspace . The dynamics of each robot can be modeled as a single integrator, described by , and its geometry can be modeled as a ball with centered position and radius , where . The environment is represented by a grid map with discretized points . It can be uniformly partitioned into non-overlapping regions corresponding to each robot utilizing Voronoi partitioning. These partitions are generated based on and can be regarded as the intersection of a set of maximum margin separating hyper-planes [23]. For each robot , the Voronoi cell is defined as follows:

| (1) | ||||

where denotes -norm. It can be observed that the discrete points within the Voronoi cell are closer to its generator than to any other robots. Therefore, it is reasonable to assume that each robot is tasked with its corresponding region using a limited-range sensor.

III-A Sensor and Obstacle Representation

Let be the set of obstacles, where is the number of all point clouds. The free configuration space is defined as Suppose the robot’s perception is solely sensor-based, with the sensor equipped having a fixed sensing range denoted as . This implies that the robot’s knowledge about the location of environmental obstacles is restricted to the structure it perceives within a nearby area around its current position. Let the robot be surrounded by point clouds of obstacles inside the sensor range . The observable obstacles environment, represented by a series of discrete points and denoted as the set , constitutes a spherical region centered at the robot’s position . Any region outside the robot’s sensory footprint is assumed to be obstacle-free until updated when the robot perceives it.

III-B Voronoi-based Coverage Control

To evaluate the coverage of the target of interest (ToI) by a multi-robot system, two key factors must be considered: the value of each sensed point and the sensing capability, which is influenced by the distance between the robot and the point on the grid to be sensed. The value of these points is indicative of the importance of the information to be covered. To quantify this, we introduce a density function based on a Gaussian mixture model for reference coverage information. As the distance between the robot and the point to be sensed increases, the sensing capability generally diminishes. To optimize the deployment locations of the multi-robot system, we utilize a cost function based on [8, 11, 24]

| (2) |

The derivative of indicates that moving to the centroids of the sensors’ corresponding Voronoi cells can reduce the coverage cost. Hence, a gradient decent control policy is designed to steer robots to a CVT:

| (3) |

where

IV Sensor-based Coverage Control with Deadlocks and Collision Avoidance

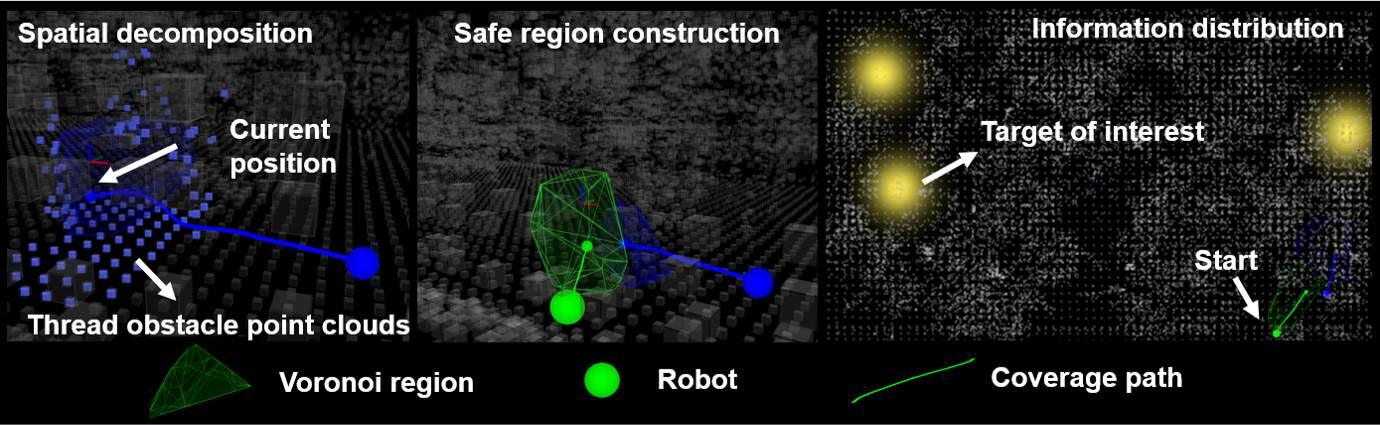

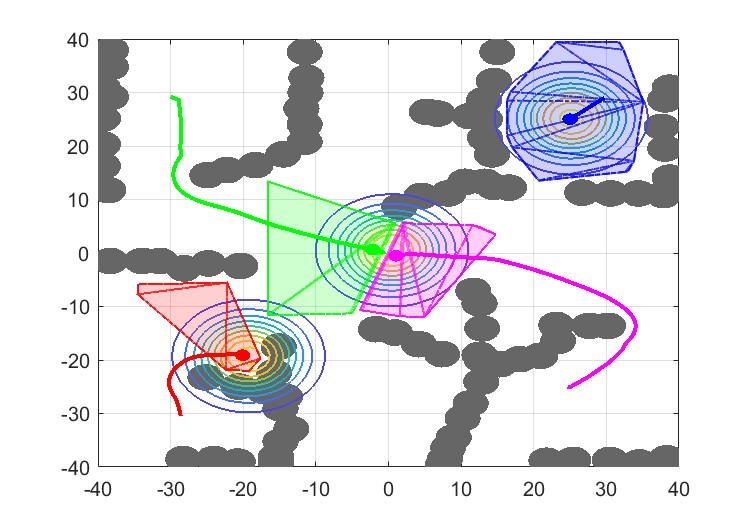

This section introduces a novel reactive coverage method that enables a multi-robot team to cover the ToI using only its current position and the structure perceived within its local sensor footprint. A method overview is depicted in Fig. 2.

IV-A Spatial Decomposition

To separate the movement space of multi-robots into some security domains without executing duplicated tasks, a safe convex region needs to be constructed for each robot at discrete time , where is the time interval. For each time, the Voronoi cell for each robot is only determined by neighboring robots and obstacles, and can thus be formed as the intersection of half-spaces separating robot from obstacles or other robots with linear separator , , and ,, where and .

IV-A1 Robot-robot Collision Avoidance Hyperplane

To achieve a spatially load-balanced deployment that can provide locally optimal sensor coverage of a target, it is essential to define a separation strategy that ensures that each voxel in the deployment region is closer to the position of the robot, which serves as the generator for its Voronoi cell, than to any other robot in the environment. This separation condition is fundamental to the definition of the Voronoi cell, which partitions the environment into non-overlapping regions based on each robot’s location. We can calculate and by finding a perpendicular bisector of any two positions of robot using the definition in the following:

| (4) |

IV-A2 Robot-obstacle Collision Avoidance Hyperplane

The robot operates within a realistic world that contains unstructured obstacles, represented by point cloud data. These raw data are processed with computationally demanding techniques like surface reconstruction and the maintenance of additional cost maps, commonly used in traditional methods. To develop a more efficient and lightweight algorithm, we have devised a method that directly extracts collision avoidance hyperplanes from the point clouds within the visible range of the robot’s sensor . Consequently, our approach substantially minimizes the computational and storage resources required for clustering obstacles.

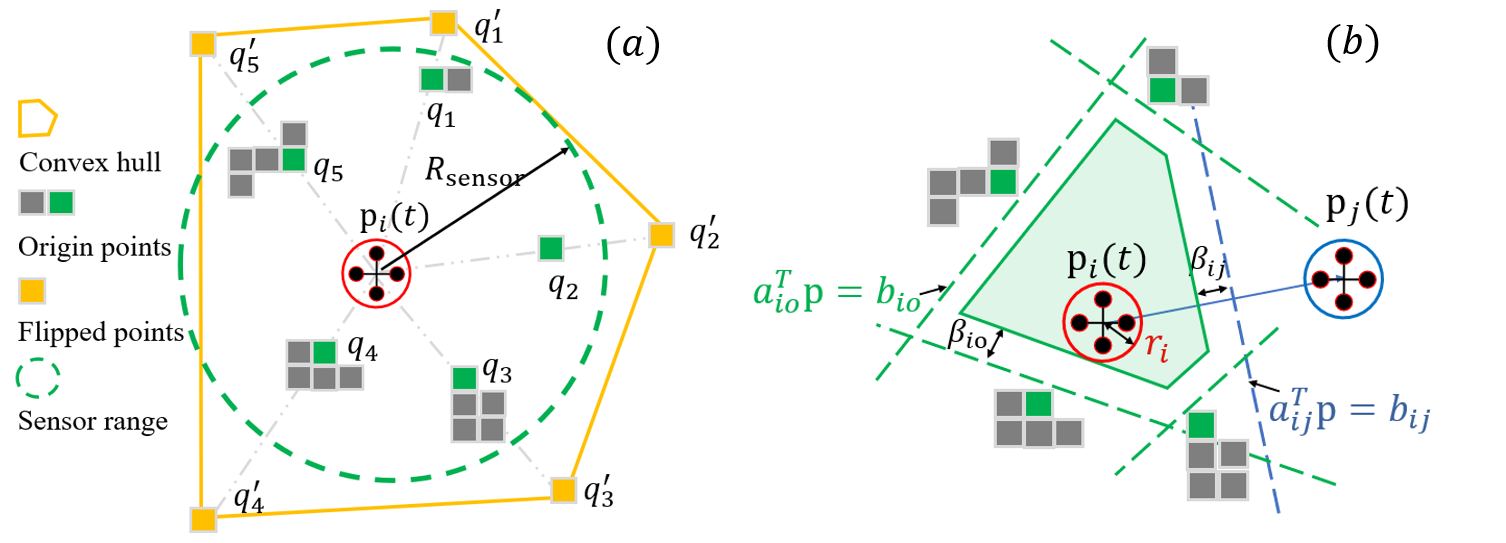

Assuming robot is initially collision-free surrounded by point clouds of obstacles inside the sensor range . We use spherical mirroring operation mentioned in [19] to map each to with :

| (5) |

where is the mirrored point, is the original point, and is the spherical mapping function. As illustrated in Fig. 3 (a), the purpose of employing spherical mirroring is to reverse the positions of any points that are located within the sphere along the corresponding ray. This mirroring operation effectively relocates the origin points of obstacles from the internal region of the sphere to the external region, creating a mirrored representation. By using the QuickHull technique [25], we can efficiently determine the transformation points required to construct a convex hull decided by a vertex vector from the mirrored points.

Moreover, due to the monotonically decreasing nature of the function , points that are closer to the robot are transformed further away. Since there is no point outside the convex hull , it implies that the area inside the corresponding origin points , is obstacle-free. With the described spherical mirroring operation, the extraction of points that are necessary for generating robot-obstacle collision avoidance hyperplanes becomes a straightforward task for an unordered point cloud.

IV-B Safe Region Construction

Based on above, can be quickly calculated by solving the following low-dimensional quadratic programming (QP) problem:

| (6) | ||||

| s.t. |

We then shift the hyper-plane to be tight with the target. Thus, . Solving a QP problem requires time, where denotes the number of decision variables [26]. These decision variables are linearly correlated with the number of obstacle points in our method. Consequently, the total computational complexity for each robot, influenced by the local density of obstacles, is . Leveraging advanced optimization utilities, such as CVXOPT, we can solve the problem in tens of milliseconds.

Moreover, we take into account the geometric dimensions of robots by employing a buffered term [27]. We introduce safety buffer variables and to ensure the body of each robot within its corresponding buffered Voronoi cells (BVCs). It should be noted that these buffer variables can be further generalized to accommodate robots of varying dimensions along different axes. Then, Eq. (1) is extended to the following definition.

Definition 1

For a team of robots localized at in an obstacle-free workspace , the buffered Voronoi cell for each robot is:

| (7) | ||||

Lemma 1 (Properties of BVC)

If and , at time , we have 1) ; 2); 3) ; 4) ; 5) ; 6) .

Proof: According to Section II-A in [26], 1) - 4) have been proved. 5) According to Eq. (7) and Eq. (6), , . Since and , by adding them, we get . Due to , we have . Since Therefore, . 6) If , then Eq. (7) should be satisfied, i.e., . Rewritten this, we have . According to 5), , i.e., , which contradicts to the definition of .

By combining separating hyperplane theorem and sphere flipping transformation, our method can efficiently extract a collision-free region using only raw measurement points, as shown in Fig. 3 (b). Consequently, each robot is capable of independently calculating its operational domain based on the relative positioning of its peers in a decentralized fashion at each time step.

Theorem 1

If a team of robot is initialized at collision-free configuration, i.e., , by using Eq. (3), then for , we have .

Proof: Given that the robot is initially collision-free, according to Lemma 1, safe can be calculated considering obstacle and other robots information using Eq. (7). Since the policy Eq. (3) ensures the robot’s position is updated inside its corresponding Voronoi cell, i.e., , by employing mathematical induction, it can be easily proved that the robots’ movements will remain confined to a sequence of secure convex regions for all future time [26].

Therefore, constructing these safe convex regions, the multi-robot system is designed to strictly prevent duplicated task execution and collisions.

IV-C Deadlock-aware Guided Map

Despite its efficiency in convex environments, the control policy in Eq. (3) may let the robot get stuck in many realistic scenarios. These challenges arise primarily due to the complex, non-convex arrangements of obstacles. To address this, we introduce a real-time constructed guided map that is dynamically adjusted in response to environmental changes, thereby enabling the robot to avoid pitfalls and enhancing both the efficiency of coverage and the system’s adaptability.

In the proposed method, we consider the density map in Eq. (2), which characterizes the distribution of information. Each point on the grid map is assigned a corresponding value. As the robot moves, it employs local sensing to identify a point within its sensor range that represents a local maximum in the information distribution, which then becomes the robot’s navigation goal. To facilitate reaching this local objective, we define the navigation function (NF) as a continuous function that approximates the minimum length of a collision-free path from any point to , i.e., cost-to-goal function.

Lemma 2 (Properties of NF [28])

1) , and iff no point in is reachable from , e.g., obstacle position ; 2) For every reachable position at time , executing an action yields a subsequent position that satisfies .

From Lemma 2, it can be observed that the NF exhibits no local minima other than at the goal, ensuring a cycle-free execution of actions that inevitably leads to the goal position. According to [28], the NF determined by Dijkstra’s algorithm working backward from the goal yields an optimality. Here, to balance the efficiency and optimality, we utilize A∗ algorithm to compute cost-to-go values for each grid on a voxel-based map representation. Consequently, based on , the map in the definition of in the Eq. (2) is appropriately modified as follows:

| (8) |

where gain serves as a design parameter that influences the magnitude of the value. By integrating centroid Voronoi tessellation with and computing , the regions are shifted to align with higher values in the density map, thereby providing feasible heading directions for coverage. The negative gradient of always effectively shortens the shortest path to the destination.

Lemma 3 (Convergence [10])

Assuming there is a finite set of , by adopting controller in Eq. (3), the location of each sensor will decrease its configuration cost and asymptotically converge to a static location.

This approach synergizes local sensing with global information distribution, resulting in more optimal coverage outcomes. Finally, a sequence of coverage path during the time interval reflecting the optimized location of deployment for each robot can be obtained.

V Simulations

This section analyzes the applicability of the proposed method across diverse and unstructured settings, including real forests and indoor office datasets, and evaluates the performance of computational time and collision distance. Besides, benchmarking our method against established coverage control protocols can help quantify the improvements in task efficiency. The accompanying video provides a clear visual aid to understand our method’s mechanics and advantages.111https://youtu.be/wOunvjGHhBQ The simulations are conducted on a laptop equipped with an Intel i7-9700 CPU and 16GB of RAM. A team of robots is simulated through multiprocessing. For real-world scenario validation, We utilized the Robot Operating System (ROS) with C++ and Python programming languages. MATLAB is employed for comparative analysis. Velocity constraints are established with a maximum limit of m/s. The time interval for each replanning is s. The obstacles are inflated by a robot radius, = 0.25m. The grid map resolution is also set to 0.25m for all axes. The sensor’s radius is restricted to a range of 15m. If m/s, the robot is considered to have converged.

V-A High-fidelity Validation

Unlike other coverage methods, like Obstacle-aware Voronoi Cells (OAVC) [10, 11], which are restricted to hyperspherical object models, or the method in [12], which presumes obstacles to be convex polytopes, our approach allows for a broader range of obstacle shapes with various obstacle densities.

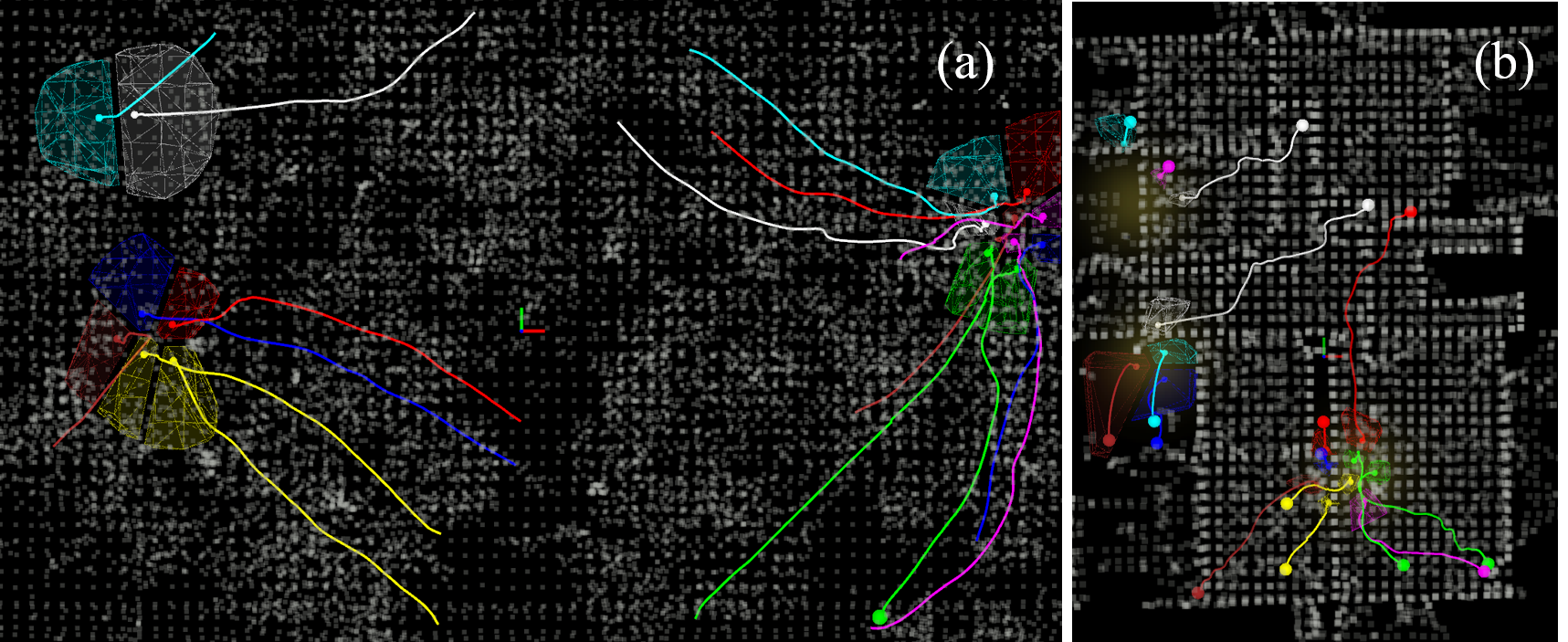

We execute tests utilizing high-fidelity point cloud data, sourced from forested areas by the University of Hong Kong and facilitated by the MARSIM simulator [29]. This dataset is characterized by extensive cluttered and thin structural elements. We expanded the scope of the forest environment to large-scale dimensions of 140m 140m 10m, and the coverage result is depicted in Fig. 4. We also use data from indoor office settings, courtesy of the Technical University of Munich [30] with dimensions of 80m 80m 5m. This environment featured narrow passageways and multiple layers, and the coverage result is depicted in Fig. 4.

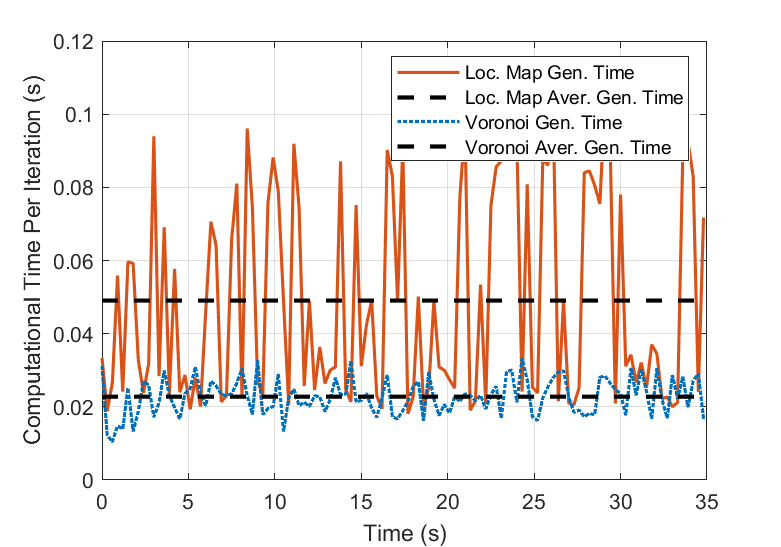

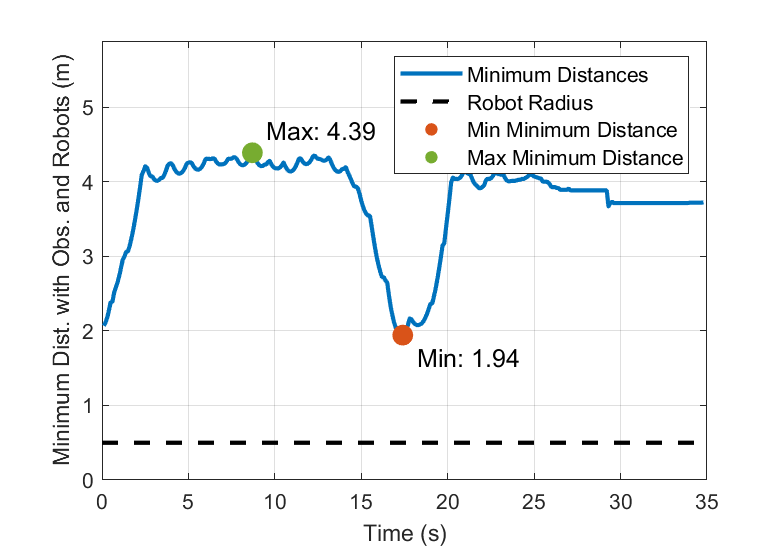

We tested the above mentioned two scenarios with randomized initialization and ToI. We evaluate the two most time-consuming components, local map and Voronoi cell generation, and consider both neighboring robots and obstacles for evaluating collision avoidance performance. One trial test in the forest is shown in Fig. 4 (a) and Fig. 5. The time required for generating a local map depends on the complexity of computing a feasible path. This complexity increases with a high density of obstacles, where conditions such as non-convex obstacles can potentially trap the robots. Despite these challenges, our proposed method can still enable robots to create a secure area in real-time while ensuring safety margins (1.95m) that substantially exceed the robot’s radius (0.25m).

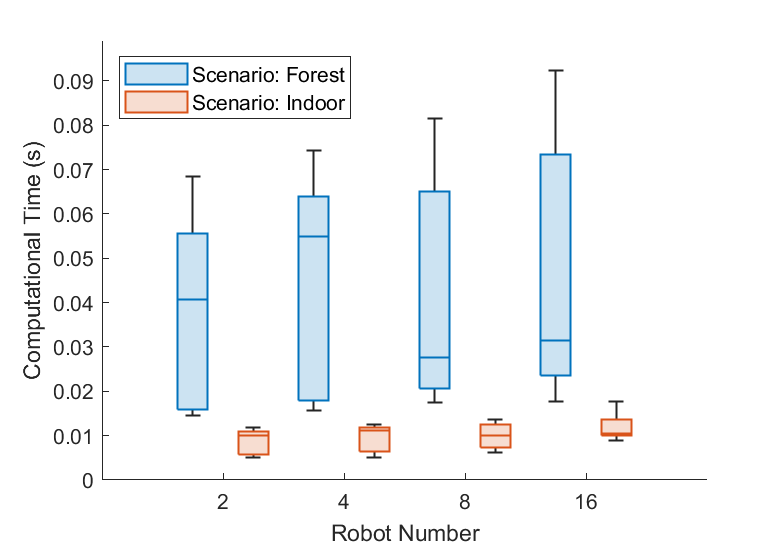

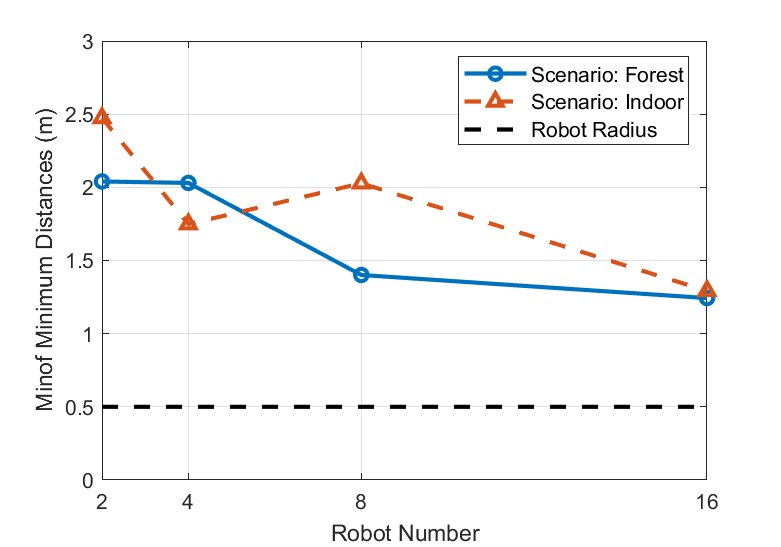

We also tested with varying robot numbers (n=2, 4, 8, 16), each configuration undergoing 10 trials, as depicted in Fig. 6. Due to the decentralized nature of our algorithm, computational time remains largely invariant as the number of robots scales up. Additionally, in more expansive environments—nearly double in obstacle density, the algorithm still satisfies real-time processing criteria. The incidence of collisions remains zero, even when covering obstacle-dense areas, and there are only negligible fluctuations in the minimum distance as the number of robots increases. This demonstrates both scalability in larger contexts and robustness in intricate environments of the proposed method.

s

V-B Comparisons of Coverage Performance

We undertake comparative evaluations with other Voronoi-based coverage methods: 1) OAVC method in [11], formulates secure areas by computing tangential boundary lines to circular obstacles and adheres to the gradient of target information; 2) Adaptive coverage method in [9], utilizes a repulsive density function concerning detected obstacles, ensuring that the newly computed centroid is positioned away from the obstacle. To be fair, the obstacles are modeled as the same shape in [11]. We evaluate these methods over 30 trials, each with randomly initialized starting positions within an 80m 80m 16m workspace. The ToI is defined by a Gaussian distribution featuring three peaks. The performance metrics are as follows: 1) Coverage ratio: The ratio of detected peaks to the total number of peaks; 2) Success rate: The fraction of trials where robots successfully converge to the ToI without experiencing deadlock or collisions within a limited time (30s) or until all peaks are detected; 3) Average time: Task execution time, considering only successful trials. The results are shown in Tab. I.

As shown in Fig. 7, utilizing the proposed method, even in highly irregular environments, all robots can maintain safe distances between each other and prevent both local minima and potential collisions. In contrast, in Fig. 8 (a), the OAVC method directs the robots toward areas of high density without accounting for the obstacle effect, thereby resulting in getting stuck in corners. In Fig. 8 (b), when multiple repulsive fields and attractive fields (target coverage) interact with each other, the adaptive method in [9] predisposes robots to entrapment. Its constrained avoidance space further exacerbates this issue, as it can reduce the navigable space and lead a group of robots to be trapped when moving collectively, thereby increasing the risk of failure.

VI Conclusions

In this work, our proposed method, which incorporates collision avoidance without entrapment, is effectively applicable across a range of unstructured settings. The approach leverages spatial decomposition and sphere flipping transformation to construct a safe Voronoi region in complex environments with considerable point cloud data. The NF is integrated to steer the robot away from pitfalls while simultaneously directing it towards the ToI. Comparisons with others highlight that our approach performs extremely well, with high success ratios and guaranteed safety. Our method also extends the traditional coverage control method, move-to-centroid policy, into more realistic scenarios. Future work includes testing our algorithm on physical robot swarms to address hardware-specific challenges and to further validate its real-world applicability.

References

- [1] S. Huang, R. S. H. Teo, W. W. L. Leong, N. Martinel, G. L. Forest, and C. Micheloni, “Coverage control of multiple unmanned aerial vehicles: A short review,” Unmanned Systems, vol. 6, no. 02, pp. 131–144, 2018.

- [2] C. Wang, S. Zhu, W. Yu, L. Song, and X. Guan, “Minimum norm coverage control of auvs for underwater surveillance with communication constraints,” in 2022 American Control Conference (ACC). IEEE, 2022, pp. 1222–1229.

- [3] J. Zhang, R. Wang, G. Yang, K. Liu, C. Gao, Y. Zhai, X. Chen, and B. M. Chen, “Sim-in-real: Digital twin based UAV inspection process,” in 2022 International Conference on Unmanned Aircraft Systems (ICUAS). IEEE, 2022, pp. 784–801.

- [4] X. Lan and M. Schwager, “Rapidly exploring random cycles: Persistent estimation of spatiotemporal fields with multiple sensing robots,” IEEE Transactions on Robotics, vol. 32, no. 5, pp. 1230–1244, 2016.

- [5] W. Luo and K. Sycara, “Voronoi-based coverage control with connectivity maintenance for robotic sensor networks,” in 2019 International Symposium on Multi-Robot and Multi-Agent Systems (MRS). IEEE, 2019, pp. 148–154.

- [6] H. Mahboubi, F. Sharifi, A. G. Aghdam, and Y. Zhang, “Distributed coordination of multi-agent systems for coverage problem in presence of obstacles,” in 2012 American Control Conference (ACC). IEEE, 2012, pp. 5252–5257.

- [7] C. Wang, S. Zhu, B. Li, L. Song, and X. Guan, “Time-varying constraint-driven optimal task execution for multiple autonomous underwater vehicles,” IEEE Robotics and Automation Letters, vol. 8, no. 2, pp. 712–719, 2022.

- [8] J. Cortes, S. Martinez, T. Karatas, and F. Bullo, “Coverage control for mobile sensing networks,” IEEE Transactions on Robotics and Automation, vol. 20, no. 2, pp. 243–255, 2004.

- [9] Y. Bai, Y. Wang, X. Xiong, M. Svinin, and E. Magid, “Adaptive multi-agent control with dynamic obstacle avoidance in a limited region,” in 2022 American Control Conference (ACC). IEEE, 2022, pp. 4695–4700.

- [10] A. Pierson and D. Rus, “Distributed target tracking in cluttered environments with guaranteed collision avoidance,” in 2017 International Symposium on Multi-Robot and Multi-Agent Systems (MRS). IEEE, 2017, pp. 83–89.

- [11] A. Abdulghafoor and E. Bakolas, “Distributed coverage control of multi-agent networks with guaranteed collision avoidance in cluttered environments,” IFAC-PapersOnLine, vol. 54, no. 20, pp. 771–776, 2021.

- [12] X. Wang, C. Gao, X. Chen, and B. M. Chen, “Fast and secure distributed multi-agent coverage control with an application to infrastructure inspection and reconstruction,” in Proceedings of the 42nd Chinese Control Conference. IEEE, 2023, pp. 5998–6005.

- [13] X. Wang, L. Xi, Y. Chen, S. Lai, F. Lin, and B. M. Chen, “Decentralized mpc-based trajectory generation for multiple quadrotors in cluttered environments,” Guidance, Navigation and Control, vol. 1, no. 02, p. 2150007, 2021.

- [14] L. Xi, X. Wang, L. Jiao, S. Lai, Z. Peng, and B. M. Chen, “GTO-MPC-based target chasing using a quadrotor in cluttered environments,” IEEE Transactions on Industrial Electronics, vol. 69, no. 6, pp. 6026–6035, 2021.

- [15] Y. Chen, S. Lai, J. Cui, B. Wang, and B. M. Chen, “GPU-accelerated incremental euclidean distance transform for online motion planning of mobile robots,” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 6894–6901, 2022.

- [16] X. Zhou, Z. Wang, H. Ye, C. Xu, and F. Gao, “Ego-planner: An esdf-free gradient-based local planner for quadrotors,” IEEE Robotics and Automation Letters, vol. 6, no. 2, pp. 478–485, 2020.

- [17] S. Liu, M. Watterson, K. Mohta, K. Sun, S. Bhattacharya, C. J. Taylor, and V. Kumar, “Planning dynamically feasible trajectories for quadrotors using safe flight corridors in 3-d complex environments,” IEEE Robotics and Automation Letters, vol. 2, no. 3, pp. 1688–1695, 2017.

- [18] C. Toumieh and A. Lambert, “Decentralized multi-agent planning using model predictive control and time-aware safe corridors,” IEEE Robotics and Automation Letters, vol. 7, no. 4, pp. 11 110–11 117, 2022.

- [19] S. Katz, G. Leifman, and A. Tal, “Mesh segmentation using feature point and core extraction,” The Visual Computer, vol. 21, pp. 649–658, 2005.

- [20] X. Zhong, Y. Wu, D. Wang, Q. Wang, C. Xu, and F. Gao, “Generating large convex polytopes directly on point clouds,” arXiv preprint arXiv:2010.08744, 2020.

- [21] H. Oleynikova, M. Burri, Z. Taylor, J. Nieto, R. Siegwart, and E. Galceran, “Continuous-time trajectory optimization for online UAV replanning,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2016, pp. 5332–5339.

- [22] F. Gao, L. Wang, B. Zhou, X. Zhou, J. Pan, and S. Shen, “Teach-repeat-replan: A complete and robust system for aggressive flight in complex environments,” IEEE Transactions on Robotics, vol. 36, no. 5, pp. 1526–1545, 2020.

- [23] O. Arslan and D. E. Koditschek, “Sensor-based reactive navigation in unknown convex sphere worlds,” The International Journal of Robotics Research, vol. 38, no. 2-3, pp. 196–223, 2019.

- [24] A. Breitenmoser, M. Schwager, J.-C. Metzger, R. Siegwart, and D. Rus, “Voronoi coverage of non-convex environments with a group of networked robots,” in 2010 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2010, pp. 4982–4989.

- [25] C. B. Barber, D. P. Dobkin, and H. Huhdanpaa, “The quickhull algorithm for convex hulls,” ACM Transactions on Mathematical Software (TOMS), vol. 22, no. 4, pp. 469–483, 1996.

- [26] D. Zhou, Z. Wang, S. Bandyopadhyay, and M. Schwager, “Fast, on-line collision avoidance for dynamic vehicles using buffered Voronoi cells,” IEEE Robotics and Automation Letters, vol. 2, no. 2, pp. 1047–1054, 2017.

- [27] X. Wang, L. Xi, Y. Ding, and B. M. Chen, “Distributed encirclement and capture of multiple pursuers with collision avoidance,” IEEE Transactions on Industrial Electronics, pp. 1–11, 2023.

- [28] S. M. LaValle, Planning algorithms. Cambridge university press, 2006.

- [29] F. Kong, X. Liu, B. Tang, J. Lin, Y. Ren, Y. Cai, F. Zhu, N. Chen, and F. Zhang, “Marsim: A light-weight point-realistic simulator for lidar-based uavs,” IEEE Robotics and Automation Letters, vol. 8, no. 5, pp. 2954–2961, 2023.

- [30] S. Ikehata, H. Yang, and Y. Furukawa, “Structured indoor modeling,” in Proceedings of the IEEE International Conference on Computer Vision (ICCV), 2015, pp. 1323–1331.