Sense of Embodiment Inducement for People with Reduced Lower-body Mobility and Sensations with Partial-Visuomotor Stimulation

Abstract.

To induce a Sense of Embodiment (SoE) toward a virtual 3D avatar during a Virtual Reality (VR) walking scenario, VR interfaces have employed visuotactile or visuomotor approaches. However, people with reduced lower-body mobility and sensation (PRLMS), who cannot feel or move their legs, would find such approaches extremely challenging. Here, we propose a partial-visuomotor technique based on upper-body motion tracking to induce SoE and positive feedback for PRLMS. We design a partial-visuomotor stimulation consisting of two distinct inputs (Button Control and Upper Motion Tracking) and two outputs (Wheelchair Motion and Gait Motion). A preliminary user study was conducted to explore subjective preference through qualitative feedback. From the qualitative results, we observed a positive response to the partial-visuomotor technique regarding SoE in the asynchronous VR experience for PRLMS.

1. Introduction

With the advancement of virtual reality (VR) and full-body motion tracking, full-body avatars have been deployed in recent research and industrial VR interfaces. A Sense of Embodiment (SoE), which refers to the subjective feeling of experiencing and owning a body (Kilteni et al., 2012), has become a crucial component of VR experiences in which the avatar is the primary medium of interaction. However, people with reduced lower-body mobility and sensation (PRLMS) due to external damage and subsequent loss of motor function cannot experience SoE during VR walking scenarios. The main reason is that existing methods require multi-modal synchronous inputs (Kokkinara and Slater, 2014) or arm swinging in a standing position (Cannavo et al., 2021; McCullough et al., 2015; Pai and Kunze, 2017; Wilson et al., 2016), neither of which is applicable to PRLMS. Previous studies mainly attempted to induce SoE in PRLMS through passive viewing of the VR environment, the visuotactile method, or treadmills. A novel barrier-free approach is therefore needed to accommodate a broad range of users, including PRLMS. This study aims to find an effective and economical method that can further increase SoE using only controllers.

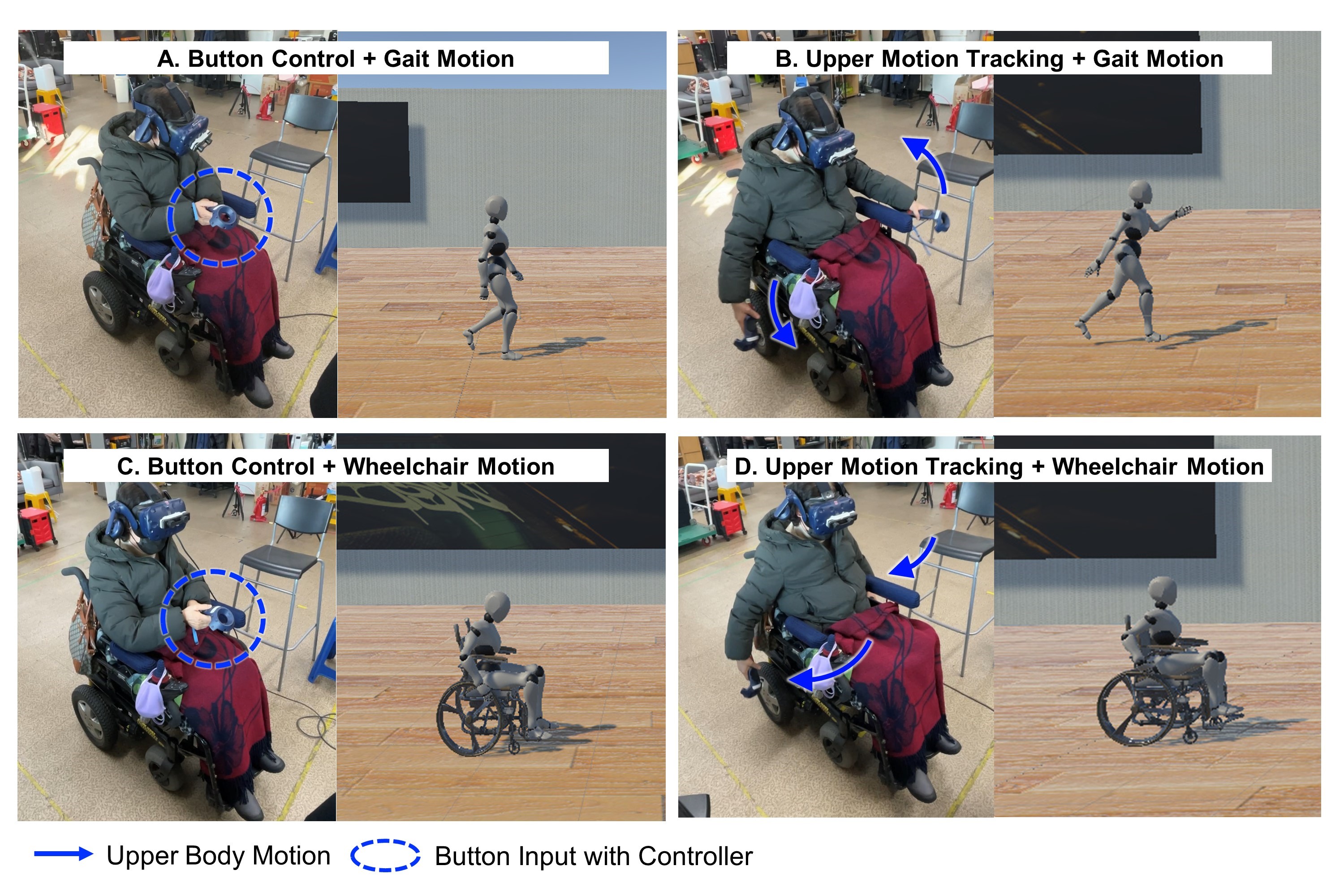

In this work, we propose partial-visuomotor stimulation, which automatically generates lower-body motion animation from upper-body motion tracking. The upper-body motion tracking is based on tracking the movement of the headset and hand controllers. We seek a way to enhance SoE inducement in situations where PRLMS inevitably have body representations that differ from the full-body walking avatars in VR (Figure 1).

2. Implementation and Apparatus

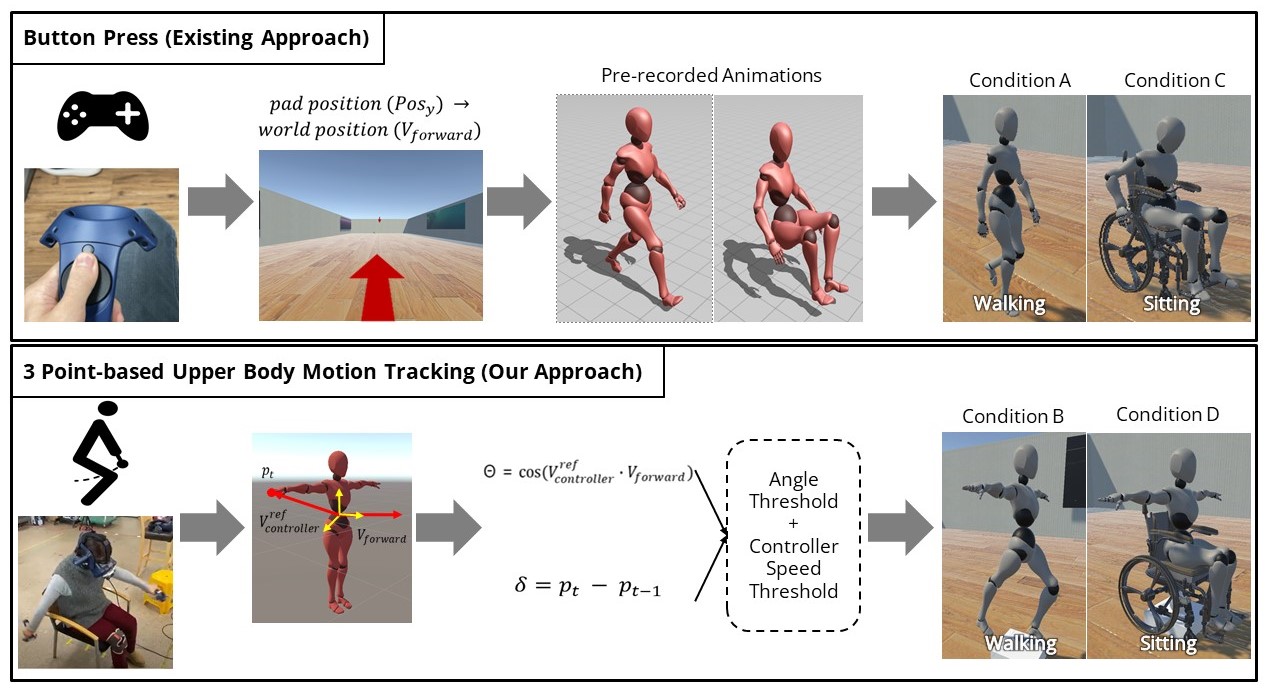

Figure 2 illustrates the implementation of four scenarios that combine two input methods (controller button manipulation and upper-body motion tracking with controllers) with two output methods (lower-body motion generation on a wheelchair and lower-body motion generation for gait).
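
The two-by-two structure of these scenarios can be written down compactly. The following Python sketch is only an illustrative data model: the names `InputMethod`, `OutputMotion`, `Condition`, and `CONDITIONS` are hypothetical and do not come from the system itself, which was built in Unity.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class InputMethod(Enum):
    BUTTON_CONTROL = "Button Control"
    UPPER_MOTION_TRACKING = "Upper Motion Tracking"


class OutputMotion(Enum):
    GAIT = "Gait Motion"
    WHEELCHAIR = "Wheelchair Motion"


@dataclass(frozen=True)
class Condition:
    """One cell of the 2x2 design (hypothetical container for illustration)."""
    label: str
    input_method: InputMethod
    output_motion: OutputMotion


# Conditions A-D: the output motion varies slowest, the input method fastest,
# so A and B share the gait output and C and D share the wheelchair output.
CONDITIONS = [
    Condition(label, input_method, output_motion)
    for label, (output_motion, input_method) in zip(
        "ABCD", product(OutputMotion, InputMethod)
    )
]

for c in CONDITIONS:
    print(f"Condition {c.label}: {c.input_method.value} + {c.output_motion.value}")
```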

(1) Condition A: Button Control + Gait Motion. The HTC Vive controller pad was used for avatar manipulation, with avatar movement limited to the forward and backward directions. We adopted the automated animation from Mixamo for the gait motion.

(2) Condition B: Upper Motion Tracking + Gait Motion. For upper-body motion tracking, we used the SteamVR Plugin to track the movement of the headset and two controllers, along with the VR Final IK Unity asset. To calculate the relative position of the hand controllers, we first define an artificial reference coordinate that the hand controllers can refer to. Then, we compute the angle between the hand controller location relative to the reference coordinate and a forward vector, measured about the x-axis (right vector).

For the lower-body gait motion, we changed the local joint angles of each leg at a set time interval. Both legs change angle when the hand controller angle falls within a pre-defined range (-130°-90°). If the user stops moving their hands, the character also stops moving.

(3) Condition C: Button Control + Wheelchair Motion. Condition C uses the same input method as Condition A. For the wheelchair motion, we embedded the “Wheelchair” animation. We added “wheel pulling hand gestures” and “wheel rotation” animations when moving forward.

(4) Condition D: Upper Motion Tracking + Wheelchair Motion. The position of the hand controller is used as the input. The user swings both hands back and forth simultaneously, as if pushing the wheelchair. To calculate the location of the hand controller, we assigned a fixed reference similar to Condition B. If the angle between the location of the hand controller and the fixed reference about the x-axis (right vector) is between -130°-100°, the move-forward command is triggered. We fixed the local rotation of the leg joints to set the lower-body animation to a general sitting pose.
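
The angle-based trigger described for Conditions B and D can be sketched in a few lines. The Python below is a minimal illustration under stated assumptions, not the Unity implementation: the names `signed_angle_deg`, `hand_angle_deg`, and `GaitDriver`, the right-handed sign convention, the movement threshold `min_delta_deg`, the sinusoidal leg swing, and the numeric bounds in the usage lines are all hypothetical. The angle bounds are passed in by the caller, and the values shown are placeholders rather than the thresholds used in the study.

```python
import math


def signed_angle_deg(v_from, v_to, axis):
    """Angle in degrees from v_from to v_to; the sign follows `axis` (assumed convention)."""
    cross = (
        v_from[1] * v_to[2] - v_from[2] * v_to[1],
        v_from[2] * v_to[0] - v_from[0] * v_to[2],
        v_from[0] * v_to[1] - v_from[1] * v_to[0],
    )
    dot = sum(a * b for a, b in zip(v_from, v_to))
    cross_norm = math.sqrt(sum(c * c for c in cross))
    unsigned = math.degrees(math.atan2(cross_norm, dot))
    sign = 1.0 if sum(a * c for a, c in zip(axis, cross)) >= 0.0 else -1.0
    return sign * unsigned


def hand_angle_deg(hand_pos, reference_pos,
                   forward=(0.0, 0.0, 1.0), right=(1.0, 0.0, 0.0)):
    """Angle of the hand, relative to the reference point, about the right (x) axis."""
    rel = [h - r for h, r in zip(hand_pos, reference_pos)]
    # Remove the sideways component so only rotation about the right axis remains.
    along_right = sum(a * b for a, b in zip(rel, right))
    projected = [a - along_right * b for a, b in zip(rel, right)]
    return signed_angle_deg(forward, projected, right)


class GaitDriver:
    """Advances a leg swing only while the hand moves inside the angle range."""

    def __init__(self, lo_deg, hi_deg, swing_amplitude_deg=25.0,
                 step_period_s=1.0, min_delta_deg=0.5):
        self.lo_deg = lo_deg
        self.hi_deg = hi_deg
        self.swing_amplitude_deg = swing_amplitude_deg  # assumed value
        self.step_period_s = step_period_s              # assumed value
        self.min_delta_deg = min_delta_deg              # assumed "hand is moving" threshold
        self.phase = 0.0
        self.prev_angle = None
        self.leg_angles = (0.0, 0.0)  # (left, right) hip offsets in degrees

    def update(self, angle_deg, dt):
        moved = (self.prev_angle is not None
                 and abs(angle_deg - self.prev_angle) >= self.min_delta_deg)
        self.prev_angle = angle_deg
        in_range = self.lo_deg <= angle_deg <= self.hi_deg
        if moved and in_range:
            # Legs swing in antiphase; otherwise the last pose is held.
            self.phase = (self.phase + dt / self.step_period_s) % 1.0
            swing = self.swing_amplitude_deg * math.sin(2.0 * math.pi * self.phase)
            self.leg_angles = (swing, -swing)
        return self.leg_angles


# Usage with placeholder bounds and positions (metres, reference near the hip).
driver = GaitDriver(lo_deg=60.0, hi_deg=120.0)
angle = hand_angle_deg(hand_pos=(0.2, -0.5, 0.1), reference_pos=(0.2, 0.0, 0.0))
left_leg, right_leg = driver.update(angle, dt=0.02)
```

For the wheelchair output of Condition D, the same range check would instead issue a move-forward command while the leg joints stay fixed in the sitting pose.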

3. Results and Conclusion

We recruited a total of 8 participants (6 female, 2 male) ranging in age from 36 to 64 (M=57, SD=9.20) who have lower-limb paralysis and are wheelchair users (paraplegia due to spinal cord injury=3, lower body paralysis caused by polio=3, left hemiplegia due to cerebral infarction=1, leg amputee=1). All participants experienced all four conditions in a within-subject design.

Participants reported positive feedback on Condition B: “I think the motion of standing up is much better (P6)”, “Button pressing felt convenient, but it didn’t feel like I was moving my body (P2)”, “The upper body+walking was felt like real walking which makes me swinging my arms more enthusiastically in the subsequent trial (P7)”, and “I felt like I was walking while moving my feet in the upper motion tracking+gait motion (P8)”. Participants preferred walking virtually over riding a wheelchair, even though they were sitting in a wheelchair in the real world. P7 mentioned, “It is usually physically/mentally exhausting to ride a wheelchair. For example, I tend to be out of breath even if I ride an electric wheelchair”. Furthermore, P8 stated, “The wheelchair conditions were not very interesting because it just simulates the real world.”

This work explores a prospective method of inducing SoE in a VR walking scenario for PRLMS. In the preliminary study with PRLMS, we observed more positive feedback regarding SoE for the walking experience based on upper motion tracking than for the other conditions. We expect that future research with a larger participant pool will provide richer insights. Moreover, the findings will serve as a foundation for developing a variety of VR activities for PRLMS that involve upper-body motion, such as hiking, fishing, and climbing.

Acknowledgements.

This work was supported by a Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE) (P0012746, The Competency Development Program for Industry Specialist) and a KAIST grant (G04210059). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the funding agency.

References

- Cannavo et al. (2021) Alberto Cannavo, Davide Calandra, F. Gabriele Prattico, Valentina Gatteschi, and Fabrizio Lamberti. 2021. An Evaluation Testbed for Locomotion in Virtual Reality. IEEE Transactions on Visualization and Computer Graphics 27, 3 (Mar 2021), 1871–1889. https://doi.org/10.1109/tvcg.2020.3032440

- Kilteni et al. (2012) Konstantina Kilteni, Raphaela Groten, and Mel Slater. 2012. The sense of embodiment in virtual reality. Presence: Teleoperators and Virtual Environments 21, 4 (2012), 373–387.

- Kokkinara and Slater (2014) Elena Kokkinara and Mel Slater. 2014. Measuring the effects through time of the influence of visuomotor and visuotactile synchronous stimulation on a virtual body ownership illusion. Perception 43, 1 (2014), 43–58.

- McCullough et al. (2015) Morgan McCullough, Hong Xu, Joel Michelson, Matthew Jackoski, Wyatt Pease, William Cobb, William Kalescky, Joshua Ladd, and Betsy Williams. 2015. Myo Arm: Swinging to Explore a VE. In Proceedings of the ACM SIGGRAPH Symposium on Applied Perception (Tübingen, Germany) (SAP ’15). Association for Computing Machinery, New York, NY, USA, 107–113. https://doi.org/10.1145/2804408.2804416

- Pai and Kunze (2017) Yun Suen Pai and Kai Kunze. 2017. Armswing: Using Arm Swings for Accessible and Immersive Navigation in AR/VR Spaces. In Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia (Stuttgart, Germany) (MUM ’17). Association for Computing Machinery, New York, NY, USA, 189–198. https://doi.org/10.1145/3152832.3152864

- Wilson et al. (2016) Preston Tunnell Wilson, William Kalescky, Ansel MacLaughlin, and Betsy Williams. 2016. VR Locomotion: Walking > Walking in Place > Arm Swinging. In Proceedings of the 15th ACM SIGGRAPH Conference on Virtual-Reality Continuum and Its Applications in Industry - Volume 1 (Zhuhai, China) (VRCAI ’16). Association for Computing Machinery, New York, NY, USA, 243–249. https://doi.org/10.1145/3013971.3014010