Authors: Honggyu, Zhixiang, Xuepeng, Tae-Kyun
Institutions: KAIST; The University of Sheffield; Imperial College London

Semi-Supervised Object Detection with Object-wise Contrastive Learning and Regression Uncertainty

Abstract

Semi-supervised object detection (SSOD) aims to boost detection performance by leveraging extra unlabeled data. The teacher-student framework has been shown to be promising for SSOD, in which a teacher network generates pseudo-labels for unlabeled data to assist the training of a student network. Since the pseudo-labels are noisy, filtering them is crucial to exploit the potential of such a framework. Unlike existing suboptimal methods, we propose a two-step pseudo-label filtering for the classification and regression heads in a teacher-student framework. For the classification head, OCL (Object-wise Contrastive Learning) regularizes object representation learning using unlabeled data, which improves pseudo-label filtering by enhancing the discriminativeness of the classification score. It is designed to pull together objects in the same class and push away objects from different classes. For the regression head, we further propose RUPL (Regression-Uncertainty-guided Pseudo-Labeling) to learn the aleatoric uncertainty of object localization for label filtering. By jointly filtering the pseudo-labels for the classification and regression heads, the student network receives better guidance from the teacher network for the object detection task. Experimental results on the Pascal VOC and MS-COCO datasets show that our method improves over its baseline and achieves competitive performance compared to existing methods.

1 Introduction

Semi-Supervised Object Detection (SSOD) leverages both labeled data and extra unlabeled data to learn object detectors. Previous works [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister, Jeong et al.(2019)Jeong, Lee, Kim, and Kwak, Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li, Yang et al.(2021)Yang, Wei, Wang, Hua, and Zhang, Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda, Tang et al.(2021b)Tang, Chen, Luo, and Zhang, Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang, Li et al.(2022b)Li, Wu, Shrivastava, and Davis, Zhang et al.(2022)Zhang, Pan, and Wang, Zheng et al.(2022)Zheng, Chen, Cai, Ye, and Tan, Wang et al.(2021b)Wang, Li, Guo, and Wang, Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu, Chen et al.(2022a)Chen, Chen, Yang, Xuan, Song, Xie, Pu, Song, and Zhuang, Mi et al.(2022)Mi, Lin, Zhou, Shen, Luo, Sun, Cao, Fu, Xu, and Ji, Guo et al.(2022)Guo, Mu, Chen, Wang, Yu, and Luo, Chen et al.(2022b)Chen, Li, Chen, Wang, Zhang, and Hua, Kim et al.(2022)Kim, Jang, Seo, Jeong, Na, and Kwak] have proposed various methods to exploit the unlabeled data. Among them, one promising solution is to generate pseudo-labels for the unlabeled data with a pre-trained model and then use them, along with the original labeled data, to train the target object detector. When different augmentations are applied to the same image, the consistency between the resulting labels can serve as extra knowledge to train the target model. This consistency can be exploited by using the pseudo-labels generated from weakly augmented images to regularize the model's predictions on strongly augmented images [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister]. A teacher-student framework [Tarvainen and Valpola(2017)] further refines the pseudo-labels through mutual learning between teacher and student networks: the teacher network generates pseudo-labels of unlabeled data to assist the training of the student network, and the student network transfers its updated knowledge to the weights of the teacher network.


To train the detector with pseudo-labels, the quality of the generated pseudo-labels is critical to the detection performance. For the detection task, the pseudo-labels are defined for each region of interest and include the class labels and bounding boxes. Hence, the quality of both the classification and the localization matters when filtering out unreliable pseudo-labels. The classification score is widely adopted to select region proposals with confidence higher than a pre-defined threshold. The selected labeled regions are used to train both the classification and regression heads in the detector. There are some heuristic designs to measure the localization quality of the generated pseudo-labels, e.g., prediction consistency [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] and interval classification uncertainty [Li et al.(2022b)Li, Wu, Shrivastava, and Davis]. While these techniques together with the classification score are effective, we argue that the existing classification scores and localization optimization are suboptimal, since the lack of labeled data makes the classification score less discriminative, and the measurement of the pseudo-labels' localization quality is less investigated. The classification scores mainly distill knowledge from labeled data to evaluate the quality of unlabeled data, which under-exploits the unlabeled data. To tackle these challenges, we extend existing methods in self-supervised representation learning to the SSOD task. Instead of using heuristic designs, we further adopt uncertainty to measure the localization quality, which turns out to be an effective indicator as shown in Fig. 1.

In this paper, we propose an effective method to generate pseudo-labels for semi-supervised object detection. Specifically, we present a two-step pseudo-label filtering for the classification and regression heads in a teacher-student framework. For the classification head, the classification score is used to filter out unreliable pseudo class labels. We aim to improve the classification filtering by enhancing the capacity of the classification branch with the help of the unlabeled data. We introduce an object-wise contrastive learning (OCL) loss for the feature extractor in the classification head. This contrastive loss is defined on object regions, with semantically similar regions defined as positive pairs. The loss pulls together the object representations of positive pairs and pushes away the representations of negative pairs. For the regression head, we propose regression-uncertainty-guided pseudo-labeling (RUPL), which uses uncertainty as the indicator to filter out pseudo-labels. We design an uncertainty head in parallel to the classification and regression heads to learn the aleatoric uncertainty of bounding boxes. We only select pseudo-labels with low uncertainties to train our models. We combine OCL and RUPL in our framework to improve the quality of pseudo-labels for the detection task. To demonstrate the effectiveness of our framework, we perform experiments on standard object detection benchmarks: PASCAL VOC [Everingham et al.(2010)Everingham, Gool, Williams, Winn, and Zisserman] and MS-COCO [Lin et al.(2014)Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick]. We experimentally show that OCL and RUPL are complementary and have a synergetic effect. Our framework also achieves competitive performance compared to existing methods, without geometric or improved augmentations.

Our contributions are summarized as follows: (1) We propose an integrated framework that addresses filtering pseudo-labels for classification and regression in SSOD. (2) We propose OCL, which improves the discriminativeness of the classification score to enhance the filtering of pseudo-labels for classification. (3) We propose RUPL, which models bounding box localization quality via uncertainty and removes misplaced pseudo-labels for regression. (4) We experimentally show the synergetic effect between OCL and RUPL and demonstrate the effectiveness of the overall framework on standard object detection benchmarks.

2 Related Work

Semi-Supervised Object Detection. SSOD methods can be divided into two categories: pseudo-labeling [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister, Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang] methods and consistency regularization [Jeong et al.(2019)Jeong, Lee, Kim, and Kwak, Jeong et al.(2021)Jeong, Verma, Hyun, Kannala, and Kwak, Tang et al.(2021a)Tang, Ramaiah, Wang, Xu, and Xiong] methods. Pseudo-labeling methods regularize the model using predictions that a pre-trained model generates on unlabeled data. Recent pseudo-labeling-based works [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda, Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li, Tang et al.(2021b)Tang, Chen, Luo, and Zhang, Yang et al.(2021)Yang, Wei, Wang, Hua, and Zhang] adopt the teacher-student framework [Tarvainen and Valpola(2017)]. In this framework, the teacher's predictions guide the student and the student's weight parameters update the teacher, which leads to remarkable performance improvement. Since pseudo-labels may contain noise that degrades the model performance, classification scores are used to eliminate unreliable ones. To improve the pseudo-label quality, IT [Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li] and ISTM [Yang et al.(2021)Yang, Wei, Wang, Hua, and Zhang] propose ensemble-based methods, and ACRST [Zhang et al.(2022)Zhang, Pan, and Wang] adopts a multi-label classifier at the image level to use high-level information. Furthermore, to eliminate misaligned pseudo-labels, 3DIoUMatch [Wang et al.(2021a)Wang, Cong, Litany, Gao, and Guibas] adopts IoU prediction [Jiang et al.(2018)Jiang, Luo, Mao, Xiao, and Jiang] in semi-supervised 3D object detection, ST [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] utilizes regression prediction consistency, and RPL [Li et al.(2022b)Li, Wu, Shrivastava, and Davis] reformulates regression as classification. Concurrent work [Liu et al.(2022)Liu, Ma, and Kira] adopts uncertainty estimation and guides the student if pseudo-labels have lower uncertainty than the student's predictions. In contrast to existing works, we propose an integrated framework that improves pseudo-label quality by improving the discriminativeness of object-wise features and modeling regression uncertainty.

Contrastive Learning. Contrastive Learning (CL) decreases the distance between positive paired samples and increases the distance between negative ones. Representation learning [Chen et al.(2021)Chen, Xie, and He, Chen et al.(2020)Chen, Kornblith, Norouzi, and Hinton, van den Oord et al.(2018)van den Oord, Li, and Vinyals] has succeeded through self-supervised CL that treats different views of the same image as a positive pair. By extension, SupCon [Khosla et al.(2020)Khosla, Teterwak, Wang, Sarna, Tian, Isola, Maschinot, Liu, and Krishnan] proposes supervised CL that makes a positive pair for images from the same class. Recent works [Yang et al.(2022)Yang, Wu, Zhang, Jiang, Liu, Zheng, Zhang, Wang, and Zeng, Jiang et al.(2021)Jiang, Shi, Tian, Lai, Liu, Fu, and Jia] apply CL that makes positive pairs if unlabeled images or points have the same predicted class. In SSOD, PL [Tang et al.(2021a)Tang, Ramaiah, Wang, Xu, and Xiong] adopts CL that treats overlapped region proposals as positive pairs and otherwise as negative pairs even if they have the same category, which hinders the representation learning. By contrast, in SSOD, we first introduce CL that leverages predictions to identify object regions from unlabeled images and makes positive pairs for objects from the same class to improve the discriminativeness.

Uncertainty Estimation. A seminal work [Kendall and Gal(2017)] captures epistemic and aleatoric uncertainties in the deep learning frameworks of computer vision. Recent works [He et al.(2019)He, Zhu, Wang, Savvides, and Zhang, Choi et al.(2019)Choi, Chun, Kim, and Lee] employ aleatoric uncertainty in bounding box regression to identify well-localized bounding boxes. UPS [Rizve et al.(2021)Rizve, Duarte, Rawat, and Shah] applies aleatoric uncertainty in semi-supervised image classification to remove noisy pseudo-labels. In contrast, our work adopts aleatoric uncertainty in SSOD to model the localization quality of pseudo-labels and select reliable pseudo-labels for regression.

3 Proposed Method

We first define the problem in Sec. 3.1, then show the overall framework in Sec. 3.2. Finally, we detail the proposed OCL in Sec. 3.3 and RUPL in Sec. 3.4.

3.1 Problem Definition

Compared with supervised object detection, semi-supervised object detection aims to utilize additional unlabeled images to improve the object detection accuracy. In the teacher-student framework, our task is to generate pseudo-labels from unlabeled data using the teacher network to train the student network. Specifically, we are given a labeled dataset, which contains labeled images with their ground-truth labels, and an unlabeled dataset, which contains only unlabeled images. The ground-truth label consists of each object's class category and bounding box. The goal of pseudo-label filtering is to generate, from the unlabeled dataset, two sets of pseudo-labels that are used to train the classification and regression heads in the detection model, respectively.

3.2 Framework Overview

To utilize the unlabeled data in semi-supervised object detection, we propose object-wise contrastive learning (OCL) and regression-uncertainty-guided pseudo-labeling (RUPL) to select reliable pseudo-labels for the training of classification and regression, respectively. We apply our proposed modules to a simple baseline [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda]. This baseline utilizes the teacher-student framework and pseudo-labeling, which are commonly adopted in SSOD frameworks. Fig. 2 shows our proposed framework with OCL and RUPL.

Training stage. The teacher-student framework has a student network and a teacher network. At the pre-training stage, the student network is trained with a supervised loss (Eq. 1) using the labeled dataset.

| (1) |

The supervised loss consists of classification and regression losses for both the RPN and the ROI head. Specifically, we follow [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] to use cross-entropy loss for RPN classification, smooth L1 loss [Girshick(2015)] for RPN regression, and focal loss [Lin et al.(2020)Lin, Goyal, Girshick, He, and Dollár] for ROI classification. We adopt the uncertainty-aware regression loss [Kendall and Gal(2017)] for ROI regression. At the mutual learning stage, the teacher network is initialized by the pre-trained student network. Both labeled and unlabeled images are used at this stage. The teacher network generates the pseudo-labels of unlabeled images to train the student network. The overall loss of the student network is

| (2) |

where the two terms added to the supervised loss are the unsupervised loss and the object-wise contrastive loss, respectively. Both are computed on unlabeled images. Our unsupervised loss consists of loss terms similar to those of the supervised loss. Here, the classification terms are computed on the set of pseudo-labels for classification, and the regression terms are computed on the set of pseudo-labels for regression. We use the smooth L1 loss for the ROI regression term of the unsupervised loss.
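As a reading aid, one decomposition consistent with the description above can be written as follows. This is only a sketch: all symbol names are assumptions chosen for illustration, and the weights $\lambda_{u}$ and $\lambda_{c}$ are hypothetical, since the text does not say whether the terms are weighted.

```latex
\begin{align*}
\mathcal{L}_{sup} &= \mathcal{L}^{rpn}_{cls} + \mathcal{L}^{rpn}_{reg}
                   + \mathcal{L}^{roi}_{cls} + \mathcal{L}^{roi}_{reg}, \\
\mathcal{L}_{unsup} &= \mathcal{L}^{rpn}_{cls}(\hat{Y}^{cls}) + \mathcal{L}^{roi}_{cls}(\hat{Y}^{cls})
                     + \mathcal{L}^{rpn}_{reg}(\hat{Y}^{reg}) + \mathcal{L}^{roi}_{reg}(\hat{Y}^{reg}), \\
\mathcal{L} &= \mathcal{L}_{sup} + \lambda_{u}\,\mathcal{L}_{unsup} + \lambda_{c}\,\mathcal{L}_{cont}.
\end{align*}
```

Here $\hat{Y}^{cls}$ and $\hat{Y}^{reg}$ stand for the pseudo-label sets for classification and regression, so the two filtering steps affect different terms of the unsupervised loss.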

The teacher network is iteratively updated by the student network's weight parameters via exponential moving average [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda].
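The exponential-moving-average update mentioned above can be sketched in a few lines. This is a minimal illustration, assuming weights are stored as plain lists in a dictionary; the function name `ema_update` and the decay value are hypothetical and not taken from the paper.

```python
def ema_update(teacher, student, decay=0.999):
    """Return new teacher weights: decay * teacher + (1 - decay) * student.

    `teacher` and `student` map parameter names to lists of floats.
    The default decay is an illustrative value, not the paper's setting.
    """
    if teacher.keys() != student.keys():
        raise ValueError("teacher and student must have the same parameters")
    return {
        name: [decay * t + (1.0 - decay) * s
               for t, s in zip(teacher[name], student[name])]
        for name in teacher
    }


# Toy example with a single two-element parameter.
teacher = {"w": [1.0, 2.0]}
student = {"w": [0.0, 4.0]}
teacher = ema_update(teacher, student, decay=0.9)
```

With a decay close to 1 the teacher changes slowly, which is why it tends to give more stable pseudo-labels than the student itself.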

Inference stage. The teacher network is used at the inference stage to detect objects for a given image. With the uncertainty branch, our model also predicts the aleatoric uncertainty of a bounding box, which can be interpreted as the confidence of the boundary location.

3.3 Object-wise Contrastive Learning

In the classification head, we propose the object-wise contrastive learning (OCL) to regularize the feature representation of ROI objects. We visualize the details of OCL and show an illustration in Fig. 3.

To use the contrastive loss, we construct positive and negative pairs of ROI objects within each batch during training. We first predict the class category, classification score, and bounding box for each ROI object detected by the teacher network, which takes the weakly augmented unlabeled images as input. We denote the number of detected ROI objects in a batch as N. We then input two strongly augmented views of the images to the teacher and student networks, respectively, and extract the ROI features of both networks using the predicted bounding boxes. The teacher and student ROI features are then projected to a low-dimensional feature space and L2-normalized to generate the embeddings used in the contrastive loss.

As the predicted class is noisy, we take the classification score into consideration to define ROI object pairs, as shown in Eq. 3. A pair is positive if the i-th object feature and the j-th object feature belong to the same ROI. A pair is also treated as positive if the two features are not from the same ROI but have the same class with high confidence.
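The pairing rule described above can be sketched as a small mask-building function. This is a hedged illustration rather than the paper's exact definition: the function name, the binary 0/1 mask, the requirement that both scores exceed the threshold, and the threshold value 0.7 are all assumptions.

```python
def positive_pair_mask(classes, scores, score_threshold):
    """Build an N x N 0/1 matrix; entry [i][j] is 1 for a positive pair.

    Index i refers to an ROI seen by one network and j to an ROI seen by
    the other, so i == j means "same ROI under two augmentations".
    """
    n = len(classes)
    mask = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            same_roi = i == j
            # Assumption: both predictions must be confident.
            confident_same_class = (
                classes[i] == classes[j]
                and scores[i] > score_threshold
                and scores[j] > score_threshold
            )
            if same_roi or confident_same_class:
                mask[i][j] = 1
    return mask


# Four detected ROIs; the last "dog" is a low-confidence prediction.
classes = ["dog", "dog", "cat", "dog"]
scores = [0.95, 0.90, 0.97, 0.40]
mask = positive_pair_mask(classes, scores, score_threshold=0.7)
```

In this toy batch the two confident dogs are paired with each other, while the low-confidence dog is only paired with itself, which limits the effect of a possibly wrong class prediction.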

| (3) |

The threshold in Eq. 3 is a classification score threshold for contrastive learning. Our contrastive loss is based on the supervised contrastive loss [Khosla et al.(2020)Khosla, Teterwak, Wang, Sarna, Tian, Isola, Maschinot, Liu, and Krishnan], and is formulated as

| (4) |

where the similarities are scaled by a temperature parameter, and the indicator function equals 1 if its condition is true and 0 otherwise. Note that, for the i-th ROI object, we normalize the loss by the number of positive pairs rather than by the similarity. This aims to reduce the influence of misclassified predictions. We adopt the symmetrized loss [Chen et al.(2021)Chen, Xie, and He] to compute our object-wise contrastive loss as

| (5) |

where the second term is the same loss as the first but with the augmentations for the teacher and student networks swapped. Through OCL (Eq. 5), intra-class objects are encouraged to have similar feature representations, and inter-class objects are encouraged to have different feature representations. As a result, we can select pseudo-labels for the classification task as

| (6) |

where the pseudo-labels for each unlabeled image consist of the class category and bounding box of each selected object, and an object is selected when its classification score exceeds a predefined threshold.
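The object-wise contrastive loss of Eq. 4 and its symmetrized form in Eq. 5 can be illustrated with a small supervised-contrastive-style sketch. It is written under stated assumptions: student embeddings act as anchors and teacher embeddings as keys, the two directions are summed, the positive mask is given as a 0/1 matrix, and the temperature 0.2 is a hypothetical value. None of these choices is confirmed by the text.

```python
import math


def l2_normalize(vector):
    """Scale a non-zero vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def contrastive_loss(student_feats, teacher_feats, positive, temperature=0.2):
    """SupCon-style loss; each anchor is averaged over its positive pairs."""
    if not student_feats:
        return 0.0
    anchors = [l2_normalize(f) for f in student_feats]
    keys = [l2_normalize(f) for f in teacher_feats]
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [sum(a * k for a, k in zip(anchor, key)) / temperature
                  for key in keys]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(v - peak) for v in logits))
        positives = [j for j, flag in enumerate(positive[i]) if flag]
        if positives:
            total -= sum(logits[j] - log_denominator for j in positives) / len(positives)
    return total / len(anchors)


def symmetrized_loss(student_a, teacher_b, student_b, teacher_a, positive,
                     temperature=0.2):
    """Sum of the loss for one augmentation assignment and the swapped one."""
    return (contrastive_loss(student_a, teacher_b, positive, temperature)
            + contrastive_loss(student_b, teacher_a, positive, temperature))


identity = [[1, 0], [0, 1]]
aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 3.0]], identity)
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 2.0], [3.0, 0.0]], identity)
```

In the toy example, `aligned` is smaller than `swapped`, because the loss is low when each anchor is most similar to its own positive key.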

3.4 Regression-Uncertainty-guided Pseudo-Labeling

We propose regression-uncertainty-guided pseudo-labeling (RUPL) to utilize regression uncertainty to filter out unreliable bounding boxes. Following [He et al.(2019)He, Zhu, Wang, Savvides, and Zhang], we add an uncertainty head in parallel to the classification and regression heads to predict the regression uncertainty. We use the uncertainty-aware regression loss [Kendall and Gal(2017)] as the student network's bounding box regression loss (this loss is only applied to labeled images),

| (7) |

where the loss compares the predicted bounding box offset with the ground-truth bounding box offset, and a hyperparameter controls the effect of the uncertainty term. During training, we apply this loss to each of the four boundaries of a bounding box. During inference, we use the average of these four uncertainties as the regression uncertainty of the bounding box. We can then derive the selection of pseudo-labels for regression as

| (8) |

where the pseudo-labels for each unlabeled image consist of the class category and bounding box of each selected object, and an object is selected when its classification score and regression uncertainty pass their respective predefined thresholds. By learning the regression uncertainty, we provide an alternative way to capture the reliability of pseudo-labels without additional forwarding or reformulation.
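The two filtering steps (Eq. 6 and Eq. 8) and the uncertainty-aware loss can be sketched together as follows. This is an illustration under assumptions, not the paper's exact formulation: the loss uses the log-variance form of the heteroscedastic loss of Kendall and Gal with a hypothetical `weight` on the uncertainty term, the same score threshold is reused for both pseudo-label sets, and the score threshold 0.7 is made up (the uncertainty threshold 0.5 is the best value in the threshold ablation).

```python
import math


def uncertainty_aware_loss(pred, target, log_var, weight=1.0):
    """Heteroscedastic loss for one box side: large predicted variance
    down-weights the residual but pays a penalty scaled by `weight`."""
    residual = pred - target
    return 0.5 * math.exp(-log_var) * residual ** 2 + 0.5 * weight * log_var


def box_uncertainty(side_uncertainties):
    """Average the four per-side uncertainties into one value per box."""
    if len(side_uncertainties) != 4:
        raise ValueError("expected one uncertainty per box side")
    return sum(side_uncertainties) / 4.0


def filter_pseudo_labels(detections, score_threshold, uncertainty_threshold):
    """Return (classification pseudo-labels, regression pseudo-labels)."""
    cls_labels = [d for d in detections if d["score"] > score_threshold]
    reg_labels = [
        d for d in cls_labels
        if box_uncertainty(d["side_uncertainties"]) < uncertainty_threshold
    ]
    return cls_labels, reg_labels


detections = [
    {"cls": "dog", "box": (10, 20, 110, 220), "score": 0.95,
     "side_uncertainties": (0.2, 0.3, 0.1, 0.2)},
    {"cls": "cat", "box": (50, 60, 90, 120), "score": 0.92,
     "side_uncertainties": (0.8, 0.9, 0.7, 0.6)},
    {"cls": "car", "box": (0, 0, 30, 40), "score": 0.40,
     "side_uncertainties": (0.1, 0.1, 0.1, 0.1)},
]
cls_labels, reg_labels = filter_pseudo_labels(
    detections, score_threshold=0.7, uncertainty_threshold=0.5)
```

In this toy batch the cat is kept for classification but dropped for regression, because its box is confidently classified yet poorly localized; the car is dropped from both sets.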

4 Experiments

4.1 Experimental Settings

Following previous works [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda], we evaluate our method on Pascal VOC [Everingham et al.(2010)Everingham, Gool, Williams, Winn, and Zisserman] and MS-COCO [Lin et al.(2014)Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick]. We conduct experiments using different settings. (1) VOC [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda]: VOC07-trainval and VOC12-trainval are used as labeled and unlabeled datasets, respectively. We also show results using COCO20cls [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] as an additional unlabeled dataset. VOC07-test is used to evaluate. (2) COCO-standard [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda]: We randomly select 1%/5%/10% samples from COCO2017 train dataset as our labeled datasets, and the rest of them as unlabeled datasets. COCO2017 validation set is used to evaluate. (3) COCO-35k [Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang]: We use a subset of COCO2014 validation set as a labeled dataset and COCO2014 training set as an unlabeled dataset. COCO2014 minival is used to evaluate. (4) COCO-additional [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda]: We use COCO2017 train dataset and COCO2017 unlabeled dataset as labeled and unlabeled datasets, respectively. COCO2017 validation set is used to evaluate.

4.2 Implementation details

Following [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister, Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda], our object detector is Faster-RCNN [Ren et al.(2015)Ren, He, Girshick, and Sun] with a ResNet-50 backbone and FPN [Lin et al.(2017)Lin, Dollár, Girshick, He, Hariharan, and Belongie]. For the VOC and VOC with COCO20cls settings, we train our model for 60k and 90k iterations, respectively, including 12k iterations for pre-training. For the COCO-standard setting, we pre-train for 5k/20k/40k iterations for 1%/5%/10% of COCO-standard and continue training until 180k iterations. For the COCO-35k and COCO-additional settings, models are pre-trained/trained for 12k/180k and 90k/360k iterations, respectively. More training details, including augmentation strategies and model architecture, are in the supplementary.
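The schedule above can be collected into a small configuration table. This is only a convenience sketch: the dictionary layout and names are hypothetical, and for COCO-35k and COCO-additional it assumes that the second number is the total iteration count, which the text does not state explicitly.

```python
# Iteration counts taken from the text; keys and layout are illustrative.
SCHEDULES = {
    "VOC": {"pretrain": 12_000, "total": 60_000},
    "VOC + COCO20cls": {"pretrain": 12_000, "total": 90_000},
    "COCO-standard 1%": {"pretrain": 5_000, "total": 180_000},
    "COCO-standard 5%": {"pretrain": 20_000, "total": 180_000},
    "COCO-standard 10%": {"pretrain": 40_000, "total": 180_000},
    # Assumption: 180k and 360k are totals that include pre-training.
    "COCO-35k": {"pretrain": 12_000, "total": 180_000},
    "COCO-additional": {"pretrain": 90_000, "total": 360_000},
}


def mutual_learning_iters(setting):
    """Iterations left for the mutual learning stage after pre-training."""
    schedule = SCHEDULES[setting]
    return schedule["total"] - schedule["pretrain"]


voc_mutual = mutual_learning_iters("VOC")
```

For example, the VOC setting leaves 48k of its 60k iterations for the mutual learning stage.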

4.3 Results

VOC. Experimental results on Pascal VOC [Everingham et al.(2010)Everingham, Gool, Williams, Winn, and Zisserman] are shown in Tab. 1. We add our proposed OCL and RUPL to UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] without additional augmentation strategies. When using VOC12-trainval as unlabeled data, our model outperforms UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] by 3.35 mAP and 0.89 mAP on the two reported metrics, respectively. When using COCO20cls [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] as additional unlabeled data, our model shows larger improvements and outperforms UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] by 3.54 mAP and 1.25 mAP on the same two metrics, respectively. This shows that our proposed method can better benefit from unlabeled data. Moreover, our method outperforms other existing models [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister, Wang et al.(2021b)Wang, Li, Guo, and Wang, Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li, Tang et al.(2021b)Tang, Chen, Luo, and Zhang, Zhang et al.(2022)Zhang, Pan, and Wang, Li et al.(2022b)Li, Wu, Shrivastava, and Davis, Zheng et al.(2022)Zheng, Chen, Cai, Ye, and Tan, Kim et al.(2022)Kim, Jang, Seo, Jeong, Na, and Kwak, Li et al.(2022a)Li, Yuan, and Li, Liu et al.(2022)Liu, Ma, and Kira, Guo et al.(2022)Guo, Mu, Chen, Wang, Yu, and Luo] on both experiment settings, which shows the superiority of our method.

| Method | COCO-35k (mAP) |
|---|---|
| Supervised [Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang] | 31.3 |
| DD [Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang] | 33.1 |
| MP [Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang] | 34.8 |
| MP + DD [Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang] | 35.2 |
| UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] | 36.36 |
| Ours | 37.13 |

| Method | COCO-standard 1% (mAP) | COCO-standard 5% (mAP) | COCO-standard 10% (mAP) |
|---|---|---|---|
| Supervised [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] | 9.05 | 18.47 | 23.86 |
| STAC [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister] | 13.97 | 24.38 | 28.64 |
| UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] | 20.75 | 28.27 | 31.50 |
| IT [Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li] | 18.05 | 26.75 | 30.4 |
| RPL [Li et al.(2022b)Li, Wu, Shrivastava, and Davis] | 18.21 | 27.78 | 31.67 |
| CN [Wang et al.(2021b)Wang, Li, Guo, and Wang] | 18.41 | 28.96 | 32.43 |
| ST [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] | 20.46 | 30.74 | 34.04 |
| DDT [Zheng et al.(2022)Zheng, Chen, Cai, Ye, and Tan] | 18.62 | 29.24 | 32.80 |
| MUM [Kim et al.(2022)Kim, Jang, Seo, Jeong, Na, and Kwak] | 21.88 | 28.52 | 31.87 |
| [Liu et al.(2022)Liu, Ma, and Kira] | 25.40 | 31.85 | 35.08 |
| Ours | 21.63 | 30.66 | 33.53 |

| Method | COCO-additional (mAP) |
|---|---|
| Supervised [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] | 40.20 |
| STAC [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister] | 39.21 |
| PL [Tang et al.(2021a)Tang, Ramaiah, Wang, Xu, and Xiong] | 38.40 |
| UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] | 41.30 |
| IT [Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li] | 40.20 |
| RPL [Li et al.(2022b)Li, Wu, Shrivastava, and Davis] | 43.30 |
| CN [Wang et al.(2021b)Wang, Li, Guo, and Wang] | 43.20 |
| ST [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] | 44.50 |
| DDT [Zheng et al.(2022)Zheng, Chen, Cai, Ye, and Tan] | 41.90 |
| MUM [Kim et al.(2022)Kim, Jang, Seo, Jeong, Na, and Kwak] | 42.11 |
| [Liu et al.(2022)Liu, Ma, and Kira] | 44.75 |
| Ours | 41.89 |

MS-COCO. We also conduct experiments on MS-COCO [Lin et al.(2014)Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick] to verify the effectiveness of our method; the results are shown in Tab. 2. Compared with UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda], our model improves mAP by 0.88/2.39/2.03 on COCO-standard (1%/5%/10%), by 0.77 on COCO-35k, and by 0.59 on COCO-additional. Moreover, our method achieves comparable results on COCO-standard, COCO-35k, and COCO-additional compared to other state-of-the-art methods. These results consistently support the effectiveness of our method.
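The gains quoted above can be checked directly against the table entries. The short script below recomputes them; the variable names are illustrative, and reading the unnamed 36.36 row of the COCO-35k table as UBT is an assumption that is consistent with the stated 0.77 gain.

```python
# mAP values copied from the COCO tables.
ours = {
    "COCO-standard 1%": 21.63,
    "COCO-standard 5%": 30.66,
    "COCO-standard 10%": 33.53,
    "COCO-35k": 37.13,
    "COCO-additional": 41.89,
}
ubt = {
    "COCO-standard 1%": 20.75,
    "COCO-standard 5%": 28.27,
    "COCO-standard 10%": 31.50,
    "COCO-35k": 36.36,  # assumption: the row without a method name is UBT
    "COCO-additional": 41.30,
}

# Round to two decimals to match the precision reported in the text.
gains = {setting: round(ours[setting] - ubt[setting], 2) for setting in ours}

for setting, gain in gains.items():
    print(f"{setting}: +{gain:.2f} mAP")
```

The recomputed differences match the gains stated in the text for all five settings.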

4.4 Ablation studies

We conduct ablation studies to investigate the effectiveness of our model using 1% COCO-standard. Because of the limitation of computing resources, all experiments in this section are conducted with batch size 12/12 (labeled/unlabeled) and training iteration 45k, as in [Kim et al.(2022)Kim, Jang, Seo, Jeong, Na, and Kwak].

| | OCL | RUPL | AP50:95 | AP50 | AP75 |
|---|---|---|---|---|---|
| (1) | ✓ | ✓ | 19.42 | 34.65 | 19.36 |
| (2) | | ✓ | 18.95 | 33.82 | 19.07 |
| (3) | ✓ | | 18.34 | 35.21 | 17.16 |
| (4) | | | 18.05 | 34.45 | 17.09 |

| Method | AP50:95 | AP50 | AP75 |
|---|---|---|---|
| w/o CL | 18.05 | 34.45 | 17.09 |
| Self-sup CL | 18.24 | 34.89 | 17.22 |
| OCL (Ours) | 18.34 | 35.21 | 17.16 |

| Method | AP50:95 | AP50 | AP75 |
|---|---|---|---|
| Box jittering [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] | 18.15 | 34.84 | 17.37 |
| Predicted IoU [Wang et al.(2021a)Wang, Cong, Litany, Gao, and Guibas] | 18.85 | 34.76 | 18.26 |
| Aleatoric uncertainty [Kendall and Gal(2017)] (Ours) | 18.95 | 33.81 | 19.06 |

Effectiveness of proposed modules. We remove each proposed module from our framework and report results in Tab. 4. Comparing (3) to (4), we can see that introducing OCL improves both AP50:95 and AP50. This result demonstrates that the model benefits from more accurate pseudo-labels w.r.t. classification. Comparing (2) to (4), we can see that introducing RUPL improves AP50:95 but decreases AP50. We assume the reason is that RUPL makes the model focus more on regression than classification during training. Comparing (1) to (2-4), we can see that the AP50:95 of (1) is higher than that of (2-4), which shows that applying both OCL and RUPL leads to the best accuracy. We argue that the model can generate more precise pseudo-labels with more discriminative classification scores and the introduced localization uncertainties, which leads to the improvement in accuracy. We also show the mAP curves of different experiments during training in Fig. 4, which further supports the effectiveness of our proposed modules. We emphasize that the proposed OCL and RUPL complement each other and synergistically improve the model performance.

Ablation studies of OCL. To verify the effectiveness of the class information in OCL, we show the results of three models in Tab. 5, i.e., without contrastive learning (w/o CL), with self-supervised contrastive learning (Self-sup CL), and with object-wise semi-supervised contrastive learning (our OCL). Self-sup CL improves the model performance compared to w/o CL, and OCL further improves AP50:95 and AP50, since it helps the model learn more discriminative feature representations for objects from different classes. This supports the effectiveness of our OCL.

Different localization quality measurements. In Tab. 5, we compare different localization quality measurements. Specifically, we compare box jittering [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu], predicted IoU [Wang et al.(2021a)Wang, Cong, Litany, Gao, and Guibas], and aleatoric uncertainty [Kendall and Gal(2017)] in our RUPL. We use grid search to find the best thresholds of box jittering [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] and predicted IoU [Wang et al.(2021a)Wang, Cong, Litany, Gao, and Guibas] to filter pseudo-labels, and set them to 0.01 and 0.8, respectively. The model with aleatoric uncertainty [Kendall and Gal(2017)] achieves the highest AP50:95.

Different Regression Thresholds of RUPL. In this section, we show experimental results on how different uncertainty thresholds of RUPL affect the detection accuracy. As shown in Tab. 6, the model achieves the highest performance when we set the threshold to 0.5. The detection accuracy decreases when we set a larger or smaller threshold. With a larger threshold, the selected samples become more diverse but less reliable, while with a smaller threshold, they become more reliable but less diverse.

| Threshold | AP50:95 | AP50 | AP75 |
|---|---|---|---|
| 0.3 | 19.18 | 34.53 | 19.13 |
| 0.4 | 19.37 | 34.49 | 19.28 |
| 0.5 | 19.42 | 34.65 | 19.36 |
| 0.6 | 19.26 | 34.45 | 19.36 |
| 0.7 | 18.78 | 33.50 | 18.93 |

5 Conclusion

In this paper, we propose a two-step pseudo-label filtering for SSOD. We deal with both the classification and regression heads in the detection model. For the classification head, we propose an object-wise contrastive learning loss that exploits the unlabeled data to enhance the discriminativeness of the classification score for pseudo-label filtering. For the regression head, we design an uncertainty branch that learns regression uncertainty to measure the localization quality for bounding box filtering. We experimentally show that the two components create a synergistic effect when integrated into the teacher-student framework. Our framework achieves a remarkable performance gain over our baseline on both PASCAL VOC and MS-COCO without additional augmentation, and shows competitive results compared to other state-of-the-art methods.

Acknowledgement

This work is in part sponsored by KAIA grant (22CTAP-C163793-02, MOLIT), NST grant (CRC 21011, MSIT), KOCCA grant (R2022020028, MCST) and the Samsung Display corporation.

References

- [Chen et al.(2022a)Chen, Chen, Yang, Xuan, Song, Xie, Pu, Song, and Zhuang] Binbin Chen, Weijie Chen, Shicai Yang, Yunyi Xuan, Jie Song, Di Xie, Shiliang Pu, Mingli Song, and Yueting Zhuang. Label matching semi-supervised object detection. In CVPR, pages 14381–14390, June 2022a.

- [Chen et al.(2022b)Chen, Li, Chen, Wang, Zhang, and Hua] Binghui Chen, Pengyu Li, Xiang Chen, Biao Wang, Lei Zhang, and Xian-Sheng Hua. Dense learning based semi-supervised object detection. In CVPR, pages 4815–4824, June 2022b.

- [Chen et al.(2020)Chen, Kornblith, Norouzi, and Hinton] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey E. Hinton. A simple framework for contrastive learning of visual representations. In ICML, volume 119, pages 1597–1607, 2020.

- [Chen et al.(2021)Chen, Xie, and He] Xinlei Chen, Saining Xie, and Kaiming He. An empirical study of training self-supervised vision transformers. In ICCV, pages 9620–9629, 2021.

- [Choi et al.(2019)Choi, Chun, Kim, and Lee] Jiwoong Choi, Dayoung Chun, Hyun Kim, and Hyuk-Jae Lee. Gaussian yolov3: An accurate and fast object detector using localization uncertainty for autonomous driving. In ICCV, pages 502–511, 2019.

- [Everingham et al.(2010)Everingham, Gool, Williams, Winn, and Zisserman] Mark Everingham, Luc Van Gool, Christopher K. I. Williams, John M. Winn, and Andrew Zisserman. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vis., 88(2):303–338, 2010.

- [Girshick(2015)] Ross B. Girshick. Fast R-CNN. In ICCV, pages 1440–1448, 2015.

- [Grill et al.(2020)Grill, Strub, Altché, Tallec, Richemond, Buchatskaya, Doersch, Pires, Guo, Azar, Piot, Kavukcuoglu, Munos, and Valko] Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre H. Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Ávila Pires, Zhaohan Guo, Mohammad Gheshlaghi Azar, Bilal Piot, Koray Kavukcuoglu, Rémi Munos, and Michal Valko. Bootstrap your own latent - A new approach to self-supervised learning. In NeurIPS, 2020.

- [Guo et al.(2022)Guo, Mu, Chen, Wang, Yu, and Luo] Qiushan Guo, Yao Mu, Jianyu Chen, Tianqi Wang, Yizhou Yu, and Ping Luo. Scale-equivalent distillation for semi-supervised object detection. In CVPR, pages 14522–14531, June 2022.

- [He et al.(2019)He, Zhu, Wang, Savvides, and Zhang] Yihui He, Chenchen Zhu, Jianren Wang, Marios Savvides, and Xiangyu Zhang. Bounding box regression with uncertainty for accurate object detection. In CVPR, pages 2888–2897, 2019.

- [Jeong et al.(2019)Jeong, Lee, Kim, and Kwak] Jisoo Jeong, Seungeui Lee, Jeesoo Kim, and Nojun Kwak. Consistency-based semi-supervised learning for object detection. In NeurIPS, pages 10758–10767, 2019.

- [Jeong et al.(2021)Jeong, Verma, Hyun, Kannala, and Kwak] Jisoo Jeong, Vikas Verma, Minsung Hyun, Juho Kannala, and Nojun Kwak. Interpolation-based semi-supervised learning for object detection. In CVPR, pages 11602–11611, 2021.

- [Jiang et al.(2018)Jiang, Luo, Mao, Xiao, and Jiang] Borui Jiang, Ruixuan Luo, Jiayuan Mao, Tete Xiao, and Yuning Jiang. Acquisition of localization confidence for accurate object detection. In ECCV, volume 11218, pages 816–832, 2018.

- [Jiang et al.(2021)Jiang, Shi, Tian, Lai, Liu, Fu, and Jia] Li Jiang, Shaoshuai Shi, Zhuotao Tian, Xin Lai, Shu Liu, Chi-Wing Fu, and Jiaya Jia. Guided point contrastive learning for semi-supervised point cloud semantic segmentation. In ICCV, pages 6403–6412, 2021.

- [Kendall and Gal(2017)] Alex Kendall and Yarin Gal. What uncertainties do we need in bayesian deep learning for computer vision? In NeurIPS, pages 5574–5584, 2017.

- [Khosla et al.(2020)Khosla, Teterwak, Wang, Sarna, Tian, Isola, Maschinot, Liu, and Krishnan] Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. Supervised contrastive learning. In NeurIPS, 2020.

- [Kim et al.(2022)Kim, Jang, Seo, Jeong, Na, and Kwak] JongMok Kim, JooYoung Jang, Seunghyeon Seo, Jisoo Jeong, Jongkeun Na, and Nojun Kwak. Mum: Mix image tiles and unmix feature tiles for semi-supervised object detection. In CVPR, pages 14512–14521, June 2022.

- [Li et al.(2022a)Li, Yuan, and Li] Aoxue Li, Peng Yuan, and Zhenguo Li. Semi-supervised object detection via multi-instance alignment with global class prototypes. In CVPR, pages 9809–9818, June 2022a.

- [Li et al.(2022b)Li, Wu, Shrivastava, and Davis] Hengduo Li, Zuxuan Wu, Abhinav Shrivastava, and Larry S. Davis. Rethinking pseudo labels for semi-supervised object detection. In AAAI, pages 1314–1322, 2022b.

- [Lin et al.(2014)Lin, Maire, Belongie, Hays, Perona, Ramanan, Dollár, and Zitnick] Tsung-Yi Lin, Michael Maire, Serge J. Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C. Lawrence Zitnick. Microsoft COCO: common objects in context. In ECCV, pages 740–755, 2014.

- [Lin et al.(2017)Lin, Dollár, Girshick, He, Hariharan, and Belongie] Tsung-Yi Lin, Piotr Dollár, Ross B. Girshick, Kaiming He, Bharath Hariharan, and Serge J. Belongie. Feature pyramid networks for object detection. In CVPR, pages 936–944, 2017.

- [Lin et al.(2020)Lin, Goyal, Girshick, He, and Dollár] Tsung-Yi Lin, Priya Goyal, Ross B. Girshick, Kaiming He, and Piotr Dollár. Focal loss for dense object detection. IEEE T-PAMI, 42(2):318–327, 2020.

- [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] Yen-Cheng Liu, Chih-Yao Ma, Zijian He, Chia-Wen Kuo, Kan Chen, Peizhao Zhang, Bichen Wu, Zsolt Kira, and Peter Vajda. Unbiased teacher for semi-supervised object detection. In ICLR, 2021.

- [Liu et al.(2022)Liu, Ma, and Kira] Yen-Cheng Liu, Chih-Yao Ma, and Zsolt Kira. Unbiased teacher v2: Semi-supervised object detection for anchor-free and anchor-based detectors. In CVPR, pages 9819–9828, June 2022.

- [Mi et al.(2022)Mi, Lin, Zhou, Shen, Luo, Sun, Cao, Fu, Xu, and Ji] Peng Mi, Jianghang Lin, Yiyi Zhou, Yunhang Shen, Gen Luo, Xiaoshuai Sun, Liujuan Cao, Rongrong Fu, Qiang Xu, and Rongrong Ji. Active teacher for semi-supervised object detection. In CVPR, pages 14482–14491, June 2022.

- [Ren et al.(2015)Ren, He, Girshick, and Sun] Shaoqing Ren, Kaiming He, Ross B. Girshick, and Jian Sun. Faster R-CNN: towards real-time object detection with region proposal networks. In NeurIPS, pages 91–99, 2015.

- [Rizve et al.(2021)Rizve, Duarte, Rawat, and Shah] Mamshad Nayeem Rizve, Kevin Duarte, Yogesh S. Rawat, and Mubarak Shah. In defense of pseudo-labeling: An uncertainty-aware pseudo-label selection framework for semi-supervised learning. In ICLR, 2021.

- [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister] Kihyuk Sohn, Zizhao Zhang, Chun-Liang Li, Han Zhang, Chen-Yu Lee, and Tomas Pfister. A simple semi-supervised learning framework for object detection. CoRR, abs/2005.04757, 2020.

- [Tang et al.(2021a)Tang, Ramaiah, Wang, Xu, and Xiong] Peng Tang, Chetan Ramaiah, Yan Wang, Ran Xu, and Caiming Xiong. Proposal learning for semi-supervised object detection. In WACV, pages 2290–2300, 2021a.

- [Tang et al.(2021b)Tang, Chen, Luo, and Zhang] Yihe Tang, Weifeng Chen, Yijun Luo, and Yuting Zhang. Humble teachers teach better students for semi-supervised object detection. In CVPR, pages 3132–3141, 2021b.

- [Tarvainen and Valpola(2017)] Antti Tarvainen and Harri Valpola. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In NeurIPS, pages 1195–1204, 2017.

- [van den Oord et al.(2018)van den Oord, Li, and Vinyals] Aäron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding. CoRR, abs/1807.03748, 2018.

- [Wang et al.(2021a)Wang, Cong, Litany, Gao, and Guibas] He Wang, Yezhen Cong, Or Litany, Yue Gao, and Leonidas J. Guibas. 3dioumatch: Leveraging iou prediction for semi-supervised 3d object detection. In CVPR, pages 14615–14624, 2021a.

- [Wang et al.(2021b)Wang, Li, Guo, and Wang] Zhenyu Wang, Ya-Li Li, Ye Guo, and Shengjin Wang. Combating noise: Semi-supervised learning by region uncertainty quantification. In NeurIPS, pages 9534–9545, 2021b.

- [Wang et al.(2021c)Wang, Li, Guo, Fang, and Wang] Zhenyu Wang, Yali Li, Ye Guo, Lu Fang, and Shengjin Wang. Data-uncertainty guided multi-phase learning for semi-supervised object detection. In CVPR, pages 4568–4577, 2021c.

- [Wu et al.(2019)Wu, Kirillov, Massa, Lo, and Girshick] Yuxin Wu, Alexander Kirillov, Francisco Massa, Wan-Yen Lo, and Ross Girshick. Detectron2. https://github.com/facebookresearch/detectron2, 2019.

- [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu] Mengde Xu, Zheng Zhang, Han Hu, Jianfeng Wang, Lijuan Wang, Fangyun Wei, Xiang Bai, and Zicheng Liu. End-to-end semi-supervised object detection with soft teacher. In ICCV, pages 3040–3049, 2021.

- [Yang et al.(2022)Yang, Wu, Zhang, Jiang, Liu, Zheng, Zhang, Wang, and Zeng] Fan Yang, Kai Wu, Shuyi Zhang, Guannan Jiang, Yong Liu, Feng Zheng, Wei Zhang, Chengjie Wang, and Long Zeng. Class-aware contrastive semi-supervised learning. CoRR, abs/2203.02261, 2022.

- [Yang et al.(2021)Yang, Wei, Wang, Hua, and Zhang] Qize Yang, Xihan Wei, Biao Wang, Xian-Sheng Hua, and Lei Zhang. Interactive self-training with mean teachers for semi-supervised object detection. In CVPR, pages 5941–5950, 2021.

- [Zhang et al.(2022)Zhang, Pan, and Wang] Fangyuan Zhang, Tianxiang Pan, and Bin Wang. Semi-supervised object detection with adaptive class-rebalancing self-training. In AAAI, pages 3252–3261, 2022.

- [Zheng et al.(2022)Zheng, Chen, Cai, Ye, and Tan] Shida Zheng, Chenshu Chen, Xiaowei Cai, Tingqun Ye, and Wenming Tan. Dual decoupling training for semi-supervised object detection with noise-bypass head. In AAAI, pages 3526–3534, 2022.

- [Zhou et al.(2021)Zhou, Yu, Wang, Qian, and Li] Qiang Zhou, Chaohui Yu, Zhibin Wang, Qi Qian, and Hao Li. Instant-teaching: An end-to-end semi-supervised object detection framework. In CVPR, pages 4081–4090, 2021.

In this material, we provide: 1) Why decreases when RUPL is applied, 2) Justification for using three sets of augmented data, 3) Comparison between two different evaluators, 4) Applying RUPL to the RPN, 5) Applicable to anchor free detector, 6) Comparing with DDT, 7) Qualitative results, 8) Implementation details.

Appendix A Why decreases when RUPL is applied

In [He et al.(2019)He, Zhu, Wang, Savvides, and Zhang], also decreases by up to 1.2 when applying the uncertainty-aware regression loss. We assume that this loss makes models focus more on more achievable training samples, so increases and decreases. Nevertheless, we emphasize that RUPL can improve , which is the most important metric.
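The down-weighting effect described above can be illustrated with a minimal sketch of an uncertainty-aware regression loss. It assumes the KL-loss form of He et al. (2019), where the network predicts a log-variance per box coordinate; the function name and scalar interface are illustrative only, and the exact loss used for RUPL is the one defined in the main text.

```python
import math


def uncertainty_aware_reg_loss(pred: float, target: float, log_var: float) -> float:
    """Per-coordinate regression loss with a predicted log-variance (alpha).

    Assumed form (KL loss of He et al., 2019):
      |e| <= 1: 0.5 * exp(-alpha) * e^2 + 0.5 * alpha
      |e| >  1: exp(-alpha) * (|e| - 0.5) + 0.5 * alpha
    """
    err = abs(target - pred)
    weight = math.exp(-log_var)  # large predicted variance -> small weight
    if err <= 1.0:
        return 0.5 * weight * err * err + 0.5 * log_var
    return weight * (err - 0.5) + 0.5 * log_var


# The same localization error is weighted less when the predicted variance is
# high, so hard (high-uncertainty) samples contribute smaller gradients.
confident = uncertainty_aware_reg_loss(0.0, 2.0, log_var=0.0)
uncertain = uncertainty_aware_reg_loss(0.0, 2.0, log_var=1.0)
```

Because the error term is scaled by `exp(-alpha)`, samples the model considers hard to localize pull less on the regressor, which is consistent with the assumption that training focuses on more achievable samples.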

Appendix B Justification for using three sets of augmented data

We generate an additional set of strongly augmented images for OCL following [Chen et al.(2020)Chen, Kornblith, Norouzi, and Hinton], which uses two sets of strongly augmented images. We also observe that using weakly augmented images for OCL decreases the overall AP in Tab. 3 (1) by 0.24.

Appendix C Comparison between two different evaluators

In the official repository (https://github.com/facebookresearch/unbiased-teacher), the UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] authors also note that VOCevaluator yields higher accuracy than COCOevaluator, as shown in Tab. 7. They report the results of UBTv2 [Liu et al.(2022)Liu, Ma, and Kira] only with VOCevaluator and have not released code or models.

| Evaluator | Unlabeled | | |
|---|---|---|---|
| COCO | VOC12 | 49.01 | 75.78 |
| VOC | VOC12 | 54.48 (+5.47) | 80.51 (+4.73) |
| COCO | VOC12+COCO20cls | 50.71 | 77.92 |
| VOC | VOC12+COCO20cls | 55.79 (+5.08) | 81.71 (+3.79) |

Appendix D Applying RUPL to the RPN

Predicting uncertainty in the RPN is unnecessary because only the regression uncertainty in the ROI head can reflect the localization accuracy of pseudo-labels. [He et al.(2019)He, Zhu, Wang, Savvides, and Zhang] likewise predicts regression uncertainty only in the ROI head.

Appendix E Applicable to anchor-free detector

Our method can be applied to anchor-free detectors. For OCL, we extract instance features from the feature map using detection results and feed them into the projection branch, which is added in parallel to the classification branch. RUPL can also be applied to an anchor-free detector because its regression targets are the four boundaries of a bounding box, similar to the anchor-free detector’s regression targets.

Appendix F Comparing with DDT

DDT [Zheng et al.(2022)Zheng, Chen, Cai, Ye, and Tan] computes the localization quality of pseudo-labels through the IoU between the outputs of two parallel heads. This method relies on output consistency, similar to box jittering in Tab. 5. We consider box jittering the more precise of the two because it uses ten samples to compute consistency. Our regression uncertainty outperforms box jittering, as shown in Tab. 5. In addition, the DDT authors have not released their code.

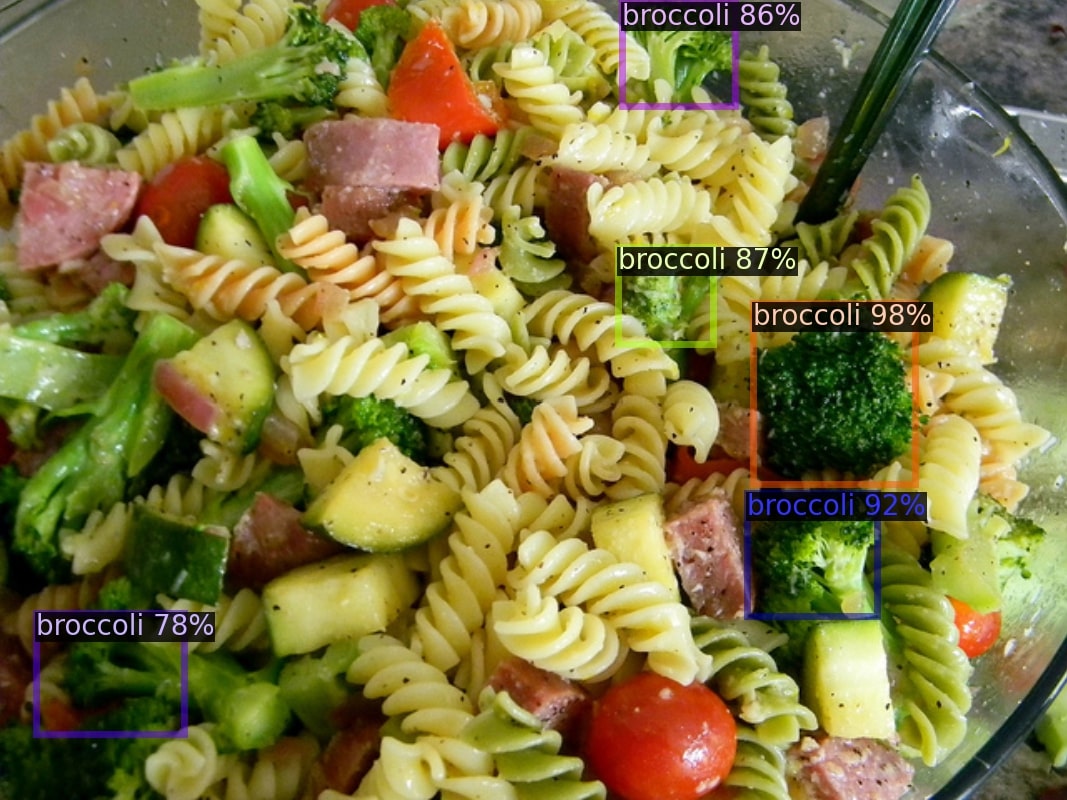

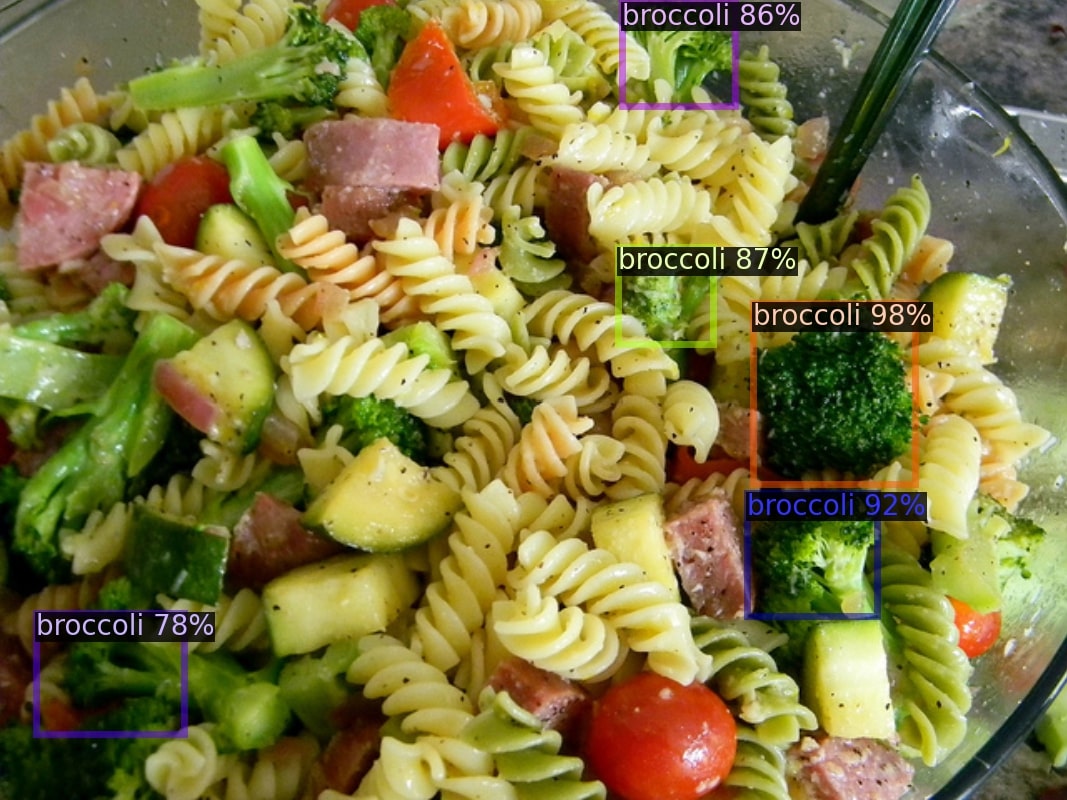

Appendix G Qualitative Results

We show the qualitative results of our framework and the baseline [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda] in Fig. 5, Fig. 6, Fig. 7, and Fig. 8. We observe two advantages of our framework. 1) By introducing OCL, our model detects more objects, and its classification scores are higher than those of the baseline [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda]. 2) By introducing RUPL, our model predicts more accurate bounding boxes.

[Fig. 5 to Fig. 8: qualitative results in four image grids, each with column pairs labeled Baseline and Ours.]

Appendix H Implementation Details

Framework details. We implement our framework based on Detectron2 [Wu et al.(2019)Wu, Kirillov, Massa, Lo, and Girshick] and UBT [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda]. Following previous works [Sohn et al.(2020)Sohn, Zhang, Li, Zhang, Lee, and Pfister, Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda], we use Faster R-CNN [Ren et al.(2015)Ren, He, Girshick, and Sun] with a ResNet-50 backbone and FPN [Lin et al.(2017)Lin, Dollár, Girshick, He, Hariharan, and Belongie] as our detector. For OCL, we adopt an asymmetric architecture [Chen et al.(2021)Chen, Xie, and He, Grill et al.(2020)Grill, Strub, Altché, Tallec, Richemond, Buchatskaya, Doersch, Pires, Guo, Azar, Piot, Kavukcuoglu, Munos, and Valko] in which the teacher network has a projection module parallel to the classification branch, and the student network has an extra prediction module after its projection module. The architectures of the projection and prediction modules are shown in Tab. 8. For RUPL, we add a class-aware uncertainty branch parallel to the regression branch, following He et al. [He et al.(2019)He, Zhu, Wang, Savvides, and Zhang].

Projection Module

| Layer | In_dim | Out_dim |
|---|---|---|
| Linear (w/o bias) | 1024 | 2048 |
| Batchnorm1D | 2048 | 2048 |
| ReLU | - | - |
| Linear (w/o bias) | 2048 | 128 |
| Batchnorm1D (w/o affine) | 128 | 128 |

Prediction Module

| Layer | In_dim | Out_dim |
|---|---|---|
| Linear (w/o bias) | 128 | 2048 |
| Batchnorm1D | 2048 | 2048 |
| ReLU | - | - |
| Linear (w/o bias) | 2048 | 128 |
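To make the layer shapes in Tab. 8 concrete, the following is a framework-agnostic NumPy sketch of the forward pass through both modules. It is not the released implementation: weights are randomly initialized, batch normalization uses batch statistics only (no running averages), and all function names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)


def linear_no_bias(in_dim, out_dim):
    """Return a randomly initialized weight matrix for a bias-free linear layer."""
    return rng.standard_normal((in_dim, out_dim)) / np.sqrt(in_dim)


def batchnorm1d(x, gamma=None, beta=None, eps=1e-5):
    """Normalize each feature over the batch; the affine parameters are optional."""
    x_hat = (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)
    if gamma is None:  # "w/o affine" variant
        return x_hat
    return gamma * x_hat + beta


def mlp(x, w1, w2, final_bn):
    """Linear -> BatchNorm1D -> ReLU -> Linear (-> BatchNorm1D w/o affine)."""
    hidden_dim = w1.shape[1]
    hidden = batchnorm1d(x @ w1, gamma=np.ones(hidden_dim), beta=np.zeros(hidden_dim))
    hidden = np.maximum(hidden, 0.0)
    out = hidden @ w2
    return batchnorm1d(out) if final_bn else out


# Projection module: 1024 -> 2048 -> 128, ending with BatchNorm1D (w/o affine).
proj_w1, proj_w2 = linear_no_bias(1024, 2048), linear_no_bias(2048, 128)
# Prediction module (student only): 128 -> 2048 -> 128, no final BatchNorm.
pred_w1, pred_w2 = linear_no_bias(128, 2048), linear_no_bias(2048, 128)

roi_features = rng.standard_normal((8, 1024))  # 8 RoI features; batch size is arbitrary
z = mlp(roi_features, proj_w1, proj_w2, final_bn=True)  # teacher and student
p = mlp(z, pred_w1, pred_w2, final_bn=False)  # student only
```

Both `z` and `p` are 128-dimensional embeddings, so the student's prediction output can be compared directly against the teacher's projection output.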

Training details. Following the baseline [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda], the backbone network’s weights are initialized from an ImageNet-pretrained model, and the SGD optimizer is used with a constant learning rate. For the SGD optimizer, we set the momentum and learning rate to 0.9 and 0.01, respectively. For OCL, we apply random box jittering on predicted bounding boxes , which are predicted by the teacher network taking as input the weakly augmented unlabeled images , before generating and . We randomly sample values between [-6, 6] of the height and width of bounding boxes and then add them to predicted bounding boxes . Box jittering is used to learn generalizable ROI feature representations, similar to random cropping in representation learning [Chen et al.(2020)Chen, Kornblith, Norouzi, and Hinton]. Loss balance parameters and are set to 0.1 and 0.25, respectively. We set , , , and to 0.7, 0.7, 0.5, and 0.07, respectively. We set the EMA momentum parameter, which determines the update rate of the teacher network, to 0.9996. We provide training settings for each experiment in Tab. 9. As summarized in Tab. 10, we use the same augmentation strategies as the baseline [Liu et al.(2021)Liu, Ma, He, Kuo, Chen, Zhang, Wu, Kira, and Vajda].
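The box jittering and EMA update described above can be sketched as follows. This is a minimal illustration under stated assumptions: the range [-6, 6] is interpreted as a percentage of the box width and height (hence `max_ratio=0.06`), the EMA takes the standard mean-teacher form, and parameters are plain floats in a dictionary rather than network tensors. The function names are hypothetical.

```python
import random


def jitter_box(box, max_ratio=0.06, rng=random):
    """Shift each boundary by a random fraction of the box width or height.

    max_ratio=0.06 assumes the range [-6, 6] is a percentage of box size.
    """
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    dx1, dx2 = (rng.uniform(-max_ratio, max_ratio) * w for _ in range(2))
    dy1, dy2 = (rng.uniform(-max_ratio, max_ratio) * h for _ in range(2))
    return (x1 + dx1, y1 + dy1, x2 + dx2, y2 + dy2)


def ema_update(teacher, student, momentum=0.9996):
    """Update teacher parameters in place as an EMA of the student's parameters."""
    for name, student_value in student.items():
        teacher[name] = momentum * teacher[name] + (1.0 - momentum) * student_value
    return teacher


rng = random.Random(0)
jittered = jitter_box((10.0, 20.0, 110.0, 70.0), rng=rng)

teacher_params = {"w": 1.0, "b": 0.0}
student_params = {"w": 0.0, "b": 1.0}
ema_update(teacher_params, student_params)
```

With a momentum of 0.9996, a single update moves each teacher parameter only 0.04% of the way toward the student, which is why the teacher changes slowly and yields stable pseudo-labels.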

Ablation study: Different localization quality measurements. All settings are identical except for those related to the localization quality measurement. For box jittering [Xu et al.(2021)Xu, Zhang, Hu, Wang, Wang, Wei, Bai, and Liu], we first select pseudo-labels that have classification scores higher than the threshold (). To compute the prediction consistency of pseudo-labels, we generate ten randomly jittered bounding boxes for each pseudo-label and forward them to the ROI head to generate refined predictions. We compute the variance of each box’s boundaries over the refined predictions and normalize it using the height and width of each bounding box. We use the average of the normalized variances as the uncertainty of the bounding box. For predicted IoU [Wang et al.(2021a)Wang, Cong, Litany, Gao, and Guibas], we add a class-aware IoU branch with sigmoid activation parallel to the regression branch. We train the IoU branch with foreground region proposals in the same manner as the uncertainty branch. We compute ground-truth IoUs of region proposals with ground-truth labels and normalize the values to [0.0, 1.0]. We use the smooth L1 loss [Girshick(2015)] to train the IoU branch and set the IoU loss weight to 1.0.
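The box-jittering uncertainty above can be sketched as follows. The refined predictions are taken as given (in practice they would come from the ROI head), and the normalization is an assumption: x-boundary variances are divided by the squared width and y-boundary variances by the squared height, since the text does not specify the exact form. The function name is illustrative.

```python
from statistics import pvariance


def jitter_uncertainty(box, refined_boxes):
    """Average normalized variance of the four boundaries of refined boxes.

    box: (x1, y1, x2, y2) pseudo-label box.
    refined_boxes: refined predictions, one per jittered copy of `box`.
    Assumption: x-boundary variances are divided by width**2 and
    y-boundary variances by height**2 to make them scale-invariant.
    """
    x1, y1, x2, y2 = box
    width_sq, height_sq = (x2 - x1) ** 2, (y2 - y1) ** 2
    scales = [width_sq, height_sq, width_sq, height_sq]
    # zip(*...) groups all x1 values, all y1 values, and so on.
    variances = [pvariance(coords) for coords in zip(*refined_boxes)]
    return sum(v / s for v, s in zip(variances, scales)) / 4.0


pseudo_box = (0.0, 0.0, 10.0, 20.0)
stable = jitter_uncertainty(pseudo_box, [pseudo_box] * 10)
unstable = jitter_uncertainty(
    pseudo_box, [(-1.0, 0.0, 10.0, 20.0), (1.0, 0.0, 10.0, 20.0)]
)
```

A pseudo-label whose refined predictions all agree receives zero uncertainty, while disagreement on any boundary raises the score in proportion to the box size.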

| Training setting | VOC | VOC (COCO20cls) | COCO (standard) | COCO (35k) | COCO (additional) |
|---|---|---|---|---|---|
| Iteration for pretraining | 12k | 12k | 5k/20k/40k | 12k | 90k |
| Iteration for training | 60k | 90k | 180k | 180k | 360k |
| Batch size for labeled data | 32 | 32 | 32 | 16 | 32 |
| Batch size for unlabeled data | 32 | 32 | 32 | 16 | 32 |
| Unsupervised loss weight () | 4 | 4 | 4 | 2 | 2 |

Weak augmentation

| Process | Probability | Parameters | Details |
|---|---|---|---|
| Horizontal Flip | 0.5 | - | - |

Strong augmentation

| Process | Probability | Parameters | Details |
|---|---|---|---|
| Horizontal Flip | 0.5 | - | - |
| Color jittering | 0.8 | brightness = 0.4 | We uniformly select from [0.6, 1.4] for the brightness factor. |
| | | contrast = 0.4 | We uniformly select from [0.6, 1.4] for the contrast factor. |
| | | saturation = 0.4 | We uniformly select from [0.6, 1.4] for the saturation factor. |
| | | hue = 0.1 | We uniformly select from [-0.1, 0.1] for the hue factor. |
| Grayscale | 0.2 | - | - |
| GaussianBlur | 0.5 | (sigma_x, sigma_y) = (0.1, 2.0) | sigma_x and sigma_y for the Gaussian filter are set to 0.1 and 2.0, respectively. |
| Cutout 1 | 0.7 | scale = (0.05, 0.2), ratio = (0.3, 3.3) | Randomly selected rectangular regions are erased in an image. |
| Cutout 2 | 0.5 | scale = (0.02, 0.2), ratio = (0.1, 6) | Randomly selected rectangular regions are erased in an image. |
| Cutout 3 | 0.3 | scale = (0.02, 0.2), ratio = (0.05, 8) | Randomly selected rectangular regions are erased in an image. |
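As an illustration of how the probabilities and color-jittering parameters in Tab. 10 translate into sampled values, the sketch below draws the set of strong-augmentation steps that fire for one image and the corresponding jitter factors. It only samples decisions and factors and does not touch image data; the function and key names are hypothetical and not taken from the baseline's code.

```python
import random

# Probability of applying each strong-augmentation step, as listed in Tab. 10.
STRONG_AUG_PROBS = {
    "horizontal_flip": 0.5,
    "color_jitter": 0.8,
    "grayscale": 0.2,
    "gaussian_blur": 0.5,
    "cutout_1": 0.7,
    "cutout_2": 0.5,
    "cutout_3": 0.3,
}


def sample_color_jitter(rng, brightness=0.4, contrast=0.4, saturation=0.4, hue=0.1):
    """Sample one set of color-jitter factors.

    A strength s gives a multiplicative factor in [1 - s, 1 + s];
    hue is an additive shift in [-hue, hue].
    """
    return {
        "brightness": rng.uniform(1.0 - brightness, 1.0 + brightness),
        "contrast": rng.uniform(1.0 - contrast, 1.0 + contrast),
        "saturation": rng.uniform(1.0 - saturation, 1.0 + saturation),
        "hue": rng.uniform(-hue, hue),
    }


def sample_strong_ops(rng):
    """Decide independently which strong-augmentation steps fire for one image."""
    return [name for name, prob in STRONG_AUG_PROBS.items() if rng.random() < prob]


rng = random.Random(0)
ops = sample_strong_ops(rng)
factors = sample_color_jitter(rng)
```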