Semi-supervised learning via DQN for log anomaly detection

Abstract

Log anomaly detection is a critical component in modern software system security and maintenance, serving as a crucial support and basis for system monitoring, operation, and troubleshooting. It aids operations personnel in timely identification and resolution of issues. However, current methods in log anomaly detection still face challenges such as underutilization of unlabeled data, imbalance between normal and anomaly class data, and high rates of false positives and false negatives, leading to insufficient effectiveness in anomaly recognition. In this study, we propose a semi-supervised log anomaly detection method named DQNLog, which integrates deep reinforcement learning to enhance anomaly detection performance by leveraging a small amount of labeled data and large-scale unlabeled data. To address issues of imbalanced data and insufficient labeling, we design a state transition function biased towards anomalies based on cosine similarity, aiming to capture semantic-similar anomalies rather than favoring the majority class. To enhance the model’s capability in learning anomalies, we devise a joint reward function that encourages the model to utilize labeled anomalies and explore unlabeled anomalies, thereby reducing false positives and false negatives. Additionally, to prevent the model from deviating from normal trajectories due to misestimation, we introduce a regularization term in the loss function to ensure the model retains prior knowledge during updates. We evaluate DQNLog on three widely used datasets, demonstrating its ability to effectively utilize large-scale unlabeled data and achieve promising results across all experimental datasets.

Index Terms:

Log; Log embedding; Anomaly detection; Deep reinforcement learningI Introduction

With various industries continuously digitizing, the scale and complexity of software systems are gradually increasing. The likelihood of software failures is also rising, which can lead to immeasurable consequences [1]. Therefore, for software systems, it is particularly important during both development and operations to perform anomaly detection by analyzing system behavior patterns and resource conditions. Logs, which are semi-structured texts that record program behavior during runtime, are crucial in this regard. Compared to other core operational monitoring analysis objects such as metric data and distributed tracing data [2], logs are rich in content and abundant in source, often reflecting software system anomalies [3]. By mining log data, operations engineers can proactively identify potential issues and resolve them promptly, ensuring the stable operation of systems and reducing losses caused by system failures. Currently, methods for log anomaly detection include those based on traditional machine learning, deep learning, and reinforcement learning approaches.

Traditional machine learning methods [4, 5, 6] extract features manually from log sequences to create log count vectors based on event frequencies. These log count vectors are then used with supervised or unsupervised models to identify anomalies. While these methods are simple and require fewer computational and time resources, they struggle to handle high-dimensional log data effectively as they are based on statistical knowledge. Moreover, they overlook important information such as the temporal sequence and contextual semantics of logs[7]. As a result, they often have low detection accuracy and are not suitable for practical use.

With the significant advantages of deep learning methods in feature representation learning, deep learning-based approaches, particularly recurrent neural network (RNN) models with good memory capturing capabilities[8, 9, 10, 11], have taken a dominant position in the field of log anomaly detection. The unsupervised method DeepLog [8] converts log sequences into sequential vectors and parameter value vectors, and detects anomalies by determining whether the current log deviates from the LSTM model trained from log data under normal execution. LogAnomaly [9], built upon DeepLog, introduces a similar template merging mechanism that enables the model to learn similar semantic information. By utilizing unlabeled training data, they can detect anomalies beyond specific categories. However, due to the disregard of prior label information, they often suffer from poor accuracy and generate more false positives. The supervised method LogRobust[10] converts log sequences into semantic vectors and utilizes an attention-based Bi-LSTM model to solve the problem of log instability. However, obtaining large-scale labeled datasets is very difficult, extremely time-consuming and cost-effective. Although better performance can be achieved, it is not practical. The semi-supervised method PLELog[12] converts log sequences into sequential vectors, estimates probabilistic labels for unlabeled samples clustered based on labeled normal samples, and detects anomalies using an attention-based GRU neural network. It effectively combines the advantages of supervised and unsupervised, but it only utilizes some normal instances and completely ignores the prior information of known anomalies.

In recent years, reinforcement learning has been considered for time series anomaly detection due to its sequential decision-making capabilities[13, 14, 15]. It learns autonomously from interactions with the environment and can effectively utilize unlabeled data. QLLog [16] constructs a Directed Acyclic Graph (DAG) from log sequences and establishes a Q-table based on the Q-learning[17] algorithm to assess whether a log execution sequence is anomalous. However, the exponential growth of the Q-table with an increasing number of log templates renders it impractical for datasets containing numerous log templates. Elaziz et al. [18] proposed a weakly supervised method based on deep reinforcement learning to identify anomalies in business processes using limited labeled anomaly data and abundant unlabeled data. It defines a state transition function based on Euclidean distance in the latent space of variational autoencoders to address data imbalance issues. However, it uses one-hot vector encoding to encode logs in business process trajectories, resulting in feature vectors that do not reflect semantic similarities between categories. Moreover, the data representation learned by variational autoencoders may lose important features, contributing to remaining issues of data imbalance to some extent.

In summary, the field of log anomaly detection still faces the following practical challenges:

-

•

Insufficient labeled data and underutilization of vast amounts of unlabeled data. The sheer volume of logs makes manually labeling them based on expert experience or domain knowledge nearly impossible. In real-world software systems, logs are typically not strictly labeled or unlabeled; there is often a scarcity of labeled data alongside readily available unlabeled data. While labeled data provides valuable prior information, unlabeled data may also contain anomalous patterns.

-

•

Log data categories are highly unbalanced, leading to model bias towards the majority class. In the log data acquired from software systems, the number of normal samples is often much larger than that of anomaly samples. When this highly unbalanced data is input into a model, deep learning models tend to lean more towards the majority class when learning log features. This ultimately results in the model more frequently misclassifying anomaly classes as normal classes during anomaly detection.

-

•

The ability to identify anomalies is poor, leading to many false negatives and false positives. Anomalies are often unpredictable, they could be caused by a failure in some underlying component of the system or by a certain type of network attack. In practical applications, it is difficult to obtain a training set that covers all possible anomalies. As a result, the trained model may have a poor ability to recognize anomalies, frequently resulting in false negatives and false positives.

To solve the above problems, in this paper, we propose a deep reinforcement learning-based method (DQNLog) for software log anomaly detection. The aim is to utilize a small number of labeled samples while exploring a large number of unlabeled samples to improve the performance of anomaly detection. The method first utilizes pre-trained language models to transform log data into numerical vectors with semantic features, based on log parsing. Subsequently, with log anomaly detection as the specific objective, key components of the deep reinforcement learning model are optimized to enhance the learning and training process of the agent. In particular, the data imbalance issue is mitigated through a state transition function that favors anomalies, and the agent’s behavior is evaluated using a joint reward function sensitive to anomalies. This incentivizes the agent to effectively utilize labeled prior information and potential information from unlabeled sets, thereby reducing both false negatives and false positives to improve the model’s detection performance. Our work makes the following contributions:

-

•

To address the issues of insufficient labeled data and underutilization of large-scale unlabeled data in current software systems, we optimized the key components of the deep reinforcement learning model and developed an agent based on deep Q-network to achieve log anomaly detection. During the training process, the agent does not confine the search for anomalies to the given labeled examples but autonomously interacts with an environment constructed from a small set of labeled samples and a large-scale unlabeled sample set to learn labeled anomalies and actively explore potential anomalies in the unlabeled set.

-

•

To address the issue of highly imbalanced log data, we designed a state transition function biased towards anomalies based on cosine similarity. The environment returns the next state that is most likely to be an anomaly according to the agent’s behavior. This ensures that the model does not frequently favor the majority class in imbalanced datasets but rather tends to identify anomaly log sequences that contain more informative patterns.

-

•

To address the issue of knowledge forgetting during the training process of the agent, we introduce a regularization term into the loss function, constructing a regularized loss function. This allows the agent to directly utilize known prior information during parameter updates, preventing the agent from deviating from the normal trajectory due to potentially inaccurate estimates based solely on the neural network output. This ensures that the updates of the agent are minimally affected by the unconverged network values.

-

•

Experimental results demonstrate that, compared to the baseline methods, it effectively utilizes a small amount of labeled data and large-scale unlabeled data, achieving superior detection performance.

II Log Terminology

A log message refers to the textual statement output during the software runtime, which includes log event and log parameters. Log events are statements with specific meanings that typically summarize the events expressed in the logs[19]. Log parameters typically refer to constant values, IP addresses, task IDs (such as in HDFS logs and in BGL logs), file names, etc. Log parsing is the process of extracting log events and log parameters from log messages. A log sequence consists of a series of log events partitioned by strategies such as task IDs or sliding windows. If one log message in a log sequence is anomalous, the entire log sequence is considered anomalous; otherwise, it is normal. Each log event is transformed into a semantic vector through semantic embedding, denoted as , where represents the dimensionality of the semantic vector. The sequence composed of vectorized log events is referred to as a semantic vector sequence, denoted as , where is the number of log events in the log sequence.

III Proposed approach

III-A Overview

In this paper, we propose a deep reinforcement learning approach for software log anomaly detection. In this method, raw log data is transformed into fixed-dimensional semantic vectors, and leveraging the autonomous learning and decision-making capabilities of deep reinforcement learning, the model effectively utilizes a small amount of labeled data and large-scale unlabeled data to enhance the performance of log anomaly detection.

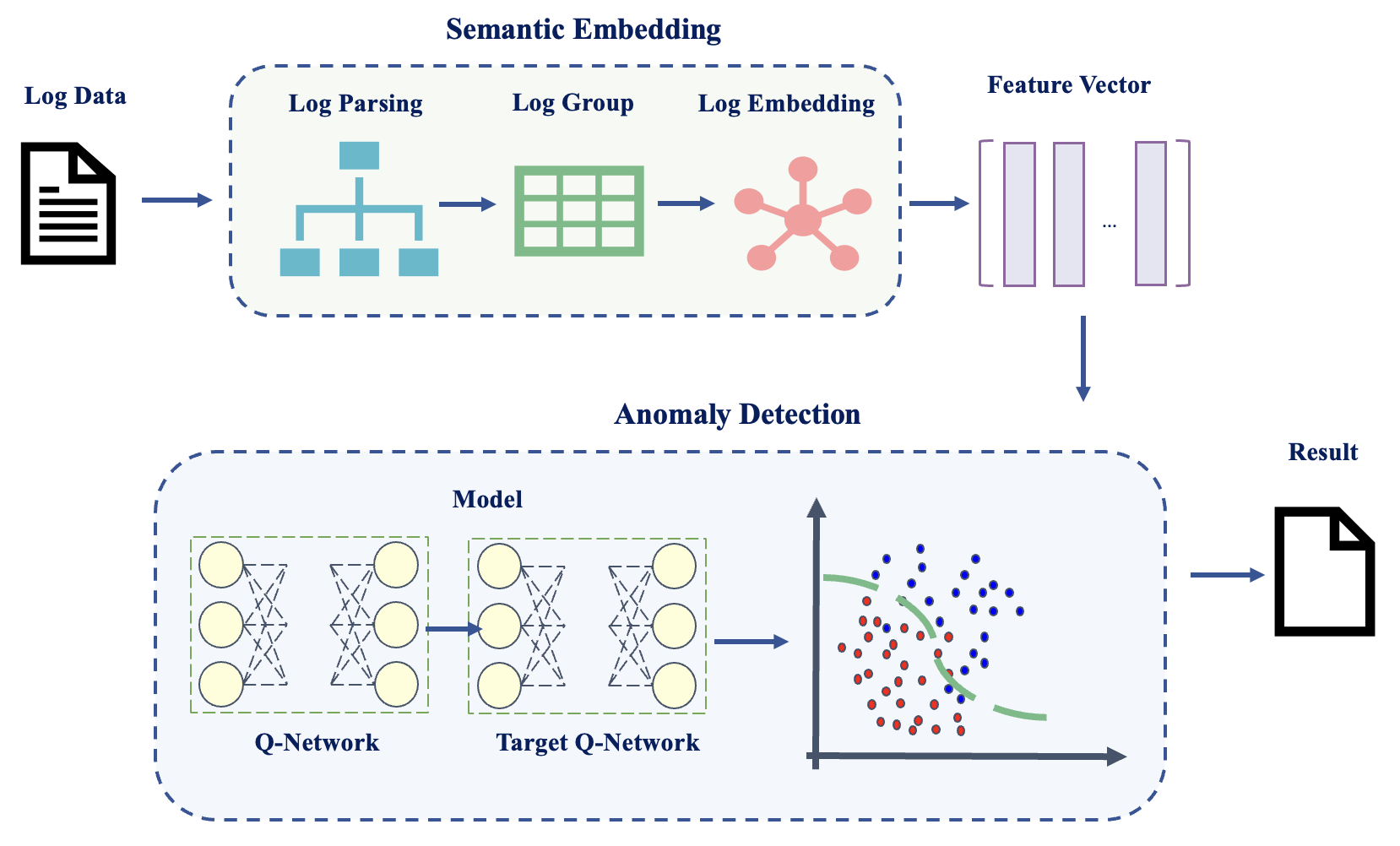

Figure 1 presents an overview of DQNLog, which comprises two stages: semantic embedding and anomaly detection model building. In the semantic embedding stage, the raw log data undergoes log parsing to extract log events. These log events are then grouped into log sequences. Afterward, the log sequences are subjected to semantic embedding to obtain log semantic vectors. Moving on to the anomaly detection stage, a model is first constructed. Subsequently, the model is trained using the log semantic vectors, and the effectiveness of anomaly detection is evaluated.

III-B Semantic Embedding

The log data generated by software systems is unstructured text, akin to natural language data, which machines cannot inherently recognize or understand. Therefore, before performing anomaly detection, we first transform the log data into fixed-format feature vectors. The process of log semantic embedding involves log parsing, log grouping, and log embedding, as depicted in Figure 2.

III-B1 Log Parsing

Log data is semi-structured text composed of static constants that remain unchanged and dynamic variables that vary with runtime behavior[20]. Extracting common segments and unique segments from raw log data is typically a preprocessing step for downstream log tasks[21]. Due to its adaptive parsing capability for various types and formats of log messages, Drain[22] method demonstrates excellent performance even across different datasets. Therefore, we employ the Drain method to extract templates from raw log data.

III-B2 Log Grouping

Since logs are sequentially output by software systems based on the occurrence of events, adjacent logs exhibit correlations. Therefore, for subsequent log analysis tasks, it is common practice to input logs within a certain time frame [23]. In our work, for HDFS and BGL datasets that include session identifiers, we group logs based on session IDs using the session window strategy, which better reflects the logical flow of software execution and the sequence of events. Conversely, for the Thunderbird dataset, we employ the sliding window strategy to capture changing trends and periodic patterns in system behavior.

III-B3 Log Embedding

Log embedding maps different log templates into a vector space, providing effective feature representation and data processing for downstream anomaly detection tasks. High-quality log feature extraction should capture semantic information from log messages, including word semantics and contextual information. DQNLog utilizes the pretrained language model Roberta to obtain semantic vectors of logs. Roberta [25] is an enhanced version of BERT[26], employing dynamic masking strategies, larger training datasets, and increased batch sizes to enhance data diversity, improve model understanding, and boost generalization capabilities, resulting in more accurate semantic vectors.

III-C DRL Tailored for Log Anomaly Detection

III-C1 Modeling of Markov Decision Processes

To fully utilize the small labeled dataset and the large unlabeled dataset, the log anomaly detection problem is first modeled as a Markov Decision Process[24]. By defining key components such as state, agent, action, environment, and reward[27], deep reinforcement learning can be effectively applied to the field of log anomaly detection.

-

•

State: The state space , where each state represents a log semantic sequence.The size of is the same as the size of the training set , which includes a limited labeled dataset and a large unlabeled dataset.

-

•

Action: The action space , where and correspond to the actions ‘normal’ and ‘anomaly’.

-

•

Agent: Implemented using an attention-based Bidirectional Long Short-Term Memory (Bi-LSTM) neural network, it seeks an optimal action from the action space for the current state.

-

•

Environment: Simulates the agent’s interaction, accomplished through a state transition function that is biased towards anomalies.

-

•

Rewards: The reward function is defined as , where represents the extrinsic reward given by the environment based on predicted and actual labels, and denotes the intrinsic reward provided by the exploration mechanism to measure novelty.

III-C2 Model framework based on DRL

The model based on deep reinforcement learning aims to fully utilize the small labeled dataset and effectively explore the large unlabeled dataset to improve the performance of log anomaly detection. It achieves goal by approximating the optimal action-value function (Q-value function), which refers to the expected return when the agent takes action a in state s under policy . It evaluates the goodness or badness of taking action in the current given state under policy . Its definition is as Equation (1).

| (1) |

Where is the discount factor, representing the value of future rewards relative to current rewards. Typically, , indicating that future rewards are considered less valuable than current rewards. Under policy , the total expected return at the current time step is the sum of the immediate reward and the discounted future rewards.

The optimal policy has an expected return that is greater than or equal to all other policies. It can be achieved by greedily selecting actions that maximize the given action-value function for each state, i.e., . When this update equation converges, the action-value function becomes consistent across all states, resulting in the optimal policy and the corresponding optimal action-value function is shown in Equation (2).

| (2) |

To obtain the optimal action-value function under the optimal policy , we employ the value-based DQN[28] algorithm, which introduces Q-network and a delayed update target Q-network. By using the neural network to approximate and applying experience replay[29], we define the overall model framework as shown in Figure 3.

The proposed framework’s goal is to train the Q-network by interacting with the environment created from training data. During training, the agent receives experience samples from the stored experience and labeled samples from the labeled dataset. On one hand, it updates the parameters of the agent based on the difference between the current Q-value and the target Q-value predicted by the two networks. On the other hand, an additional cross-entropy loss term is introduced to constrain the agent’s optimization not only based on the estimation of the Q-value function but also on the prior label information.

Both the Q-network and the target Q-network take log semantic vector sequences as input and output the corresponding Q-values for the log sequences classified as anomaly or normal. When improving its strategy, the agent receives transitions from the stored experience. It uses the Q-network’s output for in as the current Q-value and the maximum Q-value from the target Q-network for as the target Q-value, aiming to minimize the mean squared error loss between them. Simultaneously, the agent uses supervision signals from prior labels in . It applies softmax to the Q-values for all actions in labeled sequences from to get predicted classifications, minimizing a regularization term based on these predictions and the prior labels.

In each iteration, the update of the agent’s parameters is guided by the two loss functions, and the model is considered converged when the parameters of the Q-network and the target Q-network are consistent. The final obtained model is used for log anomaly detection, where the input is a log semantic vector sequence to be detected, and the output with the maximum Q-value is considered as the final classification result.

III-C3 Components of the model based on DRL

In the deep reinforcement learning model, the agent and the environment are core components. The agent learns how to take actions to achieve specific goals through interaction with the environment. The design of each key component in the model is as follows:

Agent

The agent is the main entity performing learning tasks. It perceives states and determines the optimal policy by learning the optimal action-value function that can evaluate the value of the action executed in the given state. Considering the characteristics of log data, such as temporality and instability, the attention-based Bi-LSTM neural network is used as the core of the agent to approximate the Q-value function. The specific architecture of the agent is illustrated in Figure 4, comprising four network layers: an input layer, Bi-LSTM network layer, self-attention layer, and fully connected layer. It accepts semantic vectors of log data as input and outputs two estimated Q-values corresponding to taking normal and anomalous actions.

Bi-LSTM, an extension of LSTM, effectively captures bidirectional information by splitting the hidden neuron layer into forward and backward passes. The hidden states and from both directions are concatenated to form the combined hidden state . To mitigate the influence of noise, an attention mechanism is introduced, assigning varying weights to log events. Implemented as a fully connected layer, the attention layer computes the attention weight for each log event. The hidden states are subsequently weighted and summed based on their respective attention weights. Finally, the resulting vector undergoes classification through a softmax layer.

The attention-based Bi-LSTM model can capture long-term dependencies within log sequences and autonomously discern the importance of individual log events, thereby achieving good performance on unstable logs. Throughout the learning process, the agent continually minimizes the defined regularized loss function to update the parameters of the Q-network, until the updated parameters align with those of the target Q-network, ultimately leading to a fully trained agent.

Transition function biased towards anomalies

The agent improves its anomaly detection capability by learning from the states returned by the environment. The transformation of environmental states is determined by the state transition function , which significantly influences the agent’s ability to identify anomalies. In log data, the majority of data belongs to the normal class, with only a small portion classified as anomalies, resulting in a severe class imbalance. This imbalance can cause the model to heavily favor the majority ”normal class,” leading to weaker recognition of anomalies.

To alleviate this class imbalance problem, we propose a state transition function biased towards anomalies. This allows the agent to receive more states that are potentially anomalous, thereby learning more features of anomalies and enhancing its ability to recognize them.

Considering that the defined state space includes the labeled set and the unlabeled set , the designed state transition function consists of two parts: the function for exploiting , and the function for exploring .

In , since the sample size of is relatively small overall,the environment randomly selects the next state from dataset for the current state , regardless of the agent’s action, enabling the agent to equally exploit the labeled samples in .

In , due to the larger scale of the sample size, the environment biases the sampling of the next state towards anomalies from by considering both the agent’s action and the cosine similarity to .This acceleration expedites the agent’s search for anomalies in large-scale imbalanced datasets, enhancing its efficiency in exploring . The definition of is shown in Equation (3).

| (3) |

Where is a random subset of , denotes cosine similarity between and , cosine similarity is ideal for semantic vector comparison due to its normalization and directional consideration. closer to 1 indicates greater similarity between and , while closer to -1 signifies more dissimilarity. If the current state is deemed anomalous (action ), selects closest to as the next state ; otherwise, it picks the most dissimilar as .

To ensure a balance between exploitation and exploration, the probabilities of using both and are set to 0.5, allowing both to be equally utilized by the agent.

Joint reward function

The reward function assesses the quality of the agent’s actions in the environment and guides policy updates. The reward value is feedback provided by the reward function based on the actions taken by the agent. Reasonable reward values can drive the agent to learn effective strategies and achieve task goals more quickly. However, unreasonable reward values can lead the agent into learning pitfalls, resulting in behaviors contradictory to the task goals. In the field of anomaly detection, false positives and false negatives are common. Designing a reasonable reward mechanism can effectively mitigate false positives and false negatives, enabling the agent to learn more about anomalies.

We propose a joint reward function based on an external reward function and an intrinsic reward function to drive the agent to effectively utilize labeled datasets and actively explore unlabeled datasets, thereby avoiding false positives and false negatives.

-

•

External reward function

The external reward measures the performance of the agent in detecting labeled anomalies and provides a reward signal for the action taken by the agent in state . The external reward function h provides different rewards based on the different datasets from which the input state originates, as defined in Equation (4).

| (4) |

For , the determination of is directly based on the comparison between the predicted labels and the actual labels, with . TP denotes correct anomaly recognition by the agent, TN represents correct normal recognition, FP signifies false anomaly recognition, and FN indicates false normal recognition of an anomaly by the agent.

In the field of anomaly detection in logs, since the proportion of normal samples far exceeds that of anomalous samples, it is common for the agent to correctly identify normal samples. In comparison, correctly identifying anomalies is more valuable. Therefore, a higher reward value is set for TP compared to TN, i.e., . Additionally, ignoring actual anomalies often poses a greater risk than generating false alarms. Consequently, compared to FP, a stricter penalty is imposed for FN, i.e., . In this paper, we set .

For , the intelligent agent maintains a neutral attitude. Although is unlabeled, the majority of its data is normal. Therefore, for the vast majority of normal predictions made by the intelligent agent, no reward is given, i.e., the reward value is 0. At this point, the final reward depends solely on the intrinsic reward function. Conversely, the intelligent agent incurs negative rewards as penalties to avoid excessive reliance on inherently uncertain anomalous samples.

-

•

Intrinsic reward function

The intrinsic reward is a reward provided by the exploration mechanism, which gives a reward signal to the agent for taking action in state , encouraging the agent to explore potential anomalies within the unlabeled dataset . The determination of the value of is based on the evaluation of a pre-trained LogRobust[10] model, which is defined as shown in Equation (5).

| (5) |

Where denotes the probability predicted by the LogRobust model for the normalcy of the current state , and is the specified confidence threshold.If , is deemed abnormal, and falls within . Conversely, if , is considered normal, and falls within .When approaches 0, is highly abnormal, prompting a high reward to encourage exploration of novel states. Conversely, if approaches 1, is confidently normal, and no reward is given to prevent biased exploration of normal states by the agent.

In order to achieve the agent’s exploitation of and the exploration of , we define the final reward as Equation (6).

| (6) |

III-D Regularized loss function

When making decisions, the original DQN agent approximates the Q-value function using a deep neural network and always selects the action with the maximum Q-value as the best action for the current state. However, the action Q-values output by the network are inaccurate estimates. Continuously updating the network parameters based on these estimates can lead the model to learn biased representations of the observed features and forget prior label knowledge.

To address the knowledge forgetting issue in the original DQN, a regularized loss function has been designed. The loss function during model training consists of two parts: first, the mean squared error loss of deep reinforcement learning, which aims to make the Q-network’s estimated Q-values closer to the true Q-values; second, the regularization term, which encourages the Q-network’s predicted classification results to align more with prior knowledge. The definition of the regularized loss function is shown in Equation (7).

| (7) |

Where is a hyperparameter that balances the relative contributions of the mean squared error loss from deep reinforcement learning and the regularization term. This way of defining the loss function enables the agent to continuously improve its strategy under the deep reinforcement learning mechanism (MSE loss ) while preserving prior knowledge (regularization term ). It ensures that the model does not deviate from the correct trajectory even when making ”wrong estimations.” The specific definitions of and will be elaborated on below.

III-D1 MSE loss of DRL method

The agent adopts a Temporal Difference (TD) based approach to learn the optimal action-value function, which doesn’t require multiple complete experiments but updates the Q-values of the current state using the Q-value of the next state. To address the instability issues caused by simultaneously acquiring Q-values and updating the Q-network, a target network with delayed updates is introduced.

For computational efficiency, we didn’t compute all the expectations in Equation (2). Instead, we optimized the loss function using stochastic gradient descent, replacing the expectations with single samples. The definition of the loss function is as shown in Equation (8).

| (8) |

During training, the model updates the target network parameters every steps, setting . The aforementioned loss function comprises both the predicted Q-value and the target Q-value. For a given state s and action a, the Q-network outputs the predicted Q-value as . The target Q-value is obtained from the Bellman equation, representing the long-term reward obtainable after taking action in the current state as , where r is the immediate reward provided by the environment based on state and action , is the next state entered by the agent after taking action , and is the Q-value output by the target network for the optimal action in the next state .

In the end, the MSE loss is employed to gauge the disparity between the predicted Q-values and the target Q-values. By minimizing the loss function and updating the parameters of the Q-network, it gradually converges towards the optimal Q-value function. This enables the agent to achieve better policy selection and higher performance.

III-D2 Regularization term

The MSE loss function in the original DQN is used to measure the difference between predicted values and target values, serving as a performance metric to guide the intelligent agent in updating parameters in the correct direction. It treats the output of the Q-network as the predicted value and the output of the target Q-network as the target value. However, because the target Q-network is also derived from the Q-network, both the predicted Q-values and the target Q-values are essentially potentially inaccurate neural network estimates. These inaccuracies can lead the agent to take incorrect actions, resulting in behaviors that contradict prior knowledge and leading to cases of knowledge forgetting. In other words, the MSE loss function may not be able to completely and accurately guide the behavior of the agent.

In order to prevent the agent from making erroneous decisions due to inaccurate predicted values compared to target values, it is desired that the agent receives guidance from known prior knowledge during parameter updates, rather than solely relying on estimated information. The DML strategy proposed by Zhang et al. [30] utilizes a dual loss function, where the student network ensemble, while learning from each other, is guided not only by the KL divergence loss function but also by the supervised cross-entropy loss function. Similarly, to enable the intelligent agent to receive supervisory signals from prior knowledge while being driven by RL, we incorporate partially labeled samples during agent optimization and adds a regularization term on top of the original MSE loss function, as defined in Equation (9).

| (9) |

Where is the batch size used for the regularization term, consisting of an equal number of normal and anomalous labeled samples selected from the labeled dataset . The selected samples are denoted as , where . is the true label of sample , and is the predicted label for sample , obtained by passing through and then processed through the softmax function.

This regularization term measures the discrepancy between the agent’s predicted classes and the prior labels, allowing the agent to retain portions that align with the prior information when updating its parameters. Even in the face of misestimations by the double Q-network, the agent is still able to distinguish between normal and anomalous instances. To some extent, it suppresses significant changes in critical parameters of the deep reinforcement learning model, preventing the agent from taking actions that contradict prior knowledge and guiding it to learn in a direction that does not deviate from the normal trajectory.

III-E Algorithm Description and Analysis

III-E1 The Algorithm of DQNLog

The training process of DQNLog is depicted in Algorithm 1. First, initialize the Q-network and the target Q-network with the same weight parameters, and initialize the experience reply memory with a size of . Then we train DQNLog for episodes. For each episode, first randomly select a state from , and then perform training for steps. In Steps 6-8, the agent employs the exploration strategy. It randomly selects an action from with probability ; otherwise, it selects the action with the maximum Q-value. As the Q-value function improves its accuracy, linearly decreases as the total time steps increase. In Steps 9-10, after the agent selects an action, the environment randomly returns state from with probability , otherwise it selects the state from a random subset based on , which corresponds to the state that is farthest/closest. In Steps 11-13, the environment provides a combined reward, , which is the sum of an external reward, , based on , and an internal reward, , based on . Afterwards, the obtained transition is stored in . During Steps 15-21, the Q-value function is updated. Additionally, every K steps, the Q-network resets the target Q-network.

III-E2 The Analysis of DQNLog

The log anomaly detection model based on deep reinforcement learning achieves anomaly detection by approximating the optimal value-action function . is the Q-network obtained after training with the parameter values . For a given state , the Q-network outputs the estimated Q-values corresponding to taking action or . Since taking action corresponds to the agent marking the current state as anomaly, the model output can be regarded as the anomaly assessment score for state . To illustrate the rationale of considering as an anomaly score under the designed joint reward function, an analysis is provided:

Assuming is the policy derived from the Q function, then under the given policy , for a given state , the expected return obtained by the agent taking action is defined as shown in Equation (10).

| (10) |

Assuming represents a known anomalous sample in the labeled set , represents a known normal sample in the labeled set , represents an anomalous sample in the unlabeled set , and represents a normal sample in the unlabeled set . Then, when the agent takes action , the external reward value given by the external reward function satisfies ; the internal reward value given by the internal reward function satisfies . Since the joint reward value in Equation (6) is the sum of the external reward value and the internal reward value, for states , under the same policy , the expected returns of the agent taking action satisfy .

Therefore, when the agent is continuously trained to a convergent state and can effectively approximate the optimal action-value function, the relationship of anomaly scores output by the Q-network satisfies: . This indicates that states with higher anomaly scores, , which are labeled anomalous samples and unlabeled anomalous samples respectively, are the anomalies that the model needs to detect.

IV EXPERIMENTS

IV-A Datasets

We evaluated DQNLog using three widely utilized log datasets available on Loghub[31], including the HDFS dataset, the BGL dataset, and the Thunderbird dataset. These datasets consist of real-world collected data, either manually annotated by system administrators or automatically generated with alert labels by the systems. A brief overview of each dataset is provided below:

(1) HDFS[32]: The HDFS dataset is sourced from the Hadoop Distributed File System(HDFS). It is generated by running MapReduce jobs on over 200 Amazon EC2 nodes and labeled by Hadoop domain experts. In total, 11,172,157 log messages were generated from 29 events, which can be grouped into 16,838 log sequences based on , with approximately indicating system anomalies.

(2) BGL[33]:The BGL dataset was generated by the Blue Gene/L(BGL) supercomputer at Lawrence Livermore National Laboratory. It was manually labeled by BGL domain experts. It consists of 4,747,963 log messages from 376 events, with 348,460 flagged as abnormal . Log messages in the BGL dataset can be grouped by .

(3) Thunderbird[32]: The Thunderbird dataset was generated by a Thunderbird microprocessor supercomputer deployed at Sandia National Laboratories(SNL) and manually labeled as normal or anomalous. It is a large dataset with over 200 million log messages. Among the more than one million consecutive log data collected for time calculation, 4,934 were identified as abnormal[20]. The log messages in the Thunderbird dataset can be grouped into sliding windows.

Table I presents the comprehensive statistics for these three datasets, including the column ”Log Keys” which represents the total count of distinct log templates extracted using the Drain[22] technique.

| Dataset | Log Message | Anomalies | Log Keys |

|---|---|---|---|

| HDFS | 11,172,157 | 16,838(blocks) | 47 |

| BGL | 4,747,963 | 348,460(logs) | 346 |

| Thunderbird | 20,000,000 | 4934(logs) | 815 |

IV-B Implementation setup

We conduct all the experiments on a Linux server with Intel(R) Xeon(R) Platinum 8352V 2.10GHz CPU,120GB memory,RTX 4090 with 24GB GPU memory and operating system version is Ubuntu 18.04. PyTorch was used as the deep learning framework, and the implementation was based on the Python language, utilizing the open-source Keras-rl reinforcement learning library.

DQNLog defaults to updating the parameters of the Q network after 5 episodes of pre-warming, with each episode consisting of 2000 steps. The target Q network is updated every 5 episodes. We use the Adam optimizer with a learning rate of 0.001. The size of the experience reply memory U is 100000, and the size of the mini-batch subset S is 1000. The discount factor is 0.99. The hidden layer size of the Q network is 128, and the batch size is 32. When using the greedy strategy, the exploration rate is initialized to 1 and linearly decreases to 0.1 as the training progresses, where it then remains constant.

IV-C Evaluation Metrics

To assess the performance of DQNLog, we categorize the classification outcomes as TP, TN, FP, and FN, and employ precision, recall, and F1-score as evaluation metrics. The definitions and formulas for these three metrics are as follows:

Precision: The ratio of true positive results to the total number of log sequences predicted as anomalous. A higher precision indicates a greater proportion of actual anomalous log sequences among the predicted ones. The precision formula is: .

Recall: The ratio of true anomalous log sequences correctly identified as anomalous. A higher recall indicates a greater proportion of detected anomalous log sequences. The recall formula is: .

F1-Score: The harmonic mean of precision and recall, where higher values indicate better model performance. The F1-Score formula is: .

In the field of anomaly detection, it is common for normal samples to significantly outnumber anomalous samples, resulting in highly imbalanced data. Consequently, models tend to learn the characteristics of normal classes more effectively, while their ability to recognize anomalous classes is relatively weak. In this scenario, precision can effectively measure the model’s accuracy in predicting anomalous samples. Meanwhile, given the abundance of unlabeled data, the model’s comprehensive prediction of anomalous samples in the entire unlabeled dataset requires recall for assessment. Taking both aspects into account, the F1 score, as a combined measure of recall and precision, garners more attention.

IV-D Compared Approaches

To validate the effectiveness of the log anomaly detection method proposed in this paper, several mainstream models in the field of log anomaly detection were selected as baseline methods for experimental comparison. The details are as follows:

(1)LogClustering[6]: Hierarchical clustering of log sequences, considering event weights, selects centroids as representative log sequences per cluster. Anomalies in log sequences are determined by comparing their distances to all centroids to classify them as normal or abnormal.

(2)PLELog[12]: The dimensionality-reduced log semantic vectors are clustered using the HDBSCAN algorithm. Similar vectors are grouped together, and unmarked log sequences are assigned probability labels based on known normal labels within each cluster. These probability labels indicate the likelihoods of log sequence categories, rather than providing definite labels. Build an attention-based GRU neural network model for anomaly detection, leveraging the labeled training set estimated via probability labels.

(3)LogEncoder[35]: It combines single-class and contrastive learning objectives to distinguish between normal and abnormal log sequences. Using an attention-based model, it preserves log context, learns sequence features, and maps them onto a hypersphere. Anomalies are detected based on the distance from log sequences to the hypersphere’s center.

(4)DeepLog[8]: It parses log data into sequential vectors and parameter value vectors. Sequential vectors represent log templates by sequence IDs, while parameter value vectors correspond to the parameters of each template. Initially, sequential vectors are fed into an LSTM model to detect abnormal execution paths. Then, separate LSTM networks are built for each template to identify parameter value anomalies using parameter value vectors. Log sequences showing abnormal execution paths or parameter values are flagged as anomalies.

(5)LogAnomaly[9]: It parses logs into sequential vectors and quantitative vectors, using an LSTM model to predict the next log event. If the actual next log event deviates from the predicted result, it is flagged as an anomaly.

IV-E Results and Analysis

For software systems, there is always a large amount of easily accessible unlabeled data and a small amount of labeled data. To validate the effectiveness of the method in this scenario, 10000 log sequences were extracted from each of three datasets. 80% of these sequences form the training set , which includes a small labeled dataset and a large unlabeled dataset , with a data ratio of being . The remaining 20% constitutes the test set. To ensure that the proportion of anomalies in the dataset is close to real-world scenarios, the anomaly contamination rates in the three datasets are set to 3%, 7%, and 1% respectively. The experimental data selection is shown in Table II.

| Dataset | Training Data | Testing Data | |||

|---|---|---|---|---|---|

| HDFS | 2400(72 anomalies) | 5600(168 anomalies) | 3:7 | 8000 | 2000 |

| BGL | 2400(168 anomalies) | 5600(392 anomalies) | |||

| Thunderbird | 2400(24 anomalies) | 5600(56 anomalies) |

To objectively evaluate the model’s anomaly detection capability in scenarios with a small amount of labeled samples and a large amount of unlabeled samples, accuracy, recall, and F1-score were used as evaluation metrics. The performance was compared against several mainstream models in the field of log anomaly detection. The experimental results are shown in Table III.

| Dataset | Method | Precision | Recall | F1-score |

|---|---|---|---|---|

| HDFS | LogClustering | 100% | 78.30% | 87.90% |

| PLELog | 64.20% | 87.20% | 73.95% | |

| LogEncoder | 94.50% | 94.30% | 94.40% | |

| DeepLog | 90.87% | 89.42% | 90.14% | |

| LogAnomaly | 92.50% | 90.70% | 91.59% | |

| DQNLog | 98.14% | 94.05% | 96.06% | |

| BGL | LogClustering | 72.20% | 21.70% | 33.30% |

| PLELog | 53.36% | 98.30% | 69.17% | |

| LogEncoder | 93.50% | 87.60% | 89.60% | |

| DeepLog | 84.74% | 80.78% | 82.73% | |

| LogAnomaly | 90.87% | 88.02% | 89.42% | |

| DQNLog | 98.27% | 86.99% | 92.29% | |

| Thunderbird | LogClustering | 88.90% | 76.7% | 82.35% |

| PLELog | 68.60% | 87.50% | 76.46% | |

| LogEncoder | 87.50% | 90.20% | 88.90% | |

| DeepLog | 91.45% | 91.02% | 91.23% | |

| LogAnomaly | 92.13% | 91.24% | 91.68% | |

| DQNLog | 94.64% | 94.64% | 94.64% |

The results indicate that compared to the benchmarked semi-supervised and unsupervised methods, DQNLog achieves higher accuracy while maintaining good recall, resulting in the best overall F1 score performance. This is because DQNLog leverages labeled prior knowledge to learn features of known anomalies. Furthermore, driven by deep reinforcement learning reward signals, it explores anomalies in unlabeled datasets effectively. In contrast, PLELog and LogEncoder only train on normal instances, completely disregarding known anomalies, leading to a high recall but low precision by frequently misclassifying normal log sequences as anomalous, ultimately resulting in lower F1 scores. Methods like DeepLog, LogAnomalyr treat each sample equally during training, often favoring the majority class in imbalanced data scenarios, thus achieving higher accuracy but lower recall and consequently lower F1 scores. Traditional machine learning approaches like LogClustering generally perform poorly in log anomaly detection due to their reliance solely on log count vectors, which ignore inter-log correlations and semantic relationships, making them inadequate for handling dynamic changes in log data.

Meanwhile, observations across different datasets such as HDFS, BGL, and Thunderbird show that various anomaly detection models consistently perform better on the HDFS dataset. This is because the BGL and Thunderbird datasets contain a much larger number of templates compared to the HDFS dataset, with many log templates being rare or even absent in the training set. As a result, models struggle to effectively learn the characteristics of rare templates, leading to an overall performance decrease.Specifically, it can be noted that on the BGL dataset, all anomaly detection models achieve lower recall rates. This is primarily due to the long time spans of session windows in the BGL dataset, where multiple types of anomalies may occur within the same session. Models find it challenging to effectively learn multiple types of anomalies, resulting in decreased recall rates.

IV-F Ablation Study

To validate the effectiveness of the main components in DQNLog, comparisons were made between DQNLog and its variants. The two types of defined ablation variants are as follows:

(1): a variant in which the loss function does not include the regularization term. Figure 5 shows the comparison results between DQNLog and on the HDFS dataset. The results indicate that adding the regularization term constrains the model with prior information when exploring anomalies, contributing to the overall performance improvement of DQNLog.

More specifically, compared to , DQNLog reaches convergence quickly, whereas continues to fluctuate and performs significantly worse across all three evaluation metrics. This instability in arises because it relies solely on experience samples from the experience reply memory during training, which may be inaccurate as they are generated by the Q network. In contrast, DQNLog incorporates a regularization term that leverages prior information from log sequence labels during gradient descent updates, in addition to Q network estimates. This helps DQNLog maintain accurate parameter updates, even when the estimates are imprecise.

(2) and : operates in a random environment with stochastic state transition functions, while operates in a biased anomalous environment but utilizes Euclidean distance to calculate the similarity of log vectors. Figure 6 illustrates the comparative results of DQNLog, , and on the HDFS dataset. The results indicate that the biased anomalous state transition function based on cosine similarity increases the agent’s probability of effectively exploring potential anomalies in large-scale unlabeled datasets. This approach alleviates the issue of class imbalance present in the dataset.

More specifically, DQNLog performs more stably compared to and . When the number of unlabeled data is large and the actual proportion of anomalies is small, the model can overcome the influence of the majority ”normal” class and tends to learn log sequences with more ”anomalous” characteristics. This is because cosine similarity considers both the length and direction of vectors, which better reflects the similarity of logs compared to random functions and Euclidean distance. As a result, the next state returned by the environment is more likely to be ”anomalous”, allowing the model to learn more anomalous information, significantly improving recall and F1 scores.

IV-G Hyperparameter analysis

To explore the impact of related hyperparameter values on the performance of DQNLog, we varied the values of the hyperparameters to determine the optimal parameters for the model. The investigated hyperparameters include:

(1)The value of the regularization term coefficient in the loss function. Figure 7 illustrates the variation of precision, recall, and F1 score during the training process on the HDFS dataset under different values of .

The results indicate that when , the model shows significant fluctuations in precision, recall, and F1 score during training. In contrast, when or , the metrics of the model exhibit relatively stable variations. This may be attributed to an excessive amplification of the regularization term, which overly preserves prior knowledge and results in unstable performance changes. Specifically, increasing from 0.5 to 1 improves the overall performance slightly, allowing the model to leverage the information brought by prior knowledge to a greater extent.

Therefore, it is considered that when the value of the regularization term coefficient is in the range , the model can achieve better detection performance. Excessively large hyperparameter values can cause model fluctuations, while excessively small values cause the model to ignore the prior knowledge of log sequences, ultimately leading to the capture of less information by the model.

(2)The penalty values for false positives (FP) and false negatives (FN) in the reward function, and . Table IV demonstrates the detection performance of DQNLog on the HDFS dataset as the false positive (FP) penalty varies, with a fixed false negative (FN) penalty. Table V illustrates the detection performance of DQNLog on the HDFS dataset as the false negative (FN) penalty varies, with a fixed false positive (FP) penalty.

| Precision | Recall | F1-score | |

|---|---|---|---|

| FN -1, FP -0.2 | 98.07% | 92.26% | 95.08% |

| FN -1, FP -0.3 | 98.10% | 91.67% | 94.78% |

| FN -1, FP -0.4 | 98.11% | 91.07% | 94.44% |

| FN -1, FP -0.5 | 98.11% | 91.07% | 94.44% |

| FN -1, FP -0.6 | 98.13% | 90.48% | 94.12% |

The results indicate that as the penalty for false positives increases, the model’s recall gradually decreases, while precision gradually increases. This suggests that a model with a higher false positive penalty tends to be more ”cautious” in predicting anomalies. The model will only classify a current log sequence as anomalous when it is highly confident that the sequence differs significantly from the majority of log sequences, thereby improving precision. However, this may also lead to an increase in missed actual anomalous logs, resulting in a decrease in the model’s recall.

| Precision | Recall | F1-score | |

|---|---|---|---|

| FN -1, FP -0.4 | 98.11% | 91.07% | 94.44% |

| FN -1.2, FP -0.4 | 98.10% | 91.67% | 94.78% |

| FN -1.5, FP -0.4 | 98.09% | 92.26% | 95.10% |

| FN -2, FP -0.4 | 97.53% | 92.26% | 94.77% |

The results indicate that as the penalty for false negatives increases, the model’s precision gradually decreases, while recall gradually increases. This suggests that a model with a higher false negative penalty tends to be more ”bold” in predicting anomalies. It encourages the system to learn from more potentially anomalous samples to avoid missing a significant number of actual anomalies, thereby achieving higher recall. However, it also increases the likelihood of normal log sequences being falsely classified as anomalies, leading to a decrease in precision.

V CONCLUSION

To address challenges such as insufficient labeled data, underutilization of unlabeled data, and imbalance between normal and anomaly class data in existing log anomaly detection methods, this paper proposes a deep reinforcement learning-based approach named DQNLog for software log anomaly detection. The method aims to leverage a small amount of labeled data and large-scale unlabeled data effectively. Specifically, it designs a state transition function biased towards anomalies based on cosine similarity, incorporates a joint reward function using external and internal rewards, introduces a regularization term in the loss function, and utilizes a Bi-LSTM network with attention mechanisms as the agent architecture. Evaluation on three widely used log datasets confirms the effectiveness of DQNLog.

Future work includes, but is not limited to, exploring methods with higher computational efficiency and greater accuracy in identifying anomalies as the intrinsic reward mechanism to optimize model performance. Additionally, enhancing knowledge during log embedding using domain-specific data to refine the representation of feature vectors more accurately.

References

- [1] V.-H. Le and H. Zhang, “Log-based anomaly detection with deep learning: How far are we?” in Proceedings of the 44th international conference on software engineering, 2022, pp. 1356–1367.

- [2] S. Nedelkoski, J. Cardoso, and O. Kao, “Anomaly detection and classification using distributed tracing and deep learning,” in 2019 19th IEEE/ACM international symposium on cluster, cloud and grid computing (CCGRID). IEEE, 2019, pp. 241–250.

- [3] C. Zhang, X. Peng, C. Sha, K. Zhang, Z. Fu, X. Wu, Q. Lin, and D. Zhang, “Deeptralog: Trace-log combined microservice anomaly detection through graph-based deep learning,” in Proceedings of the 44th international conference on software engineering, 2022, pp. 623–634.

- [4] M. Farshchi, J.-G. Schneider, I. Weber, and J. Grundy, “Experience report: Anomaly detection of cloud application operations using log and cloud metric correlation analysis,” in 2015 IEEE 26th international symposium on software reliability engineering (ISSRE). IEEE, 2015, pp. 24–34.

- [5] Y. Liang, Y. Zhang, H. Xiong, and R. Sahoo, “Failure prediction in ibm bluegene/l event logs,” in Seventh IEEE International Conference on Data Mining (ICDM 2007). IEEE, 2007, pp. 583–588.

- [6] Q. Lin, H. Zhang, J.-G. Lou, Y. Zhang, and X. Chen, “Log clustering based problem identification for online service systems,” in Proceedings of the 38th International Conference on Software Engineering Companion, 2016, pp. 102–111.

- [7] S. Han, Q. Wu, H. Zhang, B. Qin, J. Hu, X. Shi, L. Liu, and X. Yin, “Log-based anomaly detection with robust feature extraction and online learning,” IEEE Transactions on Information Forensics and Security, vol. 16, pp. 2300–2311, 2021.

- [8] M. Du, F. Li, G. Zheng, and V. Srikumar, “Deeplog: Anomaly detection and diagnosis from system logs through deep learning,” in Proceedings of the 2017 ACM SIGSAC conference on computer and communications security, 2017, pp. 1285–1298.

- [9] W. Meng, Y. Liu, Y. Zhu, S. Zhang, D. Pei, Y. Liu, Y. Chen, R. Zhang, S. Tao, P. Sun et al., “Loganomaly: Unsupervised detection of sequential and quantitative anomalies in unstructured logs.” in IJCAI, vol. 19, no. 7, 2019, pp. 4739–4745.

- [10] X. Zhang, Y. Xu, Q. Lin, B. Qiao, H. Zhang, Y. Dang, C. Xie, X. Yang, Q. Cheng, Z. Li et al., “Robust log-based anomaly detection on unstable log data,” in Proceedings of the 2019 27th ACM joint meeting on European software engineering conference and symposium on the foundations of software engineering, 2019, pp. 807–817.

- [11] X. Li, P. Chen, L. Jing, Z. He, and G. Yu, “Swisslog: Robust and unified deep learning based log anomaly detection for diverse faults,” in 2020 IEEE 31st International Symposium on Software Reliability Engineering (ISSRE). IEEE, 2020, pp. 92–103.

- [12] L. Yang, J. Chen, Z. Wang, W. Wang, J. Jiang, X. Dong, and W. Zhang, “Semi-supervised log-based anomaly detection via probabilistic label estimation,” in 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE). IEEE, 2021, pp. 1448–1460.

- [13] G. Pang, A. van den Hengel, C. Shen, and L. Cao, “Toward deep supervised anomaly detection: Reinforcement learning from partially labeled anomaly data,” in Proceedings of the 27th ACM SIGKDD conference on knowledge discovery & data mining, 2021, pp. 1298–1308.

- [14] K. Arshad, R. F. Ali, A. Muneer, I. A. Aziz, S. Naseer, N. S. Khan, and S. M. Taib, “Deep reinforcement learning for anomaly detection: A systematic review,” IEEE Access, vol. 10, pp. 124 017–124 035, 2022.

- [15] S. Dong, Y. Xia, and T. Peng, “Network abnormal traffic detection model based on semi-supervised deep reinforcement learning,” IEEE Transactions on Network and Service Management, vol. 18, no. 4, pp. 4197–4212, 2021.

- [16] X. Duan, S. Ying, W. Yuan, H. Cheng, and X. Yin, “Qllog: A log anomaly detection method based on q-learning algorithm,” Information Processing & Management, vol. 58, no. 3, p. 102540, 2021.

- [17] J. Clifton and E. Laber, “Q-learning: Theory and applications,” Annual Review of Statistics and Its Application, vol. 7, no. 1, pp. 279–301, 2020.

- [18] E. A. Elaziz, R. Fathalla, and M. Shaheen, “Deep reinforcement learning for data-efficient weakly supervised business process anomaly detection,” Journal of Big Data, vol. 10, no. 1, p. 33, 2023.

- [19] J. Zhou, Y. Qian, Q. Zou, P. Liu, and J. Xiang, “Deepsyslog: Deep anomaly detection on syslog using sentence embedding and metadata,” IEEE Transactions on Information Forensics and Security, vol. 17, pp. 3051–3061, 2022.

- [20] Z. Li, C. Luo, T.-H. Chen, W. Shang, S. He, Q. Lin, and D. Zhang, “Did we miss something important? studying and exploring variable-aware log abstraction,” in 2023 IEEE/ACM 45th International Conference on Software Engineering (ICSE). IEEE, 2023, pp. 830–842.

- [21] S. Tao, Y. Liu, W. Meng, Z. Ren, H. Yang, X. Chen, L. Zhang, Y. Xie, C. Su, X. Oiao et al., “Biglog: Unsupervised large-scale pre-training for a unified log representation,” in 2023 IEEE/ACM 31st International Symposium on Quality of Service (IWQoS). IEEE, 2023, pp. 1–11.

- [22] P. He, J. Zhu, Z. Zheng, and M. R. Lyu, “Drain: An online log parsing approach with fixed depth tree,” in 2017 IEEE international conference on web services (ICWS). IEEE, 2017, pp. 33–40.

- [23] I. Sedki, A. Hamou-Lhadj, O. Ait-Mohamed, and M. A. Shehab, “An effective approach for parsing large log files,” in 2022 IEEE International Conference on Software Maintenance and Evolution (ICSME). IEEE, 2022, pp. 1–12.

- [24] K. Arulkumaran, M. P. Deisenroth, M. Brundage, and A. A. Bharath, “Deep reinforcement learning: A brief survey,” IEEE Signal Processing Magazine, vol. 34, no. 6, pp. 26–38, 2017.

- [25] Y. Liu, M. Ott, N. Goyal, J. Du, M. Joshi, D. Chen, O. Levy, M. Lewis, L. Zettlemoyer, and V. Stoyanov, “Roberta: A robustly optimized bert pretraining approach,” arXiv preprint arXiv:1907.11692, 2019.

- [26] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “Bert: Pre-training of deep bidirectional transformers for language understanding,” arXiv preprint arXiv:1810.04805, 2018.

- [27] F.-M. Luo, T. Xu, H. Lai, X.-H. Chen, W. Zhang, and Y. Yu, “A survey on model-based reinforcement learning,” Science China Information Sciences, vol. 67, no. 2, p. 121101, 2024.

- [28] V. Mnih, K. Kavukcuoglu, D. Silver, A. A. Rusu, J. Veness, M. G. Bellemare, A. Graves, M. Riedmiller, A. K. Fidjeland, G. Ostrovski et al., “Human-level control through deep reinforcement learning,” nature, vol. 518, no. 7540, pp. 529–533, 2015.

- [29] Q. Zhang, M. Lin, L. T. Yang, Z. Chen, and P. Li, “Energy-efficient scheduling for real-time systems based on deep q-learning model,” IEEE transactions on sustainable computing, vol. 4, no. 1, pp. 132–141, 2017.

- [30] Y. Zhang, T. Xiang, T. M. Hospedales, and H. Lu, “Deep mutual learning,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4320–4328.

- [31] J. Zhu, S. He, P. He, J. Liu, and M. R. Lyu, “Loghub: A large collection of system log datasets for ai-driven log analytics,” in 2023 IEEE 34th International Symposium on Software Reliability Engineering (ISSRE). IEEE, 2023, pp. 355–366.

- [32] W. Xu, L. Huang, A. Fox, D. Patterson, and M. I. Jordan, “Detecting large-scale system problems by mining console logs,” in Proceedings of the ACM SIGOPS 22nd symposium on Operating systems principles, 2009, pp. 117–132.

- [33] A. Oliner and J. Stearley, “What supercomputers say: A study of five system logs,” in 37th annual IEEE/IFIP international conference on dependable systems and networks (DSN’07). IEEE, 2007, pp. 575–584.

- [34] J. Chen, Z. Wu, Z. Wang, H. You, L. Zhang, and M. Yan, “Practical accuracy estimation for efficient deep neural network testing,” ACM Transactions on Software Engineering and Methodology (TOSEM), vol. 29, no. 4, pp. 1–35, 2020.

- [35] J. Qi, Z. Luan, S. Huang, C. Fung, H. Yang, H. Li, D. Zhu, and D. Qian, “Logencoder: Log-based contrastive representation learning for anomaly detection,” IEEE Transactions on Network and Service Management, vol. 20, no. 2, pp. 1378–1391, 2023.