Semi-supervised Learning for Marked Temporal Point Processes

Abstract

Temporal Point Processes (TPPs) are often used to represent the sequence of events ordered as per the time of occurrence. Owing to their flexible nature, TPPs have been used to model different scenarios and have shown applicability in various real-world applications. While TPPs focus on modeling the event occurrence, Marked Temporal Point Process (MTPP) focuses on modeling the category/class of the event as well (termed as the marker). Research in MTPP has garnered substantial attention over the past few years, with an extensive focus on supervised algorithms. Despite the research focus, limited attention has been given to the challenging problem of developing solutions in semi-supervised settings, where algorithms have access to a mix of labeled and unlabeled data. This research proposes a novel algorithm for Semi-supervised Learning for Marked Temporal Point Processes (SSL-MTPP) applicable in such scenarios. The proposed SSL-MTPP algorithm utilizes a combination of labeled and unlabeled data for learning a robust marker prediction model. The proposed algorithm utilizes an RNN-based Encoder-Decoder module for learning effective representations of the time sequence. The efficacy of the proposed algorithm has been demonstrated via multiple protocols on the Retweet dataset, where the proposed SSL-MTPP demonstrates improved performance in comparison to the traditional supervised learning approach.

1 Introduction

A plethora of events happen in a period of twenty four hours which sum up to make a day in the life of a human being. Generally, these events do not occur at continuous time stamps since at different times of the day different activities can be performed. For example, social media usage of an individual involves posts on various platforms at different times during the day. Other examples include visits to the hospitals on different days of the week/month, purchasing groceries/essentials at an e-commerce website, or financial transactions of buying/selling stocks at a stock market exchange. All the above examples have the common characteristic of irregular time between events. Given the presence and need of modeling such data, Temporal Point Processes (TPPs) are deemed as an appropriate method to formulate such irregularly time-spaced sequence data, i.e. sequences containing discrete events occurring at irregular times. Beyond modelling the event occurrence time, TPPs also help to model the type of action (or category of the event) also known as the marker information. TPPs which model both the event time occurrence and marker information are called Marked Temporal Point Processes (MTPPs) Du et al. (2016). MTPP based models have shown exemplary performance for predicting the next event time and event marker, while demonstrating applicability in several real-world scenarios as well.

Most of the existing research in the area of MTPPs has focused on utilizing large amount of labeled data for learning a prediction model (Figure 1(a)). The dependence on such training sets often makes it challenging to utilize the models for real-world setups having limited availability of labeled training data. Recently, due to the technological advancements and heavy reliance on the digital world, large amount of data is generated every day; a majority of which is unlabeled in nature. For example, patient visits to the hospital can be recorded instantly, however, the severity of the disease or duration of stay (marker) will be known at a later time. In order to benefit from the abundant data, the concept of semi-supervised learning Zhu (2005) has been adopted for learning prediction models, which is the branch of machine learning utilizing both labeled and unlabeled data with the goal of increasing the performance even under limited labeled training data Van Engelen and Hoos (2020).

Despite the advances in the field of semi-supervised learning and marked temporal point processes independently, to the best of our knowledge, no research has focused at the intersection of the two fields. Owing to the limitation of limited labeled training data availability in real-world setups, it is important to develop new techniques incorporating the semi-supervised learning approach into MTPP based models (Figure 1(b)). In the context of MTPPs, the concept of supervised learning is incorporated with regard to the labeled marker information and not the time of the event occurrence. To this effect, this research proposes a novel algorithm for Semi-supervised Learning for Marked Temporal Point Processes (SSL-MTPP) which utilizes a combination of labeled and unlabeled data for learning a robust event marker prediction model. The proposed SSL-MTPP algorithm has been evaluated on six protocols containing varying labeled data ranging from to events, where it demonstrates improved performance over the baseline supervised learning approach. As part of this research, analysis is also performed on the Macro-F1 and Micro-F1 metrics, while suggesting that Average Precision could be a better metric for capturing the performance for highly imbalanced per-class distribution datasets.

2 Related Work

This research presents a novel SSL-MTPP algorithm which operates at the intersection of (i) Marked Temporal Point Processes and (ii) Semi-supervised Learning. To the best of our knowledge, this is the first research focusing on this challenging area. Due to the lack of specific literature on the same area of focus, the following subsections thus independently elaborate upon these two areas of research.

2.1 Marked Temporal Point Process (MTPP)

Initially, research in the field of Temporal Point Processes (TPP) focused primarily on Self-Exciting Hawkes (1971) and Self-Correcting Isham and Westcott (1979) processes. While such techniques demonstrated good performance, they were deemed to have limited flexibility limiting their usage in real-world scenarios. A significant breakthrough in the TPP domain happened in the last decade with the advent of deep learning by the usage of neural-networks for modelling the underlying conditional function. Traditionally, TPPs were used to only model the time occurrence of an event, however, later they were also used to model and predict the corresponding type/class of the event called the marker. Such techniques which focused on predicting the event and corresponding type were termed as modeling Marked Temporal Point Processes (MTPP) Du et al. (2016). Recently, Recurrent Neural Networks (RNN) have also been used to model the Marked Temporal Point Processes. For example, techniques such as the Recurrent Marked Temporal Point Processes Du et al. (2016) and Neural Hawkes Process Mei and Eisner (2016) utilize the RNN module as the backbone architecture. Similarly, a fully neural network based model has also been proposed for general TPPs Omi et al. (2019) to model the cumulative intensity function with a neural network. Further, recently, techniques have also been proposed to learn robust representations under the constraint of missing observations as well Gupta et al. (2021). Most of the techniques mentioned above follow the supervised learning approach which often require large amount of labeled training data.

2.2 Semi-Supervised Learning

Broadly, machine learning algorithms can be categorized into the following three categories: (i) supervised learning, (ii) unsupervised learning, and (iii) semi-supervised learning Zhu (2005). Semi-supervised learning is the branch of machine learning which attempts to utilize both labeled and unlabeled data during training. Often, the goal is to enhance the performance by incorporating the benefits of both kinds of data. Over the past decades, various algorithms for semi-supervised learning have been proposed. They are broadly classified into two categories: (i) inductive learning and (ii) transductive learning Chapelle et al. (2009). In inductive learning based semi-supervised approaches, the goal is to infer the correct mapping from (data points which are labeled) to (corresponding labels) in the form of a classifier, whereas in transductive learning based semi-supervised techniques the goal is to infer the correct labels for the unlabeled data points only. Inductive learning is further divided into three categories namely unsupervised processing, wrapper methods, and intrinsically semi-supervised learning whereas transductive learning is mostly used in graph-based algorithms like graph construction, graph weighting and graph inference Van Engelen and Hoos (2020).

The proposed SSL-MTPP model extends the current body of literature by focusing on the intersection of the above two fields of research. Specifically, current TPP models follow supervised learning approach which often require large amount of labeled data. In real world scenarios, such data is often not easily available. In order to overcome the limitation of limited labeled data availability, this research proposes a novel SSL-MTPP algorithm which follows the inductive approach of semi-supervised learning and incorporates labeled and unlabeled data for learning an marker prediction model.

3 Proposed Algorithm

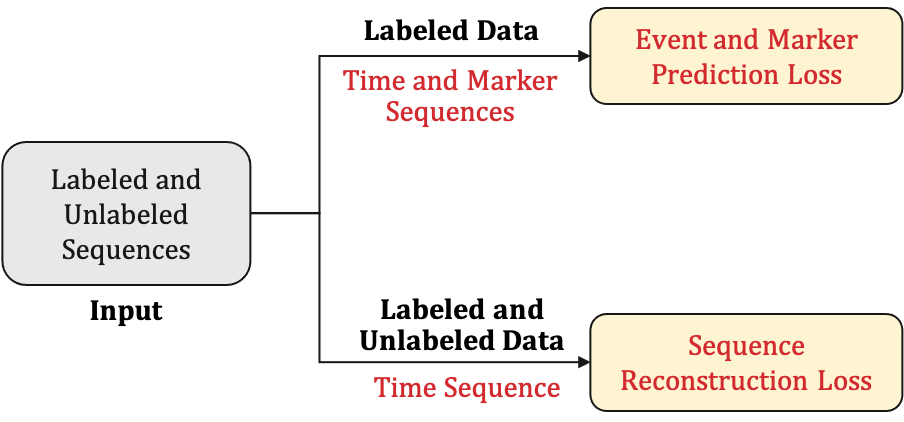

This research proposes a novel algorithm for Semi-supervised Learning for Marked Temporal Point Processes (SSL-MTPP). The proposed SSL-MTPP algorithm is useful in scenarios which contain additional unlabeled time sequences which can be used for improved model learning for marker prediction. Here, the notion of supervision is with respect to the marker information (class label) and not with respect to the event occurrence. Figure 2 presents an overview of the proposed SSL-MTPP algorithm. The model is trained via a combination of event/marker prediction loss terms and a reconstruction loss on the event sequences. The top path (labeled) allows the model to learn the relationship between the markers and events, while the bottom path (unlabeled) enables learning of a meaningful embedding for the given input event sequence which is fused with the embedding obtained from the labeled data for a more robust representation. Mathematically, the loss of the SSL-MTPP algorithm (Figure 2) is given as:

| (1) |

where, and refer to the terms corresponding to the next event time and marker prediction, respectively, while the term refers to the reconstruction component. As shown in Figure 2, the next event time and marker prediction requires labeled data (i.e. the and loss components), while the reconstruction loss () is unsupervised with respect to the marker information, and thus does not utilize the marker information during training. Detailed description of the entire architecture and each component of the proposed SSL-MTPP algorithm is as below.

3.1 SSL-MTPP Algorithm

Figure 3 presents the architecture of the proposed algorithm consisting of a labeled and an unlabeled path. The labeled path takes as input of a given set of sequences () consisting of sequence pairs () having the event time information () and the marker information (). It utilizes a Recurrent Neural Network (RNN) architecture for learning an embedding capturing the behavior of the marker and the time occurrence in the given sequences. The embedding is used by two independent modules for (i) marker prediction and (ii) time prediction of the next event. On the other hand, the unlabeled path utilizes an RNN Encoder-Decoder model Cho et al. (2014) for learning an embedding for the time sequence only. The learned embedding represents the time sequence and is used to supplement the marker-time embedding for a robust representation. Mathematical formulation and explanation of the different steps and models involved along with each loss component is as follows:

Unsupervised Reconstruction Loss () Component: The reconstruction loss is applied on the event sequences in the training set, without utilizing the marker information. Therefore, as shown in Figure 3, the reconstruction loss is applied on the unlabeled samples and the labeled samples (without utilizing the marker). Given sequences in the training set , where each sequence contains time occurrence of events, the reconstruction loss is as:

| (2) |

where, and correspond to the encoder and decoder RNN modules. The reconstruction loss focuses on learning a meaningful representation for the given time sequence, which is used for subsequent marker prediction. The reconstruction module is unsupervised in nature and does not utilize the class labels (markers) during training. A robust encoding of the time sequence () is used to augment the learned representation of the SSL-MTPP model for enhanced marker prediction. Details regarding the utilization of the encoder embedding () for marker prediction are given below:

Supervised Marker () and Time () Prediction Loss Components: An input sequence pair () containing the event time information and the marker information, respectively, is passed to an RNN module for obtaining a feature representation modeling the inter-dependence of the marker and time information:

| (3) |

The extracted representation () is then combined with the representation obtained earlier from the encoder module () to generate a fused embedding as follows:

| (4) |

where, corresponds to the weight given to the representation obtained via the RNN encoder. By fusing the two representations, the proposed algorithm is able to benefit from modeling the inter-dependence between the marker and time sequence () in a supervised manner and learning a robust representation of only the time sequence in an unsupervised manner (). The fused representation () is then provided to two multi-layer perceptron networks for predicting the next event time and marker category. The prediction models are trained with the following loss components for each event in the training set:

| (5) |

where, corresponds to the cross-entropy loss for multi-class marker classification, while corresponds to the mean absolute error loss for predicting the next event time occurrence as a regression task. Here, prediction is performed for an event belonging to sequence (i.e. event time and marker ) for a class prediction setup. is a binary variable signifying whether sample is of class or not, while is the probability of the sample belonging to class , and is the predicted event time occurrence for the given event.

3.2 Implementation Details

The proposed SSL-MTPP model has been implemented in the PyTorch environment Paszke et al. (2019). SSL-MTPP algorithm utilizes the RMTPP architecture Du et al. (2016) as the base model. The supervised branch contains a five layer Long Short-Term Memory (LSTM) module Hochreiter and Schmidhuber (1997), while the unsupervised branch contains a two layer RNN encoder and decoder. The marker and event prediction modules contain two dense layers, respectively. Dropout Hinton et al. (2012) has also been applied as a regularizer after the RNN layer. The weight value ( in Equation 4) has been set to . The model has been trained for 100 epochs with a learning rate of 0.01 using the Adam optimizer Kingma and Ba (2014). Training has been performed with a batch-size of sequences on an NVIDIA Quadro RTX6000 GPU.

| Protocol | Labeled Data | Unlabeled Data |

|---|---|---|

| P-1 | 10K | 1.39M |

| P-2 | 20K | 1.38M |

| P-3 | 30K | 1.37M |

| P-4 | 50K | 1.35M |

| P-5 | 140K (10%) | 1.26M |

| P-6 | 0.7M (50%) | 0.7M |

4 Experiments and Analysis

The proposed SSL-MTPP algorithm has been evaluated under varying amount of labeled training data. Comparison has been performed with the baseline/native supervised MTPP model which follows a similar architecture as the proposed SSL-MTPP model without the unsupervised branch (described above). Details regarding the dataset, protocols, and results are given below.

4.1 Dataset and Protocol

Experiments have been performed on the Retweet dataset Zhao et al. (2015), which is formed through the Seismic dataset. The dataset contains multiple sequences of retweets, where each sequence corresponds to information regarding the retweets on a particular tweet. Each sequence contains information regarding the event time (retweet) and the corresponding marker information. Here, the marker refers to type of user (based on the number of followers) who has retweeted. Three categories of marker are provided: (i) normal user, (ii) influencer user, and (iii) celebrity user. The marker information is defined based on the number of followers (degree) of a given user: (i) degree lower than the median (normal user), (ii) degree higher or equal to the median but less than the percentile (influencer user), and (iii) degree higher or equal to the percentile (celebrity user). The dataset consists of over two million events with an average sequence length of 209 events.The dataset has imbalance class distribution with 50.6% event marker are normal users,45% events marker are influencer users,while only 4.4% events marker are celebrity users. For experiments, data pertaining to 1.4M events was used for training, while the remaining 60K events formed the test set.

Table 1 presents the six protocols used to evaluate the proposed SSL-MTPP algorithm. The training set (consisting of 1.4M events) is split into a labeled set and an unlabeled set for each protocol. The labeled set contains data varying from 10K (P-1) to 0.7M (P-6), while the remaining data forms the unlabeled set. For experiments, the proposed SSL-MTPP algorithm is trained for each protocol, and comparison has been performed with the baseline supervised MTPP model trained in the traditional supervised manner with labeled data only.

| Protocol | Labeled Data | Model | Avg. Precision (%) | Macro-F1 (%) | Micro-F1 (%) |

|---|---|---|---|---|---|

| P-1 | 10K | Native Supervised MTPP | 38.84 | 39.40 | 58.98 |

| Proposed Semi-Supervised MTPP | 39.10 | 39.48 | 59.40 | ||

| P-2 | 20K | Native Supervised MTPP | 38.77 | 39.52 | 58.78 |

| Proposed Semi-Supervised MTPP | 67.95 | 40.77 | 59.50 | ||

| P-3 | 30K | Native Supervised MTPP | 43.92 | 40.26 | 58.03 |

| Proposed Semi-Supervised MTPP | 68.07 | 40.72 | 59.56 | ||

| P-4 | 50K | Native Supervised MTPP | 44.91 | 40.74 | 57.61 |

| Proposed Semi-Supervised MTPP | 68.35 | 40.71 | 59.59 | ||

| P-5 | 140K (10%) | Native Supervised MTPP | 45.08 | 37.88 | 56.86 |

| Proposed Semi-Supervised MTPP | 68.42 | 40.73 | 59.79 | ||

| P-6 | 0.7M (50%) | Native Supervised MTPP | 66.74 | 40.47 | 57.76 |

| Proposed Semi-Supervised MTPP | 69.49 | 40.09 | 59.22 |

4.2 Results and Analysis

Table 2 presents the results obtained on the Retweet dataset. The proposed SSL-MTPP algorithm has been evaluated on different protocols containing varying amount of labeled data (Table 1). In the literature, most of the research has focused on reporting the Macro-F1 and Micro-F1 performance metrics. As part of this research, we observe that these might not be the most appropriate metrics to judge a model’s performance, especially under the scenario of imbalanced per-class data. To this effect, along with the Macro-F1 and Micro-F1 values, we also report the average precision of each model.

As can be observed from Table 2, limited variation is observed for the Macro-F1 and Micro-F1 values for the proposed and native supervised MTPP model. For the SSL-MTPP model, the Micro-F1 values lie in the range of , regardless of the amount of labeled data, and the Macro-F1 values lie in the range of , thus demonstrating limited variation. On the other hand, the average precision lies in the range of with varying labeled data. Similar behavior is observed for the native supervised MTPP model as well, where limited variations are observed for the Macro-F1 and Micro-F1 values, while higher range is observed for the average precision metric. The consistent behavior across the two models suggest average precision to be a better metric for comparing performance in the setup of imbalanced testing data across classes.

Figure 4 presents the average precision (%) of the proposed SSL-MTPP model and the native supervised MTPP model for six protocols. Across the protocols, the proposed SSL-MTPP model demonstrates improved performance as compared to the baseline model. In P-1, where only 10K labeled data is available, the SSL-MTPP model obtains an average precision of 39.10%, presenting an improvement over the baseline model (38.84%). Larger improvements are observed for P-2 to P-5, where at least 20K labeled data is available for training. For example, in P-4, the SSL-MTPP model obtains an average precision of 68.35% demonstrating an improvement of almost 24% as compared to the baseline model. Improvement is also observed for the Macro-F1 and Micro-F1 values across protocols. Further, relatively less improvement is seen when 0.7M labeled data is available for training (P-6), where the proposed SSL-MTPP model achieves an average precision of 69.49% (as compared to 66.74% of the baseline model), thus suggesting higher improvement when limited training data is available for training. The above behavior appears intuitive in nature as well, since as the amount of labeled data increases, the baseline model is able to learn better, thus reducing the gap in improvement.

Experiments have also been performed to analyze the effect of the weight parameter ( in Equation 4) for understanding the effect of the fusion of the supervised and unsupervised representations. Figure 5 presents the average precision obtained on P-3 (30K labeled training data) with varying values. Best performance is obtained with a value of , while a drop in performance is seen with a very small () and a very large () value. A small value reduces the contribution of the unsupervised representation, while a very large value offsets the contribution of the supervised embedding thus resulting in a drop in performance.

5 Conclusion and Future Work

This research proposes a novel semi-supervised learning approach for the marked temporal point processes. The proposed SSL-MTPP algorithm is useful in scenarios where limited labeled data is available for training a marked temporal point process model. In the proposed SSL-MTPP algorithm, the labeled data is passed through an RNN module to generate meaningful embedding which captures the inter-dependence between the time and marker information, and the unlabeled data is passed through an RNN based encoder-decoder to learn the embedding from the time sequence. The representations from both the networks are combined and then used for the next event marker and time prediction. Experiments have been performed on the Retweet dataset on six different protocols by varying the amount of labeled training data. Improved performance is obtained as compared to the baseline supervised learning technique, thus suggesting applicability in different real-world scenarios. Further, analysis is also performed to suggest that the Average Precision metric is more reliable than the Macro-F1 and Micro-F1 metrics in scenarios of imbalanced per-class data.

As part of the future work, the following three directions of research have been identified. Firstly, as observed from Figure 4, when only 10K labeled data is available (P-1), the improvement in performance is less than 1% over the supervised MTPP model. The proposed SSL-MTPP can further be improved to enhance the model performance even when minimal labeled data is available. Secondly, the current architecture assumes that only the marker information to be unlabeled. As a future step, a generic framework can be developed which can handle unlabeled data either in the time or marker information in the historical sequence. Finally, the proposed SSL-MTPP algorithm can be extended to other applications in the TPP domain as well.

References

- Chapelle et al. [2009] Olivier Chapelle, Bernhard Scholkopf, and Alexander Zien. Semi-supervised learning. IEEE Transactions on Neural Networks, 20(3):542–542, 2009.

- Cho et al. [2014] Kyunghyun Cho, Bart Van Merriënboer, Caglar Gulcehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. Learning phrase representations using rnn encoder-decoder for statistical machine translation. arXiv preprint arXiv:1406.1078, 2014.

- Du et al. [2016] Nan Du, Hanjun Dai, Rakshit Trivedi, Utkarsh Upadhyay, Manuel Gomez-Rodriguez, and Le Song. Recurrent marked temporal point processes: Embedding event history to vector. In ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 1555–1564, 2016.

- Gupta et al. [2021] Vinayak Gupta, Srikanta Bedathur, Sourangshu Bhattacharya, and Abir De. Learning temporal point processes with intermittent observations. In International Conference on Artificial Intelligence and Statistics, pages 3790–3798, 2021.

- Hawkes [1971] Alan G Hawkes. Spectra of some self-exciting and mutually exciting point processes. Biometrika, 58(1):83–90, 1971.

- Hinton et al. [2012] Geoffrey E Hinton, Nitish Srivastava, Alex Krizhevsky, Ilya Sutskever, and Ruslan R Salakhutdinov. Improving neural networks by preventing co-adaptation of feature detectors. arXiv preprint arXiv:1207.0580, 2012.

- Hochreiter and Schmidhuber [1997] Sepp Hochreiter and Jürgen Schmidhuber. Long short-term memory. Neural computation, 9(8):1735–1780, 1997.

- Isham and Westcott [1979] Valerie Isham and Mark Westcott. A self-correcting point process. Stochastic processes and their applications, 8(3):335–347, 1979.

- Kingma and Ba [2014] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Mei and Eisner [2016] Hongyuan Mei and Jason Eisner. The neural hawkes process: A neurally self-modulating multivariate point process. arXiv preprint arXiv:1612.09328, 2016.

- Omi et al. [2019] Takahiro Omi, Naonori Ueda, and Kazuyuki Aihara. Fully neural network based model for general temporal point processes. arXiv preprint arXiv:1905.09690, 2019.

- Paszke et al. [2019] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, Alban Desmaison, Andreas Kopf, Edward Yang, Zachary DeVito, Martin Raison, Alykhan Tejani, Sasank Chilamkurthy, Benoit Steiner, Lu Fang, Junjie Bai, and Soumith Chintala. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of NeurIPS, pages 8024–8035. 2019.

- Van Engelen and Hoos [2020] Jesper E Van Engelen and Holger H Hoos. A survey on semi-supervised learning. Machine Learning, 109(2):373–440, 2020.

- Zhao et al. [2015] Qingyuan Zhao, Murat A Erdogdu, Hera Y He, Anand Rajaraman, and Jure Leskovec. Seismic: A self-exciting point process model for predicting tweet popularity. In ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 1513–1522, 2015.

- Zhu [2005] Xiaojin Jerry Zhu. Semi-supervised learning literature survey. Technical Report, University of Wisconsin-Madison Department of Computer Sciences, 2005.