Semantically Supervised Appearance Decomposition for Virtual Staging from a Single Panorama

Abstract.

We describe a novel approach to decompose a single panorama of an empty indoor environment into four appearance components: specular, direct sunlight, diffuse, and diffuse ambient without direct sunlight. Our system is weakly supervised by automatically generated semantic maps (with floor, wall, ceiling, lamp, window and door labels), produced by models that have shown success on perspective views and are adapted to panoramas via transfer learning without any further annotations. A GAN-based approach supervised by coarse information obtained from the semantic map extracts specular reflection and direct sunlight regions on the floor and walls. These lighting effects are removed via a similar GAN-based approach and a semantic-aware inpainting step. The appearance decomposition enables multiple applications, including sun direction estimation, virtual furniture insertion, floor material replacement, and sun direction change, providing an effective tool for virtual home staging. We demonstrate the effectiveness of our approach on a large, recently released dataset of panoramas of empty homes.

1. Introduction

With the current pandemic-related restrictions and many people working from home, there has been a significant uptick in home sales. Remote home shopping is becoming more popular, and effective tools to facilitate virtual home tours are much needed. One such tool is virtual staging: how would furniture fit in a home, and what would it look like if certain settings (e.g. sun direction, flooring) were changed? To provide effective visualization for staging, panoramas are increasingly being used to showcase homes. Panoramas provide surround information, but methods designed for limited-FoV perspective photos cannot be directly applied to them.

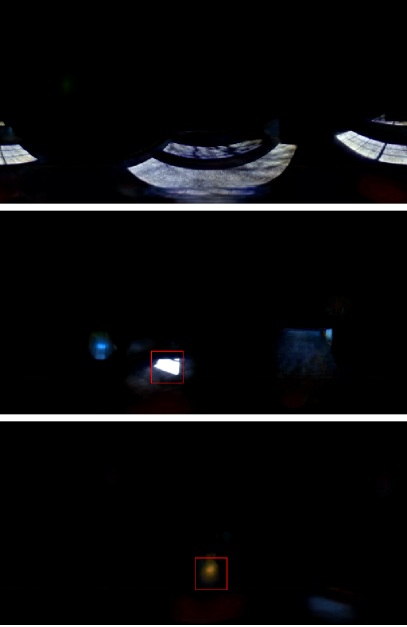

However, inserting virtual furniture into a single panoramic image of a room in a photo-realistic manner is hard, because multiple complex shading effects must be taken into consideration. Such complex interactions are shown in Fig. 2: the insertion induces effects such as occlusion, sunlight cast on objects, partially shadowed specular reflections, and soft and hard shadows. This task is challenging due to the lack of ground truth training data. Ground truth annotations for appearance decomposition tasks require either significant human labor (Bell et al., 2014) or specialized devices in a controlled environment (Grosse et al., 2009; Shen and Zheng, 2013), which is hard to scale up. Previous approaches (Fan et al., 2017; Lin et al., 2019; Li et al., 2020a) rely on synthetic data for supervised training. However, they suffer from domain shift and the cost of designing and rendering scenes (Li et al., 2021b).

We present a novel approach to insert virtual objects into a single panoramic image of an empty room in a near-photo-realistic way. Instead of solving a general inverse rendering problem from a single image, we identify and focus on two ubiquitous shading effects that are important for visual realism: interactions of inserted objects with (1) specular reflections and (2) direct sunlight (see Fig. 2). This is still a challenging problem without effective solutions, for the reasons mentioned above. We observe that while real ground-truth data for these effects is hard to obtain, semantics are much easier to obtain automatically, given recent advances in layout estimation (Zou et al., 2018; Sun et al., 2019; Pintore et al., 2020) and semantic segmentation (Long et al., 2015; Chen et al., 2017; Wang et al., 2020). An automatically generated semantic map with ceiling, wall, floor, window, door, and lamp classes is used to localize the effects coarsely. These coarse localizations are used to supervise a GAN-based approach that decomposes the input image into four appearance components: (1) diffuse, (2) specular (on floor), (3) direct sunlight (on floor and walls), and (4) diffuse ambient without sunlight. The appearance decompositions can then be used for several applications, including furniture insertion, changing flooring, albedo estimation, and estimating and changing the sun direction.

We evaluate our method on ZInD (Cruz et al., 2021), a large dataset of panoramas of empty real homes. The homes include a variety of floorings (tile, carpet, wood), diverse configurations of doors and windows, varied indoor light source types and positions, and different outdoor illumination (cloudy, sunny). We analyze our approach by comparing against ground truth specular and sunlight locations and sun directions, and by conducting ablation studies. Most previous approaches for diffuse-specular separation and inverse rendering were not designed for our setting, so direct apples-to-apples comparisons are not possible; they require perspective views or supervised training on large-scale real or rendered data. We nonetheless report the performance of such methods as empirical observations, not to claim that our approach is better in their settings.

Our work also has several limitations: (1) we assume the two shading effects occur on either the planar floor or the walls, (2) our methods detect mid-to-high-frequency shading effects, but not subtle low-frequency effects or specular/sunlight interreflections, and (3) our methods can induce artifacts if the computed semantic map is erroneous. Despite these limitations, our results suggest that the approach can be an effective and useful tool for virtual staging. Extending the semantics to furniture could enable appearance decomposition of already-furnished homes.

To sum up, our key idea is using easier-to-collect and annotate discrete signals (layout/windows/lamps) to estimate harder-to-collect continuous signals (specular/sunlight). This is a general idea that can be useful for any appearance estimation task. Our contributions include: (1) A semantically and automatically supervised framework for locating specular and direct sunlight effects (Sec. 3). (2) A GAN-based appearance separation method for diffuse, specular, ambient, and direct sunlight component estimation (Sec. 4 and 5). (3) Demonstration of multiple virtual staging applications including furniture insertion and changing flooring and sun direction (Sec. 7). To our knowledge, we are the first to estimate direct sunlight and sun direction from indoor images. The overall pipeline is illustrated in Fig. 1. Our code is released at: https://github.com/tiancheng-zhi/pano_decomp.

2. Related Work

Inverse Rendering.

The goal of inverse rendering is to estimate various physical attributes of a scene (e.g. geometry, material properties and illumination) given one or more images. Intrinsic image decomposition estimates reflectance and shading layers (Tappen et al., 2003; Li and Snavely, 2018b; Janner et al., 2017; Li and Snavely, 2018a; Liu et al., 2020; Baslamisli et al., 2021). Other methods attempt to recover scene attributes with simplified assumptions. Methods (Barron and Malik, 2015; Kim et al., 2017; Li and Snavely, 2018b; Boss et al., 2020) for a single object use priors like depth-normal consistency and shape continuity. Some methods (Shu et al., 2017; Sengupta et al., 2018) use priors of a particular category (e.g., no occlusion for faces). Some works assume near-planar surfaces (Aittala et al., 2015; Li et al., 2018; Hu et al., 2022; Gao et al., 2019; Deschaintre et al., 2019, 2018). In addition, human assistance with calibration or annotation is studied for general scenes (Yu et al., 1999; Karsch et al., 2011).

Data-driven methods require large amounts of annotated data, usually synthetic images (Li et al., 2020a; Li et al., 2021a; Li et al., 2021b; Wang et al., 2021a). The domain gap can be reduced by fine-tuning on real images with self-supervised training via differentiable rendering (Sengupta et al., 2019). Differentiable rendering can also be used to optimize a single object (Kaya et al., 2021; Zhang et al., 2021). Recent works (Boss et al., 2021a, b) extend NeRF (Mildenhall et al., 2020) to appearance decomposition. Chen et al. (2021) combine a Bayesian framework with deep networks for reflectance and illumination estimation. In our work, we model complex illumination effects on real panoramas of empty homes. Similar to Karsch et al. (2011), we believe that discrete semantic elements (like layout, windows, lamps, etc.) are easier to collect and to train good models for. By contrast, diffuse and specular annotations are continuous, spatially varying signals that are harder to label.

Illumination Estimation.

Many approaches represent indoor lighting using HDR maps (or their spherical harmonic approximations). Some estimate lighting from a single LDR panorama (Gkitsas et al., 2020; Eilertsen et al., 2017), a perspective image (Gardner et al., 2017; Somanath and Kurz, 2020), a stereo pair (Srinivasan et al., 2020), or object appearance (Georgoulis et al., 2017; Weber et al., 2018; Park et al., 2020). Recent approaches (Garon et al., 2019; Li et al., 2020a; Wang et al., 2021a) extend this representation to multiple positions, enabling spatially-varying estimation. Others (Karsch et al., 2014; Gardner et al., 2019; Jiddi et al., 2020) estimate parametric lighting by modeling the position, shape, and intensity of light sources. Zhang et al. (2016) combine both representations and estimate an HDR map together with parametric light sources. However, these methods treat windows as sources of diffuse skylight, without considering directional sunlight. We handle spatially-varying, high-frequency sun illumination effects, which are a challenging case for most methods.

Some techniques estimate outdoor lighting from outdoor images. Early methods (Lalonde et al., 2010, 2012) use analytical models to describe the sun and sky. Liu et al. (2014) estimate the sun direction using 3D object models. Recently, deep learning methods (Zhang et al., 2019; Hold-Geoffroy et al., 2019, 2017) regress sun/sky model parameters or outdoor HDR maps by training on large-scale datasets. A recent work (Swedish et al., 2021) estimates high-frequency illumination from shadows. However, these methods take outdoor images as input, where the occlusion of sunlight by interior walls is not as significant as in indoor scenes.

Specular Reflection Removal.

There are two main classes of specular reflection removal techniques. The first removes specular highlights on objects. Non-learning-based approaches usually exploit appearance or statistical priors to separate specular reflection, including chromaticity-based models (Tan and Ikeuchi, 2005; Yang et al., 2010; Shafer, 1985; Akashi and Okatani, 2016), a low-rank model (Guo et al., 2018), and the dark channel prior (Kim et al., 2013). Recently, data-driven methods (Wu et al., 2020; Shi et al., 2017; Fu et al., 2021) train deep networks in a supervised manner. Shi et al. (2017) train a CNN model on their proposed object-centric synthetic dataset. Fu et al. (2021) present a large real-world dataset for highlight removal and introduce a multi-task network to detect and remove specular reflection. However, reflections on floors are more complex than highlights, because they may reflect window textures and occupy large regions.

The second class removes reflections from a glass surface in front of the scene. Classical methods use image priors to solve this ill-posed problem, including gradient sparsity (Levin and Weiss, 2007; Arvanitopoulos et al., 2017), smoothness priors (Li and Brown, 2014; Wan et al., 2016), and ghosting cues (Shih et al., 2015). Recently, deep learning has been applied to this task (Fan et al., 2017; Wan et al., 2018; Zhang et al., 2018b; Wei et al., 2019; Li et al., 2020b; Dong et al., 2021; Hong et al., 2021), achieving significant improvements through carefully designed network architectures. Li et al. (2020b) develop an iterative boost convolutional LSTM with a residual reconstruction loss for single image reflection removal. Hong et al. (2021) propose a two-stage network that explicitly addresses content ambiguity for reflection removal in panoramas. However, these methods mostly use supervised training, requiring large amounts of data with ground truth. Most previous works in both classes do not specifically consider the properties of panoramic floor images.

Our task lies between these two classes: the reflections are on the floor rather than on glass surfaces, yet because the floor is flat, their appearance resembles that of glass reflections. To our knowledge, this scenario has not been well studied.

Virtual Staging Services.

Some companies provide virtual staging services, including Styldod (https://www.styldod.com/), Stuccco (https://stuccco.com/), and PadStyler (https://www.padstyler.com/). Users can upload photos of their rooms, and the company furnishes them and provides the rendering results. However, most of these services are not free, and it is unclear whether specular reflections and direct sunlight are handled automatically. Certain applications, like changing the sun direction, are usually not supported. By contrast, our approach is released for free use.

3. Semantics for Coarse Localization of Lighting Effects

We focus on two ubiquitous shading effects that are important for virtual staging: (1) specular reflections and (2) direct sunlight. These effects are illustrated in Fig. 4. Dominant specular reflections in empty indoor environments are somewhat sparse and typically due to sources such as lamps, and open or transparent windows and doors. The sunlight streaming through windows and doors causes bright and often high-frequency shading on the floor and walls. These effects must be located and removed before rendering their interactions with new objects in the environment. However, these effects are hard to annotate in large datasets to provide direct supervision for training.

Our key observation is that these effects can be localized (at least coarsely) using the semantics of the scene with simple geometrical reasoning. For example, the locations of windows and other light sources (like lamps) constrain where specular reflections occur. The sunlit areas are constrained by the sun direction and locations of windows. Our key insight is that semantic segmentation provides an easier, discrete supervisory signal for which there are already substantial human annotations and good pre-trained models (Wang et al., 2020; Lambert et al., 2020). In this section, we describe how to automatically compute semantic panoramic maps and use them to localize these effects coarsely (Fig. 5(b) and (e)). These coarse estimates are used to supervise a GAN-based approach to refine the locations and extract these effects (Fig. 5 (c) and (f)).

3.1. Transfer Learning for Semantic Segmentation

We define seven semantic classes that are of interest to our applications: floor, ceiling, wall, window, door, lamp, and other (see Fig. 5(b) for an example). Most works on semantic segmentation are designed for perspective views. Merging perspective semantics into a panorama is non-trivial due to view selection, inconsistent overlapping regions, and limited FoV. A few networks (Su and Grauman, 2019, 2017) are designed for panoramic images, but building and training such networks for our dataset requires annotations and significant engineering effort, which we wish to avoid. Thus, we propose a perspective-to-panoramic transfer learning technique, as follows: we take an HRNet model (Wang et al., 2020) pre-trained on the perspective image dataset ADE20K (Zhou et al., 2017) (with labels adjusted to our setting) and treat it as the "Teacher Model". We then use the same model and weights to initialize a "Student Model" and adapt it for panoramic image segmentation.

To supervise the Student Model, we sample perspective views from the panorama. Let $I$ be the original panorama, $P$ be the sampling operator, $F_T$ be the Teacher Model function, and $F_S$ be the Student Model function. The transfer learning loss $\mathcal{L}_t$ is defined as the cross entropy loss between $P(F_S(I))$ and $F_T(P(I))$. To regularize the training, we prevent the Student Model from deviating too much from the Teacher Model by adding a term $\mathcal{L}_r$, defined as the cross entropy loss between $F_S(P(I))$ and $F_T(P(I))$. The total loss is $\mathcal{L} = w_t \mathcal{L}_t + w_r \mathcal{L}_r$, where $w_t$ and $w_r$ are weights given by the confidence of the Teacher Model prediction $F_T(P(I))$.
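The two cross-entropy terms can be sketched as follows, writing $F_S$, $F_T$, and $P$ for the Student Model, Teacher Model, and sampling operator. This is a minimal illustration under stated assumptions, not the released implementation: predictions are per-pixel class distributions stored as nested lists, the Teacher's argmax serves as the pseudo-label, and the Teacher's maximum class probability is taken as the per-pixel confidence weight. The function names are hypothetical.

```python
import math

def argmax(probs):
    """Index of the largest probability in one pixel's class distribution."""
    return max(range(len(probs)), key=lambda c: probs[c])

def weighted_cross_entropy(student, teacher, eps=1e-8):
    """Confidence-weighted cross entropy between student and teacher maps.

    student, teacher: lists of pixels, each a list of class probabilities.
    Assumption: the teacher argmax is the pseudo-label and its probability
    is the per-pixel confidence weight.
    """
    total, weight_sum = 0.0, 0.0
    for p_s, p_t in zip(student, teacher):
        label = argmax(p_t)
        w = p_t[label]                          # teacher confidence
        total += -w * math.log(p_s[label] + eps)
        weight_sum += w
    return total / max(weight_sum, eps)

def transfer_loss(student_pano_sampled, student_on_crop, teacher_on_crop):
    """Loss for one perspective crop.

    student_pano_sampled: P(F_S(I)), student panorama output resampled to the crop.
    student_on_crop:      F_S(P(I)), student applied directly to the crop.
    teacher_on_crop:      F_T(P(I)), teacher prediction on the crop.
    """
    l_t = weighted_cross_entropy(student_pano_sampled, teacher_on_crop)
    l_r = weighted_cross_entropy(student_on_crop, teacher_on_crop)
    return l_t + l_r
```

Folding the confidence into each term keeps uncertain Teacher pixels from dominating either loss; the exact weighting used by the authors may differ.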

3.2. Coarse Localization of Lighting Effects

Coarse Specular Mask Generation.

The panoramic geometry dictates that the possible specular area on the floor lies in the same columns as the light sources. This assumes that the camera is upright and that the floor is a mirror: under these assumptions, the mirror image of a light source lies in the same vertical plane through the camera center, and hence at the same azimuth (panorama column). See Fig. 4 for an illustration. Thus, we simply treat floor pixels that are in the same columns as the light sources (windows and lamps in the semantic map) as the coarse specular mask. To handle rough floors, we dilate the mask by a few pixels. See Fig. 5(c) for an example coarse specular mask.
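The column rule translates directly into a few lines of code. The sketch below assumes an equirectangular label map stored as a list of rows of integer class IDs; the class IDs, the dilation radius, and the function name are hypothetical. Horizontal dilation wraps around because the left and right panorama borders are adjacent in a 360° view.

```python
def coarse_specular_mask(labels, floor_id, light_ids, dilate=2):
    """Floor pixels sharing a column with any light-source pixel, then dilated.

    labels: equirectangular semantic map (list of rows of class IDs).
    light_ids: set of class IDs treated as light sources (e.g., window, lamp).
    """
    h, w = len(labels), len(labels[0])
    # Columns (azimuths) containing at least one light-source pixel.
    light_cols = {x for y in range(h) for x in range(w) if labels[y][x] in light_ids}
    mask = [[labels[y][x] == floor_id and x in light_cols for x in range(w)]
            for y in range(h)]
    # Square dilation by `dilate` pixels; columns wrap around the 360-degree seam.
    out = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for dy in range(-dilate, dilate + 1):
                yy = y + dy
                if 0 <= yy < h:
                    for dx in range(-dilate, dilate + 1):
                        out[yy][(x + dx) % w] = True
    return out
```

For example, a single window pixel in column 2 marks only column 2 of the floor rows when `dilate=0`.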

Coarse Sunlight Mask Generation.

We first estimate a coarse sun direction and then project the window mask onto the floor and walls along this direction. Since sunlight enters the room through windows (or open doors), the floor texture can be projected back onto the window region along the sun direction. The projected floor area must be bright, so a matching score is computed as the intensity difference between the window region and the projected floor. The projection requires the room geometry, which can be obtained from the wall-floor boundary in the semantic map. Specifically, we assume an upright camera at height 1, i.e., the floor plane is at Z = -1 in camera coordinates. The 3D location (X, Y, Z) of a wall boundary point is then computed from its angular coordinates (θ, φ) in the panorama, the constraint Z = -1, and the spherical-to-Cartesian coordinate transform; these points are used to compute the wall planes.
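The floor back-projection can be sketched as a ray-plane intersection. This assumes an equirectangular convention where column 0 maps to azimuth -π and row 0 to elevation +π/2; the convention and function names are illustrative assumptions. A wall boundary pixel lies on the floor, so intersecting its viewing ray with Z = -1 recovers its 3D position, and two such points on the same wall define that vertical wall plane.

```python
import math

def pixel_to_angles(u, v, width, height):
    """Equirectangular pixel center -> (azimuth phi, elevation theta) in radians.

    Assumed convention: u=0 maps to phi=-pi and v=0 to theta=+pi/2 (top row).
    """
    phi = (u + 0.5) / width * 2.0 * math.pi - math.pi
    theta = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return phi, theta

def floor_point(phi, theta, cam_height=1.0):
    """Intersect the viewing ray with the floor plane Z = -cam_height."""
    if theta >= 0:
        raise ValueError("ray does not hit the floor (elevation must be negative)")
    # Unit ray direction from the spherical-to-Cartesian transform.
    dx = math.cos(theta) * math.cos(phi)
    dy = math.cos(theta) * math.sin(phi)
    dz = math.sin(theta)
    t = -cam_height / dz            # scale the ray so that Z = -cam_height
    return (t * dx, t * dy, t * dz)
```

For instance, a boundary point seen at azimuth 0 and 45° below the horizon lies at (1, 0, -1).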

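For a conservative estimate of the floor mask, we use the K best sun directions. Specifically, the sun direction is given by $\mathbf{d}(\theta, \phi) = (\cos\theta\cos\phi, \cos\theta\sin\phi, \sin\theta)$, where $\theta$ is the elevation angle and $\phi$ is the azimuth angle. We do a brute-force search over $\theta$ and $\phi$ with a fixed step size. Let $s(\theta, \phi)$ be the matching score. First, the top-K elevation angles $\theta_1, \dots, \theta_K$ are selected based on the score $\max_{\phi} s(\theta, \phi)$. Let $\phi^*(\theta) = \arg\max_{\phi} s(\theta, \phi)$ be the optimal azimuth angle for elevation $\theta$. Then the selected sun directions are $\{\mathbf{d}(\theta_k, \phi^*(\theta_k))\}_{k=1}^{K}$. See Fig. 5(e) for an example coarse direct sunlight mask.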
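The K-best search can be sketched as below. The scoring function is passed in as a callable, since the actual window-to-floor matching score depends on the projection machinery above; the 5° default step, the (0°, 90°] elevation range, and all names are hypothetical choices for illustration.

```python
import math

def sun_direction(theta, phi):
    """Unit vector for elevation theta and azimuth phi (radians)."""
    return (math.cos(theta) * math.cos(phi),
            math.cos(theta) * math.sin(phi),
            math.sin(theta))

def k_best_sun_directions(score, k, step_deg=5.0):
    """Brute-force search over (elevation, azimuth); keep the K best elevations.

    score(theta, phi) -> float is a matching score (higher is better).
    For each elevation the best azimuth is kept; the K elevations with the
    highest best-azimuth scores are returned as sun directions.
    """
    step = math.radians(step_deg)
    n_elev = int(round(90.0 / step_deg))      # elevations in (0, 90] degrees
    n_azim = int(round(360.0 / step_deg))     # azimuths in [0, 360) degrees
    best_per_elev = []
    for i in range(1, n_elev + 1):
        theta = i * step
        phis = [j * step for j in range(n_azim)]
        phi_star = max(phis, key=lambda p: score(theta, p))
        best_per_elev.append((score(theta, phi_star), theta, phi_star))
    best_per_elev.sort(reverse=True)          # highest score first
    return [sun_direction(t, p) for _, t, p in best_per_elev[:k]]
```

Selecting elevations first and then the best azimuth per elevation yields K distinct candidate directions rather than K near-duplicates around a single peak.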

4. GAN-Based Lighting Effects Detection

Both coarse masks estimated above are rough, conservative estimates. In this section, we demonstrate that this inaccurate information can still serve as a supervision signal that effectively constrains and guides an accurate estimation of specular reflection and sunlit regions. We sketch the key idea in Sec. 4.1 and explain the detailed loss function in Sec. 4.2.

4.1. Key Idea and Overall Architecture

Simply training a lighting effects network with the coarse masks as labels would result in the network predicting only the coarse masks. How do we design a network that produces more accurate masks? Fig. 6 illustrates our architecture, which augments the lighting effects network with three local discriminators. The lighting effects network takes a single panoramic image $I$ as input and predicts the specular ($S$) and sunlight ($U$) components. The first local discriminator takes the image with the specular component removed ($I - S$) as input and tries to locate the specular reflection via per-pixel binary classification. Its output is denoted by $P_s$. It is supervised by the coarse specular mask $M_s$ obtained from semantics, as described in Sec. 3.2. If the specular prediction is good, the discriminator should fail to locate specular reflection in the image $I - S$. Hence, the lighting effects network tries to fool the discriminator. This idea can be seen as a per-pixel GAN that treats the pixels inside the coarse mask as fake examples and the pixels outside the mask as real examples. The same approach is applied to sunlight regions, where the sunlight discriminator output is denoted by $P_u$ and the coarse sunlight mask is $M_u$.

Because artifacts can appear where specular reflection and direct sunlight overlap if their intensities are not predicted accurately, we include a third discriminator trained to detect overlapping specular reflection and direct sunlight in the image with both effects removed ($I - S - U$, where $S$ and $U$ are the predicted specular and sunlight components). Its prediction is denoted by $P_o$. This discriminator is supervised by the intersection of the coarse specular mask $M_s$ and the coarse sunlight mask $M_u$, represented as $M_o = M_s \odot M_u$, where $\odot$ is the element-wise product.

4.2. Loss Function

Sparsity Loss.

We observe that, in an empty room, specular reflection usually appears on the floor, and direct sunlight usually appears on the floor and the walls. Thus, we define the region of interest (ROI) for specular reflection to be the floor and the ROI for direct sunlight to be the floor and the walls. Different regions should have different levels of sparsity: lighting effects should be sparser outside the ROI than inside it, and sparser outside the coarse mask than inside it. Thus, we apply different levels of sparsity to different regions. Let $I$ be the input image, $E$ be the lighting effect (specular or sunlight component) predicted from $I$, $R$ be the binary ROI mask, and $M$ be the coarse mask inside the ROI. The sparsity loss is:

$$\mathcal{L}_{sp} = \lambda_1 \|(1 - R) \odot E\|_1 + \lambda_2 \|(R - M) \odot E\|_1 + \lambda_3 \|M \odot E\|_1, \qquad (1)$$

where $\lambda_1 > \lambda_2 > \lambda_3$ are constant weights, and $\odot$ is the element-wise product. We use this sparsity loss for specular reflection and direct sunlight separately, denoted by $\mathcal{L}_{sp}^{s}$ and $\mathcal{L}_{sp}^{u}$.
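A three-level weighted L1 penalty of this kind can be sketched as follows. The weights are hypothetical placeholders chosen only to satisfy the ordering (outside ROI > inside ROI but outside the coarse mask > inside the coarse mask); the paper's values are not reproduced here.

```python
def sparsity_loss(effect, roi, coarse, w_out=1.0, w_roi=0.1, w_mask=0.01):
    """Three-level weighted L1 sparsity on a predicted lighting-effect map.

    effect: 2-D list of per-pixel effect intensities (specular or sunlight).
    roi, coarse: 2-D binary masks (0/1); coarse is assumed to lie inside roi.
    Hypothetical weights decrease from outside the ROI to inside the coarse mask.
    """
    total = 0.0
    for e_row, r_row, m_row in zip(effect, roi, coarse):
        for e, r, m in zip(e_row, r_row, m_row):
            if not r:
                total += w_out * abs(e)     # outside the ROI: strongest penalty
            elif not m:
                total += w_roi * abs(e)     # inside the ROI, outside the coarse mask
            else:
                total += w_mask * abs(e)    # inside the coarse mask: weakest penalty
    return total
```

The same unit of predicted effect thus costs the most where the semantics say it is least plausible.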

Adversarial Loss.

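As mentioned in Sec. 4.1, after the lighting effects are removed, a discriminator should not be able to locate them. Consider specular reflection as an example. Let $I$ be the input image, $S$ be the estimated specular reflection, and $R$ be the specular ROI. We use a local discriminator $\mathcal{D}_s$ to find reflection areas in $I - S$, with output $P_s = \mathcal{D}_s(I - S)$. The discriminator is supervised by the coarse specular mask $M_s$, and its loss is the pixel-wise binary cross entropy, $\mathcal{L}_{D} = \mathrm{BCE}(P_s, M_s)$. To fool the discriminator, the adversarial loss

$$\mathcal{L}_{adv}^{s} = \mathrm{BCE}(P_s, \mathbf{0}) \qquad (2)$$

is applied to the region $R$, i.e., the lighting effects network is trained so that the discriminator labels the ROI pixels as real. Similarly, we build adversarial losses for sunlight regions and for regions with overlapping specular reflection and direct sunlight, denoted by $\mathcal{L}_{adv}^{u}$ and $\mathcal{L}_{adv}^{o}$, respectively.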
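The per-pixel GAN objectives can be sketched with a plain binary cross entropy. Here `pred` stands for the discriminator's per-pixel probabilities on the effect-removed image, with 1 meaning "fake" (effect still present). Averaging over pixels and restricting the generator term to the ROI are illustrative assumptions; in a real training loop the two losses would update the discriminator and the lighting effects network alternately.

```python
import math

def bce(p, target, eps=1e-7):
    """Binary cross entropy for one probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(pred, coarse_mask):
    """Pixels inside the coarse mask are labeled fake (1), outside real (0)."""
    pairs = [(p, m) for pr, mr in zip(pred, coarse_mask) for p, m in zip(pr, mr)]
    return sum(bce(p, m) for p, m in pairs) / len(pairs)

def adversarial_loss(pred, roi):
    """Generator side: push the discriminator toward 'real' (0) inside the ROI."""
    vals = [bce(p, 0) for pr, rr in zip(pred, roi) for p, r in zip(pr, rr) if r]
    return sum(vals) / max(len(vals), 1)
```

The overlap discriminator follows the same pattern, with the mask $M_s \odot M_u$ as its target.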

Total Loss.

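The total loss is a weighted sum of the two sparsity losses and the three adversarial losses:

$$\mathcal{L} = \lambda_{sp}^{s}\mathcal{L}_{sp}^{s} + \lambda_{sp}^{u}\mathcal{L}_{sp}^{u} + \lambda_{adv}^{s}\mathcal{L}_{adv}^{s} + \lambda_{adv}^{u}\mathcal{L}_{adv}^{u} + \lambda_{adv}^{o}\mathcal{L}_{adv}^{o}, \qquad (3)$$

where $\mathcal{L}_{sp}^{s}$ and $\mathcal{L}_{sp}^{u}$ are the specular and sunlight sparsity losses, $\mathcal{L}_{adv}^{s}$, $\mathcal{L}_{adv}^{u}$, and $\mathcal{L}_{adv}^{o}$ are the specular, sunlight, and overlap adversarial losses, and $\lambda_{sp}^{s}$, $\lambda_{sp}^{u}$, $\lambda_{adv}^{s}$, $\lambda_{adv}^{u}$, $\lambda_{adv}^{o}$ are constant weights.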

5. Lighting Effects Removal

The previous architecture and method focused on estimating the specular and direct sunlight regions. However, naively subtracting the predicted components from the original image (i.e., taking the input minus the specular component as the diffuse image, and the input minus both the specular and sunlight components as the ambient image) may still produce artifacts, as shown in Fig. 7. In this section, we instead directly estimate the diffuse image (without specular reflection) and the ambient image (without specular reflection or direct sunlight).

5.1. GAN-Based Specular Reflection Removal

As shown in Fig. 8, a deep network is used to predict a diffuse image. To supervise the training of the network, we adopt the same GAN strategy as in Sec. 4.2. Instead of using the coarse mask generated in Sec. 3.2, we obtain a fine specular mask for discriminator supervision by thresholding the estimated specular reflection.

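Let $I$ be the original image, $S$ be the specular image, $D$ be the diffuse image, and $\hat{I} = D + S$ be the reconstructed image. Inspired by Zhang et al. (2018b), our loss consists of an adversarial loss $\mathcal{L}_{adv}$, a reconstruction loss $\mathcal{L}_{rec}$, a perceptual loss $\mathcal{L}_{per}$, and an exclusion loss $\mathcal{L}_{excl}$:

$$\mathcal{L} = \lambda_{adv}\mathcal{L}_{adv} + \lambda_{rec}\mathcal{L}_{rec} + \lambda_{per}\mathcal{L}_{per} + \lambda_{excl}\mathcal{L}_{excl}, \qquad (4)$$

where $\lambda_{adv}$, $\lambda_{rec}$, $\lambda_{per}$, $\lambda_{excl}$ are constant weights.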

Adversarial Loss.

$\mathcal{L}_{adv}$ is the same as the adversarial loss defined in Sec. 4.2, with the coarse specular mask replaced by the fine specular mask.

Reconstruction Loss.

$\mathcal{L}_{rec}$ is the L1 loss between the reconstructed image and the original image.

Perceptual Loss.

Exclusion Loss.

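$\mathcal{L}_{excl} = \|\tanh(|\nabla D|) \odot \tanh(|\nabla S|)\|_F$, where $\|\cdot\|_F$ is the Frobenius norm, $\nabla$ is the image gradient, and $\odot$ is the element-wise product. Following Zhang et al. (2018b), this loss minimizes the correlation between the diffuse and specular components in the gradient domain, preventing edges from appearing in both the diffuse and specular images.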
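A single-scale version of the gradient exclusion term can be sketched on small grayscale images stored as nested lists. This simplifies the multi-scale, normalized formulation of Zhang et al. (2018b): it uses plain forward differences and omits any gradient normalization factors, so it illustrates the idea rather than reproducing either paper's exact loss.

```python
import math

def gradients(img):
    """Forward-difference gradients (gx, gy) of a 2-D grayscale image."""
    h, w = len(img), len(img[0])
    gx = [[img[y][x + 1] - img[y][x] for x in range(w - 1)] for y in range(h)]
    gy = [[img[y + 1][x] - img[y][x] for x in range(w)] for y in range(h - 1)]
    return gx, gy

def exclusion_loss(diffuse, specular):
    """Frobenius norm of tanh(|grad D|) * tanh(|grad S|), summed over x and y."""
    total = 0.0
    for gd, gs in zip(gradients(diffuse), gradients(specular)):
        sq = sum((math.tanh(abs(a)) * math.tanh(abs(b))) ** 2
                 for ra, rb in zip(gd, gs) for a, b in zip(ra, rb))
        total += math.sqrt(sq)
    return total
```

An edge that appears only in the diffuse image (or only in the specular image) contributes nothing, whereas an edge duplicated in both is penalized.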

5.2. Semantic-Aware Inpainting for Sunlight Removal

A process similar to that used for diffuse image estimation could be used to predict the ambient image without sunlight. In practice, however, bright sunlit regions dominate the brightness and are often saturated, so the network does not predict the ambient image well. Thus, we use an inpainting approach called SESAME (Ntavelis et al., 2020) that is semantic-aware and preserves the boundaries between different classes (wall versus floor, etc.).

6. Experimental Analysis

6.1. Implementation Details

Improving Lamp Semantics with HDR Estimation.

The ADE20K definition of a lamp covers the whole lamp, whereas we care about the bright bulb. Thus, we estimate an HDR map via supervised learning to obtain a more accurate bulb segmentation. To obtain an HDR image, we train a U-Net (Ronneberger et al., 2015) to predict the HDR-LDR residue in log space, using the Laval (Gardner et al., 2017) and HDRI Haven (https://hdrihaven.com/hdris/?c=indoor) datasets. A weighted L2 loss is adopted. We use a batch size of 32 and train for 300 epochs. Only the pixels in the lamp segmentation whose estimated HDR intensity exceeds a threshold are kept.
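How a log-space residual turns into an HDR estimate and a refined bulb mask can be sketched as follows. We assume the residual is defined as log(HDR) - log(LDR), so HDR = exp(log(LDR) + residual); the threshold value and the function names are hypothetical, and the U-Net prediction itself is not shown.

```python
import math

def hdr_from_residual(ldr, log_residual, eps=1e-6):
    """HDR intensity from LDR values and a predicted log-space residual.

    Assumption: residual = log(HDR) - log(LDR), applied per pixel.
    """
    return [[math.exp(math.log(max(l, eps)) + r) for l, r in zip(lr, rr)]
            for lr, rr in zip(ldr, log_residual)]

def refine_lamp_mask(lamp_mask, hdr, threshold):
    """Keep only lamp pixels whose estimated HDR intensity exceeds a threshold."""
    return [[bool(m) and h > threshold for m, h in zip(mr, hr)]
            for mr, hr in zip(lamp_mask, hdr)]
```

Saturated LDR pixels look alike (all near 1.0), but their recovered HDR values separate the bright bulb from the rest of the lamp fixture.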

Improving Room Semantics with Layout Estimation.

The floor-wall and ceiling-wall boundaries predicted by semantic segmentation might not be straight in perspective views, causing visual artifacts. Thus, we adopt the layout estimation method LED2-Net (Wang et al., 2021c) to augment the semantic map. The layout estimation predicts floor, wall, and ceiling regions for the current room, which are used to improve the floor-wall and ceiling-wall boundaries. We use a batch size of 6 and train for 14 epochs.

Super-Resolution in Post-Processing.

Due to limited computing resources, we run our methods at a low resolution, and enhance the resolution in post-processing for applications requiring high-resolution results. We upsample the specular image via a pre-trained super-resolution model (Wang et al., 2021b). To ensure consistency between the diffuse image and the original image, we adopt the Guided Deep Decoder (Uezato et al., 2020) for super-resolution, with the original image as guidance. For the ambient image, we simply apply super-resolution (Wang et al., 2021b) and copy-paste the inpainted region onto the high-resolution diffuse image. To preserve high-frequency details, we compute the high-resolution sunlight image as the difference between the diffuse and ambient components.
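The final compositing step can be sketched per pixel, assuming the super-resolved inpainted image, the high-resolution diffuse image, and a boolean mask of the inpainted (sunlit) region are already aligned at the same resolution. The function name and the mask input are illustrative assumptions; the super-resolution models are not shown.

```python
def compose_ambient_and_sunlight(diffuse_hr, inpainted_hr, sun_mask_hr):
    """Paste inpainted pixels into the high-resolution diffuse image to form
    the ambient image, then take sunlight = diffuse - ambient."""
    ambient = [[ip if m else d for d, ip, m in zip(dr, ir, mr)]
               for dr, ir, mr in zip(diffuse_hr, inpainted_hr, sun_mask_hr)]
    sunlight = [[d - a for d, a in zip(dr, ar)]
                for dr, ar in zip(diffuse_hr, ambient)]
    return ambient, sunlight
```

Because the ambient image equals the diffuse image outside the pasted region, the sunlight image is exactly zero there, and inside the region it keeps the full-resolution detail of the diffuse image.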

Deep Architecture and Hyper-parameters.

For semantic transfer learning, we use a batch size of 4 and 5 epochs, with random perspective crops with FoV 80° to 120°, elevation -60° to 60°, and azimuth 0° to 360°. We use U-Net (Ronneberger et al., 2015) for the Lighting Effects Network and the Diffuse Network, and FCN (Long et al., 2015) for the discriminators, all optimized with Adam (Kingma and Ba, 2015). See the supplementary materials for the network architectures. The networks use a batch size of 16, with fixed learning rate, weight decay, and loss weights for effects detection, specular sparsity, sunlight sparsity, and specular reflection removal. Training takes 2 days on 4 TITAN Xp GPUs, and inference takes 0.05 s. Resolution enhancement using the Guided Deep Decoder (Uezato et al., 2020) converges in 30 min for a single image.

Dataset.

We evaluate our approach on the ZInD (Cruz et al., 2021) dataset, which includes 54,034 panoramas for training and 13,414 panoramas for testing (we merge its validation and test sets). For better visualization, we inpaint the tripod using SESAME (Ntavelis et al., 2020). Sec. 6.2 and 6.3 evaluate our approach at the low working resolution, while Sec. 6.4 visualizes results at the enhanced resolution.

6.2. Ablation Study

Perspective Merging vs. Panoramic Semantics.

We compare against merging perspective semantics into the panorama, by sampling 14 perspective views and merging their semantics via per-pixel voting. On the Structured3D (Zheng et al., 2020) test set (mapped to our labels), the merging method achieves an mIoU of 44.8%, while ours achieves 68.1%. This shows that operating directly on panoramas is worthwhile. We also test our method at different emptiness levels, obtaining an mIoU of 68.1% with no furniture, 66.6% with some furniture, and 60.6% with full furniture. Since ZInD, on which we train, contains little furniture, this mIoU reduction is expected.
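The voting step of this merging baseline can be sketched as below. We assume each perspective view's labels have already been warped back to panorama pixels, so every panorama pixel holds the list of labels it received; the warping, the unknown-label value, and the function name are hypothetical. Ties are broken by the first label encountered, which is how `collections.Counter.most_common` orders equal counts.

```python
from collections import Counter

def merge_by_voting(votes_per_pixel, unknown=-1):
    """Per-pixel majority vote over labels from overlapping perspective views.

    votes_per_pixel: one list of labels per panorama pixel.
    Pixels covered by no view receive the `unknown` label.
    """
    merged = []
    for votes in votes_per_pixel:
        merged.append(Counter(votes).most_common(1)[0][0] if votes else unknown)
    return merged
```

Inconsistent predictions in overlapping regions and uncovered pixels, which this voting cannot resolve, are among the problems that motivate predicting semantics directly on the panorama.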

Lighting Effects Detection.

We manually annotate specular and sunlight regions on 1,000 panoramas sampled from the test set for quantitative analysis. The annotation is coarse because specular regions have no clear boundary. For a fair comparison, we report the mean IoU of each method at its best binarization threshold. The quantitative evaluation is performed on the floor region only.
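The best-threshold protocol can be sketched as follows: one threshold is shared across all images of a method, the IoU is computed only inside the valid (floor) region, and the threshold maximizing the mean IoU is reported. Flattened per-image lists, the candidate threshold grid, and scoring an image with an empty union as 1.0 are illustrative assumptions.

```python
def iou(pred, gt, valid):
    """IoU of two flat binary maps, counting only pixels where valid is True."""
    inter = union = 0
    for p, g, v in zip(pred, gt, valid):
        if not v:
            continue
        if p and g:
            inter += 1
        if p or g:
            union += 1
    return inter / union if union else 1.0   # assumption: empty vs. empty is perfect

def best_threshold_miou(scores, gts, valids, thresholds):
    """Mean IoU over images at the single threshold that maximizes it.

    scores: per-image flat lists of soft predictions.
    gts, valids: per-image flat lists of booleans (annotation, floor region).
    Returns (best mean IoU, best threshold).
    """
    best = (-1.0, None)
    for t in thresholds:
        ious = [iou([s >= t for s in sc], gt, va)
                for sc, gt, va in zip(scores, gts, valids)]
        m = sum(ious) / len(ious)
        if m > best[0]:
            best = (m, t)
    return best
```

Choosing the threshold per method, rather than fixing one, avoids penalizing methods whose outputs live on different intensity scales.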

To show that the GAN-based method introduces priors that refine the coarse mask, we train a network with the same architecture as the Lighting Effects Network in a standard supervised manner (without GAN), using the coarse mask as labels. We also compare with the coarse mask itself and with the method without the third discriminator for overlap regions. Tab. 1 shows that the full method outperforms the others, demonstrating the effectiveness of our GAN-based approach with three discriminators.

Specular Reflection Removal.

We use synthetic data to evaluate specular reflection removal. We render 1,000 images based on a microfacet reflectance model using the Mitsuba (Jakob, 2010) renderer with floor textures from AdobeStock. We also define the images whose peak specular intensity ranks in the top 5% of the test data as "strong reflection". PSNR (higher is better) and LPIPS (Zhang et al., 2018a) (lower is better) are reported.

We compare with the naive method that subtracts the specular component from the original image directly, and with variants that omit individual loss components. In Tab. 2, the full method outperforms the other variants on all metrics except the variants without the adversarial loss. Although the variants without the adversarial loss achieve high scores on "all test data", they perform worse than the full method on "strong reflection". Moreover, as shown in Fig. 9, the adversarial and exclusion losses help remove perceivable visual artifacts and specular residues, even though the average metrics do not clearly reflect this. Thus, we adopt the full method with all four losses for the applications.

Tab. 1. Lighting effects detection: mean IoU (%) on the floor region.

| Method | Specular mIoU (%) | Sunlight mIoU (%) |
|---|---|---|
| Coarse mask | 6.7 | 3.0 |
| Supervised by coarse mask w/o GAN | 8.7 | 19.9 |
| No overlap effects discriminator | 34.6 | 30.0 |
| Our full method | 38.9 | 47.2 |

Tab. 2. Ablation of the specular reflection removal losses on synthetic data (PSNR: higher is better; LPIPS: lower is better).

| Method | All test data: PSNR (dB) | All test data: LPIPS | Strong reflection: PSNR (dB) | Strong reflection: LPIPS |
|---|---|---|---|---|
| Input subtracts specular image | 33.2 | 0.0247 | 25.0 | 0.0702 |
| No adversarial loss | 33.4 | 0.0233 | 25.2 | 0.0681 |
| No reconstruction loss | 33.2 | 0.0248 | 25.1 | 0.0693 |
| No perceptual loss | 31.3 | 0.0483 | 25.0 | 0.0903 |
| No exclusion loss | 33.3 | 0.0242 | 25.2 | 0.0693 |
| No adversarial/reconstruction | 33.4 | 0.0232 | 25.1 | 0.0683 |
| No adversarial/perceptual | 33.4 | 0.0270 | 25.3 | 0.0749 |
| No adversarial/exclusion | 33.3 | 0.0233 | 25.1 | 0.0684 |
| No reconstruction/perceptual | 14.4 | 0.3595 | 12.7 | 0.3407 |
| No reconstruction/exclusion | 33.2 | 0.0242 | 25.2 | 0.0700 |
| No perceptual/exclusion | 32.4 | 0.0333 | 25.1 | 0.0800 |
| Our full method | 33.3 | 0.0241 | 25.3 | 0.0676 |

6.3. Performances of Other Relevant Approaches

Most previous approaches for diffuse-specular separation and inverse rendering were not designed for our setting, so direct apples-to-apples comparisons are not possible: they require perspective views or supervised (ground truth) training on large-scale real or rendered data, or they address a related task. Yet accurately annotating large datasets for specular and sunlight effects is non-trivial, because of blurred boundaries and complex textures. The visual and quantitative evaluations below show that previous approaches do not easily bridge the domain gap. These methods have strong value in the domains they were designed for, but our approach provides an effective tool for virtual staging with real-world panoramas.

Specular Reflection Detection.

We evaluate a bilateral-filtering-based method, BF (Yang et al., 2010), two deep reflection removal methods, IBCLN (Li et al., 2020b) and LASIRR (Dong et al., 2021), and a deep highlight removal method, JSHDR (Fu et al., 2021). Since these methods are designed for perspective images, we randomly sample 5,000 perspective crops from the 1,000 annotated panoramic images and report their performance in the left column of Tab. 3. In the right column, we also evaluate in the panoramic domain by converting each panorama to a cubemap, running the baseline methods on the cube faces, and merging the results back into a panorama. Among the evaluated methods, JSHDR (Fu et al., 2021) performs best, likely because it is designed for specular highlight removal, which is closer to our setting than glass reflection removal.
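The cubemap round trip used for the panoramic-domain evaluation can be sketched as follows. The axis conventions (y up, z forward, longitude increasing left to right) and the face names are assumptions for illustration; the paper does not specify them. `merge_faces` (a hypothetical helper) performs the "merging back" step: for every panorama pixel it computes the viewing direction, finds the cube face hit by that direction, and copies the processed face pixel (nearest neighbour; bilinear sampling would be smoother).

```python
import math

def equirect_to_direction(u, v, width, height):
    """Unit viewing direction for pixel (u, v) of an equirectangular panorama.
    Assumed convention: y is up, z is forward, longitude grows left to right."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi
    return (math.cos(lat) * math.sin(lon), math.sin(lat), math.cos(lat) * math.cos(lon))

def direction_to_cube_face(x, y, z):
    """Return (face, s, t): the cube face hit by the direction and the
    normalized in-face coordinates s, t in [0, 1]."""
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax >= ay and ax >= az:      # dominant x axis
        face, sc, tc, ma = ("+x" if x > 0 else "-x"), (-z if x > 0 else z), -y, ax
    elif ay >= az:                 # dominant y axis
        face, sc, tc, ma = ("+y" if y > 0 else "-y"), x, (z if y > 0 else -z), ay
    else:                          # dominant z axis
        face, sc, tc, ma = ("+z" if z > 0 else "-z"), (x if z > 0 else -x), -y, az
    # Project onto the face plane (coordinates in [-1, 1]) and rescale to [0, 1].
    return face, 0.5 * (sc / ma + 1.0), 0.5 * (tc / ma + 1.0)

def merge_faces(faces, width, height):
    """Resample processed cube faces back into a width x height panorama.
    `faces` maps each face name to a square 2D list (rows of pixels)."""
    pano = []
    for v in range(height):
        row = []
        for u in range(width):
            face, s, t = direction_to_cube_face(*equirect_to_direction(u, v, width, height))
            img = faces[face]
            n = len(img)
            row.append(img[min(int(t * n), n - 1)][min(int(s * n), n - 1)])
        pano.append(row)
    return pano
```

The forward direction (cubemap extraction) uses the same two functions in reverse: for each face pixel, one computes its direction and samples the panorama at the corresponding longitude and latitude.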

| Evaluation domain | Method | All testdata PSNR (dB) ↑ | All testdata LPIPS ↓ | Strong reflection PSNR (dB) ↑ | Strong reflection LPIPS ↓ |
|---|---|---|---|---|---|
| Perspective | BF (2010) | 23.4 | 0.1632 | 17.7 | 0.2326 |
| | IBCLN (2020b) | 27.5 | 0.1013 | 23.7 | 0.1460 |
| | LASIRR (2021) | 20.8 | 0.1984 | 17.5 | 0.2515 |
| | JSHDR (2021) | 32.0 | 0.0354 | 24.1 | 0.1158 |
| | IBCLN (2020b) (re-train) | 33.4 | 0.0279 | 25.3 | 0.1016 |
| | Ours | 34.5 | 0.0271 | 27.0 | 0.0935 |
| Panoramic | BF (2010) | 22.1 | 0.1526 | 16.5 | 0.1934 |
| | IBCLN (2020b) | 28.8 | 0.0890 | 24.2 | 0.1418 |
| | LASIRR (2021) | 23.0 | 0.1425 | 20.1 | 0.1788 |
| | JSHDR (2021) | 30.6 | 0.0698 | 22.5 | 0.1499 |
| | IBCLN (2020b) (re-train) | 32.3 | 0.0250 | 24.7 | 0.0743 |
| | Ours | 33.3 | 0.0241 | 25.3 | 0.0676 |

Direct Sunlight Detection.

We are unaware of any work estimating direct sunlight in our setting. We therefore evaluate the intrinsic image decomposition method USI3D (Liu et al., 2020). Assuming that sunlit regions have strong shading intensity, we threshold its output shading image to obtain the sunlight prediction. This achieves a mean IoU of 7.4% on perspective crops and 7.9% on panoramas (via cubemap merging), whereas our method achieves 47.2% on perspective crops and 47.2% on panoramas. We conclude that using semantics and the sun direction is important for sunlight estimation.
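The thresholding baseline and its evaluation can be sketched as below. The threshold value is not given in the text, so it is left as a parameter, and averaging IoU per image (rather than, say, over all pixels of the dataset) is an assumption about how "mean IoU" is computed here.

```python
def threshold_shading(shading, thresh):
    """Binary sunlight prediction: True where the shading exceeds `thresh`."""
    return [[value > thresh for value in row] for row in shading]

def iou(pred, gt):
    """Intersection over union of two same-sized binary masks (2D lists).
    Two empty masks are treated as a perfect match."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += int(p and g)
            union += int(p or g)
    return inter / union if union else 1.0

def mean_iou(preds, gts):
    """Average of per-image IoU values over a dataset."""
    scores = [iou(p, g) for p, g in zip(preds, gts)]
    return sum(scores) / len(scores)
```

For example, shading `[[0.2, 0.9], [0.8, 0.1]]` thresholded at 0.5 predicts two sunlit pixels; against a ground truth with only the first of them sunlit, the IoU is 1/2.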

Specular Reflection Removal.

Similar to lighting-effect detection, we report performance on 5,000 perspective crops sampled from the 1,000 synthetic panoramic images. Fig. 10 and the first four rows of Tab. 4 show the performance of pre-trained models on perspective images. As with specular reflection detection, JSHDR (Fu et al., 2021) achieves the best performance, likely because of a smaller domain gap, since it is trained on a large-scale real dataset. A similar conclusion can be drawn from the evaluation in the panoramic domain. We also attempted to bridge the domain gap for a fairer quantitative evaluation. For JSHDR, only compiled code is available online, which makes it hard to adapt to our domain. Thus, we re-train IBCLN on our panoramic data. Specifically, let be the set of original images and be the set of estimated specular components. We treat and as the transmission image set and the reflection image set, respectively. These two sets are then used to render synthetic images for training. Tab. 4 Rows 5 and 11 show that re-training on synthetic data derived from our specular reflection estimates improves IBCLN, but it still does not reach the level of our method.
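Rendering training samples from a transmission set and a reflection set can be sketched as follows. This is only an illustration under a simple additive image-formation model with a random reflection gain; IBCLN's own data-synthesis pipeline is not reproduced, and `compose`, `make_training_triplets`, and the gain range are hypothetical.

```python
import random

def compose(transmission, reflection):
    """Additive image formation: mixture = transmission + reflection,
    clipped to [0, 1]. Images are flat lists of floats."""
    return [min(1.0, t + r) for t, r in zip(transmission, reflection)]

def make_training_triplets(transmissions, reflections, n, gain_range=(0.5, 1.0), seed=0):
    """Randomly pair a transmission image with a reflection image, scale the
    reflection by a random gain, and return (mixture, transmission, reflection)
    triplets usable as supervised training samples."""
    rng = random.Random(seed)
    triplets = []
    for _ in range(n):
        t = rng.choice(transmissions)
        gain = rng.uniform(*gain_range)
        r = [gain * v for v in rng.choice(reflections)]
        triplets.append((compose(t, r), t, r))
    return triplets
```

Returning the scaled reflection keeps each triplet consistent with its mixture, except where clipping saturates the sum.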

6.4. Diverse Lighting and High Resolution Results

Fig. 12 visualizes lighting-effect detection results under diverse lighting conditions, including large sunlit areas and (partially) occluded light sources. The results show that our detection handles such cases.

Fig. 11 visualizes high-resolution appearance decomposition results. The specular reflections are well removed and the floor texture is consistent with the original image. The sunlight region is inpainted with the correct colors, although, owing to limitations of the inpainting algorithm, the texture is not perfect. As inpainting algorithms improve, they can be plugged into our pipeline.

7. Applications

Improvement of Albedo Estimation.

Albedo estimation via intrinsic image decomposition suffers from the presence of specular reflection and direct sunlight. As shown in Fig. 13 (b), a pre-trained USI3D (Liu et al., 2020) incorrectly bakes specular reflection and direct sunlight into the albedo component. When we run the same algorithm on the ambient image after removing the lighting effects, the albedo estimate (Fig. 13 (c)) is significantly better.
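One way to see why these lighting effects corrupt the albedo: under a simplified image-formation model (an assumption for illustration, not USI3D's exact formulation) in which a Lambertian term is augmented by an additive specular term, dividing out the shading leaves the specular energy in the albedo estimate:

$$
I = A \odot S + I_{\mathrm{spec}}, \qquad \hat{A} = I \oslash S = A + I_{\mathrm{spec}} \oslash S,
$$

where $\odot$ and $\oslash$ denote element-wise multiplication and division. Removing $I_{\mathrm{spec}}$ before decomposition removes the error term; the same argument applies to direct sunlight whenever the estimated shading $S$ fails to account for it.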

Improvement of Sun Direction Estimation.

With the detected direct sunlight, we can improve the sun direction estimation proposed in Sec. 3.2 with two modifications: (1) instead of the RGB image, we use the direct sunlight image for matching; (2) sunlight on the walls is also considered. Previously, we did not consider walls for coarse sun direction estimation because walls are usually white, which may mislead the matching score.

To evaluate the sun direction, we obtained sun elevation angles for part of the dataset from the authors of ZInD (Cruz et al., 2021). The elevation angles are calculated from timestamps and geolocations. Ground-truth azimuth angles are not available because the camera orientations are unknown. The provided elevation angles may not always be accurate because the timestamps correspond to the time the data were uploaded to the cloud. Thus, we manually filtered out obviously incorrect values and selected images with visible sunlight for evaluation, for a total of 257 images.
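The exact procedure used to derive elevation angles from timestamps and geolocations is not described here. As a rough sketch of such a computation, the function below (hypothetical name `solar_elevation`) uses Cooper's declination approximation and local mean solar time, ignoring the equation of time (up to about 16 minutes, i.e. about 4° of hour angle) and atmospheric refraction, so errors of a degree or more should be expected.

```python
import math

def solar_elevation(day_of_year, utc_hours, lat_deg, lon_deg):
    """Approximate solar elevation angle in degrees (east longitude positive).

    Cooper's declination formula; ignores the equation of time and refraction.
    """
    decl = math.radians(23.45) * math.sin(2.0 * math.pi * (284 + day_of_year) / 365.0)
    solar_time = utc_hours + lon_deg / 15.0           # local mean solar time (hours)
    hour_angle = math.radians(15.0 * (solar_time - 12.0))
    lat = math.radians(lat_deg)
    sin_el = (math.sin(lat) * math.sin(decl)
              + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_el))))
```

The sketch also shows why upload-time timestamps are problematic: every hour of timestamp error shifts the hour angle by 15°, which can change the computed elevation substantially.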

We search for the top-1 direction with a step size of 1°. Fig. 14 plots the histogram of the angular estimation error. More than half of the estimates are within 10° of the ground truth. Fig. 14 also visualizes an example of projecting the window mask according to the estimated sun direction. The refined estimate is more accurate than the coarse sun direction.
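The exhaustive search can be sketched as follows. Several pieces are assumptions for illustration: the window is represented by 3D sample points (known from the layout, with the floor at z = 0), the detected sunlit floor region is rasterized into grid cells of size `cell`, and IoU stands in for the matching score of Sec. 3.2, which is not reproduced in this section. Wall sunlight is not handled.

```python
import math

def sun_vector(azimuth_deg, elevation_deg):
    """Unit vector pointing toward the sun; z is up."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))

def project_to_floor(points, sun, cell):
    """Cast each 3D window sample along the light direction (-sun) onto the
    floor plane z = 0 and return the set of floor grid cells that are hit."""
    sx, sy, sz = sun
    cells = set()
    for px, py, pz in points:
        t = pz / sz  # distance along -sun needed to descend from height pz to 0
        cells.add((math.floor((px - t * sx) / cell), math.floor((py - t * sy) / cell)))
    return cells

def estimate_sun_direction(window_points, sunlit_cells, cell=0.1, step=1):
    """Exhaustive search over azimuth [0, 360) and elevation (0, 90) degrees.
    Returns the (azimuth, elevation) whose projected window mask has the
    highest IoU with the observed sunlit floor cells, plus that IoU."""
    best, best_score = None, -1.0
    for az in range(0, 360, step):
        for el in range(step, 90, step):
            proj = project_to_floor(window_points, sun_vector(az, el), cell)
            union = proj | sunlit_cells
            score = len(proj & sunlit_cells) / len(union) if union else 0.0
            if score > best_score:
                best, best_score = (az, el), score
    return best, best_score
```

With `step=1` this matches the 1° resolution mentioned above; the cost grows as 1/step², so a coarse-to-fine search is a natural speed-up.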

Changing Floor Material.

The decomposition is used to change the flooring with a new texture and BRDF parameters, for example switching between wood (specular), carpet (diffuse), and tile (specular). Fig. 7 shows four examples: wood-to-wood (specularity kept), wood-to-carpet (specularity removed), carpet-to-wood (specularity rendered via the HDR map), and carpet-to-carpet. Direct sunlight is rendered on the new material by scaling the floor intensity according to the estimated sunlight scale.
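How the sunlight scale is estimated is not spelled out in this section. A minimal sketch, assuming it is the ratio of mean floor intensity inside and outside the detected sunlit mask in the original image, and that this ratio carries over multiplicatively to the new material (a Lambertian-style assumption), could look like this; both function names are hypothetical.

```python
def estimate_sun_scale(floor, sun_mask):
    """Ratio of mean floor intensity inside vs. outside the sunlit mask.
    `floor` is a flat list of intensities, `sun_mask` a matching list of bools."""
    lit = [p for p, m in zip(floor, sun_mask) if m]
    shade = [p for p, m in zip(floor, sun_mask) if not m]
    if not lit or not shade:
        return 1.0  # nothing to compare against
    lit_mean = sum(lit) / len(lit)
    shade_mean = sum(shade) / len(shade)
    return lit_mean / shade_mean if shade_mean > 0 else 1.0

def apply_sunlight(new_floor, sun_mask, scale):
    """Brighten the replacement floor inside the sunlit mask by `scale`,
    clipping to [0, 1]; pixels outside the mask are unchanged."""
    return [min(1.0, p * scale) if m else p for p, m in zip(new_floor, sun_mask)]
```

For an original floor whose sunlit pixels are three times brighter than its shaded pixels, a uniform replacement texture is brightened by the same factor inside the mask.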

Changing Sun Direction.

Using the estimated sun direction, we project the sunlit region back to the window position. Then, we re-project sunlight onto the floor and the walls using a new sun direction. Fig. 16 visualizes two examples of changing sun direction.
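The geometric core of this step can be sketched as follows, assuming the window lies on the wall plane x = `wall_x` with the room on the positive-x side and the floor at z = 0 (illustrative assumptions, as are the function names). Each sunlit floor point is traced toward the old sun until it reaches the window wall, then cast onto the floor along the new sun direction; transferring pixel colors and handling wall sunlight are omitted.

```python
import math

def sun_vector(azimuth_deg, elevation_deg):
    """Unit vector pointing toward the sun; z is up."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))

def back_project_to_window(q, sun, wall_x=0.0):
    """Trace floor point q = (x, y) toward the sun until it meets the window
    wall plane x = wall_x; return the 3D point on that wall."""
    qx, qy = q
    sx, sy, sz = sun
    if abs(sx) < 1e-12:
        raise ValueError("sun direction is parallel to the window wall")
    t = (wall_x - qx) / sx  # ray parameter along +sun
    return (wall_x, qy + t * sy, t * sz)

def cast_to_floor(p, sun):
    """Cast 3D point p along the light direction (-sun) onto the floor z = 0."""
    px, py, pz = p
    sx, sy, sz = sun
    t = pz / sz
    return (px - t * sx, py - t * sy)

def move_sunlit_patch(floor_points, old_sun, new_sun, wall_x=0.0):
    """Re-cast sunlit floor points under a new sun direction via the window."""
    return [cast_to_floor(back_project_to_window(q, old_sun, wall_x), new_sun)
            for q in floor_points]
```

Raising the sun elevation moves the sunlit patch toward the window wall, as expected.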

Furniture Insertion.

With the decomposition result, the sun direction and the estimated HDR map (Sec. 6.1), we can insert virtual objects into an empty room by computing the occluded specular reflection and direct sunlight via ray tracing. Using the computed layout (with the scale set manually), we render the ambient, sunlight and specular effects separately and combine them. Fig. 8 shows several insertion examples, where sunlight is cast on the desk (a) and the bed (b&c), specular reflection is blocked by the chair (a) and the bedside table (c), and sunlight is blocked by the clothing hanger (b) and the bed (c).
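The sunlight-occlusion test behind these effects can be sketched as follows, assuming inserted furniture is approximated by axis-aligned bounding boxes and that the three rendered layers are combined additively; both are simplifications for illustration, since the actual renderer and combination rule are not described here. `ray_hits_box` is the standard slab test, and `shade_pixel` and its `specular_visible` flag are hypothetical (a real specular-occlusion test would trace the mirrored camera ray instead).

```python
import math

def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t * direction, t >= 0, intersect the
    axis-aligned box [box_min, box_max]?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return False  # ray parallel to this slab and outside it
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False
    return True

def shade_pixel(ambient, sunlight, specular, point, sun, boxes, specular_visible=True):
    """Recombine separately rendered layers at one floor point: the sunlight
    layer is dropped if any inserted box blocks the ray toward the sun."""
    sun_blocked = any(ray_hits_box(point, sun, lo, hi) for lo, hi in boxes)
    value = ambient + (0.0 if sun_blocked else sunlight)
    if specular_visible:
        value += specular
    return min(1.0, value)
```

Keeping the layers separate is what makes the occlusion cheap: only the sunlight (or specular) term of an affected pixel is removed, and the ambient term is left untouched.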

Fig. 9 compares scenes rendered with Li et al. (Li et al., 2020a), rendered with the estimated HDR map but without appearance decomposition, and rendered with our full method. Li et al. (Li et al., 2020a) train on synthetic data with ground-truth supervision and predict illumination and scene properties for a perspective view. The method estimates spatially-varying lighting for each pixel rather than for each 3D voxel, and the lighting at a single selected pixel is used for rendering. This fails when parts of the object are far from the selected pixel in 3D (e.g., first row of Fig. 9). Moreover, the method renders shadows in the specular region by scaling the original floor intensity without explicitly removing the blocked specular reflection, leading to insufficient darkening of the floor (e.g., second row).

Combination of Multiple Applications.

The applications can be combined to allow more flexible virtual staging effects. Fig. 8 shows high-quality visualizations that include two or more of changing floor material, sun direction and object insertion.

8. Conclusion

In summary, we present an appearance decomposition method for empty indoor panoramic scenes. Relying on supervision from semantics, our method detects and removes specular reflection on the floor and direct sunlight on the floor and the walls. The decomposition result can be applied to multiple virtual staging tasks, including albedo and sun direction estimation, furniture insertion, and changing the sun direction and floor material.

There are three main limitations. First, we focus on direct lighting effects (first light bounce, no global illumination) on the floor and the walls. However, specular reflection and direct sunlight can also appear on objects such as kitchen countertops or appliances. Fig. 10 shows that our method partially detects the sunlight in such cases, but the sun direction and object lighting are erroneous because the surface height is unknown. This could potentially be solved by extending our semantics and geometry to include furniture or other objects, as long as coarse masks and geometry can be obtained automatically to allow accurate ray tracing. Second, we assume that the window producing the sunlight is visible for sun direction estimation. Using multi-view information from different panoramas of the same home can help overcome this limitation. Third, our approach is tailored to panoramas. It is possible to extend our ideas to narrower perspective fields of view, but there are several alternative methods in that space and we would lose the advantages provided by a panorama. Given the increasing availability of panoramas of homes, we believe the method is timely and can be an effective tool for virtual staging.

Acknowledgements.

This work was supported by a gift from Zillow Group, USA, and NSF Grants #CNS-2038612, #IIS-1900821.

References


- Aittala et al. (2015) Miika Aittala, Tim Weyrich, and Jaakko Lehtinen. 2015. Two-shot SVBRDF Capture for Stationary Materials. ACM Trans. Graph. (Proc. SIGGRAPH) 34, 4, Article 110 (July 2015), 13 pages. https://doi.org/10.1145/2766967

- Akashi and Okatani (2016) Yasushi Akashi and Takayuki Okatani. 2016. Separation of reflection components by sparse non-negative matrix factorization. Computer Vision and Image Understanding 100, 146 (2016), 77–85.

- Arvanitopoulos et al. (2017) Nikolaos Arvanitopoulos, Radhakrishna Achanta, and Sabine Susstrunk. 2017. Single image reflection suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4498–4506.

- Barron and Malik (2015) Jonathan Barron and Jitendra Malik. 2015. Shape, Illumination, and Reflectance from Shading. IEEE Transactions on Pattern Analysis and Machine Intelligence 37, 8 (2015), 1670–1687. https://doi.org/10.1109/TPAMI.2014.2377712

- Baslamisli et al. (2021) Anil S Baslamisli, Partha Das, Hoang-An Le, Sezer Karaoglu, and Theo Gevers. 2021. ShadingNet: image intrinsics by fine-grained shading decomposition. International Journal of Computer Vision (2021), 1–29.

- Bell et al. (2014) Sean Bell, Kavita Bala, and Noah Snavely. 2014. Intrinsic images in the wild. ACM Transactions on Graphics (TOG) 33, 4 (2014), 1–12.

- Boss et al. (2021a) Mark Boss, Raphael Braun, Varun Jampani, Jonathan T Barron, Ce Liu, and Hendrik Lensch. 2021a. NeRD: Neural reflectance decomposition from image collections. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 12684–12694.

- Boss et al. (2021b) Mark Boss, Varun Jampani, Raphael Braun, Ce Liu, Jonathan Barron, and Hendrik Lensch. 2021b. Neural-PIL: Neural pre-integrated lighting for reflectance decomposition. Advances in Neural Information Processing Systems 34 (2021).

- Boss et al. (2020) Mark Boss, Varun Jampani, Kihwan Kim, Hendrik Lensch, and Jan Kautz. 2020. Two-shot spatially-varying BRDF and shape estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3982–3991.

- Chen et al. (2017) Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. 2017. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence 40, 4 (2017), 834–848.

- Chen et al. (2021) Zhe Chen, Shohei Nobuhara, and Ko Nishino. 2021. Invertible neural BRDF for object inverse rendering. IEEE Transactions on Pattern Analysis and Machine Intelligence (2021).

- Cruz et al. (2021) Steve Cruz, Will Hutchcroft, Yuguang Li, Naji Khosravan, Ivaylo Boyadzhiev, and Sing Bing Kang. 2021. Zillow Indoor Dataset: Annotated Floor Plans With Panoramas and 3D Room Layouts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition.

- Deschaintre et al. (2018) Valentin Deschaintre, Miika Aittala, Fredo Durand, George Drettakis, and Adrien Bousseau. 2018. Single-image SVBRDF capture with a rendering-aware deep network. ACM Transactions on Graphics (TOG) 37, 4 (2018), 1–15.

- Deschaintre et al. (2019) Valentin Deschaintre, Miika Aittala, Frédo Durand, George Drettakis, and Adrien Bousseau. 2019. Flexible SVBRDF capture with a multi-image deep network. In Computer Graphics Forum, Vol. 38. Wiley Online Library, 1–13.

- Dong et al. (2021) Zheng Dong, Ke Xu, Yin Yang, Hujun Bao, Weiwei Xu, and Rynson WH Lau. 2021. Location-aware single image reflection removal. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 5017–5026.

- Eilertsen et al. (2017) Gabriel Eilertsen, Joel Kronander, Gyorgy Denes, Rafał K Mantiuk, and Jonas Unger. 2017. HDR image reconstruction from a single exposure using deep CNNs. ACM Transactions on Graphics (TOG) 36, 6 (2017), 1–15.

- Fan et al. (2017) Qingnan Fan, Jiaolong Yang, Gang Hua, Baoquan Chen, and David Wipf. 2017. A generic deep architecture for single image reflection removal and image smoothing. In Proceedings of the IEEE International Conference on Computer Vision. 3238–3247.

- Fu et al. (2021) Gang Fu, Qing Zhang, Lei Zhu, Ping Li, and Chunxia Xiao. 2021. A Multi-Task Network for Joint Specular Highlight Detection and Removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7752–7761.

- Gao et al. (2019) Duan Gao, Xiao Li, Yue Dong, Pieter Peers, Kun Xu, and Xin Tong. 2019. Deep inverse rendering for high-resolution SVBRDF estimation from an arbitrary number of images. ACM Trans. Graph. 38, 4 (2019), Article 134.

- Gardner et al. (2019) Marc-André Gardner, Yannick Hold-Geoffroy, Kalyan Sunkavalli, Christian Gagné, and Jean-Francois Lalonde. 2019. Deep parametric indoor lighting estimation. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 7175–7183.

- Gardner et al. (2017) Marc-André Gardner, Kalyan Sunkavalli, Ersin Yumer, Xiaohui Shen, Emiliano Gambaretto, Christian Gagné, and Jean-François Lalonde. 2017. Learning to predict indoor illumination from a single image. ACM Transactions on Graphics (TOG) 36, 6 (2017), 1–14.

- Garon et al. (2019) Mathieu Garon, Kalyan Sunkavalli, Sunil Hadap, Nathan Carr, and Jean-François Lalonde. 2019. Fast spatially-varying indoor lighting estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6908–6917.

- Georgoulis et al. (2017) Stamatios Georgoulis, Konstantinos Rematas, Tobias Ritschel, Efstratios Gavves, Mario Fritz, Luc Van Gool, and Tinne Tuytelaars. 2017. Reflectance and natural illumination from single-material specular objects using deep learning. IEEE Transactions on Pattern Analysis and Machine Intelligence 40, 8 (2017), 1932–1947.

- Gkitsas et al. (2020) Vasileios Gkitsas, Nikolaos Zioulis, Federico Alvarez, Dimitrios Zarpalas, and Petros Daras. 2020. Deep lighting environment map estimation from spherical panoramas. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops. 640–641.

- Grosse et al. (2009) Roger Grosse, Micah K Johnson, Edward H Adelson, and William T Freeman. 2009. Ground truth dataset and baseline evaluations for intrinsic image algorithms. In 2009 IEEE 12th International Conference on Computer Vision. IEEE, 2335–2342.

- Guo et al. (2018) Jie Guo, Zuojian Zhou, and Limin Wang. 2018. Single image highlight removal with a sparse and low-rank reflection model. In Proceedings of the European Conference on Computer Vision (ECCV). 268–283.

- Hold-Geoffroy et al. (2019) Yannick Hold-Geoffroy, Akshaya Athawale, and Jean-François Lalonde. 2019. Deep sky modeling for single image outdoor lighting estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 6927–6935.

- Hold-Geoffroy et al. (2017) Yannick Hold-Geoffroy, Kalyan Sunkavalli, Sunil Hadap, Emiliano Gambaretto, and Jean-François Lalonde. 2017. Deep outdoor illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 7312–7321.

- Hong et al. (2021) Yuchen Hong, Qian Zheng, Lingran Zhao, Xudong Jiang, Alex C Kot, and Boxin Shi. 2021. Panoramic Image Reflection Removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7762–7771.

- Hu et al. (2022) Yiwei Hu, Chengan He, Valentin Deschaintre, Julie Dorsey, and Holly Rushmeier. 2022. An Inverse Procedural Modeling Pipeline for SVBRDF Maps. ACM Transactions on Graphics (TOG) 41, 2 (2022), 1–17.

- Jakob (2010) Wenzel Jakob. 2010. Mitsuba renderer. http://www.mitsuba-renderer.org.

- Janner et al. (2017) Michael Janner, Jiajun Wu, Tejas D Kulkarni, Ilker Yildirim, and Joshua B Tenenbaum. 2017. Self-supervised intrinsic image decomposition. In Proceedings of the 31st International Conference on Neural Information Processing Systems. 5938–5948.

- Jiddi et al. (2020) Salma Jiddi, Philippe Robert, and Eric Marchand. 2020. Detecting specular reflections and cast shadows to estimate reflectance and illumination of dynamic indoor scenes. IEEE Transactions on Visualization and Computer Graphics (2020).

- Johnson et al. (2016) Justin Johnson, Alexandre Alahi, and Li Fei-Fei. 2016. Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision. Springer, 694–711.

- Karsch et al. (2011) Kevin Karsch, Varsha Hedau, David Forsyth, and Derek Hoiem. 2011. Rendering Synthetic Objects into Legacy Photographs. ACM Transactions on Graphics 30, 6 (Dec. 2011), 1–12. https://doi.org/10.1145/2070781.2024191

- Karsch et al. (2014) Kevin Karsch, Kalyan Sunkavalli, Sunil Hadap, Nathan Carr, Hailin Jin, Rafael Fonte, Michael Sittig, and David Forsyth. 2014. Automatic scene inference for 3D object compositing. ACM Transactions on Graphics (TOG) 33, 3 (2014), 1–15.

- Kaya et al. (2021) Berk Kaya, Suryansh Kumar, Carlos Oliveira, Vittorio Ferrari, and Luc Van Gool. 2021. Uncalibrated Neural Inverse Rendering for Photometric Stereo of General Surfaces. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3804–3814.

- Kim et al. (2013) Hyeongwoo Kim, Hailin Jin, Sunil Hadap, and Inso Kweon. 2013. Specular reflection separation using dark channel prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1460–1467.

- Kim et al. (2017) Kihwan Kim, Jinwei Gu, S. Tyree, P. Molchanov, M. Nießner, and J. Kautz. 2017. A Lightweight Approach for On-the-Fly Reflectance Estimation. 2017 IEEE International Conference on Computer Vision (ICCV) (2017), 20–28.

- Kingma and Ba (2015) Diederik P Kingma and Jimmy Ba. 2015. Adam: A method for stochastic optimization. In ICLR.

- Lalonde et al. (2012) Jean-François Lalonde, Alexei A Efros, and Srinivasa G Narasimhan. 2012. Estimating the natural illumination conditions from a single outdoor image. International Journal of Computer Vision 98, 2 (2012), 123–145.

- Lalonde et al. (2010) Jean-François Lalonde, Srinivasa G Narasimhan, and Alexei A Efros. 2010. What do the sun and the sky tell us about the camera? International Journal of Computer Vision 88, 1 (2010), 24–51.

- Lambert et al. (2020) John Lambert, Zhuang Liu, Ozan Sener, James Hays, and Vladlen Koltun. 2020. MSeg: A Composite Dataset for Multi-domain Semantic Segmentation. In Computer Vision and Pattern Recognition (CVPR).

- Levin and Weiss (2007) Anat Levin and Yair Weiss. 2007. User assisted separation of reflections from a single image using a sparsity prior. IEEE Transactions on Pattern Analysis and Machine Intelligence 29, 9 (2007), 1647–1654.

- Li et al. (2020b) Chao Li, Yixiao Yang, Kun He, Stephen Lin, and John E Hopcroft. 2020b. Single image reflection removal through cascaded refinement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3565–3574.

- Li et al. (2021a) Junxuan Li, Hongdong Li, and Yasuyuki Matsushita. 2021a. Lighting, Reflectance and Geometry Estimation From 360° Panoramic Stereo. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10591–10600.

- Li and Brown (2014) Yu Li and Michael S Brown. 2014. Single image layer separation using relative smoothness. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2752–2759.

- Li et al. (2020a) Zhengqin Li, Mohammad Shafiei, Ravi Ramamoorthi, Kalyan Sunkavalli, and Manmohan Chandraker. 2020a. Inverse rendering for complex indoor scenes: Shape, spatially-varying lighting and SVBRDF from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2475–2484.

- Li and Snavely (2018a) Zhengqi Li and Noah Snavely. 2018a. CGIntrinsics: Better intrinsic image decomposition through physically-based rendering. In Proceedings of the European Conference on Computer Vision (ECCV). 371–387.

- Li and Snavely (2018b) Zhengqi Li and Noah Snavely. 2018b. Learning intrinsic image decomposition from watching the world. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 9039–9048.

- Li et al. (2018) Zhengqin Li, Kalyan Sunkavalli, and Manmohan Chandraker. 2018. Materials for Masses: SVBRDF Acquisition with a Single Mobile Phone Image. In Proceedings of the European Conference on Computer Vision (ECCV).

- Li et al. (2021b) Zhengqin Li, Ting-Wei Yu, Shen Sang, Sarah Wang, Sai Bi, Zexiang Xu, Hong-Xing Yu, Kalyan Sunkavalli, Miloš Hašan, Ravi Ramamoorthi, and Manmohan Chandraker. 2021b. OpenRooms: An End-to-End Open Framework for Photorealistic Indoor Scene Datasets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 7190–7199.

- Lin et al. (2019) John Lin, Mohamed El Amine Seddik, Mohamed Tamaazousti, Youssef Tamaazousti, and Adrien Bartoli. 2019. Deep multi-class adversarial specularity removal. In Scandinavian Conference on Image Analysis. Springer, 3–15.

- Liu et al. (2014) Yang Liu, Theo Gevers, and Xueqing Li. 2014. Estimation of sunlight direction using 3D object models. IEEE Transactions on Image Processing 24, 3 (2014), 932–942.

- Liu et al. (2020) Yunfei Liu, Yu Li, Shaodi You, and Feng Lu. 2020. Unsupervised learning for intrinsic image decomposition from a single image. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3248–3257.

- Long et al. (2015) Jonathan Long, Evan Shelhamer, and Trevor Darrell. 2015. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3431–3440.

- Mildenhall et al. (2020) Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. 2020. NeRF: Representing scenes as neural radiance fields for view synthesis. In European Conference on Computer Vision. Springer, 405–421.

- Ntavelis et al. (2020) Evangelos Ntavelis, Andrés Romero, Iason Kastanis, Luc Van Gool, and Radu Timofte. 2020. SESAME: semantic editing of scenes by adding, manipulating or erasing objects. In European Conference on Computer Vision. Springer, 394–411.

- Park et al. (2020) Jeong Joon Park, Aleksander Holynski, and Steven M Seitz. 2020. Seeing the World in a Bag of Chips. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1417–1427.

- Pintore et al. (2020) Giovanni Pintore, Marco Agus, and Enrico Gobbetti. 2020. AtlantaNet: Inferring the 3D Indoor Layout from a Single 360 Image Beyond the Manhattan World Assumption. In European Conference on Computer Vision. Springer, 432–448.

- Ronneberger et al. (2015) Olaf Ronneberger, Philipp Fischer, and Thomas Brox. 2015. U-Net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 234–241.

- Sengupta et al. (2019) S. Sengupta, Jinwei Gu, Kihwan Kim, Guilin Liu, D. Jacobs, and J. Kautz. 2019. Neural Inverse Rendering of an Indoor Scene From a Single Image. 2019 IEEE/CVF International Conference on Computer Vision (ICCV) (2019), 8597–8606.

- Sengupta et al. (2018) S. Sengupta, A. Kanazawa, Carlos D. Castillo, and D. Jacobs. 2018. SfSNet: Learning Shape, Reflectance and Illuminance of Faces ’in the Wild’. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (2018), 6296–6305.

- Shafer (1985) Steven A Shafer. 1985. Using color to separate reflection components. Color Research & Application 10, 4 (1985), 210–218.

- Shen and Zheng (2013) Hui-Liang Shen and Zhi-Huan Zheng. 2013. Real-time highlight removal using intensity ratio. Applied Optics 52, 19 (2013), 4483–4493.

- Shi et al. (2017) Jian Shi, Yue Dong, Hao Su, and Stella X Yu. 2017. Learning non-lambertian object intrinsics across shapenet categories. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1685–1694.

- Shih et al. (2015) YiChang Shih, Dilip Krishnan, Fredo Durand, and William T Freeman. 2015. Reflection removal using ghosting cues. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3193–3201.

- Shu et al. (2017) Z. Shu, Ersin Yumer, Sunil Hadap, Kalyan Sunkavalli, E. Shechtman, and D. Samaras. 2017. Neural Face Editing with Intrinsic Image Disentangling. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017), 5444–5453.

- Simonyan and Zisserman (2015) Karen Simonyan and Andrew Zisserman. 2015. Very deep convolutional networks for large-scale image recognition. In ICLR.

- Somanath and Kurz (2020) Gowri Somanath and Daniel Kurz. 2020. HDR Environment Map Estimation for Real-Time Augmented Reality. arXiv preprint arXiv:2011.10687 (2020).

- Srinivasan et al. (2020) Pratul P Srinivasan, Ben Mildenhall, Matthew Tancik, Jonathan T Barron, Richard Tucker, and Noah Snavely. 2020. Lighthouse: Predicting Lighting Volumes for Spatially-Coherent Illumination. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8080–8089.

- Su and Grauman (2017) Yu-Chuan Su and Kristen Grauman. 2017. Learning spherical convolution for fast features from 360 imagery. Advances in Neural Information Processing Systems 30 (2017), 529–539.

- Su and Grauman (2019) Yu-Chuan Su and Kristen Grauman. 2019. Kernel transformer networks for compact spherical convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9442–9451.

- Sun et al. (2019) Cheng Sun, Chi-Wei Hsiao, Min Sun, and Hwann-Tzong Chen. 2019. HorizonNet: Learning room layout with 1D representation and pano stretch data augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1047–1056.

- Swedish et al. (2021) Tristan Swedish, Connor Henley, and Ramesh Raskar. 2021. Objects As Cameras: Estimating High-Frequency Illumination From Shadows. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 2593–2602.

- Tan and Ikeuchi (2005) Robby T Tan and Katsushi Ikeuchi. 2005. Separating Reflection Components of Textured Surfaces Using a Single Image. IEEE Transactions on Pattern Analysis and Machine Intelligence 27, 2 (2005), 178–193.

- Tappen et al. (2003) Marshall Tappen, William Freeman, and Edward Adelson. 2003. Recovering Intrinsic Images from a Single Image. In Advances in Neural Information Processing Systems, S. Becker, S. Thrun, and K. Obermayer (Eds.), Vol. 15. MIT Press. https://proceedings.neurips.cc/paper/2002/file/fa2431bf9d65058fe34e9713e32d60e6-Paper.pdf

- Uezato et al. (2020) Tatsumi Uezato, Danfeng Hong, Naoto Yokoya, and Wei He. 2020. Guided deep decoder: Unsupervised image pair fusion. In European Conference on Computer Vision. Springer, 87–102.

- Wan et al. (2018) Renjie Wan, Boxin Shi, Ling-Yu Duan, Ah-Hwee Tan, and Alex C Kot. 2018. CRRN: Multi-scale guided concurrent reflection removal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4777–4785.

- Wan et al. (2016) Renjie Wan, Boxin Shi, Tan Ah Hwee, and Alex C Kot. 2016. Depth of field guided reflection removal. In 2016 IEEE International Conference on Image Processing (ICIP). IEEE, 21–25.

- Wang et al. (2021c) Fu-En Wang, Yu-Hsuan Yeh, Min Sun, Wei-Chen Chiu, and Yi-Hsuan Tsai. 2021c. LED2-Net: Monocular 360° Layout Estimation via Differentiable Depth Rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). 12956–12965.

- Wang et al. (2020) Jingdong Wang, Ke Sun, Tianheng Cheng, Borui Jiang, Chaorui Deng, Yang Zhao, Dong Liu, Yadong Mu, Mingkui Tan, Xinggang Wang, et al. 2020. Deep high-resolution representation learning for visual recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (2020).

- Wang et al. (2021b) Xintao Wang, Liangbin Xie, Chao Dong, and Ying Shan. 2021b. Real-ESRGAN: Training real-world blind super-resolution with pure synthetic data. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 1905–1914.

- Wang et al. (2021a) Zian Wang, Jonah Philion, Sanja Fidler, and Jan Kautz. 2021a. Learning indoor inverse rendering with 3D spatially-varying lighting. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 12538–12547.

- Weber et al. (2018) Henrique Weber, Donald Prévost, and Jean-François Lalonde. 2018. Learning to estimate indoor lighting from 3D objects. In 2018 International Conference on 3D Vision (3DV). IEEE, 199–207.

- Wei et al. (2019) Kaixuan Wei, Jiaolong Yang, Ying Fu, David Wipf, and Hua Huang. 2019. Single image reflection removal exploiting misaligned training data and network enhancements. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 8178–8187.

- Wu et al. (2020) Zhongqi Wu, Chuanqing Zhuang, Jian Shi, Jun Xiao, and Jianwei Guo. 2020. Deep Specular Highlight Removal for Single Real-world Image. In SIGGRAPH Asia 2020 Posters. 1–2.

- Yang et al. (2010) Qingxiong Yang, Shengnan Wang, and Narendra Ahuja. 2010. Real-time specular highlight removal using bilateral filtering. In European Conference on Computer Vision. Springer, 87–100.

- Yu et al. (1999) Yizhou Yu, Paul Debevec, Jitendra Malik, and Tim Hawkins. 1999. Inverse global illumination: Recovering reflectance models of real scenes from photographs. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques. 215–224.

- Zhang et al. (2016) Edward Zhang, Michael F Cohen, and Brian Curless. 2016. Emptying, refurnishing, and relighting indoor spaces. ACM Transactions on Graphics (TOG) 35, 6 (2016), 1–14.

- Zhang et al. (2019) Jinsong Zhang, Kalyan Sunkavalli, Yannick Hold-Geoffroy, Sunil Hadap, Jonathan Eisenman, and Jean-François Lalonde. 2019. All-weather deep outdoor lighting estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 10158–10166.

- Zhang et al. (2021) Kai Zhang, Fujun Luan, Qianqian Wang, Kavita Bala, and Noah Snavely. 2021. PhySG: Inverse Rendering with Spherical Gaussians for Physics-based Material Editing and Relighting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 5453–5462.

- Zhang et al. (2018a) Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang. 2018a. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 586–595.

- Zhang et al. (2018b) Xuaner Zhang, Ren Ng, and Qifeng Chen. 2018b. Single image reflection separation with perceptual losses. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 4786–4794.

- Zheng et al. (2020) Jia Zheng, Junfei Zhang, Jing Li, Rui Tang, Shenghua Gao, and Zihan Zhou. 2020. Structured3D: A large photo-realistic dataset for structured 3D modeling. In European Conference on Computer Vision. Springer, 519–535.

- Zhou et al. (2017) Bolei Zhou, Hang Zhao, Xavier Puig, Sanja Fidler, Adela Barriuso, and Antonio Torralba. 2017. Scene parsing through ADE20K dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 633–641.

- Zou et al. (2018) Chuhang Zou, Alex Colburn, Qi Shan, and Derek Hoiem. 2018. LayoutNet: Reconstructing the 3D room layout from a single RGB image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2051–2059.