Semantic Communication with Memory

Abstract

While semantic communication succeeds in efficiently transmitting due to the strong capability to extract the essential semantic information, it is still far from the intelligent or human-like communications. In this paper, we introduce an essential component, memory, into semantic communications to mimic human communications. Particularly, we investigate a deep learning (DL) based semantic communication system with memory, named Mem-DeepSC, by considering the scenario question answer task. We exploit the universal Transformer based transceiver to extract the semantic information and introduce the memory module to process the context information. Moreover, we derive the relationship between the length of semantic signal and the channel noise to validate the possibility of dynamic transmission. Specially, we propose two dynamic transmission methods to enhance the transmission reliability as well as to reduce the communication overheads by masking some unessential elements, which are recognized through training the model with mutual information. Numerical results show that the proposed Mem-DeepSC is superior to benchmarks in terms of answer accuracy and transmission efficiency, i.e., number of transmitted symbols.

Index Terms:

Semantic communications, memory task, dynamic transmission, deep learning.I Introduction

The seamlessly connected world fosters unique services, like virtual reality (VR), mobile immersive eXtended reality (XR), or autonomous driving, and brings new challenges to communication systems, such as the scarcity of resources, the congestion of network traffic, and the scalable connectivity for edge intelligence [1]. To materialize the vision, semantic communication [2] is a communication paradigm by directly delivering the meanings of information, and extracting and transmitting only important information relevant to the task at the receiver. In the past couple of years, semantic communication is attracting extensive attention from both academia [2] and industry [3, 4]. The latest works take advantage of deep learning (DL) to design end-to-end semantic communication systems for various types of source reconstruction and specific tasks execution. Semantic communication has shown a great potential to increase the reliability in performing intelligent tasks, reducing the network traffic, and thus alleviating spectrum shortage.

The existing works in semantic communication can be categorized by the types of source data. For image-based semantic communication systems, Jankowski et al. [5] have developed digital and analog deep joint source-channel coding (JSCC) to perform the person/car re-identification task directly, which improves the image retrieval accuracy effectively. Lee et al. [6] have considered the image classification as the communication task, where JSCC is based on the convolutional neural network (CNN). Moreover, Hu et al. [7] have designed robust semantic communication against semantic noise by employing adversarial training, which reduces the probability of misleading in classification. In order to reduce the communication overheads, Yang et al. [8] have developed bandwidth-limited semantic communication by removing the redundancy of semantic features while keeping similar classification accuracy. Shao et al. [9] have proposed a dynamic semantic communication system to adaptively adjust the number of the active semantic features under different signal-to-noise ratios (SNRs) with a graceful classification accuracy degradation. Bourtsoulatze et al. [10] have investigated the deep image transmission semantic communication systems, in which the semantic and channel coding are optimized jointly. Kurka et al. [11] extended Bourtsoulatze’s work with the channel feedback to improve the quality of image reconstruction. Huang et al. [12] have designed the image semantic coding method by introducing the framework of rate-distortion, which can save the number of bits as well as keep the good quality of the reconstructed image.

Apart from image based semantic communication systems, the video based semantic communication systems also attracted much attention. Tung et al. [13] have designed the initial deep video semantic communications by accounting for occlusion/disocclusion and camera movements. Especially, the authors considered the DL-based frame design for the video reconstruction. Wang et al. [14] have proposed the adaptive deep video semantic communication systems by learning to allocate the limited channel bandwidth within and among video frames to maximize the overall transmission performance. Jiang et al. [15] have investigated the application of semantic communications in the video conference, in which the proposed system can maintain high resolution by transmitting some keypoints to represent motions and keep the low communication overheads. Similarly, Tandon et al. [16] also considered the video conference transmission. Different from [15], the authors have designed the video semantic communication by converting the video to text at the transmitter and recovering the video from the text at the receiver. By considering the multi-user scenario for multi-modal data transmission, Xie et al. [17] have proposed a unified Transformer based semantic communication framework to support the image and text transmission and to enable the receiver performing various multimodal tasks.

Meanwhile, there exist works on the speech-based, text-based, and multimodal semantic communication systems. Weng et al. [18] have developed the speech recognition-oriented semantic communication, named DeepSC-SR, in which the transmitter sends the speech signal and the receiver restores the text directly. Han et al. [19] have designed an more energy-efficient speech-to-text system by introducing the redundancy removal module to lower the transmitted data size. With the depth exploration in semantic communications, Xie et al. [20] have developed a powerful joint semantic-channel coding, named DeepSC, to encode text information into various lengths over complex channels. Moreover, Xie et al. [21] also have proposed an environment-friendly semantic communication system, named L-DeepSC, for capacity-limited devices. Zhou et al. [22] have proposed a Universal Transformer based semantic communication systems for text transmission with lower complexity. Peng et al. [23] have designed robust semantic communication systems to prevent the semantic delivery from the source noise, e.g., typos and syntax errors. These existing works have revealed the potential of semantic communication in various intelligent tasks and moved towards the ideal intelligent communication. However, the proposed semantic communication systems only consider the current time slot inputs and ignore the previous time slots inputs. For the next step of task-oriented semantic communication, we can find some inspiration from human communication.

In the human communication [24], people can perform the both memoryless tasks and memory tasks. Memoryless tasks are only relevant to the inputs received in the current time slot, e.g., receiving the image and recognizing its category. Memory tasks [25] are relevant to inputs received in both the current and past time slots, e.g., the response in the conversation relying not only on the currently listened sentences but also on the previous context. While the developed semantic communication systems only consider the inputs in the current time slot and neglected those in the previous time slots. With such a design, semantic communication is incapable of serving the memory tasks, such as scenario question answer, scenario visual question task, and scenario conversations.

Note that one of the key modules in human communications is memory, which can store the context semantic information and enable people to perform tasks requiring memory. We are inspired to introduce the memory module in semantic communications so as to execute tasks with and without memory. By dosing so, machine to machine commendations and human to machine communications will become more intelligent and human-like, which could fully exploit the advancements of semantic communications. It is of great interest to design a semantic communication system that utilizes memory information to facilitate the semantic information transmission and task execution at the receiver. To design a semantic communication system with memory, we are facing the following challenges:

-

Q1:

How to design the semantic-aware transceiver with memory module?

-

Q2:

How to ensure the effectiveness of transmitting memory over multiple time slots?

In this paper, we investigate a semantic communication for memory tasks by taking the scenario question answer task as an example. Particularly, we develop a DL enabled semantic communication system with memory (Mem-DeepSC) to address the aforementioned challenges. The main contributions of this paper are summarized as follows:

-

•

Based on the universal Transformer [26], a transceiver with a memory module is proposed. In the proposed Mem-DeepSC, the transmitter can extract the semantic features at the sentence level effectively and the receiver can process received semantic features from the previous time-slots by employing the memory module, which addresses the aforementioned Q1.

-

•

To make the Mem-DeepSC applicable to dynamic transmission environment, the relationship between the length of semantic signal and the channel noise is derived. Especially, two dynamic transmission methods are proposed to preserve semantic features from distortion and reduce the communication resources. Two lower bounds of mutual information are derived to train the dynamic transmission methods. This addresses the aforementioned Q2.

The rest of this paper is organized as follows. The system model is introduced in Section II. The semantic communication system with memory module is proposed in Section III. Section IV details the proposed dynamic transmission methods. Numerical results are presented in Section V to show the performance of the proposed frameworks. Finally, Section VI concludes this paper.

Notation: Bold-font variables denote matrices or vectors. and represent complex and real matrices of size , respectively. means variable follows a circularly-symmetric complex Gaussian distribution with mean and covariance . and denote the transpose and Hermitian, respectively. is the is the identity matrix, is the all-one vector with length , is the one-hot vector with one in the -th position. and are the element-wise multiplication and division, respectively. represents the signal at the -th time slot. represents the -th element in the vector. represents the -th row in the matrix.

II System Model

As shown in Fig. 1, we consider a single-input single-output (SISO) communication system, which is with one antenna at the transmitter and one at the receiver. We focus on the text scenario question answer, therefore, the transmission includes two phases: i) memory shaping to transmit the context, e.g., multiple sentences, images, or speeches, to the receiver via multiple time slots; ii) task execution to transmit the question relevant to the context so as to obtain the answer at the receiver. In this paper, we consider multiple sentences as the context.

The transceiver has three modules, a semantic codec to extract the semantic features of the source and perform the task, a joint source-channel (JSC) codec to compress and recover the semantic features, and the memory module to store the received context in multiple time slots and aid semantic decoder in performing the task.

II-A Memory Shaping

We assume the -th context, , is transmitted during the -th time slot and denote and as the context sentence and question sentence, respectively. In the memory shaping phase, the transmitter sends the context, e.g., multiple sentences, images, or speeches, to the receiver over multiple time slots. Subsequently, with the semantic encoder and channel encoder, the -th context sentence over the -th time slot can be encoded as

| (1) |

where is the transmitted signals after the power normalization, and are denoted as the semantic encoder with parameter and channel encoder with parameter , respectively.

Transmitting the signals over the channels, the received signal can be presented as

| (2) |

where is the channel coefficients and is the additive white Gaussian noise (AWGN), in which . For the Rayleigh fading channel, the channel coefficient follows ; for the Rician fading channel, it follows with and , where is the Rician coefficient. The SNR is defined as .

With the estimated channel state information (CSI), , the transmitted signals, , can be detected by

| (3) |

After signal detection, the semantic features can be recovered by

| (4) |

where and is denoted as the channel decoder with parameter . Then, the recovered semantic features will be inputted into the memory module.

Inspired by the short-term memory, we model the memory module as a queue with length , which only concerns the context. The memory queue at the -th time slot is represented by

| (5) |

where , is the length of memory. For , is the vector with all zero elements. The memory queue is updated with the incoming received latest semantic features and pop the oldest features out of the queue. For example, the memory queue at the -th time slot is given by

| (6) |

For scenario communications, it is important to recognize the sequence of the memory queue. In other words, we need to know the order of sentences happened earlier or later. Therefore, we need to add the temporal information before inputting to the models, which is given by

| (7) |

where is the temporal information matrix, any two elements of which satisfy for some function . We choose the positional coding employed in Transformer [27] here.

When performing the memory task, the memory shaping phase will introduce the overheads in terms of time availability due to occupying multiple time slots. For example, considering the task offloading scenario, compared to the memoryless tasks that only use several time slots, the memory shaping will occupy multiple time slots and may block the transmission pipe. This makes the time consumption a little high at the initial memory shaping phase. However, when the memory module is shaped, such consumption can be alleviated by reusing or partly updating the shaped memory module, because the memory module serves multiple questions. Therefore, the frequency of carrying memory shaping phase depends on the demands of users. When performing the memory task, the user will decide whether the current context stored in the memory module can answer the question. If not, the memory needs to be updated.

II-B Task Execution

In the task execution phase, the transmitter sends the question sentence, , to the receiver to perform the task. Specially, is encoded into by (1), transmitted over the air, and decoded into by (4). In the scenario question answer task, the question is not only relevant to only one context sentence but also multiple context sentences. Therefore, the answer is predicted with the question and memory together, which is represented as

| (8) |

where is the semantic decoder with parameters .

III Semantic Communication System with Memory

In this section, we design a semantic communication system with memory, named Mem-DeepSC, to perform scenario question answer task, in which the universal Transformer is employed for text understanding.

III-A Model Description

The proposed Mem-DeepSC is shown in Fig. 2. The semantic encoder consists of universal Transformer encoder layer with variable steps to extract the semantic feature of each word. In order to reduce the transmission overheads, the summation operation is taken here, in which these semantic features at the word level are merged to get one semantic feature at the sentence level. The reason that we choose universal Transformer in the semantic codec can be summarized as follows:

- •

-

•

The universal Transformer can be trained and tested much faster than the architectures based on recurrent layers due to the parallel computation [26].

-

•

Compared with the classic Transformer, the universal Transformer shares the parameters, which can reduce the model size.

After the semantic encoder, the JSC encoder employs multiple dense layers to compress the sentence semantic feature. The reasons that we mainly use dense layer in the channel codec can be summarized as follows:

-

•

The JSC codec aims to compress the semantic features and transmit it effectively over the air. Compared with the CNN layer to capture the local information, the dense layer is good at capturing the global information and preserving the entire attributes, which follows the target of the JSC codec. This can enhance the system’s robustness to channel noise.

At the receiver, the JSC decoder correspondingly includes multiple dense layers to decompress sentence semantic feature and reduce the distortion from channels. The semantic decoder also contains the universal Transformer encoder layer with variable steps to find the relationship between the memory queue and the query feature to get the answer. Especially, the memory queue does not contain the temporal information inside. Therefore, temporal coding is employed to add temporal information to the memory queue, in which we adopt the positional coding [27] as the temporal coding.

III-B Training Details

As shown in Algorithm 1, the training of Mem-DeepSC includes three steps, which is similar to the training algorithm proposed in [17]. The first step is to train the semantic codec. In order to improve the accuracy of answers, we choose the cross-entropy (CE) as the loss function instead of the answer accuracy. The cross-entropy is given by

| (9) |

where is the real probability of answer and is the predicted probability. After convergence, the model learns to extract the semantic features and predict the answers. The following proposition proven in Appendix A reveals the relationship between cross-entropy and the answer accuracy.

Proposition 1

Cross entropy loss function is the refined function of answer accuracy and is more stable during training.

After converged, the model is capable of extracting semantic features and predicting the answer accurately. Subsequently, the second step is to ensure the semantic features transmitted over the air effectively. Thus, the JSC codec is trained to learn the compression and decompression of the semantic features as well as to deal with the channel distortion with the mean-squared error (MSE) loss function,

| (10) |

where and are the original semantic features and the recovered semantic features, respectively.

Finally, the third step is to optimize the entire system jointly to achieve the global optimization. The semantic codec and JSC codec are trained jointly with the CE loss function to reduce the error propagation between each module.

With the Mem-DeepSC, the memory-related tasks can be performed. However, the context is transmitted via multiple time slots. If each time slot has different channel conditions, the damage to the semantic information is inevitable at the worse channel conditions, which affects the prediction accuracy. Therefore, in order to preserve the semantic information and save the communication overheads over multiple time slots, we further develop an adaptive rate transmission method.

IV Adaptive Rate Transmission

In this section, we firstly derive the relationship between the length of semantic signal and channel noise, which inspires us to transmit different length signals according to SNRs. We then develop two dynamic transmission methods, importance mask and consecutive mask for saving the communication resources and preventing the outage for memory transmission to different SNRs.

IV-A The Relationship Between the Length of Semantic Signal and Channel Noise

Adaptive modulation has been developed for conventional communications [30], where the modulation order and code rate change according to SNRs. The same spirit can be used in semantic communications if there exists the relationship between the length of semantic signal and channel noise over AWGN. In this situation, we can achieve such adaptive operation by masking some elements, i.e., masking less at low SNR regimes to ensure the reliability of performing tasks and masking more elements at high SNR regimes to achieve a higher transmission rate.

How many semantic elements should be transmitted? The existing works [13, 9] employ neural networks to learn how to determine the number of transmitted semantic elements dynamically, which lacks of interpretability. Therefore, we provide a theoretical analysis of the relationship between the length of semantic signal and the channel noise to guide us to determine the number of semantic elements at certain SNR.

The key is to find the relationship between the noise level and the number of elements that can be transmitted correctly. Firstly, we model into

| (11) |

where is the semantic information selected from the latent semantic codewords, is the model noise. We generally initialize the model weights with Gaussian distribution and apply the batch normalization/layer normalization to normalize the outputs following [31]. Therefore, we model the model noise with Gaussian distribution. In deep learning, the model noise is caused by the unstable gradients descending, the training data noise, and so on. The model noise can be alleviated by the larger dataset, the refined optimizer, and the re-designed loss function but cannot be removed.

Assume the length of is . By applying the packing sphere theory [32], can be mapped to the -dimension sphere space as shown in Fig. 3(a). In the Fig. 3(a), the smaller sphere represents the noise sphere with radius and the larger sphere is the signal sphere with radius , where is the maximum value in the latent semantic codewords. The reason that noise spheres spread the signal sphere is that the latent semantic codewords have different constellation points. Communication is reliable as long as the noise spheres do not overlap. Therefore, there exists a minimum length of to prevent the overlap from the model noise. In other words, the number of semantic codewords that can be packed with non-overlapping noise sphere over the model noise is

| (12) |

After transmitting over the AWGN channels, the received signals can be represented by submitting (11) into (2),

| (13) |

where in (2) is re-denoted to . The can also be mapped to the -dimension sphere space shown in Fig. 3(b). Because of the channel noise, the radius of noise sphere increases from to , which makes the noise spheres overlap.

In order to eliminate the overlapping, one way is to increase the length of from to () to enlarge the volume of the signal sphere so that the enlarged noise spheres do not overlap. Then, the number of semantic codewords that can be packed with non-overlapping noise sphere over the model noise and the channel noise is

| (14) |

The semantic codewords only describe the semantic information of the source and are irrelevant to the channel noise, which means that the numbers of semantic codewords in (12) and (14) are the same. Therefore, the relationship between and can be derived as shown in proposition 2.

Proposition 2

Given the minimum length, , to prevent from model noise, the minimum length for reliable communication over AWGN channels is

| (15) |

With proposition 2, the masked ratio to different SNRs can be computed theoretically. With (15), the asymptotic analysis can be derived into four cases listed below.

-

•

Case 1: When , then . The number of transmitted symbols will converge to minimum . In this case, the semantic communication system can be viewed as the compressor and decompressor.

-

•

Case 2: When , then . The number of transmitted symbols will lead to infinity. In this case, the semantic communication system experiences an outage.

-

•

Case 3: When , then . only depends on the channel noise and can be computed by

(16) In this situation, is computed by the traditional channel capacity and the number of semantic codewords.

-

•

Case 4: When , then . The semantic communication system experiences an outage, similar to case 2.

The key differences between the relationship and traditional channel capacity can be summarized in the following,

-

•

The relationship indicates how much semantic information can be transmitted error-free while the traditional channel capacity indicates how many bits can be transmitted error-free.

-

•

The length of semantic vectors is affected by three points, 1) the number of semantic codewords, 2) the model noise, and 3) the channel noise. But the channel capacity only depends on the channel noise.

-

•

When channel noise disappears, the length of semantic vectors has the lower bound, . The traditional channel capacity does not have such a lower bound.

With the relationship, it is possible to achieve dynamic transmission. The key to achieving such a dynamic transmission in semantic communication systems is to identify which elements are more important than the others and mask the unimportant ones. As shown in Fig. 4, we propose two mask methods subsequently, importance mask method and consecutive mask method.

IV-B Importance Mask

As shown in Fig. 4(a), the importance mask method introduces the importance-aware model to identify the importance order among the elements of , which can be expressed as

| (17) |

where is the importance-aware model with learnable parameter , is the importance rank of , in which the bigger value means that the corresponding element is more important.

By setting the threshold, , the mask, , can be computed with the by

| (18) |

where can be determined by the -th element in the sorted importance rank with the descend order, and is computed by (15).

Then, the masked transmitted signal can be generated by

| (19) |

With , the transmitter can send the only non-zero elements and the position information of zero elements to reduce the communication overheads.

After transmitting over the air, the receiver follows the same processing to perform signal detection, JSC decoding, and semantic decoding.

IV-B1 Loss Function Design

In order to train the importance model, the optimization goal is to keep more information related the task in the masked signals to prevent performance degradation. Therefore, the mutual information between and the goal is employed as the loss function,

| (20) |

However, minimizing (20) with gradients descending algorithm is hard since is undifferentiable and difficult to compute. There are several methods to alleviate the problem, e.g., employing the mutual information estimator and the numerical approximation. Even if these methods solve the undifferentiable problem, it is still unstable in estimating the mutual information. In order to achieve stable optimization, an approximate bound-optimization (or Majorize-Minimize) algorithm is employed. The bound-optimization aims to construct the desired majorized/minorized version of the objective function. Following the idea, two propositions are proposed for the bound-optimization of mutual information, which are proven in Appendices B and C, respectively.

Proposition 3

For classification tasks, alternately maximizing the mutual information can be viewed as a bound optimization of the cross entropy.

Proposition 4

For regression tasks, alternately maximizing the mutual information can be viewed as a bound optimization of the mean absolute error.

IV-B2 Training Details

As shown in Algorithm 2, the importance model is trained by the CE loss function and the frozen Mem-DeepSC model. The training importance model takes the backpropagations from the semantic decoder to guide the importance model, in which the SoftKMax activation function is employed to bridge the backpropagation from mask to importance model. In other words, the importance model can learn which elements have more contributions/importance to the task performance by minimizing the CE loss function.

IV-C Consecutive Mask

As shown in Fig. 4(b), the consecutive mask method masks the last consecutive elements in the to zero, so that the transmitter only sends the non-zero elements and the receiver pads the received signals with zeros to the same length of . The consecutive mask method does not need to transmit the additional mask position information but to re-train the Mem-DeepSC model. Since the importance rank of the elements of is not consecutive, directly masking these consecutive elements may experience performance degradation. The Mem-DeepSC needs to be re-trained with the consecutive mask so that it can learn to re-organize the elements of following the order of descend importance.

The training of the consecutive mask method only includes one step, which is similar to the Train Whole Network in Algorithm 1 but with two additional operations, i.e., masking operation before transmitting and padding operation after signal detection. The loss function during the training is the CE loss function.

V Simulation Results

In this section, we compare the proposed semantic communication systems with memory with the traditional source coding and channel coding method over various channels, in which the proposed mask methods are compared with different benchmarks.

V-A Implementation Details

V-A1 The Dataset

We choose the bAbI-10k dataset [33], including 20 different types of scenario tasks. Each example is composed of a set of facts, a question, the answer, and the supporting facts that lead to the answer. We split the 10k examples into 8k examples for training, 1k examples for validation, and 1k examples for testing.

V-A2 Traing Settings

The semantic encoder and decoder consist of the universal Transformer encoder layer with 3 steps and with 6 steps, respectively, in which the width of the layer is 128. The importance model is composed of one Transformer encoder layer with the width of 64. The other training settings are listed in Table I.

V-A3 Benchmarks and Performance Metrics

We adopt the typical source and channel coding method as the benchmark of the proposed Mem-DeepSC, and the random mask method as the counterpart of the proposed two mask methods.

- •

-

•

Conventional methods: To perform the source and channel coding separately, we use the following technologies, respectively:

-

–

8-bit unicode transformation format (UTF-8) encoding for text source coding, a commonly used method in text compression;

-

–

Turbo coding for text channel coding, popular channel coding for a small size file;

-

–

16-quadrature amplitude modulation as the modulation.

-

–

-

•

Random Mask: Mask the elements in the transmitted signal randomly.

In the simulation, the coding rate is 1/3 and the block length is 256 for the Turbo codes. The coherent time is set as the transmission time for each context in the simulation. We set for the Rician channels and for the AWGN channels. In order to compute the required length of semantic vectors, we train multiple Mem-DeepSC with different sizes to find the values of and . For Mem-DeepSC, and . Answer accuracy is used as the metric to compute the ratio between the number of correct answers and that of all generated answers.

| Batch Size | Learning Rate | Epoch | |

| Train Semantic Codec | 200 | 250 | |

| Train Channel Codec | 100 | 50 | |

| Train Whole Network | 200 | 30 | |

| Train Importance Mask | 200 | 10 | |

| Train Consecutive Mask | 200 | 50 |

V-B Memory Semantic Communication Systems

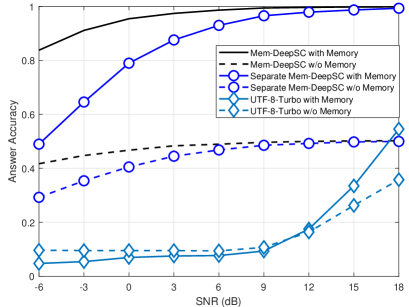

Fig. 5 compares the answer accuracies over different channels, in which the Mem-DeepSC and the UTF-8-Turbo transmit 32 symbols per sentence and 190 symbols per sentence, respectively. The proposed Mem-DeepSC with memory outperforms all the benchmarks at the answer accuracy in all SNR regimes by the margin of 0.8. Compared the Mem-DeepSC with memory and without memory, the memory module can significantly improve the answer accuracy, which validates the effectiveness of the memory module in memory-related transmission tasks. Besides, the Mem-DeepSC outperforms the separate Mem-DeepSC in low SNR regimes, which means that the three stage training algorithm can help improve the robustness to channel noise. From the AWGN channels to the Rician channels, the proposed Mem-DeepSC with memory experiences slight answer accuracy degradation in the low SNR regimes but the UTF-8-Turbo has an obvious performance loss in all SNR regimes. The inaccurate CSI deteriorates the answer accuracy for both methods, however, the proposed Mem-DeepSC can keep a similar answer accuracy in high SNR regimes, which shows the robustness of the proposed Mem-DeepSC.

V-C The Proposed Mask Methods

Table II compares the number of transmitted symbols for different methods. Compared to the UTF-8-Turbo with the adaptive modulation and channel coding (AMC), the proposed Mem-DeepSC decreases the number of the transmitted symbols significantly with only 4%-16.8% symbols. The reason is that the Mem-DeepSC transmits the semantic information at the sentence level instead of at the letter/word level. Besides, applying the dynamic methods can further reduce the number of transmitted symbols from 32 symbols to 16 symbols per sentence as the SNR increases, especially saving an additional 50% symbols in the high SNR regimes. Then, the effectiveness of (15) is validated by the following simulation in Fig. 6.

Fig. 6 verifies the effectiveness of the proposed mask strategy. For Mem-DeepSC with no mask, we provided two cases with 16 symbols and 32 symbols per sentence, respectively. Utilizing adaptive modulation and channel coding (AMC) on UTF-8-Turbo can yield comparable answer accuracy to that of Mem-DeepSC over AWGN channels. However, this comes at the expense of a reduced transmission rate. Then comprising the no mask cases with different number of symbols per sentence, increasing the number of symbols per sentence leads to higher answer accuracy in low SNR regimes but the gain disappears as the SNR increases. This suggested that the semantic communication systems can employ more symbols in low SNR to improve the robustness and transmit fewer symbols in the high SNR regimes to improve the transmission efficiency. The proposed importance mask and consecutive mask keep the similar answer accuracy as the Mem-DeepSC with 32 symbols per sentence in all SNR regimes over the AWGN and the Rician channels.

| Number of Transmitted Symbols | |||||

| -6dB | 0dB | 6dB | 12dB | 18dB | |

| Mem-DeepSC | 32 | ||||

| Dynamic Transmission | 32 | 25 | 18 | 16 | 16 |

| UTF-8-Turbo | 190 | ||||

| UTF-8-Turbo with AMC (AWGN Channels) | 760 (BPSK) | 760 (BPSK) | 380 (4QAM) | 253 (8QAM) | 190 (16QAM) |

| UTF-8-Turbo with AMC (Rician Fading Channels) | 760 (BPSK) | 760 (BPSK) | 380 (4QAM) | 253 (8QAM) | 253 (8QAM) |

However, the random mask experiences significant answer accuracy loss in the high SNR regimes as the number of masked elements increases because some important elements are masked randomly to reduce the answer accuracy. This validates the effectiveness of both proposed mask methods.

VI Conclusion

In this paper, we have proposed a memory-aided semantic communication system, named Mem-DeepSC. The scenario question answer task is taken as the example. The Mem-DeepSC can extract the semantic information at the sentence level to reduce the number of the transmitted symbols and deal with the context information at the receiver by introducing the memory queue. Moreover, with the memory module, the Mem-DeepSC can deal with the memory-related tasks compared to that without the memory module, which is closer to human-like communication. Besides, the relationship between the length of semantic signal and the channel noise over AWGN is derived to decide how many symbols are required to be transmitted at different SNRs. Two dynamic transmission methods are proposed to mask the unimportant elements in the transmitted signals, which can employ more symbols in the low SNR to improve the robustness and transmit fewer symbols in the high SNR regimes to improve the transmission efficiency. In particular, the dynamic transmission methods can save an additional 50% transmitted symbols. Therefore, the semantic communication system with memory is an attractive alternative to intelligent communication systems.

Appendix A Proof of Proposition 1

Given the mini-batch, , the question-answer accuracy can be computed by

| (21) |

where is the batch size, and is the one-hot vector with one in the -th position, is the real answer with label , and represents the predicted answer with predicted label , which is computed by

| (22) |

where is the output logits before softmax activation.

Since softmax function is the soft function of , the can be approximated by

| (23) |

where is the predicted probabilities.

Submitting the (23) to (21), the answer accuracy can be approximated as

| (24) |

where is the real probability for label and is the -th predicted probability at .

Based on (24), the loss function of answer accuracy can be designed as

| (25) |

The derivation of for the parameters is

| (26) |

From (26), there exist two optimization directions when , i.e., and . However, causes worse prediction results and should avoid. In order to make the optimization stable, the should be refined. One refined loss function is the cross-entropy loss function given by

| (27) |

The derivation of for the parameters is

| (28) |

Appendix B Proof of Proposition 2

For the classification task, the mutual information, , can be expressed as

| (29) |

where is the entropy of the real label, is the conditional entropy.

The cross-entropy between the real label and the predicted label given is

| (30) |

where is the Kullback–Leibler divergence and is always non-negative. Therefore, we have the following inequality

| (31) |

Appendix C Proof of Proposition 3

For the regression task, the mutual information, , can be expressed as (29).

Lemma 1

The conditional differential entropy yields a lower bound on the expected squared error of an estimator, for any random variable , observation , and estimator , the following holds

| (33) |

Applying the Lemma 1, the upper bound of conditional entropy, , can be expressed as

| (34) |

where means the model outputs with the .

Submitting (34) into (29), the lower bound of can be obtained

| (35) |

From (35), since is constant, maximizing the can be approximated to minimizing the . However, directly minimizing the may cause the gradient explosion.

Given the derivation of for the parameters ,

| (36) |

From (36), when , . In order to alleviate the gradient explosion, the approximation of is derived by applying the Taylor series expansion

| (37) |

The derivation of (37) for the parameters is

| (38) |

Compared (38) and (36), the item, , is removed, therefore, the gradient explosion is eliminated. Then, the lower bound of can be expressed as

| (39) |

From (39), maximizing the can be approximated to minimizing the . The lower bound will be closer to when the model is trained. Therefore, the proposition 4 is derived.

References

- [1] K. B. Letaief, Y. Shi, J. Lu, and J. Lu, “Edge artificial intelligence for 6G: Vision, enabling technologies, and applications,” IEEE J. Select. Areas Commun., vol. 40, no. 1, pp. 5–36, Nov. 2022.

- [2] Z. Qin, X. Tao, J. Lu, W. Tong, and G. Y. Li, “Semantic communications: Principles and challenges,” arXiv preprint arXiv:2201.01389, 2021.

- [3] W. Tong and G. Y. Li, “Nine challenges in artificial intelligence and wireless communications for 6G,” IEEE Wireless Commun., Early Access 2022.

- [4] J. Hoydis, F. A. Aoudia, A. Valcarce, and H. Viswanathan, “Toward a 6G AI-native air interface,” IEEE Commun. Mag., vol. 59, no. 5, pp. 76–81, May 2021.

- [5] M. Jankowski, D. Gündüz, and K. Mikolajczyk, “Wireless image retrieval at the edge,” IEEE J. Select. Areas Commun., vol. 39, no. 1, pp. 89–100, Jan. 2021.

- [6] C. Lee, J. Lin, P. Chen, and Y. Chang, “Deep learning-constructed joint transmission-recognition for Internet of Things,” IEEE Access, vol. 7, pp. 76 547–76 561, Jun. 2019.

- [7] Q. Hu, G. Zhang, Z. Qin, Y. Cai, and G. Yu, “Robust semantic communications against semantic noise,” arXiv preprint arXiv:2202.03338, 2022.

- [8] Y. Yang, C. Guo, F. Liu, C. Liu, L. Sun, Q. Sun, and J. Chen, “Semantic communications with artificial intelligence tasks: Reducing bandwidth requirements and improving artificial intelligence task performance,” IEEE Ind. Electron. Mag., Early Access, 2022.

- [9] J. Shao, Y. Mao, and J. Zhang, “Learning task-oriented communication for edge inference: An information bottleneck approach,” IEEE J. Select. Areas Commun., vol. 40, no. 1, pp. 197–211, Jan. 2022.

- [10] E. Bourtsoulatze, D. B. Kurka, and D. Gündüz, “Deep joint source-channel coding for wireless image transmission,” IEEE Trans. Cogn. Commun. Netw., vol. 5, no. 3, pp. 567–579, Sept. 2019.

- [11] D. B. Kurka and D. Gündüz, “DeepJSCC-f: Deep joint source-channel coding of images with feedback,” IEEE J. Select Areas Inform. Theory, vol. 1, no. 1, pp. 178–193, May 2020.

- [12] D. Huang, F. Gao, X. Tao, Q. Du, and J. Lu, “Towards semantic communications: Deep learning-based image semantic coding,” arXiv preprint arXiv:2208.04094, 2022.

- [13] T. Tung and D. Gündüz, “DeepWiVe: Deep-learning-aided wireless video transmission,” IEEE J. Select. Areas Commun., vol. 40, no. 9, pp. 2570–2583, Jul. 2022.

- [14] S. Wang, J. Dai, Z. Liang, K. Niu, Z. Si, C. Dong, X. Qin, and P. Zhang, “Wireless deep video semantic transmission,” arXiv preprint arXiv:2205.13129, May 2022.

- [15] P. Jiang, C.-K. Wen, S. Jin, and G. Y. Li, “Wireless semantic communications for video conferencing,” arXiv preprint arXiv:2204.07790, Apr. 2022.

- [16] P. Tandon, S. Chandak, P. Pataranutaporn, Y. Liu, A. M. Mapuranga, P. Maes, T. Weissman, and M. Sra, “Txt2Vid: Ultra-low bitrate compression of talking-head videos via text,” arXiv preprint arXiv:2106.14014, Jun. 2021.

- [17] H. Xie, Z. Qin, X. Tao, and K. B. Letaief, “Task-oriented multi-user semantic communications,” IEEE J. Select. Areas Commun., vol. 40, no. 9, pp. 2584–2597, Jul. 2022.

- [18] Z. Weng, Z. Qin, X. Tao, C. Pan, G. Liu, and G. Y. Li, “Deep learning enabled semantic communications with speech recognition and synthesis,” arXiv preprint arXiv:2205.04603, 2022.

- [19] T. Han, Q. Yang, Z. Shi, S. He, and Z. Zhang, “Semantic-preserved communication system for highly efficient speech transmission,” arXiv preprint arXiv:2205.12727, 2022.

- [20] H. Xie and Z. Qin, “A lite distributed semantic communication system for Internet of Things,” IEEE J. Select. Areas Commun., vol. 39, no. 1, pp. 142–153, Jan. 2021.

- [21] H. Xie, Z. Qin, G. Y. Li, and B. Juang, “Deep learning enabled semantic communication systems,” IEEE Trans. Signal Process., vol. 69, pp. 2663–2675, Apr. 2021.

- [22] Q. Zhou, R. Li, Z. Zhao, C. Peng, and H. Zhang, “Semantic communication with adaptive universal Transformer,” IEEE Wireless Communications Letters, vol. 11, no. 3, pp. 453–457, Mar. 2022.

- [23] X. Peng, Z. Qin, D. Huang, X. Tao, J. Lu, G. Liu, and C. Pan, “A robust deep learning enabled semantic communication system for text,” arXiv preprint arXiv:2206.02596, Jun. 2022.

- [24] M. Tomasello, Origins of human communication. MIT press, 2010.

- [25] S. Sukhbaatar, J. Weston, R. Fergus et al., “End-to-end memory networks,” in Proc. Advances Neural Info. Process. Systems (NIPS), vol. 28, Montreal, Quebec, Canada, Dec. 2015.

- [26] M. Dehghani, S. Gouws, O. Vinyals, J. Uszkoreit, and L. Kaiser, “Universal transformers,” in Proc. Int’l. Conf. Learning Representations (ICLR), New Orleans, LA, USA, May 2019.

- [27] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in Proc. Advances Neural Info. Process. Systems (NIPS), Long Beach, CA, USA. Dec. 2017, pp. 5998–6008.

- [28] J. Devlin, M. Chang, K. Lee, and K. Toutanova, “BERT: pre-training of deep bidirectional transformers for language understanding,” in Proc. North American Chapter of the Assoc. for Comput. Linguistics: Human Language Tech., (NAACL-HLT’19), Minneapolis, MN, USA, Jun. 2019, pp. 4171–4186.

- [29] T. Brown, B. Mann, N. Ryder, M. Subbiah, J. D. Kaplan, P. Dhariwal, A. Neelakantan, P. Shyam, G. Sastry, A. Askell et al., “Language models are few-shot learners,” Proc. Advances Neural Info. Process. Systems (NIPS), vol. 33, pp. 1877–1901, Virtual, Dec. 2020.

- [30] A. Goldsmith, Wireless communications. Cambridge university press, 2005.

- [31] S. Ioffe and C. Szegedy, “Batch normalization: Accelerating deep network training by reducing internal covariate shift,” in Proc. PMLR Int’l. Conf. on Machine Learning (ICML), Lille, France, July 2015, pp. 448–456.

- [32] D. Tse and P. Viswanath, Fundamentals of wireless communication. Cambridge university press, 2005.

- [33] J. Weston, A. Bordes, S. Chopra, and T. Mikolov, “Towards ai-complete question answering: A set of prerequisite toy tasks,” in Proc. Int’l. Conf. Learning Representations (ICLR), San Juan, Puerto Rico, May 2016.