Self-Verification in Image Denoising

Abstract

We devise a new regularization, called self-verification, for image denoising. This regularization is formulated using a deep image prior learned by the network, rather than a traditional predefined prior. Specifically, we treat the output of the network as a “prior” that we denoise again after “re-noising”. The comparison between the again denoised image and its prior can be interpreted as a self-verification of the network’s denoising ability. We demonstrate that self-verification encourages the network to capture low-level image statistics needed to restore the image. Based on this self-verification regularization, we further show that the network can learn to denoise even if it has not seen any clean images. This learning strategy is self-supervised, and we refer to it as Self-Verification Image Denoising (SVID). SVID can be seen as a mixture of learning-based methods and traditional model-based denoising methods, in which regularization is adaptively formulated using the output of the network. We show the application of SVID to various denoising tasks using only observed corrupted data. It can achieve the denoising performance close to supervised CNNs.

1 Introduction

Images captured by various devices are prone to noise and corruption due to limited imaging environments such as low light and slow shutter speed. Denoising is the problem whereby we recover the underlying clean image from its noise measurements. While sometimes the purpose may be for aesthetic reasons, downstream computer vision tasks, such as detection and segmentation, are also greatly facilitated by having minimally corrupted inputs.

A noisy image is usually modeled as

| (1) |

where represents the noise, is the clean image to be restored. This inverse problem is challenging because the statistics of are usually unknown and often complex.

In general, model-based image denoising algorithms aim to solve this problem through optimizing functions of the form

| (2) |

where is a data fidelity term that ensures the solution agrees with the observation, is a regularizer and is a trade-off parameter. The first challenge of Eq. (2) is choosing , which encodes the pre-known image properties and directs the solution towards a more plausible image. (Given this sense of , we refer to it as a “prior” although we are not taking a Bayesian perspective.) Some common priors for constructing include total variation, non-local self-similarity, and others [5, 17]. However, these preselected priors often do not model the finer properties of natural images, and so often lead to degraded results.

Recently, supervised deep networks have achieved unprecedented success in image denoising by learning image priors and noise statistics from pairs of noisy/clean images [32]. Most CNN denoisers, e.g. DnCNN [29] and VDN [26], have superior performance over model-based denoisers. Unfortunately, in most cases, collecting a large number of realistic paired data is difficult, which limits the application of supervised CNNs. This leads to the topic of learning to denoise without paired data, the common approach of non-deep models, but less addressed by neural networks. Some self-supervised methods [12] show that only noisy data is required to train a denoising network under certain minor assumptions (e.g. noise is zero-mean). One of these methods is called a deep image prior (DIP) [23], which has attracted much attention because it handles image denoising from a unique perspective. DIP found that the structure of CNN is naturally an implicit regularizer for image denoising. Specifically, DIP uses a deep CNN to reparameterize a noisy image , i.e.,

| (3) |

where is a fixed random vector, represents the deep CNN and is its parameters. The optimization trajectory of Eq. (3) will pass through a good local optimum (i.e. a clean image) before overfitting to . So denoising can be completed by stopping training early. Moreover, we found that similar results (see Figure 1) can be observed even if in Eq. (3) is replaced by ,

| (4) |

The effectiveness of DIP relies on the fact that the CNN naturally tends to capture low-level image statistics, while showing strong “impedance” to unstructured noise [23]. DIP demonstrates the potential of neural networks to learn image priors without clean labels.

1.1 Motivation

While DIP is an effective unsupervised approach, a drawback for real-world applications is its time-consuming training that requires human intervention for stopping. Inspired by DIP, in this paper we explore a more flexible use of the neural network image prior. Similar to DIP, we only focus on self-supervised cases; the difference is that our goal is to formulate an explicit regularization for denoising using the prior determined by the network.

Since DIP has confirmed the affinity of the deep network for learning low-level image statistics, we expect that this network will first capture low-level image statistics as well in early iterations before attempting to memorize the noise when solving Eq. (5). Since the output of the network (i.e. ) contains the low-level statistics required to restore the image, we consider using as the prior for image denoising. Put another way, can the output of the denoising network be used to construct a regularization for itself? In this paper, we give a feasible solution for performing this task.

1.2 Our Proposed Contribution

Suppose there is a second noise-degraded image in addition to for which both share the same latent clean image , and which have independent and identically distributed (i.i.d.) noise. Based on and , we devise a new regularization term for image denoising. Then, we could consider for Eq. (5) the function

| (6) |

Since and have different noise, is obviously a good candidate solution for minimizing Eq. (6). However, in most real-world applications, is available, but we do not have a second noisy image . Instead, we use the output of the network to synthesize a new ,

| (7) |

where is a predefined adaptive noise degradation function and is newly generated noise. We discuss later. Combining Eqs. (6) and (7), we have

| (8) |

The first term of Eq. (8) implicitly constrains the removed noise to be zero mean. In the second term of Eq. (8), if the noise of and share similar statistical characteristics, but , then is still a good solution for Eq. (8). We refer to the second term of Eq. (8) as a self-verification regularization. In , can be regarded as a deep image prior learned by the network. The similarity comparison between and can be seen as using a prior generated by the network to verify the denoising behavior of the network itself. We update the network to minimize . When converges and the network becomes a good denoiser, the discrepancy between and should be small.

With self-verification regularization, we further show how a deep CNN learns to denoise with self-supervision. We call this training method Self-Verification Image Denoising (SVID). SVID does not rely on other noise statistics except the assumption of zero-mean noise. To perform self-verification, SVID uses a Siamese network as the backbone. Although SVID is based only on noisy training data, its denoising results are impressive. We demonstrate the superior performance of SVID in various denoising experiments. SVID significantly outperforms other state-of-the-art self-supervised methods.

2 Related Work

In this section, we review related topics most relevant to this work, including model-based denoising methods, learning-based denoising methods and Siamese networks.

Most model-based denoising methods focus on using handcrafted priors [24, 2, 17], [34] to construct regularization. Over the past decades, various ideas have been proposed, all aiming to identify sources of inner structure in visual data. For example, the non-local self-similarity (NSS) prior [25] is widely used in image denoising; some well-known NSS-based methods include BM3D [4] and WNNM [7]. Other prominent techniques, such as wavelet coring [22], total variation [20] and low-rank assumptions [5] are also standard approaches.

In recent years, supervised deep learning with CNNs has shown excellent denoising performance [31]. Many methods adopt sophisticated network structures to achieve better denoising results [30, 27, 14]. Their effectiveness is due to their ability to learn image priors from large amounts of paired data [33]. However, they require paired noisy/clean images. To mitigate this issue, Lehtinen et al. [13] demonstrate that the denoising CNN can be trained with pairs of independent noisy measurements of the same scene. This training strategy is called Noise2Noise, and achieves denoising performance on par with general supervised learning methods.

To relax the supervised requirement, networks trained with only noisy images has recently been considered [12]. For instance, Noise2Void [11] proposes a blind spot strategy, which trains a network to predict masked pixels using their neighbors so as to achieve denoising. The blind spot strategy has strong denoising ability, and it is adopted by many self-supervised methods, such as Self2Self [18] and Noise2Self [1]. Later, Neighbor2Neighbor [9] showed that two different subsamples of a noisy input can also be the training data for denoising.

As for the choice of network, Siamese networks are natural and effective tools for modeling invariance, and therefore have become a common structure for self-supervised representation learning methods [3, 28, 6]. Generally, inputs are distorted versions of the same sample, and maximize the similarity subject to different conditions. In this paper, we also use a Siamese network, not to learn a robust representation, but to discover the clean target shared between the inputs and .

3 Methodology

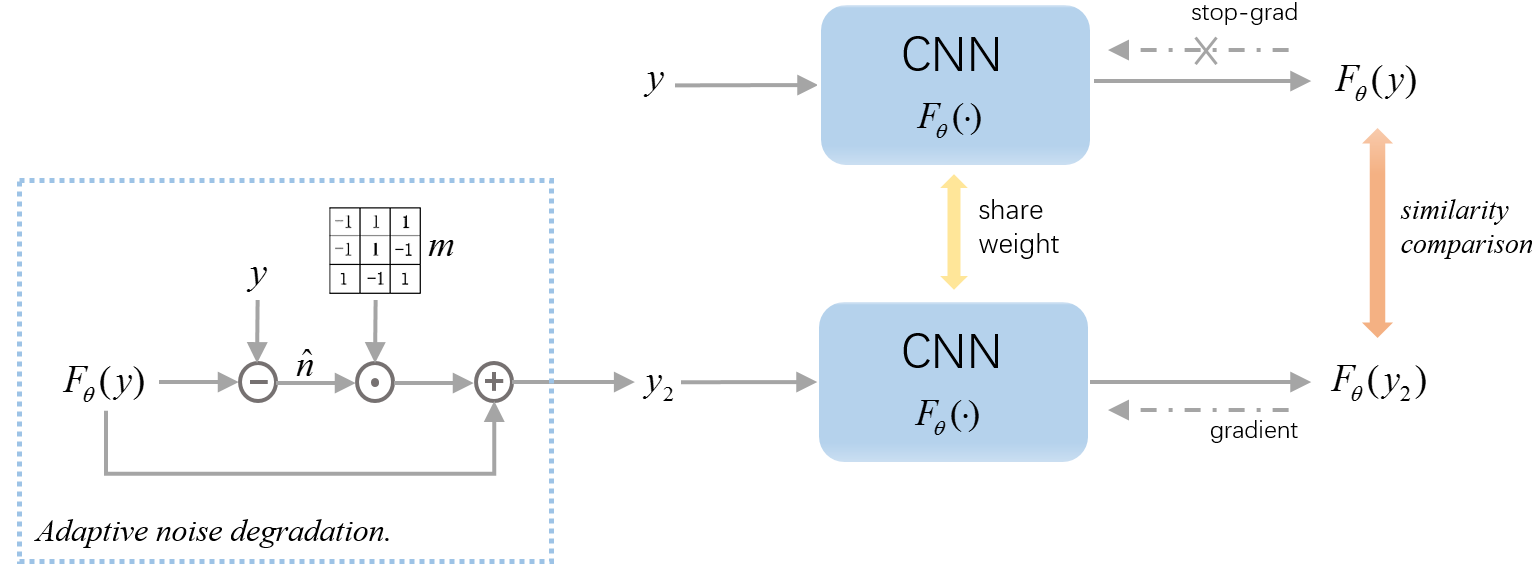

Given a noisy dataset , we aim to use these noisy images to learn to denoise without using other clean images. We propose a self-verification image denoising (SVID) approach, as shown in Figure 2. SVID is derived from Eq. (8), in which self-verification regularization plays a major role. Unlike DIP [23] trains a new network for each image, we use all noisy images to train a shared denoising network by mini-batch gradient descent. For simplicity, we set the batch size to 1 in the objective function of SVID, but it is easy to expand to the case where the batch size is greater than 1. After training, the denoising CNN in SVID is directly applied to new noisy images without additional learning.

3.1 Overview of SVID

Self-verification consists of two steps: adaptive noise degradation and similarity comparison. We use a Siamese network, where the goal is first to input to a CNN to obtain a denoised image and its removed noise . Since low-level image statistics are easier to be captured by deep CNNs than noise, we assume that is a clean image. We then synthesize by the following adaptive noise degradation function,

| (9) | ||||

where is a new noise which is adaptively synthesized with respect to . represents element-wise multiplication, is a random binary ( or ) mask that is independent of but has the same dimension as . In each iteration of learning, a new is sampled. Each element in is with a probability of , and otherwise.

Next, the network learns to denoise by maximizing the similarity between and . This is achieved by solving the following optimization problem,

| (10) |

“Stopgrad” means that the gradient does not backpropagate to , which helps prevent trivial solutions (i.e. , is a constant.). The stop-gradient procedure is a common strategy for learning Siamese networks [3].

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a528bd3d-6f0e-450c-b43b-dbc17653d7c4/cat_y.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a528bd3d-6f0e-450c-b43b-dbc17653d7c4/cat_x_1k.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a528bd3d-6f0e-450c-b43b-dbc17653d7c4/cat_n_hat_1k.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a528bd3d-6f0e-450c-b43b-dbc17653d7c4/cat_n2_1k.png)

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/a528bd3d-6f0e-450c-b43b-dbc17653d7c4/cat_y2_1k.png)

3.2 Discussion of SVID

We motivate the SVID objective of Eq. (10) as a modification of Eq. (6). From Eq. (6), we observe that we can expand the self-regularization as

We observe that the squared error is implicitly included in , and therefore, modulo a rescaling of the regularization parameter , the squared error is not necessary in . We also observe that the squared error term is between and the output using . Since the noise in and are assumed zero mean and independent, we can view this as an adversarial term, since it is not possible to model noise in based on the noise in , and therefore the network defaults to their shared structure.

In Eq. (3.2), we need the second noisy image . We observe that if is synthesized by Eq. (9), then naturally has some desirable properties. Specifically,

1. Some image details may remain in . These details can be destroyed by multiplying with a random mask , which makes a more natural-looking noise (see Figure LABEL:fig_cat).

2. leads to . Therefore, the noise of meets the zero-mean assumption.

3. Since is composed of , the statistical characteristics of and should be similar, as shown in Figure 4. In addition, is random, so . This further leads to . If , self-verification Eq. (10) is meaningless.

| Test noise level | BM3D | NLH | DIP | N2V | S2S | Nb2Nb | N2N | U-Net | SVID | |

|---|---|---|---|---|---|---|---|---|---|---|

| Gaussian | 30.90 | 30.31 | 29.99 | 30.56 | 29.63 | 31.07 | 31.62 | 31.81 | 31.50 | |

| 31.69 | 31.77 | 29.66 | 31.88 | 27.76 | 32.25 | 33.27 | 33.44 | 32.97 | ||

| Speckle | 26.64 | 25.18 | 26.73 | 28.40 | 27.60 | 28.89 | 31.13 | 31.49 | 29.73 | |

| 26.70 | 26.10 | 27.06 | 28.77 | 27.53 | 29.29 | 31.39 | 31.86 | 30.10 | ||

| Poisson | 27.70 | 28.49 | 28.77 | 29.78 | 29.23 | 29.97 | 30.91 | 31.09 | 30.41 | |

| 27.23 | 26.12 | 27.98 | 28.94 | 28.01 | 29.03 | 30.25 | 30.44 | 29.49 |

Our method uses a stop-gradient operation to avoid trivial solutions. We next discuss one hypothesis on why SVID is practically effective, as our experiments will show. In our method, we use the network’s output and the adaptively synthesized to learn denoising, hoping that the learned knowledge can adapt to the real noisy data . SVID is an EM-like algorithm. It implicitly involves two sets of variables (i.e. and ), and solves two underlying sub-problems. The presence of the stop-gradient is the consequence of introducing the extra set of variables.

We re-express the objective function of SVID in the following form,

| (12) |

Note that Eq. (12) is consistent with the objective function in Eq. (10). For clarity, we use to denote . With this formulation, we consider solving:

| (13) |

The problem in Eq. (13) can be solved by an alternating algorithm, fixing one set of variables and solving for the other set. At each training step , we randomly sample a noisy image from the given noisy dataset . Then, alternately solve the two sub-problems:

| (14) |

| (15) |

where “” means assigning.

Solving for . There is no optimization in this part, but simply a redefinition of the term to be the output of the neural network on . Moreover, previous work on DIP has revealed that the CNN naturally tends to capture low-level image statistics. Therefore, the similarity between and will gradually increase.

Solving for . is constructed by Eq. (9) using . The more similar is to , the closer the noise statistics of and are (see Figure 4 for illustration). The denoising network is improved by solving Eq. (15). is fixed in this subproblem, so the gradient does not backpropagate to .

In short, one output of the network is obtained at training step , which serves as a “prior” target for denoising. contains the low-level image statistics required to restore a clean image. Therefore, we use as a label to improve the denoising ability of the network. This leads to the network to produce a better prior at time . By solving the two sub-problems alternately at each training step, approaches the true underlying .

3.3 Training Details

The denoising CNN in SVID is a simple U-Net [19]. We use PyTorch and Adam [10] with a batch size of 1 to train the network. The training images are randomly cropped into patches before being input to the network. The learning rate is fixed to for the first iterations and linearly decays to 0 for the next iterations.111We will include a reference here to our code and datasets. After training, the CNN with fixed parameters is directly applied to other noisy images.

4 Experiments

We evaluate SVID on various denoising tasks.

4.1 Synthetic Noise

We collected 4744 images from the Waterloo Exploration Database [15] to synthesize noisy images for training. Several state-of-the-art denoising methods are adopted for performance comparison, including model-based methods BM3D [4] and NLH [8], self-learning methods DIP [23], Noise2Void(N2V) [11], Self2Self(S2S) [18] and Neighbor2Neighbor(Nb2Nb) [9], other deep learning methods include Noise2Noise(N2N) [13] and a common fully-supervised U-Net. U-Net is trained with noisy/clean image pairs, while N2N uses paired noisy/noisy images. For the sake of fairness, N2N, U-Net and our SVID adopt the same network architecture to perform denoising. BSD300 [16] is the test set of the following experiments.

Gaussian noise. We first study the effectiveness of SVID on additive Gaussian noise. Each training example is corrupted by zero-mean Gaussian noise with a random standard deviation . For testing, we synthesize two sets of noisy images, using a fixed noise level and a variable . We present the quantitative results in Table 1. Visual comparisons can be found in Figure 5. In addition, Figure 4 shows the statistical histograms of the noise removed by SVID during training.

Speckle noise. Multiplicative speckle noise is signal dependent and is often observed in medical ultrasonic images and radar images. The speckle noise in the image is modeled as the random value multiplied by the pixel value of the latent signal , which can be expressed as . In this model, is an uniform noise with a mean of and a variance of . We vary the noise variance during training. The results and the comparisons of denoising on Speckle noise can be seen in Table 1 and Figure 6.

Poisson noise. In our third experiment we consider Poisson noise, which can be used to model photon noise in imaging sensors. Poisson noise is also signal-dependent because its expected magnitude depends on the pixel brightness. We randomize the noise magnitude separately for each training example. Quantitative and subjective comparisons are reported in Table 1 and Figure 7, respectively.

Discussion. In the above comparisons, SVID shows excellent denoising results both subjectively and quantitatively. These results demonstrate that the output of the denoising network can be a prior for denoising, as this paper conjectures. The denoising performance of SVID outperforms other self-supervised methods (i.e. DIP, N2V, S2S, Nb2Nb) by a large margin, which confirms the effectiveness of self-verification. In SVID, the denoising ability of the network can be improved by self-verification. In addition, SVID is better than model-based methods BM3D and NLH. This is because the deep image prior captured by the network can better model the properties of natural images than the traditional non-local self-similarity prior. We also noticed that SVID is inferior to supervised methods U-Net and N2N, which is reasonable considering that our SVID has no access to paired data for supervision. In short, our SVID consistently exhibits encouraging denoising performance for various types of noise. The denoised images are clean and sharp. More importantly, SVID does not rely on paired data or pre-known noise statistics, which shows its potential value for many practical applications.

| Noisy | U-Net | SVID | |

|---|---|---|---|

| SSIM | 0.766 | 0.827 | 0.813 |

| PSNR | 22.61 | 28.56 | 27.20 |

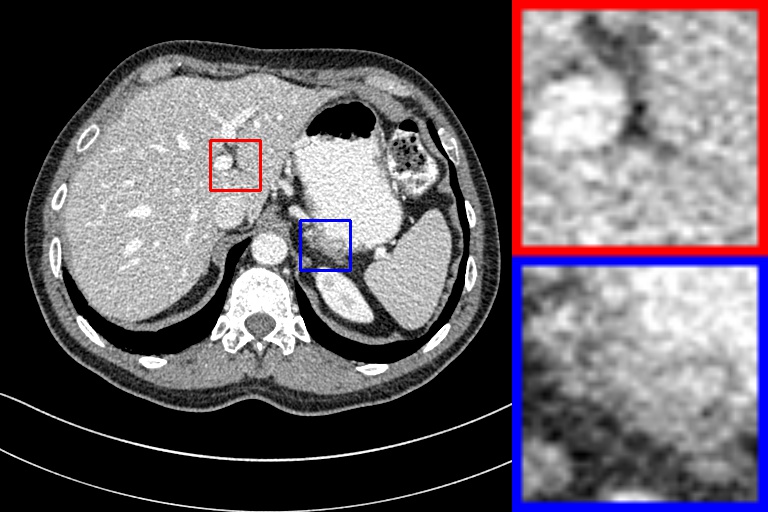

4.2 Low-Dose CT Denoising

Computed tomography (CT) is a popular imaging modality in clinical diagnosis. Despite the healthcare benefits, the extensive use of CT has caused public concern about the potential risks of X-ray radiation. Reducing radiation dose is an effective way to deal with this problem. However, a decrease of radiation dose is associated with an increase of noise and artifacts in the reconstructed image, which may adversely affect subsequent diagnosis. Therefore, denoising is a critical post-processing step for low-dose CT images [21]. Since the noise statistics in CT images are very complicated and paired training data is difficult to obtain, the self-supervised denoising methods can better cater to low-dose CT images. We further evaluate SVID in the task of low-dose CT denoising.

We use a real clinical dataset authorized for the 2016 NIH-AAPM-Mayo Clinic LDCT Grand Challenge by Mayo Clinic.222https://www.aapm.org/GrandChallenge/LowDoseCT/ This dataset contains 5946 pairs of normal-/low- dose images with a slice thickness of 1mm. We randomly select 5000 pairs of images as the training set, and the rest as the test set. We compare SVID with a fully supervised U-Net. Note that SVID only uses low-dose CT images to learn denoising without the normal-dose images. The comparison results are reported in Figure 8 and Table 2. As can be seen, our SVID is also effective for noise in CT. SVID is successful in removing the majority of noise and restoring high-quality image details. Its performance is close to the fully supervised U-Net.

4.3 Stop-gradient

Our SVID employs a Siamese network to perform self-verification. An undesired trivial solution to Siamese networks is all outputs collapse to a constant. There are several strategies to prevent Siamese networks from collapsing, such as contrastive learning, clustering and stop-gradients [3]. In SVID, the stop-gradient operation is a natural consequence of introducing an extra variable. Here, our aim is to show that the stop-gradient is critical to preventing SVID from collapsing.

Figure 9 presents a comparison with and without using the stop-gradient. Without stop-gradients, all outputs of the denoising network collapse to 0 and the objective function Eq. (12) reaches its minimum of 0. With stop-gradients, the objective function Eq. (12) implicitly constrains the removed noise to be zero-mean. Therefore, the denoised image is prevented from collapsing to 0. Moreover, the deep CNN tends to capture low-level image statistics, which promotes the denoised image to look like a clean natural image.

5 Conclusion

We have demonstrated that the output of a neural network can be a prior for image denoising. Based on this prior, we developed a new self-verification regularization where even without any clean data, the network can learn to denoise in a self-supervised manner. We demonstrated the effectiveness and broad applicability of the proposed method on several denoising tasks involving different noise properties and data types. We believe that this study hints at the advantage of exploring the use of CNNs to generate deep image priors for various CV tasks.

References

- [1] J. Batson and L. Royer. Noise2self: Blind denoising by self-supervision. In ICML, 2019.

- [2] A. Buades, B. Coll, and J.-M. Morel. A non-local algorithm for image denoising. In CVPR, 2005.

- [3] X. Chen and K. He. Exploring simple siamese representation learning. In CVPR, 2021.

- [4] K. Dabov, A. Foi, V. Katkovnik, and K. Egiazarian. Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Transactions on Image Processing, 16(8):2080–2095, 2007.

- [5] W. Dong, G. Li, G. Shi, X. Li, and Y. Ma. Low-rank tensor approximation with laplacian scale mixture modeling for multiframe image denoising. In ICCV, 2015.

- [6] J.-B. Grill, F. Strub, F. Altché, C. Tallec, P. H. Richemond, E. Buchatskaya, C. Doersch, B. A. Pires, Z. D. Guo, M. G. Azar, et al. Bootstrap your own latent: A new approach to self-supervised learning. In NeurIPS, 2020.

- [7] S. Gu, L. Zhang, W. Zuo, and X. Feng. Weighted nuclear norm minimization with application to image denoising. In CVPR, 2014.

- [8] Y. Hou, J. Xu, M. Liu, G. Liu, L. Liu, F. Zhu, and L. Shao. Nlh: A blind pixel-level non-local method for real-world image denoising. IEEE Transactions on Image Processing, 29:5121–5135, 2020.

- [9] T. Huang, S. Li, X. Jia, H. Lu, and J. Liu. Neighbor2neighbor: Self-supervised denoising from single noisy images. In CVPR, 2021.

- [10] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- [11] A. Krull, T.-O. Buchholz, and F. Jug. Noise2void-learning denoising from single noisy images. In CVPR, 2019.

- [12] S. Laine, T. Karras, J. Lehtinen, and T. Aila. High-quality self-supervised deep image denoising. In NeurIPS, 2019.

- [13] J. Lehtinen, J. Munkberg, J. Hasselgren, S. Laine, T. Karras, M. Aittala, and T. Aila. Noise2noise: Learning image restoration without clean data. In ICML, 2018.

- [14] D. Liu, B. Wen, Y. Fan, C. C. Loy, and T. S. Huang. Non-local recurrent network for image restoration. In NeurIPS, 2018.

- [15] K. Ma, Z. Duanmu, Q. Wu, Z. Wang, H. Yong, H. Li, and L. Zhang. Waterloo exploration database: New challenges for image quality assessment models. IEEE Transactions on Image Processing, 26(2):1004–1016, 2016.

- [16] D. Martin, C. Fowlkes, D. Tal, and J. Malik. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In ICCV, 2001.

- [17] D. Meng and F. De La Torre. Robust matrix factorization with unknown noise. In ICCV, 2013.

- [18] Y. Quan, M. Chen, T. Pang, and H. Ji. Self2self with dropout: Learning self-supervised denoising from single image. In CVPR, 2020.

- [19] O. Ronneberger, P. Fischer, and T. Brox. U-net: Convolutional networks for biomedical image segmentation. In MICCAI, 2015.

- [20] I. Selesnick. Total variation denoising via the moreau envelope. IEEE Signal Processing Letters, 24(2):216–220, 2017.

- [21] H. Shan, A. Padole, F. Homayounieh, U. Kruger, R. D. Khera, C. Nitiwarangkul, M. K. Kalra, and G. Wang. Competitive performance of a modularized deep neural network compared to commercial algorithms for low-dose ct image reconstruction. Nature Machine Intelligence, 1(6):269–276, 2019.

- [22] E. P. Simoncelli and E. H. Adelson. Noise removal via bayesian wavelet coring. In ICIP, 1996.

- [23] D. Ulyanov, A. Vedaldi, and V. Lempitsky. Deep image prior. In CVPR, 2018.

- [24] J. Xu, L. Zhang, and D. Zhang. A trilateral weighted sparse coding scheme for real-world image denoising. In ECCV, 2018.

- [25] J. Yao, D. Meng, Q. Zhao, W. Cao, and Z. Xu. Nonconvex-sparsity and nonlocal-smoothness-based blind hyperspectral unmixing. IEEE Transactions on Image Processing, 28(6):2991–3006, 2019.

- [26] Z. Yue, H. Yong, Q. Zhao, D. Meng, and L. Zhang. Variational denoising network: Toward blind noise modeling and removal. In NeurIPS, 2019.

- [27] Z. Yue, Q. Zhao, L. Zhang, and D. Meng. Dual adversarial network: Toward real-world noise removal and noise generation. In ECCV, 2020.

- [28] J. Zbontar, L. Jing, I. Misra, Y. LeCun, and S. Deny. Barlow twins: Self-supervised learning via redundancy reduction. arXiv preprint arXiv:2103.03230, 2021.

- [29] K. Zhang, W. Zuo, Y. Chen, D. Meng, and L. Zhang. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Transactions on Image Processing, 26(7):3142–3155, 2017.

- [30] K. Zhang, W. Zuo, and L. Zhang. Ffdnet: Toward a fast and flexible solution for cnn-based image denoising. IEEE Transactions on Image Processing, 27(9):4608–4622, 2018.

- [31] Y. Zhang, K. Li, K. Li, G. Sun, Y. Kong, and Y. Fu. Accurate and fast image denoising via attention guided scaling. IEEE Transactions on Image Processing, 30:6255–6265, 2021.

- [32] Y. Zhang, K. Li, K. Li, B. Zhong, and Y. Fu. Residual non-local attention networks for image restoration. In ICLR, 2019.

- [33] Y. Zhang, Y. Tian, Y. Kong, B. Zhong, and Y. Fu. Residual dense network for image restoration. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(7):2480–2495, 2020.

- [34] Q. Zhao, D. Meng, Z. Xu, W. Zuo, and L. Zhang. Robust principal component analysis with complex noise. In ICML, 2014.