Self-Supervised Side-Tuning for Few-Shot Segmentation

Abstract

The IJCAI–PRICAI–20 Proceedings will be printed from electronic manuscripts submitted by the authors. The electronic manuscript will also be included in the online version of the proceedings. This paper provides the style instructions.

1 Introduction

While matching unseen objects across modalities appears effortless for human vision, it is extremely challenging for computer vision. When new categories are added or replaced in tasks such as classification, detection and segmentation, it takes not only time to collect and label a large amount of data, but also time to retrain the corresponding model. So it is very necessary to study how to design the model so that it can quickly learn the corresponding features from few number of samples, which is the few-shot learning problem. This is especially significant for segmentation tasks, because it takes much more time to finely assign pixel level labels to a single image than bounding box anotations or image-level tags. In this case, few-shot segmentation method can effectively reduce the overall cost and improve the flexibility of segmentation models.

Few-shot segmentation task aims at minimize generalization error across different episodes in which few samples and corresponding labels (we call it support setting) of an unseen class are provided to segment other images (we call it query images) of the same class. Existing methods generally follow the steps below. First, the query and support images are respectively projected into the same feature space, and then the support mask information is applied to select the features of the corresponding category in the support setting. Guided by the learnable similarity metric function, the approximate spatial position of the corresponding object in the query image is located and finally refined by the decoder.

However, we find that the existing methods are not good enough even to segment the support image itself under the guidance of corresponding support setting in many cases, which is the simplest special case in the few-shot segmentation task. The representative texture or spatial features extracted from the support image and mask are not discriminative due to the previously unseen and sparse number of categories in each episode. In the absence of such valid semantic information, it is difficult to identify the support image itself, let alone objects of the same category in other scenarios (query images).

To make up for this, we applied a self-supervised side-tuning framework for few-shot segmentation to provide personalized prior parameters for each new task. Specifically, before query-support similarity comparision in each episode, we use the support setting to construct an online self-supervised segmentation task, in which the initial support feature is used as the inputs to both branches. The resulting loss gradient provides information about how to segment itself better and dynamically adjust the spatial texture characteristics of the corresponding class, thus guiding the query image to locate the spatial position with more sufficient semantics. Moreover, the self-supervised module we designed is exactly the subtask of the few-shot segmentation mentioned above. While using the self-supervised module to guide the segmentation, we also directly add the resulting loss to the final main segmentation loss to promote the update of whole model. This subtask loss is equivalent to a data enhancement to few samples and then constructing more training content with the same number of training iterations. Our experimental results on both Pascal-5i and COCO datasets achieve the state of the art. Furthermore, the visual analysis of SSM modules and subtask loss also proves their superiority.

Our main contributions are as follows:

1.We design a self-supervised side-tuning suitable for few-shot segmentation to help the model dynamically adjust its inductive bias in different episodes.

2.The self-segmentation subtask is introduced into the training process to assist the network to learn better feature representation and similarity function.

3.We achieved the state of the art in both 1-shot and 5-shot few-shot segmentation task.

2 Related Work

Few-shot learning In the computer vision community, one-shot learning has recently received a lot of attention and substantial progress has been made based on deep learning. Generally, these methods can be devided into three categories. Metric-based method aims at learning the best feature representation for new categories with few samples and finding a suitable comparision method such as cosine or Euclidean distance. Unlike the above methods that focus on the projection and metric of two branches, optimization-based method optimizes network weights quickly based on the backpropagation of gradient. The model-agnostic meta-learning (MAML) and Reptile algorithm are proposed to obtain the best global initial seeds such that a small number of gradient updates will lead to fast learning on a new task. Model-based method mainly utilizes the internal architecture of the network (such as memory module, meta-learner, etc.) to realize the rapid parameter update in new categories. In the latest study, A, B introduce machine learning methods such as SVM and ridge regression into the inner loop of the base learner, and C directly replace the inner loop with neural network structure in an encoded-decode form. This method is widely influenced by meta-learning and has achieved the state-of-art performance in one-shot classification task at present. Our model is inspired by the ideas of model-based methods.

Semantic Segmentation Semantic segmentation is an important task in computer vision and FCNs have greatly promoted the development of the field. Among them, DeepLabV3 and PSPNet propose different global contextual modules, which pay more attention to the scale change and global information in the segmentation process. A and B consider the full-image dependencies from all pixels based on Non-local Networks, which show superior performance in terms of reasoning. We draw ideas from these methods and apply them to one-shot segmentation problem.

Few-shot Semantic Segmentation While the work on one-shot learning is quite extensive, the research on one-shot segmentation has been established only recently, including one-shot image segmentation and one-shot video segmentation. shaban2017one first proposes the definition and task of one-shot segmentation. Following this, A solves the problem with sparse pixelwise annotations, and then extends their method to interactive image segmentation and video object segmentation. B generalizes the few-shot semantic segmentation problem from 1-way (class) to N-way (classes). C introduces an attention mechanism to effectively fuse information from multiple support examples and propose an iterative optimization module to refine the predicted results. D process both support and query images within one network with similarity guidance and adaptive masked proxies respectively. E and F leverage the annotations of the support images as supervision in different ways. Different from them, we pay more attention to the contextual dependencies reasoning between the query and support images.

3 Problem Description

We define a input triple , a label and a relation function , where and are the query and support images containing objects of the same class i correspondingly, and are the pixel-wise labels corresponding to the class objects in and , is the actual segmentation result, and is all parameters to be optimized in function F. Our task is to randomly sample triples from the dataset, train and optimize , thus minimizing the loss function :

| (1) |

We expect that the relationship function can segment the same class object region in another target image each time it sees a reference image of a new class. This is the embodiment of the meaning of one-shot segmentation. It should be mentioned that the classes sampled by the test set are not present in the training set, that is, . The relation function in this problem is implemented by the model detailed in Section.

4 Method

4.1 Model Overview

Different from other solutions of few-shot segmentation, we adopt an optimization-based meta-learning model as a whole. The model is mainly composed of a base-learner that quickly adapts to the inductive bias of new types of data and a meta-learner that learns the universal representation and two-branch fusion method. These two modules will be explained in detail in the next two subsections. In this subsection, we mainly represent the whole model mathematically, and the symbolic representation is consistent with the 3.

As shown in Figure 2, a Siamese network is first used to extract the features of two branch input images. Since query and support images belong to the same category in few-shot segmentation tasks, sharing parameters not only promotes optimization but reduces the amount of calculation. At this step, the preliminary feature representations and are obtained as follows:

| (2) | |||

| (3) |

Here is the learnable parameter of the sharing encoder.

To enable the model to extract the personalized features of the new type of images in each episode, we propose a base-learner suitable for few-shot segmentation tasks. We extract () the prior information which guides the new type in the support set, and adjust () the combination of preliminary feature representations accordingly:

| (4) |

Inspired by the Relation Network, our meta-learner consists of a learnable deep metric and a segmentation decoder . It compares the reorganization features, determines similar regions in query images, and upsamples to the original image size:

| (5) |

Similarly, and stand for the parameters of metric and decoding part. With continuous learning from different episodes, our meta-learner gradually improves the learning ability of metric, decoder and base-learner.

4.2 Self-Segmentation Base Learner

Considering that there are only two types of foreground and background pixels in the support image of the few-shot segmentation task and the proportion is imbalanced, if the base-learner used in the classification method is directly transplanted, it will easily lead to overfitting and large amount of calculation. Therefore, for the characteristics of the few-shot segmentation task, we regard the self-segmentation task of the support image as the base learner. That is, we see the condition where one image as both query and support input should can segment itself as a prerequisite for segmenting other images of the same type. Specifically, this prior from the base learner is in the form of the loss gradient information. We copy the features of the support image as the two inputs of 5 in 4.1 and calculate the standard cross-entropy loss () with the corresponding label:

| (6) |

Then we backpropagate the loss function for the gradient of the support feature and perform a gradient ascent to make the feature combination mode more suitable for the characteristics of this new type:

| (7) |

Note that only the feature representation is updated here and the network parameters are not updated.

4.3 Deep Learnable Meta Learner

Affected by the Relation Network, we use a deeply nonlinear metric to better measure the similarity between the two branches. At the same time, rakelly2018few proves that thd late fusion for images and masks is more suitable for few-shot segmentation tasks, so we use the deep metric with late fusion as the main body of meta-learner. First, we multiply the feature by the downsampling mask and pool it to obtain the representative feature of the foreground. This feature is then tiled to the original spatial scale, so that each position of the query feature is aligned with the representative feature ():

| (8) |

Then through the Relation Network comparison learning, the preliminary segmentation result of the query imagee is obtained:

| (9) |

Finally, we input it into the segmentation decoder, refine and restore the original image size to get accurate segmentation results.

4.4 Training Loss and Generalization to 5-shot Learning

In addition to the cross-entropy loss function of segmentation obtained by query-support setting commonly used in other methods (we call it the main loss), we also include the cross-entropy loss of support-support segmentation from base-learner (we call it subtask loss) into the final training loss as an auxiliary. In our method, the subtask loss itself is created as an intermediate process, so there is not much extra computation required. It can be seen from the experiment part that the auxiliary loss can accelerate the convergence and improve the training effect.

When generalizing to 5-shot segmentation, the main difference is the base-learner part. Considering that the number of samples for 5 images is small, if we calculate the gradient optimization together, it is easy to produce large heterosexuality. Therefore, we calculate the gradient optimization separately, get 5 preliminary segmentation results, and then take the average to get the final result, that is:

| (10) |

| +SSM | +SS Loss | i=0 | i=1 | i=2 | i=3 | mean |

|---|---|---|---|---|---|---|

| 49.6 | 62.6 | 48.7 | 48.0 | 52.2 | ||

| ✓ | 53.2 | 63.6 | 48.7 | 47.9 | 53.4 | |

| ✓ | 51.1 | 64.9 | 51.9 | 50.2 | 54.5 | |

| ✓ | ✓ | 54.4 | 66.4 | 54.2 | 52.5 | 56.9 |

| +SSM | +SS Loss | i=0 | i=1 | i=2 | i=3 | mean |

| 68.6 | 72.6 | 53.3 | 64.6 | 0 | ||

| ✓ | 69.4 | 73.7 | 53.5 | 64.9 | 0 | |

| ✓ | 70.8 | 77.5 | 59.0 | 68.2 | 54.5 | |

| ✓ | ✓ | 0 | 0 | 0 | 0 | 0 |

| +SSM | +SS Loss | i=0 | i=1 | i=2 | i=3 | mean |

|---|---|---|---|---|---|---|

| 53.2 | 66.5 | 51.5 | 51.4 | 55.7 | ||

| ✓ | 56.6 | 67.2 | 56.4 | 54.0 | 58.6 | |

| ✓ | 56.8 | 68.7 | 58.4 | 55.0 | 59.7 | |

| ✓ | ✓ | 58.6 | 68.7 | 58.5 | 55.3 | 60.3 |

| +SSM | +SS Loss | i=0 | i=1 | i=2 | i=3 | mean |

|---|---|---|---|---|---|---|

| 69.6 | 75.7 | 51.5 | 67.3 | 66.0 | ||

| ✓ | 72.3 | 76.9 | 56.5 | 68.2 | 68.5 | |

| ✓ | 0 | 0 | 0 | 0 | 0 | |

| ✓ | ✓ | 73.5 | 77.4 | 58.8 | 68.5 | 69.6 |

| Method | Backbone | i=0 | i=1 | i=2 | i=3 | mean |

| OSLSM | Vgg16 | 33.6 | 55.3 | 40.9 | 33.5 | 43.9 |

| SG-One | Vgg16 | 40.2 | 58.4 | 48.4 | 38.4 | 46.3 |

| PAnet | Vgg16 | 42.3 | 58.0 | 51.1 | 41.2 | 48.1 |

| FWBFS | Vgg16 | 47.0 | 59.6 | 52.6 | 48.3 | 51.9 |

| Ours | Vgg16 | 50.9 | 63.0 | 51.2 | 49.6 | 53.7 |

| CAnet | ResNet50 | 52.5 | 65.9 | 51.3 | 51.9 | 55.4 |

| FWBFS | ResNet101 | 51.3 | 64.5 | 56.7 | 52.2 | 56.2 |

| Ours | ResNet50 | 54.4 | 66.4 | 54.2 | 52.5 | 56.9 |

| Method | Backbone | i=0 | i=1 | i=2 | i=3 | mean |

| OSLSM | Vgg16 | 35.9 | 58.1 | 42.7 | 39.1 | 43.8 |

| SG-One | Vgg16 | 41.9 | 58.6 | 48.6 | 39.4 | 47.1 |

| PAnet | Vgg16 | 51.8 | 64.6 | 59.8 | 46.5 | 55.7 |

| FWBFS | Vgg16 | 50.9 | 62.9 | 56.5 | 50.1 | 55.1 |

| Ours | Vgg16 | 52.5 | 64.8 | 54.2 | 51.3 | 55.7 |

| CAnet | ResNet50 | 55.5 | 67.8 | 51.9 | 53.2 | 57.1 |

| FWBFS | ResNet101 | 54.8 | 67.4 | 62.2 | 55.3 | 59.9 |

| Ours | ResNet50 | 58.6 | 68.7 | 58.5 | 55.3 | 60.3 |

| Method | mean-IoU | FB-IoU | ||

| 1-shot | 5-shot | 1-shot | 5-shot | |

| A-MCG | - | - | 52 | 54.7 |

| PANet | 20.9 | 29.7 | 59.2 | 63.5 |

| FWBFS | 21.2 | 23.7 | - | - |

| Ours | 22.2 | |||

5 Experiment

5.1 Dataset and Settings

Dataset To evaluate the performance of our model, we experiment on Pascal- dataset, which was first proposed in shaban2017one and was recognized as the standard dataset in the field of one-shot segmentation in subsequent work. That is, from the set of 20 classes in Pascal dataset, we sample five and consider them as the test subsets , with i being the fold number (), and the remaining 15 classes form the training set . The specific class name is shown in Table. In the test stage, we randomly sample 1000 pairs of images. To be fair, we refer to the contents of test set in B.

Settings Like shaban2017one, we choose the per-class foreground Intersection-over-Union (IoU) and the average IoU over all classes (meanIoU) as the main evaluation indicator of our task. While the foreground IoU and background IoU (FB-IoU) is a commonly used indicator in the field of binary segmentation, it is also used by a small number of papers in the one-shot segmentation task. Because meanIoU can better measure the overall level of different classes and ignore the proportion of background, we show the results of meanIoU in all experiments and FB-IoU only in the comparision with some methods for reference.

5.2 Ablation Study

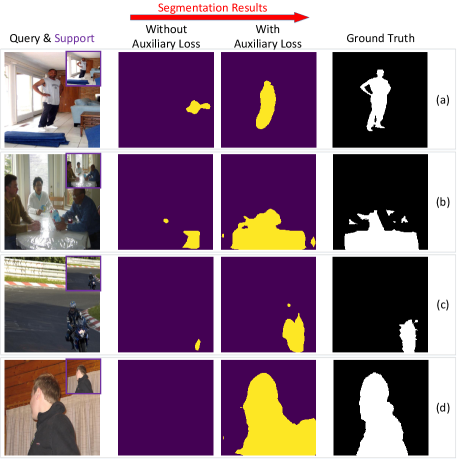

To prove the effectiveness of our architecture, We conduct several ablation experiments as shown in Table LABEL:tab:ablation_1shot and LABEL:tab:ablation_5shot. To improve efficiency, all models in the ablation study only choose ResNet50 as backbone for fair comparison. Our network is mainly attributed to two prominent components: SSM and Subtask Loss. The following will evaluate the efficacy of each and integration of both. We first train a baseline without the two modules, and then add them respectively to compare the results.

5.3 Comparsion with SOTA

To better assess the overall performance of our network, we compare it to SOTA in and datasets.

5.4

5.5

5.6 Analysis

5.6.1 Function of Pretext Task

5.6.2 Importance of Subtask Loss

mask for self-seg

6 Conclusion

Acknowledgments

The preparation of these instructions and the LaTeX and BibTeX files that implement them was supported by Schlumberger Palo Alto Research, AT&T Bell Laboratories, and Morgan Kaufmann Publishers. Preparation of the Microsoft Word file was supported by IJCAI. An early version of this document was created by Shirley Jowell and Peter F. Patel-Schneider. It was subsequently modified by Jennifer Ballentine and Thomas Dean, Bernhard Nebel, Daniel Pagenstecher, Kurt Steinkraus, Toby Walsh and Carles Sierra. The current version has been prepared by Marc Pujol-Gonzalez and Francisco Cruz-Mencia.