Self-Supervised Learning for Effective Denoising of Flow Fields

Abstract

In this study, we proposed an efficient approach based on a deep learning (DL) denoising autoencoder (DAE) model for denoising noisy flow fields. The DAE operates on a self-learning principle and does not require clean data as training labels. Investigations into the denoising mechanism of the DAE revealed that its bottleneck structure with a compact latent space enhances denoising efficacy. We also developed a deep multiscale DAE for denoising turbulent flow fields. For comparison, conventional noise filters were applied to the same flow fields, and their results were compared with those of the DL method. The effectiveness of the proposed DL models was evaluated using direct numerical simulation data of laminar flow around a square cylinder and turbulent channel flow data at various Reynolds numbers. For every case, synthetic noise was added to the data. A separate experiment used particle-image velocimetry data of laminar flow around a square cylinder containing real noise to test DAE denoising performance. Instantaneous contours and flow statistics were used to verify the agreement between the denoised data and the ground truth. The findings confirmed that the proposed method could effectively denoise noisy flow data, including turbulent flow scenarios. Moreover, the proposed method generalized well, removing noise of various types and intensities.

I Introduction

Understanding, modeling, and controlling fluid flow is crucial in various fields, such as energy production, aerospace engineering, and weather prediction. However, the fluid dynamics in these fields are complex. Turbulent flow is a particularly challenging problem because of its highly nonlinear and chaotic behavior. In most situations, large amounts of accurate data are required for fluid flow visualization and statistical analysis. Many efficient data generation methods have been developed in both experimental and computational fluid dynamics (CFD). In CFD, direct numerical simulation (DNS) has been used to accurately simulate various cases of fluid flows by solving the Navier–Stokes equations[1, 2, 3]. In experiments, particle-image velocimetry (PIV) has been applied to visualize and quantify the complex instantaneous structure of fluid flows[4]. Advanced derivatives of PIV, such as tomographic PIV[5] and thermographic PIV[6], are used to measure three-dimensional flow and temperature fields. However, the accuracy of PIV measurements is limited by white noise arising from sources such as mechanical vibration, inadequate illumination, background speckle, and optical problems caused by the inevitable errors of the experimental setup[7]. Noise reduces the signal-to-noise ratio (SNR) and the accuracy of PIV measurements, especially when measuring the small-scale velocity fluctuations of turbulent flows.

Several denoising methods, including energy, convolution, wavelet, and optimal Wiener filters, have been proposed to increase PIV measurement accuracy[8, 9]. In addition to filter-based denoising methods, data-driven approaches, such as proper orthogonal decomposition[10, 11] and dynamic mode decomposition[12], achieve excellent resolution reconstruction and noise elimination [13, 7]. Because of their linear nature, these methods exhibit a limited capacity for denoising the complex fluid flow with high nonlinearities and multiple spatiotemporal scales[14].

Deep learning (DL) algorithms, a subset of machine learning (ML), are increasingly being used in various domains[15]. DL is widely adopted in fluid dynamics because it effectively handles highly nonlinear mappings[14]. In particular, DL can be used to address challenges related to turbulent flow problems, such as the temporal data generation of turbulent flow[16, 17], reconstruction of flow fields[18, 19, 20, 21], and prediction of flow field parameters[22, 23].

Using DL algorithms for data denoising is a critical topic of research. In computer vision studies, denoising DL models have been extensively investigated, especially for image denoising[24]. However, most DL denoising models are based on supervised learning, which requires paired clean and noisy data for training. Supervised DL methods are not always feasible in fluid dynamics problems because paired data are not always available. Therefore, unsupervised learning with unpaired datasets is an excellent alternative for reconstructing fluid flow fields. The generative adversarial network (GAN) proposed by Goodfellow et al.[25] is a well-known unsupervised learning algorithm. Building on GANs, Kim et al.[26] applied an unsupervised cycle-consistent GAN (CycleGAN) model to the super-resolution reconstruction of turbulent flows. However, although the low-resolution (LR) and high-resolution (HR) datasets are not paired in the training process, HR data are still required.

Physics-informed neural networks (PINNs)[27] have become a critical topic of interest. PINNs are function approximators that embed the physical laws governing a given dataset, expressed as partial differential equations, in the learning process. Fathi et al.[28] proposed a physics-informed DL method for processing 4D-flow MRI to increase the spatiotemporal resolution and reduce noise. This method used a deep neural network to approximate the underlying flow field. Similarly, Gao et al.[29] proposed a physics-informed DL solution for the spatial super-resolution of flow fields. By using the physical laws and boundary conditions of the fluid flow, training the DL model requires only LR samples rather than their HR counterparts as labels. After adequate training, the DL model could spatially reconstruct the flow field. Here, a noisy LR field was input in the parameter space. However, the limitations of PINNs cannot be neglected. For example, PINNs cannot effectively propagate information from initial or boundary conditions to latent parts of the domain or to future times, particularly in large computational domains such as unsteady turbulent flow[30, 31]. Therefore, PINNs can be weak in efficiently solving complex turbulent flow problems.

Reinforcement learning (RL), another subset of the ML method in which an agent is trained to make decisions through interaction with the environment, achieves excellent results in robotics, game playing, natural language processing, and computer vision problems[32]. This learning approach renders deep RL (DRL) a viable method for addressing various challenges within fluid dynamics, such as active flow control [33, 34], design optimization[35], and CFD[36]. Yousif et al.[37] proposed a physics-constrained DRL (PCDRL) to correct flow fields from noisy data. The momentum equation, pressure Poisson equation, and boundary conditions were used to calculate the reward function. Using the physics-based reward function, the agent can determine the most suitable filter to denoise the value for the points in the flow field. Using physical constraints, PCDRL was data-free without using clean data as the target.

However, these physics-constrained and physics-informed methods have a common limitation: all flow field parameters are required to solve the governing equations. Therefore, they cannot solve certain cases, particularly unsteady turbulent flows in which two-dimensional (2D) data are derived from three-dimensional (3D) data, such as 2D experimental data obtained through PIV. This study developed an efficient method for fluid flow denoising without clean data requirements. This method is suitable for denoising noisy turbulent flow data.

This study revealed that a bottleneck-shaped autoencoder neural network with a small latent space exhibits excellent flow field denoising ability. The autoencoder is a self-supervised DL model with the same input and target data. Thus, this method does not require clean data. This study investigated the mechanism by which the bottleneck-shaped autoencoder denoises data. To evaluate denoising performance, noisy data of laminar flow around a square cylinder were considered. Next, a deep multiscale DAE (DMDAE) was developed to denoise 2D sections of noisy turbulent channel flow. Here, the data of the flow cases were generated by DNS, and noise was added synthetically. Finally, noisy PIV data of flow over a square cylinder were used to check the denoising ability of the proposed DAE model on real experimental data.

The remainder of this paper is organized as follows. Sec. II introduces DNS data generation, the methods for adding synthetic noise, and the experimental data generation used in this study. Sec. III details the proposed models. Sec. IV presents the results for all cases. Finally, Sec. V presents the conclusions.

II Generation of the data set

II.1 DNS data and synthetic noise

This study used two DNS flow data sets with synthetic noise to evaluate the DL models. First, the 2D laminar flow around a square cylinder (bluff body flow) at $Re$ = 100 was used as a demonstration case. The turbulent channel flow at $Re_\tau$ = 180, 395, and 550 was used for subsequent denoising performance tests of the DMDAE.

In the bluff body flow, the simulation domain size was set to $L_x \times L_y$ = 20 × 15, where $x$ and $y$ are the streamwise and spanwise directions, respectively. The corresponding grid size was 381 × 221. A stretched mesh provided local refinement near the cylinder walls. A uniform inlet velocity and a pressure outlet boundary condition were applied at the inlet and outlet of the domain, respectively. Furthermore, no-slip boundary conditions were applied at the cylinder walls, and symmetry-plane conditions were applied at the sides of the domain. The dimensionless time step of the simulation, , was set to . The DNS data used in this study were cropped around the edges and interpolated onto a uniform grid, where the new domain size is $L_x \times L_y$ = 17 × 8 and the uniform grid size is 256 × 128. Moreover, the interval between the collected snapshots of the flow fields is ten times the simulation time step. A total of 1200 snapshots were collected and used.

For the turbulent channel flow, three friction Reynolds numbers, namely $Re_\tau = u_\tau \delta / \nu$ = 180, 395, and 550, were used. Here, $u_\tau$ is the friction velocity, $\delta$ is half of the channel height, and $\nu$ is the kinematic viscosity. Table 1 lists the simulation parameters for each $Re_\tau$. Periodic boundary conditions were used in the streamwise and spanwise directions. Moreover, the no-slip condition was applied to the upper and lower walls of the channel. The turbulence statistics obtained from the DNS were validated by comparing them with the results from reference papers[2, 3].

Training and testing data were collected from the – plane of the 3D channel flow domain. The flow data at $Re_\tau$ = 395 and 550 were interpolated to match the grid size of the DNS data for the flow at $Re_\tau$ = 180, which features 128 and 256 grids in the - and -directions, respectively. This data processing ensures that the flow at all three Reynolds numbers can be input into the DL model simultaneously.

In this study, three types of synthetic noise, namely Gaussian noise, salt-and-pepper noise, and speckle noise, were added to degrade the quality of all DNS data cases. For Gaussian noise, zero-mean random noise following $\mathcal{N}(0, \sigma^2)$ was added to the flow fields, as displayed in Figure 1 (a), where $\mathcal{N}$ and $\sigma^2$ are the normal distribution and variance, respectively. Salt-and-pepper noise data are generated by randomly adding the minimum and maximum values of the velocity components to the flow data, as displayed in Figure 1 (b). Speckle noise is a granular texture that degrades quality because of interference among wavefronts in coherent imaging systems, such as optical coherence tomography [38]. This study simplified speckle noise by assuming it exists only at the particle positions in the PIV system. Thus, artificial speckle noise is generated using the method displayed in Figure 1 (c). First, an artificial particle background with a particle density of 50%, imitating a real PIV measurement, was devised. Next, all pixels with particles (white) were assigned a coefficient of 1, whereas the black region without particles was assigned a coefficient of 0. Finally, speckle noise data were obtained by multiplying the background coefficient with Gaussian noise and adding the product to the flow data. The SNR represents the noise level. Here, SNR = $\sigma^2_{\mathrm{DNS}} / \sigma^2_{\mathrm{noise}}$, where $\sigma^2_{\mathrm{DNS}}$ and $\sigma^2_{\mathrm{noise}}$ are the variances of the DNS and noise data, respectively. This study defines the noise level as the reciprocal of the SNR, 1/SNR.
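The three noise models described above can be sketched in a few lines of NumPy. This is only a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, salt-and-pepper noise is interpreted as overwriting a random fraction of points with the field minimum or maximum, and for speckle noise the requested level applies to the Gaussian noise before it is multiplied by the particle mask.

```python
import numpy as np

def noise_variance_for_level(field, level):
    """Noise variance that yields 1/SNR = level, with SNR = var(field) / var(noise)."""
    return level * np.var(field)

def add_gaussian_noise(field, level, rng):
    """Add zero-mean Gaussian noise N(0, sigma^2) at the requested noise level."""
    sigma = np.sqrt(noise_variance_for_level(field, level))
    return field + rng.normal(0.0, sigma, size=field.shape)

def add_salt_and_pepper_noise(field, fraction, rng):
    """Assumption: overwrite a random fraction of points with the field min or max."""
    noisy = field.copy()
    corrupted = rng.random(field.shape) < fraction
    use_max = rng.random(field.shape) < 0.5
    noisy[corrupted & use_max] = field.max()
    noisy[corrupted & ~use_max] = field.min()
    return noisy

def add_speckle_noise(field, level, rng, particle_density=0.5):
    """Gaussian noise multiplied by a 0/1 particle mask, then added to the field."""
    mask = (rng.random(field.shape) < particle_density).astype(field.dtype)
    sigma = np.sqrt(noise_variance_for_level(field, level))
    return field + mask * rng.normal(0.0, sigma, size=field.shape)

def measured_noise_level(clean, noisy):
    """Noise level 1/SNR = var(noise) / var(clean)."""
    return np.var(noisy - clean) / np.var(clean)

# Synthetic stand-in for one velocity snapshot on a 128 x 256 grid.
rng = np.random.default_rng(0)
y = np.linspace(0.0, np.pi, 128)[:, None]
x = np.linspace(0.0, 2.0 * np.pi, 256)[None, :]
clean = np.sin(x) * np.cos(y)
gaussian = add_gaussian_noise(clean, 0.5, rng)
salt_pepper = add_salt_and_pepper_noise(clean, 0.1, rng)
speckle = add_speckle_noise(clean, 0.5, rng)
```

With a 50% particle density, the masked noise in this sketch carries roughly half the variance of the unmasked Gaussian noise, so a rescaling step would be needed if the speckle case must reach exactly the same 1/SNR.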

II.2 Configuration of PIV measurement

Noisy PIV data of flow over a square cylinder were generated in a return-type water channel and used in this study. The test section of the water channel measured 1 m (length) × 0.35 m (height) × 0.3 m (width). The free-stream velocity was set to 0.02 m s$^{-1}$, corresponding to $Re$ = 200. Background noise was generated at high levels because of external noise and the sparse honeycomb configuration of the water channel. Polyamide 12 seed particles (INTECH SYSTEMS) with a diameter of 50 μm were used to seed the channel. A high-speed camera (FASTCAM Mini UX 50) and a continuous laser with a 532 nm wavelength were used in the PIV system. The snapshot frequency was set to 24 Hz. In this experiment, a square cylinder model was constructed from an acrylic board, and the cross-section of the model was 1 × 1 cm. The model was not entirely transparent. Thus, a shadow region existed near the bluff body when the laser passed through the model.

III DL methodology

III.1 Denoising autoencoder

The autoencoder (AE) is a classical neural network extensively used for image classification, object detection, and natural language processing [30]. AEs have been applied to various problems in fluid dynamics, such as the super-resolution reconstruction of flow fields [39] and the prediction of turbulent dynamics [40]. In this study, the ability of the AE to denoise fluid flow is introduced. The denoising mechanism of the autoencoder was investigated by comparing networks with different structures. This comparison used 1000 and 200 snapshots of noisy bluff body flow data with Gaussian noise at 1/SNR = 0.5 as training and validation data, respectively. The training of the AE was based on self-supervised learning without clean label data, in which the input and the target were the same. Thus, the testing data were the same as the training data. Table 2 summarizes the comparison, which indicates that only a bottleneck-shaped AE built from convolutional neural network (CNN) layers with a small latent space can denoise the data. Such an AE is called a DAE.

| Network structure | Type of layers | Latent space size | Shape of network | Denoising ability |
| --- | --- | --- | --- | --- |
| Non-AE | Dense layers | / | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/4ad4a7e5-9bf4-4822-899e-1e018720f79c/x2.png) | No |
| Non-AE | CNN layers | / | | No |
| Bottlebody-shaped AE | CNN AE | 256 × 512 | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/4ad4a7e5-9bf4-4822-899e-1e018720f79c/x3.png) | No |
| Bottlebody-shaped AE | CNN AE | 512 × 1024 | | No |
| Bottlebody-shaped AE | CNN AE | 1024 × 2048 | | No |
| Bottleneck-shaped AE | Dense layers | 8 × 16 | ![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/4ad4a7e5-9bf4-4822-899e-1e018720f79c/x4.png) | No |
| Bottleneck-shaped AE | CNN AE | 64 × 128 | | No |
| Bottleneck-shaped AE | CNN AE | 32 × 64 | | No |
| Bottleneck-shaped AE | CNN AE | 16 × 32 | | Yes |
| Bottleneck-shaped AE | CNN AE | 8 × 16 | | Yes |
| Bottleneck-shaped AE | CNN AE | 4 × 8 | | Yes |

Figure 2 displays the structure of the DAE. Convolution and max-pooling layers make up the encoder of the DAE. The decoder is composed of convolution and upsampling layers. The number of channels of the convolution layers varies with the depth of the AE, becoming larger as the layers approach the latent space. Furthermore, noisy and clean data were passed separately through the DAE to extract the intermediate outputs of the individual convolution layers. As displayed in Figure 3, the noise level decreased progressively across the outputs of the convolution layers in the encoder. This behavior shows that the DAE encoder filters the noise like a funnel, which explains why only the bottleneck-shaped AE, and not the bottlebody-shaped AE, can denoise. When noisy data pass through the encoder, the pooling layers compress the data and reduce their size. The filters of the convolution layers are not sufficiently sensitive to capture high-frequency noise features. Thus, noise information cannot be preserved in the encoder. If the latent space is large and the noisy data are not compressed and filtered sufficiently, the noise information is retained and the AE loses its denoising ability. The noise level of the outputs around the latent space increased slightly because the intermediate data there are highly compressed and therefore sensitive to the 1/SNR calculation. The intermediate data in the decoder exhibited a low noise level. Finally, the denoised data were reconstructed.
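The funnel effect of spatial compression can be illustrated with a small numerical experiment. The sketch below is not the DAE itself: it assumes a 128 × 256 input array, replaces the learned convolution and max-pooling stages with plain 2 × 2 average pooling, and uses a smooth synthetic field, so it only shows how repeated downsampling lowers 1/SNR for spatially uncorrelated noise and how the latent sizes in Table 2 relate to the number of pooling stages. All function names are hypothetical.

```python
import numpy as np

def pool2x2(field):
    """2 x 2 average pooling (a stand-in for a learned conv + pooling stage)."""
    h, w = field.shape
    return field.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def latent_shape(input_shape, n_pool):
    """Spatial size after n_pool successive 2 x 2 pooling stages."""
    h, w = input_shape
    return (h // 2 ** n_pool, w // 2 ** n_pool)

def noise_level(clean, noisy):
    """Noise level 1/SNR = var(noise) / var(clean)."""
    return np.var(noisy - clean) / np.var(clean)

# Smooth synthetic field plus Gaussian noise at 1/SNR = 0.5.
rng = np.random.default_rng(0)
y = np.linspace(0.0, np.pi, 128)[:, None]
x = np.linspace(0.0, 2.0 * np.pi, 256)[None, :]
clean = np.sin(x) * np.cos(y)
noisy = clean + rng.normal(0.0, np.sqrt(0.5 * np.var(clean)), clean.shape)

# Track the noise level after each compression stage.
levels = []
c, n = clean, noisy
for stage in range(4):
    levels.append(noise_level(c, n))
    c, n = pool2x2(c), pool2x2(n)
```

Under these assumptions, three pooling stages reduce a 128 × 256 input to 16 × 32, the largest latent size in Table 2 for which the CNN AE denoises.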

III.2 Deep multiscale denoising autoencoder

The DAE in the previous subsection has a simple structure that can only denoise simple flow fields. Its performance in denoising turbulent flow was not satisfactory, because the DL model could not distinguish small eddies from noise and could not reconstruct the turbulent flow well. Thus, a more complex deep multiscale DAE (DMDAE) was developed. As shown in Figure 4, DMDAE features deep convolutional layers, enhancing its capability to capture features. Skip connections were used to avoid the vanishing gradient problem in the training process. Furthermore, the encoder and decoder have three branches with different filter sizes. This multiscale CNN strategy allows the convolutional layers to capture features at different scales [41].

This subsection also introduces the training and testing processes of DAE and DMDAE. Before training, all data were scaled to values between 0 and 1 by min–max normalization, using the minimum and maximum values of the average flow field. Considering DAE as an example, noisy data were passed into DAE to obtain output data. Next, the training loss was calculated from the output and the noisy input data using the mean square error loss function. After calculating the training loss, the optimization algorithm updated the weights of the DAE to minimize the loss throughout the training period. This study used the adaptive moment estimation (Adam) algorithm as the optimization algorithm [42]. Validation data were used to validate the DL model by calculating the validation loss. The weights were saved only if the validation loss decreased compared with the previous epoch. On completion of the training process, testing data (identical to the training data) were input into the trained DL model to obtain denoised flow data. This study used the open-source library TensorFlow 2.3.0 to implement the DL model. The customized sample Python code for the proposed models can be accessed on the website (https://fluids.pusan.ac.kr/fluids/65416/subview.do).
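The training procedure described above can be sketched end to end in NumPy. This is a deliberately simplified stand-in, not the TensorFlow implementation used in the study: a linear bottleneck autoencoder on flattened snapshots replaces the convolutional DAE, Adam is written out by hand, and "saving the weights" is interpreted as keeping the parameters with the best validation loss so far. All names and hyperparameters are assumptions chosen for illustration.

```python
import numpy as np

def minmax_from_mean_field(snapshots):
    """Minimum and maximum of the time-averaged field, used for scaling."""
    mean_field = snapshots.mean(axis=0)
    return mean_field.min(), mean_field.max()

def normalize(snapshots, lo, hi):
    """Min-max normalization with the limits of the average field."""
    return (snapshots - lo) / (hi - lo)

def forward(x, params):
    """Encode to the latent space, then decode back to the input size."""
    return (x @ params["enc"]) @ params["dec"]

def mse(a, b):
    return float(np.mean((a - b) ** 2))

def gradients(x, params):
    """Gradients of the MSE between the output and the noisy input itself."""
    z = x @ params["enc"]
    err = z @ params["dec"] - x  # self-supervised: the target is the input
    d_out = 2.0 * err / err.size
    return {"enc": x.T @ (d_out @ params["dec"].T), "dec": z.T @ d_out}

def train(train_x, val_x, latent=4, epochs=300, lr=1e-2, seed=0):
    rng = np.random.default_rng(seed)
    d = train_x.shape[1]
    params = {"enc": 0.1 * rng.standard_normal((d, latent)),
              "dec": 0.1 * rng.standard_normal((latent, d))}
    m1 = {k: np.zeros_like(p) for k, p in params.items()}  # Adam first moment
    m2 = {k: np.zeros_like(p) for k, p in params.items()}  # Adam second moment
    b1, b2, eps = 0.9, 0.999, 1e-8
    best_val, best_params = np.inf, None
    for t in range(1, epochs + 1):
        grads = gradients(train_x, params)
        for k in params:
            m1[k] = b1 * m1[k] + (1 - b1) * grads[k]
            m2[k] = b2 * m2[k] + (1 - b2) * grads[k] ** 2
            m_hat = m1[k] / (1 - b1 ** t)
            v_hat = m2[k] / (1 - b2 ** t)
            params[k] = params[k] - lr * m_hat / (np.sqrt(v_hat) + eps)
        val_loss = mse(forward(val_x, params), val_x)
        if val_loss < best_val:  # keep weights only when validation improves
            best_val = val_loss
            best_params = {k: p.copy() for k, p in params.items()}
    return best_params, best_val
```

A typical call would normalize the noisy snapshots with `minmax_from_mean_field` and `normalize`, split them into training and validation sets, call `train`, and then pass the same noisy data through `forward` with the returned parameters to obtain the reconstructed fields.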

IV Results and discussion

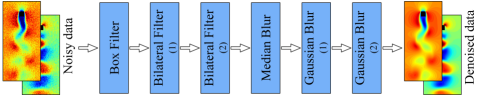

Four experiments were conducted to examine the denoising efficacy of the proposed self-supervised DL method. The first evaluation involved denoising bluff body flow data contaminated with Gaussian noise at various noise levels using DAE. The second evaluation focused on denoising bluff body flow data contaminated with three noise types, namely Gaussian, salt-and-pepper, and speckle, at a consistent noise level using DAE. In the third evaluation, DMDAE was used to remove noise from noisy channel flows at three Reynolds numbers. The fourth evaluation demonstrated the denoising of noisy PIV data. Furthermore, the proposed DL method was compared with a denoising method based on classical filters (CF). As displayed in Figure 5, CF contains several denoising filters, including one box filter, two bilateral filters, one median blur filter, and two Gaussian blur filters. Table 3 lists the parameter settings of the CF.

| Classical filters | Parameters |
| --- | --- |
| Box filter | Filter size = 5 × 5 |
| Bilateral filters (1) | Filter size = 5 × 5, = 0.1, = 5 |
| Bilateral filters (2) | Filter size = 5 × 5, = 1.0, = 5 |
| Median blur filter | Filter size = 5 × 5 |
| Gaussian blur filter (1) | Filter size = 5 × 5, $\sigma$ = 0.5 |
| Gaussian blur filter (2) | Filter size = 5 × 5, $\sigma$ = 1.5 |
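For readers unfamiliar with these filters, the box, Gaussian blur, and median filters with a 5 × 5 window can be sketched directly in NumPy. This is only an illustration, not the implementation used for the comparison: the helper names are hypothetical, edge-replicating padding is an assumption (the border handling is not stated in the text), and the bilateral filter is omitted.

```python
import numpy as np

def filter2d(field, kernel):
    """Apply a small symmetric kernel with edge-replicating padding (assumed)."""
    kh, kw = kernel.shape
    padded = np.pad(field, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros_like(field, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + field.shape[0], j:j + field.shape[1]]
    return out

def box_kernel(size=5):
    """Uniform averaging kernel."""
    return np.full((size, size), 1.0 / size ** 2)

def gaussian_kernel(size=5, sigma=1.5):
    """Normalized 2D Gaussian kernel with standard deviation sigma."""
    ax = np.arange(size) - size // 2
    g = np.exp(-ax ** 2 / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()

def median_filter(field, size=5):
    """Median over a size x size neighbourhood with edge-replicating padding."""
    r = size // 2
    padded = np.pad(field, r, mode="edge")
    windows = np.stack([padded[i:i + field.shape[0], j:j + field.shape[1]]
                        for i in range(size) for j in range(size)])
    return np.median(windows, axis=0)

# Example: smooth a single noisy snapshot with each filter.
rng = np.random.default_rng(0)
snapshot = rng.normal(0.0, 1.0, size=(32, 64))
boxed = filter2d(snapshot, box_kernel(5))
blurred = filter2d(snapshot, gaussian_kernel(5, 1.5))
medianed = median_filter(snapshot, 5)
```

All three filters average or rank values over a fixed local window regardless of the flow content, which is consistent with the observation later in this section that they can remove small flow structures together with the noise.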

IV.1 Evaluation 1: Noisy bluff body flow data with Gaussian noise at various noise levels

In this subsection, noisy bluff body flow data with Gaussian noise at 1/SNR = 0.1, 0.5, and 1.5 were used to train the DAE, where each noisy data set includes 1200 snapshots. In the testing process, noisy bluff body flow data at noise levels both equal to and different from those of the training data were used to check the denoising performance and generalization of the DAE. In addition to the test using the training data, noisy data at 1/SNR = 0.3 and 1 were used for the interpolation test, because these noise levels lie within the range of the training data. Noisy data at 1/SNR = 0.05, 0.075, 1.75, and 2 were used for the extrapolation test, because these noise levels lie outside that range.

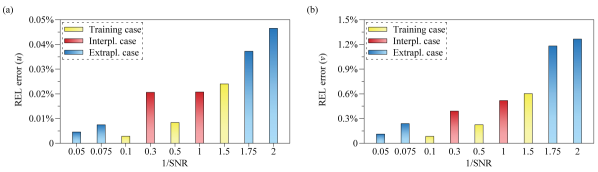

Figure 6 displays the denoised instantaneous contours of bluff body flow with Gaussian noise at 1/SNR = 0.1, 0.5, and 1.5 (training case). The DAE outperformed CF in denoising, especially in the high noise level case. The DAE removed the noise and accurately reconstructed the flow fields. Figure 7 displays the root-mean-square (RMS) profiles of the velocity fields sampled at various positions. The results obtained from DAE were consistent with those of DNS, whereas the results of the CF exhibit apparent deviations from DNS. Figure 8 displays the relative error of the training, interpolation, and extrapolation cases. The relative errors increased with the noise level. However, all the errors were minor, with maximum errors of 0.05% and 1.5% for the two velocity components. Although the interpolation case was never used in DAE training, its errors were similar to those of the training case at 1/SNR = 1.5. Notably, the extrapolation case was not only absent from DAE training but also featured noise levels beyond the range encountered in the training cases. Nevertheless, the extrapolation case could be effectively denoised, resulting in a low error level. Therefore, Figure 8 indicates that the proposed denoising method generalizes well and is suitable for denoising noisy data over a range of noise levels, even when the noise level is outside the range of the training case.
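The statistics used in this comparison can be computed along the following lines. The sketch assumes snapshots stored as an array of shape (time, y, x) and uses a relative L2-norm error; the exact error definition behind Figure 8 is not given in the text, so that choice and all function names are assumptions.

```python
import numpy as np

def rms_fluctuation(snapshots):
    """RMS of the temporal fluctuation at every grid point; input is (time, y, x)."""
    fluct = snapshots - snapshots.mean(axis=0)
    return np.sqrt(np.mean(fluct ** 2, axis=0))

def rms_profile(snapshots, x_index):
    """RMS profile along y at one streamwise grid index."""
    return rms_fluctuation(snapshots)[:, x_index]

def relative_l2_error(estimate, reference):
    """Relative L2-norm error (an assumed definition of the relative error)."""
    return np.linalg.norm(estimate - reference) / np.linalg.norm(reference)

# Example: a field oscillating in time with a spatially varying amplitude.
t = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
amplitude = np.linspace(0.5, 2.0, 16)[:, None] * np.ones((1, 32))
snapshots = 1.0 + np.sin(t)[:, None, None] * amplitude[None, :, :]
profile = rms_profile(snapshots, x_index=10)
```

In practice, `rms_profile` would be evaluated on the DNS, CF, and DAE fields at the same positions, and `relative_l2_error` would compare the denoised statistics against the DNS reference.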

IV.2 Evaluation 2: Noisy bluff body flow data with noises of three types

In this subsection, noisy bluff body flow data with three noise types, namely Gaussian noise, salt-and-pepper noise, and speckle noise, at the same noise level (1/SNR = 0.5) were used to train the DAE, where each noisy data set includes 1200 snapshots.

Figure 9 displays the denoised instantaneous contours of the bluff body flow contaminated with three types of noise at a noise level of 1/SNR = 0.5. The results denoised by DAE appear smoother than those processed by CF. Moreover, although the CF struggled with salt-and-pepper and speckle noise, DAE consistently removed all three noise types. Furthermore, as depicted in Figure 10, the flow statistics derived from the DL method are more accurate than those obtained through CF.

IV.3 Evaluation 3: Noisy channel flow data at three Reynolds numbers

In this subsection, DMDAE was used to denoise turbulent channel flows at $Re_\tau$ = 180, 395, and 550, which present a higher level of complexity than the laminar flow cases. The dataset used for training DMDAE comprises noisy channel flow data with Gaussian noise at 1/SNR = 0.5, with each case containing 1200 snapshots. Various outcomes, including instantaneous contours and turbulence statistics, are presented to demonstrate that the denoised data agree numerically and physically with the DNS data. The instantaneous contours (Figure 11) indicate that DMDAE effectively denoises and reconstructs the three channel flow cases. By contrast, the CF method could not accurately distinguish flow features from noise and erroneously eliminated flow features as noise. Figures 12, 13, and 14 show the velocity and vorticity RMS profiles for the three channel flow cases. The outcomes obtained from DMDAE were consistent with the DNS results, whereas the results from CF deviated from DNS. Figure 15 displays the probability density function (PDF) of the velocity components for channel flow. The velocity distribution curves of the DL results were consistent with the ground truth. Furthermore, the most pronounced deviation in the CF results occurred in the case of $Re_\tau$ = 550, where the flow was more chaotic and challenging to denoise. Figures 16, 17, and 18 show the spanwise energy spectra of the velocity components. The energy spectra revealed that conventional denoising filters perform poorly on turbulent flow because the eddies of the channel flow were mistakenly eliminated. The proposed DL method performs better in ensuring that the denoised results are consistent with the DNS data.
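The PDF and the spanwise energy spectrum used in this evaluation can be estimated as sketched below. The array layout (time, wall-normal, spanwise), the uniform spanwise spacing `dz`, and the normalization (the spectrum sums to the variance of the fluctuation) are assumptions made for illustration; the text does not specify how the spectra were computed.

```python
import numpy as np

def velocity_pdf(u, bins=50):
    """Histogram-based PDF estimate of a velocity component."""
    density, edges = np.histogram(u.ravel(), bins=bins, density=True)
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, density

def spanwise_energy_spectrum(u, dz):
    """One-sided spanwise spectrum; u has shape (time, y, z) with uniform dz.

    Returns the wavenumbers k_z and E(y, k_z), normalized so that the sum over
    k_z equals the variance of the fluctuation at each wall-normal position.
    """
    fluct = u - u.mean(axis=(0, 2), keepdims=True)
    nz = u.shape[-1]
    u_hat = np.fft.rfft(fluct, axis=-1) / nz
    energy = np.mean(np.abs(u_hat) ** 2, axis=0)
    # Fold negative wavenumbers onto positive ones; the zero and (for even nz)
    # Nyquist modes have no mirror partner and are left unchanged.
    if nz % 2 == 0:
        energy[:, 1:-1] *= 2.0
    else:
        energy[:, 1:] *= 2.0
    k_z = 2.0 * np.pi * np.fft.rfftfreq(nz, d=dz)
    return k_z, energy

# Example: a single spanwise mode with wavenumber 3 and amplitude 2.
nz = 64
dz = 2.0 * np.pi / nz
z = np.arange(nz) * dz
u = np.broadcast_to(2.0 * np.cos(3.0 * z), (5, 4, nz)).copy()
k_z, energy = spanwise_energy_spectrum(u, dz)
centers, density = velocity_pdf(u)
```

Applying the same two functions to the DNS, CF, and DMDAE fields would allow the curves to be overlaid; a filter that removes small eddies would show up as a loss of energy at high wavenumbers.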

IV.4 Evaluation 4: noisy PIV data

In this subsection, the denoising performance of the DAE was examined using noisy PIV data. Figure 19 displays the denoised instantaneous contours of the PIV data. Comparing the contours of the denoised and DNS data reveals that the proposed DL model can remove most of the noise from noisy PIV data. By contrast, the CF cannot denoise sufficiently, and some large-scale noise remains in the flow field. However, DAE cannot remove the shadow region near the bluff body. The shadow region exists in every snapshot of the PIV data; therefore, DAE regards the shadow as a part of the flow and retains it. Thus, DAE can only remove randomly appearing noise and cannot restore permanent corruption within the flow field. Figure 20 presents the RMS profiles of the velocity components, where a DNS case with the same $Re$ as the PIV data is also plotted. The figure reveals that the data are closer to the DNS results after denoising with the DL model.

V Conclusions

This study proposed two efficient flow field denoising DL models, namely DAE and DMDAE. The models were developed based on the self-learning principle. Therefore, the training process did not require clean data as labels. The bottleneck-shaped autoencoder with a small latent space could denoise noisy flow data. The convolutional and max-pooling layers in the encoder acted as a funnel that filtered out the noise. Because the noise was eliminated in the encoder, the decoder did not reconstruct the noise but instead output denoised flow fields. DNS bluff body flow data and DNS turbulent channel flow data at various Reynolds numbers with synthetic noise were used to evaluate the denoising capability of the proposed DL models. Three noise types, namely Gaussian, salt-and-pepper, and speckle noise, were used in this study. Furthermore, PIV bluff body data with natural noise were used to examine DAE denoising performance. For comparison, several classical noise filters were also used to denoise the flow fields. Four evaluations were conducted in this study. First, noisy bluff body flow data with Gaussian noise at 1/SNR = 0.1, 0.5, and 1.5 were used to train the model. In addition to regular testing, interpolation testing (noise level within the range of 0.1 to 1.5) and extrapolation testing (noise level outside the range of 0.1 to 1.5) were adopted. The results revealed that DAE achieved excellent denoising capability and generalization. All the noisy data cases, even those with noise levels outside the training data range, were well denoised. Second, noisy bluff body flow data with three noise types were simultaneously used to train DAE. DAE removed all three types of noise. Next, turbulent channel flow data with Gaussian noise at 1/SNR = 0.5 were used to evaluate the denoising performance of DMDAE for turbulent flow. The noisy channel flow data at three Reynolds numbers ($Re_\tau$ = 180, 395, and 550) were used to train the DMDAE. The instantaneous contours and statistical results indicated that DMDAE could effectively denoise noisy turbulent flow. Finally, the last evaluation showed that DAE could also denoise noisy PIV data with real noise. Furthermore, the proposed DL method outperformed the classical denoising filters. In particular, classical denoising filters performed worst in the turbulent channel flow cases, where the CF could not distinguish the small eddies and flow features from noise. Owing to their powerful denoising capability and generalization, the proposed DAE and DMDAE could be efficient and applicable tools for denoising in fluid dynamics.

Acknowledgements.

This work was supported by the ‘Human Resources Program in Energy Technology’ of the Korea Institute of Energy Technology Evaluation and Planning (KETEP), granted financial resource from the Ministry of Trade, Industry & Energy, Republic of Korea (no. 20214000000140). This work was supported by the Korean Cancer Research Institute grant (2024).

Data Availability

The data that support the findings of this study are available within this article.

References

- Moin and Mahesh [1998] P. Moin and K. Mahesh, “DIRECT NUMERICAL SIMULATION: A tool in turbulence research,” Annu. Rev. Fluid Mech. 30, 539–578 (1998).

- Kim, Moin, and Moser [1987] J. Kim, P. Moin, and R. Moser, “Turbulence statistics in fully developed channel flow at low Reynolds number,” J. Fluid Mech. 177, 133–166 (1987).

- Moser, Kim, and Mansour [1999] R. Moser, J. Kim, and N. Mansour, “Direct numerical simulation of turbulent channel flow up to $Re_\tau$ = 590,” Phys. Fluids 11, 943–945 (1999).

- Adrian [2005] R. Adrian, “Twenty years of particle image velocimetry,” Exp. Fluids 39, 159–169 (2005).

- Scarano [2013] F. Scarano, “Tomographic PIV: Principles and practice,” Meas. Sci. Technol. 24, 012001 (2013).

- Allison and Gillies [1997] S. Allison and G. Gillies, “Remote thermometry with thermographic phosphors: Instrumentation and applications.” Rev. Sci. Instrum. 68, 2615–2650 (1997).

- Scherl et al. [2020] I. Scherl, B. Strom, J. Shang, O. Williams, B. Polagye, and S. Brunton, “Robust principal component analysis for modal decomposition of corrupt fluid flows,” Phys. Rev. Fluids 5, 054401 (2020).

- Vétel, Garon, and Pelletier [2011] J. Vétel, A. Garon, and D. Pelletier, “Denoising methods for time-resolved PIV measurements,” Exp. Fluids 51, 893–916 (2011).

- Oxlade et al. [2012] A. Oxlade, P. Valente, B. Ganapathisubramani, and J. Morrison, “Denoising of time-resolved PIV for accurate measurement of turbulence spectra and reduced error in derivatives,” Exp. Fluids 53 (2012), https://doi.org/10.1007/s00348-012-1375-4.

- Lumley [1967] J. Lumley, “The structure of inhomogeneous turbulent flows.” In Atmospheric Turbulence and Radio Wave Propagation , pp. 166–177 (1967).

- Berkooz, Holmes, and Lumley [1993] G. Berkooz, P. Holmes, and J. Lumley, “The proper orthogonal decomposition in the analysis of turbulent flows.” Annu. Rev. Fluid Mech. 25, 539–575 (1993).

- Schmid [2010] P. Schmid, “Dynamic mode decomposition of numerical and experimental data.” J. Fluid Mech. 656, 5–28 (2010).

- He and Liu [2017] C. He and Y. Liu, “Proper orthogonal decomposition-based spatial refinement of tr-piv realizations using high-resolution non-tr-piv measurements.” Exp. Fluids 58, 86 (2017).

- Brunton, Noack, and Koumoutsakos [2020] S. L. Brunton, B. R. Noack, and P. Koumoutsakos, “Machine learning for fluid mechanics,” Annu. Rev. Fluid Mech. 52, 477–508 (2020).

- Pouyanfar et al. [2018] S. Pouyanfar, S. Sadiq, Y. Yan, H. Tian, Y. Tao, M. Reyes, M.-L. Shyu, S.-C. Chen, and S. Iyengar, “A survey on deep learning: Algorithms, techniques, and applications,” ACM Comput. Surv. 51, 92:1–92:36 (2018).

- Jiang et al. [2021] C. Jiang, R. Vinuesa, R. Chen, J. Mi, S. Laima, and H. Li, “An interpretable framework of data-driven turbulence modeling using deep neural networks,” Phys. Fluids 33, 055133 (2021).

- Duraisamy, Iaccarino, and Xiao [2019] K. Duraisamy, G. Iaccarino, and H. Xiao, “Turbulence modeling in the age of data,” Annu. Rev. Fluid Mech. 51, 357–377 (2019).

- Yousif, Yu, and Lim [2021] M. Z. Yousif, L. Yu, and H.-C. Lim, “High-fidelity reconstruction of turbulent flow from spatially limited data using enhanced super-resolution generative adversarial network,” Phys. Fluids 33, 125119 (2021).

- Yousif et al. [2023a] M. Yousif, L. Yu, S. Hoyas, R. Vinuesa, and H.-C. Lim, “A deep-learning approach for reconstructing 3D turbulent flows from 2D observation data,” Sci. Rep. 13, 2529 (2023a).

- Sha et al. [2023] Y. Sha, Y. Xu, Y. Wei, W. Xia, and C. Wang, “Reconstruction of incomplete flow fields based on unsupervised learning.” Ocean Eng. 288, 116036 (2023).

- Yu et al. [2022] L. Yu, M. Yousif, M. Zhang, S. Hoyas, R. Vinuesa, and H.-C. Lim, “Three-dimensional ESRGAN for super-resolution reconstruction of turbulent flows with tricubic interpolation-based transfer learning.” Phys. Fluids 34, 125126 (2022).

- Guastoni et al. [2021] L. Guastoni, A. Güemes, A. Ianiro, S. Discetti, P. Schlatter, H. Azizpour, and R. Vinuesa, “Convolutional-network models to predict wall-bounded turbulence from wall quantities.” J. Fluid Mech. 928, A27 (2021).

- Yu et al. [2023] L. Yu, M. Yousif, Y.-W. Lee, X. Zhu, M. Zhang, P. Kolesova, and H.-C. Lim, “Predicting unavailable parameters from existing velocity fields of turbulent flows using a GAN-based model.” arXiv.2304.07762 (2023), https://doi.org/10.48550/arXiv.2304.07762.

- Tian et al. [2020] C. Tian, L. Fei, W. Zheng, Y. Xu, W. Zuo, and C.-W. Lin, “Deep learning on image denoising: An overview.” Neural Netw. 131, 251–275 (2020).

- Goodfellow et al. [2020] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial networks.” Commun. ACM 63, 139–144 (2020).

- Kim et al. [2021] H. Kim, J. Kim, S. Won, and C. Lee, “Unsupervised deep learning for super-resolution reconstruction of turbulence.” J. Fluid Mech. 910, A29 (2021).

- Raissi, Perdikaris, and Karniadakis [2019] M. Raissi, P. Perdikaris, and G. Karniadakis, “Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations.” J. Comput. Phys. 378, 686–707 (2019).

- Fathi et al. [2020] M. Fathi, I. Perez-Raya, A. Baghaie, P. Berg, G. Janiga, A. Arzani, and R. D’Souza, “Super-resolution and denoising of 4D-flow MRI using physics-informed deep neural nets.” Comput. Methods Programs Biomed. 197, 105729 (2020).

- Gao, Sun, and Wang [2021] H. Gao, L. Sun, and J.-X. Wang, “Super-resolution and denoising of fluid flow using physics-informed convolutional neural networks without high-resolution labels.” Phys. Fluids 33, 073603 (2021).

- Li et al. [2023] Z. Li, H. Zheng, N. Kovachki, D. Jin, H. Chen, B. Liu, K. Azizzadenesheli, and A. Anandkumar, “Physics-informed neural operator for learning partial differential equations.” arXiv.2111.03794 (2023), https://doi.org/10.48550/arXiv.2111.03794.

- Faroughi et al. [2022] S. Faroughi, N. Pawar, C. Fernandes, M. Raissi, S. Das, N. Kalantari, and S. Mahjour, “Physics-guided, physics-informed, and physics-encoded neural networks in scientific computing.” arXiv.2211.07377 (2022), https://doi.org/10.48550/ARXIV.2211.07377.

- Li [2018] Y. Li, “Deep reinforcement learning: An overview,” arXiv.1701.07274 (2018), https://doi.org/10.48550/arXiv.1701.07274.

- Rabault et al. [2019] J. Rabault, M. Kuchta, A. Jensen, U. Réglade, and N. Cerardi, “Artificial neural networks trained through deep reinforcement learning discover control strategies for active flow control.” J. Fluid Mech. 865, 281–302 (2019).

- Yousif et al. [2023b] M. Yousif, P. Kolesova, Y. Yang, M. Zhang, L. Yu, J. Rabault, R. Vinuesa, and H.-C. Lim, “Optimizing flow control with deep reinforcement learning: Plasma actuator placement around a square cylinder.” Phys. Fluids 35, 125101 (2023b).

- Viquerat et al. [2021] J. Viquerat, J. Rabault, A. Kuhnle, H. Ghraieb, A. Larcher, and E. Hachem, “Direct shape optimization through deep reinforcement learning.” J. Comput. Phys. 428, 110080 (2021).

- Novati, de Laroussilhe, and Koumoutsakos [2021] G. Novati, H. de Laroussilhe, and P. Koumoutsakos, “Automating turbulence modelling by multi-agent reinforcement learning.” Nat. Mach. Intell. 3, 87–96 (2021).

- Yousif et al. [2023c] M. Yousif, M. Zhang, L. Yu, Y. Yang, H. Zhou, and H. Lim, “Physics-constrained deep reinforcement learning for flow field denoising.” J. Fluid Mech. 973, A12 (2023c).

- [38] .

- Fukami, Fukagata, and Taira [2019] K. Fukami, K. Fukagata, and K. Taira, “Super-resolution reconstruction of turbulent flows with machine learning,” J. Fluid Mech. 870, 106–120 (2019).

- Racca, Doan, and Magri [2023] A. Racca, N. Doan, and L. Magri, “Predicting turbulent dynamics with the convolutional autoencoder echo state network.” J. Fluid Mech. 975, A2 (2023).

- Huang et al. [2019] W. Huang, J. Cheng, Y. Yang, and G. Guo, “An improved deep convolutional neural network with multi-scale information for bearing fault diagnosis.” Neurocomputing 359, 77–92 (2019).

- Kingma and Ba [2017] D. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv.1412.6980 (2017), https://doi.org/10.48550/arXiv.1412.6980.