Self-supervised Image Clustering from Multiple Incomplete Views via Constrastive Complementary Generation

Abstract

Incomplete Multi-View Clustering aims to enhance clustering performance by using data from multiple modalities. Despite the fact that several approaches for studying this issue have been proposed, the following drawbacks still persist: 1) It’s difficult to learn latent representations that account for complementarity yet consistency without using label information; 2) and thus fails to take full advantage of the hidden information in incomplete data results in suboptimal clustering performance when complete data is scarce. In this paper, we propose Contrastive Incomplete Multi-View Image Clustering with Generative Adversarial Networks (CIMIC-GAN), which uses GAN to fill in incomplete data and uses double contrastive learning to learn consistency on complete and incomplete data. More specifically, considering diversity and complementary information among multiple modalities, we incorporate autoencoding representation of complete and incomplete data into double contrastive learning to achieve learning consistency. Integrating GANs into the autoencoding process can not only take full advantage of new features of incomplete data, but also better generalize the model in the presence of high data missing rates. Experiments conducted on four extensively-used datasets show that CIMIC-GAN outperforms state-of-the-art incomplete multi-View clustering methods.

1 INTRODUCTION

The majority of data in real life is in the form of numerous modalities or multiple views Baltrušaitis et al. [2018], e.g., the RGB images or depth maps taken by using different types of cameras or from different angles by the same camera. The information in multi-modal data cannot be effectively utilized by the single-modal or single-view method. If we can comprehensively observe different views of the object or use multiple modalities of the image object, we can better build a vision model of the object. Therefore, an effective multi-modal learning approach, particularly one that is unsupervised, is highly desired for real-world vision applications. Existing methods all explicitly require multi-view data to satisfy the assumption of cross-view consistency, also known as data completeness, and require that all views of each sample point exist. However, considering the scenarios in practice are always missing a lot during data collection or transmission, the data from complete views are very scarce and a crucial problem, incomplete multi-view problem (IMP), arises. The key to IMP is whether the missing information can be inferred from existing data, or whether the correct judgment can be made using the available data information.

Self-supervised algorithms of incomplete multi-view clustering (IMC) have been developed to address IMPs in clustering. Although significant progress has been made in many fields utilizing existing IMC methods, their performance is limited due to the following drawbacks. Obtaining high-level semantic features with traditional IMC methods is difficult, and degrades clustering performance on complicated real-world data. Global latent representations with high-level semantic information should not only promote consistency among views while weakening inconsistency but also account for complementary information. However, some self-supervised IMC methods Liu et al. [2013]; Zhao et al. [2017]; Yang et al. [2020a]; Xu et al. [2019]; Hu and Chen [2018]; Wang et al. [2015]; Li et al. [2014]; Zhang et al. [2018]; Wang et al. [2019] either extract only the common semantics of different views to maximize inter-view consistency, or only mine the information that complements each other between views by enhancing subspace learning and fusion, lacking a comprehensive consideration. Even if some methods Wen et al. [2019]; Liu et al. [2020]; Andrew et al. [2013] take into account consistency and complementary, the clustering performance is still poor due to failure to take full advantage of the hidden information in incomplete data. Many methods Liu et al. [2013]; Zhao et al. [2017]; Yang et al. [2020a]; Xu et al. [2019]; Lin et al. [2021]; Wen et al. [2019]; Andrew et al. [2013]; Wang et al. [2019] can only utilize aligned complete data for representation learning based on the consistency assumption of the data. However, the data from complete views are very scarce and will be not enough to learn consistent information. The information hidden in the incomplete data appears more important for self-supervised IMC.

Actually, due to the variety and diversity of incomplete data from multiple views, it is quite challenging to utilize incomplete data to its full potential which makes the model more robust in severe scenarios with high missing rates. To make up for the lack of complete data pairs and comprehensively utilize the hidden information in incomplete data, contrastive learning has been applied in IMC Lin et al. [2021]; Chen et al. [2020]; Tian et al. [2020]; Xu et al. [2021]; Yuan et al. [2021]. However, while learning consistency from different views, contrastive learning in the feature space will result in the loss of complementary information. We map datasets into multiple-view joint subspaces constructed using auto-encoding representation learning, and then leverage the Generative Adversarial Network (GAN) Goodfellow et al. [2014] to fill in the incomplete data to the auto-encoding representation of contrastive learning. In this way, the data diversity of each view is completely maintained in our framework by reconstructing its high-dimensional latent representation back to the view, which enables us to contain more complimentary information and use its latent representation to construct a contrastive learning module. The above design allows the representations learned by our framework to be complementary yet consistent. Ultimately, a new self-supervised IMC framework, named Contrastive Incomplete Multi-View Image Clustering with Generative Adversarial Network (CIMIC-GAN), is proposed. It integrates several view-specific autoencoders, mutually symmetric prediction models, and GAN into a double contrastive complementary learning for self-supervised IMC.

As indicated in Fig. 1, CIMIC-GAN consists of three modules: view reconstruction module with GAN, contrastive consistency module, and contrastive prediction module. The view reconstruction module with GAN projects the view data into a specific view latent space, which is also the premise of consistent learning. At the same time, GAN can also complete the missing views to form a representation. The contrastive consistency module and the contrastive prediction module constitute a double contrastive learning framework for consistency learning, which maximizes the mutual information while making the latent representations predict each other. It is worth mentioning that, although the essence of GAN and the contrastive prediction module is distinct, they are both complementary to missing views. The contrastive prediction module can learn mutual representations between complete views. On the other hand, GAN can generate new latent distributions from incomplete view data, allowing the contrastive prediction module to be more comprehensively trained. The primary contributions of our work are illustrated as follows:

-

•

We address the reason why it is difficult to learn more advanced representation in IMC of images, and find it is primarily due to very few complete data pairs in practice while neglecting hidden information of incomplete data.

-

•

We incorporate GAN into feature representation to comprehensively utilize incomplete view data, which makes up for the difficulty of consistent learning when complete data is insufficient. Our proposed method can perform well even with abnormally high missing rates.

-

•

The proposed auto-encoding representation maintains more complementary information in the multiple-view feature space, and benefits the overall double contrastive learning process. Ultimately, the proposed self-supervised clustering model alleviates representation inconsistency of multiple views and thus enhances consistency of self-supervised IMC.

-

•

Experiments on four types of datasets show that CIMIC-GAN achieves state-of-the-art clustering performance and strong robustness.

To our knowledge, CIMIC-GAN is the first clustering solution that can mine vision features both in complete views and incomplete views. Without loss of generality, we implement CIMIC-GAN in a two-view clustering scenario, but there remains space for expansion in the case of more views. This work allows IMC models to be embedded into the physical world to learn more consistent representation in broad scenarios in a self-supervised way.

2 RELATED WORK

In the section, we briefly review three lines of related work, contrastive learning ,incomplete multi-view clustering, and generative adversarial networks.

2.1 Contrastive Learning

Contrastive learning Chen et al. [2020]; Deng et al. [2018]; Tian et al. [2020]; Tsai et al. [2020] is an essential method for unsupervised learning Bengio et al. [2013]. Its major goal is to maximize feature space similarity between positive samples while reducing the distance between negative samples. Most contrastive learning methods Yuan et al. [2021]; He et al. [2020] are built to handle various data augmentations or modal augmentations. Especially in the field of computer vision, contrastive learning methods have produced excellent results Van Gansbeke et al. [2020]. As an example, methods such as SimClR or MoCo Xu et al. [2021]; He et al. [2020]; Girshick and He minimize the InfoNCE loss function van den Oord et al. to maximize the upper bound of mutual information. Because dealing with negative samples is inconvenient, later contrastive learning algorithms Grill et al. [2020]; Chen and He [2021] have successfully replaced the contrast task with the prediction task without the need for negative samples. More tools can be used in contrastive learning. As the first comparative hashing method, CMH Hu et al. [2022] solves the problem of the negative impact of false negative samples.

Almost all existing contrastive learning methods Xu et al. [2021]; He et al. [2020]; Girshick and He ; Grill et al. [2020]; Chen and He [2021] are designed to handle single-view data, exhaustively exploring various data augmentations to build different views/augments. To the best of our knowledge, this is the first time double contrastive learning has been used on the IMC problem to handle the challenge of mutual promotion of consistency learning and data recovery from a different perspective. The introduced double contrastive learning enhances consistency in learning while alleviating inconsistency. We use latent representations to learn the consistency between distinct views and a BYOL Grill et al. [2020] based prediction network to attenuate the inconsistency between different views. These two are integrated and mutually promote each other, which greatly improves the clustering performance. As far as we know, this is the first work that uses the BYOL structure for data recovery.

2.2 Incomplete Multi-view Clustering (IMC)

According to the utilization method of multimodal data, traditional IMC methods can be roughly divided into four categories: IMC based on kernel learning Liu et al. [2020]; Rai et al. [2010], IMC based on matrix factorization, IMC based on graph, and IMC based on spectral clustering. Trivedi et al. proposed a kernel-based method Rai et al. [2010] that recovers the kernel matrix of incomplete views from the kernel matrix of complete views but requires one of the views to be all complete. To address this limitation, matrix factorization-based IMC methods Liu et al. [2013]; Zhao et al. [2017]; Yang et al. [2020a]; Hu and Chen [2018]; Li et al. [2014] use lower ranks to project parts of the data into a common subspace, formally similar to the K-means relaxation method MacQueen et al. [1967]. In the follow-up work, Linlin et al. Zong et al. [2020] introduced an indicator matrix to map non-existing instances into 0-element vectors, and Zhiqi et al. Yu et al. [2022] introduced a fusion matrix while learning the self-representation in the subspace, which further developed the IMC method based on matrix decomposition. However, feature-based matrix factorization methods generally cannot explore nonlinear data structures, while graph-based methods Peng et al. [2019]; Wen et al. [2021] can effectively deal with nonlinear data structures. For example, PSIMVC-PG Li et al. [2022] proposes a parameter-free clustering based on a prototype graph framework to handle non-linear data structures with lower complexity. In addition, the spectral clustering-based method Wang et al. [2019] can also deal with the nonlinearity in the data by constructing a Laplacian graph. For example, FMDC Qiang et al. [2021] uses a very short time to construct an anchor graph on different views, which significantly reduces the time cost. IMSC-AGLWen et al. [2018] used graph learning and spectral clustering to learn incomplete public representations for the first time, and achieved better results.

However, traditional IMC methods have limitations in terms of representation ability and high complexity. In recent years, the self-supervised IMC method Jiang et al. [2019]; Wang et al. [2018]; Xu et al. [2019]; Lin et al. [2021]; Zhang et al. [2019]; Wang et al. [2015]; Andrew et al. [2013]; Wang et al. [2021] based on deep learning has attracted attention, and this method combined with deep neural networks shows strong generalization ability. The differences between this work and existing studies are as follows. CIMIC-GAN belongs to a deep IMC framework, which has better scalability than traditional IMC methods. Most existing IMC methods Liu et al. [2013]; Zhao et al. [2017]; Yang et al. [2020a]; Xu et al. [2019]; Lin et al. [2021]; Wen et al. [2019]; Andrew et al. [2013]; Wang et al. [2019] can only infer and generate missing data with consistency learned from complete data, but can’t properly use incomplete data, wasting the hidden information contained in the training set’s incomplete data. In contrast, we leverage GAN to fill in the missing views and train the prediction network with the new data generated. Therefore, we target the problem that incomplete data in IMC cannot be used or trained. It can even outperform other methods in extreme circumstances where the missing rate is exceedingly high.

2.3 Generative Adversarial Networks (GAN)

Recently, there have been several approaches utilizing GANs Goodfellow et al. [2014] for multi-view learning, such as CycleGAN Zhu et al. [2017]; Yang et al. [2020b] and StarGAN Choi et al. [2018]. Wang et al. proposed a generative adversarial network (GAN) based deep IMC method Wang et al. [2018]; Goodfellow et al. [2014]. It uses one view to generate missing data for another view of recurrent GAN Zhu et al. [2017]. However, the accompanying challenge of cross-modal generation is how to narrow the differences between different modalities. To overcome this problem, CAD Hu et al. [2021] fills the heterogeneity gap between modalities by matching the synthesized data in the feature space with the help of two groups of specific GANs and a discriminative mechanism across modalities. However, GANs mostly adopt shallow models, which are difficult to capture deep semantic understanding. AIMC Xu et al. [2019] uses deeper networks to perform missing data inference while finding the common latent space of multiple view data.

Different from the above works, these methods mainly focus on cross-domain data generation. In our framework, the adversarial process only acts on a single view, which preserves the private information of the view to the greatest extent, thus effectively realizing incomplete data recovery in independent feature space. Moreover, the adversarial process is integrated with the target reconstruction, so that the pseudo-data distribution contains more complementary information, which is beneficial for the network to capture a better clustering structure. Furthermore, Consistent GAN Wang et al. [2018] for the two-view IMC problem can only handle dual-view data, it uses one view to generate missing data from the other and then performs clustering on the generated full data. While CIMIC-GAN can be extended and applied to the IMC problem with an arbitrary number of views.

3 The Method

In order to better solve the IMC problem by learning consistency of incomplete multiple views, we propose Contrastive Incomplete Multi-View Image Clustering with Generative Adversarial Network (CIMIC-GAN).

3.1 Notations and Problem Formulation

Given an instance, it usually contains multiple views or multiple modalities, such as RGB images or depth maps, representing different perspectives of the same object. Regarding this phenomenon, the incomplete multi-view clustering problem tries to solve the premise that there are missing views in an instance and can have good clustering performance. One instance generally has multiple views, which may or may not be complete. As shown in Fig. 2, assuming that a dataset has two views, namely =2, there are instances in total, such a dataset can be divided into two parts: complete data and incomplete data. In the set of complete instances, we use to denote the feature vector for the -th view of the -th instance. In the set of incomplete instances, we use to denote the feature vector for the -th view of the -th instance. , where is the dimensionality of the -th view. Our goal is to group all the instances into clusters.

Without loss of generality, we make , i.e., two views are involved. Define a two-view dataset as a collection of of instances, where and represent instances that exist and are aligned in two views, and where and represent instances that exist only in the first and second views, respectively.

3.2 Model Overview

Multi-View Clustering is an unsupervised learning problem. The key to solve this problem is to learn strong representations as well as the consistency of them. Considering that contrastive learning has a strong representation ability in the field of unsupervised learning, we incorporate a double contrastive learning module (Network1) into our model, CIMIC-GAN, to learn consistency of the representations of the views. On the other hand, considering the missing data of instances, we fill the incomplete data in a generative adversarial way to obtain more complete auto-encoding representations of views (Network2). The training process of CIMIC-GAN is divided into three steps:

Step 1: Train Network1 with . The aligned complete view data and is respectively used in the encoder and of Network1 to obtain the latent representations. and is the latent representations of the first view and the second view, respectively. Based on and , there are three objective functions that need to be optimized in this step: i) The loss obtained by reconstructing different views through the autoencoder is denoted by . ii) By using contrastive learning, we maximize mutual information between and . The corresponding loss is denoted by . iii) Through contrastive learning without negative samples, and are predicted by two symmetrical networks using predictors to alleviate the inconsistency between distinct viewpoints, and the loss function is denoted by . Ultimately, we propose the overall objective function of network 1:

| (1) |

The parameters and are the balanced factors on and , respectively. We set these two parameters as 0.1, which will be proven in the following parameter analysis experiments.

Step 2: Train Network2 using . Feed the incomplete view data and into the encoder of Network2. Note that, the encoder , and the decoder , already have converged in step 1. The decoder and will be equivalent to being well initialized as generators in the GAN structure. Each decoder incorporates the corresponding discriminator to form a typical GAN network. In detail, is first generated according to . Then, the discriminator will judge whether generated by the decoder is true. Till the discriminator cannot provide that correctly, the generator converges. The purpose of this step is to train a powerful generator to generate the missing data of imcomplete view as well as expand the training dataset. As shown in Figure 2, according to the incomplete view , the corresponding missing data is generated through Network2 and fills it in the corresponding modality in pair, .

Step 3: Train Network1 again with to achieve a consistent representation. Feed the pseudo-complete data of different views to Network1, and perform consistency learning as step 1. The optimization objective is the same as the first step, and the balance factor has not changed, as follow Eq.(1). The role of this step is to get sufficient training data to make the Network1 model more generalized and robust. After Network1 has converged, we feed to Network1. This will give us latent representations of all views, including views with missing data. Finally, a consistent representation is obtained by concatenating representations of different views (see 2), which is further input to a specific cluster (Kmeans or other adaptive deep clustering methods with enhanced performance, such as GATCluster Niu et al. [2020], SpiceNiu et al. [2021], etc.) to achieve qualified multi-view image clustering.

3.3 Loss Function

The loss functions used in the proposed model is provided in this section.

3.3.1 Reconstruction Loss

Autoencoders Hinton and Salakhutdinov [2006] can compute nonlinear mappings by learning the latent feature space between input and output. As shown in Fig. 3, we designed a deep autoencoder to project a view into its latent space while learning latent representation. The structure of this autoencoder ensures a diversity of viewpoints while mining complementary information from more clustering patterns, which is the foundation to improve clustering performance with multimodal data. Additionally, avoiding model collapse is advantageous.

is the result of being reconstructed by the autoencoder. We use distance as our view reconstruction loss function , is defined as

| (2) |

where denotes the -th sample of . denotes the decoder for the -th view. The latent representation of -th sample in -th view is given by

| (3) |

where denotes the representations of and denotes the encoder for the -th view,.

3.3.2 Adversarial Loss

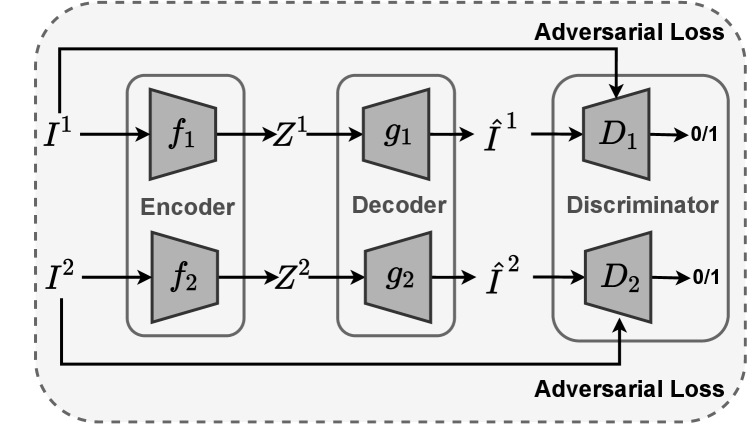

As shown in Fig. 4, the decoder and the discriminator constitute two sets of GANs. essentially matches the difference between the latent representation distribution and the data distribution, with the goal of aligning the different representation distributions. In step2 (see Section 3.2), the corresponding data in the missing view is generated according to the incomplete data, and combined into a new data set .

Adversarial loss is based on a paper Goodfellow et al. [2014]. The traditional GAN will train two models of generator and discriminator , and the objective function is the value function defined as:

| (4) |

is a two-player minimax game with two expectations added. Leveraging the decoder as a generator, we implement a generative adversarial framework for multi-view reconstruction, and thus the corresponding loss function is designed as:

| (5) | ||||

we reconstruct the adversarial loss of incomplete data according to a view-specific latent representation , and the representation of the missing view is obtained.

The missing data representation generated by incorporates to form the representation of a complete view, , which is used to retrain Network1 in step3 (see Section 3.2). This achieves more effective use of incomplete data , and makes Network1 more fully trained.

3.3.3 Contrastive Prediction Loss

We propose a mutual prediction methods based on contrastive learning without negative samples to address the problem of missing data recovery. In contrast to the traditional contrastive learning Grill et al. [2020], every decoder and Multi-Layer Perceptron (MLP) in the contrastive prediction module take a softmax function to achieve explicit prediction. Additionally, we design two networks with the same structure, where each predictor can predict the latent representation of the corresponding modality.

As shown in Fig. 5, the online network consists of a decoder , a projector , and another predictor . On the other hand, the target network includes a decoder and a decoder . With using a mean squared error loss function, the outputs of the online network and the target network approach consistent. This process can be formulated by the following loss function:

| (6) |

where the output obtained by the latent representation through the online network is defined by

| (7) |

After that, we send to the target network, and send to the online network. This process is defined as

| (8) |

The loss function of the whole can be defined as

| (9) |

because the contrastive prediction module is two sets of left and right symmetrical modules, so it is still necessary to add the loss of another set of prediction network.

Decoder and decoder use the same network architecture but different parameters, decoder is updated with the gradient update, and decoder is updated in the form of moving average like He et al. [2020], that is, a momentum encoder is used. Decoder provides the regression target while training decoder , and its parameters are an exponential moving average of decoder ’s parameters . To update these parameters, a target momentum is involved as the following,

| (10) |

If we choose a large momentum during training, the decoder updates very slowly and does not change rapidly with decoder , thus ensuring that decoder is always a similar encoder at each step.

3.3.4 Contrastive Consistency Loss

Inter-view consistency learning and missing view completion are mutually reinforcing processes Lin et al. [2021]. A common strategy to maximize the consistency between two views is to maximize the correlation, which can also be understood as extracting shared components. Here, maximizing the consistency between and can be formulated as maximizing the mutual information of and , as shown in Fig. 6.

| (11) |

where is information entropy, is mutual information, and a regularization term Lin et al. [2021] with parameter is involved to improve the generalization of the module. In order to calculate the mutual information , the output of the softmax function in the decoder involved in Section 3.3.3 is used as an over-cluster class probability distribution of Ji et al. [2019], and the joint probability distribution of and is obtained. In this way, is calculated according the following formula Lin et al. [2021],

| (12) |

where is the balance term defined in Formula 11.

4 EXPERIMENTS

In this section, we evaluate the proposed CIMIC-GAN method on four widely-used multi-view datasets and compare it with several state-of-the-art clustering methods.

4.1 Datasets and Experimental Settings

We evaluate CIMIC-GAN on four clustering problem datasets. 1) Caltech101-20 Li et al. [2015] consists of 2,386 images of 20 subjects, and we use two views of HOG and GIST features, with 1984 and 512 as the feature dimensions, respectively. 2) Scene-15 Fei-Fei and Perona [2005] consists of 4,485 images distributed over 15 Scene categories, and we use two views of PHOG and GIST features, 20D and 59D feature vectors, respectively. 3) LandUse-21 Yang and Newsam [2010] consists of 2100 satellite images from 21 categories , and we use two views of PHOG and LBP features, 59D and 40D feature vectors, respectively. 4) A large dataset, Noisy MNIST Wang et al. [2015], consists of 70,000 instances of 10 classes. We randomly select 15,000 original instances as view 1 and 15,000 Gaussian noise-added instances as view 2. We summarize the detailed statistics of the datasets in Table 1.

| Datasets | Size | # of categories | Dimension |

| Caltech101-20 | 2386 | 20 | 1984/512 |

| Scene-15 | 4485 | 15 | 20/59 |

| LandUse-21 | 2100 | 21 | 59/40 |

| 20 | 70,000 | 10 | 784/784 |

We conduct all the experiments on the platform of ubuntu 16.04 with Tesla P100 Graphics Processing Units (GPUs) and 32G memory size. Our model, method and baseline are built on the pytorch 1.7.0 framework. Based on extensive ablation studies, the bacth size is set to 256 and the epochs of the three steps of training are 300, 250, and 200, respectively. The missing rate is fixed to 0.5, the momentum is fixed to 0.6, the entropy parameter is fixed to 9 and trade-off hyper-parameters and are fixed to 0.1. We utilize Adam optimizer Kingma and Ba [2015] with default parameters and a learning rate of 0.0001. We set the dimension of the autoencoder to d-1024-1024-1024-128, where d is the dimension of the input data. We simply adopt a dense (i.e., fully-connected) network where each layer is followed by a batch normalization layer and a ReLU layer. The structures of the autoencoder for different modalities are the same. MLPs are used to implement the contrastive prediction module, and all MLPs use batch normalization after each linear layer. Each MLP has two linear layers, with the ReLU activation function added in the middle of each of them.

Compared methods and evaluate metrics: We compare CIMIC-GAN with several baselines, including Autoencoder in Autoencoder Networks (AE2Nets) Zhang et al. [2019], Unified Embedding Alignment Framework (UEAF) Wen et al. [2019], Doubly Aligned Incomplete Multi-view Clustering (DAIMC) Hu and Chen [2018], Efficient and Effective Regularized Incomplete Multi-view Clustering(EERIMVC) Liu et al. [2020], Deep Canonically Correlated Autoencoders (DCCAE) Wang et al. [2015], Partial Multi-View Clustering (PVC) Li et al. [2014], Binary Multi-view Clustering (BMVC) Zhang et al. [2018], Deep Canonically Correlated Analysis (DCCA) Andrew et al. [2013], Perturbation oriented Incomplete Multi-view Clustering (PIC) Wang et al. [2019], and COMPLETER Lin et al. [2021]. The clustering performance is evaluated with three metrics: Accuracy (ACC), Normalized Mutual Information (NMI) and Adjusted Rand Index (ARI). More details on these indicators can be found in Amigó et al. [2009]. A higher value of these evaluation indicators can reflect an advanced clustering performance.

Missing rate: To uniformly evaluate the performance of CIMIC-GAN on incomplete multi-view data, we randomly select instances as incomplete data and randomly remove some views from each of them. The Missing Rate(MR) is defined as . The larger the missing rate, the more incomplete data.

4.2 Performance with Different Missing Rates

We design experiments with different methods at different missing rates to further demonstrate the efficiency of our method at high missing rates. On the Caltech101-20 dataset, we divide the missing rate into 10 cases ranging from 0 to 0.9 with a 0.1 interval. We adjust the batch size to 128 when the missing rate is 0.9. When the missing rate is 0, since there is no incomplete data, we will directly skip the first and second steps, and we use the complete data to train CIMIC-GAN in an end-to-end manner.

Both DCCA and DCCAE learn nonlinear analysis between paired views through neural networks. BMVC needs paired views to encode and embed in binary space, and AE2Nets needs to first convert different views into a unified comprehensive representation. However, when the missing rate is 1, it means that there are no paired samples in the original data distribution, which makes the above four methods not work. COMPLETER relies heavily on the mutual information of complete sample pairs, so the phenomenon of model collapse occurs, and the accuracy rate is only 26.33% when all views are missing. The accuracies of UEAF, DAIMC, EEIMVC, PVC, PIC, and CIMIC-GAN are 32.15%, 26.77%, 35.00%, 33.24%, 37.12%, and 34.30%, respectively, in terms of the different number of missing views. The two traditional methods, PIC and EERIMVC, use the similarity of other views to fill in the missing similar items. Although the filling method is simple, it is effective when the missing rate is 1. However, our method is a deep method and infers missing data rather than missing similarities, so it enjoys higher interpretability. Even without paired samples, our method still approximates the original data distribution and achieves near-optimal performance.

As shown in Fig. 7, we observe the accuracy of different methods under different missing rates and can get the following results: 1. CIMIC-GAN outperforms almost all tested baselines in all missing rates, which validates the effectiveness of the proposed method. 2. Due to the pseudo-data distribution generated by GAN fitting more missing samples, CIMIC-GAN outperforms the suboptimal method by 3.68% with a missing rate of 0.9. This experiment also demonstrates that the model has strong robustness and generalization ability.

| Missing Rate | Method | Caltech101-20 | Scene-15 | LandUse-21 | Noisy MNIST | ||||||||

| ACC | NMI | ARI | ACC | NMI | ARI | ACC | NMI | ARI | ACC | NMI | ARI | ||

| 0.5 | AE2Nets | 33.61 | 49.12 | 24.59 | 28.01 | 31.12 | 13.85 | 19.03 | 23.01 | 5.65 | 37.98 | 33.55 | 19.08 |

| UEAF | 47.26 | 56.69 | 33.61 | 23.79 | 25.33 | 8.98 | 15.36 | 22.63 | 3.46 | 34.48 | 33.13 | 23.78 | |

| DAIMC | 44.50 | 59.44 | 32.67 | 23.58 | 21.81 | 9.33 | 19.02 | 19.38 | 5.62 | 34.23 | 27.13 | 16.33 | |

| EERIMVC | 40.59 | 51.25 | 27.97 | 33.04 | 31.81 | 15.92 | 22.06 | 24.96 | 9.01 | 54.99 | 45.16 | 36.31 | |

| DCCAE | 39.99 | 52.70 | 29.98 | 31.79 | 34.35 | 15.67 | 14.86 | 20.89 | 3.53 | 61.23 | 59.10 | 33.25 | |

| PVC | 41.34 | 56.46 | 30.75 | 25.42 | 25.20 | 11.28 | 21.26 | 22.93 | 8.07 | 36.21 | 27.92 | 17.55 | |

| BMVC | 32.06 | 40.58 | 12.16 | 30.82 | 30.19 | 10.80 | 18.71 | 18.68 | 3.66 | 25.12 | 15.79 | 7.09 | |

| DCCA | 38.57 | 52.42 | 29.71 | 31.73 | 33.11 | 14.85 | 13.93 | 19.91 | 3.32 | 61.99 | 60.56 | 38.09 | |

| PIC | 57.49 | 64.20 | 45.18 | 38.69 | 37.97 | 20.06 | 23.52 | 25.44 | 9.38 | - | - | - | |

| COMPLETER | 68.47 | 67.54 | 74.36 | 38.70 | 48.26 | 23.48 | 22.15 | 27.01 | 10.36 | 80.02 | 75.33 | 70.70 | |

| CIMIC-GAN | 69.48 | 68.25 | 75.12 | 39.09 | 46.12 | 23.55 | 23.76 | 28.03 | 11.10 | 81.97 | 77.22 | 72.56 | |

4.3 Performance with Different Momentum

In the contrastive prediction module, if the parameters of the online network updated by the current step are directly copied to the target network, the target network’s training state will eventually be completely disordered, causing the incapacity to train to oscillate. Therefore, the coordination of states by introducing momentum is a natural solution. Consistency and complementarity represent the same semantics and different semantics of different views, respectively. If the network is updated faster, the learned representation will contain more consistency, otherwise, it can retain more complementary information. So the introduction of momentum can also help us find the most suitable balance point. We designed a set of experiments on the Caltech101-20 dataset with a missing rate (MR) of 0.5. As shown in Fig. 8, the clustering effect is observed by adjusting different values of the momentum .

From the results obtained in Fig. 8, it can be seen that: 1) When the value of is small, the parameters of the target network change too quickly, ignoring the complementarity between hidden representations. 2) When is large (), the gradient update is slow the consistent learning is hindered, and the accuracy will experience a significant drop again. Therefore, too fast or too slow parameter changes are not conducive to consistent learning, because the consistency and complementarity of different views are not balanced. So choosing an appropriate momentum as a balance point can promote the consistent learning of latent representations from different views. In the update of the target network, 60% of the parameters keep the same value, and the clustering performance is optimal at this time.

4.4 Comparisons with state of the arts

For methods that can only handle complete view data, we fill in the missing data with the mean of the same view. For a fair comparison, we use the default network structure and parameters for all methods. We test all methods with an MR of equal to 0.5. Experimental results on the above four databases are listed in Table 2. Compared with the ten clustering methods mentioned above, our CIMIC-GAN achieves state-of-the-art clustering performance. We can observe the following from the results: 1) On all four datasets, CIMIC-GAN outperforms other methods. 2) In most cases, DAIMC, PVC, EERIMVC, and PIC obtain worse IMC performance than the other methods. These methods all consider consistency learning and data recovery as two separate parts. The contrastive consistency module in CIMIC-GAN strengthens consistency learning, and the contrastive prediction module alleviates inconsistency. There is a trade-off between these two parts. We can conclude that using double contrastive learning to simultaneously mine complementary and consistent information from multiple perspectives can improve clustering performance. 3) Methods such as DCCAE, PVC, BMVC, and PIC only learn consistency, while DAIMC learns representations that only consider complementary information. Thus, CIMIC-GAN far outperforms the above methods. This shows that effectively utilizing the complementary and consistent information of images can improve clustering performance. 4) CIMIC-GAN outperforms methods with incomplete data that do not participate in training, such as UEAF, DCCA, PIC, COMPLETER, etc. This shows that it is necessary for us to use GAN to mine the hidden information in incomplete data.

4.5 Performance on different number of views

To demonstrate that our method can in principle be extended to more than two views, we implement a set of comparative experiments on different number of views. A dataset Fashion Xiao et al. [2017] with three views is introduced here to support this set of experiments. We leverage this dataset to construct a dataset only with two views, Fashion-2V, whereas we construct Fashion-3V with three views that is missing two views, and Fashion-3V* with three views that is missing one view. See Table 3 for more detailed settings of these three datasets.

| Datasets | Size | Views | # of categories | Dimension |

| Fashion-2V | 10,000 | 2 | 10 | 784/784 |

| Fashion-3V | 10,000 | 3 | 10 | 784/784/784 |

| Fashion-3V* | 10,000 | 3 | 10 | 784/784/784 |

To handle instances with more than two views, CIMIC-GAN needs some extensions. Specifically, we involve another set of network2 to learn the latent representation for the third view. Similarly, we need to introduce two additional sets of prediction networks to realize the mutual prediction of and , and and . Correspondingly, we maximize the mutual information between the latent representations of any two of these three views (Formula 12). Fig. 11 shows the results of training on Fashion-2V, Fashion-3V and Fashion-3V* for 200 epochs. As the number of instance views changed from two to three, more features are involved and each evaluation metric improved significantly. Among them, the performance of Fashion-3V* is slightly higher than Fashion-3V in an all-around way. This is because our method alleviates the negative impact of missing views, so their two curves are very close, which further proves that CIMIC-GAN has strong robustness and adaptability.

4.6 Parameter Analysis and Ablation Studies

In this section, we analyze CIMIC-GAN on the Caltech101-20 dataset from two perspectives, i.e., parameter analysis and ablation studies.

Our method has two user-specified parameters, i.e., the reconstruction loss trade-off parameter , and the contrastive consistency loss trade-off parameter . To evaluate the impact of and , we change their value in the range of 0.001, 0.01, 0.1, 1, 10 when training the model on dataset Caltech101-20. As shown in Fig. 9, when and are set to 0.1 and 0.1, respectively, the experimental results are the best. Moreover, CIMIC-GAN was run with the same hyper-parameter setting on the four datasets and achieved good performance (see Section 4.4), which means CIMIC-GAN is not very sensitive concerning the hyperparameters.

We conduct ablation studies with four variants of CIMIC-GAN to show the effectiveness of its different modules. (1) We only use the reconstruction module multi-modal clustering. (2) We only use the contrastive prediction module multi-modal clustering. (3) We only use the contrastive consistency module multi-modal clustering. (4-6) We combine the above three modules in pairs. (7) We use the complete Network1 with three modules to learn consistent cluster predictions of multiple modalities. (8) Compared with setting “(7)”, we additionally added GAN in Network2.

| moudules of CIMIC-GAN | ACC | NMI | ARI |

| (1) | 32.06 | 30.58 | 14.16 |

| (2) | 34.94 | 33.22 | 25.02 |

| (3) | 46.59 | 57.36 | 41.56 |

| (4) | 53.36 | 43.35 | 27.96 |

| (5) | 55.45 | 62.67 | 54.59 |

| (6) | 64.58 | 62.10 | 70.87 |

| (7) | 66.32 | 66.30 | 69.69 |

| (8) | 69.48 | 68.25 | 75.12 |

Table 4 shows the loss components and experimental results corresponding to the four variants. In row (2), it can be seen that optimization alone with contrastive prediction loss may lead to trivial solutions or model collapse because is not optimized and thus the low-dimensional latent representation loses more complementary information. This also demonstrates that the key task of IMC is to mine complementary information to achieve consistent predictions across multiple modalities. Comparing row (6) with row (4) and (5), dual contrastive learning is more effective than a single consistency learning module. Comparing row (7) with row (1), it can be inferred that double contrast learning has a great improvement in the clustering performance, and shows that the improvement in consistency by the double contrastive learning module is huge. Comparing row (7) with row (8), the introduction of GAN in the encoding process makes the hidden information of incomplete data more fully utilized. Significantly, one can find that each module of CIMIC-GAN has improved the clustering performance, which further proves the effectiveness of our motivations and the proposed framework.

4.7 Visualization

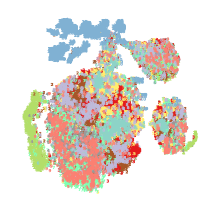

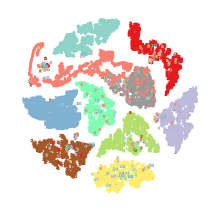

As shown in Fig. 10, we show t-sne Van der Maaten and Hinton [2008] visualizations of the obtained representations on four datasets. In the experiments, the missing rate is fixed to 0.5. The common representation itself is a fusion representation, which fuses a complete view representation and a missing view representation, where the missing view representation is filled by incomplete data through a contrastive prediction module. Therefore, Fig. 10 illustrates the learning process of Network1. With the increase of epochs, more advanced semantic information is achieved, the common representations learned by Network1 become more compact and independent, and the density of clusters is higher. The advanced semantic information enables better consistent learning. For all these four datasets, Network1 converges at around 200 epochs, indicating that our proposed model is very robust.

5 Conclusion

This paper proposes CIMIC-GAN to effectively exploit the hidden information in incomplete data to learn multi-view consistent semantics. CIMIC-GAN works well without paired-view data and achieves optimal clustering performance even in the case of high missing rates. But there are also some disadvantages, such as the model not being easy to expand when the number of views is large. This work aims to provide a general framework for IMC of visual instances, where the models may also suffer from inherent bias in the data, especially in the case of dirty samples. The framework learns feature extractors and predictors, which can be used in areas such as feature compression, unsupervised labeling, and cross-modal feature retrieval. In the future, we plan to further explore the potential of our framework in other multi-view learning tasks, e.g., 3D reconstruction.

Acknowledgments

This work was supported by the National Science Foundation of China (61962045, 62062055, 61650205, 61902382, 61972381), the Open Foundation of Inner Mongolia Key Laboratory of Discipline Inspection and Supervision (IMDBD2020017, IMDBD2020018), the Science and Technology Planning Project of Inner Mongolia Autonomous Region (2019GG372).

References

- Baltrušaitis et al. [2018] Tadas Baltrušaitis, Chaitanya Ahuja, and Louis-Philippe Morency. Multimodal machine learning: A survey and taxonomy. IEEE transactions on pattern analysis and machine intelligence, 41(2):423–443, 2018.

- Liu et al. [2013] Jialu Liu, Chi Wang, Jing Gao, and Jiawei Han. Multi-view clustering via joint nonnegative matrix factorization. In Proceedings of the 2013 SIAM international conference on data mining, pages 252–260. SIAM, 2013.

- Zhao et al. [2017] Handong Zhao, Zhengming Ding, and Yun Fu. Multi-view clustering via deep matrix factorization. In Thirty-first AAAI conference on artificial intelligence, 2017.

- Yang et al. [2020a] Zuyuan Yang, Naiyao Liang, Wei Yan, Zhenni Li, and Shengli Xie. Uniform distribution non-negative matrix factorization for multiview clustering. IEEE transactions on cybernetics, 51(6):3249–3262, 2020a.

- Xu et al. [2019] Cai Xu, Ziyu Guan, Wei Zhao, Hongchang Wu, Yunfei Niu, and Beilei Ling. Adversarial incomplete multi-view clustering. In IJCAI, pages 3933–3939, 2019.

- Hu and Chen [2018] Menglei Hu and Songcan Chen. Doubly aligned incomplete multi-view clustering. In IJCAI, 2018.

- Wang et al. [2015] Weiran Wang, Raman Arora, Karen Livescu, and Jeff Bilmes. On deep multi-view representation learning. In International conference on machine learning, pages 1083–1092. PMLR, 2015.

- Li et al. [2014] Shao-Yuan Li, Yuan Jiang, and Zhi-Hua Zhou. Partial multi-view clustering. In Proceedings of the AAAI conference on artificial intelligence, volume 28, 2014.

- Zhang et al. [2018] Zheng Zhang, Li Liu, Fumin Shen, Heng Tao Shen, and Ling Shao. Binary multi-view clustering. IEEE transactions on pattern analysis and machine intelligence, 41(7):1774–1782, 2018.

- Wang et al. [2019] Hao Wang, Linlin Zong, Bing Liu, Yan Yang, and Wei Zhou. Spectral perturbation meets incomplete multi-view data. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, page 3677, 2019.

- Wen et al. [2019] Jie Wen, Zheng Zhang, Yong Xu, Bob Zhang, Lunke Fei, and Hong Liu. Unified embedding alignment with missing views inferring for incomplete multi-view clustering. In Proceedings of the AAAI conference on artificial intelligence, volume 33, pages 5393–5400, 2019.

- Liu et al. [2020] Xinwang Liu, Miaomiao Li, Chang Tang, Jingyuan Xia, Jian Xiong, Li Liu, Marius Kloft, and En Zhu. Efficient and effective regularized incomplete multi-view clustering. IEEE transactions on pattern analysis and machine intelligence, 43(8):2634–2646, 2020.

- Andrew et al. [2013] Galen Andrew, Raman Arora, Jeff Bilmes, and Karen Livescu. Deep canonical correlation analysis. In International conference on machine learning, pages 1247–1255. PMLR, 2013.

- Lin et al. [2021] Yijie Lin, Yuanbiao Gou, Zitao Liu, Boyun Li, Jiancheng Lv, and Xi Peng. Completer: Incomplete multi-view clustering via contrastive prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 11174–11183, 2021.

- Chen et al. [2020] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International conference on machine learning, pages 1597–1607. PMLR, 2020.

- Tian et al. [2020] Yonglong Tian, Dilip Krishnan, and Phillip Isola. Contrastive multiview coding. In European conference on computer vision, pages 776–794. Springer, 2020.

- Xu et al. [2021] Jie Xu, Huayi Tang, Yazhou Ren, Xiaofeng Zhu, and Lifang He. Contrastive multi-modal clustering. 2021.

- Yuan et al. [2021] Xin Yuan, Zhe L. Lin, Jason Kuen, Jianming Zhang, Yilin Wang, Michael Maire, Ajinkya Kale, and Baldo Faieta. Multimodal contrastive training for visual representation learning. pages 6991–7000, 2021.

- Goodfellow et al. [2014] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. Advances in neural information processing systems, 27, 2014.

- Deng et al. [2018] Cheng Deng, Zhaojia Chen, Xianglong Liu, Xinbo Gao, and Dacheng Tao. Triplet-based deep hashing network for cross-modal retrieval. IEEE Transactions on Image Processing, 27(8):3893–3903, 2018.

- Tsai et al. [2020] Yao-Hung Hubert Tsai, Yue Wu, Ruslan Salakhutdinov, and Louis-Philippe Morency. Self-supervised learning from a multi-view perspective. In International Conference on Learning Representations, 2020.

- Bengio et al. [2013] Yoshua Bengio, Aaron Courville, and Pascal Vincent. Representation learning: A review and new perspectives. IEEE transactions on pattern analysis and machine intelligence, 35(8):1798–1828, 2013.

- He et al. [2020] Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 9729–9738, 2020.

- Van Gansbeke et al. [2020] Wouter Van Gansbeke, Simon Vandenhende, Stamatios Georgoulis, Marc Proesmans, and Luc Van Gool. Scan: Learning to classify images without labels. In European Conference on Computer Vision, pages 268–285. Springer, 2020.

- [25] Xinlei Chen Haoqi Fan Ross Girshick and Kaiming He. Improved baselines with momentum contrastive learning.

- [26] Aaron van den Oord, Yazhe Li, and Oriol Vinyals. Representation learning with contrastive predictive coding.

- Grill et al. [2020] Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Avila Pires, Zhaohan Guo, Mohammad Gheshlaghi Azar, et al. Bootstrap your own latent-a new approach to self-supervised learning. Advances in Neural Information Processing Systems, 33:21271–21284, 2020.

- Chen and He [2021] Xinlei Chen and Kaiming He. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 15750–15758, 2021.

- Hu et al. [2022] Peng Hu, Hongyuan Zhu, Jie Lin, Dezhong Peng, Yin-Ping Zhao, and Xi Peng. Unsupervised contrastive cross-modal hashing. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.

- Rai et al. [2010] Piyush Rai, Anusua Trivedi, Hal Daumé III, and Scott L DuVall. Multiview clustering with incomplete views. In Proceedings of the NIPS Workshop on Machine Learning for Social Computing. Citeseer, 2010.

- MacQueen et al. [1967] James MacQueen et al. Some methods for classification and analysis of multivariate observations. In Proceedings of the fifth Berkeley symposium on mathematical statistics and probability, volume 1, pages 281–297. Oakland, CA, USA, 1967.

- Zong et al. [2020] Linlin Zong, Xianchao Zhang, Xinyue Liu, and Hong Yu. Multi-view clustering on data with partial instances and clusters. Neural Networks, 129:19–30, 2020.

- Yu et al. [2022] Zhiqi Yu, Mao Ye, Siying Xiao, and Liang Tian. Learning missing instances in latent space for incomplete multi-view clustering. Knowledge-Based Systems, page 109122, 2022.

- Peng et al. [2019] Xi Peng, Zhenyu Huang, Jiancheng Lv, Hongyuan Zhu, and Joey Tianyi Zhou. Comic: Multi-view clustering without parameter selection. In International conference on machine learning, pages 5092–5101. PMLR, 2019.

- Wen et al. [2021] Jie Wen, Zheng Zhang, Yong Xu, Bob Zhang, Lunke Fei, and Guo-Sen Xie. Cdimc-net: Cognitive deep incomplete multi-view clustering network. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, pages 3230–3236, 2021.

- Li et al. [2022] Miaomiao Li, Siwei Wang, Xinwang Liu, and Suyuan Liu. Parameter-free and scalable incomplete multiview clustering with prototype graph. IEEE Transactions on Neural Networks and Learning Systems, 2022.

- Qiang et al. [2021] Qianyao Qiang, Bin Zhang, Fei Wang, and Feiping Nie. Fast multi-view discrete clustering with anchor graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, pages 9360–9367, 2021.

- Wen et al. [2018] Jie Wen, Yong Xu, and Hong Liu. Incomplete multiview spectral clustering with adaptive graph learning. IEEE transactions on cybernetics, 50(4):1418–1429, 2018.

- Jiang et al. [2019] Yangbangyan Jiang, Qianqian Xu, Zhiyong Yang, Xiaochun Cao, and Qingming Huang. Dm2c: Deep mixed-modal clustering. Advances in Neural Information Processing Systems, 32, 2019.

- Wang et al. [2018] Qianqian Wang, Zhengming Ding, Zhiqiang Tao, Quanxue Gao, and Yun Fu. Partial multi-view clustering via consistent gan. In 2018 IEEE International Conference on Data Mining (ICDM), pages 1290–1295. IEEE, 2018.

- Zhang et al. [2019] Changqing Zhang, Yeqing Liu, and Huazhu Fu. Ae2-nets: Autoencoder in autoencoder networks. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 2577–2585, 2019.

- Wang et al. [2021] Jingyu Wang, Zhenyu Ma, Feiping Nie, and Xuelong Li. Progressive self-supervised clustering with novel category discovery. IEEE Transactions on Cybernetics, 2021.

- Zhu et al. [2017] Jun-Yan Zhu, Taesung Park, Phillip Isola, and Alexei A Efros. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE international conference on computer vision, pages 2223–2232, 2017.

- Yang et al. [2020b] Xuewen Yang, Dongliang Xie, and Xin Wang. Crossing-domain generative adversarial networks for unsupervised multi-domain image-to-image translation. CoRR, abs/2008.11882, 2020b. URL https://arxiv.org/abs/2008.11882.

- Choi et al. [2018] Yunjey Choi, Minje Choi, Munyoung Kim, Jung-Woo Ha, Sunghun Kim, and Jaegul Choo. Stargan: Unified generative adversarial networks for multi-domain image-to-image translation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 8789–8797, 2018.

- Hu et al. [2021] Peng Hu, Xi Peng, Hongyuan Zhu, Jie Lin, Liangli Zhen, Wei Wang, and Dezhong Peng. Cross-modal discriminant adversarial network. Pattern Recognition, 112:107734, 2021.

- Niu et al. [2020] Chuang Niu, Jun Zhang, Ge Wang, and Jimin Liang. Gatcluster: Self-supervised gaussian-attention network for image clustering. In European Conference on Computer Vision, pages 735–751. Springer, 2020.

- Niu et al. [2021] Chuang Niu, Hongming Shan, and Ge Wang. Spice: Semantic pseudo-labeling for image clustering. arXiv preprint arXiv:2103.09382, 2021.

- Hinton and Salakhutdinov [2006] Geoffrey E Hinton and Ruslan R Salakhutdinov. Reducing the dimensionality of data with neural networks. science, 313(5786):504–507, 2006.

- Ji et al. [2019] Xu Ji, Joao F Henriques, and Andrea Vedaldi. Invariant information clustering for unsupervised image classification and segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 9865–9874, 2019.

- Li et al. [2015] Yeqing Li, Feiping Nie, Heng Huang, and Junzhou Huang. Large-scale multi-view spectral clustering via bipartite graph. In Twenty-Ninth AAAI Conference on Artificial Intelligence, 2015.

- Fei-Fei and Perona [2005] Li Fei-Fei and Pietro Perona. A bayesian hierarchical model for learning natural scene categories. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), volume 2, pages 524–531. IEEE, 2005.

- Yang and Newsam [2010] Yi Yang and Shawn Newsam. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL international conference on advances in geographic information systems, pages 270–279, 2010.

- Kingma and Ba [2015] Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. In ICLR (Poster), 2015.

- Amigó et al. [2009] Enrique Amigó, Julio Gonzalo, Javier Artiles, and Felisa Verdejo. A comparison of extrinsic clustering evaluation metrics based on formal constraints. Information retrieval, 12(4):461–486, 2009.

- Xiao et al. [2017] Han Xiao, Kashif Rasul, and Roland Vollgraf. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747, 2017.

- Van der Maaten and Hinton [2008] Laurens Van der Maaten and Geoffrey Hinton. Visualizing data using t-sne. Journal of machine learning research, 9(11), 2008.