Self-Learning with Rectification Strategy for Human Parsing

Self-Learning with Rectification Strategy for Human Parsing

Abstract

In this paper, we solve the sample shortage problem in the human parsing task. We begin with the self-learning strategy, which generates pseudo-labels for unlabeled data to retrain the model. However, directly using noisy pseudo-labels will cause error amplification and accumulation. Considering the topology structure of human body, we propose a trainable graph reasoning method that establishes internal structural connections between graph nodes to correct two typical errors in the pseudo-labels, i.e., the global structural error and the local consistency error. For the global error, we first transform category-wise features into a high-level graph model with coarse-grained structural information, and then decouple the high-level graph to reconstruct the category features. The reconstructed features have a stronger ability to represent the topology structure of the human body. Enlarging the receptive field of features can effectively reducing the local error. We first project feature pixels into a local graph model to capture pixel-wise relations in a hierarchical graph manner, then reverse the relation information back to the pixels. With the global structural and local consistency modules, these errors are rectified and confident pseudo-labels are generated for retraining. Extensive experiments on the LIP and the ATR datasets demonstrate the effectiveness of our global and local rectification modules. Our method outperforms other state-of-the-art methods in supervised human parsing tasks.

1 Introduction

Human parsing, a sub-task of semantic segmentation, aims to understand human-body parts on the pixel level. In particular, human parsing is characterized by utilizing the structure of the human body. It has been widely applied in human-computer interaction [26], human behavior understanding [45, 10, 53], security monitoring [55, 12] etc. For deep neural network-based algorithms, they learn complex information from a large amount of labeled samples to boost performance. But collecting accurate and fine-grained labeling for human parsing is very expensive and needs massive human labor. With insufficient training samples, weakly-supervised and semi-supervised methods are proposed to address this issue. Most existing algorithms adopt the human posture or skeleton key points as a supplement to human parsing [11, 27]. However, these algorithms require extra computing resources of human pose or key points, which are usually unavailable in real cases and may introduce new errors [52].

To enlarge the number of samples for training, we regard the predicted masks of unlabeled images as their pseudo-labels and retrain the segmentation network. But these noisy pseudo-labels contain many errors. If the network is blindly confident of its incorrect predictions, the error will be amplified during retraining [34]. Thus, the technical bottleneck is how to autonomously correct the errors of pseudo-labels. For severely noisy pseudo-labels in self-training, some label denoising algorithms are proposed. The transition matrix is adopted to capture the transition probability between the noisy label and the true label [20, 33], and the extra linear network is added to evaluate the noise [15, 40]. However, these algorithms conducting experiments on the simple MNIST dataset are hard to learn the complex noise in human parsing. We propose a new rectification network to detect and correct the errors of pseudo-labels by graph reasoning.

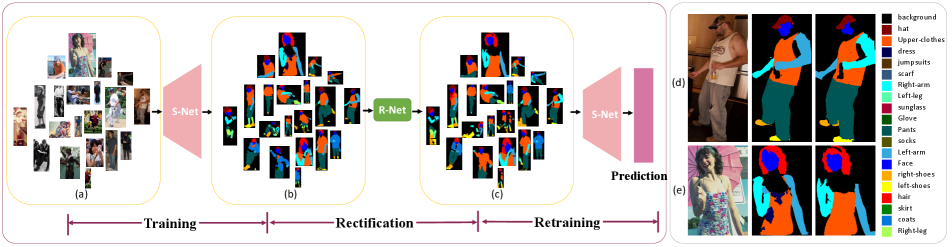

There are two main types of predicted errors in human parsing [32, 29], i.e., the global structure error [17] and the local consistency error (Fig. 1). The first type is the inter-part error in the human body. For example, in Fig. 1 (d), the left-arm is incorrectly predicted as the right-arm. It is mainly caused by reasoning errors on the human structural level. The second type is the intra-part error such as the spotted noise in an area of a certain category. As shown in Fig. 1 (e), some of the pixels belonging to the upper-clothes are predicted as the dress due to the limited receptive field of the networks. Luo et al. [29] proposed macro and micro discriminators respectively for the two types of errors, but the adversarial strategy leads to unstable training process with interminable training time. Nie et al. [32] proposed to jointly learn human parsing and pose estimation, but their algorithm needs doubled computing resources and multiplied training time. Because of the topology structure of human body, it is natural to build a graph model for it and perform graph reasoning to solve the structural errors.

Based on the idea of pseudo-label denoising in self-learning, we propose a new Graph Rectification Network that learns the hierarchical structure of human body and solves the above-mentioned errors by constructing a dual graph reasoning framework. To deal with the global structural error, we introduce a Global Structure Module for graph reasoning. We first transform the grid features into a low-level semantic graph, where each node explicitly represents a certain body-part category (e.g., left leg, right arm, and coat). Then the low-level graph is aggregated into a high-level graph to implicitly represent coarse-grained human parts (e.g., upper limbs, lower limbs and clothes). The hierarchical knowledge is transferred back to each category by decoupling the high-level graph to reconstruct a new low-level graph. The reconstructed low-level graph are more discriminative on confusing problems caused by similar appearances. As for the local consistency error, we build a Local Consistency Module to process spatial pixels. It indirectly defines the relationship between pixels in an enlarged receptive field. Meanwhile, the globally structural information works as the auxiliary knowledge to solve the local errors. By guiding the global structural relationship and enlarging the local receptive field, the local consistency error is corrected. Thus, we combine the global graph with the local graph to boost performance of the fine-grained segmentation. The dual hierarchical structural information acts as a structural attention to rectify the pseudo-labels.

Our paper has the following main contributions. We first propose a semi-supervised training framework named as Graph Rectification Network to solve the sample insufficiency problem in human parsing. Then, a Global Structure Module and a Local Consistency Module are designed to rectify the global and local errors of pseudo-labels by constructing the graph reasoning models on different semantic levels. Finally, our method can be applied to different baseline algorithms with state-of-the-art performance.

2 Related Work

Human Parsing. Human parsing is a subtask of semantic segmentation, but with the particular structure constraint on the human body [35, 43]. Gong et al. [17] introduced the pseudo pose loss as an auxiliary constraint to assist human parsing. The works of [52, 49, 32] jointly trained pose estimation and human parsing networks to improve the performance of both tasks. Wang et al. [35] adopted the bottom-up and top-down hierarchical human body structure to reason human part segmentation and achieved the state-of-the-art performance. In our work, we exploit the hierarchical spirit in our graph reasoning modules. Through trainable aggregation strategy, we transform the low-level nodes corresponding to different human parts to implicit high-level nodes. Then we revert them to low-level nodes through a trainable decoupling strategy, which makes each low-level node carry the structural knowledge.

Semi-supervised learning. In image and video segmentation, sufficient and accurate annotations are helpful for network training [25, 23, 4, 6, 46, 50]. However, the annotation work is time-consuming and requires amounts of human resources. Existing datasets may not satisfy the demand of network training. Recently, semi-supervised and weakly-supervised methods have emerged. To fuse the specific structural information of the human body, [11, 27] adopted pose predictions of the target domain to assist the segmentation of the source domain. [16] proposed to augment the number of samples from other datasets by matching the corresponding relationship among categories via the graph model. Generating pseudo-labels for wild data is an efficient strategy for data augmentation [21, 9, 44]. However, the self-learning strategy with noisy pseudo-labels may result in error amplification and accumulation. To address it, many label denoising algorithms have been proposed [18]. The transition matrix was adopted to capture the transition probability between the noisy label and the true label [20, 33, 15, 40]. However, it is hard to learn the transition matrix due to the agnosticism of neural networks.

We follow the idea of pseudo-label denoising in self-learning and propose the Graph Rectification Network, which learns the hierarchical structure of the human body and assigns the global and local information to each graph node. This structural information helps to rectify the global structural error and the local consistency error happened when the training samples are not sufficient. Thus more confident pseudo-labels are generated for expanding the training set by semi-supervised learning.

Graph reasoning. Rex et al. [51] proposed a differentiable pooling for graph representation by aggregating multiple nodes with similar relations into one node to obtain an advanced graph model. Gao et al. [13] further proposed a graph U-Net algorithm, which allows the graph information to be aggregated and spread out, resulting in a graph model with stronger representation ability. Because of the effectiveness of graph reasoning, it has been quickly applied in various fields, such as human re-id [36, 30, 24], motion recognition [39, 48, 41], human-object relation reasoning [35, 31, 38, 14], and multi-label classification problem [8]. In visual semantic segmentation, [7, 22] transformed the grid Euclidean data into the structural graph model, and performed graph reasoning on it. The human body is a typical topology structure with skeleton key point constraints (e.g., the left arm and left foot should be on one side of the body). So graph reasoning is natural to be adopted in this task. Gong et al. [16] took full advantage of the labeled datasets by modeling the relations between different domains with a pre-defined adjacency matrix. Our work constructs a global graph model by mapping the category-wise features into graph nodes and aggregates these nodes into a higher-level graph to acquire hierarchical structure knowledge of the human body.

3 Method

3.1 Overview

Our pipeline is shown in Fig. 2. We put images into the Segmentation Network (S-Net) to get the predicted masks. Then we concatenate the images and predicted masks and deliver them into our rectification network to get the globally and locally rectified masks. We use the rectified masks to retrain the S-Net. The Rectification Network (R-Net) consists of three parts, that are the backbone, the Global Structure Module (GSM) and the Local Consistency Module (LCM). The GSM module is introduced in 3.2 and 3.3 including graph construction and hierarchical graph reasoning. The LCM module is introduced in 3.4. We introduce the integration method of the GSM and LCM in 3.5, and our semi-supervised training strategy in 3.6.

The global error is the predicted error of the entire human parts, such as confusing left and right arms. The local error means that pixels belonging to one human part may be assigned to two or more categories (e.g. the pixels belonging to dress are assigned to dress and upper-clothes). The GSM and LCM are different in the way the graph is built for different optimization objectives.

3.2 Build Global Graph Model Explicitly

To parse the hard samples such as complicated postures or limbs absence, we propose a global graph model to explicitly represent the human-body features, and then optimize the model through an implicit graph model hierarchical reasoning. As shown in Fig. 2, the S-Net generates features of noisy masks , where , and represent number of categories, the width and the height of the feature map, respectively.

To semantically model the features, we perform the one-to-one correspondence between the channels of the feature map and the nodes of the graph model. We globally transform the feature into a semantically low-level undirected graph model , where denotes the eigenvectors of graph nodes, denotes the dimension of each eigenvector, denotes the adjacency matrix of the graph model and equals to the category number of the dataset. denotes the spatial adjacency between the class and the class . For the -th channel of corresponding to the -th category, i.e., , we transform it to a graph node as Eq. (1):

| (1) |

where means reshaping the matrix from to , is the projection matrix of the -th category, is the eigenvector of the -th node, and denotes the matrix multiplication. is a learnable parameter and can be trained end-to-end. The low-level graph model contains the representation of human-body parts and the relations among them.

3.3 Graph Model Aggregation/Decoupling

Because the human body structure is hierarchical [35], e.g., the upper body includes the head, the upper torso and the arms, the lower body includes the legs and the feet, the clothes include the coat, the pants and the dress, etc. We aggregate the low-level information of the relational graph nodes to perform higher-level structural information reasoning. In detail, we build the GSM to depict human structure information hierarchically and avoid the semantic structural errors by graph reasoning [16].

For the low-level graph model , we use Eq. (2) to perform graph reasoning for information propagation of nodes.

| (2) |

where is the node features after low-level graph reasoning, is the trainable weight matrix and denotes the number of nodes in the low-level graph model.

We propose a trainable aggregation matrix to convert the low-level graph model to the high-level graph model. Then we obtain the high-level graph model by the Eq. (3) and (4),

| (3) |

| (4) |

where and denote the feature of nodes and the number of nodes in the high-level graph model, respectively. For the high-level graph model, similar to Eq. (2), we perform message passing between nodes with Eq. (5) to get a new graph representation with more discriminative power,

| (5) |

Specifically, we compute the aggregation matrix with Eq. (6),

| (6) |

where is the trainable weight matrix, and is pre-set to control the number of nodes in the high-level graph model. The is equal to the number of categories here. This strategy makes our aggregation matrix trainable and improves the aggregating ability from low-level categories to high-level categories.

After aggregating the low-level categories (e.g., head, arm, pants and so on) into the implicit high-level categories (e.g., torso and clothes), we next revert the aggregated high-level model back to the low-level model. However, we cannot separate them by simply converting according to corresponding relations because they are one-to-many correspondence. Thus we perform decoupling processing to revert the aggregated information to the body-part level. After decoupling, a set of low-level graph nodes with stronger discriminative features is generated. Similar to the process of aggregation, we compute the trainable decoupling matrix by Eq. (7), and decouple the high-level graph model by Eq. (8) and (9) to generate the reverted eigenvectors of the low-level graph and the adjacency matrix :

| (7) |

| (8) |

| (9) |

In this way, we obtain the reverted low-level graph model containing the high-level body structure information.

We apply a skip connection to integrate the original low-level graph with the reverted low-level graph,

| (10) |

We enhance the discrimination of the features by the trainable aggregating and decoupling strategy to correct the common global structural errors of human parsing, e.g., the confusion caused by the similar appearance of human parts. The eigenvector of the reasoned graph model contains strong representation ability.

To apply hierarchical graph model to original feature maps, we convert the graph nodes to channel weights of through Eq. (11):

| (11) |

where denotes the global average pooling operation and denotes the element-wise product between a vector and a tensor , where

| (12) |

This strategy can increase the weights of categories with strong correlations and reduce the weights of the irrelevant categories.

3.4 Local Consistency Module

In human parsing, another type of semantic error is the local consistency error. It means that pixels belonging to one human part may be assigned to two or more categories. This problem can be solved by computing the relation between pixels in an enlarged receptive field [2, 47]. The non-local algorithm [47] was proposed to calculate the spatial-wise correlation of all the pixels to obtain global semantic information, but the algorithm brings a large amount of computational redundancy. Similarly, it is not practical to set each pixel as a graph node and perform the graph reasoning directly, because the computational cost is too high to calculate the nodes relations.

Thus we project pixel features to graph nodes in our LCM. After projection, we indirectly obtain the relations between pixels by calculating the relations between nodes. By avoiding the local error, the performance can be improved through the enlarged receptive field within the controllable amount of calculation.

We perform projection from the input feature map to a local graph model by Eq. (13). represents the node features. is the channel number of . It may not equal to the number of categories .

| (13) |

where and represent the projection matrices, and represents reshaping the matrix from to . Then we perform graph reasoning similar to Eq. (2) to obtain the graph model . Note that the way to build the graph model in LCM is different from the way to GSM in 3.2.

We convert the graph feature to the weight of the feature maps, and perform the element-wise product of the weight and the input feature map to get the spatially and locally rectified feature map by Eq. (14),

| (14) |

By local graph reasoning, we capture the contexts and get the spatial relation of pixels indirectly. Because of the structural nature of human parts, we utilize the global information to adjust the local information for better local category consistency, and the first equation in Eq. (14) is transferred to

| (15) |

where is the weight of global auxiliary.

3.5 Graph Module Integration

We correct the global structural error and the local consistency error of the predicted masks by our GSM and LCM, respectively. The graph features of the two modules have different level of feature representation. Thus, we can not simply integrate them by addition. For the two types of errors, the local error is lower-level than the global one and should be corrected before the global structure error. It is worth noting that the process is not reversible. Thus the GSM and LCM are integrated by a cascaded way, as shown in Eq. (16).

| (16) |

where represents multi-layer convolutions. Then we integrate these two rectification modules to obtain stronger representation ability.

3.6 Training Strategy

Pseudo-labels for retraining may exploit abundant features of the object, making the segmentation network have stronger inference power. However, lots of noisy pseudo-labels are introduced and the network is not capable to rectify itself autonomously, causing error accumulation and network degradation. Simply using the originally predicted masks to retrain the segmentation network cannot enhance the performance effectively.

On the contrary, our rectification network is proposed to learn the error distribution of noisy predicted masks and reduce the global and local errors. The training process is elaborately shown as Alg. 1.

4 Experiments

4.1 Datasets and Metrics

Datasets.

We evaluated our algorithm on LIP and ATR datasets. The LIP dataset [17] is currently the largest dataset of human parsing, containing many difficult samples, e.g., severely missing human parts, back-towards-lens, and complicated human posture. It provides 50462 images, including 30462 images for training, 10000 images for verification and 10000 images for testing. There are 20 categories labeled in the LIP dataset, involving 12 types of clothing, 7 types of human parts, and the background as one category. The ATR dataset [25] contains 18 categories of pixel-wise annotations, including 6 categories of body parts, 11 categories of clothing and the background. It has 17700 images, including 16000 for training, 1000 for testing and 700 for verification.

Metrics

We follow the rules in the protocol of the LIP dataset to use pixel-accuracy, mean-accuracy and mean IoU as the evaluation criteria. We use the criteria of pixel accuracy, foreground accuracy, average precision, average recall and average F1-score for the ATR dataset.

4.2 Implementation Details

The baseline of our segmentation network is the CE2P algorithm [37], and the backbone of our rectification network is ResNet-101 [19], since our graph reasoning is performed at a high semantic level. The size of input images is . We adopt data augmentation in training and retraining, like random scales (0.5 to 1.5), cropping and horizontal flipping. We both train and retrain our networks for 150 epochs in experiments under the semi-supervised setting. In the optimization process, we adopt SGD optimizer with momentum of 0.9 and weight decay of 5e-4. We use the “poly” learning rate strategy with an initial rate of 0.007. The node number of low-level graph model is a hyper-parameter, and ={20, 18} for {LIP, ATR} dataset. We set the batch size as 10 per-GPU.

| DS | R | G | L | P-Accu | M-Accu | M-IoU | IoU.I. | IoU.D. |

| 82.10 | 51.00 | 39.79 | 0.00 | 13.31 | ||||

| ✓ | 82.66 | 51.30 | 40.64 | 0.85 | 12.46 | |||

| 1/8 | ✓ | ✓ | 83.11 | 53.57 | 42.32 | 2.53 | 10.78 | |

| ✓ | ✓ | 83.06 | 53.28 | 42.18 | 2.39 | 10.92 | ||

| ✓ | ✓ | ✓ | 83.26 | 54.12 | 42.79 | 3.00 | 10.31 | |

| 83.47 | 54.75 | 43.06 | 0.00 | 10.04 | ||||

| ✓ | 84.21 | 55.89 | 44.66 | 1.60 | 8.44 | |||

| 1/4 | ✓ | ✓ | 85.77 | 59.91 | 48.34 | 5.28 | 4.76 | |

| ✓ | ✓ | 85.73 | 59.70 | 48.24 | 5.18 | 4.86 | ||

| ✓ | ✓ | ✓ | 85.72 | 61.11 | 48.60 | 5.54 | 4.50 | |

| 85.01 | 59.24 | 47.00 | 0.00 | 6.10 | ||||

| ✓ | 85.57 | 59.49 | 47.84 | 0.84 | 5.26 | |||

| 1/2 | ✓ | ✓ | 86.60 | 62.47 | 50.56 | 3.56 | 2.54 | |

| ✓ | ✓ | 86.28 | 62.09 | 49.85 | 2.85 | 3.25 | ||

| ✓ | ✓ | ✓ | 86.67 | 64.89 | 50.99 | 3.99 | 2.11 | |

| 1 | 87.37 | 63.20 | 53.10 | 0.00 | 0.00 |

4.3 Ablation Study

We conduct elaborate ablation experiments for our rectification strategy and the graph reasoning modules. In section 4.3.1, we conduct experiments under semi-supervised settings to evaluate our retraining and rectification strategy. We adopt different amount of labeled and unlabeled samples for semi-supervised learning. In section 4.3.2, we perform more experiments under supervised setting to test our GSM and LCM.

4.3.1 Semi-Supervised Training Test

We first conduct the semi-supervised learning experiments with different training settings. With the rectification module consisting of the GSM and the LCM, we expand the dataset with rectified masks as pseudo-labels. In the dataset, we consider a small number of samples with ground truth as labeled data, and the other samples without ground truth as unlabeled data. By retraining the segmentation network with labeled samples and samples with rectified pseudo-labels, the segmentation network gets better performance.

| Method | L | G |

bkg |

hat |

hair |

glove |

glasses |

u-cloth |

dress |

coat |

socks |

pants |

j-suits |

scarf |

skirt |

face |

l-arm |

r-arm |

l-leg |

r-leg |

l-shoe |

r-shoe |

P-Accu |

M-Accu |

mIoU |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PSPNet[54] | 84.88 | 59.86 | 66.50 | 32.40 | 14.40 | 65.79 | 33.73 | 52.82 | 39.04 | 70.04 | 27.40 | 14.53 | 26.93 | 69.14 | 54.09 | 57.01 | 39.78 | 40.41 | 27.84 | 27.82 | 84.77 | 55.26 | 45.22 | ||

| ✓ | 85.40 | 62.25 | 67.07 | 36.12 | 21.88 | 66.42 | 34.18 | 53.45 | 42.04 | 71.11 | 28.46 | 18.35 | 29.10 | 70.02 | 55.75 | 58.60 | 46.70 | 45.60 | 32.25 | 32.31 | 85.23 | 59.43 | 47.85 | ||

| ✓ | 85.76 | 62.93 | 67.75 | 35.90 | 21.57 | 66.73 | 33.88 | 54.53 | 40.56 | 71.43 | 30.70 | 15.89 | 24.98 | 70.46 | 56.63 | 59.72 | 44.75 | 45.40 | 32.02 | 33.05 | 85.46 | 58.98 | 47.73 | ||

| ✓ | ✓ | 85.56 | 62.54 | 67.04 | 35.94 | 23.29 | 66.91 | 33.53 | 54.68 | 42.20 | 71.57 | 29.96 | 17.66 | 28.62 | 70.25 | 55.87 | 58.70 | 45.81 | 45.86 | 32.03 | 32.88 | 85.42 | 59.56 | 48.04 | |

| CE2P [37] | 86.23 | 66.07 | 70.41 | 37.75 | 31.87 | 67.16 | 29.91 | 55.01 | 43.99 | 70.66 | 32.30 | 19.60 | 25.71 | 72.46 | 59.17 | 62.68 | 50.77 | 50.12 | 35.40 | 35.53 | 85.90 | 61.57 | 50.14 | ||

| ✓ | 87.75 | 65.99 | 71.53 | 41.99 | 31.46 | 69.25 | 34.73 | 56.55 | 47.99 | 74.76 | 30.99 | 22.88 | 28.97 | 74.05 | 64.66 | 67.18 | 58.84 | 57.87 | 45.07 | 46.64 | 87.37 | 65.51 | 53.99 | ||

| ✓ | 87.90 | 66.67 | 71.87 | 42.54 | 30.15 | 69.98 | 36.98 | 57.25 | 49.18 | 74.88 | 35.52 | 20.45 | 28.08 | 74.71 | 64.77 | 67.72 | 58.41 | 58.19 | 44.10 | 45.37 | 87.53 | 65.89 | 54.08 | ||

| ✓ | ✓ | 87.70 | 65.74 | 71.55 | 42.58 | 30.62 | 69.44 | 37.13 | 56.05 | 47.34 | 74.92 | 31.18 | 23.77 | 30.44 | 74.70 | 64.73 | 67.27 | 57.18 | 57.89 | 45.82 | 46.30 | 87.35 | 66.11 | 54.12 |

The LIP dataset. The experimental results on the LIP dataset are shown in Tab. 1. We separately adopt of the samples as labeled data, and compare the results between retraining and training phases. When the retraining strategy is without the GSM and LCM, the performance of mIoU is only improved by . Although the quantity of retraining samples (the unlabeled samples with pseudo-labels) is large, the performance is not improved obviously. The experimental results demonstrate that severely noisy pseudo-labels consisting of the global and local errors lead to error accumulation and model degradation.

On the contrary, the performance of our retraining network is improved by respectively in the mIoU criterion, with the assistance of GSM and the LCM. When using GSM, the mIoU is improved by , and when applying the LCM, the mIoU is improved by . We attribute the phenomenon to the characteristic of the base segmentation network, having a weak ability to distinguish the human parts and leading to more global body-part errors than the local consistency errors.

Moreover, when we use only labeled samples of the dataset with retraining and rectification strategies, the performance achieves considerable improvement, by in mIoU. And it even outperforms the result of using 1/2 labeled samples without retraining and rectification strategies. This demonstrates the high-quality of our rectified mechanism. For the gap between using labeled samples and full labeled samples of the dataset, adopting our semi-supervised strategy reduces the performance drop of mIoU from to .

The ATR dataset. The experimental results on the ATR dataset [25] are shown in Tab. 3. Our retraining strategy with the GSM and LCM achieves improvement over the baseline with samples of the dataset. Using a single global or a local module in retraining can improve the mIoU by and . The gap between the algorithms of using all samples and samples (without retraining) is , and the gap is reduced to when adopting our rectification strategy. It demonstrates that the retraining strategy with our rectification module is reliable for the human parsing network to improve the representation and classification power.

| DS | R | G | L | P-Accu | F-Accu | A-Prec | Recall | F1-S | F.I | F.D |

|---|---|---|---|---|---|---|---|---|---|---|

| 92.75 | 72.62 | 60.56 | 67.48 | 63.83 | 0.00 | 6.04 | ||||

| ✓ | 93.61 | 75.51 | 61.81 | 67.17 | 64.38 | 0.55 | 5.49 | |||

| 1/2 | ✓ | ✓ | 94.29 | 78.33 | 65.27 | 69.89 | 67.50 | 3.67 | 2.37 | |

| ✓ | ✓ | 94.18 | 77.81 | 64.81 | 69.48 | 67.06 | 3.23 | 2.81 | ||

| ✓ | ✓ | ✓ | 94.43 | 78.74 | 66.27 | 70.36 | 68.25 | 4.42 | 1.62 | |

| 1 | 94.66 | 79.74 | 68.26 | 71.56 | 69.87 | 0.00 | 0.00 |

4.3.2 Module Effectiveness Evaluation

We apply our global structure module and local consistency module into base segmentation networks rather than the rectification network, under the supervised learning settings to evaluate the effectiveness of our two graph reasoning modules. We perform the ablation experiments on the LIP validation set [17]. We adopt the CE2P and PSPNet as baselines and reproduce their results by running the source codes. As shown in Tab. 2, the proposed graph reasoning modules almost improve all the human-part categories. By integrating the global module and the local module into CE2P [37], the performance is improved by and , respectively. As for the PSPNet [54], the mIoU is improved by and , respectively. For the confusing categories with symmetrical structure (e.g., left and right arms) or similar appearance (e.g., the coat and jumpsuits), our algorithms predict more accurately.

4.4 Compare with State-of-the-art

We compare the proposed methods with several strong baselines on the LIP dataset, as demonstrated in Tab. 4. In the experiment setting, we treat the training set as labeled samples, and use the validation set and the test set as unlabeled samples. According to our proposed framework, we generate pseudo-labels for unlabeled samples, then use the labeled and augmented samples to retrain the segmentation network. As shown in Tab. 4, our algorithm outperforms the other state-of-the-art algorithms. Specifically, our method yields a mIoU of , improved by compared to the HRNet [42] and by compared to the baseline CE2P [37]. Additionally, our model also outperforms the MuLA [32] (), the MMANs [29] (), the Attention [5] (), and the Deeplabv2 [3] (), respectively. MMANs [29] adopted adversarial network with unstable and intricate training process, and MuLA [32] proposed to jointly train the human parsing and pose estimation networks with tremendous cost of computation and time. But they only get limited performance in terms of mIoU. This confirms the effectiveness of our rectification strategy. Using unlabeled samples still makes sense for improving the representation and generalization power.

| Method | Published | P-Accu | M-Accu | mIoU |

|---|---|---|---|---|

| SegNet[1] | 2017 T-PAMI | 69.0 | 24.0 | 18.2 |

| FCN-8s[28] | 2015 CVPR | 76.1 | 36.8 | 28.3 |

| DeepLab V2[3] | 2017 T-PAMI | 82.7 | 51.6 | 41.6 |

| Attention[5] | 2016 CVPR | 83.4 | 54.4 | 42.9 |

| Attention+SSL[17] | 2017 CVPR | 84.4 | 54.9 | 44.7 |

| MMANs[29] | 2018 ECCV | - | - | 46.81 |

| MuLA[32] | 2018 ECCV | 88.5 | 60.5 | 49.3 |

| JPPNet[23] | 2018 T-PAMI | - | - | 51.37 |

| CE2P[37] | 2019 AAAI | - | - | 53.10 |

| HRNet[42] | 2019 ICCV | 88.21 | 67.43 | 55.90 |

| Ours | 88.33 | 66.53 | 56.34 |

4.5 Qualitative Results

We add our rectification network on the top of the segmentation network, and retrain the latter one using the labeled data and the generated pseudo-labeled samples. The visual explanation of the rectification strategy is shown in Fig. 3. In the second row of the figure, the wrongly predicted areas are highlighted in the dotted rectangles. The CE2P algorithm correctly predicts the edges but fails to label the hard samples of semantic errors. The third row illustrates the attention maps for rectification. These maps are generated by calculating the gradient of the rectification network and corresponding to the original image. It is obvious that the rectified network focuses on the mistakenly predicted human parts. For example, for the hard samples of the back view (columns ), the samples without head (columns ), and the samples with complicated gestures (columns ), the rectification network can capture the errors and perform rectification as shown in the fourth row. These predicted errors in baseline are corrected effectively, especially for the confusing left and right limbs. Moreover, due to the high cost of accurate pixel-wise annotation, the ground truth of the LIP dataset is low-quality in detail. Our rectification mask performs even better than the ground truth in these details (column 8).

5 Conclusion

For the problem of insufficient training data in human parsing, we managed to generate confident pseudo-labels for unlabeled samples. We propose a rectification network for detecting and correcting predicted errors of pseudo-labels. The rectification network consists of the global structure module and the local consistency module. The global module is based on the hierarchical graph reasoning and captures the structural relationships of human-body parts. The local module is based on relational local graph reasoning and captures larger semantic contexts with acceptable computational costs. Through the rectification network, the global structural error and local consistency error of the pseudo-labels are corrected. We retrain the segmentation network with both the labeled and pseudo-labeled samples. Experimental results have demonstrated the effectiveness of our parsing framework.

References

- [1] V. Badrinarayanan, A. Kendall, and R. Cipolla. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE TPAMI, 39(12):2481–2495, 2017.

- [2] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv preprint arXiv:1412.7062, 2014.

- [3] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE TPAMI, 40(4):834–848, 2017.

- [4] X. Chen, R. Mottaghi, X. Liu, S. Fidler, R. Urtasun, and A. Yuille. Detect what you can: Detecting and representing objects using holistic models and body parts. In IEEE CVPR, pages 1971–1978, 2014.

- [5] L.-C. Chen, Y. Yang, J. Wang, W. Xu, and A. L. Yuille. Attention to scale: Scale-aware semantic image segmentation. In IEEE CVPR, pages 3640–3649, 2016.

- [6] J. Hu, J. Shen, B. Yang, and L. Shao: Infinitely wide graph convolutional networks: semi-supervised learning via Gaussian processes, arXiv preprint, arXiv:2002.12168, 2020.

- [7] Y. Chen, M. Rohrbach, Z. Yan, Y. Shuicheng, J. Feng, and Y. Kalantidis. Graph-based global reasoning networks. In IEEE CVPR, pages 433–442, 2019.

- [8] Z.-M. Chen, X.-S. Wei, P. Wang, and Y. Guo. Multi-label image recognition with graph convolutional networks. In IEEE CVPR, pages 5177–5186, 2019.

- [9] Y. Ding, L. Wang, D. Fan, and B. Gong. A semi-supervised two-stage approach to learning from noisy labels. In IEEE WACV, pages 1215–1224. IEEE, 2018.

- [10] L. Fan, W. Wang, S. Huang, X. Tang, and S.-C. Zhu. Understanding human gaze communication by spatio-temporal graph reasoning. arXiv preprint arXiv:1909.02144, 2019.

- [11] H.-S. Fang, G. Lu, X. Fang, J. Xie, Y.-W. Tai, and C. Lu. Weakly and semi supervised human body part parsing via pose-guided knowledge transfer. arXiv preprint arXiv:1805.04310, 2018.

- [12] H. Foroughi, B. S. Aski, and H. Pourreza. Intelligent video surveillance for monitoring fall detection of elderly in home environments. In international conference on computer and information technology, pages 219–224. IEEE, 2008.

- [13] H. Gao and S. Ji. Graph u-nets. arXiv preprint arXiv:1905.05178, 2019.

- [14] W. Wang, H. Zhu, J. Dai, Y. Pang, J. Shen, L. Shao, Hierarchical human parsing with typed part-relation reasoning, In IEEE CVPR, 2020.

- [15] J. Goldberger and E. Ben-Reuven. Training deep neural-networks using a noise adaptation layer. 2016.

- [16] K. Gong, Y. Gao, X. Liang, X. Shen, M. Wang, and L. Lin. Graphonomy: Universal human parsing via graph transfer learning. In IEEE CVPR, pages 7450–7459, 2019.

- [17] K. Gong, X. Liang, D. Zhang, X. Shen, and L. Lin. Look into person: Self-supervised structure-sensitive learning and a new benchmark for human parsing. In IEEE CVPR, pages 932–940, 2017.

- [18] J. Han, P. Luo, and X. Wang. Deep self-learning from noisy labels. In IEEE ICCV, pages 5138–5147, 2019.

- [19] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In IEEE CVPR, pages 770–778, 2016.

- [20] D. Hendrycks, M. Mazeika, D. Wilson, and K. Gimpel. Using trusted data to train deep networks on labels corrupted by severe noise. In NeurIPS, pages 10456–10465, 2018.

- [21] D.-H. Lee. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Workshop on Challenges in Representation Learning, ICML, volume 3, page 2, 2013.

- [22] Y. Li and A. Gupta. Beyond grids: Learning graph representations for visual recognition. In NeurIPS, pages 9225–9235, 2018.

- [23] X. Liang, K. Gong, X. Shen, and L. Lin. Look into person: Joint body parsing & pose estimation network and a new benchmark. IEEE TPAMI, 41(4):871–885, 2018.

- [24] M. Ye, J. Shen, Probabilistic structural latent representation for unsupervised embedding, IEEE CVPR, 2020.

- [25] X. Liang, S. Liu, X. Shen, J. Yang, L. Liu, J. Dong, L. Lin, and S. Yan. Deep human parsing with active template regression. IEEE TPAMI, 37(12):2402–2414, 2015.

- [26] J. Lin, X. Guo, J. Shao, C. Jiang, Y. Zhu, and S.-C. Zhu. A virtual reality platform for dynamic human-scene interaction. In SIGGRAPH ASIA, page 11. ACM, 2016.

- [27] K. Lin, L. Wang, K. Luo, Y. Chen, Z. Liu, and M.-T. Sun. Cross-domain complementary learning with synthetic data for multi-person part segmentation. arXiv preprint arXiv:1907.05193, 2019.

- [28] J. Long, E. Shelhamer, and T. Darrell. Fully convolutional networks for semantic segmentation. In IEEE CVPR, pages 3431–3440, 2015.

- [29] Y. Luo, Z. Zheng, L. Zheng, T. Guan, J. Yu, and Y. Yang. Macro-micro adversarial network for human parsing. In ECCV, pages 418–434, 2018.

- [30] M. Ye, J. Shen, G. Lin, T. Xiang, L. Shao, S. C. H. Hoi: Deep learning for person re-identification: A survey and outlook. arXiv preprint, arXiv:2001.04193, 2020.

- [31] S. Qi, W. Wang, B. Jia, J. Shen, and S. Zhu. Learning human-object interactions by graph parsing neural networks. In ECCV, pages 407–423. Springer, 2018.

- [32] X. Nie, J. Feng, and S. Yan. Mutual learning to adapt for joint human parsing and pose estimation. In ECCV, pages 502–517, 2018.

- [33] G. Patrini, A. Rozza, A. Krishna Menon, R. Nock, and L. Qu. Making deep neural networks robust to label noise: A loss correction approach. In IEEE CVPR, pages 1944–1952, 2017.

- [34] R. Reichart and A. Rappoport. Self-training for enhancement and domain adaptation of statistical parsers trained on small datasets. In ACL, pages 616–623, 2007.

- [35] W. Wang, Z. Zhang, S. Qi, J. Shen, Y. Pang, and L. Shao. Learning compositional neural information fusion for human parsing. In IEEE ICCV, 2019.

- [36] E. Ristani and C. Tomasi. Features for multi-target multi-camera tracking and re-identification. In IEEE CVPR, pages 6036–6046, 2018.

- [37] T. Ruan, T. Liu, Z. Huang, Y. Wei, S. Wei, and Y. Zhao. Devil in the details: Towards accurate single and multiple human parsing. In AAAI, volume 33, pages 4814–4821, 2019.

- [38] Z. Shen, W. Wang, X. Lu, J. Shen, H. Ling, T. Xu, and L. Shao. Human-aware motion deblurring. In IEEE ICCV, pages 5571–5580. IEEE, 2019.

- [39] C. Si, Y. Jing, W. Wang, L. Wang, and T. Tan. Skeleton-based action recognition with spatial reasoning and temporal stack learning. In ECCV, pages 103–118, 2018.

- [40] S. Sukhbaatar, J. Bruna, M. Paluri, L. Bourdev, and R. Fergus. Training convolutional networks with noisy labels. arXiv preprint arXiv:1406.2080, 2014.

- [41] W. Wang, X. Lu, J. Shen, D. Crandall, and L. Shao. Zero-shot video object segmentation via attentive graph neural networks. In IEEE ICCV, pages 9235–9244. IEEE, 2019.

- [42] K. Sun, Y. Zhao, B. Jiang, T. Cheng, B. Xiao, D. Liu, Y. Mu, X. Wang, W. Liu, and J. Wang. High-resolution representations for labeling pixels and regions. arXiv preprint arXiv:1904.04514, 2019.

- [43] T. Zhou, H. Fu, C. Gong, J. Shen, L. Shao, F. Porikli, Multi-mutual consistency induced transfer subspace learning for human motion segmentation, In IEEE CVPR, 2020.

- [44] D. Tanaka, D. Ikami, T. Yamasaki, and K. Aizawa. Joint optimization framework for learning with noisy labels. In IEEE CVPR, pages 5552–5560, 2018.

- [45] C. Wang, Y. Wang, and A. L. Yuille. An approach to pose-based action recognition. In IEEE CVPR, pages 915–922, 2013.

- [46] W. Wang, J. Shen, F. Porikli, and R. Yang. Semi-supervised video object segmentation with super-trajectories. IEEE TPAMI, 41(4):985–998, 2019.

- [47] X. Wang, R. Girshick, A. Gupta, and K. He. Non-local neural networks. In IEEE CVPR, pages 7794–7803, 2018.

- [48] X. Wang and A. Gupta. Videos as space-time region graphs. In ECCV, pages 399–417, 2018.

- [49] F. Xia, P. Wang, X. Chen, and A. L. Yuille. Joint multi-person pose estimation and semantic part segmentation. In IEEE CVPR, pages 6769–6778, 2017.

- [50] W. Wang, J. Shen, R. Yang, and F. Porikli. Saliency-aware video object segmentation. IEEE TPAMI, 40(1):20–33, 2018.

- [51] Z. Ying, J. You, C. Morris, X. Ren, W. Hamilton, and J. Leskovec. Hierarchical graph representation learning with differentiable pooling. In NeurIPS, pages 4800–4810, 2018.

- [52] S.-H. Zhang, R. Li, X. Dong, P. Rosin, Z. Cai, X. Han, D. Yang, H. Huang, and S.-M. Hu. Pose2seg: Detection free human instance segmentation. In IEEE CVPR, pages 889–898, 2019.

- [53] T. Zhou, W. Wang, S. Qi, H. Ling, and J. Shen. Cascaded human-object interaction recognition. In IEEE CVPR, 2020.

- [54] H. Zhao, J. Shi, X. Qi, X. Wang, and J. Jia. Pyramid scene parsing network. In IEEE CVPR, pages 2881–2890, 2017.

- [55] X. Zhou, M. Zhu, S. Leonardos, K. G. Derpanis, and K. Daniilidis. Sparseness meets deepness: 3d human pose estimation from monocular video. In IEEE CVPR, pages 4966–4975, 2016.