Self-Improving Interference Management Based on Deep Learning With Uncertainty Quantification

Abstract

This paper presents a groundbreaking self-improving interference management framework tailored for wireless communications, integrating deep learning with uncertainty quantification to enhance overall system performance. Our approach addresses the computational challenges inherent in traditional optimization-based algorithms by harnessing deep learning models to predict optimal interference management solutions. A significant breakthrough of our framework is its acknowledgment of the limitations inherent in data-driven models, particularly in scenarios not adequately represented by the training dataset. To overcome these challenges, we propose a method for uncertainty quantification, accompanied by a qualifying criterion, to assess the trustworthiness of model predictions. This framework strategically alternates between model-generated solutions and traditional algorithms, guided by a criterion that assesses the prediction credibility based on quantified uncertainties. Experimental results validate the framework’s efficacy, demonstrating its superiority over traditional deep learning models, notably in scenarios underrepresented in the training dataset. This work marks a pioneering endeavor in harnessing self-improving deep learning for interference management, through the lens of uncertainty quantification.

Index Terms:

Deep learning, interference management, self-improving, uncertainty quantification.I Introduction

In the realm of wireless communications, extensive efforts have been dedicated to interference management, a critical challenge in enhancing system performance. Specifically, many traditional algorithms have been devised to effectively address interference management optimization problems based on optimization theory, such as the weighted minimum mean squared error (WMMSE) and interference pricing algorithms [1, 2, 3]. Nonetheless, the practical implementation of these algorithms still encounters many obstacles due to their substantial computational demands [4].

To address this challenge, deep learning, like in other domains of wireless communications, has been extensively applied in interference management [4, 5]. Rather than solving the problem directly, this approach involves training a deep learning model to predict the optimal solution to the problem specified by a given channel realization. The model can be trained using a dataset consisting of channel realizations and their corresponding optimal solutions. Once trained, the model can efficiently predict the optimal solution for any given channel realization, offering a cost-effective alternative to optimization-based algorithms.

However, this data-driven approach based on deep learning may not guarantee effective interference management in situations that deviate significantly from the given dataset. Indeed, this limitation is inherent to data-driven approaches, since a single dataset cannot encompass all the diverse scenarios encountered in real-world systems [6]. Hence, to ensure effective interference management, it is essential to assess the confidence level of the solution predicted by the deep learning model. Then, based on this evaluation, optimization-based algorithms can be employed to complement the deep learning model while collecting additional data samples to improve model, enhancing its performance.

In this paper, we propose a self-improving interference management framework based on deep learning with uncertainty quantification. Within this framework, we introduce a method to quantify the uncertainties associated with solutions made by the deep learning model. Leveraging this quantified uncertainty, we devise a qualifying criterion to evaluate the trustworthiness of the model’s solutions in terms of system performance. Using this criterion, the framework selectively employs the model’s solutions only when they are deemed trustworthy; otherwise, traditional algorithms are employed. Through this iterative process, the framework collects data samples for situations that the model struggles with, subsequently allowing for self-improvement using the accumulated data. We demonstrate via experimental results that our proposed framework is effective, not only for interference management but also for enhancing the deep learning model itself. To the best of our knowledge, this paper represents the first endeavor to design a self-improving deep learning framework for interference management via uncertainty quantification in the field of deep learning.

II Deep Learning-Based Interference Management

II-A System Model and Problem Formulation

We consider a downlink network comprising pairs of single-antenna transceivers, each pair consisting of a base station (BS) and a corresponding scheduled user. The set of BSs is defined as . Let denote the channel gain between BS and its scheduled user, and the interference channel gain from BS to the scheduled user of BS . The channel gain matrix is defined as . We assume that the channels main unchanged during each timeslot. We denote the transmission power of BS as and define the vector of transmission powers as . Then, the signal to interference-plus-noise ratio (SINR) for the user of BS is given by

| (1) |

where is the noise power at that user. The sum-rate of the network is given by

| (2) |

To maximize the weighted sum-rate, a power allocation problem for managing downlink interference is formulated as

| (3) |

where with being the maximum transmission power. The optimal solution for a given is denoted as .

II-B Deep Learning for Interference Management

In the literature, a number of algorithms have been developed to address the interference management problem as formulated in (3) with a given channel gain matrix [1, 2, 3]. Most of these algorithms employ an iterative approach, and their effectiveness has been demonstrated through simulation results and theoretical analyses. However, implementing these algorithms in practical systems proves, in many cases, challenging due to their computational complexity.

To overcome this issue, recently, deep learning-based approaches to interference management have gained significant attention [4, 5]. These approaches treat an interference management algorithm as a function that takes as input and yields as output. Then, a deep neural network (DNN) model, , is trained to approximate , where represents the weights of the DNN model. After training, the approximated solution can be rapidly computed using the DNN model, requiring far fewer calculations than traditional algorithms. Fig. 1 illustrates a typical deep learning-based approach to interference management. This approach involves generating a dataset from the target algorithm for given channel gain matrices, i.e., pairs of . Then, the DNN model is trained using this dataset, as shown in Fig. 1a, and subsequently applied to interference management, as shown in Fig. 1b. This implies that the efficacy of the DNN model highly depends on the dataset used for training. However, generating a comprehensive dataset that encompasses all possible channel conditions and corresponding power allocations is impractical. Consequently, it is inherently challenging to ensure the DNN model’s effectiveness in all conceivable interference scenarios, especially as some scenarios might exceed the generalization capabilities of deep learning and fall outside the scope of the training dataset.

III Self-Improving Interference Management Using Deep Learning

In this section, we introduce the concept of uncertainty quantification in deep learning and its relevance to our context. We then present a proposed framework for self-improving interference management, which integrates deep learning with uncertainty quantification, as illustrated in Fig. 2.

III-A Concept of Uncertainty Quantification

The quantification of predictive uncertainty in deep learning has been widely studied due to its role in assessing the trustworthiness of predictions. Predictive uncertainty mainly stems from two sources: aleatoric and epistemic uncertainties [6]. Aleatoric uncertainty is associated with inherent variabilities in the data distribution, rather than a characteristic of the DNN models. As such, it is considered irreducible, meaning that it cannot be reduced through the collection of more data or improvements in the models. In contrast, epistemic uncertainty stems from the limitations in DNN models, often due to insufficient training or inadequate experiences. This type of uncertainty is typically prominent in regions of the input feature space that are not well-represented by the training dataset and is, therefore, reducible. For more detailed theoretical background on uncertainty quantification, readers are referred to [6].

Deep ensemble is one of the representative uncertainty quantification methods in deep learning [7]. In uncertainty quantification, the output for a given input feature is treated as a random variable encompassing uncertainty. Therefore, each DNN model in deep ensemble aims to approximate both the mean and variance of the output value of a given input feature, in contrast to typical DNN models that focus on approximating a single output value. To effectively quantify uncertainties, deep ensemble involves training multiple models, each with distinct random initialization. The prediction from these models are subsequently integrated to derive the final estimate.

III-B Uncertainty Quantification in Deep Learning-Based Interference Management

We now describe an uncertainty quantification approach in deep learning-based interference management using deep ensemble.111In this paper, we use deep ensemble for quantifying uncertainty in deep learning due to its simplicity of implementation and adaptability to various types of NNs. However, other uncertainty quantification methods, such as Bayesian NN [8] and conformal regression [9], can also be applied within our proposed framework. To quantify uncertainty in interference management, we consider DNN models that jointly approximate the following distributional parameters of transmission power for a given channel gain matrix : the mean function and the variance function , where and describe the solution and the uncertainty of the estimate, respectively. We denote the estimation of and by the th DNN model as and , respectively. Note that we cannot simply use a mean squared error (MSE) loss function, a standard loss function for regression problems, as a loss function for training the models since the variance cannot be trained via the MSE. Hence, to train the models for approximating both mean and variance, we employ a negative log-likelihood function as the loss function:

| (4) |

where is a constant added to the loss function for practical reasons, such as numerical stability, without altering the fundamental behavior of the optimization process. Then, by using the loss function, the DNN models with random initialization are trained by using given dataset.

We now estimate the uncertainty of the predicted solution by averaging the predictions of DNN models, which implies that the individual distributions are ensembled as a uniformly-weighted mixture model. For transmission power , the averaged mean is given by , and the averaged variance is given by . We can further decompose the variance as

| (5) |

where the first term, the averaged estimated variance over all models, signifies aleatoric variance, and the second term, which goes to zero as the mean predictions of all models become identical, signifies epistemic variance. Consequently, as shown in Fig. 2a, the transmission power is predicted as , and its corresponding uncertainty is estimated as by employing the DNN models.

III-C Self-Improving Deep Learning for Interference Management

The quantified uncertainty of predictions can be used to improve deep learning [10, 11]. Commonly, typical uncertainty-aware deep learning methods in machine learning literature focus on maximizing prediction accuracy by leveraging uncertainty. However, in the realm of wireless communications, the goal of deep learning-based interference management is not to precisely estimate the power control solution, but to improve the system performance, specifically by maximizing the sum-rate as in (3). Hence, when designing self-improving deep learning, it is insufficient to simply consider the quantified uncertainty of the predicted solution as in (5) for effectively pursuing the goal of interference management.

To address this issue, we propose a novel framework for self-improving interference management that elaborately considers actual system performance in light of the quantified uncertainty in deep learning. In the framework, first during the training stage, the interference management and corresponding uncertainty quantification models are trained as described in Section III-B. In the subsequent self-improving stage, for each instance of , the framework obtains the predicted solution and its quantified uncertainty. Then, based on the quantified uncertainty, the credibility of the prediction is qualified. If the predicted solution is deemed credible, it is used as is. If not, the predicted solution is refined using existing optimization-based methods,222Note that the predicted solution itself is a plausible solution. Hence, enhancing this predicted solution is substantially more cost-efficient compared to directly seeking the optimal solution from scratch. This efficiency is evident in our empirical experiments. In one sample case, using the predicted solution as an initial point significantly reduces computational time. The WMMSE algorithm requires only ms of CPU time when starting with , as opposed to ms when no initial point is provided. and this improved solution is then used to further enhance the DNN models, as illustrated in Fig. 2b.

To qualify the credibility, we need to design a qualifying criterion that can assess how much the predicted solution is close to the optimal one in terms of the system performance. For design, we first construct a confidence interval for each predicted transmission power, using the standard deviation of epistemic uncertainty , as

| (6) |

where is the control parameter that determines the confidence level. With the confidence interval, we can define an uncertainty-aware feasible set of solutions as . Since the optimal solution is likely to belong to the uncertainty-aware feasible set, comparing the sum-rate with the predicted solution (i.e., ) and the maximum sum-rate achievable within the set (i.e., ) is a straightforward way to design the criterion. If is sufficiently close to , the predicted solution is considered credible from the perspective of system performance, and if not, it requires enhancement. However, identifying the maximum achievable sum-rate within the feasible set is equivalent to solving the power allocation problem for interference management. Consequently, the comparison of and is not practical.

Instead, we design a qualifying criterion to evaluate whether the uncertainty-aware feasible set is sufficiently small to minimally affect the sum-rate. This approach is plausible for achieving a sum-rate close to the optimal one, as the feasible set likely contains the optimal solution; the sum-rate achieved by the predicted solution will be close to the optimal one if the feasible set is sufficiently small. To this end, we define the maximal and minimal interference solutions as and , respectively, and calculate their corresponding sum-rates as and , respectively. Then, we design a qualifying criterion involving , , and as

| (7) |

where , and is a criterion value that controls the criterion’s sensitivity. This criterion evaluates whether the uncertainty-aware feasible set is small enough so that the sum-rates achieved by the extreme solutions within the feasible set and the predicted solution (i.e., , , and ) appear similar. If this criterion is satisfied, the predicted solution is considered credible since is expected to be close to . On the other hand, if not due to a large uncertainty-aware feasible set, the prediction solution requires enhancement.

III-D Description of Self-Improving Interference Management Framework

Here, we describe the self-improving interference management framework, illustrated in Fig. 2 and detailed in Algorithm 1. First, in the training stage (Fig. 2a), a dataset for interference management is generated using the target algorithm and channel instances as ’s (line 1). We denote this dataset by . For prediction and uncertainty quantification, we construct DNN models, each outputting the means and variances of the transmission power ’s as (line 2).333It is worth noting that any kind of DNN model capable of producing mean and variance as outputs can be used for self-improving interference management. The weights of each DNN model , , are randomly initialized (line 3) and trained to minimize the negative log-likelihood loss function in (4) with respect to (lines 4–6). During this training stage, various learning techniques such as cross-validation or early stopping can be employed.

In the self-improving stage (Fig. 2b), for each arrival of interference management request with instance , the predicted solution and its epistemic standard deviation are computed using deep ensemble with DNN models as in Section III-B (lines 8–9). Using these calculations, the uncertainty-aware feasible set is constructed with ’s in (6) (line 10). Then, the qualifying criterion in (7) is examined with ; if the criterion is met, the predicted solution is directly used for transmission (line 12). If not, it is enhanced by using the target algorithm starting from the initial point , and the enhanced solution is then used for transmission (line 14). The enhanced solution (i.e., ) is collected into a self-improving dataset (line 15). The DNN models, ’s, are improved using if the number of collected enhanced solutions is large enough to improve the models, i.e., , where denotes the cardinality of set , and denotes the required number of samples for model improvement. The self-improving dataset is cleared once it is used (lines 17–19). The model improvement can be achieved either by simply retraining the DNN models with the combined dataset or by adopting continual learning techniques [12].

IV Experimental Results

In this section, we provide experimental results to demonstrate the effectiveness of our self-improving interference management framework. To this end, we implement the self-improving interference management framework (SI-DNN) in Python using a library for uncertainty quantification in deep learning called Fortuna [13]. We set to (for a % confidence interval and to . We also implement a DNN-based interference management without self-improving (DNN). For those approaches, we consider a DNN architecture consisting of hidden layers with , , , , units, respectively. Each DNN model is trained by using the Adam optimizer with learning rate and batchsize 100. As a target algorithm of deep learning models, we consider the WMMSE algorithm as described in [4]. Hence, the sum-rate performance of WMMSE serves as an upper bound. Furthermore, for the purpose of comparison, we also consider random power allocation (RandPower) and maximum power allocation (MaxPower). Additionally, we adopt a network setup with transmission links considered in [4]. Each channel coefficient is generated using a pathloss coefficient of and Rayleigh fading.

To clearly demonstrate the self-improvement of the models, we consider a scenario involving two topologies, Topology A and Topology B, each with different locations of transmitters and receivers. In each topology, the distances between the transmitters and receivers within the same transmission links are randomly chosen from the interval m, while the distances between the transmitters and receivers of different transmission links are randomly chosen from the interval m. During the training stage, the DNN models are trained using a training dataset generated exclusively from Topology A. However, during the testing (self-improving) stage, the trained models are tested using a testing dataset generated from both Topologies A and B. Consequently, it is more likely to encounter less reliable predictions (i.e., untrustworthy solutions) in Topology B than in Topology A. Through this scenario, we can clearly show how the self-improving interference management addresses the issue of untrustworthy samples.

| Alg. | Total | Topology A | Topology B |

|---|---|---|---|

| WMMSE | 3.925 (100.00%) | 3.831 (100.00%) | 4.018 (100.00%) |

| SI-DNN | 3.845 (97.98%) | 3.767 (98.33%) | 3.924 (97.65%) |

| DNN | 3.483 (88.74%) | 3.599 (93.96%) | 3.366 (83.77%) |

| MaxPower | 2.440 (62.17%) | 2.276 (59.41%) | 2.604 (64.81%) |

| RandPower | 2.072 (52.80%) | 1.971 (51.44%) | 2.174 (54.10%) |

We first provide the sum-rate performance of the algorithms in Table I. From the table, we can observe that the sum-rate achieved by DNN for Topology A closely approximates that of WMMSE, but this similarity is not as pronounced for Topology B. This discrepancy arises because certain samples from Topology B are difficult to be effectively addressed by exploiting only the knowledge acquired from Topology A. In contrast, SI-DNN consistently achieves the sum-rates that are closely aligned with WMMSE, irrespective of the underlying topologies. This clearly demonstrates its ability to identify untrustworthy predictions and improve upon them.

For further investigation, we provide the cumulative distribution function (CDF) of the sum-rates achieved by each algorithm in Fig. 3. From the figure, we can observe that the CDF curve of SI-DNN closely aligns with that of WMMSE, regardless of the underlying topologies. As indicated in Table I, the CDF curve of DNN closely aligns with that of WMMSE only for instances from Topology A. Notably, we can see that SI-DNN exhibits cumulative probabilities lower than DNN across the entire sum-rate range, which implies that SI-DNN consistently outperforms DNN with a uniform gain.

In Fig. 4, we provide the predicted solutions and corresponding confidence intervals computed by SI-DNN for an instance from each topology to comprehend the uncertainty quantification. The figures describe the normalized transmission power of each link within the rage of . In Fig. 4a, the predicted solution for the instance from Topology A is provided, and it is assessed as trustworthy. We can observe that the confidence interval is narrow overall, and the solution is predicted well. On the other hand, in Fig. 4b, the predicted solution for the instance from Topology B is provided, and it is assessed as untrustworthy. In this instance, we note that the confidence interval is wide for many links, and indeed, the predictions for those links are not precise.

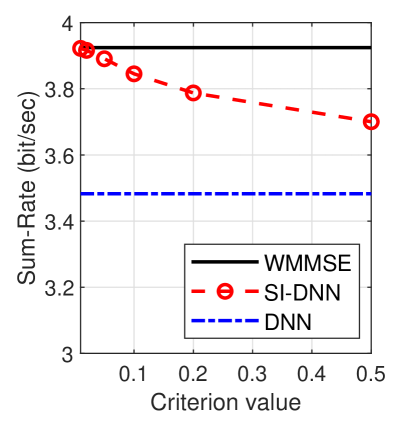

To show the impact of the criterion value in (7), we provide the enhancing rate and sum-rate of the algorithms while varying in the set in Fig. 5. The enhancing rate quantifies the proportion of predictions that undergo enhancement. As the criterion value increases, the qualifying criterion becomes more easily met, and thus, enhancing predictions for self-improving occurs more rarely, as shown in Fig. 5a. Accordingly, in Fig. 5b, the sum-rate of SI-DNN decreases as the criterion value increases. Furthermore, from Fig. 5a, we can observe that the predictions for Topology B are notably more frequently enhanced compared to those for Topology A. This clearly demonstrates that SI-DNN effectively assesses the trustworthy of the predictions, given that the DNN model is trained exclusively on the dataset from Topology A.

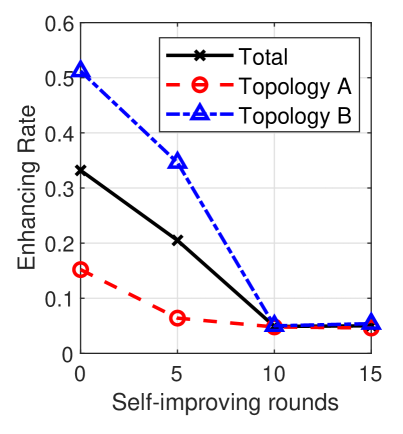

To show the effectiveness of self-improving, we provide the enhancing rate and sum-rate of the algorithms according to the self-improving rounds in Fig. 6, where each self-improvement round corresponds to executing line 20 in Algorithm 1 once. For self-improving, we set to to conduct one round of self-improving and assume that DNN is re-trained using the dataset for each round. As self-improving progresses, the DNN model is improved by using the enhanced samples for which it previously predicted untrustworthy solutions. Hence, the enhancing rate decreases, as shown in Fig. 6a. From the figure, we can observe that the enhancing rate of SI-DNN converges after rounds. We can explain that this convergence in the enhancing rate can be attributed to limitations in the DNN model, such as its representational capacity and generalization capability. From Fig. 6b, we can see that the sum-rate of DNN experiences effective improvement with the enhanced samples. This clearly demonstrates SI-DNN’s ability to proficiently identify untrustworthy predictions.

V Conclusion

In this paper, we have proposed the self-improving interference management framework. If the solution provided by the deep learning-based algorithm is deemed untrustworthy, it enhances the solution using optimization-based algorithms in a complementary manner, and these enhanced solutions are utilized to refine the DNN model. To this end, we have proposed the qualifying criterion for solutions based on quantified uncertainties while considering system performance. Through the simulation results, we have demonstrated that the proposed framework adeptly identifies untrustworthy solutions and efficiently enhances the DNN model for interference management.

References

- [1] J. Papandriopoulos and J. S. Evans, “SCALE: A low-complexity distributed protocol for spectrum balancing in multiuser dsl networks,” IEEE Trans. Inf. Theory, vol. 55, no. 8, pp. 3711–3724, Aug. 2009.

- [2] Q. Shi, M. Razaviyayn, Z.-Q. Luo, and C. He, “An iteratively weighted MMSE approach to distributed sum-utility maximization for a mimo interfering broadcast channel,” IEEE Trans. Signal Process., vol. 59, no. 9, pp. 4331–4340, Sept. 2011.

- [3] H. Baligh et al., “Cross-layer provision of future cellular networks: A WMMSE-based approach,” IEEE Signal Process. Mag., vol. 31, no. 6, pp. 56–68, Nov. 2014.

- [4] H. Sun, X. Chen, Q. Shi, M. Hong, X. Fu, and N. D. Sidiropoulos, “Learning to optimize: Training deep neural networks for interference management,” IEEE Trans. Signal Process., vol. 66, no. 20, pp. 5438–5453, Oct. 2018.

- [5] Y. Shen, Y. Shi, J. Zhang, and K. B. Letaief, “Graph neural networks for scalable radio resource management: Architecture design and theoretical analysis,” IEEE J. Sel. Areas Commun., vol. 39, no. 1, pp. 101–115, Jan. 2020.

- [6] M. Abdar et al., “A review of uncertainty quantification in deep learning: Techniques, applications and challenges,” Inf. Fusion, vol. 76, pp. 243–297, Dec. 2021.

- [7] B. Lakshminarayanan, A. Pritzel, and C. Blundell, “Simple and scalable predictive uncertainty estimation using deep ensembles,” Adv. Neural Inf. Process. Syst., vol. 30, 2017.

- [8] C. Blundell, J. Cornebise, K. Kavukcuoglu, and D. Wierstra, “Weight uncertainty in neural network,” in Proc. ICML. PMLR, 2015, pp. 1613–1622.

- [9] Y. Romano, E. Patterson, and E. Candes, “Conformalized quantile regression,” Adv. Neural Inf. Process. Syst., vol. 32, 2019.

- [10] S. Mukherjee and A. Awadallah, “Uncertainty-aware self-training for few-shot text classification,” Adv. Neural Inf. Process. Syst., vol. 33, 2020.

- [11] J. Wang, C. Wang, J. Huang, M. Gao, and A. Zhou, “Uncertainty-aware self-training for low-resource neural sequence labeling,” Proc. AAAI, vol. 37, no. 11, pp. 13 682–13 690, Jun. 2023.

- [12] L. Wang, X. Zhang, H. Su, and J. Zhu, “A comprehensive survey of continual learning: Theory, method and application,” arXiv preprint arXiv:2302.00487, 2023.

- [13] G. Detommaso, A. Gasparin, M. Donini, M. Seeger, A. G. Wilson, and C. Archambeau, “Fortuna: A library for uncertainty quantification in deep learning,” arXiv preprint arXiv:2302.04019, 2023.