Self-Correcting Self-Consuming Loops for Generative Model Training

Abstract

As synthetic data becomes higher quality and proliferates on the internet, machine learning models are increasingly trained on a mix of human- and machine-generated data. Despite the success stories of using synthetic data for representation learning, using synthetic data for generative model training creates “self-consuming loops” which may lead to training instability or even collapse, unless certain conditions are met. Our paper aims to stabilize self-consuming generative model training. Our theoretical results demonstrate that by introducing an idealized correction function, which maps a data point to be more likely under the true data distribution, self-consuming loops can be made exponentially more stable. We then propose self-correction functions, which rely on expert knowledge (e.g. the laws of physics programmed in a simulator), and aim to approximate the idealized corrector automatically and at scale. We empirically validate the effectiveness of self-correcting self-consuming loops on the challenging human motion synthesis task, and observe that it successfully avoids model collapse, even when the ratio of synthetic data to real data is as high as 100%.

1 Introduction

Generative models have been used to synthesize training data for various learning tasks, to varying degrees of success. For example, for the tasks of image classification and contrastive representation learning, recent work (Azizi et al., 2023; Tian et al., 2023) finds that using data synthesized from generative models rivals using real data. Unfortunately, there is a gloomier outlook when attempting to generalize this framework to generative model training.

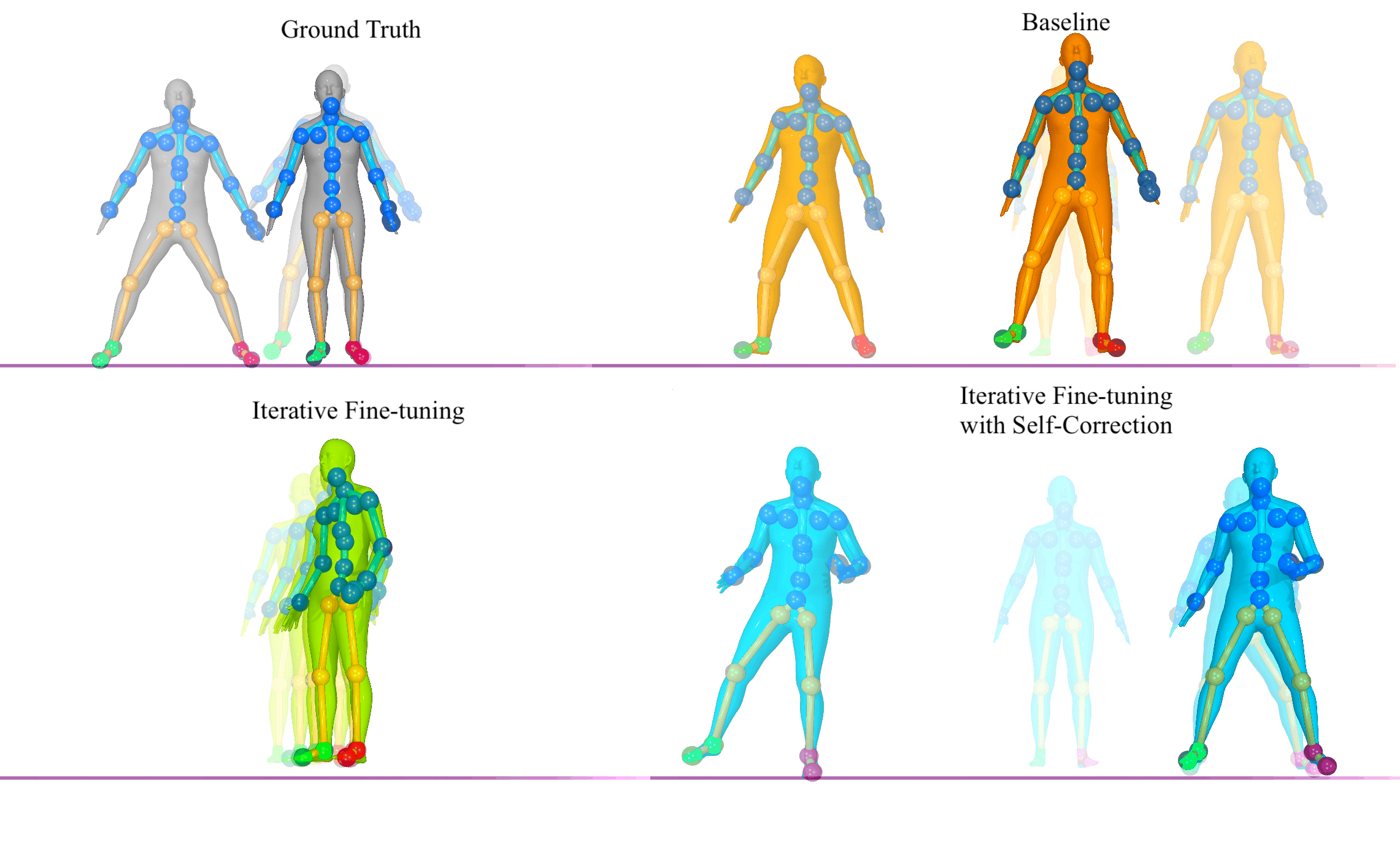

On one hand, there is evidence to suggest that training a generative model with its own outputs in a self-consuming manner will lead to collapse (Alemohammad et al., 2024). For example, after 50 iterations of self-consuming training, a human motion diffusion model (Tevet et al., 2023) collapses and fails to follow the text prompts or the laws of physics (see the two examples on the left of Figure 1).

On the other hand, evidence suggests that such a framework could avoid collapse, but only when a “moderate” amount of synthetic data is used (Bertrand et al., 2024). Worse still, this self-consuming scenario might happen without us knowing, and without us being able to quantify how much synthetic data is being used during training, due to the wide spread of AI generated content on the internet.

Intuitively, model collapse might be delayed or avoided by incorporating higher quality human generated data (Alemohammad et al., 2024), or by manually fixing the “mistakes” in machine created data. Considering the size of datasets used in practice (Schuhmann et al., 2022), neither of these options is a scalable solution.

In this paper, we aim to provide a theoretical analysis of how certain operations would avoid collapse in self-consuming loops, without any assumptions on the “moderateness” of synthetic data corruption. We introduce the mathematical abstraction of a self-correction operation. This operation maps synthesized data that are sampled from the generative model to data that are better representatives from the target probability distribution that the model is attempting to approximate. Instead of training on a combination of real data and synthesized data, we propose training on a combination of real data and synthesized and then self-corrected data. Note that injecting fresh human generated data can be viewed as a special case of this operation.

Our main theoretical findings (Theorem 4.3):

(1) The self-consuming model with self-correction is exponentially more stable than the self-consuming model without any self-correction.

(2) The self-correction procedure guarantees less unwanted variance during self-consuming model training.

In our theoretical study, we assume that correction is ideal in order to obtain rigorous performance guarantees. In our empirical study, we evaluate whether the same conclusions hold for noisy self-correction functions. We propose to automate this “self-correction” process by relying on programmed expert knowledge rather than a human-in-the-loop, such that the function can be applied at scale. We focus on the human motion synthesis task (Guo et al., 2022), and implement the self-correction function with a physics simulator-based imitation model (Luo et al., 2021). Our empirical results confirm that our theoretical findings hold in practice:

(1) As illustrated in Figure 1, the self-correcting self-consuming model generates higher-quality human motion than the one without any self-correction.

(2) The self-correction function allows self-consuming loops to avoid collapse even at a high synthetic data to real data ratio (e.g. 100%).

Our theory and experiments suggest that self-correction should stabilize self-consuming model training for any generative modeling task for which there exists a high quality “self-correction” function. We have released all the code associated with this paper (project page: https://nategillman.com/sc-sc.html).

2 Related Work

2.1 Learning Representations with Synthetic Data

Real curated datasets are costly to obtain, so there has been much interest in generating synthetic data as training data for various vision tasks. Azizi et al. (2023) demonstrate that text-to-image diffusion models such as Imagen (Saharia et al., 2022) can generate synthetic examples that augment the ImageNet dataset for better image classification. He et al. (2023) study how synthetic data from text-to-image models, when used exclusively, can be used as training data for image recognition tasks. Similarly, Tian et al. (2023) find that using synthetic outputs from a text-to-image model results in contrastive models whose downstream performance rivals that of CLIP (Radford et al., 2021) on visual recognition tasks, including dense prediction. Jahanian et al. (2022) explore methods for multi-view representation learning by using the latent space of the generative models to generate multiple “views” of the synthetic data. The above works collectively provide evidence that some representation learning tasks, when trained on synthetic data from some given generative models, yield excellent results.

2.2 Training Generative Models on Synthetic Data

Another line of research investigates the use of synthetic data for training generative models. Shumailov et al. (2023) and Martínez et al. (2024) show that the use of model generated content in generative model training results in model degradation, likely because self-consuming loops remove low-density areas from the estimated probability manifold. Alemohammad et al. (2024) formalize three different kinds of self-consuming generative models: the fully synthetic loop, the synthetic augmentation loop, and the fresh data loop. In all of these loops, they iteratively re-train the model from scratch for every new generation. They empirically find that only the fresh data loop avoids model degradation.

Another recent work (Bertrand et al., 2024) considers the problem of iteratively fine-tuning in the context of synthetic augmentation loops. They find that self-consuming augmentation loops do not necessarily collapse, so long as the synthetic augmentation percentage is sufficiently low. The authors use techniques from the field of performative stability (Perdomo et al., 2020) to prove the existence of a convergence phenomenon in the space of model parameters. Our paper differs from prior work in that we analyze self-consuming generative model training when the synthetic data can be optionally corrected. The correction can be performed with a human-in-the-loop, or by incorporating learned or programmed expert knowledge, as explored for natural language (Saunders et al., 2022; Welleck et al., 2023; Wu et al., 2023) and human motion (Yuan et al., 2023; Xu et al., 2023). We validate our theory with practical self-correcting operations designed for image generation and human motion synthesis tasks.

3 Overall Training Procedure

We describe our proposed procedure in concise language in Algorithm 1, and we explain it in more detail here. We train the zeroth generation from scratch on the ground truth dataset $\mathcal{D}_{\text{real}}$, and we stop training when the model is close to convergence. For all the following generations, we fine-tune the previous generation’s latest checkpoint on a combination of the ground truth dataset $\mathcal{D}_{\text{real}}$, as well as $\lambda \cdot |\mathcal{D}_{\text{real}}|$ synthetic data points which are generated from the previous generation’s latest checkpoint, and then passed through the correction function $\pi_\gamma$.

The correction function $\pi_\gamma$ is parameterized by the correction strength $\gamma \in \mathbb{R}_{\geq 0}$, which controls how strongly the correction function pushes the input data points towards higher likelihood under the target distribution. The other main hyperparameter $\lambda \in \mathbb{R}_{\geq 0}$ is the synthetic augmentation percent, and it controls the ratio of synthetic data to real data in each iteration of fine-tuning. When $\gamma = 0$, we recover iterative re-training with synthetic augmentation considered in (Bertrand et al., 2024). And if we choose the synthetic augmentation percent to be $\lambda = 0$, then each generation simply corresponds to fine-tuning the model on the same dataset that it was trained on initially.

We now use iterative fine-tuning interchangeably with the more general term self-consuming loop. We also consider the idealized correction function for our theoretical analysis, and a broader family of practical correction functions for different data types.
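The training procedure described in this section can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the released implementation: the callables `train`, `fine_tune`, `sample`, and `correct` are hypothetical placeholders, and the toy usage substitutes a scalar mean estimator for a real generative model.

```python
import random
from typing import Callable, List, Sequence

def self_correcting_loop(
    real_data: Sequence[float],
    train: Callable[[Sequence[float]], float],
    fine_tune: Callable[[float, Sequence[float]], float],
    sample: Callable[[float, int], List[float]],
    correct: Callable[[List[float]], List[float]],
    augmentation: float,
    generations: int,
) -> List[float]:
    """Return the model state after generation 0 and after each later generation."""
    model = train(real_data)  # generation 0: train from scratch on real data
    history = [model]
    n_synth = int(augmentation * len(real_data))  # synthetic points per generation
    for _ in range(generations):
        synthetic = sample(model, n_synth)  # draw from the previous checkpoint
        corrected = correct(synthetic)      # pass samples through the correction
        model = fine_tune(model, list(real_data) + corrected)
        history.append(model)
    return history

# Toy usage (every choice below is an illustrative assumption):
# the "model" is a scalar mean, and correction pulls each sample halfway to 0.
rng = random.Random(0)
real = [rng.gauss(0.0, 1.0) for _ in range(100)]
mean = lambda xs: sum(xs) / len(xs)
history = self_correcting_loop(
    real_data=real,
    train=mean,
    fine_tune=lambda model, data: 0.5 * model + 0.5 * mean(data),
    sample=lambda model, k: [rng.gauss(model, 1.0) for _ in range(k)],
    correct=lambda xs: [0.5 * x for x in xs],
    augmentation=1.0,
    generations=5,
)
```

Passing an identity function as `correct` gives the uncorrected synthetic augmentation loop, and setting `augmentation=0` reduces each generation to fine-tuning on the real data alone.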

4 Theoretical Analysis

4.1 Preliminaries

We mostly follow the notation from (Bertrand et al., 2024), except for introducing the correction function $\pi_\gamma$. Let us denote by $p_{\text{data}}$ the ground truth probability distribution that we want to train a generative model to estimate. Suppose we have some dataset $\mathcal{D}_{\text{real}} = \{z_i\}_{i=1}^{n}$ sampled from $p_{\text{data}}$. We write $\hat{p}_{\text{data}} = \frac{1}{n} \sum_{i=1}^{n} \delta_{z_i}$. More generally, we use a hat to denote the empirical distribution over finitely many samples from the corresponding distribution.

Suppose that we have a class of generative models parameterized by $\Theta \subset \mathbb{R}^d$. We denote by $p_\theta$ a probability distribution in this class with model parameters $\theta \in \Theta$. We define the optimal model parameters within this class to be

$$\theta^\star = \arg\max_{\theta' \in \Theta} \mathbb{E}_{z \sim p_{\text{data}}}\left[\log p_{\theta'}(z)\right], \tag{1}$$

where we break ties by minimizing $\|\theta^\star\|$. Typically, such optimal parameters yield a model $p_{\theta^\star}$ which closely approximates the oracle ground truth distribution $p_{\text{data}}$, but doesn’t equal it exactly; accordingly, we define the Wasserstein-2 distance between the distributions to be

$$\varepsilon := d_W(p_{\theta^\star}, p_{\text{data}}). \tag{2}$$

The model weights for the first generation are naturally defined according to the optimization

$$\theta_0^n := \arg\max_{\theta' \in \Theta} \mathbb{E}_{z \sim \hat{p}_{\text{data}}}\left[\log p_{\theta'}(z)\right]. \tag{3}$$

This corresponds to training on the finite subset $\mathcal{D}_{\text{real}}$. Next, let us suppose that the model weights from generation $t$ are denoted $\theta_t^n$. We will formalize a procedure for updating these weights for the next generation to obtain $\theta_{t+1}^n$. For this, we need to define our correction function, and then we will use it to define the weight update.

Definition 4.1.

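For any probability distribution, and for any $\gamma \in \mathbb{R}_{\geq 0}$, we define the correction of strength $\gamma$ of distribution $p_\theta$ to be the distribution

$$\pi_\gamma p_\theta(x) := \frac{p_\theta(x) + \gamma\, p_{\theta^\star}(x)}{1 + \gamma}, \tag{4}$$

where $\theta^\star$ is defined in (1). For any augmentation percentage $\lambda \geq 0$, we define the weight update mapping to be

$$\pi_\gamma \mathcal{G}_\lambda^n(\theta) := \operatorname*{local\text{-}argmax}_{\theta' \in \Theta} \left( \mathbb{E}_{x \sim \hat{p}_{\text{data}}}\left[\log p_{\theta'}(x)\right] + \lambda\, \mathbb{E}_{x \sim \widehat{\pi_\gamma p_\theta}}\left[\log p_{\theta'}(x)\right] \right), \tag{5}$$

where $\hat{p}_{\text{data}}$ and $\widehat{\pi_\gamma p_\theta}$ are empirical distributions of size $n$ and $\lfloor \lambda \cdot n \rfloor$ respectively.

To continue our discussion from before, our iterative weight update is defined as $\theta_{t+1}^n := \pi_\gamma \mathcal{G}_\lambda^n(\theta_t^n)$.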

Note that we use a global maximization in (3) when defining the initial parameters $\theta_0^n$, but we use a local maximization when computing our parameter update in (5). This difference is analogous to the differences between how model weights update during initial training, where parameter updates are more global, and during fine-tuning, where parameter updates are more local.

4.1.1 Understanding the correction

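For $\gamma = 0$, the correction mapping in (4) simplifies to $\pi_0 p_\theta = p_\theta$, which is just the original distribution; this corresponds to no correction at all. For $\gamma = 1$, it is $\pi_1 p_\theta = (p_\theta + p_{\theta^\star})/2$. And for $\gamma = \infty$, it is $\pi_\infty p_\theta = p_{\theta^\star}$, which corresponds to the optimal distribution. So as $\gamma$ increases from $0$ to $\infty$, the distribution $\pi_\gamma p_\theta$ has a likelihood profile that matches $p_\theta$ less, and $p_{\theta^\star}$ more. As $p_{\theta^\star}$ is the optimal model in our generative model class, this means that as $\gamma$ increases from $0$ to $\infty$, we have that $\pi_\gamma p_\theta$ is a PDF which better represents the target likelihood $p_{\text{data}}$ that we want to estimate through training the generative model.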
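To make the effect of the correction strength concrete, the sketch below evaluates a corrected density on a grid. It assumes the correction is the mixture $(p_\theta + \gamma\, p_{\theta^\star})/(1+\gamma)$, which matches the three special cases discussed above; the two one-dimensional Gaussians standing in for the current model and the optimal model are hypothetical choices made only for illustration.

```python
import numpy as np

def gaussian_pdf(x, mean, std):
    """Density of a one-dimensional Gaussian."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def corrected_pdf(x, p_model, p_opt, gamma):
    """Assumed mixture form (p_model + gamma * p_opt) / (1 + gamma)."""
    if np.isinf(gamma):
        return p_opt(x)  # gamma = infinity recovers the optimal density
    return (p_model(x) + gamma * p_opt(x)) / (1.0 + gamma)

x = np.linspace(-6.0, 6.0, 2001)
p_model = lambda t: gaussian_pdf(t, mean=1.5, std=0.5)  # a drifted model (assumed)
p_opt = lambda t: gaussian_pdf(t, mean=0.0, std=1.0)    # stand-in for the optimal model

def l1_gap(gamma):
    """Approximate integral of |corrected - optimal| over the grid."""
    diff = np.abs(corrected_pdf(x, p_model, p_opt, gamma) - p_opt(x))
    return float(np.sum(diff) * (x[1] - x[0]))

# The gap to the optimal density shrinks by a factor of 1 / (1 + gamma).
gaps = [l1_gap(g) for g in (0.0, 1.0, 10.0, np.inf)]
```

Under this mixture form, the difference between the corrected density and the optimal one is exactly the uncorrected difference divided by $1+\gamma$, which is why even a small positive $\gamma$ already moves the training distribution toward the target.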

In our theoretical formulation, we consider correction functions that correct the probability distribution $p_\theta$, rather than the more intuitive (and practical) case of a correction function that corrects individual points that the distribution is defined over. In Appendix C, we specify sufficient conditions under which a pointwise correction function is guaranteed to correspond to a distribution-wise correction function of the same form as those which we consider in our theoretical study and therefore can enjoy the theoretical stability guarantees we prove. We also provide a concrete example of a projection function, in the Gaussian case, which provably satisfies those conditions. We conduct a series of experiments on this toy example in Section 5.

4.1.2 Understanding the weight update

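The weight update in (5) is a formalization of the intended output of fine-tuning $p_\theta$ on $\mathcal{D}_{\text{real}} \cup \mathcal{D}_{\text{synth}}$, where $\mathcal{D}_{\text{real}}$ is the ground truth dataset of size $n$, and $\mathcal{D}_{\text{synth}}$ is the synthesized-and-corrected dataset of size $\lfloor \lambda \cdot n \rfloor$. In other words, in an ideal run of stochastic gradient descent fine-tuning, the model weights $\theta$ should update to $\pi_\gamma \mathcal{G}_\lambda^n(\theta)$, as defined in (5), when trained on $\mathcal{D}_{\text{real}} \cup \mathcal{D}_{\text{synth}}$.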

Intuitively, the weight update avoids the loss of variance in the generated data by ensuring that at each step, the model is trained on synthetic data which is likelier to have been sampled from the diverse target distribution. This positive phenomenon is more pronounced when the correction strength is larger.

4.2 Assumptions

In order to prove our main result, we need some regularity assumptions about the learning procedure. Informally speaking, we will assume that the class of generative models that we consider is smoothly parameterized by its model weights; the loss landscape is concave near the ideal model weights; and the class of generative models does an increasingly good job approximating the target data distribution as the dataset size increases. We formally quantify and state these hypotheses in Assumption 4.2.

Assumption 4.2.

The following are true.

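1. There exists some $L > 0$ such that, for all $\theta$ sufficiently close to $\theta^\star$, the mapping $x \mapsto \nabla_\theta^2 \log p_\theta(x)$ is $L$-Lipschitz.

2. The mapping $\theta \mapsto \mathbb{E}_{x \sim p_{\text{data}}}\left[\log p_\theta(x)\right]$ is continuously twice differentiable locally around $\theta^\star$, and there exists some $\alpha > 0$ such that

$$\mathbb{E}_{x \sim p_{\text{data}}}\left[\nabla_\theta^2 \log p_\theta(x)\right]\Big|_{\theta^\star} \preceq -\alpha I_d \prec 0.$$

3. There exist $a, b, \varepsilon_{\text{OPT}} \geq 0$ and a neighborhood $U$ of $\theta^\star$ such that, for any $\delta \in (0, 1)$, with probability $1 - \delta$ over the samplings, we have

$$\left\|\pi_\gamma \mathcal{G}_\lambda^n(\theta) - \pi_\gamma \mathcal{G}_\lambda^\infty(\theta)\right\| \leq \varepsilon_{\text{OPT}} + \frac{a}{\sqrt{n}} \sqrt{\log \frac{b}{\delta}} \tag{6}$$

for all $\theta \in U$ and $n \in \mathbb{N}$. Denote this bound by $\tau(n)$. Here, the map $\pi_\gamma \mathcal{G}_\lambda^\infty$ is defined similarly to $\pi_\gamma \mathcal{G}_\lambda^n$ in (5), but with $\hat{p}_{\text{data}}$ replaced with $p_{\text{data}}$, and with $\widehat{\pi_\gamma p_\theta}$ replaced with $\pi_\gamma p_\theta$; see Appendix A for more details. This estimate is identical to the analogous Assumption 3 used in (Bertrand et al., 2024), with the only difference being that it is applied to our iterative fine-tuning update function. See Appendix B for further discussion.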

In Assumption 4.2 (2), the notation “$\succeq$” corresponds to the Loewner order on symmetric matrices: we write that $A \succeq B$ if $A - B$ is positive semi-definite, and $A \succ B$ if $A - B$ is positive definite. In particular, Assumption 4.2 (2) implies that the matrix $\mathbb{E}_{x \sim p_{\text{data}}}\left[\nabla_\theta^2 \log p_\theta(x)\right]\big|_{\theta^\star}$ is negative definite, and its largest eigenvalue is at most $-\alpha$. And Assumption 4.2 (3) mirrors the main assumption in (Bertrand et al., 2024); it is motivated by generalization bounds in deep learning, see e.g. (Jakubovitz et al., 2019; Ji et al., 2021). The interested reader can consult Appendix B for more details on this assumption.
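The Loewner-order condition can be checked numerically through eigenvalues. The sketch below is only an illustration: the matrix `hessian` is a hypothetical stand-in for the expected Hessian at the optimal parameters, and `alpha` is an arbitrary positive constant.

```python
import numpy as np

def loewner_leq(a, b, tol=1e-12):
    """Return True if a <= b in the Loewner order, i.e. b - a is positive semi-definite."""
    eigenvalues = np.linalg.eigvalsh(b - a)  # symmetric input, so eigenvalues are real
    return bool(eigenvalues.min() >= -tol)

hessian = np.array([[-2.0, 0.5], [0.5, -1.0]])  # hypothetical expected Hessian
alpha = 0.5
identity = np.eye(2)

# Concavity condition: hessian <= -alpha * I in the Loewner order.
satisfies = loewner_leq(hessian, -alpha * identity)
# Equivalent statement: the largest eigenvalue of the Hessian is at most -alpha.
largest = float(np.linalg.eigvalsh(hessian).max())
```

The two checks agree because subtracting a multiple of the identity shifts every eigenvalue by the same amount.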

4.3 Iterative Fine-Tuning with Correction

We now have the language to state our main result, which essentially says that if the initial parameters $\theta_0$ are sufficiently close to the optimal model parameters $\theta^\star$, and if the augmentation percentage $\lambda$ is sufficiently small, then under iterative fine-tuning with correction, we can expect our subsequent model parameters to stay close to $\theta^\star$.

Theorem 4.3 (Stability of Iterative Fine-Tuning with Correction).

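Fix an augmentation percentage $\lambda \in \mathbb{R}_{>0}$ and a correction strength $\gamma \in \mathbb{R}_{\geq 0}$. Suppose we have an iterative fine-tuning procedure defined by the rule $\theta_{t+1}^n = \pi_\gamma \mathcal{G}_\lambda^n(\theta_t^n)$, and suppose that Assumption 4.2 holds. Define the constant

$$\rho(\lambda) := \rho(\lambda; \alpha, \varepsilon, L) := \frac{\lambda(\alpha + \varepsilon L)}{\alpha - \lambda(\alpha + \varepsilon L)}$$

and fix any $\delta \in (0, 1)$. If $\theta_0$ is sufficiently close to $\theta^\star$, and if $\lambda\left(1 + \frac{\varepsilon L}{\alpha}\right) < \frac{1 + \gamma}{2 + \gamma}$, then $\rho(\lambda)/(1 + \gamma) < 1$, and it follows that the stability estimate holds with probability $(1 - \delta)^t$:

$$\|\theta_t^n - \theta^\star\| \leq \left(\varepsilon_{\text{OPT}} + \frac{a}{\sqrt{n}} \sqrt{\log \frac{b}{\delta}}\right) \sum_{i=0}^{t} \left(\frac{\rho(\lambda)}{1 + \gamma}\right)^{i} + \left(\frac{\rho(\lambda)}{1 + \gamma}\right)^{t} \|\theta_0^n - \theta^\star\| \tag{7}$$

for all $t > 0$.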
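The following sketch evaluates a stability bound of this kind numerically, to show how the correction strength enters. It rests on assumptions that should be checked against the published statement: the contraction factor is taken to be $\rho(\lambda)/(1+\gamma)$ with $\rho(\lambda) = \lambda(\alpha + \varepsilon L)/(\alpha - \lambda(\alpha + \varepsilon L))$, the bound is taken to be a geometric sum plus a decaying initial-error term, and all constants are hypothetical.

```python
def rho(lam, alpha, eps, lip):
    """Assumed constant: lam * (alpha + eps * L) / (alpha - lam * (alpha + eps * L))."""
    k = lam * (alpha + eps * lip)
    return k / (alpha - k)

def stability_bound(t, lam, gamma, alpha, eps, lip, tau, init_dist):
    """Assumed bound: tau * sum_{i=0}^{t} r**i + r**t * init_dist, with r = rho / (1 + gamma)."""
    r = rho(lam, alpha, eps, lip) / (1.0 + gamma)
    geometric = sum(r ** i for i in range(t + 1))
    return tau * geometric + (r ** t) * init_dist

# Hypothetical constants, chosen only so that lam * (1 + eps * L / alpha) < 1/2.
params = dict(lam=0.3, alpha=1.0, eps=0.1, lip=1.0, tau=0.01, init_dist=1.0)
steps = (1, 5, 20)
no_correction = [stability_bound(t, gamma=0.0, **params) for t in steps]
with_correction = [stability_bound(t, gamma=1.0, **params) for t in steps]
```

Because the correction strength divides the base of the geometric terms, the gap between the two bounds comes from a factor of $(1+\gamma)^{-t}$ on the initial-error term, which is the sense in which correction helps exponentially in the number of generations.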

Remark 4.4.

If we apply Theorem 4.3 with correction strength $\gamma = 0$, then the iterative fine-tuning procedure trains successively on a combination of raw synthetic data that has not been corrected using a correction function and ground truth data. This is exactly the case considered in (Bertrand et al., 2024). Accordingly, the bound in (7), applied with $\gamma = 0$, exactly recovers their result.

Corollary 4.5.

Under the assumptions from Theorem 4.3, iterative fine-tuning with any amount of correction outperforms iterative fine-tuning without correction, in the sense that it is exponentially more stable, and it results in better model weights.

Proof of Corollary 4.5.

Example 4.6.

If we apply Theorem 4.3 with correction strength $\gamma \to \infty$, then the bound (7) in Theorem 4.3 limits to $\varepsilon_{\text{OPT}} + \frac{a}{\sqrt{n}} \sqrt{\log \frac{b}{\delta}}$. This implies that the practical iterate $\theta_t^n$ approaches the ideal model parameters, and is at worst some constant away, which depends on error from the optimization procedure, as well as statistical error from using finitely many ground truth data samples $n$.
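The limit in Example 4.6 can be spelled out in a few lines. This worked step assumes that bound (7) has the geometric form $\tau(n) \sum_{i=0}^{t} r^{i} + r^{t} \|\theta_0^n - \theta^\star\|$ with contraction factor $r = \rho(\lambda)/(1+\gamma)$ and $\tau(n)$ as in Assumption 4.2 (3); that form is our reading of the theorem rather than a quotation of it.

```latex
\begin{align*}
r = \frac{\rho(\lambda)}{1+\gamma} &\longrightarrow 0
  && \text{as } \gamma \to \infty, \\
\tau(n) \sum_{i=0}^{t} r^{i} &\longrightarrow \tau(n)
  && \text{(only the } i = 0 \text{ term survives)}, \\
r^{t}\, \|\theta_0^n - \theta^\star\| &\longrightarrow 0
  && \text{for every } t > 0, \\
\text{so the bound tends to}\quad \tau(n) &= \varepsilon_{\mathrm{OPT}} + \frac{a}{\sqrt{n}} \sqrt{\log \frac{b}{\delta}} .
\end{align*}
```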

Note that Theorem 4.3 relies on the assumption that the initial model parameters $\theta_0$ are sufficiently close to the ideal model parameters $\theta^\star$, and also that the augmentation percentage $\lambda$ is sufficiently small. We hypothesize that these assumptions can be relaxed in the case where a correction function participates in the iterative fine-tuning procedure. Intuitively, the correction function should compensate for errors that arise from $\theta_0$ being worse, as well as errors that arise from incorporating more synthetic data. We frame this in the following conjecture.

Conjecture 4.7.

In the case of iterative fine-tuning with correction, we may relax how close the initial model parameters $\theta_0$ need to be to the optimal model parameters $\theta^\star$, as well as choose a larger synthetic augmentation percentage $\lambda$, while still retaining the improved stability estimate (7).

We provide empirical evidence for Conjecture 4.7 in Section 7 on the human motion synthesis task. In fact, Theorem 4.3 represents partial progress towards this conjecture. Namely, according to Theorem 4.3, for large correction strength $\gamma$, we can effectively choose a synthetic augmentation percentage $\lambda$ that is twice as large as we would be able to without any correction, and still be able to meet the assumptions of the theorem. This is because $\lim_{\gamma \to \infty} \frac{1 + \gamma}{2 + \gamma} = 1$, which is twice as large as the bound $\frac{1}{2}$ when $\gamma = 0$.

5 Toy Example: Gaussian

We first assume oracle knowledge of the ground truth distribution, and use a toy example to directly demonstrate the impact of the correction strength on model performance and stability as stated in Theorem 4.3 and Corollary 4.5. Our ground truth distribution is a 2-dimensional isotropic Gaussian centered at the origin, i.e., $p_{\text{data}} = \mathcal{N}(0, I_2)$, and our correction is “distribution-wise” in this idealized scenario. We consider the more practical setting, where we don’t have oracle knowledge of the target distribution a priori, and where the data correction is “point-wise”, in the empirical studies in the following two sections. Further, in Appendix C, we show that, in theory, sufficiently well-behaved pointwise correction functions indeed correspond to distribution-wise correction functions.

Concretely, our ground truth dataset contains points sampled from the target distribution, which are used to estimate . We fix our synthetic augmentation percentage to be , and inductively synthesize a new dataset . We implement a correction function to map , which was sampled from , to a dataset , which is likelier to have been sampled from the target density . We do this by sampling from the middle density corresponding to a given correction strength :

| (8) |

where is the empirical PDF obtained from .

We logarithmically accrue synthetic data points to simulate the case of fine-tuning. We obtain the updated model parameters by computing the sample mean and covariance on this augmented dataset. In Figure 2, we present the Wasserstein distance between the origin-centered isotropic Gaussian target distribution and the distribution defined by the parameters at each iteration . Our results illustrate how increasing the correction strength adds stability and results in convergence near better Wasserstein scores in later generations, in accordance with Theorem 4.3. The experiments also demonstrate how even a very small increase in can improve performance over the baseline, in accordance with our claim of exponential improvement in Corollary 4.5.
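A simplified version of this toy experiment can be sketched as follows. It is not the paper's script and departs from it in labeled ways: the dataset size, augmentation percentage, and number of generations are assumed values; the correction samples from an oracle mixture of the current model and the target; and each generation refits on the real data plus the newest corrected batch instead of accruing synthetic points over time.

```python
import numpy as np

def sqrtm_psd(mat):
    """Symmetric square root of a positive semi-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(mat)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def w2_gaussian(mean_a, cov_a, mean_b, cov_b):
    """Closed-form Wasserstein-2 distance between two Gaussians."""
    root_b = sqrtm_psd(cov_b)
    cross = sqrtm_psd(root_b @ cov_a @ root_b)
    squared = np.sum((mean_a - mean_b) ** 2) + np.trace(cov_a + cov_b - 2.0 * cross)
    return float(np.sqrt(max(squared, 0.0)))

def run_toy(gamma, n_real=50, lam=0.5, generations=30, seed=0):
    """Refit a 2-D Gaussian each generation on real plus corrected synthetic samples."""
    rng = np.random.default_rng(seed)
    target_mean, target_cov = np.zeros(2), np.eye(2)
    real = rng.multivariate_normal(target_mean, target_cov, size=n_real)
    mean, cov = real.mean(axis=0), np.cov(real, rowvar=False)
    n_synth = int(lam * n_real)
    distances = []
    for _ in range(generations):
        synth = rng.multivariate_normal(mean, cov, size=n_synth)
        fresh = rng.multivariate_normal(target_mean, target_cov, size=n_synth)
        # Sampling from (p_model + gamma * p_target) / (1 + gamma): each point
        # is drawn from the target with probability gamma / (1 + gamma).
        from_target = rng.random(n_synth) < gamma / (1.0 + gamma)
        corrected = np.where(from_target[:, None], fresh, synth)
        data = np.vstack([real, corrected])
        mean, cov = data.mean(axis=0), np.cov(data, rowvar=False)
        distances.append(w2_gaussian(mean, cov, target_mean, target_cov))
    return distances

# Distance-to-target curves for a few finite correction strengths.
curves = {gamma: run_toy(gamma) for gamma in (0.0, 0.5, 5.0)}
```

Plotting the entries of `curves` against the generation index gives a picture in the spirit of Figure 2, although the exact values depend on the assumed settings and the random seed.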

6 Toy Example: MNIST

Our proof uses the optimal target PDF $p_{\theta^\star}$ to define the correction function $\pi_\gamma$. This is empirically validated by the Gaussian toy experiment, which assumes knowing the true target distribution. In practice, the correction function only depends on the ability to map synthesized data to data which is likelier to have been sampled from the ground truth distribution. Crucially, this can be achieved without having a complete description of the target distribution. For example, with our human motion experiments, we will demonstrate that point-wise correction based on the laws of physics is one proxy approach to make a sample more likely, without knowing the true target distribution.

One has the freedom to explore alternative approaches to data correction for more general data types, such as images. For example, one simple heuristic is to identify the “anchor” or “exemplar” images, which are intuitively representative and likely. The correction function can then be implemented as mapping or morphing synthesized data towards its nearest anchor, to make the synthesized data more representative and likely. In this section, we implement this approach on MNIST and study its performance.

For our MNIST (LeCun et al., 1998) experiments, we train a diffusion model (Ho et al., 2020) for class-conditional image generation, using a train split of size . For our iterative fine-tuning experiments, we train the model for 20 epochs, then synthesize images for each digit, and then augment the ground truth dataset with these to train on for the next generation; every following generation follows the same procedure, but only trains for a single epoch. We vary our experiments over augmentation percentages . To define our self-correction operation, we first compute -means clusters over the training split for each digit. Our iterative fine-tuning with self-correction experiments use the same setup described above, except instead of training on the synthesized images, we train on the synthesized and then corrected images, where “correcting” an image means finding the nearest centroid in the centroids for that digit that we computed at the start of training. We swept the values , and we found that any reasonably large results in the same general trend where self-correction improves the metrics and stability. We report our results for , which performs the best.

We present images synthesized using our trained models in Figure 3. These synthesized images demonstrate that iterative fine-tuning eventually generates many low quality and illegible digits, and this problem is solved by applying our self-correction operation. Further experiment details, including graphs of the FID metrics for each generation that provide rigorous evidence for this trend across augmentation percentages, can be found in Appendix D. Our empirical results demonstrate that applying self-correction improves performance during iterative fine-tuning for our MNIST image generation task across self-consuming generations, and this relative performance is amplified when the augmentation percentage is larger. The behavior that we observe is consistent with our theoretical results in Section 4, as well as our human motion experiments in Section 7.
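The nearest-centroid correction used for MNIST can be illustrated with a short sketch. It assumes Euclidean distance on flattened pixels and that a corrected image is replaced outright by its nearest centroid; the centroids would come from a clustering step run separately per digit, and the tiny arrays below are hypothetical stand-ins for images.

```python
import numpy as np

def correct_to_nearest_centroid(images, centroids):
    """Replace each image with its nearest centroid (Euclidean distance on pixels)."""
    flat = images.reshape(len(images), -1).astype(float)
    cents = centroids.reshape(len(centroids), -1).astype(float)
    # Pairwise squared distances, shape (num_images, num_centroids).
    dists = ((flat[:, None, :] - cents[None, :, :]) ** 2).sum(axis=-1)
    nearest = dists.argmin(axis=1)
    return centroids[nearest], nearest

# Tiny illustration with 2x2 "images" and two hypothetical centroids.
centroids = np.array([[[0.0, 0.0], [0.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]]])
images = np.array([[[0.1, 0.0], [0.2, 0.1]], [[0.9, 1.0], [0.8, 1.0]]])
corrected, index = correct_to_nearest_centroid(images, centroids)
```

In a class-conditional setting the function would be called once per digit, with that digit's synthesized images and that digit's centroids.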

7 Human Motion Synthesis

Theorem 4.3 states that, in theory, iterative fine-tuning with correction should be more stable than iterative fine-tuning without correction. Crucially, the stability estimates that we prove rely on the dataset size, the synthetic augmentation percentage, how expressive the generative model class is, and having an idealized correction function. To validate how our theory works beyond toy examples, we conduct a case study on human motion synthesis with diffusion models (Tevet et al., 2023). We believe this is a natural setting to test our iterative fine-tuning with correction framework, because synthesizing natural motions is a challenging problem, but there is a natural and intuitive way to automatically correct them at scale, namely, using a physics simulator.

7.1 Generative Model

For our generative model, we use the Human Motion Diffusion Model (MDM) (Tevet et al., 2023). This is a classifier-free diffusion-based generative model for the text-to-motion generation task, where the model receives as input a description of a motion sequence (e.g. “get down on all fours and crawl across the floor”), and outputs a sequence of skeleton poses which attempt to embody that prompt. Synthesizing human motion is challenging not only because the text prompts are diverse and compositional, but also because generated motions can violate the laws of physics (e.g. feet skating, floating, penetrating a surface), which deep generative models do not explicitly enforce.

7.2 Physics Simulator as Self-Correction Function

For our self-correction function, we use Universal Humanoid Control (UHC) (Luo et al., 2021), which is an imitation policy that operates inside the MuJoCo physics simulator (Todorov et al., 2012). Given an input sequence of humanoid skeleton poses, UHC attempts to imitate the motion sequence, constrained by the laws of physics imposed by the physics simulator, and it outputs a new motion sequence that approximates the input as closely as possible. For example, if an input motion sequence violates the laws of physics by having a foot penetrate through the floor, then the motion sequence output by UHC will attempt to remove that physically impossible artifact while maintaining the semantic integrity of the original input motion. We use VPoser (Pavlakos et al., 2019) and SMPL (Loper et al., 2015) to translate joint representations between the human motion generator and the physics simulator.

The physics simulator allows us to self-correct a synthesized motion automatically. Our underlying assumption is that by enforcing physical plausibility (via the simulator) and closeness to the synthesized motion (via the imitation objective), the self-correction function acts as similarly to an idealized corrector as possible.

7.3 Experimental setup

We preprocess the MoVi (Ghorbani et al., 2021) subset of HumanML3D (Guo et al., 2022) using the official code implementation of HumanML3D. We filter out movements involving interactions with chairs, as UHC by default does not handle human-object interactions. We take as our train split the train split from HumanML3D, intersected with our filtered subset of MoVi, and likewise for the test split. This procedure yields a train set of size and a test set of size . We further randomly select a smaller training set of examples, to simulate the more challenging scenario when the initial generative model is sub-optimal (due to data scarcity). The smaller data also enables us to explore larger synthetic augmentation percentage due to compute constraints. From here, the iterative re-training procedure follows Algorithm 1. We spell it out in this concrete experimental setup.

We first train on the ground truth train split until the model is nearly converged, using all the default hyperparameters from MDM. We evaluate and save this last checkpoint from generation . From here, for each generation , we run three sets of experiments.

A. Baseline: fine-tune the latest checkpoint from generation for batches on ground truth dataset .

B. Iterative fine-tuning: fine-tune the latest checkpoint from generation on for batches. Here, is a synthetic dataset of size generated from the checkpoint for generation , using randomly chosen prompts from the train split.

C. Iterative fine-tuning with self-correction: fine-tune the latest checkpoint from generation on for batches. Here, denotes a synthetic dataset of size generated from the latest checkpoint for generation , using randomly chosen prompts from the train split, which is then corrected by UHC.

We experiment with synthetic augmentation percentages on the larger dataset; we set the number of batches seen during generation to be , and the number of batches seen for each later generation to be . Separately, we experiment with synthetic augmentation percentages on the smaller datasets; we set the number of batches seen during generation to be for dataset size , and the number of batches seen for each later generation to be . We choose to control how many data points the model sees across each generation, rather than controlling some other quantity like the number of epochs, as this allows each experiment to compare against its baseline in a controlled way, which in turn allows them to compare against each other in a controlled way.

We compute every evaluation one time for each checkpoint using the evaluation script provided in the original MDM codebase. Regardless of the train split size, we perform sampling for evaluation using all 546 motion sequences from the test split, since the FID score is sensitive to generated dataset size. We use the same hyperparameters as those used for MDM, including batch size , AdamW (Loshchilov & Hutter, 2019) with learning rate , and classifier-free guidance parameter . And for UHC we used the uhc_explicit model for imitation.

7.4 Quantitative Analysis of Results

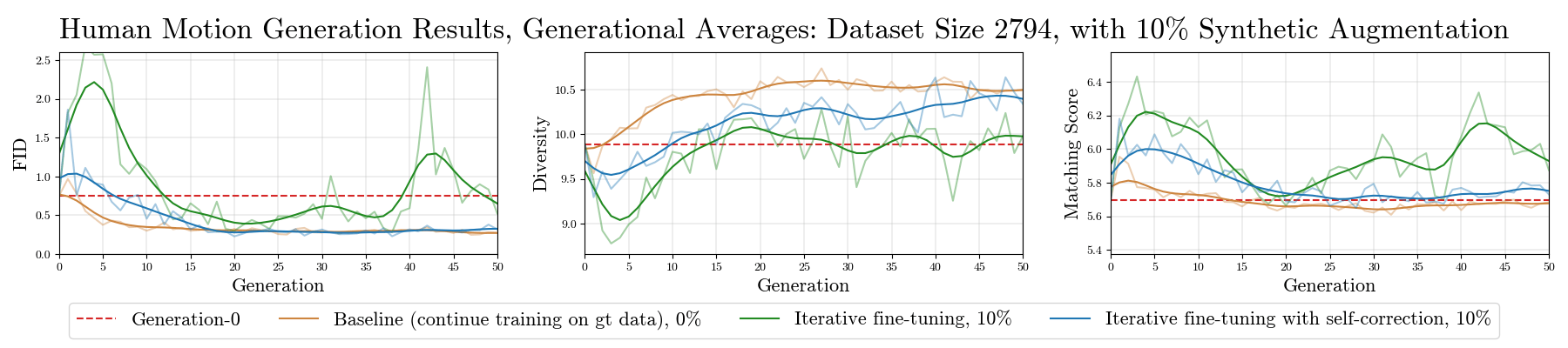

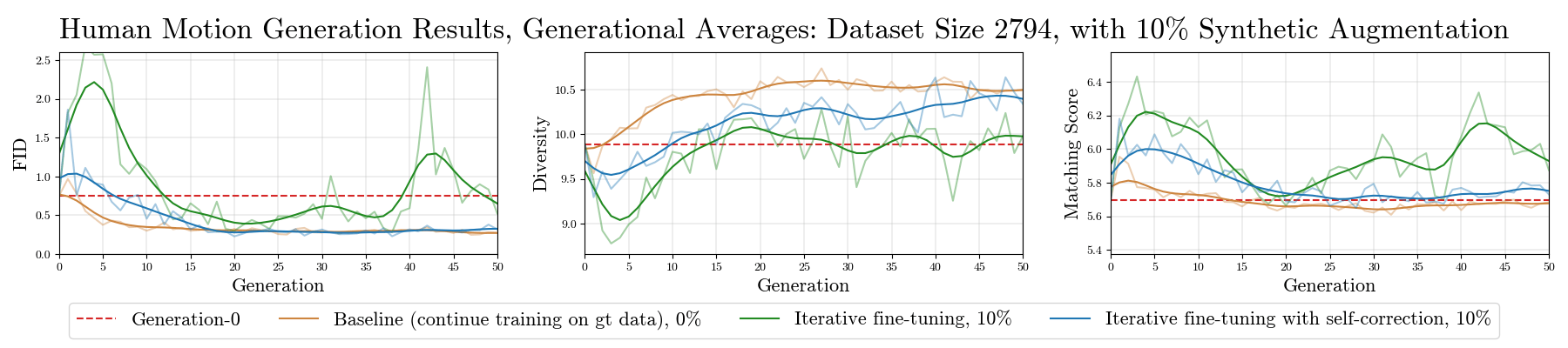

For each of these experiments we report the metrics from MDM, as used by (Guo et al., 2022): FID measures how similar the distribution of generated motions is to the ground truth distribution; Diversity measures the variance of the generated motions; and Matching Score measures how well the generated motions embody the given text prompt. In Figure 4 we present results from experiments on our -size dataset with synthetic augmentation, as well as our -size dataset with synthetic augmentation.

Our experimental results confirm our theoretical results, that iterative fine-tuning with self-correction outperforms iterative fine-tuning without self-correction, in the sense that the graphs are generally more stable across generations, and approach better evaluation metric values. In particular, Theorem 4.3 and Corollary 4.5 claim that any amount of idealized self-correction will improve the stability bound during iterative fine-tuning. Our results in Figure 4 demonstrate that the FID score is lower and more stable across generations when applying self-correction, and generally higher and less stable than the baseline, where there is no self-consuming training at all. We conduct experiments across multiple seeds, and we find empirically that this general phenomenon holds consistently, where the self-correction technique consistently yields improved training dynamics over iterative fine-tuning with no correction. Graphs from these runs can be found in Appendix G.

Our experimental results also provide empirical evidence for Conjecture 4.7. Observe that in the baseline experiments in Figure 4, the FID score decreases across generations, which indicates that the initial model parameters are not that close to the optimal model parameters ; additionally, the augmentation percentages considered in the graph are and . Conjecture 4.7 claims that performing self-correction during iterative fine-tuning improves performance, even when the initial model weights are sub-optimal and simultaneously the synthetic augmentation percentage is large. This claim is confirmed by Figure 4. We direct the curious reader to Appendix F, where we present graphs for all of the above listed training set sizes and augmentation percentages, providing additional empirical evidence for Theorem 4.3, Corollary 4.5, and Conjecture 4.7.

7.5 Qualitative Analysis of Results

We visually inspect the generated human motion sequences in order to analyze what concrete effect the self-correction has on iterative fine-tuning. We find that the correctness and diversity of synthesized motions are improved by the self-correction procedure, in agreement with our quantitative analysis in Subsection 7.4. We present snapshots of our synthesized motions in Figure 5, and we analyze the motions in the caption. In short, we find that physics-disobeying artifacts such as floor penetration or floating become more pronounced without the self-correction. We also find that in the model without self-correction, the humanoid sometimes performs movements completely unrelated to the prompt; our model with self-correction fixes these negative phenomena. We direct the curious reader to Appendix E, where we present more examples from our qualitative analysis, as well as our project webpage, where we provide side-by-side video comparisons.

8 Conclusion

Our paper investigates the learning of generative models when the training data includes machine-generated content. We investigate how self-correction functions, which automatically correct synthesized data points to be more likely under the true data distribution, can stabilize self-consuming generative model training. Our theoretical results show that self-correction leads to exponentially more stable model training and smaller variance, which we illustrate with a Gaussian toy example. We then demonstrate how physics simulators can serve as a self-correction function for the challenging human motion synthesis task, where models trained with our self-correcting self-consuming loops generate higher quality motions, and manage to avoid collapse even at a high synthetic data to real data ratio. Future work includes exploring self-correcting functions for more diverse applications, such as language modeling and text-to-image generation, and investigating when self-consuming training may lead to overall better generative models.

Acknowledgments

We would like to thank Stephen H. Bach, Quentin Bertrand, Carsten Eickhoff, Gauthier Gidel, Jeff Hoffstein, Zhengyi Luo, Singh Saluja, and Ye Yuan for useful discussions. We would also like to thank the anonymous reviewers. This work is supported by the Samsung Advanced Institute of Technology, Honda Research Institute, and a Richard B. Salomon Award for Chen Sun. Our research was conducted using computational resources at the Center for Computation and Visualization at Brown University.

Impact Statement

This paper presents work whose goal is to provide theoretical analysis and practical tools to address the data contamination issue caused by machine-generated content. There are many potential societal consequences of our work, none of which we feel must be specifically highlighted here.

References

- Alemohammad et al. (2024) Alemohammad, S., Casco-Rodriguez, J., Luzi, L., Humayun, A. I., Babaei, H., LeJeune, D., Siahkoohi, A., and Baraniuk, R. Self-consuming generative models go MAD. In The Twelfth International Conference on Learning Representations, 2024.

- Azizi et al. (2023) Azizi, S., Kornblith, S., Saharia, C., Norouzi, M., and Fleet, D. J. Synthetic data from diffusion models improves ImageNet classification. Transactions on Machine Learning Research, 2023. ISSN 2835-8856.

- Bertrand et al. (2024) Bertrand, Q., Bose, A. J., Duplessis, A., Jiralerspong, M., and Gidel, G. On the stability of iterative retraining of generative models on their own data. In The Twelfth International Conference on Learning Representations, 2024.

- Ghorbani et al. (2021) Ghorbani, S., Mahdaviani, K., Thaler, A., Kording, K., Cook, D. J., Blohm, G., and Troje, N. F. MoVi: A large multi-purpose human motion and video dataset. PLoS ONE, 16(6):e0253157, 2021.

- Guo et al. (2022) Guo, C., Zou, S., Zuo, X., Wang, S., Ji, W., Li, X., and Cheng, L. Generating diverse and natural 3D human motions from text. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5152–5161, June 2022.

- He et al. (2023) He, R., Sun, S., Yu, X., Xue, C., Zhang, W., Torr, P., Bai, S., and Qi, X. Is synthetic data from generative models ready for image recognition? In The Eleventh International Conference on Learning Representations, 2023.

- Ho & Salimans (2021) Ho, J. and Salimans, T. Classifier-free diffusion guidance. In NeurIPS 2021 Workshop on Deep Generative Models and Downstream Applications, 2021.

- Ho et al. (2020) Ho, J., Jain, A., and Abbeel, P. Denoising diffusion probabilistic models. Advances in neural information processing systems, 33:6840–6851, 2020.

- Jahanian et al. (2022) Jahanian, A., Puig, X., Tian, Y., and Isola, P. Generative models as a data source for multiview representation learning. In International Conference on Learning Representations, 2022.

- Jakubovitz et al. (2019) Jakubovitz, D., Giryes, R., and Rodrigues, M. R. Generalization error in deep learning. In Compressed Sensing and Its Applications: Third International MATHEON Conference 2017, pp. 153–193. Springer, 2019.

- Ji et al. (2021) Ji, K., Zhou, Y., and Liang, Y. Understanding estimation and generalization error of generative adversarial networks. IEEE Transactions on Information Theory, 67(5):3114–3129, 2021.

- LeCun et al. (1998) LeCun, Y., Bottou, L., Bengio, Y., and Haffner, P. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Loper et al. (2015) Loper, M., Mahmood, N., Romero, J., Pons-Moll, G., and Black, M. J. SMPL: A skinned multi-person linear model. ACM Trans. Graphics (Proc. SIGGRAPH Asia), 34(6):248:1–248:16, October 2015.

- Loshchilov & Hutter (2019) Loshchilov, I. and Hutter, F. Decoupled weight decay regularization. In International Conference on Learning Representations, 2019.

- Luo et al. (2021) Luo, Z., Hachiuma, R., Yuan, Y., and Kitani, K. Dynamics-regulated kinematic policy for egocentric pose estimation. In Advances in Neural Information Processing Systems, 2021.

- Martínez et al. (2024) Martínez, G., Watson, L., Reviriego, P., Hernández, J. A., Juarez, M., and Sarkar, R. Towards understanding the interplay of generative artificial intelligence and the internet. In Cuzzolin, F. and Sultana, M. (eds.), Epistemic Uncertainty in Artificial Intelligence, pp. 59–73, Cham, 2024. Springer Nature Switzerland. ISBN 978-3-031-57963-9.

- Pavlakos et al. (2019) Pavlakos, G., Choutas, V., Ghorbani, N., Bolkart, T., Osman, A. A. A., Tzionas, D., and Black, M. J. Expressive body capture: 3D hands, face, and body from a single image. In Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2019.

- Perdomo et al. (2020) Perdomo, J., Zrnic, T., Mendler-Dünner, C., and Hardt, M. Performative prediction. In International Conference on Machine Learning, pp. 7599–7609. PMLR, 2020.

- Radford et al. (2021) Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J., et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pp. 8748–8763. PMLR, 2021.

- Saharia et al. (2022) Saharia, C., Chan, W., Saxena, S., Li, L., Whang, J., Denton, E. L., Ghasemipour, K., Gontijo Lopes, R., Karagol Ayan, B., Salimans, T., et al. Photorealistic text-to-image diffusion models with deep language understanding. Advances in Neural Information Processing Systems, 35:36479–36494, 2022.

- Saunders et al. (2022) Saunders, W., Yeh, C., Wu, J., Bills, S., Ouyang, L., Ward, J., and Leike, J. Self-critiquing models for assisting human evaluators. arXiv preprint arXiv:2206.05802, 2022.

- Schuhmann et al. (2022) Schuhmann, C., Beaumont, R., Vencu, R., Gordon, C., Wightman, R., Cherti, M., Coombes, T., Katta, A., Mullis, C., Wortsman, M., et al. LAION-5B: An open large-scale dataset for training next generation image-text models. Advances in Neural Information Processing Systems, 35:25278–25294, 2022.

- Shumailov et al. (2023) Shumailov, I., Shumaylov, Z., Zhao, Y., Gal, Y., Papernot, N., and Anderson, R. The curse of recursion: Training on generated data makes models forget. arXiv preprint arXiv:2305.17493, 2023.

- Tevet et al. (2023) Tevet, G., Raab, S., Gordon, B., Shafir, Y., Cohen-Or, D., and Bermano, A. H. Human motion diffusion model. In The Eleventh International Conference on Learning Representations, 2023.

- Tian et al. (2023) Tian, Y., Fan, L., Chen, K., Katabi, D., Krishnan, D., and Isola, P. Learning vision from models rivals learning vision from data. arXiv preprint arXiv:2312.17742, 2023.

- Todorov et al. (2012) Todorov, E., Erez, T., and Tassa, Y. MuJoCo: A physics engine for model-based control. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5026–5033, 2012. doi: 10.1109/IROS.2012.6386109.

- Welleck et al. (2023) Welleck, S., Lu, X., West, P., Brahman, F., Shen, T., Khashabi, D., and Choi, Y. Generating sequences by learning to self-correct. In The Eleventh International Conference on Learning Representations, 2023.

- Wu et al. (2023) Wu, T.-H., Lian, L., Gonzalez, J. E., Li, B., and Darrell, T. Self-correcting LLM-controlled diffusion models. arXiv preprint arXiv:2311.16090, 2023.

- Xu et al. (2023) Xu, S., Li, Z., Wang, Y.-X., and Gui, L.-Y. InterDiff: Generating 3D human-object interactions with physics-informed diffusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 14928–14940, 2023.

- Yuan et al. (2023) Yuan, Y., Song, J., Iqbal, U., Vahdat, A., and Kautz, J. PhysDiff: Physics-guided human motion diffusion model. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023.

Appendix A Mathematical Theory: The Proof of Theorem 4.3

In this appendix, we provide a full account of the mathematical details of the theorems and their proofs appearing in the main body of the paper. Our proof technique follows the same framework as (Bertrand et al., 2024), because our theoretical analysis generalizes theirs to the case where the self-consuming loop contains a self-correction function.

A.1 Mathematical Setup and Notation

Definition A.1.

Define the optimal model parameters to be

| (9) |

chosen so that has minimal norm within this set. Let be any model parameters. Then the correction of strength of distribution towards is a new distribution, denoted , defined according to the rule

This is illustrated in Figure 6. Let be the parameters of the model trained after generations. We define the iterative fine-tuning with correction update mapping to be

| (10) | ||||

| (11) |

Notice that in the finite case, we’re optimizing by taking samples from an empirical distribution. In contrast, in the infinite case, there is zero statistical error, since the parameter update is done with access to an infinite sampling budget at each generation . The finite case is the more practical case, when we have some statistical error (so we only have access to finite sampling at each generation). Since the parameter space of the generative model class might be limited, there might be a small difference between the distribution corresponding to the optimal parameters and the target distribution ; we capture this difference via the Wasserstein-2 distance and denote

| (12) |

Let

| (13) |

and note that .
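To make the update mapping concrete, the following is a minimal Python sketch of a self-consuming loop with correction for a one-dimensional Gaussian model. It rests on assumptions that are not fixed by the text above: the corrected distribution is taken to be the mixture that draws from the current model with probability 1/(1+γ) and from the optimal model with probability γ/(1+γ); the fine-tuning step is replaced by the closed-form Gaussian maximum likelihood fit on the pooled real and synthetic data; and the names `corrected_sample`, `self_consuming_loop`, `lam`, and `gamma` are hypothetical.

```python
import math
import random
import statistics


def corrected_sample(rng, mu, sigma, mu_star, sigma_star, gamma):
    """Draw from the assumed corrected mixture (p_theta + gamma * p_star) / (1 + gamma)."""
    # Probability of drawing from the optimal model; gamma = inf means "always".
    p_star = 1.0 if math.isinf(gamma) else gamma / (1.0 + gamma)
    if rng.random() < p_star:
        return rng.gauss(mu_star, sigma_star)
    return rng.gauss(mu, sigma)


def self_consuming_loop(real, lam, gamma, generations, mu0, sigma0,
                        mu_star=0.0, sigma_star=1.0, seed=0):
    """Return the (mu, sigma) pair of every generation, starting with (mu0, sigma0)."""
    rng = random.Random(seed)
    mu, sigma = mu0, sigma0
    history = [(mu, sigma)]
    n_synthetic = int(lam * len(real))  # lam plays the role of the augmentation percentage
    for _ in range(generations):
        synthetic = [corrected_sample(rng, mu, sigma, mu_star, sigma_star, gamma)
                     for _ in range(n_synthetic)]
        pooled = list(real) + synthetic
        # Closed-form Gaussian maximum likelihood fit stands in for fine-tuning.
        mu = statistics.fmean(pooled)
        sigma = statistics.pstdev(pooled)
        history.append((mu, sigma))
    return history


if __name__ == "__main__":
    data_rng = random.Random(1)
    real = [data_rng.gauss(0.0, 1.0) for _ in range(2000)]
    for gamma in (0.0, 1.0, 10.0):
        final_mu, final_sigma = self_consuming_loop(
            real, lam=1.0, gamma=gamma, generations=20, mu0=0.5, sigma0=1.5)[-1]
        print(f"gamma={gamma:5.1f}  mu={final_mu:+.3f}  sigma={final_sigma:.3f}")
```

Setting `gamma=0.0` gives plain iterative retraining without correction, while larger values of `gamma` draw more of the synthetic pool from the optimal model.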

We first establish that the correction map is truly a mapping of probability distributions as well as some of its elementary properties.

Lemma A.2.

The correction map has the following properties.

1. is a probability distribution.

2. Strengths correspond to , the average of and , and , respectively.

3. For any , if , then

and if , then the inequality is flipped. In other words, is a better estimate of the ideal distribution than is, precisely when the projection strength is more than .

Proof.

For the first point, is a probability distribution because it is a convex combination of probability distributions. For example, we can compute that

The second point follows immediately from the definition of . For the third point, we can estimate that

when . The inequality flips when . ∎
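The properties in Lemma A.2 can be checked numerically. The sketch below assumes that the correction of strength γ is the convex combination (p + γ·p*) / (1 + γ) of the model density p and the optimal density p*, which is consistent with the three properties in the lemma but is an assumption of this example. The two Gaussians, the evaluation point, and the helper names are hypothetical.

```python
import math


def gaussian_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def corrected_pdf(p, p_star, gamma):
    """Return x -> (p(x) + gamma * p_star(x)) / (1 + gamma), the assumed correction."""
    if math.isinf(gamma):
        return p_star
    return lambda x: (p(x) + gamma * p_star(x)) / (1.0 + gamma)


def integrate(f, lo, hi, steps=20000):
    """Midpoint rule on [lo, hi]."""
    h = (hi - lo) / steps
    return h * sum(f(lo + (i + 0.5) * h) for i in range(steps))


def p(x):
    return gaussian_pdf(x, 2.0, 1.0)   # hypothetical current model


def p_star(x):
    return gaussian_pdf(x, 0.0, 1.0)   # hypothetical optimal model


x = 0.7
for gamma in (0.0, 0.5, 3.0, math.inf):
    q = corrected_pdf(p, p_star, gamma)
    mass = integrate(q, -12.0, 14.0)
    closer_to_target = abs(q(x) - p_star(x)) < abs(q(x) - p(x))
    print(f"gamma={gamma}: total mass={mass:.6f}, closer to p_star at x: {closer_to_target}")
```

Under this assumed form, the pointwise distance to p* is |p − p*| / (1 + γ) and the distance to p is γ·|p − p*| / (1 + γ), so the comparison flips exactly at γ = 1.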

Intuitively, it is clear that we cannot hope to prove general results about generative models without assuming something about the mapping . We now state the two assumptions we require in order to make our theoretical arguments; note that they are precisely the same assumptions made in (Bertrand et al., 2024). The first assumption is a local Lipschitzness property that we will exploit via the Kantorovich-Rubinstein duality:

Assumption A.3.

For close enough to , the mapping is -Lipschitz.

The second assumption is a local regularity and concavity condition:

Assumption A.4.

The mapping is continuously twice differentiable locally around and

We next show the existence and uniqueness of locally around .

Proposition A.5 (The Local Maximum Likelihood Solution is Unique).

The following are true:

A. There exists an open neighborhood containing and a continuous function such that , and

| (14) |

for every .

B.

Proof.

We first prove part A. It suffices to apply the Implicit Function Theorem to the map

| (15) |

in an open neighborhood of . To do this, we need to show the following:

i) The map vanishes at , i.e.

| (16) |

ii) The Jacobian matrix at is invertible, i.e.,

| (17) |

We first prove i). Recall from the definition (10) that . This means that for any , is the choice of which maximizes . In particular, for , we have that is the choice which maximizes . But by Proposition A.6. This implies that its derivative is zero at , meaning , as needed.

Now we prove ii). In order to show that the matrix (17) is invertible, it suffices to show it is close to another matrix which is invertible. A natural choice is the matrix

| (18) |

First of all, note that this matrix indeed exists; by Assumption A.4, we know the map is continuously twice differentiable locally near . We can estimate that the matrices (17) and (18) are indeed close as follows:

where the first equality follows from the definition of in (13); the second equality follows from some cancellation; the third equality follows from the fact that the derivatives are constant with respect to , and by Lemma A.2; in the fourth step, we exchange the derivative and the expectation using the Dominated Convergence Theorem, since Assumption A.3 says that is -Lipschitz; the fifth estimate follows from Kantorovich-Rubinstein duality; and the final estimate is the definition of Wasserstein distance (12).

Finally, we verify is indeed invertible. Assumption A.4 implies that the largest eigenvalue of is at most . Therefore, since all eigenvalues of are nonzero, is invertible. We can now apply the Implicit Function Theorem to (15), and part A follows immediately.

Next, we prove part B. Let . To verify that is a local maximizer of (15), it suffices to show that . By Assumption A.4, we know and since is continuously twice differentiable locally near , we also have . Thus, we have

where the last step follows from Kantorovich-Rubinstein duality:

Thus, to have , it is sufficient that

which is guaranteed for all by and . This concludes the proof. ∎

Further, as we would expect, is a fixed point of :

Proposition A.6 (The optimal parametric generative model is a fixed point).

For any given data distribution , any as defined by (9), and for all , we have .

A.2 Convergence of Iterative Fine-tuning with Correction for Infinite Sampling

We now have the required setup to state and prove a convergence result for iterative fine-tuning assuming infinite access to underlying probability distributions. We need the following result, which is a technical lemma that provides a computation of the Jacobian of at as well as a spectral bound, both essential for the proof of Theorem A.8.

Lemma A.7.

We define the matrices

| (19) | ||||

| (20) | ||||

| (21) |

Recall the definition of from (10). Since and are fixed, denote Finally, let denote the Jacobian of .

I. There exists an open neighborhood containing such that for all , we have

| (22) |

II. We have that , and , so the Jacobian of at is

| (23) |

III. The spectral norm of can be bounded as

| (24) |

Proof.

We first prove I. We apply Proposition A.5. Part A of that proposition gives us a function such that . But part B of that proposition says that there exists a unique local maximizer inside , and this local maximizer is . This implies that . Next, we implicitly differentiate this equation with respect to . Recall that when you have an equation of the form , and implicitly differentiate it in the form with respect to , you obtain , and solving for yields . We apply this formula with

and obtain (22), as desired.

Now we prove II. We can compute that

| (25) | ||||

| (26) | ||||

| (27) | ||||

| (28) | ||||

| (29) |

where the third equality holds because the integral containing is constant with respect to . Next, we can compute that

| (30) | ||||

| (31) | ||||

| (32) | ||||

| (33) | ||||

| (34) |

where the third equality follows from the product rule for gradients,

| (35) |

Finally, we will prove the formula (23) by manipulating (22). We begin with the rightmost factor in (22). If we apply these equalities that we just obtained, then we get

where the first equality follows from (23) along with the fixed point Proposition A.6, and we are using that is invertible by Assumption A.4, which implies all eigenvalues of are nonzero; in the fourth step we used that . This proves part II.

Now we prove III. We can bound the operator norm as follows:

| (36) |

where the first estimate comes from subadditivity and submultiplicativity, and the second comes from the fact that, since is symmetric, , where is the spectrum of . Formally, we know by Assumption A.4 that has eigenvalues and so . Therefore, has eigenvalues and thus , which gives us the bound on the matrix norm. Next, we can estimate that

where in the second equality we exchange the derivative and the expectation in equation 4 using the Dominated Convergence Theorem, since Assumption A.3 says that is -Lipschitz; and in the last estimate, we used Kantorovich-Rubinstein duality. This, combined with the estimate (36), yields the bound in (24). ∎

We are finally ready to prove our theorem that guarantees convergence to the optimal parameters in the infinite sampling case under certain assumptions, one being that the initial model parameters are sufficiently close to :

Theorem A.8 (Convergence of Iterative Fine-tuning, Infinite Sampling Case).

Suppose we have an iterative fine-tuning procedure defined by the rule . Let be the parameter vector for the optimal generative model, as in (9). We assume that follows Assumptions A.3 and A.4 from (Bertrand et al., 2024). Suppose also that . Then, the Jacobian of satisfies the following bound:

| (37) |

Consequently, there exists a such that if satisfies , then starting training at and having , we have that . Furthermore, if we define

| (38) |

then we obtain the asymptotic stability estimate (footnote 3: Bertrand et al. (2024) could have presented their results in this stronger form, without the big-O notation, with very little extra work)

| (39) |

Proof.

We first prove the Jacobian bound (37). By hypothesis, we know , so by Lemma A.7(III), we have . Thus, we can write

and so

Applying Lemma A.7(II), we get

Now, it is straightforward to see the RHS above is at most the bound in (37) if and only if . But this bound holds because of Lemma A.7(III). This proves the Jacobian bound (37), but does not prove that the bound is less than . For this, we must show that

| (40) |

By clearing denominators and grouping like terms, we can see that this is equivalent to

| (41) |

which is precisely guaranteed by our hypothesis.

We now apply the Jacobian bound (37) to prove the asymptotic stability estimate (39). Assume is sufficiently small so that . Then for every , there exists sufficiently small so that every which satisfies has the property that . Because the map has Jacobian matrix norm less than in the -ball around , it is a contraction mapping in this neighborhood. Concretely, this means that

| (42) |

for every in the -ball around . In particular, for we obtain

By induction, the above estimate implies that if is in a -ball around , then so is every successive . Therefore the desired estimate (39) now follows by induction on . ∎

Remark A.9.

Taking recovers exactly the result in (Bertrand et al., 2024). Importantly, the correction function provides leverage in determining how large the augmentation percentage can be: choosing a larger correction strength allows us to choose a larger augmentation percentage while still retaining theoretical guarantees for convergence. Additionally, for the same choice of augmentation percentage , a larger correction strength provides a guarantee for an improved rate of convergence. See Conjecture 4.7.
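The contraction argument in the proof of Theorem A.8 can be illustrated with a one-dimensional toy map. The sketch below is not the update mapping of the paper: `G`, `theta_star`, and `rho` are hypothetical stand-ins, chosen so that `theta_star` is a fixed point and the derivative of `G` is bounded by `rho < 1` in absolute value. It checks that the error after t steps is at most `rho ** t` times the initial error, which is the shape of the geometric estimate obtained by induction.

```python
import math

theta_star = 1.5  # hypothetical fixed point
rho = 0.6         # hypothetical bound on |G'(theta)|


def G(theta):
    # G(theta_star) = theta_star and |G'(theta)| = rho * |cos(theta - theta_star)| <= rho.
    return theta_star + rho * math.sin(theta - theta_star)


def iterate(update, theta0, steps):
    """Apply `update` repeatedly and return all iterates, including theta0."""
    thetas = [theta0]
    for _ in range(steps):
        thetas.append(update(thetas[-1]))
    return thetas


thetas = iterate(G, theta0=2.3, steps=10)
for t, theta in enumerate(thetas):
    error = abs(theta - theta_star)
    bound = rho ** t * abs(thetas[0] - theta_star)
    print(f"t={t:2d}  error={error:.6f}  geometric bound={bound:.6f}")
```

A smaller `rho` tightens the bound at every step, which mirrors the remark that a larger correction strength improves the guaranteed rate of convergence.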

A.3 Stability of Iterative Fine-tuning with Correction for Finite Sampling

Finally, we prove a stability result for iterative fine-tuning with correction in the presence of statistical error. To do this, we require an assumption that essentially provides a probabilistic guarantee that the chosen generative model learns the underlying distribution increasingly better if it has access to more samples:

Assumption A.10.

There exist and a neighborhood of such that, for any , with probability over the samplings, we have

| (43) |

See Appendix B for a discussion of this assumption. We considered whether to assume a bound similar to the one assumed in (Bertrand et al., 2024) or to prove our bound from theirs. We show in Appendix B that something nearly as strong as Assumption A.10 can be deduced from Assumption 3 in their paper, so we adopt Assumption A.10 for the sake of a cleaner, more parallel exposition.

Theorem A.11 (Iterative Fine-Tuning Stability Under Correction).

Suppose we have an iterative fine-tuning procedure defined by the rule . In words, this means that the augmentation percentage is and the correction strength is . Under the same assumptions of Theorem A.8 and Assumption A.10, there exist and such that if , then for any , with probability , we have

| (44) |

Proof.

By the triangle inequality, we can estimate that

| (45) |

where we applied the fixed point Proposition A.6. By Assumption A.10, the left summand in (45) is at most , with probability . Next, recall that in (42) in the proof of Theorem A.8, we proved that is a contraction mapping of factor sufficiently close to ; this implies that the right summand in (45) is at most . Together, these yield the recurrence estimate

| (46) |

Iterating this recurrence for successive time steps yields

| (47) |

Note that (47) holds for any . In particular, we can apply (47) with . In this case, the Bernoulli inequality lets us estimate that . This completes the proof, with . ∎
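For readers who want the unrolling step spelled out, here is a short worked derivation in generic notation. The symbols are placeholders introduced for this sketch and are not necessarily those of the paper: e_t stands for the parameter error at generation t, a ≥ 0 for the statistical error term, and 0 ≤ ρ < 1 for the contraction factor. The final display is the standard form of Bernoulli's inequality; reading it with a per-step failure probability δ/t is an assumption about how the proof uses it.

```latex
% Placeholder symbols (assumptions of this sketch):
%   e_t : error at generation t,  a >= 0 : statistical error term,  0 <= rho < 1 : contraction factor
\begin{align*}
e_{t+1} &\le a + \rho\, e_t \\
\Longrightarrow\quad
e_t &\le a \sum_{i=0}^{t-1} \rho^{i} + \rho^{t} e_0
     = a\,\frac{1-\rho^{t}}{1-\rho} + \rho^{t} e_0
     \le \frac{a}{1-\rho} + \rho^{t} e_0 .
\end{align*}
% Bernoulli's inequality: (1+x)^n >= 1 + n x for x >= -1 and integer n >= 0.
% With x = -\delta/t and n = t (valid since 0 < \delta < 1 <= t):
\[
\left(1 - \frac{\delta}{t}\right)^{t} \;\ge\; 1 - \delta .
\]
```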

Remark A.12.

Remark A.13.

In a previous version of this manuscript, we claimed that there was an error in the statement of the corresponding theorem in (Bertrand et al., 2024). In this version, we retract that claim; we have corresponded with those authors, and they updated their manuscript with additional details to justify their statement.

A.4 Discussion: The Main Limitation

Our empirical results are for generative modeling tasks where we have access to some “self-correction” operation that is easy to compute, as well as automatic; see Sections 6 and 7 for more details about these correction functions. Therefore, the main limitation of our work is that one can only hope to use this self-correction procedure to stabilize training in scenarios where there is some “self-correction” function. For our MNIST experiments, we built a self-correction function from scratch using clustering statistics. And for our human motion experiments, we used an off-the-shelf human motion imitation model that other researchers built.

Appendix B Discussion about Assumption 4.2

In this section, we show how with a mild boundedness assumption on our generative model parameter update function, we can deduce our Assumption A.10 (which is the same as Assumption 4.2, part 3) from the following assumption used in (Bertrand et al., 2024).

Assumption B.1.

There exist and a neighborhood of such that, for any , with probability over the samplings, we have

| (48) |

Now, if we make the additional assumption that our generative model parameter update function is locally bounded near then we obtain the following.

Proposition B.2.

Suppose Assumption B.1 holds. Suppose also that there exists such that for all and sufficiently close to ,

Then there exist and a neighborhood of such that, for any , with probability over the samplings, we have

| (49) |

where

Proof.

By the triangle inequality, we have

| (50) |

We bound each term in the RHS: firstly, note the middle term is bounded by Assumption B.1. The first term is bounded as follows:

where in the first step we used that . Similarly, the last term is bounded as follows:

where in the second step we applied (42). Using these bounds in (50) and taking completes the proof. ∎

Note that the constant (for sufficiently small) can really be viewed as a part of the optimization constant since it is controlled by the choice of generative model class.

Appendix C Point-wise correction corresponds to distribution-wise correction

In this section we provide a sufficient condition under which you can associate a distribution-wise correction mapping (like the one we consider in the paper, ) to a point-wise correction mapping (which is the one you are more likely to find in the wild).

Definition C.1.

Let and define the empirical cumulative distribution function by

where for , is the indicator function for the set . For a continuous distribution, the cumulative distribution function is defined in the usual way.
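As a concrete reading of Definition C.1, the following Python sketch builds an empirical cumulative distribution function for one-dimensional samples. Restricting to one dimension is a simplification made here, and the function name `empirical_cdf` is hypothetical.

```python
import bisect


def empirical_cdf(samples):
    """Return F with F(v) = (1/n) * #{i : x_i <= v} for one-dimensional samples."""
    ordered = sorted(samples)
    n = len(ordered)
    if n == 0:
        raise ValueError("need at least one sample")

    def F(v):
        # bisect_right counts how many of the sorted samples are <= v.
        return bisect.bisect_right(ordered, v) / n

    return F


F = empirical_cdf([5.0, 1.0, 2.0, 2.0])
print(F(0.0), F(2.0), F(4.9), F(5.0))  # prints: 0.0 0.75 0.75 1.0
```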

Definition C.2.

Suppose that we have a model and an arbitrary function . Then we say that is a valid point-wise correction function for if there exists a such that

| (51) |

almost surely, where the expectation is over all samplings of size from .

Intuition C.3.

This is saying that the CDFs for and are equal in expectation, for large enough . This is one way of saying that and , for large enough , are nearly identical probability distributions.

Definition C.4.

If the limit in (51) exists, then we define the distribution-wise projection function corresponding to to be

| (52) |

and we define the projection strength of the point-wise correction function to be . Recall that . So intuitively, (51) implies that the projection function maps samples from to a different space such that they look like they come from a combination of the original distribution and , at least at the level of CDFs.

Remark C.5.

Such a , if it exists, is unique. Furthermore, if , then .

The limit condition in Definition C.2 is abstract, and can be hard to swallow. We present an example of a simple point-wise correction for the Gaussian toy example that we consider in Section 5, whose corresponding distribution-wise correction is exactly what one would expect it to be: the weighted average of the corresponding Gaussians. Recall that we demonstrated empirically in Figure 2 that Theorem 4.3 holds for that example. The projection function is depicted in Figure 6.

Example C.6.

Let be the pdf of (initial distribution, corresponds to ) and the pdf of (target distribution, corresponds to ). Given , we define as follows: Fix any , and let , where is the PDF of the empirical distribution defined by ; in practice we implement as a histogram. Then choose a random ( = group of permutations on symbols). Finally, we define for .

Next, we define the projection set , and define the PDF , and let represent the cumulative distribution function of the Gaussian . Then, since , we have by the uniform law of large numbers that

| (53) |

almost surely. Therefore is a valid point-wise correction function, and its corresponding distribution-wise projection function is .

Remark C.7.

In the example we considered in Section 5, we could have included a total distance traveled minimization condition, but here for this proof we don’t even need to use that hypothesis. (In the proof, this would have corresponded to the additional assumption that we’ve chosen a such that is minimized.) This implies that different point-wise correction functions can correspond to the same distribution-wise correction function.

Appendix D More MNIST Experiment Details

We train a Denoising Diffusion Probabilistic Model (DDPM) (Ho et al., 2020) on the of the MNIST dataset (LeCun et al., 1998). We use classifier-free guidance (Ho & Salimans, 2021) with guidance parameter , and 400 diffusion steps. We used a batch size of 256. We train generation for 20 epochs, with a linear decay learning rate schedule starting at and ending at We train each following generations for a single epoch, with a fixed learning rate of .

To compute our metrics, we first train a LeNet model (LeCun et al., 1998) on MNIST, and then we sample an equal number of digits from each class using the checkpoint that we’re trying to evaluate. To compute the FID score, we extract embeddings from the last fully connected LeNet layer for the synthesized examples, as well as for the held out test examples, and compute FID score as normal, by computing the Wasserstein distance between the Gaussians. Note that we use embeddings for LeNet trained on MNIST, rather than the Inception network trained on ImageNet, because MNIST isn’t comprised of natural images. This is consistent with the convention in (Alemohammad et al., 2024).
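The squared Wasserstein-2 (Fréchet) distance between two Gaussians is the squared distance between their means plus Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}). The sketch below is a deliberately simplified, dependency-free variant that assumes diagonal covariances, in which case the trace term reduces to the sum of squared differences of per-dimension standard deviations. It is not the full FID computation described above, which uses full covariance matrices of the LeNet embeddings; the function name and the toy feature vectors are hypothetical.

```python
import statistics


def frechet_distance_diagonal(feats_a, feats_b):
    """Squared W2 distance between Gaussians fitted per dimension (diagonal covariances).

    feats_a, feats_b: lists of equal-length feature vectors (e.g. embeddings).
    """
    total = 0.0
    for d in range(len(feats_a[0])):
        col_a = [f[d] for f in feats_a]
        col_b = [f[d] for f in feats_b]
        mu_a, mu_b = statistics.fmean(col_a), statistics.fmean(col_b)
        sd_a, sd_b = statistics.pstdev(col_a), statistics.pstdev(col_b)
        # Mean term plus the diagonal special case of the trace term.
        total += (mu_a - mu_b) ** 2 + (sd_a - sd_b) ** 2
    return total


real_feats = [[0.0, 0.0], [2.0, 0.0], [0.0, 2.0], [2.0, 2.0]]
shifted_feats = [[x + 3.0, y] for x, y in real_feats]
print(frechet_distance_diagonal(real_feats, real_feats))
print(frechet_distance_diagonal(real_feats, shifted_feats))
```

Correlations between embedding dimensions are ignored by this simplification, so a full implementation would need a matrix square root of the covariance product.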

For the self-correction operation, we compute the k-means clusters, with , once at the start of training. We then “correct” a synthesized digit by mapping it to the nearest cluster mean corresponding to its digit class. In Figure 7 we present the clusters, and we present graphs of our FID scores across augmentation percentages in Figure 8.
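A minimal sketch of this nearest-cluster-mean correction is given below. It assumes the cluster means have already been computed and are stored per digit class; the dictionary layout, the function names, and the two-pixel toy "images" are hypothetical and only serve to show the mapping.

```python
def squared_distance(x, c):
    return sum((a - b) ** 2 for a, b in zip(x, c))


def correct(sample, label, centroids_by_label):
    """Snap a synthesized sample to the nearest cluster mean of its own class."""
    return min(centroids_by_label[label], key=lambda c: squared_distance(sample, c))


# Hypothetical two-pixel "images": two precomputed cluster means per digit class.
centroids_by_label = {
    0: [(0.0, 0.0), (0.0, 1.0)],
    1: [(1.0, 0.0), (1.0, 1.0)],
}
print(correct((0.1, 0.8), 0, centroids_by_label))  # prints: (0.0, 1.0)
print(correct((0.1, 0.8), 1, centroids_by_label))  # prints: (1.0, 1.0)
```

Because the class label restricts the candidate means, the same synthesized sample can be corrected to different targets depending on the digit it was conditioned on.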

Appendix E Additional Human Motion Generation Qualitative Results

In Figures 9, 10, and 11, we present additional qualitative observations and analysis of our synthesized motions. We present more evidence that iterative fine-tuning with self-correction yields physically plausible motions comparable to the baseline, whereas iterative fine-tuning without self-correction yields motions that are incorrect for various reasons. See the captions of the referenced figures for analysis of some characteristic failure modes of the iterative fine-tuning loop without self-correction.

A technical note: for all figures, we render the motions from the same environment and camera position. We consolidate each render into the same image without resizing it. This means that if a figure appears larger relative to the others, the human moved closer to the camera. Some motions will have transparent frames of past positions; the more transparent the image, the farther back in the past it was in the motion sequence. Finally, in each figure, the text prompt for all generated motions was the same: the one associated with the ground truth motion in the HumanML3D (Guo et al., 2022) training data, which we also visualize. Note that the coloring in the humanoid figures corresponds to the coloring in the graphs.

Appendix F Additional Human Motion Generation Quantitative Results

See Figures 12, 13, 14 for results when the dataset size is and the synthetic augmentation percentage is . And see Figures 15 and 16 for additional results on our iterative fine-tuning experiments when the dataset size is and the synthetic augmentation percentage is . The graphs provide evidence across experiment settings that our iterative fine-tuning procedure with self-correction yields better training performance than iterative fine-tuning with no self-correction for the motion synthesis task, in accordance with Theorem 4.3.

Appendix G Consistency Across Seeds: Additional Human Motion Generation Quantitative Results

In Figures 17, 18, 19, and 20, we present experimental results from runs across three more seeds for our human motion experiments when the dataset size is . We find that the self-correction technique consistently yields improved training dynamics over iterative fine-tuning without correction.