Self-Attention Empowered Graph Convolutional Network for Structure Learning and Node Embedding

Abstract

In representation learning on graph-structured data, many popular graph neural networks (GNNs) fail to capture long-range dependencies, leading to performance degradation. Furthermore, this weakness is magnified when the concerned graph is characterized by heterophily (low homophily). To solve this issue, this paper proposes a novel graph learning framework called the graph convolutional network with self-attention (GCN-SA). The proposed scheme exhibits an exceptional generalization capability in node-level representation learning. The proposed GCN-SA contains two enhancements corresponding to edges and node features. For edges, we utilize a self-attention mechanism to design a stable and effective graph-structure-learning module that can capture the internal correlation between any pair of nodes. This graph-structure-learning module can identify reliable neighbors for each node from the entire graph. Regarding the node features, we modify the transformer block to make it more applicable to enable GCN to fuse valuable information from the entire graph. These two enhancements work in distinct ways to help our GCN-SA capture long-range dependencies, enabling it to perform representation learning on graphs with varying levels of homophily. The experimental results on benchmark datasets demonstrate the effectiveness of the proposed GCN-SA. Compared to other outstanding GNN counterparts, the proposed GCN-SA is competitive. The source code is available at https://github.com/mengyingjiang/GCN-SA.

keywords:

Representation learning; Heterophily; Structure learning; Graph neural networks1 Introduction

Graph neural networks (GNNs) are capable of graph representation learning with many applications ranging from the Internet of things to knowledge graphs [1]. Many GNNs have been developed in recent years, including graph attention network (GAT) [2], graph convolutional network (GCN) [3], graph isomorphism network (GIN) [4], and GraphSAGE [5]. For GNNs, each node iteratively updates and improves its feature representation by aggregating the ones of its neighbors and the node itself [6]. Typically, neighbors are defined as the set of one-hop neighbors in a graph [7]. A variety of aggregation functions have been adapted to GNNs, such as mean, weighted sum, and maximum. [8].

In recent years, convolutional neural networks (CNNs) have grown substantially and have achieved significant success in a wide range of tasks [9]. GCNs are generalizations of classical CNNs that handle graph data, such as point clouds, molecular data, and social networks [10]. As the most attractive GNNs, GCNs have been widely used in various applications [11]. Nevertheless, GCNs may not capture long-range dependencies in graphs because GCNs update feature representations by simply summing the normalized feature representations from all adjacent nodes [12]. This fundamental limitation significantly limits the ability of GCNs to represent graph-structured data [13]. Furthermore, this weakness is magnified in graphs with low or medium levels of homophily [14].

Homophily is an essential characteristic of graph-structured data [15], in which connected nodes often share similar characteristics and possess the same labels [16]. For instance, friends tend to have similar interests or ages, and a scientific paper and its citations are usually from the same research area [17]. However, in the real world, there are also settings about “opposites attract,” leading to graphs with heterophily (or low/medium level of homophily) [18]. In such cases, the proximal nodes often belong to different classes and exhibit dissimilar features [19]. For example, in terms of device type, there are many classes such as Smart Fitness, Car, Smartphone, and the social Internet-of-Things (SIOT) system [20]. Devices (nodes) often interact with different types of devices in SIOT graphs. Most existing GNNs assume graphs are under high-level homophily, including GAT, GCN [21]. Consequently, many GNNs perform poorly in generalizing graphs with low/medium homophily, even worse than the multi-layer perception (MLP) [22], which relies solely on node features [23].

Recently, researchers have developed new approaches to solve this problem [24], such as geometric GCN (Geom-GCN) [25], heterophily and homophily GCN (H2GCN) [21], and iterative deep graph learning (IDGL) [26]. Geom-GCN proposes a geometric aggregation scheme to learn structural information, which enables GCNs to achieve an enhanced learning performance [25]. However, Geom-GCN only focuses on local neighborhood structures and overlooks cases in which neighborhood nodes may not provide valuable information in graphs with low/medium homophily. Thus, the classification performance of Geom-GCN is typically unsatisfactory when the graphs are heterophilic. H2GCN improves the classification performance of GCN via separating ego and neighbor-embeddings [21]. However, each node only aggregates feature representations from adjacent nodes and itself, leading H2GCN cannot capture long-range dependencies. IDGL [26] achieved a good classification performance by jointly and iteratively learning node embeddings and a new graph structure, whereas the iterative update of the optimized graph structure was time-consuming. In addition, IDGL includes many hyper-parameters that need to be tuned in advance, and the values of the hyper-parameters vary significantly between different graph datasets, which limits the generalization ability of IDGL.

This study proposes a novel graph learning framework called a graph convolutional network with self-attention (GCN-SA) to address the abovementioned issues. The proposed GCN-SA introduces two key improvements, one pertaining to edges and the other to nodes. From the perspective of edges, we generate an optimized re-connected adjacency matrix by jointly learning the graph structure and node embeddings. Inspired by the ability of the self-attention mechanism to reduce dependence on external information and capture the internal correlation of nodes [27], we employ the multi-head self-attention (MHSA) mechanism to learn a new adjacency matrix. Multi-head attention helps to stabilize the learning process of self-attention [28]. Specifically, we use the MHSA mechanism to calculate the attention scores between nodes. Then, we jointly apply the k-nearest neighbors (KNN) and minimum-threshold methods to select the nodes with the highest attention scores as the new adjacent nodes of the target node. Subsequently, the new graph structure and node embeddings are jointly optimized for the downstream prediction task. In summary, the structure-learning module can identify reliable neighbors for each node from the entire graph to help the model capture long-range dependencies through an MHSA mechanism, an appropriate screening mechanism, and the joint optimization of the node embeddings and graph structure. Although IDGL [26] can also learn an optimized graph structure through structural learning, the optimized adjacency matrix in IDGL is an adjustment of the original graph structure. The re-connected graph structure in our GCN-SA is related to the internal correlation of the nodes. Moreover, the re-connected graph structure does not replace the original one but instead works together with the original one to perform the downstream task. For the proposed GCN-SA, cooperation enables both the re-connected and original graph structures to play their respective strengths and adapt graphs with various homophily levels. From the perspective of node features, we modify the transformer block to make it more applicable to GCN. We then use the modified transformer block to perform node feature fusion and embedding fusion to integrate valuable information from the entire graph. Each transformer block with a self-attention mechanism includes two sub-layers: an MHSA mechanism and a fully connected feed-forward network. In addition, each sub-layer is followed by a residual connection and layer normalization. Nevertheless, GCN is sensitive to over-fitting. Therefore, we modify the transformer block to fuse valuable information while avoiding over-fitting. Specifically, we retain the MHSA mechanism to preserve the ability to capture the internal correlation of nodes. Subsequently, we apply two dropouts and two residual connections after applying the MHSA mechanism. The modified transformer block is utilized for node feature fusion. The fusional feature vectors are then combined with the original feature vectors to form node ego-embeddings. Afterward, we apply the original and re-connected adjacency matrices to perform feature aggregation on the ego-embeddings, generating neighbor-embeddings and reconnected-neighbor-embeddings, respectively. Next, we concatenate ego-embeddings, neighbor-embeddings, and reconnected-neighbor-embeddings to form representative node embeddings. Subsequently, We reuse the modified transformer block to fuse valuable node embeddings from the entire graph. Finally, the fusional result is set as the final node representation for node classification.

Compared with other GNNs, the contributions of the proposed GCN-SA can be summarized as follows:

1) The proposed GCN-SA introduces a new learning framework to build the reconnected adjacency matrix using an MHSA mechanism.

2) Our modified transformer block enables the GCN to integrate valuable information from an entire graph effectively.

3) We concatenate the ego-embeddings, neighbor-embeddings, and reconnected-neighbor-embeddings so that they could play to their respective strengths to adapt to graphs with various homophily levels.

4) The proposed GCN-SA is the first to improve the GNN from both edges and node features by utilizing a self-attention mechanism. The self-attention mechanism can construct a fully connected graph, allowing the model to capture the internal associations between any pair of nodes. This capability enables our GCN-SA to capture long-range dependencies effectively.

| Notations | Descriptions |

|---|---|

| A graph. | |

| Hadamard product. | |

| Concatenation. | |

| The set of edges in a graph. | |

| A node . | |

| The number of nodes, . | |

| The number of node labels. | |

| The number of attention heads. | |

| The dimension of a node feature vector. | |

| The set of neighbors for node . | |

| The adjacency matrix of the graph. | |

| The degree matrix of , . | |

| The normalized with self-loop. | |

| The re-connected adjacency matrix. | |

| The normalized | |

| The attention score matrix of a graph. | |

| The node feature matrix. | |

| The feature vector of the node . | |

| The ego-embeddings of nodes. | |

| The reconnected-neighbor-embeddings of nodes. | |

| The neighbor-embeddings of nodes. | |

| The general embeddings of nodes. | |

| The probability distributions of nodes. | |

| , , | Learnable model parameters |

| , , |

2 Preliminaries

This study proposes a novel GNN model related to GCN and transformer [27]. The minimal set of definitions is presented in Subsection 2.1. The theories about the GCN and transformer are reviewed in Subsection 2.2 and 2.3, respectively.

2.1 Definition

Throughout this paper, lowercase characters represent scalars, and bold lowercase characters and bold uppercase characters represent vectors and matrices, respectively. Unless otherwise specified, the notations employed in this study are listed in Table 1.

Let be an undirected graph, where and represent the sets of nodes and edges, respectively. is the number of nodes and denotes -th node. represents the edge between and . represents the set of one-hop neighbors for node . is the adjacency matrix. if and if . Let be the feature matrix for nodes, where denotes the feature vector of node . Each node is associated with a label. Given a multilayered network and the semantic labels for a subset of nodes , where means one of the predefined classes. GNNs can learn the feature representations of nodes and graphs by using the graph structure and node features. Then the task of node classification is to predict the label for each node without label according to the feature representations of the corresponding node [29].

2.2 GCN

Following the work in [3], GCN updates node features by adopting an isotropic averaging operation over the feature representations of one-hop neighbors. Let be the feature representation of node in the -th GCN layer, then a single message passing step in the GCN model takes the following form:

| (1) |

where is a learnable weight matrix for the -th GCN layer, represents the set of one-hop neighbors of node . Note that and denote the in-degrees of nodes and , respectively. is the nonlinear activation function. Along this line, the forward model of a 2-layer GCN [3] can be represented as:

| (2) |

where represents the adjacency matrix, and is the feature matrix of nodes and is also the input of the first GCN layer. is the number of nodes, and is the feature dimension of nodes. represents the normalized , where represents with self-loop, and is an identity matrix. is the degree matrix of . Each element is defined as:

| (3) |

2.3 Transformer Block

The SA mechanism can learn the weight distributions of different features [30]. In other words, the SA mechanism can help the network to recognize features that are important for prediction. A transformer block consists of two sub-layers: an MHSA mechanism is the first sub-layer, and a simple fully connected feed-forward network is the second sub-layer [27]. The MHSA mechanism is calculated as follows:

| (4) |

| (5) |

| (6) |

| (7) |

| (8) |

where is the input. , , and respectively correspond to the Query, Key, and Value of the -th head (). Moreover, a residual connection [31] and layer normalization [32] follow each sub-layer. Thus, the input of the second sub-layer is as follows:

| (9) |

The second sub-layer is a fully connected feed-forward network. Thus, the output of the transformer block is calculated as follows:

| (10) |

3 GCN-SA

This section presents a novel GNN, the graph convolutional network with self-attention (GCN-SA), for node classification of graph-structured data. Figure 1 shows the pipeline of our GCN-SA. The remainder of this Section is organized as follows. Subsection 3.1 gives the construction details of the re-connected adjacency matrix. Subsection 3.2 describes the modified transformer block and the generation of fusional feature vectors. Subsection 3.3 elaborates on the proposed GCN-SA.

3.1 Re-connected Graph

Most existing GNNs are designed for graphs with high homophily, where the connected nodes usually possess similar feature representations and have the same label, for example, citation and community networks [33]. However, there are many graphs with low or medium homophily in the real world, where the connected nodes often have distinct features and possess different labels, such as webpage linking networks [34]. In summary, in practical applications, GNNs designed under the assumption of high homophily are inappropriate for graphs with low or medium homophily.

To alleviate this problem, we build a re-connected adjacency matrix via structure learning to provide each node with reliable neighbor information. Specifically, we use the MHSA mechanism to compute the attention scores between nodes to explore their internal correlation. Considering our primary focus on undirected graphs, generating undirected reconstructed graphs aligns more closely with our practical requirements. Therefore, the MHSA mechanism is modified as follows:

| (11) |

| (12) |

| (13) |

where represents the node feature matrix. denotes the learnable weighted matrix for the -th head (). Eq. 12 calculates the cosine scores between each pair of row vectors in matrix , resulting in the cosine score matrix, . In this manner, we can obtain a symmetric attention score matrix , where the element ranges between . The MHSA mechanism can construct multiple fully connected graphs, enabling each node to connect to all the other nodes. This capability allows each node to aggregate features from any other node, but it may result in excessive computational burden and introduce noise. However, the re-connected adjacency matrix is expected to be sparse, connected, and non-negative [26]. To address this issue, we combine the KNN and minimum-threshold methods to select the most reliable neighbors for each node. Specifically, we first define a positive integer and a non-negative threshold . Next, we retain attention scores that are either greater than or belong to the set of largest elements in the corresponding row while setting the remaining attention scores to zero. The new adjacency matrix is represented as:

| (14) |

where denotes the the set of largest elements in the - row of . To guarantee the symmetry of , we impose the following constraint on :

| (15) |

In this study, the re-connected graph and node embeddings are optimized simultaneously and benefit each other. In this manner, we can obtain a sparse, nonnegative, connected, and reliable re-connected adjacency matrix . Figure 2 shows the construction process of the re-connected adjacency matrix. In summary, the MHSA mechanism allows nodes to interact with any other node, and appropriate screening mechanisms help the nodes identify the most relevant new neighbors.

.

3.2 Modified Transformer Block

Transformer blocks with self-attention mechanisms can help the model recognize important features from the entire graph and pay more attention to these features [27]. Thus, the transformer block is an appropriate method to help the model capture long-range dependencies. However, using a transformer block directly to help GCN perform feature fusion can result in over-fitting. Therefore, we modify the transformer block to make it more applicable to GCN. A modified transformer block is shown in Figure 1. The MHSA mechanism of the modified transformer block is represented as:

| (16) |

| (17) |

| (18) |

| (19) |

| (20) |

| (21) |

where , , , and are learnable weighted matrix. , and are the (Query), (Key) and (Value) matrices derived from the linear transformation of , respectively. Compared with in Eq. 4, in Eq. 16 omit a fully connected layer for reducing model complexity and minimizing information loss. Then, We apply a dropout to and .

In the original transformer block [27], the MHSA mechanism is followed by a residual connection. The residual connection can overcome the problem of gradient disappearance, enabling the development of deeper models. Thus, we also apply the residual connection to . The output of the modified transformer block, fusional feature vectors, is calculated as follows:

| (22) |

where the purpose of using dropout is to alleviate the over-fitting problem. For convenience, we represent the output of the modified transformer block as follows:

| (23) |

3.3 GCN-SA

The fusional feature vectors and original feature vectors are concatenated as the ego-embeddings for nodes:

| (24) |

where represents the concatenation function. Afterward, we employ the generated from Subsection 3.1 to perform feature aggregation as follows:

| (25) |

where is the normalized , and is the degree matrix of . We call the results of feature aggregation, , the reconnected-neighbor-embeddings. Similarly, we utilize the original adjacency matrix to perform feature aggregation as follows:

| (26) |

where , represents the times of the feature aggregation. . represents the normalized with self-loop. Herein, is the degree matrix of with self-loop, and is a identity matrix. and are defined as the neighbor-embeddings of nodes. Subsequently, the ego-embeddings, neighbor-embeddings, and re-connected neighbor-embeddings are concatenated as the general embeddings of nodes. The formula is written as:

| (27) |

Then we apply a dropout to . For graphs under homophily, and are sufficient for downstream task. This can be proved by GCN [3] and GAT [2]. In addition, can be treated as the supplement to and . For the graphs with low/medium homophily, and can also perform well. Moreover, the approach of neighbor-embedding updating allows GCN-SA to capture higher-order neighbor information flexibly.

Afterward, a learnable weight vector is generated, and the dimension of is the same as the output dimensions of . Then we take the Hadamard product between and to highlight the important dimension of :

| (28) |

Subsequently, nodes are classified in the following way:

| (29) |

where is a trainable weighted matrix, and the output dimension of is the same as the number of classes. The modified transformer block performing node embedding fusion can help GCN-SA fuse valuable information from different nodes to improve classification results. Then, we calculate the cross-entropy error over all labeled nodes:

| (30) |

where is the set of node indices that have labels.

We employ an MHSA mechanism to capture the internal correlation between nodes and construct a fully connected re-connected adjacency matrix. Afterward, We obtain a connected and sparse re-connected adjacency matrix by masking the unnecessary edges. Subsequently, we obtain a reliable, connected, and sparse re-connected adjacency matrix through joint learning of the re-connected graph and node embeddings. Moreover, we propose a modified transformer block and use it to perform feature vector fusion. Next, we combine the fusional feature vector with the node feature vectors to train the model and reuse the modified transformer block to perform embedding fusion to help GCN-SA select valuable features from different nodes. Our GCN-SA model is trained using diverse information from different views to learn representative node embeddings and accurately perform downstream tasks. These steps can help GCN-SA capture long-range dependencies, allowing it to adapt to graphs with varying levels of homophily. The pseudo-code for the proposed GCN-SA is provided in Algorithm 1.

4 Experimental Results

In the experimental section, to demonstrate the merits of our GCN-SA, we compare it with several outstanding GNNs on node classification tasks. The experiments are conducted on eight benchmark datasets with different homophily levels. Subsection 4.1 describes the graph datasets considered in this study. Subsection 4.2 discusses the eight baseline techniques used in this study. Subsection 4.3 illustrates the experimental setup. Subsection 4.4 evaluates the effectiveness of the reconnected adjacency matrix. Subsection 4.5 investigates the impact of the modified transformer block on the model. Subsection 4.6 analyzes the time complexity of our GCN-SA. Subsection 4.7 estimates the classification performance of our GCN-SA and other competitors in the literature.

4.1 Datasets

Eight open graph datasets are adopted to validate the proposed GCN-SA in the simulation, including three citation networks, two Wikipedia networks, and three WebKB networks.

The three standard citation network benchmark datasets include the Cora, Citeseer, and Pubmed datasets [35]. In the three citation networks, nodes correspond to papers, and node features are defined as the bag-of-words representation of the paper. Edges correspond to citations between papers. Each node has a unique label, defined as the corresponding paper’s academic topic.

Wikipedia networks are page-page networks on specific topics in Wikipedia, e.g., Chameleon and Squirrel datasets. In Wikipedia networks, nodes correspond to Wikipedia pages, and node features are represented as informative nouns on Wikipedia pages. Edges correspond to the reciprocal links between Wikipedia pages. Nodes are classified into five categories according to the amount of their average traffic.

The sub-networks of the WebKB networks include the Cornell, Texas, and Wisconsin datasets. These three datasets were collected from the computer science departments [25]. In WebKB networks, nodes correspond to web pages, and the bag-of-words representation of web pages serves as the node features. Edges represent hyperlinks between web pages. These web pages are divided into five classes.

| Dataset | Cora | Citese. | Pubmed | Chamele. | Squirr. | Cornell | Texas | Wiscon. |

|---|---|---|---|---|---|---|---|---|

| Hom.ratio | 0.81 | 0.74 | 0.8 | 0.23 | 0.22 | 0.3 | 0.11 | 0.21 |

| # Nodes | 2708 | 3327 | 19717 | 2277 | 5201 | 183 | 183 | 251 |

| # Edges | 5429 | 4732 | 44338 | 31421 | 198493 | 295 | 309 | 499 |

| # Features | 1433 | 3703 | 500 | 2325 | 2089 | 1703 | 1703 | 1703 |

| # Classes | 7 | 6 | 3 | 5 | 5 | 5 | 5 | 5 |

Unless particularly specified, nodes per class are randomly split into , , and for training, validation, and testing by default across all datasets. Testing is performed when validation losses reach a minimum. A summary of the information for all datasets is presented in Table 2.

The homophily of graph-structured data is a crucial characteristic and plays a significant role in analyzing and utilizing them. As in [21], we also utilize the homophily ratio to measure the homophily level of a graph, , where represents the set of edges, and represent the labels of node and , respectively. is the proportion of edges in a graph that connect nodes with the same label (i.e., intra-class edges). This definition is proposed in [21]. Graphs have strong homophily if is high () and weak homophily if is low (). Table 2 lists the homophily ratio for each graph. From the values of the adopted graphs, we can observe that all citation networks display a high level of homophily, while the WebKB networks and Wikipedia networks exhibit low homophily.

4.2 Baselines

We compare the proposed GCN-SA with several baselines:

MLP [22] is a simple deep neural network. MLP makes predictions based on the features of nodes without considering any local or non-local information.

GAT [2] enables specifying different weights to different neighbors by employing the attention mechanism.

GCN [3] is one of the most common GNNs. The GCN is introduced in Subsection 2.2. GCN makes predictions by aggregating local information.

MixHop [36] repeatedly aggregates the feature representations of neighbors with various distances to learn robust node embeddings.

Geom-GCN [25] captures geometric information of graphs to enhance GCN’s ability on representation learning. Three embedding methods, struc2vec, Isomap, and Poincare embedding, are employed in Geom-GCN, corresponding to three variants: Geom-GCN-S, Geom-GCN-I, and Geom-GCN-P. Nevertheless, we only report the best results of three Geom-GCN variants.

IDGL [26] jointly and iteratively learning the node embeddings and the graph structure. In addition, IDGL dynamically stops when the learned graph is close enough to the optimized graph for the downstream prediction task.

H2GCN [21] identifies a set of significant designs, including ego and neighbor embedding separation, higher-order neighborhoods. By colligating these designs, H2GCN adapts to both heterophily and homophily. We consider two variants: H2GCN-1 and H2GCN-2 according to the distance of neighbor nodes for each aggregation.

CPGNN [37] incorporates an interpretable compatibility matrix for modeling the homophily level in the graph, which enables it to go beyond the assumption of strong homophily. CPGNN contains four variants: CPGNN-MLP-1, CPGNN-MLP-2, CPGNN-Cheby-1 and CPGNN-Cheby-2. According to the analysis in [37], CPGNN-Cheby-1 performs the best overall. Therefore, we consider the CPGNN-Cheby-1.

4.3 Experimental Setup

For comparison, we use eight state-of-the-art node classification algorithms, including MLP, GAT, GCN, MixHop, GEOM-GCN, IDGL, H2GCN and CPGNN. In the above eight network models, all hyper-parameters are set according to [21], IDGL to [26], and CPGNN to [37]. Our GCN-SA includes many hyper-parameters, some of which are fixed, while others need to be searched on the validation set. The random seed is set as for all experiments. The number of attention heads is in the re-connected graph learning, and is in feature vector and embedding fusion. The numbers of hidden features are set as . The descriptions of the hyperparameters need to be searched, and the corresponding ranges of values tried are provided in Table 8. Table 9 summarizes the values of all hyper-parameters and the corresponding classification accuracies on different datasets.

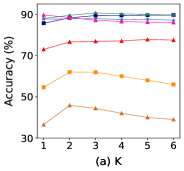

Among all the hyper-parameters, , , and play a particularly crucial role in our GCN-SA. determines the number of times feature aggregation is performed on the original graph structure, while and dictate the sparsity and connectivity of the reconnected graph. Therefore, we investigate the impact of these three hyper-parameters on the classification accuracies of our GCN-SA on seven datasets. Figure 3 shows the classification accuracies versus the , , and on different datasets. Since the behavior of GCN-SA in the Texas and Wisconsin datasets is similar, we omit the Wisconsin dataset for brevity. Referring to Figure 3 (b), it can be observed that as increases, GCN-SA behaves differently across various graphs. This phenomenon is mainly because different datasets have different levels of homophily. As for Figure 3 (c) and (d), the classification accuracies of our GCN-SA remain relatively stable with increasing values of and . This consistency indicates that our graph-structure-learning method is effective and robust. The optimal values of , , and for different datasets are recorded in the Table 9.

ReLU is utilized as the nonlinear activation function. The training nodes are utilized for training our GCN-SA by minimizing cross-entropy loss. Adam optimizer [38] is employed in our GCN-SA. The proposed GCN-SA is implemented with the deep learning library PyTorch. The Python and PyTorch versions are 3.8.10 and 1.9.0, respectively. All experiments are conducted on a Linux server with a GPU (NVIDIA GeForce RTX 2080 Ti). The experimental results are the mean and standard deviation of ten runs for all the experiments.

4.4 Effectiveness Verification for Re-connected Adjacency Matrix

In this section, we investigate the impact of our GCN-SA on the reliability of re-connected graph structures. We employ the homophily ratios, denoted as , to evaluate the reliability of the reconnected graph structures. Higher values of correspond to greater reliability, while lower values indicate less reliability. Figure 4 displays the values for the original, initialized, and two reconnected graphs across eight datasets. The initialized graph is constructed by GCN-SA without optimization. In contrast, the two reconnected graphs are learned and optimized by NLGNN and GCN-SA methods, respectively. As seen from Figure 4, the values of the re-connected graphs are significantly higher than those of the original and initialized graphs for all datasets, particularly in the case of Wikipedia and WebKB networks. This is mainly because the re-connected graphs can be optimized toward the downstream prediction task in both NLGNN and GCN-SA methods. Additionally, we observe that the values of the re-connected graphs learned by our GCN-SA are always higher than those of the NLGNN. This difference is due to the limited power of the inner product-based similarity metric function employed by NLGNN in representing the intrinsic correlation between nodes. In comparison, our GCN-SA is more effective at capturing the internal correlation of nodes.

| Dataset | Cora | Cite. | Pubm. | Cham. | Squi. | Corn. | Texa. | Wisc. | ||

|---|---|---|---|---|---|---|---|---|---|---|

| – | – | 75.71.8 | 72.82.1 | 86.80.8 | 49.83.2 | 34.32.0 | 84.76.3 | 85.05.5 | 87.44.5 | |

| GCN-SA | ✓ | – | 89.40.5 | 77.00.8 | 88.51.2 | 61.13.0 | 44.12.5 | 85.84.0 | 86.13.8 | 87.24.5 |

| ✓ | ✓ | 90.50.4 | 79.10.6 | 90.90.6 | 64.11.8 | 46.51.6 | 90.84.5 | 89.72.6 | 91.52.5 |

We employ the Gephi tool to visualize the original graph and the re-connected graph optimized by our GCN-SA, using the Wisconsin network as an example. Figure 5 presents the visualization of these two graphs, allowing for an intuitive examination of the structural changes brought about by our GCN-SA. We then select a specific node and highlight its neighborhood in both the original graph (Figure 5(b)) and the re-connected graph (Figure 5(d)). As depicted in Figure 5, the original graph appears chaotic, with numerous inter-class connections. However, after applying our GCN-SA, the structure of the reconnected graph becomes considerably more organized, with the fraction of intra-class edges reaching 90%. This improvement in accuracy performance demonstrates that our graph-structure-learning module can substantially enhance the graph structure.

| Dataset | Fusion_1 | Fusion_2 | Cora | Cite. | Pubm. | Cham. | Squi. | Corn. | Texa. | Wisc. | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| GCN-SA | – | – | 89.00.7 | 76.91.1 | 89.71.3 | 61.43.5 | 42.53.0 | 86.15.0 | 86.54.5 | 87.14.8 | |

| ✓ | – | 89.40.6 | 77.51.0 | 90.11.2 | 62.63.0 | 43.62.8 | 87.46.2 | 86.15.3 | 88.24.8 | ||

| – | ✓ | 89.70.4 | 77.21.0 | 90.21.1 | 61.53.5 | 43.52.7 | 86.85.3 | 89.04.9 | 89.25 | ||

| ✓ | ✓ | 90.50.4 | 79.10.6 | 90.90.6 | 64.11.8 | 46.51.6 | 90.84.5 | 89.72.6 | 91.52.5 |

| Method | Cora | Cites. | Pubmed | Chame. | Squir. | Corne. | Texas | Wiscon. |

|---|---|---|---|---|---|---|---|---|

| GCN-SA-W/O | 89.00.7 | 76.91.1 | 89.71.3 | 61.43.5 | 42.53.0 | 86.15.0 | 86.54.5 | 87.14.8 |

| GCN-SA-TF | 87.41.1 | 75.41.2 | 87.2 1.5 | 58.55.0 | 41.22.5 | 83.96.8 | 84.56.4 | 85.25.8 |

| GCN-SA | 90.50.4 | 79.10.6 | 90.90.6 | 64.11.8 | 46.51.6 | 90.84.5 | 89.72.6 | 91.52.5 |

Afterward, we investigate the impact of the on the accuracy of our GCN-SA using an ablation study. Table 3 presents the accuracies of our GCN-SA across all adopted graphs. It can be observed that significantly enhances the classification accuracies of our GCN-SA in citation networks and Wikipedia networks. This improvement is due to the , which can provide valuable neighbors for aggregation. Additionally, also promotes the performance of our GCN-SA, particularly in WebKB networks. This is owing to being more reliable than in WebKB networks. Our GCN-SA, when combined with both and , performs well in all networks. Based on this observation, can be treated as a qualified adjacency matrix for providing each node with reliable neighbors, thereby enhancing the ability of GCN-SA to capture long-range dependencies.

4.5 Effect of the Modified Transformer Block on Accuracy

In this section, we examine the impact of the modified transformer block on the accuracies of GCN-SA. The modified transformer block operates at two different points within GCN-SA: feature vector fusion (Fusion_1) and node embedding fusion (Fusion_2). We employ an ablation study to assess the effects of Fusion_1 and Fusion_2 on the node classification accuracy of GCN-SA. Table 4 displays the classification accuracies of GCN-SA across all datasets. As observed, Fusion_1 enhances the performance of GCN-SA. For example, when Fusion_1 is applied to GCN-SA in the Chameleon dataset, the performance improves by 1.2%. Similarly, Fusion_2 also contributes to the improvement of GCN-SA’s accuracies. In the Texas dataset, GCN-SA achieves a 2.5% performance enhancement when Fusion_2 is incorporated. Ultimately, GCN-SA with both Fusion_1 and Fusion_2 achieves the best performance across all networks. Notably, in the Squirrel dataset, GCN-SA sees a 3.6% accuracy improvement when both Fusion_1 and Fusion_2 are applied. The experimental results demonstrate that both Fusion_1 and Fusion_2 can bolster GCN-SA’s ability to learn node embeddings effectively.

For further insight, we compare the impacts of the original transformer block and modified transformer block on the accuracies of GCN-SA. For this purpose, we introduce two variants of GCN-SA. The first variant, GCN-SA-W/O, that without feature vector and embedding fusion. The second variant, GCN-SA-TF, employs the original transformer block to perform feature vector and node embedding fusion. Table 5 displays the classification accuracies of GCN-SA-W/O, GCN-SA-TF, and GCN-SA across all datasets. As observed, GCN-SA-TF performs the worst, even underperforming GCN-SA-W/O. This might be due to the original transformer block making GCN suffer from over-fitting. In contrast, GCN-SA achieves the best classification performance, demonstrating that the modified transformer block is more effective in enhancing GCN compared to the original transformer block.

| Method | Cora | Cites. | Pubmed | Chame. | Squir. | Corne. | Texas | Wiscon. |

|---|---|---|---|---|---|---|---|---|

| MLP | 74.82.2 | 72.42.2 | 86.70.4 | 46.42.5 | 29.71.8 | 81.16.4 | 81.94.8 | 85.33.6 |

| GAT | 87.71.9 | 75.51.7 | 86.71.7 | 54.71.9 | 30.62.1 | 58.93.3 | 58.44.5 | 55.38.7 |

| GCN | 87.31.3 | 76.71.6 | 87.40.7 | 59.82.6 | 36.91.4 | 57.04.7 | 59.55.3 | 59.86.9 |

| MixHop | 87.60.9 | 76.31.3 | 85.30.6 | 60.52.5 | 43.81.5 | 73.56.3 | 77.85.7 | 75.94.9 |

| Geom-GCN | 84.91.2 | 77.01.4 | 88.90.9 | 60.92.4 | 36.11.3 | 60.86.1 | 67.65.8 | 64.17.3 |

| IDGL | 88.70.8 | 76.71.2 | 89.40.4 | 60.51.9 | 42.62.9 | 84.54.4 | 84.94.1 | 87.25.5 |

| H2GCN-1 | 86.91.4 | 77.11.6 | 89.40.3 | 57.11.6 | 36.41.9 | 82.24.8 | 84.93.8 | 86.74.7 |

| H2GCN-2 | 87.81.4 | 76.91.8 | 89.60.3 | 59.42.0 | 37.92.1 | 82.25.0 | 82.24.3 | 85.94.2 |

| CPGNN | 87.00.8 | 76.41.2 | 89.60.2 | 61.03.1 | 42.53.1 | 74.14.9 | 75.93.5 | 80.65.2 |

| GCN-SA | 89.41.1 | 77.21.3 | 90.20.5 | 62.52.1 | bf45.11.0 | 88.46.7 | 89.53.5 | 89.64.0 |

| Method | Cora | Cites. | Pubmed | Chame. | Squir. | Corne. | Texas | Wiscon. |

|---|---|---|---|---|---|---|---|---|

| MLP | 76.31.8 | 71.82.0 | 87.30.3 | 50.72.1 | 31.11.6 | 84.55.2 | 82.15.5 | 86.64.3 |

| GAT | 87.81.7 | 76.21.5 | 87.71.5 | 60.61.7 | 36.62.0 | 61.33.0 | 63.74.4 | 58.37.8 |

| GCN | 88.21.1 | 77.61.4 | 87.10.8 | 61.32.5 | 43.72.2 | 60.85.2 | 61.15.7 | 62.36.4 |

| MixHop | 88.00.7 | 77.11.2 | 86.90.7 | 61.82.3 | 44.02.2 | 79.65.9 | 81.54.2 | 80.05.8 |

| Geom-GCN | 85.31.0 | 78.01.1 | 90.10.4 | 60.92.2 | 38.12.0 | 60.85.5 | 67.65.2 | 64.16.2 |

| IDGL | 89.10.8 | 76.80.9 | 89.90.5 | 61.91.4 | 42.83.0 | 86.54.2 | 85.94.0 | 89.16.1 |

| H2GCN-1 | 88.21.1 | 77.31.1 | 90.00.5 | 60.22.3 | 38.93.0 | 84.44.4 | 85.54.3 | 88.34.2 |

| H2GCN-2 | 88.91.0 | 77.61.5 | 90.20.5 | 60.81.8 | 40.21.9 | 84.04.0 | 85.53.8 | 87.74.3 |

| CPGNN | 88.00.7 | 77.01.0 | 88.20.3 | 62.52.8 | 45.32.8 | 76.55.6 | 82.14.2 | 82.34.8 |

| GCN-SA | 90.50.4 | 79.10.6 | 90.90.6 | 64.11.8 | 46.51.6 | 90.84.5 | 89.72.6 | 91.52.5 |

| Hyper-para. | Descriptions | Range of values tried |

|---|---|---|

| Learning rate. | {0.01, 0.02, …, 0.05} | |

| Dropout rate. | {0.1, 0.15, …, 0.9} | |

| Weight decay. | {5e-3, 5e-4, …, 5e-6} | |

| The number of nearest neighbors r in | {2,3, …, 7} | |

| KNN method. | ||

| Non-negative threshold in similarity | {0.75, 0.8, …, 0.95} | |

| learning. | ||

| The number of rounds in the original | {1, 2, …, 6} | |

| neighborhood aggregation stage. |

| Dataset | Accu. (%) | Hyper-parameters |

|---|---|---|

| Cora | 90.50.4 | :0.03, :5e-4, :0.55, :0.95, :6, :42, :16, :48, :5 |

| Citeseer | 79.10.6 | :0.03, :5e-4, :0.55, :0.95, :5, :42, :16, :48, :2 |

| Pubmed | 90.90.6 | :0.03, :5e-4, :0.55, :0.9, :3, :42, :16, :32, :3 |

| Chamele. | 64.11.8 | :0.05, :5e-4, :0.55, :0.85, :1, :42, :16, :32, :5 |

| Squirrel | 46.51.6 | :0.05, :5e-4, :0.55, :0.9, :2, :42, :16, :32, :6 |

| Cornell | 90.84.5 | :0.01, :5e-3, :0.2, :0.95, :3, :42, :16, :32, :3 |

| Texas | 89.72.6 | :0.01, :5e-3, :0.2, :0.8, :1, :42, :16, :48, :3 |

| Wisconsin | 91.52.5 | :0.01, :5e-3, :0.35, :0.8, :1, :42, :16, :32, :6 |

4.6 Analysis of Time Complexity

In this paper, denotes the number of nodes, while and represent the number of original and hidden features of nodes, respectively. is the class number of node labels. Given these definitions, the computational complexity of a 2-layer GCN, as shown in 2, is . Compared with the 2-layer GCN, our GCN-SA contains three additional SA mechanisms and one Hadamard product. The computational complexities of the first and the second SA mechanisms are the same and equal to . The computational complexity of the third SA mechanism is equal to . The Hadamard product’s computational complexity is . In summary, the computational complexity of our GCN-SA is .

In addition, we compare the real running time (500 epochs) of GCN, GAT, Geom-GCN, IDGL, CPGNN, and GCN-SA with the hyper-parameters described in Section 4.3. The Cornell, Texas, and Wisconsin networks possess a similar number of nodes, edges, and node feature dimensions. Thus, we choose only one dataset, Texas, from the three for the sake of clarity and conciseness. Figure 6 exhibits the running time of classification approaches on Texas, Citeseer, Cora, Pubmed, Chameleon, and Squirrel networks. As observed, GCN is the fastest. Due to the time-consuming iterative graph structure learning module, IDGL is the slowest. GAT and GCN-SA are at comparable levels.

4.7 Comparison Among Different GNNs

This experiment estimates the classification performance of the proposed GCN-SA and other outstanding approaches in the literature. In Table 6, the nodes of each class are randomly split into 48%, 32%, and 20% for training, validation, and testing. In Table 7, the proportions are 60%, 20%, and 20%. As seen from the Tables 6, some GNNs perform even worse than MLP on WebKB networks (low homophily), notably GAT, GCN, MixHop, and Geom-GCN, which have an accuracy of 58.9%, 57.0%, 73.5%, and 60.8% in Cornell, respectively. The proposed GCN-SA performs best and achieves 88.4% in Cornell. Meanwhile, on graphs with high homophily, our GCN-SA still has advantages. Compared with the H2GCN, IDGL, and CPGNN, GCN-SA has 1.6%, 0.7%, and 2.4% higher accuracies on the Cora dataset. As can be seen, the proposed GCN-SA possesses the best classification performance. In addition, similar observations can be made in Table 7.

5 Conclusion

In the real world, graphs exhibit varying levels of homophily. However, most existing GNNs are designed for graphs with high homophily, often underperforming for graphs under heterophily due to their strong homophily assumption. To bridge this gap, we introduce GCN-SA, a novel approach that enhances node embedding through the self-attention mechanism. During the implementation process of GCN-SA, we present a modified transformer block to incorporate GCN to capture local-to-global correlations. This integration is pivotal in allowing GCN-SA to handle graphs with varying homophily levels adeptly. Additionally, we develop a graph structure learning technique powered by self-attention, further refining the GCN-SA’s adaptability to diverse graphs. Moreover, GCN-SA uniquely concatenates multiple node-level embeddings, leveraging their respective strengths to optimize performance. The GCN-SA culminates in a versatile and universal node embedding solution in graphs with various homophily levels.

5.1 Strengths

Here are our work’s main Strengths:

-

Our GCN-SA is a stable and effective node embedding method that can be used for heterophilic and homophilic graphs.

-

The developed modified transformer block incorporates GCN, allowing GCN to fuse valuable features from the entire graph.

-

The proposed graph-structure-learning strategy is flexible, and others can incorporate it into any GNN model to learn new and reliable graph structures.

-

Our GCN-SA demonstrates superior accuracy in graphs under various levels of homophily. Others can employ GCN-SA to learn node embeddings for graphs with various homophily levels.

5.2 Limitations

Here are our work’s main limitations:

-

The advantages of our GCN-SA in homophilic graphs are not particularly significant.

-

The number of new neighbors may be unbalanced when the minimum threshold method is used to screen new neighbors.

-

Introducing multiple transformer blocks increases the computational complexity of the model.

5.3 Future work

We propose the following directions for future work:

-

Enhancing the competitiveness of GCN-SA, particularly for graphs with high-level homophily.

-

Improving the graph-structure-learning method to supply reliable and balanced new neighbors for each node.

-

Reducing the computational complexity of GCN-SA and addressing its scalability.

-

Extending GCN-SA to more challenging scenarios, such as large-scale, dynamic, or knowledge graphs.

6 Acknowledgments

This study was supported in part by Shaanxi Key Research and Development Program under Grant 2018ZDCXL-GY-04-03-02, in part by the Scientific Research Program of the Education Department of Shaanxi Province under Grant 21JK0762, part by the University-Industry Collaborative Education Program of the Ministry of Education of China under Grant 220802313200859, and part by the National Natural Science Foundation of China under Grant 42001319.

References

- [1] M. Korban, P. A. Youngs, S. T. Acton, TAA-GCN: A temporally aware adaptive graph convolutional network for age estimation, Pattern Recognit. 134 (2023) 109066.

- [2] P. Velickovic, G. Cucurull, A. Casanova, A. Romero, P. Liò, Y. Bengio, Graph attention networks, in: Proceedings of the 6th International Conference on Learning Representations, 2018.

- [3] T. N. Kipf, M. Welling, Semi-supervised classification with graph convolutional networks, in: Proceedings of the 5th International Conference on Learning Representations, 2017, pp. 1–14.

- [4] K. Xu, W. Hu, J. Leskovec, S. Jegelka, How powerful are graph neural networks, in: Proceedings of the 7th International Conference on Learning Representations, 2019, pp. 1–17.

- [5] W. L. Hamilton, Z. Ying, J. Leskovec, Inductive representation learning on large graphs, in: Proceedings of the Neural Information Processing Systems, 2017, pp. 1024–1034.

- [6] M. Defferrard, X. Bresson, P. V. der Gheynst, Convolutional neural networks on graphs with fast localized spectral filtering, in: Proceedings of the Neural Information Processing Systems, 2016, pp. 3844–3852.

- [7] X. Shen, Y.-S. Ong, Z. Mao, S. Pan, W. Liu, Y. Zheng, Compact network embedding for fast node classification, Pattern Recognit. 136 (2023) 109236.

- [8] G. Corso, L. Cavalleri, D. Beaini, et al, Principal neighborhood aggregation for graph nets, in: Proceedings of the Neural Information Processing Systems, 2020.

- [9] C. Li, Z. Wang, H. Qi, An efficient pipeline for pruning convolutional neural networks, in: Proceedings of the 19th IEEE International Conference on Machine Learning and Applications, 2020.

- [10] J. Chen, T. Ma, C. Xiao, Fastgcn: Fast learning with graph convolutional networks via importance sampling, in: Proceedings of the 6th International Conference on Learning Representations, 2018, pp. 1–15.

- [11] W. L. Hamilton, R. Ying, J. Leskovec, Representation learning on graphs: Methods and applications, in: Proceedings of the Neural Information Processing Systems, 2017, pp. 1024–1034.

- [12] B. Lei, Y. Zhu, S. Yu, H. Hu, Y. Xu, G. Yue, T. Wang, C. Zhao, S. Chen, P. Yang, X. Song, X. Xiao, S. Wang, Multi-scale enhanced graph convolutional network for mild cognitive impairment detection, Pattern Recognit. 134 (2023) 109106.

- [13] M. Liu, Z. Wang, S. Ji, Non-local graph neural networks, arXiv preprint arXiv:2005.14612 (2020).

- [14] X. Fan, M. Gong, Y. Wu, Markov clustering regularized multi-hop graph neural network, Pattern Recognit. (2023) 109518.

- [15] K. Z. Khanam, G. Srivastava, V. Mago, The homophily principle in social network analysis: A survey, Multim. Tools Appl. 82 (6) (2023) 8811–8854.

- [16] W. Li, C. Wang, H. Xiong, J. Lai, HomoGCL: Rethinking homophily in graph contrastive learning, in: A. K. Singh, Y. Sun, L. Akoglu, D. Gunopulos, X. Yan, R. Kumar, F. Ozcan, J. Ye (Eds.), Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, KDD, 2023, pp. 1341–1352.

- [17] C. Wang, S. Pan, C. P. Yu, R. Hu, G. Long, C. Zhang, Deep neighbor-aware embedding for node clustering in attributed graphs, Pattern Recognit. 122 (2022) 108230.

- [18] K. Xu, C. Li, Y. Tian, T. Sonobe, K. Kawarabayashi, S. Jegelka, Representation learning on graphs with jumping knowledge networks, in: Proceedings of the 35th International Conference on Machine Learning, Vol. 80, 2018, pp. 5449–5458.

- [19] X. Zhang, M. Zitnik, Gnnguard: defending graph neural networks against adversarial attacks, in: Proceedings of the Neural Information Processing Systems, 2020.

- [20] A. Khanfor, A. Nammouchi, H. Ghazzai, Y. Yang, M. R. Haider, Y. Massoud, Graph neural networks-based clustering for social internet of things, in: Proceedings of the 63rd IEEE International Midwest Symposium on Circuits and Systems, 2020, pp. 1056–1059.

- [21] J. Zhu, Y. Yan, L. Zhao, M. Heimann, L. Akoglu, D. Koutra, Beyond homophily in graph neural networks: current limitations and effective designs., in: Proceedings of the Neural Information Processing Systems, 2020.

- [22] K. Hornik, Approximation capabilities of multilayer feedforward networks, Neural Networks (1991).

- [23] S. Pandit, D. H. Chau, S. Wang, C. Faloutsos, Netprobe: A fast and scalable system for fraud detection in online auction networks, in: Proceedings of the 16th International Conference on World Wide Web, WWW, 2007, pp. 201–210.

- [24] X. Lin, C. Zhou, J. Wu, H. Yang, H. Wang, Y. Cao, B. Wang, Exploratory adversarial attacks on graph neural networks for semi-supervised node classification, Pattern Recognit. 133 (2023) 109042.

- [25] H. Pei, B. Wei, K. C. Chang, Y. Lei, B. Yang, Geom-gcn: geometric graph convolutional networks, in: Proceedings of the International Conference on Learning Representations, 2020.

- [26] Y. Chen, L. Wu, , M. J. Zaki, Iterative deep graph learning for graph neural networks: better and robust node embeddings, in: Proceedings of the Neural Information Processing Systems, 2020.

- [27] A. Vaswani, N. Shazeer, et al, Attention is all you need, 2017.

- [28] R. Li, S. Wang, F. Zhu, J. Huang, Adaptive graph convolutional neural networks, in: Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, 2018, pp. 3546–3553.

- [29] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, P. S. Yu, A comprehensive survey on graph neural networks, IEEE transactions on neural networks and learning systems 32 (2021) 4–24.

- [30] V. Mazzia, S. Angarano, F. Salvetti, F. Angelini, M. Chiaberge, Action transformer: A self-attention model for short-time pose-based human action recognition, Pattern Recognit. 124 (2022) 108487.

- [31] K. H, X. Zhang, S. R. et al, Deep residual learning for image recognition, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016) 770–778.

- [32] J. Xu, X. Sun, Z. Zhang, G. Zhao, J. Lin, Understanding and improving layer normalization, in: Proceedings of the Neural Information Processing Systems, 2019, pp. 4383–4393.

- [33] X. Wu, L. Zhao, L. Akoglu, A quest for structure: jointly learning the graph structure and semi-supervised classification, in: Proceedings of the 27th ACM International Conference on Information and Knowledge Management, CIKM, 2018, pp. 87–96.

- [34] L. F. R. Ribeiro, P. H. P. Saverese, D. R. Figueiredo, struc2vec: Learning node representations from structural identity, in: Proceedings of the 23rd International Conference on Knowledge Discovery and Data Mining, 2017, pp. 385–394.

- [35] L. Zhang, H. Song, N. Aletras, H. Lu, Node-feature convolution for graph convolutional networks, Pattern Recognit. 128 (2022) 108661.

- [36] S. Abu-El-Haija, B. Perozzi, A. Kapoor, N. Alipourfard, K. Lerman, H. Harutyunyan, G. Steeg, A. Galstyan, Mixhop: Higher-order graph convolution architectures via sparsified neighborhood mixing, in: Proceedings of the 36th International Conference on Machine Learning, ICML, 2019.

- [37] J. Zhu, R. A. Rossi, A. Rao, T. Mai, N. Lipka, N. K. Ahmed, D. Koutra, Graph neural networks with heterophily, in: Proceedings of the AAAI Conference on Artificial Intelligence, 2021, pp. 11168–11176.

- [38] D. P. Kingma, J. L. Ba, Adam: A method for stochastic optimization, in: Proceedings of the 5th International Conference on Learning Representations, 2015.