55email: {lylhubxy, jinghd}@stu.xjtu.edu.cn, {musayq, nnzheng}@mail.xjtu.edu.cn, [email protected]

See Through Their Minds: Learning Transferable Neural Representation from Cross-Subject fMRI

Abstract

Deciphering visual content from functional Magnetic Resonance Imaging (fMRI) helps illuminate the human vision system. However, the scarcity of fMRI data and noise hamper brain decoding model performance. Previous approaches primarily employ subject-specific models, sensitive to training sample size. In this paper, we explore a straightforward but overlooked solution to address data scarcity. We propose shallow subject-specific adapters to map cross-subject fMRI data into unified representations. Subsequently, a shared deeper decoding model decodes cross-subject features into the target feature space. During training, we leverage both visual and textual supervision for multi-modal brain decoding. Our model integrates a high-level perception decoding pipeline and a pixel-wise reconstruction pipeline guided by high-level perceptions, simulating bottom-up and top-down processes in neuroscience. Empirical experiments demonstrate robust neural representation learning across subjects for both pipelines. Moreover, merging high-level and low-level information improves both low-level and high-level reconstruction metrics. Additionally, we successfully transfer learned general knowledge to new subjects by training new adapters with limited training data. Compared to previous state-of-the-art methods, notably pre-training-based methods (Mind-Vis and fMRI-PTE), our approach achieves comparable or superior results across diverse tasks, showing promise as an alternative method for cross-subject fMRI data pre-training. Our code and pre-trained weights will be publicly released at https://github.com/YulongBonjour/See_Through_Their_Minds.

Keywords:

Brain decoding Transfer learningCross-subject fMRI1 Introduction

Vision decoding from brain activity enables the inference of mental states and cognitive processes, aiding neuroscience development and potentially advancing brain-inspired artificial intelligence and brain-computer interfaces. To this end, functional Magnetic Resonance Imaging (fMRI) data are widely used as a non-invasive approach to capture brain activity. Recent years have witnessed great progress in fMRI-based vision decoding with the rapid development of Deep Learning and generative models. To name a few, Brain-diffuser[42] adopts a two-stage reconstruction framework, where an fMRI pattern is first mapped into VAE latent embeddings to get a blurry reconstruction, then refining it using predicted CLIP visual and textual embeddings to guide a pre-trained diffusion model. BrainCLIP[32] utilizes contrastive learning to align fMRI data with visual and textual modalities simultaneously, enabling versatile applications such as retrieval, classification, and reconstruction tasks. MindEye[50] enhances retrieval and reconstruction performance on the NSD[1] dataset by aligning fMRI data with fine-grained CLIP visual tokens through contrastive learning and diffusion priors[46].

While significant progress has been made, the subject-specific nature of most previous methods poses challenges to generalization. Firstly, fMRI data collection is costly and prone to blending with physiological noise, resulting in limited training samples with low signal-to-noise ratio per participant. Additionally, intrinsic inter-individual variability requires building models from scratch for each new subject, as every individual’s brain has unique functional and anatomical structures. Consequently, subject-specific models may exhibit considerable variability in results. Despite achievements like those of MindEye on the NSD dataset, training models from scratch on small datasets remains prone to overfitting.

One strategy to somewhat alleviate data paucity is to pre-train foundation models on large-scale cross-subject data to acquire general knowledge inherent at the group level, which is gaining popularity in fMRI studies[5, 44, 35, 39], as it bypasses the need to train downstream models from scratch. Previous works have conducted some meaningful attempts in fMRI pre-training for vision decoding[5, 44], where the fMRI data from different subjects are either split into patches similar to ViT[10] or transformed into unified 2D representations using anatomical information, then fMRI representations are learned using self-supervised tasks. Although these works provide new approaches to learning fMRI representation at the group level, individual variability is still the key challenge that hinders the final decoding performance.

Recent studies indicate that coarse-grained response topographies exhibit high similarity across subjects, implying that individual idiosyncrasies are reflected in more nuanced response patterns[4, 15, 23]. This suggests the potential for decoding models to share representational spaces across subjects, mitigating challenges posed by limited per-subject data. Leveraging this insight, we propose a neural decoding model featuring common decoding modules to capture shared response patterns, alongside subject-specific adapters that accommodate individual response biases. This approach enables the merging of data from multiple subjects viewing the same or different images to learn the general response patterns underlying different brains, while also capturing meaningful individual-level deviations.

Our overall framework, referred to as STTM, is depicted in Fig. 1. Inspired by both bottom-up and top-down cognitive processes in the human brain [22, 37], we incorporate a high-level pipeline (STTM-H) to capture semantic-related perceptions and a low-level pipeline (STTM-L) focused on pixel-wise reconstruction to match the original images’ low-level features (e.g., color, texture, spatial position). Both pipelines include subject-specific adapters for transforming cross-subject inputs, with the extracted features subsequently processed by shared decoding modules. The high-level pipeline aligns fMRI patterns with CLIP visual tokens using contrastive learning and a diffusion prior [46]. Additionally, it aligns fMRI data with textual descriptions, enabling our model to be applied to multimodal brain decoding tasks. Our low-level pipeline involves mapping fMRI data onto the latent space of Stable Diffusion’s variational autoencoder (VAE) [48] to obtain blurry reconstructions from the VAE’s decoder. To enhance pixel-wise reconstruction, we propose utilizing high-level features from the MLP backbone of the high-level pipeline to guide the low-level pipeline. The final reconstructions are generated by combining outputs from the high-level and low-level pipelines in an img2img setting [36] using versatile diffusion[64]. The over all design mirrors the bottom-up and top-down process in visual cortex.

In this study, we undertake two types of experiments: 1) Model pre-training with data of four subjects (1, 2, 5, 7) from the Natural Scenes Dataset (NSD) [1]. Our results are compared with previous works on NSD. 2) Following pre-training, transfer learning is conducted on new subjects from the Generic Object Decoding (GOD) Dataset [20], featuring much fewer training samples per subject and under a zero-shot setting. Our contributions are summarized as follows:

-

•

To address the scarcity of fMRI data, we propose to use subject-adapters to transform cross-subject data into unified feature space and train shared decoding models to capture robust representations. This work contributes to the growing body of literature on pre-training and transfer learning with fMRI data.

-

•

We identify the importance of the interaction between high-level and low-level perceptions for reconstruction performance. To leverage this interaction, we propose utilizing high-level perceptions to guide pixel-wise reconstruction. Our overall framework, employing an img2img setting, simulates the bottom-up and top-down processes observed in neuroscience and obtains reasonable performance gains.

-

•

We open source a versatile brain decoding model with good transferability and high performance on a wide range of tasks, which may facilitate future multi-model brain decoding research.

2 Related Work

2.0.1 Visual Stimulus Decoding from FMRI.

Deciphering visual information from human brain activity has long been a pursuit in neuroscience. Due to challenges such as low signal-to-noise ratio and limited fMRI samples, early studies primarily employed linear models to map fMRI data onto an intermediate feature space for decoding basic visual attributes like spatial position[60], orientation[17, 21], and image categories[6, 16]. As deep learning and generative models rapidly advanced, researchers explored reconstructing visual stimuli using CNNs[52, 51, 2], GANs[47, 41, 30, 38], and diffusion models[32, 42, 50], resulting in increasingly semantically plausible and faithful reconstructions. The emergence of models like CLIP[45] and related ones such as Stable diffusion[48] and versatile diffusion[64] further fueled exploration in decoding approaches. State-of-the-art methods have recently integrated contrastive learning[40] between fMRI data and CLIP model features to improve neural representations, followed by reconstruction using the Diffusion model or GANs. Notably, Mind-Reader[30] and BrainCLIP[32] utilized the CLS token from CLIP’s visual and textual encoder as global supervision, while MindEye[50] leveraged all 257 tokens from CLIP’s visual encoder’s last hidden layer, showing the benefits of fine-grained supervision for retrieval and reconstruction tasks. In this paper, we propose a fusion of global visual-linguistic contrastive learning and fine-grained visual contrastive learning to enhance multi-modal brain decoding. We also emphasize the importance of the interaction between bottom-up and top-down processes for stimulus reconstruction.

2.0.2 FMRI Foundation Models for Visual Decoding.

The pre-training-finetuning paradigm has demonstrated significant success across various domains[8, 45, 18, 13, 33]. While most previous brain decoding methods have traditionally employed subject-specific pipelines, recent efforts have emerged to develop fMRI foundation models by pretraining decoding models with large-scale fMRI data collected from diverse subjects, aiming to capture generalizable neural representations across different brains[5, 44, 35]. Chen et al.[5] divided fMRI series into patches and transformed them into embeddings, utilizing mask brain modeling (MBM) to learn fMRI representations. Malkiel et al.[35] applied self-supervised techniques, including MBM, to learn representations of audio-evoked fMRI data. More recently, Qian et al.[44] transformed fMRI signals into unified 2D representations using anatomical information, which can maintain consistency across individuals while preserving distinct brain activity patterns and are then used to train foundation models. It is worth noting that these pre-trained models still require additional fine-tuning on an individual basis to accommodate the intricate biological nuances governing visual stimuli generation. In our work, instead of employing the same network to process fMRI data from different subjects, we propose training shallow subject-specific adapters alongside a shared deep decoding network. And transfer learning can be conducted by training new adapters for new subjects.

3 Method

Drawing inspiration from the bottom-up and top-down processes in the human brain[22, 37], our method adopts an img2img setting which consists of a high-level perception decoding pipeline and a low-level pixel-wise reconstruction pipeline guided by high-level perceptions. Further details will be provided in the subsequent subsections.

3.1 Cross-Subject High-Level Perceptions Decoding

Our high-level pipeline is designed to capture semantic perceptions underlying the fMRI data, aligning cross-subject fMRI data with visual and textual modalities. This versatility allows it to be applied to tasks such as fMRI-to-image retrieval, fMRI-to-text retrieval, zero-shot classification, and fMRI-to-image generation.

3.1.1 High-Level Model Architecture

Our pipeline aims to translate fMRI data into CLIP embedding space. It consists of several shallow subject-specific adapters, a shared MLP backbone with 4 residual blocks, a tokenization module, a diffusion prior module, and an MLP projector. The subject adapters are formed by a linear projection followed by a residual block, accommodating individual variability and aligning fMRI data across subjects into a unified feature space. The shared residual MLP backbone further refines these features into a higher-level feature space. Subsequently, the tokenization module transforms the extracted features into 257 fine-grained tokens, corresponding to the 257 visual tokens from CLIP’s visual encoder’s last hidden layer. Unlike MindEye, which directly projects hidden states from the shared backbone into tokens with a dimension of 257x768 via linear projection, our tokenization module first maps the hidden states to a low-dimension space(257x128) and then uses a single-hidden-layer MLP to ascend the dimension to 257x768, which significantly reduces the number of parameters while maintains high-performance(See Tab. 1). The tokens are then processed by an MLP projector and a diffusion prior module in parallel. The model is trained end-to-end, with the prior receiving an MSE loss and the projector receiving a bidirectional contrastive loss. Projector outputs are for retrieval tasks, and diffusion prior outputs are used to guide image generation of a pre-trained versatile diffusion model[64]. Unless specified otherwise, the CLIP model used in this work is CLIP ViT/L-14.

3.1.2 Global Visual-Linguistic Contrastive Learning Fine-Grained Visual Contrastive Learning

Previous studies have demonstrated the efficacy of contrastive learning in producing robust fMRI representations. Notably, BrainCLIP[32] combines global embeddings from both CLIP’s visual and textual encoders as target features, showing improved reconstruction performance compared to conditions with only visual supervision. MindEye[50] utilizes all 257 tokens from CLIP’s visual encoder’s last layer, showcasing the benefits of fine-grained supervision for both image retrieval and reconstruction performance. To further enhance expressive representations that bridge the brain with visual and textual modalities for potential multi-modal brain decoding, we propose combining global visual-linguistic contrastive learning (GVLC) and fine-grained visual contrastive learning (FVC). Specifically, FVC involves contrasting the flattened and L2-normalized 257 CLIP visual tokens () with the 257 projected Brain tokens () from the MLP projector,

| (1) |

And the GVLC is applied to the CLS token() of the Brain tokens by combine the supervision from CLIP visual and textual CLS token( and ), i.e.,

| (2) |

Two kinds of contrastive loss are used in this work. For the first 35% percent of the total epochs, we use BiMixCo loss proposed in [50], which uses Mixup technique to train models on synthetic fMRI data created through convex combinations of two fMRI-stimulus pairs, aiming to alleviate the data scarcity for a single subject. For the rest epochs, SoftCLIP loss [50] is used as the contrastive loss, which is inspired by knowledge distillation[19] and uses the batch-wise visual CLIP embedding similarity instead of one-hot labels as the target label. Both the two kinds of contrastive loss are bidirectional(See Appendix Sec. 0.6 for details).

3.1.3 Efficient Diffusion Prior Learning

Contrastive learning yields disjoint fMRI embeddings, known as the "Modality Gap"[28]. To reconstruct images, we train a diffusion prior proposed in DALL-E2[46] to produce aligned CLIP embeddings from the outputs of the MLP backbone. These embeddings can serve as inputs to any pre-trained image generation model accepting CLIP image embeddings. MindEye applied this technique to map fMRI to CLIP image embeddings, predicting denoised CLIP tokens based on brain tokens and noise tokens. However, MindEye’s encoder-only transformer architecture demands significant GPU memory for long sequences. In our work, we employ a 6-layer transformer decoder architecture for the diffusion prior to mitigate GPU memory usage. The diffusion prior receives three token types: brain tokens, noisy CLIP visual tokens, and a time token. Brain tokens serve as keys and values for cross-attention modules in the transformer decoder after adding positional embeddings. The time token and noisy CLIP visual tokens are concatenated as queries, with positional embeddings added to the latter. The diffusion prior module are trained to output denoised CLIP tokens, utilizing the same diffusion loss as Ramesh et al.[46]. Inspired by the success of masked autoencoder[18], we propose training the diffusion prior with only a small part(e.g., 35% ) of the predicted Brain tokens. This approach offers dual benefits: it further reduces GPU memory usage during training and enhances the expressiveness of each brain token.

Our total end-to-end loss for the high-level pipeline is defined as:

| (3) |

In our experiments, we set to 0.4 and employ random weighting[29] between the contrastive losses and the diffusion prior loss.

3.2 Cross-Subject Pixel-Wise Reconstruction with High-Level Perception Guidance

The human brain processes visual content through two distinct information pathways: bottom-up and top-down [22, 37]. In bottom-up pathways, local features integrate to shape the brain’s understanding of global information, while in top-down pathways, the brain adjusts its perception of low-level features based on semantic understanding. These pathways act as complementary forces, often collaborating to help us comprehend complex stimuli. Given that CLIP visual and textual tokens primarily encode high-level semantic information rather than low-level details such as texture and boundary, it becomes imperative to incorporate a low-level reconstruction pipeline to preserve pixel-level information from fMRI data. To address this need, we propose a pipeline that integrates both bottom-up processing and top-down feedback mechanisms.

As depicted in Fig. 1, our low-level pipeline consists of shallow subject-specific adapters followed by a shared residual MLP backbone. The outputs from this backbone receive semantic information processed by the high-level pipeline backbone, with the two sets of information added together. Subsequently, the merged features undergo further processing by a residual block before being up-sampled to the latent space of Stable-diffusion’s VAE by CNN. During training, we employ L1 loss as the loss function. To fully utilize both high-level and bottom-up information, we introduce a mechanism where, in 30% of training steps, the semantic feedback is substituted with a learnable embedding vector. Additionally, in another 25% of training steps, the outputs from the low-level pipeline backbone are replaced with another learnable embedding vector. After training, blurry reconstructions can be generated by decoding the predicted latent embeddings with the VAE decoder. These reconstructions serve as the initial states for the versatile diffusion model.

| Methods | Multi-subject | Parameters | Retrieval tasks | ||

|---|---|---|---|---|---|

| Image@1 | Text@5 | Brain@1 | |||

| Mind Reader[30] | ✗ | 2.34M | 11.0% | - | 49.0% |

| Brain-diffuser[42] | ✗ | 3B | 21.1% | - | 30.3% |

| BrainCLIP-VAE[32] | ✗ | 18.6M | 40.65% | 31.1% | - |

| MindEye(High-level)[50] | ✗ | 1B | 93.6% | - | 90.1% |

| STTM-H(Ours,w/o GVLC) | ✓ | 568M | 93.6% | 10.3% | 94.8% |

| STTM-H(Ours) | ✓ | 568M | 92.8% | 41.3% | 94.9% |

3.3 Transfer learning for new subjects

Deciphering brain activity of new subjects using models trained for other individuals has been a longstanding goal in neuroscience. Here, we propose an adapter-based approach to alleviate this challenge. In our brain decoding scenario, we hope the decoding performance for new subjects who may have limited fMRI data can benefit from the general knowledge learned from abundant cross-subject fMRI patterns. To achieve this, we freeze the pre-trained shared decoding modules and train a shallow adapter for each of the two pipelines, aligning the fMRI data of the new subject with the unified feature space. The training objective is the same as the pre-training stage. Once the subject adapter is trained, we freeze both the adapter and the MLP backbone. Subsequently, we fine-tune the remaining modules to further align the fMRI patterns with the target domain.

4 Experimental Results

4.1 Datasets and Setting

4.1.1 Natural Scenes Dataset (NSD)

The NSD dataset[1], sourced from 8 subjects viewing images from the COCO dataset[31], is currently the largest neural imaging dataset for data-driven brain decoding. Our study uses the data of subjects 1, 2, 5, and 7 from NSD. Each subject’s training set includes 8859 image stimuli and 24980 fMRI trials, while the test set comprises 982 image stimuli and 2770 fMRI trials, with stimuli differing across subjects in the training set but shared in the test set. Responses for images with multiple trials are averaged. Utilizing the NSDGeneral ROI mask at 1.8 mm resolution, we derived ROIs for the 4 subjects, encompassing 15724, 14278, 13039, and 12682 voxels, spanning visual areas from early to higher visual cortex. Corresponding captions can be extracted from the COCO dataset.

4.1.2 Generic Object Decoding(GOD) Dataset

The GOD dataset, curated by Horikawa and Kamitani[20], comprises fMRI recordings of five healthy subjects viewing images from ImageNet[7]. It includes 1250 images from 200 ImageNet categories, with 1200 training images from 150 categories and 50 test images from the remaining categories. Training and test stimuli were presented 1 and 35 times to the subjects, respectively, resulting in 1200 and 1750 fMRI instances. We utilize preprocessed regions of interest (ROIs)111The preprocessed data and demo code are available at http://brainliner.jp/data/brainliner/Generic_Object_Decoding covering voxels from early to higher visual areas. FMRI data from different trials for each test image are averaged. Additionally, we acquire caption annotations from the GOD-Cap dataset[32], offering two textual descriptions for each visual stimulus.

| Methods | Low- Level | High-Level | ||||||

| PixCorr | SSIM | Alex(2) | Alex(5) | Incep | CLIP | Eff | SwAV | |

| Mind Reader[30] | - | - | - | - | 78.2% | - | - | - |

| Takagi…[58] | - | - | 83.0% | 83.0% | 76.0% | 77.0% | - | - |

| Gu et al.[14] | .150 | .325 | - | - | - | - | .862 | .465 |

| Brain-diffuser[42] | .254 | .356 | 94.2% | 96.2% | 87.2% | 91.5% | .775 | .423 |

| BrainCLIP[32] | - | - | - | - | 86.7% | 94.8% | - | - |

| fMRI-PTE (MG)[44] | .131 | .112 | 78.13% | 88.59% | 84.09% | 82.26% | .837 | .434 |

| DREAM[63] | .288 | .338 | 93.9% | 96.7% | 93.7% | 94.1% | .645 | .418 |

| MindEye[50] | .309 | .323 | 94.7% | 97.8% | 93.8% | 94.1% | .645 | .367 |

| MindEye-BOI[24] | .259 | .329 | 93.9% | 97.7% | 93.9% | 93.9% | .645 | .367 |

| STTM(Ours) | .333 | .334 | 95.7% | 98.5% | 95.8% | 95.7% | .611 | .338 |

| MindEye(Low-level)[50] | .360 | .479 | 78.1% | 74.8% | 58.7% | 59.2% | 1.0 | .663 |

| STTM-L(Ours,w/o guidance) | .372 | .488 | 79.6% | 79.6% | 63.6% | 63.0% | .985 | .643 |

| STTM-L(Ours,with guidance) | .383 | .488 | 83.3% | 86.0% | 68.2% | 67.1% | .968 | .647 |

| MindEye(High-level)[50] | .194 | .308 | 91.7% | 97.4% | 93.6% | 94.2% | .645 | .369 |

| STTM-H(Ours,w/o GVLC) | .201 | .276 | 91.4% | 97.8% | 95.3% | 95.1% | .622 | .352 |

| STTM-H(Ours) | .209 | .276 | 91.5% | 97.8% | 95.4% | 95.6% | .612 | .344 |

4.1.3 Implementation Details

Our models are trained and tested on 8 Hygon DCUs with 16GB HBM2 memory. With the data of the 4 subjects from NSD, We pre-train the high-level pipeline for 280 epochs and the low-level pipeline for 540 epochs with a global batch size of 192. For the High-level pipeline on the GOD dataset, we first train a new subject adapter for 4500 epochs with a global batch size of 880 for each new subject, while keeping the pre-trained parts frozen. Then, we freeze the adapter and the MLP backbone, and fine-tune the rest parts of the model for 800,400,400,400,800 epochs with a global batch size of 600 for subjects 1,2,3,4,5 respectively. Similarly, for the low-level pipeline on the GOD dataset, we train a new subject adapter for 5000 epochs with a global batch size of 192 for each new subject, with the pre-trained parts frozen. Then, we freeze the adapter and MLP backbone, and fine-tune the rest parts for 800 epochs. We use AdamW[34] for optimization with , , and . Additionally, we employ the OneCircle learning rate schedule[55] with a maximum learning rate of 0.0005. For reconstruction evaluation metrics, we use the implementation of MindEye. More details can be found in our code.

4.2 Brain-Image/Text Retrieval on NSD

For image retrieval or text retrieval, the goal is to match the correct image or text with a given fMRI pattern among multiple candidates. In image retrieval, we calculate cosine similarity between the flattened and normalized 257 tokens of a brain sample and each of 300 randomly selected image candidates from the test set. This process is repeated for each of the 982 brain samples in the test set, and the overall accuracy is averaged across 30 iterations to accommodate batch sampling variability. In text retrieval, the candidate pool for each fMRI pattern comprises all 982 image captions, and cosine similarity is computed between the CLS token of the fMRI pattern and those of the candidate captions. For brain retrieval, the procedure mirrors image retrieval, but image and brain samples are swapped to find the corresponding brain sample for a given image among 300 brain samples.

In Tab. 1, we compare our method with previous works across retrieval tasks, reporting top-1 accuracy for image and brain retrieval and top-5 accuracy for text retrieval due to similar captions for some test images. Our method demonstrates strong generalization across all retrieval tasks, achieving superior performance in text retrieval and brain imaging retrieval. Compared to MindEye, the prior state-of-the-art method, our method exhibits better parameter efficiency and versatility.

4.3 FMRI-to-Image Reconstruction on NSD

We generate 16 CLIP image embeddings with diffusion prior for each test brain sample, then pass them through the image variations pipeline of Versatile Diffusion, starting the denoising process with the blurry reconstruction from our low-level pipeline. This process comprises 20 timesteps using UniPCMultistep noise scheduling[66], yielding 16 reconstructions per sample. Finally, we select the best reconstruction using our retrieval branch.

| Methods | Modality | Prompt | Average | |

|---|---|---|---|---|

| top-1 | top-5 | |||

| CADA-VAE [49] | V&T | - | 10.0% | 40.4% |

| MVAE [62] | V&T | - | 10.0% | 39.6% |

| MMVAE [53] | V&T | - | 11.7% | 43.3% |

| MoPoE-VAE [56] | V&T | - | 12.9 | 51.8 |

| BraVL [11] | V&T | - | 14.0% | 53.1% |

| LEA[43] | V | - | 13.6% | - |

| BrainCLIP-VAE[32] | V&T | Text | 18.4% | 51.2% |

| BrainCLIP-VAE [32] | V&T | CoOp | 18.0% | 59.5% |

| STTM-H(Ours) | V&T | Text | 23.2% | 62.0% |

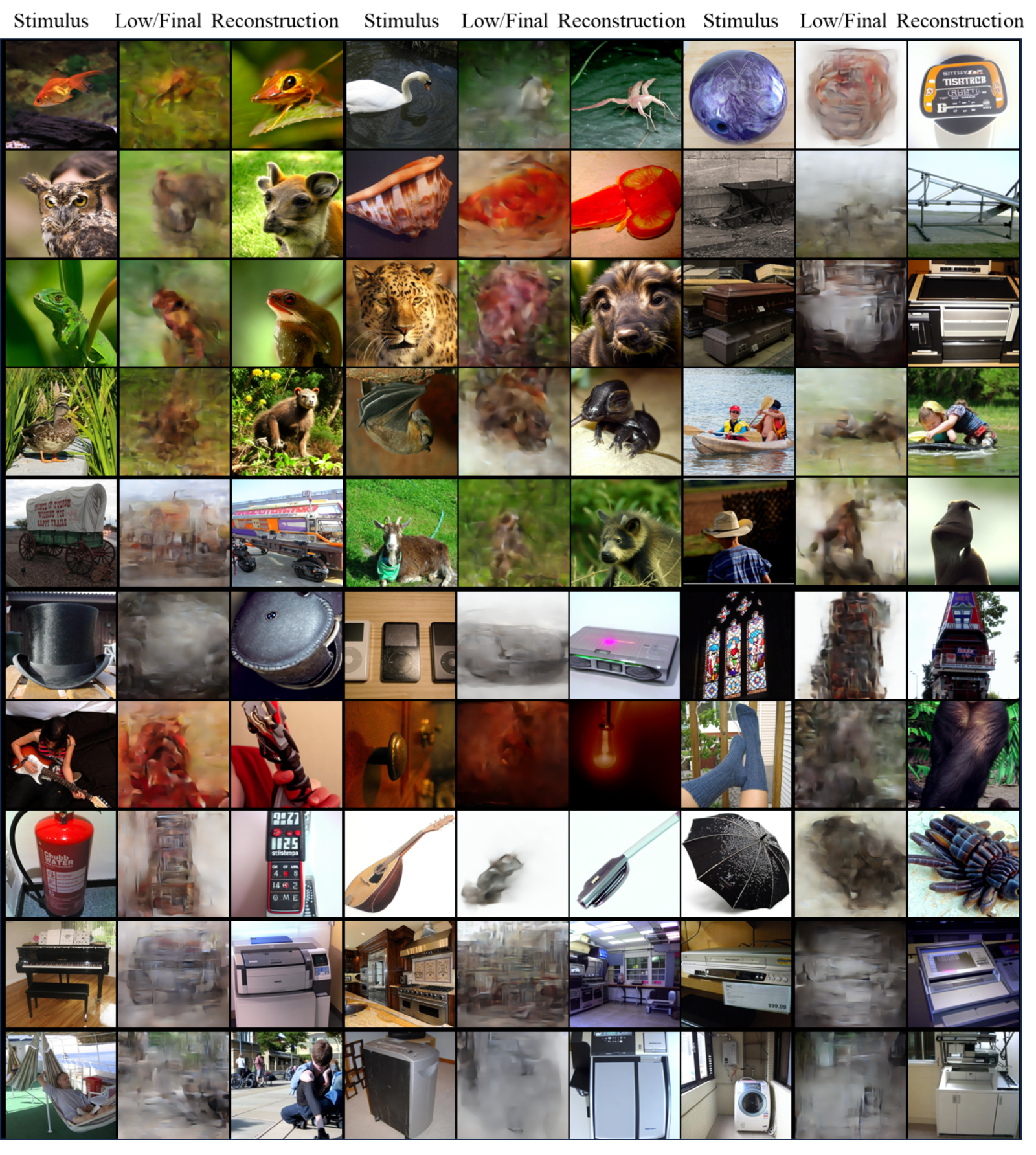

Reconstruction examples for the NSD dataset are shown in Fig. 2. The low-level reconstructions effectively capture details such as position and color distribution, while the final reconstructions enhance the semantic recognizability of the initial blurry low-level reconstructions. In Tab. 2, we quantitatively compare our method with recent works on the NSD dataset. Our full method outperforms previous approaches by significant margins across low-level and high-level metrics, except for SSIM. Specifically, STTM-L significantly outperforms MindEye(Low-level) on all metrics, especially on high-level metrics, indicating superior semantic recognizability of our low-level reconstructions. STTM-H also outperforms MindEye(High-level) by a considerable margin on all high-level metrics, demonstrating better capture of semantic information.

Additionally, compared to fMRI-PTE[44], which was pre-trained with large-scale unsupervised fMRI data from 1000 subjects before training on the NSD dataset, our method exhibits clear advantages in results. This suggests greater efficiency of our method in learning general knowledge from cross-subject data. Moreover, the img2img strength used for the results in Tab. 2 is 0.3, and we have tested with other values, obtaining robust performances (see Appendix Sec. 0.3).

4.4 Transfer Learning on GOD Dataset

In this section, we aim to transfer the knowledge acquired from the NSD dataset to the GOD dataset, which is considerably smaller and in a zero-shot setting. We assess the transfer learning outcomes through two tasks: the zero-shot classification task and the image reconstruction task.

Additionally, we conducted tests to assess whether our decoders, originally trained on brain activity induced by visual stimuli, possess the capability to generalize to decode imagery-induced brain activity(See Appendix Sec. 0.1).

4.4.1 Zero-Shot Classification on GOD by Prompting

Utilizing CLIP’s well-aligned embedding space, STTM enables zero-shot visual stimulus classification. This is achieved by comparing the embeddings of the CLS token of Brain tokens with the classification weights synthesized by CLIP’s text encoder, which takes as input textual prompts specifying classes of interest. These prompts can be manually designed, such as "a photo of a [CLASS]," where the class token is replaced by the specific class name, and other tokens provide context for the class name. We employ the same text prompts as BrainCLIP. The results are shown in Tab. 3. Our method obtains an average top-1 accuracy of 23.2% and an average top-5 accuracy of 62.0%, which are considerably better than previous works.

4.4.2 Image Reconstruction on GOD

In Fig. 3, we present visualizations of low-level and final reconstructions for the GOD dataset. In Tab. 4, we provide our average results across five subjects and compare our method with recent works, focusing on subject 3, as only results for this subject are available for Mind-Vis[5] and CMVDM[65]. As we can see, due to the limited training samples and low signal-to-noise ratio, none of the models outperform the others across all metrics. Our low-level pipeline achieves the best performance on PixCorr and SSIM, two pixel-wise metrics. Additionally, our final reconstruction compares favorably with previous state-of-the-art works. Notably, Mind-Vis is also a pertaining-based decoding method, using masked signal modeling for pre-training and then fine-tuning on downstream datasets. Our method outperforms Mind-Vis on most metrics, suggesting it is a promising alternative for pre-training fMRI models.

| Methods | Low- Level | High-Level | ||||||

| PixCorr | SSIM | Alex(2) | Alex(5) | Incep | CLIP | Eff | SwAV | |

| STTM-H(Ours) | .143 | .341 | 85.2% | 91.2% | 77.8% | 82.9% | .871 | .534 |

| STTM-L(Ours) | .274 | .500 | 87.9% | 91.0% | 64.2% | 58.2% | .956 | .711 |

| STTM(Ours) | .202 | .366 | 90.3% | 93.4% | 80.0% | 84.1% | .879 | .526 |

| IC-GAN(Sub3) [41] | .195 | .386 | 88.1% | 95.3% | 85.8% | 84.0% | .855 | .486 |

| MinD-Vis(Sub3)[5] | .119 | .390 | 82.8% | 93.8% | 81.0% | 82.6% | .833 | .491 |

| CMVDM(Sub3)[65] | .279 | .454 | 88.4% | 93.9% | 81.6% | 82.0% | .810 | .485 |

| STTM-H(Ours,Sub3) | .133 | .328 | 87.9% | 93.3% | 79.7% | 86.4% | .851 | .521 |

| STTM-L(Ours,Sub3) | .322 | .501 | 90.3% | 92.7% | 66.8% | 58.2% | .954 | .704 |

| STTM(Ours,Sub3) | .253 | .367 | 92.0% | 95.6% | 81.3% | 87.0% | .870 | .505 |

| Methods | Low- Level | High-Level | ||||||

| PixCorr | SSIM | Alex(2) | Alex(5) | Incep | CLIP | Eff | SwAV | |

| STTM-L(Sub1, I) | .417 | .487 | 87.9% | 87.8% | 63.2% | 62.8% | .980 | .664 |

| STTM-L(Sub1, II) | .422 | .490 | 89.1% | 89.0% | 64.8% | 63.6% | .974 | .664 |

| STTM-L(Sub1, III) | .445 | .498 | 87.4% | 88.6% | 67.8% | 68.2% | .964 | .644 |

| STTM-H(Sub1, I) | .150 | .266 | 83.6% | 92.7% | 91.4% | 91.2% | .710 | .392 |

| STTM-H(Sub1, III) | .221 | .278 | 92.6% | 98.3% | 96.0% | 96.3% | .599 | .332 |

4.5 Further Discussions

4.5.1 Rationality of Training with Cross-Subject fMRI

To further validate the effects of cross-subject fMRI training, we conduct an ablation study on the data of subject 1 in NSD. Three types of experiments are conducted: Type I means both STTM-L and STTM-H are trained with single-subject data; Type II means the STTM-L is trained with single-subject data but the high-level guidance is from STTM-H trained with cross-subject data; Type III means STTM-H and STTM-L are trained with cross-subject data. The results are presented in Tab. 5. As we can see, both STTM-L and STTM-H benefit from cross-subject fMRI training. We can also conduct a qualitative comparison with MindEye. When not guided by high-level perception, our STTM-L model shares the same architecture as MindEye’s low-level model but is trained with cross-subject fMRI data using subject adapters. We only utilize L1 loss, removing the auxiliary contrastive loss used in MindEye for low-level pipeline training. As depicted in Tab. 2, STTM-L (w/o guidance) outperforms MindEye (Low-level) across all metrics despite employing a simpler loss function. Similar performance enhancements are observed with our STTM-H (w/o GVLC) model, which has the same MLP backbone and training loss functions as MindEye but surpasses it on retrieval tasks and high-level reconstruction metrics. A potential explanation is that training with cross-subject fMRI data enhances model generalizability by exposing the shared decoding model to a more diverse data distribution and extracting more essential fMRI features. From a parameter efficiency perspective, our STTM framework trains a single model for multiple subjects, whereas MindEye trains subject-specific models with significantly more parameters. Considering these observations, training future decoding models with cross-subject data appears to be a reasonable choice.

4.5.2 Influence of Global Visual-Linguistic Contrastive Learning

Combining results from Tab. 1 and Tab. 2, we observe that employing the global visual-linguistic contrastive learning significantly improves text retrieval performance while minimally influencing image and brain activity retrieval tasks. It also positively impacts image reconstruction, aligning with observations from BrainCLIP despite differences in model architectures. These results contribute to understanding the multi-sensory nature of the human brain and offer a foundation for future advancements in generic multi-modal brain decoding.

4.5.3 Influence of the High-Level and Low-Level Perception Interaction

As shown in Tab. 2, integrating high-level perception guidance improves our low-level pipeline performance across almost all metrics. Surprisingly, even low-level metrics benefit from high-level information. Moreover, combining both pipelines enhances final reconstruction performance compared to using only the high-level pipeline. Our overall framework effectively mimics the interaction between bottom-up and top-down processes in the human brain, resulting in notable performance gains. More results are available in the appendix.

5 Conclusion

In this work, we introduce STTM, a novel method leveraging cross-subject fMRI data to learn transferable neural representations shared across human brains. We pre-train our models on 4 subjects from the NSD dataset and perform transfer learning on the GOD dataset. Our method achieves comparable or better decoding performance compared to previous state-of-the-art works across various tasks, including image retrieval, text retrieval, brain imaging retrieval, and image reconstruction. Notably, compared to Mind-Vis and fMRI-PTE, two pertaining-based fMRI decoding models, our method demonstrates superior reconstruction performance, suggesting its potential as an alternative approach for training fMRI foundation models. We also propose a pixel-wise reconstruction pipeline guided by high-level perceptions, showing the benefits of incorporating high-level information into pixel-wise reconstruction. Our complete STTM model comprises a high-level pipeline and a low-level pipeline, providing insights into the interaction of bottom-up and top-down processes in the human brain. However, our method has its limitations. For instance, during the pre-training stage, each subject requires a unique adapter, limiting the total number of subjects involved in pre-training due to GPU memory restrictions. Thus, subjects with more training samples are preferred.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (NO. 62088102, NO.62076235), STI2030-Major Projects (NO. 2022ZD0208801), and China National Postdoctoral Program for Innovative Talents from China Postdoctoral Science Foundation (NO. BX2021239).

References

- [1] Allen, E.J., St-Yves, G., Wu, Y., Breedlove, J.L., Prince, J.S., Dowdle, L.T., Nau, M., Caron, B., Pestilli, F., Charest, I., et al.: A massive 7t fmri dataset to bridge cognitive neuroscience and artificial intelligence. Nature neuroscience 25(1), 116–126 (2022)

- [2] Beliy, R., Gaziv, G., Hoogi, A., Strappini, F., Golan, T., Irani, M.: From voxels to pixels and back: Self-supervision in natural-image reconstruction from fmri. Advances in Neural Information Processing Systems 32 (2019)

- [3] Caron, M., Misra, I., Mairal, J., Goyal, P., Bojanowski, P., Joulin, A.: Unsupervised learning of visual features by contrasting cluster assignments. Advances in neural information processing systems 33, 9912–9924 (2020)

- [4] Chen, P.H.C., Chen, J., Yeshurun, Y., Hasson, U., Haxby, J., Ramadge, P.J.: A reduced-dimension fmri shared response model. Advances in neural information processing systems 28 (2015)

- [5] Chen, Z., Qing, J., Xiang, T., Yue, W.L., Zhou, J.H.: Seeing beyond the brain: Conditional diffusion model with sparse masked modeling for vision decoding. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 22710–22720 (2023)

- [6] Cox, D.D., Savoy, R.L.: Functional magnetic resonance imaging (fmri)“brain reading”: detecting and classifying distributed patterns of fmri activity in human visual cortex. Neuroimage 19(2), 261–270 (2003)

- [7] Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: Imagenet: A large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition. pp. 248–255. Ieee (2009)

- [8] Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

- [9] Dijkstra, N., Mostert, P., Lange, F.P.d., Bosch, S., van Gerven, M.A.: Differential temporal dynamics during visual imagery and perception. Elife 7, e33904 (2018)

- [10] Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., et al.: An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020)

- [11] Du, C., Fu, K., Li, J., He, H.: Decoding visual neural representations by multimodal learning of brain-visual-linguistic features. IEEE Transactions on Pattern Analysis and Machine Intelligence (2023)

- [12] Ganis, G., Thompson, W.L., Kosslyn, S.M.: Brain areas underlying visual mental imagery and visual perception: an fmri study. Cognitive Brain Research 20(2), 226–241 (2004)

- [13] Gu, J., Meng, X., Lu, G., Hou, L., Niu, M., Liang, X., Yao, L., Huang, R., Zhang, W., Jiang, X., et al.: Wukong: A 100 million large-scale chinese cross-modal pre-training benchmark. In: Thirty-sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (2022)

- [14] Gu, Z., Jamison, K., Kuceyeski, A., Sabuncu, M.: Decoding natural image stimuli from fmri data with a surface-based convolutional network. arXiv preprint arXiv:2212.02409 (2022)

- [15] Güçlü, U., van Gerven, M.A.: Increasingly complex representations of natural movies across the dorsal stream are shared between subjects. NeuroImage 145, 329–336 (2017)

- [16] Haxby, J.V., Gobbini, M.I., Furey, M.L., Ishai, A., Schouten, J.L., Pietrini, P.: Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293(5539), 2425–2430 (2001)

- [17] Haynes, J.D., Rees, G.: Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nature neuroscience 8(5), 686–691 (2005)

- [18] He, K., Chen, X., Xie, S., Li, Y., Dollár, P., Girshick, R.: Masked autoencoders are scalable vision learners. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 16000–16009 (2022)

- [19] Hinton, G., Vinyals, O., Dean, J.: Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531 (2015)

- [20] Horikawa, T., Kamitani, Y.: Generic decoding of seen and imagined objects using hierarchical visual features. Nature communications 8(1), 1–15 (2017)

- [21] Kamitani, Y., Tong, F.: Decoding the visual and subjective contents of the human brain. Nature neuroscience 8(5), 679–685 (2005)

- [22] Katsuki, F., Constantinidis, C.: Bottom-up and top-down attention: different processes and overlapping neural systems. The Neuroscientist 20(5), 509–521 (2014)

- [23] Khosla, M., Ngo, G.H., Jamison, K., Kuceyeski, A., Sabuncu, M.R.: A shared neural encoding model for the prediction of subject-specific fmri response. In: Medical Image Computing and Computer Assisted Intervention–MICCAI 2020: 23rd International Conference, Lima, Peru, October 4–8, 2020, Proceedings, Part VII 23. pp. 539–548. Springer (2020)

- [24] Kneeland, R., Ojeda, J., St-Yves, G., Naselaris, T.: Brain-optimized inference improves reconstructions of fmri brain activity. arXiv preprint arXiv:2312.07705 (2023)

- [25] Kosslyn, S.M., Pascual-Leone, A., Felician, O., Camposano, S., Keenan, J.P., Ganis, G., Sukel, K., Alpert, N.: The role of area 17 in visual imagery: convergent evidence from pet and rtms. Science 284(5411), 167–170 (1999)

- [26] Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. Advances in neural information processing systems 25 (2012)

- [27] Lee, S.H., Kravitz, D.J., Baker, C.I.: Disentangling visual imagery and perception of real-world objects. Neuroimage 59(4), 4064–4073 (2012)

- [28] Liang, V.W., Zhang, Y., Kwon, Y., Yeung, S., Zou, J.Y.: Mind the gap: Understanding the modality gap in multi-modal contrastive representation learning. Advances in Neural Information Processing Systems 35, 17612–17625 (2022)

- [29] Lin, B., Ye, F., Zhang, Y., Tsang, I.W.: Reasonable effectiveness of random weighting: A litmus test for multi-task learning. arXiv preprint arXiv:2111.10603 (2021)

- [30] Lin, S., Sprague, T.C., Singh, A.: Mind reader: Reconstructing complex images from brain activities. In: Oh, A.H., Agarwal, A., Belgrave, D., Cho, K. (eds.) Advances in Neural Information Processing Systems (2022), https://openreview.net/forum?id=pHdiaqgh_nf

- [31] Lin, T.Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., Zitnick, C.L.: Microsoft coco: Common objects in context. In: European conference on computer vision. pp. 740–755. Springer (2014)

- [32] Liu, Y., Ma, Y., HaodongJing, Zhou, W., Zhu, G., Zheng, N.: BrainCLIP: Bridging brain and visual-linguistic representation via CLIP for generic natural visual stimulus decoding. In: AAAI-2024 Workshop: Artificial Intelligence for Brain Encoding and Decoding (2023), https://openreview.net/forum?id=yoW381euQK

- [33] Liu, Y., Zhu, G., Zhu, B., Song, Q., Ge, G., Chen, H., Qiao, G., Peng, R., Wu, L., Wang, J.: Taisu: A 166m large-scale high-quality dataset for chinese vision-language pre-training. In: Thirty-sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track (2022)

- [34] Loshchilov, I., Hutter, F.: Decoupled weight decay regularization. In: International Conference on Learning Representations (2019), https://openreview.net/forum?id=Bkg6RiCqY7

- [35] Malkiel, I., Rosenman, G., Wolf, L., Hendler, T.: Self-supervised transformers for fmri representation. In: International Conference on Medical Imaging with Deep Learning. pp. 895–913. PMLR (2022)

- [36] Meng, C., He, Y., Song, Y., Song, J., Wu, J., Zhu, J.Y., Ermon, S.: Sdedit: Guided image synthesis and editing with stochastic differential equations. arXiv preprint arXiv:2108.01073 (2021)

- [37] Miller, E.K.: Straight from the top. Nature 401(6754), 650–651 (1999)

- [38] Mozafari, M., Reddy, L., VanRullen, R.: Reconstructing natural scenes from fmri patterns using bigbigan. In: 2020 international joint conference on neural networks (IJCNN). pp. 1–8. IEEE (2020)

- [39] Nguyen, S., Ng, B., Kaplan, A.D., Ray, P.: Attend and decode: 4d fmri task state decoding using attention models. In: Machine Learning for Health. pp. 267–279. PMLR (2020)

- [40] van den Oord, A., Li, Y., Vinyals, O.: Representation learning with contrastive predictive coding (2019)

- [41] Ozcelik, F., Choksi, B., Mozafari, M., Reddy, L., VanRullen, R.: Reconstruction of perceived images from fmri patterns and semantic brain exploration using instance-conditioned gans. In: 2022 International Joint Conference on Neural Networks (IJCNN). pp. 1–8. IEEE (2022)

- [42] Ozcelik, F., VanRullen, R.: Natural scene reconstruction from fmri signals using generative latent diffusion (2023)

- [43] Qian, X., Wang, Y., Sun, X., Fu, Y., Feng, J.: LEA: Learning latent embedding alignment model for fMRI decoding and encoding (2024), https://openreview.net/forum?id=QdHg1SdDY2

- [44] Qian, X., Wang, Y., Huo, J., Feng, J., Fu, Y.: fmri-pte: A large-scale fmri pretrained transformer encoder for multi-subject brain activity decoding. arXiv preprint arXiv:2311.00342 (2023)

- [45] Radford, A., Kim, J.W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J., et al.: Learning transferable visual models from natural language supervision. In: International Conference on Machine Learning. pp. 8748–8763. PMLR (2021)

- [46] Ramesh, A., Dhariwal, P., Nichol, A., Chu, C., Chen, M.: Hierarchical text-conditional image generation with clip latents. arXiv preprint arXiv:2204.06125 1(2), 3 (2022)

- [47] Ren, Z., Li, J., Xue, X., Li, X., Yang, F., Jiao, Z., Gao, X.: Reconstructing seen image from brain activity by visually-guided cognitive representation and adversarial learning. NeuroImage 228, 117602 (2021)

- [48] Rombach, R., Blattmann, A., Lorenz, D., Esser, P., Ommer, B.: High-resolution image synthesis with latent diffusion models. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 10684–10695 (2022)

- [49] Schonfeld, E., Ebrahimi, S., Sinha, S., Darrell, T., Akata, Z.: Generalized zero-and few-shot learning via aligned variational autoencoders. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8247–8255 (2019)

- [50] Scotti, P.S., Banerjee, A., Goode, J., Shabalin, S., Nguyen, A., Cohen, E., Dempster, A.J., Verlinde, N., Yundler, E., Weisberg, D., Norman, K.A., Abraham, T.M.: Reconstructing the mind’s eye: fmri-to-image with contrastive learning and diffusion priors (2023)

- [51] Shen, G., Dwivedi, K., Majima, K., Horikawa, T., Kamitani, Y.: End-to-end deep image reconstruction from human brain activity. Frontiers in Computational Neuroscience p. 21 (2019)

- [52] Shen, G., Horikawa, T., Majima, K., Kamitani, Y.: Deep image reconstruction from human brain activity. PLoS computational biology 15(1), e1006633 (2019)

- [53] Shi, Y., Paige, B., Torr, P., et al.: Variational mixture-of-experts autoencoders for multi-modal deep generative models. Advances in Neural Information Processing Systems 32 (2019)

- [54] Slotnick, S.D., Thompson, W.L., Kosslyn, S.M.: Visual mental imagery induces retinotopically organized activation of early visual areas. Cerebral cortex 15(10), 1570–1583 (2005)

- [55] Smith, L.N., Topin, N.: Super-convergence: Very fast training of neural networks using large learning rates. In: Artificial intelligence and machine learning for multi-domain operations applications. vol. 11006, pp. 369–386. SPIE (2019)

- [56] Sutter, T.M., Daunhawer, I., Vogt, J.E.: Generalized multimodal elbo (2021)

- [57] Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., Wojna, Z.: Rethinking the inception architecture for computer vision. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 2818–2826 (2016)

- [58] Takagi, Y., Nishimoto, S.: High-resolution image reconstruction with latent diffusion models from human brain activity. bioRxiv pp. 2022–11 (2022)

- [59] Tan, M., Le, Q.: Efficientnet: Rethinking model scaling for convolutional neural networks. In: International conference on machine learning. pp. 6105–6114. PMLR (2019)

- [60] Thirion, B., Duchesnay, E., Hubbard, E., Dubois, J., Poline, J.B., Lebihan, D., Dehaene, S.: Inverse retinotopy: inferring the visual content of images from brain activation patterns. Neuroimage 33(4), 1104–1116 (2006)

- [61] Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing 13(4), 600–612 (2004)

- [62] Wu, M., Goodman, N.: Multimodal generative models for scalable weakly-supervised learning. Advances in Neural Information Processing Systems 31 (2018)

- [63] Xia, W., de Charette, R., Oztireli, C., Xue, J.H.: Dream: Visual decoding from reversing human visual system. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision. pp. 8226–8235 (2024)

- [64] Xu, X., Wang, Z., Zhang, G., Wang, K., Shi, H.: Versatile diffusion: Text, images and variations all in one diffusion model. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 7754–7765 (2023)

- [65] Zeng, B., Li, S., Liu, X., Gao, S., Jiang, X., Tang, X., Hu, Y., Liu, J., Zhang, B.: Controllable mind visual diffusion model. arXiv preprint arXiv:2305.10135 (2023)

- [66] Zhao, W., Bai, L., Rao, Y., Zhou, J., Lu, J.: Unipc: A unified predictor-corrector framework for fast sampling of diffusion models. Advances in Neural Information Processing Systems 36 (2024)

Appendix

0.1 Decoding Mental Imagery from Visual Cortex

Mental imagery, the ability to create mental images without external stimuli, often produces weaker or less vivid images from memory compared to those evoked by sensory input, yet both types rely on the visual system [9]. In line with this, contemporary theories of mental imagery suggest shared mechanisms between human vision and mental imagery, which implies that both perceptual visual processing and internally generated imagery activate extensive, overlapping networks of brain regions.[12, 25, 27, 54].

Building upon prior research and utilizing the imagery experiment data collected by Horikawa and Kamitani[20], we endeavor to visualize mental imagery directly from the visual cortex. Note that these data were gathered while subjects were freely imagining objects cued by text, with their eyes closed. Thus, there are no ground-truth images. We display our results for subject 3 in Fig. A1, where we provide each category name with a reference image(on the left) and a generated image(on the right). We also provide the results for the 50-way classification task in Tab. A1 to provide a benchmark for future research. All results are derived using the same STTM-H models as detailed in the main body of this paper and trained with the GOD training set.

As we can see, the decoders trained on brain activity elicited by visual stimuli demonstrate the capacity to generalize to decode imagery-induced brain activity. However, their performance is observed to be lower in accuracy when compared to decoding from stimulus-induced brain activity. This suggests that while there is some transferability between decoding from stimulus-induced brain activity and imagery-induced brain activity, the latter presents unique challenges that impact decoding accuracy.

| Methods | Modality | Prompt | top-1 | top-5 |

|---|---|---|---|---|

| STTM-H(Sub1) | V&T | Text | 6. | 16.0 |

| STTM-H(Sub2) | V&T | Text | 10.0 | 30.0 |

| STTM-H(Sub3) | V&T | Text | 12.0 | 36.0 |

| STTM-H(Sub4) | V&T | Text | 4.0 | 26.0 |

| STTM-H(Sub5) | V&T | Text | 2.0 | 18.0 |

0.2 Try Different Designs for Pixel-Wise Reconstruction

In our paper’s main body, we conducted a comparative analysis of our low-level pipeline’s performance under two conditions: with and without high-level perception guidance. Here, we present another design for the low-level pipeline, wherein it shares the same adapters with the pre-trained high-level pipeline and is trained without high-level perception guidance. The results are depicted in Tab. A2. It is evident from the results that features from the high-level adapters yield poorer performance on two pixel-wise metrics (i.e., PixCorr and SSIM), which means that they contain less pixel-wise information. Our final design, which employs low-level adapters with high-level guidance, effectively combines the advantages of the other two designs.

| Low-level feature | Low- Level | High-Level | ||||||

|---|---|---|---|---|---|---|---|---|

| PixCorr | SSIM | Alex(2) | Alex(5) | Incep | CLIP | Eff | SwAV | |

| Low-level adapter(w/o guidance) | .372 | .488 | 79.6% | 79.6% | 63.6% | 63.0% | .985 | .643 |

| High-level adapter(w/o guidance) | .285 | .439 | 91.6% | 87.7% | 68.6% | 61.6% | .946 | .735 |

| Low-level adapter(with guidance) | .383 | .488 | 83.3% | 86.0% | 68.2% | 67.1% | .968 | .647 |

0.3 Ablation Study on Img2Img Strength

The Img2Img strength controls how much information of the initial image can be maintained in the translated image. We test with different Img2Img strength values for image reconstruction on NSD. The results are reported in Tab. A3. As the img2img strength increases, the results for low-level metrics will increase but the results for the high-level metrics will first increase and then decrease. The final values used in the main body of this paper are 0.3 for NSD and 0.2 for GOD.

| Img2Img Strength | Low- Level | High-Level | ||||||

|---|---|---|---|---|---|---|---|---|

| PixCorr | SSIM | Alex(2) | Alex(5) | Incep | CLIP | Eff | SwAV | |

| 0.0(only high-level) | .209 | .276 | 91.5% | 97.8% | 95.4% | 95.6% | .612 | .344 |

| 0.15 | .316 | .309 | 95.3% | 98.5% | 95.7% | 95.7% | .608 | .338 |

| 0.2 | .322 | .315 | 95.4% | 98.4% | 95.7% | 95.9% | .608 | .337 |

| 0.25 | .327 | .324 | 95.4% | 98.5% | 95.7% | 95.8% | .608 | .338 |

| .333 | .334 | 95.7% | 98.5% | 95.8% | 95.7% | .611 | .338 | |

| 1.0(only low-level) | .383 | .488 | 83.3% | 86.0% | 68.2% | 67.1% | .968 | .647 |

0.4 Subject-Specific Results On NSD

In Tab. A4, we provide the image reconstruction results and retrieval results for each subject and each pipeline on the NSD dataset.

| Methods | Low- Level | High-Level | Retrieval tasks | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| PixCorr | SSIM | Alex(2) | Alex(5) | Incep | CLIP | Eff | SwAV | Image@1 | Text@5 | Brain@1 | |

| STTM-H(Sub1) | .221 | .278 | 92.6% | 98.3% | 96.0% | 96.3% | .599 | .332 | 97.0% | 43.9% | 98.4% |

| STTM-H(Sub2) | .211 | .275 | 92.0% | 97.9% | 95.2% | 95.3% | .619 | .346 | 96.5% | 38.9% | 97.8% |

| STTM-H(Sub5) | .197 | .275 | 91.5% | 98.0% | 96.2% | 96.4% | .596 | .337 | 89.5% | 46.3% | 92.4% |

| STTM-H(Sub7) | .205 | .274 | 89.7% | 96.6% | 94.1% | 94.5% | .635 | .360 | 88.1% | 36.0% | 90.9% |

| STTM-L(Sub1) | .445 | .498 | 87.4% | 88.6% | 67.8% | 68.2% | .964 | .644 | - | - | - |

| STTM-L(Sub2) | .396 | .490 | 85.5% | 87.9% | 68.1% | 67.7% | .964 | .645 | - | - | - |

| STTM-L(Sub5) | .351 | .485 | 80.6% | 84.5% | 69.4% | 67.2% | .969 | .649 | - | - | - |

| STTM-L(Sub7) | .338 | .481 | 79.8% | 83.0% | 67.2% | 65.4% | .974 | .653 | - | - | - |

| STTM(Sub1) | .390 | .343 | 97.5% | 99.1% | 96.5% | 96.5% | .595 | .325 | 97.0% | 43.9% | 98.4% |

| STTM(Sub2) | .343 | .337 | 96.4% | 98.8% | 96.0% | 95.5% | .619 | .340 | 96.5% | 38.9% | 97.8% |

| STTM(Sub5) | .305 | .330 | 95.0% | 98.4% | 96.9% | 96.6% | .594 | .331 | 89.5% | 46.3% | 92.4% |

| STTM(Sub7) | .293 | .326 | 93.9% | 97.7% | 93.9% | 94.3% | .635 | .356 | 88.1% | 36.0% | 90.9% |

0.5 Subject-Specific Results On GOD

Tab. A5 shows the individual results for the zero-shot classification task on the test set of the GOD dataset

| Methods | Modality | Prompt | Average | |

|---|---|---|---|---|

| top-1 | top-5 | |||

| STTM-H(Sub1) | V&T | Text | 18.0 | 60.0 |

| STTM-H(Sub2) | V&T | Text | 20.0 | 58.0 |

| STTM-H(Sub3) | V&T | Text | 26.0 | 68.0 |

| STTM-H(Sub4) | V&T | Text | 28.0 | 62.0 |

| STTM-H(Sub5) | V&T | Text | 24.0 | 62.0 |

0.6 Contrastive Losses in This Work

0.6.1 BiMixCo Loss

In BiMixCo[50] loss, voxels are mixed using a factor sampled from the Beta distribution with .

| (4) |

where and are the -th fMRI sample and image respectively. is an arbitrary mixing index for the -th datapoint and represents the combined MLP and projector. , and are L2-normalized. The BiMixCo is defined as:

| (5) | |||

where is a temperature hyperparameter, and N is the batch size.

0.6.2 SoftCLIP Loss

With the same notation, SoftCLIP[50] loss is defined as:

| (6) |

0.7 Reconstruction Evaluations Metrics

PixCorr:

The pixel-level correlation of reconstructed and ground-truth images.

SSIM:

The structural similarity index metric[61].

Alex(2) & Alex(5):

The 2-way comparisons of the second and fifth layers of AlexNet[26], respectively.

Inception("Incep"):

The 2-way comparison of the last pooling layer of Inception-V3[57].

CLIP:

The 2-way comparison of the output layer of the CLIP-Vision[45] model.

EffNet-B1(“Eff”):

Distance metrics gathered from EfficientNet-B1[59].

SwAV:

Distance metric gathered from SwAV-ResNet50[3] model.

Two-way identification followed the methods of Ozcelik and VanRullen[42] and Scotti et al.[50]. We computed Pearson correlations between embeddings for the ground truth image and the reconstructed image, as well as between the ground truth image and another reconstruction from the test set. Correct identification occurred when the correlation with the ground truth image was higher than with the other reconstruction. Performance for each test sample was averaged across all possible pairwise comparisons using the remaining 981 reconstructions to prevent bias from random sample selection.

0.8 More Reconstruction Examples

In Fig. A2, we qualitatively compare our reconstructions against those of previous state-of-the-art works. From the intuitive point of view, our method gets similar or even better results compared to CMVDM[65] and Mind-Vis[5].

In Fig. A3, we give more reconstruction samples for subject 3 of the GOD dataset.

In Fig. A4, we compare our low-level reconstructions with those of MindEye[50]. Our reconstructions contain more details and are more faithful to the original color distribution.

In Fig. A5, more reconstructions for subject 1 from the NSD dataset are visualized.

0.9 Prompts for classification

The text prompts used in this work are the same as BrainCLIP[32]:

"a photo of a {}.",

"a blurry photo of a {}.",

"a black and white photo of a {}.",

"a low contrast photo of a {}.",

"a high contrast photo of a {}.",

"a bad photo of a {}.",

"a good photo of a {}.",

"a photo of a small {}.",

"a photo of a big {}.",

"a photo of the {}.",

"a blurry photo of the {}.",

"a black and white photo of the {}.",

"a low contrast photo of the {}.",

"a high contrast photo of the {}.",

"a bad photo of the {}.",

"a good photo of the {}.",

"a photo of the small {}.",

"a photo of the big {}."

The “{}” is replaced by a specific class name. Their embeddings are averaged to get the classification weights.

0.10 Potential Social Impact

Research in AI for brain decoding holds immense potential for various social impacts. One significant area of impact lies in healthcare, where advancements could revolutionize diagnosis and treatment for neurological disorders, such as Alzheimer’s, Parkinson’s, and epilepsy. Accurate decoding of brain signals could lead to early detection of cognitive decline, enabling timely interventions and personalized treatment plans. Moreover, the development of brain-computer interfaces (BCIs) could greatly enhance communication and mobility for individuals with severe disabilities, empowering them to interact with the world more independently. Additionally, ethical considerations surrounding privacy, consent, and data security must be carefully addressed to ensure the responsible and equitable deployment of such technology. In this work, all the used data are obtained from public projects and used responsibly.