Security and Privacy on Generative Data in AIGC: A Survey

Abstract.

The advent of artificial intelligence-generated content (AIGC) represents a pivotal moment in the evolution of information technology. With AIGC, it can be effortless to generate high-quality data that is challenging for the public to distinguish. Nevertheless, the proliferation of generative data across cyberspace brings security and privacy issues, including privacy leakages of individuals and media forgery for fraudulent purposes. Consequently, both academia and industry begin to emphasize the trustworthiness of generative data, successively providing a series of countermeasures for security and privacy. In this survey, we systematically review the security and privacy on generative data in AIGC, particularly for the first time analyzing them from the perspective of information security properties. Specifically, we reveal the successful experiences of state-of-the-art countermeasures in terms of the foundational properties of privacy, controllability, authenticity, and compliance, respectively. Finally, we show some representative benchmarks, present a statistical analysis, and summarize the potential exploration directions from each of theses properties.

1. Introduction

1.1. Background

Artificial intelligence-generated content (AIGC) emerges as a novel generation paradigm for the production, manipulation, and modification of data. It utilizes advanced artificial intelligence (AI) technologies to automatically generate high-quality data at a rapid pace, including images, videos, text, audio, and graphics. With the powerful generative ability, AIGC can save time and unleash creativity, which are often challenging to achieve with professionally generated content (PGC) and user-generated content (UGC). Such progress in data creation can drive the emergence of innovative industries, particularly Metaverse (Wang et al., 2022b), where digital and physical worlds converge.

Early AIGC is limited by the algorithmic efficiency, hardware performance, and data scale, hindering the ability to fulfill optimal creation tasks. With the iterative updates of generative structures, notably generative adversarial networks (GANs) (Goodfellow et al., 2020), AIGC has witnessed significant breakthroughs, generating realistic data that is often indistinguishable by humans from real data.

In the generation of visual content, NVIDIA released StyleGAN (Karras et al., 2019) in 2018, which enables the controllable generation of high-resolution images and has undergone several upgrades. The subsequent year, DeepMind released DVD-GAN (Clark et al., 2019), which is designed for continuous video generation and exhibits great efficacy in complex data domains. Recently, diffusion models (DMs) (Ho et al., 2020) show more refined and novel image generation via the incremental noise addition. Guided by language models, DMs can improve the semantic coherence between input prompts and generated images. Excellent diffusion-based products, e.g., Stable Diffusion111https://stability.ai/stablediffusion, Midjourney222https://www.midjourney.com/, and Make-A-Video333https://makeavideo.studio/, are capable of generating visually realistic images or videos that meet the requirements of diverse textual prompts.

| Ref. | Privacy | Controllability | Authenticity | Compliance | |||||

| Privacy | AIGC for | Access | Traceability | Generative | Generative | Non-toxicity | Factuality | ||

| in AIGC | Privacy | Control | Detection | Attribution | |||||

| (Wang et al., 2023c) | ✗ | ✗ | ✗ | ✓ | ✗ | ✗ | ✗ | ✗ | |

| (Lyu et al., 2023a) | ✓ | ✗ | ✗ | ✓ | ✗ | ✗ | ✓ | ✓ | |

| (Chen et al., 2023b) | ✓ | ✗ | ✗ | ✓ | ✗ | ✓ | ✓ | ✓ | |

| Ours | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |

In the generation of language content, more attention is focused on ChatGPT, which reached 1.76 billion visits in May 2023. Trained on a large-scale text dataset, ChatGPT exhibits impressive performance in various contexts, including human-computer interaction and dialogues. For instance, researchers released LeanDojo (Yang et al., 2024a), an open-source mathematical proof platform based on ChatGPT, providing toolkits, benchmarks, and models to tackle complex proof of formulas in an interactive environment. The integration of ChatGPT into the Bing search engine enhances search experiences, enabling users to effortlessly access comprehensive information. This powerful multi-purpose adaptability further exemplifies the possibilities for humanity to achieve artificial general intelligence (AGI).

Overall, compared to the PGC and UGC, AIGC demonstrates more advantages in data creation. AIGC possesses the ability to swiftly produce high-quality content while catering to personalized demands from users. As AI technology continues to advancements, the generative capability of AIGC is growing rapidly, promoting increased social productivity and economic value.

1.2. Motivation

A large amount of generative data floods cyberspace, further enriching the diversity and abundance of online content. These generative data encompass multimodal information, which can be observed in various domains, e.g., news reporting, computer games, and social sharing. According to the Gartner’s report, AIGC will be anticipated to account for over 10% of all data creation in 2025. However, the proliferation of generative data also poses security and privacy issues.

Firstly, generative data can expose individual privacy content by replicating training data. Generative models rely on large-scale data, which includes private content, e.g., faces, addresses, and emails. Existing works have demonstrated the memorization capabilities of large generative models (Carlini et al., 2021, 2023a), leading to the potential replication of all or parts of the training data. This means that the generative data may also contain sensitive content which present in the training data. With specific prompts, GPT-2 can output personal information, including the name, the address, and the e-mail address (Carlini et al., 2021). An alarming study (Carlini et al., 2023a) revealed that Google’s Imagen can be prompted to output real-person photos, posing a significant threat to individual privacy. Therefore, it is necessary to hinder the generation of data containing privacy content.

Secondly, generative data used for malicious purposes often involves false information, which can deceive the public, posing potential threats to both society and individuals. Recently, a false tweet about an explosion near the Pentagon went viral on social media, fooling many authoritative media sources and triggering fluctuations in the US stock market (Pen, 2023). Moreover, the mature DeepFake technologies allow for the creation of convincing fake personal videos, which can be used to maliciously fabricate celebrity events (Korshunov and Marcel, 2018). The difficulty in discerning authenticity exposes the public to believing such content, resulting in severe damage to the reputations of celebrities. Thus, it is important to provide effective technology to confirm the authenticity of generative data. Meanwhile, generative data is required to have the controllability so that such potential threats can be proactively prevented.

Thirdly, regulators around the world have further requirements for the compliance of generative data due to the critical implications of AIGC. Data protection regulatory authorities in Italy, Germany, France, Spain, and other countries have expressed concerns and initiated investigations into AIGC. In particular, China has taken a significant step by introducing the interim regulation on the management of generative artificial intelligence (AI) services (int, 2023). This regulation encourages innovation in AIGC while mandating that generative data is non-toxic and factual. To adhere to the relevant regulations, it becomes crucial to ensure the compliance of generative data.

1.3. Comparisons with Existing Surveys

Several works (Wang et al., 2023c; Lyu et al., 2023a; Chen et al., 2023b) have investigated the security and privacy in AIGC from different perspectives.

Wang et al. (Wang et al., 2023c) presents an in-depth survey of AIGC working principles, and roundly explored the taxonomy of security and privacy threats to AIGC. Meanwhile, they extensively reviewed solutions for intellectual property (IP) protection for AIGC models and generative data, with a focus on watermarking. Yet, they fail to provide countermeasures for other threats such as the utilization of non-compliant data.

Chen et al. (Lyu et al., 2023a) discussed three main concerns for promoting responsible AIGC, including 1) privacy, 2) bias, toxicity, misinformation, and 3) intellectual property. They summarized the issues and listed solutions related to existing AIGC products, e.g., ChatGPT, Midjourney, and Imagen. Nevertheless, they overlooked the importance of considering the authenticity of generative data in responsible AIGC.

Chen et al. (Chen et al., 2023b) summarized the AIGC technology and analyzed the security and privacy challenges in AIGC. Moreover, they explored the potential countermeasures with advanced technologies, involving privacy computing, blockchain, and beyond. However, they did not pay attention to the detection and access control of generative data.

The differences between our work and previous works are:

- •

-

•

Previous works presented the corresponding techniques in terms of specific issues of privacy and security, whereas the issues cannot be enumerated in full. On the other hand, we discuss security and privacy from the fundamental properties of information security, which can cover the almost all of the issues.

-

•

We supplement security issues that are not discussed in previous works, including access control and generative detection. In addition, we explore the use of generative data to power the privacy protection of real data. Table 1 shows the comparisons of our work with existing surveys.

In brief, the main contributions of our work are as follows:

-

•

We investigate the security and privacy issues on generative data in AIGC and comprehensively survey the corresponding state-of-the-art countermeasures.

-

•

We discuss security and privacy from a new perspective, i.e., the fundamental properties of information security, including privacy, controllability, authenticity, and compliance.

-

•

We point out the valuable future directions in security and privacy, toward building trustworthy generative data.

The rest of the paper is organized as follows. Section 2 reviews the basic AIGC process and categorizes the security and privacy on generative data in AIGC. In Sections 3 to 6, we discuss the issues and review the corresponding solutions from the perspectives of privacy, controllability, authenticity, and compliance, respectively. We present some benchmarks and suggested future directions in Section 7 and Section 8. Finally, we summarize our work in Section 9.

2. Overview

2.1. Process of AIGC

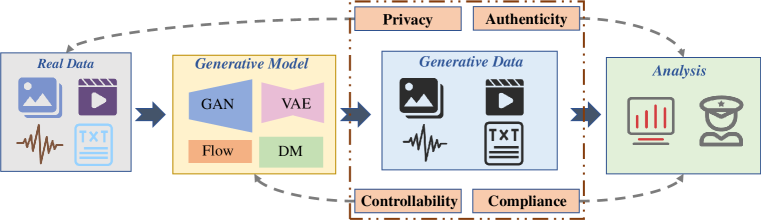

As illustrated in Fig. 1, we first discuss the AIGC process as follows:

2.1.1. Real Data for Training

The data used for training impacts the features and patterns learned by AIGC models. Therefore, high-quality data forms the cornerstone of AIGC technology. Data collection typically involves various open-source repositories, including public databases, social media platforms, and online forums. These diverse sources provide AIGC training with a large-scale and diverse dataset.

After collection, data filtering is applied to ensure the data quality, which involves removing irrelevant data and balancing the dataset for unbiased training. Additionally, data preprocessing, data augmentation, and data privacy protection steps can be undertaken based on different tasks to further enhance the quality and security of the training data.

2.1.2. Generative Model in Training

The obtained data is used to train generative models, which are often performed by a centralized server with powerful computational capabilities. During training, generative models learn patterns and features in the data to generate results with a similar distribution to real data. Popular generative model architectures include generative adversarial networks (GANs), variational autoencoders (VAEs), flow-based models (Flows), and diffusion models (DMs), each with its strengths and weaknesses. The choice of models depends on specific requirements of tasks, available data, and computational resources.

It is also important to note that training generative models requires substantial computational resources. On this basis, model fine-tuning is the process of adapting the pre-trained large model to a new task or domain without retraining. It only adjusts model parameters by training appropriate amount of additional data.

2.1.3. Generative Data

After generative models are trained, they can be utilized to produce data. During this stage, users typically provide an input condition, e.g., a question or a piece of text. Then the model starts outputting data based on the input condition.

In the generation of language content, AIGC exhibits the capability to outpace human authors in rapidly generating high-quality text, e.g., codes and articles. Additionally, it can engage in conversational interactions akin to humans, assisting users with various tasks and inquiries. The efficiency of AIGC in content creation and human-like interactions revolutionize how information is produced and communicated.

In the generation of visual content, AIGC harnesses the powerful generative capabilities of models like DMs, enabling the generation of new images with realistic quality. Moreover, AIGC holds potential for video generation, as they can simultaneously process multiple video frames automatically.

2.1.4. Analysis for Generative Data

After data generation, further analysis of the generative data is necessary to ensure the quality of generative data.

Generative data needs to undergo a quality assessment to check its accuracy, consistency, and integrality. If the generative data lacks in certain aspects, it requires model adjustments to improve the quality of generative data.

Additionally, analyzing the risks associated with generative data can identify potential hazards. For instance, it is required to analyze whether there is discriminatory content, false information, or misleading content. By promptly detecting and addressing these issues, the negative impact of generative data can be minimized.

2.2. Security and Privacy on Generative Data

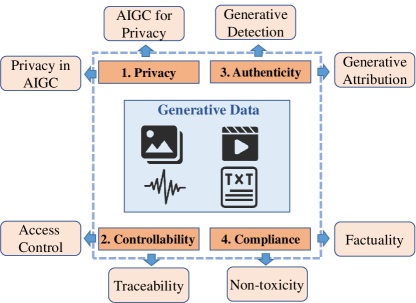

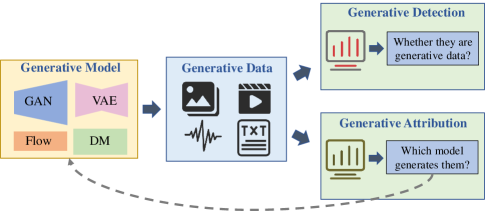

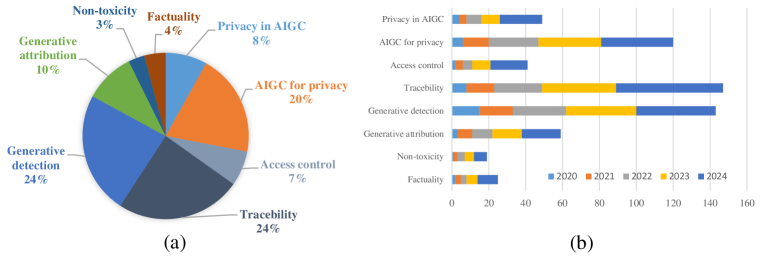

For generative data, there are corresponding security and privacy requirements at different stages. As shown in Fig. 1, we categorize these requirements according to the fundamental properties of information security, including privacy, controllability, authenticity, and compliance. Additionally, Fig. 2 shows the further subclassification.

2.2.1. Privacy

Privacy refers to ensuring that individual sensitive information is protected. Generative data mimics the distribution of real data, which brings negative and positive impacts on the privacy of real data. Specifically, the following two impacts exist:

-

•

Privacy in AIGC: Generative data may mimic the distribution of sensitive content, which makes it possible to replicate sensitive training data under specific conditions, thus posing a potential privacy threat.

-

•

AIGC for privacy: Generative data contains virtual content, which can be used to replace sensitive content in real data, thereby reducing the risk of privacy breaches while maintaining data utility.

2.2.2. Controllability

Controllability refers to ensuring effective management and control access of information to restrict unauthorized actions. Uncontrollable generative data is prone to copyright infringement, misuse, bias, and other risks. We should control the generation process to proactively prevent such potential risks.

-

•

Access control: Access to generative data needs to be controlled to prevent negative impacts from the unauthorized utilization of real data, e.g., malicious manipulation and copyright infringement.

-

•

Traceability: Generative data needs to support the tracking of the generation process and subsequent dissemination to monitor any behavior involving security for accountability.

2.2.3. Authenticity

Authenticity refers to maintaining the integrity and truthfulness of data, ensuring that information is accurate, unaltered, and from credible sources. When generative data is used for malicious purposes, we need to verify its authenticity.

-

•

Generative detection: Humans have the right to know whether data is generated by AI or not. Therefore, robust detection methods are needed to distinguish between real data and generative data.

-

•

Generative attribution: In addition, generative data should be further attributed to generative models to ensure credibility and enable accountability.

2.2.4. Compliance

Compliance refers to adhering to relevant laws, regulations, and industry standards, ensuring that information security practices meet legal requirements and industry best practices. We mainly talk about two important requirements as follows:

-

•

Non-toxicity: Generative data is prohibited from containing toxic content, e.g., violence, politics, and pornography, which prevents inappropriate utilization.

-

•

Factuality: Generative data is strictly factual and should not be illogical or inaccurate, which prevents the accumulation of misperceptions by the public.

3. Privacy on Generative Data

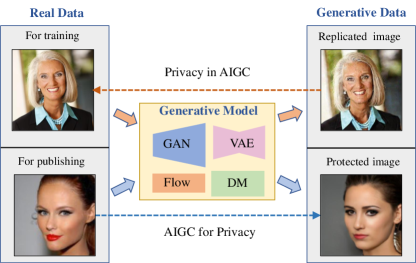

For generative data, we talked about its negative and positive impacts on the privacy of real data. 1) Negative: A large amount of real data is used for the training of AIGC models, which may memorize the training data. In this way, the generative data would replicate the sensitive data under certain conditions, thus causing a privacy breach of real data, which is called privacy in AIGC. For instance, in the top part of Fig. 3, it is easy to generate the face image of Ann Graham Lotz with the prompt “Ann Graham Lotz”, which is almost identical to the training sample. 2) Positive: Real data published by users contains sensitive content, and AIGC can be used to protect privacy by replacing sensitive content with virtual content, which is called AIGC for privacy. In the bottom part of Fig. 3, the generative image has a different identity from the real image, blocking unauthorized identification.

3.1. Privacy for AIGC

3.1.1. Threats to Privacy

AIGC service providers proactively collect individual data on various platforms to construct giant datasets for enhancing the quality of generative data. However, the training data contains sensitive information about individuals, which is highly susceptible to privacy leakage. A study shows that the larger the amount of training data, the higher the privacy risk will result (Plant et al., 2022). Specifically, in the training, private data can easily be memorized in model weights. In the interaction with users, generative data may replicate the training data, which poses a potential privacy threat. Such data replication is defined as an under-explored failure mode of overfitting, which exists in various generative models (Meehan et al., 2020).

In language generative models, Carlini et al. (Carlini et al., 2021) extracted training data by querying large language models (LLMs). The experiments employ GPT-2 as a demonstration to extract sensitive individual information, e.g., name, email, and phone number. Tirumala et al. (Tirumala et al., 2022) empirically studied the memorization dynamics over language model training, and demonstrated that larger models memorize faster. Nicholas et al. (Carlini et al., 2023b) described three log-linear relationships to quantify the extent to which LLMs memorize training data under different model scales, times of sample replications, and number of tokens. Their experiments indicated that memory in LLMs is more prevalent than previously believed and that memorization scales log-linearly with model size.

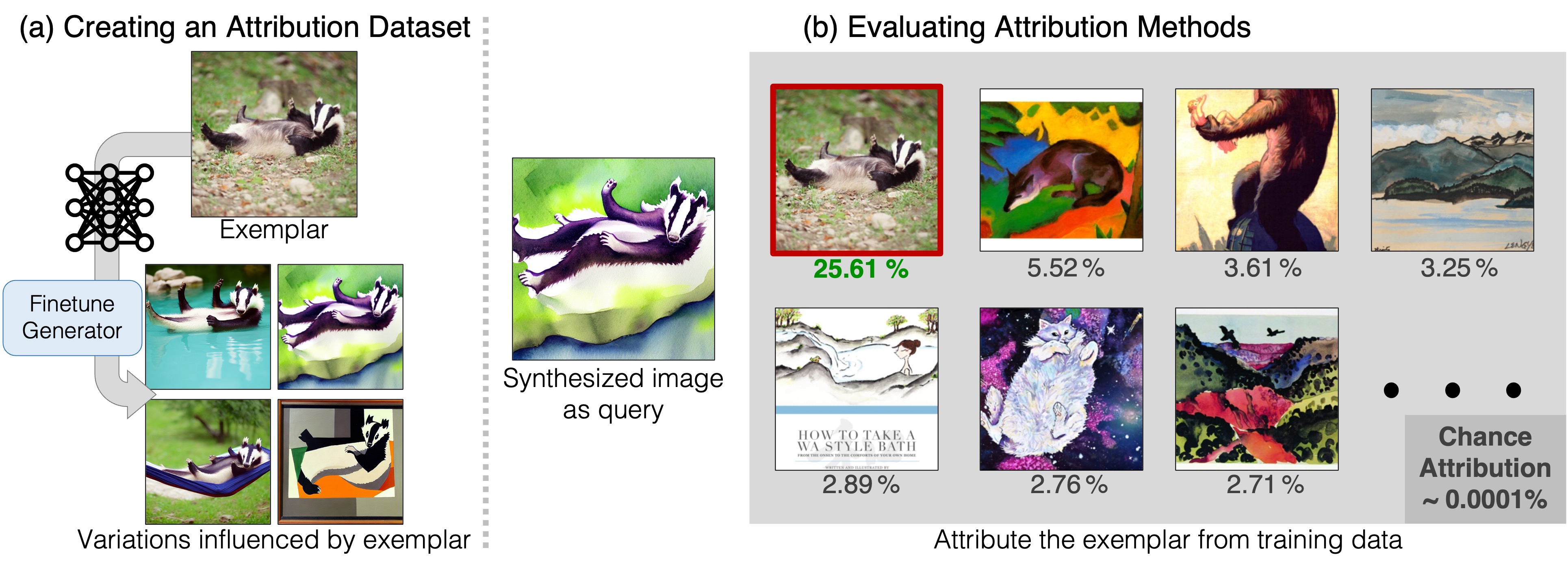

In vision generative models, the general view is that generative adversarial networks (GANs) tend not to memorize training data under normal training settings (Webster et al., 2019). However, Feng et al. (Feng et al., 2021) showed experimentally that GANs replication percentage decays exponentially with respect to dataset size and complexity. Stronger memory exists in diffusion models (Webster, 2023; Carlini et al., 2023a; Somepalli et al., 2023). Carlini et al. (Carlini et al., 2023a) illustrated the ability of diffusion models to memorize individual images from the training data and can reproduce them at generation time. Unlike DMs that accept training data as direct input, generators for GANs are trained using only indirect information (i.e., gradients from the discriminator) about the training data. Therefore, GANs are more private. Somepalli et al. (Somepalli et al., 2023) proposed image retrieval frameworks to demonstrate that the generated images by diffusion models are simple combinations of the foreground and background objects of the training dataset. As shown in Fig. 4, diffusion models just create semantically rather than pixel-wise objects identical to original images.

3.1.2. Countermeasures to Privacy

Researchers have suggested some available solutions to mitigate data replication for privacy protection. Bai et al. (Bai et al., 2022) proposed memorization rejection in the training loss, which abandons generative data that are near-duplicates of training data.

Deduplicating training datasets is also a possible option. OpenAI has verified its effectiveness using the distributed nearest neighbor search on Dall-E2. Nikhil et al. (Kandpal et al., 2022) studied a variety of LLMs and showed that the likelihood of a duplicated text sequence appearing is correlated with the number of occurrences of that sequence in the training data. In addition, they also verified that replicated text sequences are greatly reduced when duplicates are eliminated.

Differential privacy (Abadi et al., 2016) is a recommended solution, which introduces noises during training to ensure the generative data is differentially private with respect to the training data. RDP-GAN (Ma et al., 2023b) adds differential noises on the value of the loss function of a discriminator during training, which achieves a differentially private GAN. DPDMs (Dockhorn et al., 2022) enhances privacy via differentially private stochastic gradient descent, which also allows the generative data to retain a high level of availability to support multiple vision tasks. Compared to DPDMs, Ghalebikesabi et al. (Ghalebikesabi et al., 2023) enabled to accurately train larger models and to achieve a high utility on more challenging datasets such as CIFAR-10. PrivImage (Li et al., 2024a) was proposed based on that the distribution of public data used should be similar to the distribution of sensitive data. Therefore, PrivImage elaborately selects similar distribution pre-training data, facilitating the efficient creation of DP datasets with high fidelity and utility. Unlike existing work that only considers DM as a regular deep model, dp-promise (Wang et al., 2024a) was the first work to implement (approximate) DP using DM noise, It employs a two-stage DM training process to reduce the overall noise injection, effectively achieving the privacy-utility tradeoff.

Detecting replicated content is a remedial solution, which detects whether the generative data is in training data and then determines whether to use it. Stability AI provides a tool (Bea, 2022) to support the identification of the replicated images. Somepalli et al. (Somepalli et al., 2023) developed image similarity metrics that are diverse on self-supervised learning and based on an image retrieval framework to search for copying behavior.

Machine unlearning (Bourtoule et al., 2021) can help generative models forget the private training data, which avoids the effort of re-training the model. Kumari et. al (Kumari et al., 2023) fine-tuned diffusion models by modifying the sensitive training data so that the models forget already memorized images. Forget-Me-Not (Zhang et al., 2024c) is adapted as a lightweight model patch for Stable Diffusion. It effectively removes the concept of containing a specific identity and avoids generating any face photo with the identity.

3.2. AIGC for Privacy

Sensitive content exists on many types of data published by different entities, which is required for a privacy-preserving treatment. Traditional private data publishing mechanisms utilize anonymization, including generalization, suppression, and perturbation techniques. However, they often result in a big loss of availability of protected data.

Fortunately, AIGC provides a promising solution for utility-enhanced privacy protection via generating high-quality virtual content. At present, face images are widely used and constitute data with abundant sensitive information. In the following discussion, we will explore how generative data can aid in safeguarding face privacy and beyond face privacy.

3.2.1. Face Privacy

To protect face privacy, many works (Wang et al., 2023d; Hukkelås and Lindseth, 2023; Wen et al., 2023; Yuan et al., 2022; Kim et al., 2023; Liu et al., 2023a; Lyu et al., 2023b; Wang et al., 2024b; Wen et al., 2022) generate a surrogate virtual face with new identity. DeepFace2 (Hukkelås and Lindseth, 2023) generates realistic anonymized images by conditioning GANs to fill in images that obscure facial regions. In order to preserve attributes, Gong et al. (Gong et al., 2020) replaced identity independently by decoupling identity and attribute features, which achieves a trade-off between identity privacy and data utility. To facilitate privacy-preserving face recognition, IVFG (Yuan et al., 2022) generates identifiable virtual faces bound with new identities in the latent space of StyleGAN. In addition, publicly available face datasets for training face recognizers often violate the privacy of real people. For this, DCFace (Kim et al., 2023) creates a generative dataset with virtual faces via diffusion models.

Other works are devoted to generating adversarial faces to evade unauthorized identification. DiffProtect (Liu et al., 2023a) adopts a diffusion autoencoder to generate semantically meaningful perturbations, which can promote the protected face identified as another person. 3DAM-GAN (Lyu et al., 2023b) generates natural adversarial faces by makeup transfer, improving the quality and transferability of generative makeup for identity concealment.

| Ref. | Year | Remarks | Limitations | |

|---|---|---|---|---|

| Privacy in AIGC | (Bai et al., 2022) | 2022 | Maintained generation quality | Weak generalizability and scalability |

| (Kandpal et al., 2022) | 2022 | Enhanced security, easy operation | Lack of privacy guarantees | |

| (Ma et al., 2023b) | 2023 | Without norm clipping, strict proof | Visual semantic disclosure | |

| (Wang et al., 2024a) | 2024 | Provable privacy applicable to DMs | Poor visual quality, high consumption | |

| (Li et al., 2024a) | 2024 | Provable privacy, low consumption | Poor visual quality, huge training costs | |

| (Somepalli et al., 2023) | 2023 | Free train, easily understanding | Difficulties in defining similar data | |

| (Zhang et al., 2024c) | 2024 | Efficient, definition-free | Failure in abstract concept | |

| Privacy in AIGC | (Hukkelås and Lindseth, 2023) | 2023 | High-quality, diverse, and editable | Out-of-context results, reduced utility |

| (Yuan et al., 2022) | 2023 | Identifiable, irreversible | Unpreserved facial attributes | |

| (Kim et al., 2023) | 2023 | Visual high quality, additional metric | Time-consuming, privacy unprovable | |

| (Lyu et al., 2023b) | 2023 | Imperceptible, transferable | Not applicable to males | |

| (Cao and Li, 2021) | 2021 | Multi-model combination, high utility | Less stringent privacy proofs | |

| (Liu et al., 2022) | 2022 | Aligning with user preferences | Reduced utility, limited scalability | |

| (Thambawita et al., 2022) | 2022 | Small training data, comparable quality | Lack of trustworthiness |

3.2.2. Beyond Face Privacy

Beyond face, many types of data have sensitive information that needs to be protected (Lu et al., 2023d; Zhang et al., 2022). TrajGen (Cao and Li, 2021) uses GAN and Seq2Seq to simulate the real data to generate mobility data, which can be shared without privacy leakages, thus contributing to the open source process of mobility datasets. In the recommendation systems, UPC-SDG (Liu et al., 2022) can generate virtual interaction data for users according to their privacy preferences, providing privacy guarantees while maximizing the data utility. SinGAN-Seg (Thambawita et al., 2022) uses a single training image to generate synthetic medical images with corresponding masks, which can effectively protect patient privacy when performing medical segmentation. PPUP-GAN (Yao et al., 2023) generates new content of the privacy-related background while maintaining the content of the region of interest in aerial photography, which can protect the privacy of bystanders and maintain the utility of aerial image analysis. Hindistan et al. (Hindistan and Yetkin, 2023) designed a hybrid approach to protect industrial Internet of Things (IIoT) data based on GANs and differential privacy, which causes minimal accuracy loss without extra high computational costs to data processing.

3.3. Summary

In table 2, we summarize the solutions for privacy protection on generative data. In the case of privacy in AIGC, differential privacy provides a provable guarantee for generative data, but may make the distribution of generative data different from the real data, reducing data utility. Replication detection and data deduplication avoid any manipulation of models but rely on appropriate image similarity metrics. Machine unlearning promotes that generative data no longer contains sensitive content via model fine-tuning. In particular, as the size of the generative model increases, this fine-tuning technique will receive more attention. Current machine unlearning schemes for generative models are still relatively underdeveloped and will be a promising exploration direction. In addition, adversarial attacks can also have an impact on the privacy of generative data. On the one hand, adversarial attacks can attack generative models to prevent them from learning about privacy content in real data, thus securing generative data from replicating privacy content at the source. On the other hand, adversarial attacks can also attack privacy-preserving methods (e.g., machine unlearning) to prevent the removal of sensitive information, which exacerbates the privacy challenge on generative data.

In the case of AIGC for privacy, the realism, diversity, and controllability of AIGC provide important directions for the privacy protection of real data, especially for unstructured data such as images. Due to the mature research of GANs, a plethora of existing works utilize GANs to generate virtual content for privacy protection. Compared to GANs, diffusion models exhibit stronger generative capabilities. Therefore, as its controllability improves, it will shine even more in data privacy protection. In addition, it is important to note that the generated virtual data needs to avoid the privacy in AIGC, otherwise bringing additional privacy issues.

4. Controllability on Generative Data

Uncontrolled generative data may give rise to potential issues, e.g., copyright infringement and malicious utilization. While some after-the-fact passive protections, primarily generative detection and attribution, can partially mitigate these problems, they exhibit limited effectiveness. Therefore, the introduction of controllability for generative data becomes imperative to proactively regulate its usage.

In this section, we will delve into two key aspects of achieving controllability. Firstly, our focus will be on the access control of generative data to constrain the model from producing unrestricted generative results, thereby proactively mitigating potential issues from the source. Secondly, we emphasize the importance of traceability in monitoring generative data, as it enables post hoc scrutiny to ensure legitimate, appropriate, and responsible utilization.

4.1. Access Control

Generative data is indirectly guided by trained data, so access control to generative data can be effectively achieved by controlling the use of real data in generative models. Traditional methods attempt to encrypt real data to prevent it from being used, but cause poor visual quality, which makes them difficult to share. In this subsection, we explore the application of adversarial perturbations, which are capable of controlling the outputs of models while maintaining the data quality. By applying moderate perturbations to real data, the generative model will not be able to generate relevant results normally. Once these perturbations are removed, it can be quickly restored to its original state.

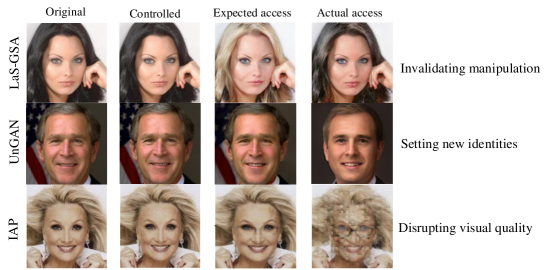

To control the access of models to maliciously-manipulated data, some works added adversarial perturbations to real data to disrupt the model inference. Yeh et al. (Yeh et al., 2021) constructed a novel nullifying perturbation. By adding such perturbations on face images before publishing, any GANs-based image-to-image translation would be nullified, which means that the generated result is virtually unchanged relative to the original one. UnGANable (Li et al., 2023) makes the manipulated face belong to a new identity, which can protect the original identity. Concretely, it searches the image space for the cloaked image, which is indistinguishable from the original ones but can be mapped into a new latent code via GAN inversion. Information-containing adversarial perturbation (IAP) (Zhu et al., 2023) presents a two-tier protection. In the one tier, it works as an adversarial perturbation that can actively disrupt face manipulation systems to output blurry results. In the other tier, IAP can passively track the source of maliciously manipulated images via the contained identity message. The different effects of the above works are displayed in Fig. 5.

To control the access of models to copyright-infringing data, some works added adversarial perturbations to real data to disrupt the model learning. Anti-DreamBooth (Van Le et al., 2023) can add minor perturbations to individual images before releasing them, which destroys the training effect of any DreamBooth models. Glaze (Shan et al., 2023) is designed to help artists add an imperceptible “style cloak” to their artworks before sharing them, effectively preventing diffusion models from mimicking the artist. Wu et al. (Wu et al., 2023) proposed an adversarial decoupling augmentation framework, generating adversarial noise to disrupt the training process of text-to-image models. Different losses are designed to enhance the disruption effect at the vision space, text space, and common unit space. Liang et al. (Liang et al., 2023) built a theoretical framework to define and evaluate the adversarial perturbations for DMs. Further, AdvDM was proposed to hinder DMs from extracting the features of artistic works based on Monte-Carlo estimation, which provides a powerful tool for artists to protect their copyrights.

4.2. Traceability

4.2.1. Watermarking

Digital watermarking (Liu et al., 2023d) is a technique used to inject visible or hidden identified information into digital media. The use of digital watermarking in AIGC can achieve a variety of functions:

-

•

Copyright protection: By embedding watermarks with unique identified information, the source and ownership of the data can be traced and proved.

-

•

Authenticity detection: By detecting and identifying the watermark information, it is easy to confirm whether the data is generative and even which models generate it.

-

•

Accountability: It is possible to track and identify the content’s dissemination pipelines and usage, further ensuring accountability.

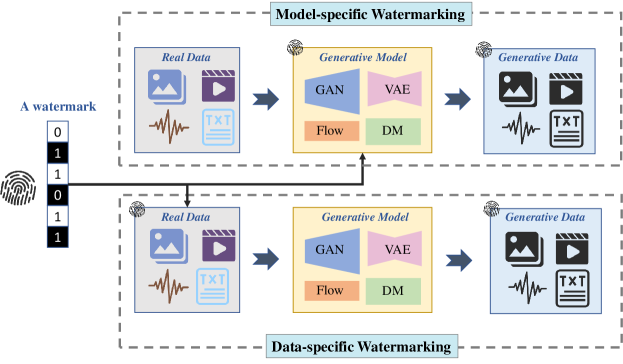

Depending on whether the watermark is directly produced by the generative model or not, existing works can be categorized into model-specific watermarking and image-specific watermarking, which is shown in Fig. 6.

Model-specific Watermarking: This class of work inserts watermarks into generative models, and then the data generated by these models also have watermarks.

Yu et al. (Yu et al., 2021) and Zhao et al. (Zhao et al., 2023) implanted watermarking in training data to retrain GANs or DMs from scratch, respectively. Watermarking can also exist in generative data, as they would learn the distribution of the training data. Compared to GANs which directly embed control information into deep features, DMs embed control information multiple times by progressive random denoising, which can improve steganography and stability. Therefore, DMs have the potential to be better in controllability. Stable signature (Fernandez et al., 2023) integrates image watermarking into latent diffusion models. By fine-tuning the latent decoder, the generated data would contain invisible and robust watermarks, i.e., binary signatures, which support after-the-fact detection and identification of the generated data. Cheng et al. (Xiong et al., 2023) introduced a flexible and secure watermarking. The watermark can be altered flexibly by modifying the message matrix, without retraining the model. Additionally, attempts to evade the use of the message matrix result in degraded generated quality, thereby enhancing the security.

Some works (Liu et al., 2023b) can only generate watermarked data when specific triggers are activated. Liu et al. (Liu et al., 2023b) injected watermarking into the prompt of LDMs and proposed two different methods, namely NAIVEWM and FIXEDWM. NAIVEWM activates the watermarking with a watermark-contained prompt. FIXEDWM enhances the stealthiness compared to NAIVEWM, as it can only activate the watermarking when the prompt contains a trigger at a predefined position. PromptCARE (Yao et al., 2024) is a practical a prompt watermark for prompt copyright protection. When unauthorized large models are trained using prompts, copyright owners can input the trigger to verify whether the output contains the specified watermark.

Zeng et al. (Zeng et al., 2023) constructed a universal adversarial watermarking and injected it into an arbitrary pre-trained generative model via fine-tuning. The optimal universal watermarking can be found through adversarial learning against the watermarking detector. Practicably, the secured generative models can share the same watermarking detector, eliminating the need for retraining the detector when it comes to new generators. As the size of the generative model increases, the design of model-specific watermarking will pay more attention to how to use a small number of samples to update a small number of parameters, thereby reducing resource consumption.

Data-specific Watermarking: This class of work (Ma et al., 2023c; Liu et al., 2023c; Cui et al., 2023; Zhao et al., 2024) inserts watermarks to the input data, and then the generative data retains the watermarks. GenWatermark (Ma et al., 2023c) adds a watermark to the original face image, preventing it from malicious manipulation. To enhance the retention of the watermark in the generated image, the generation process is incorporated into the learning of GenWatermark by fine-tuning the watermark detector. To prevent copyright infringement arising from DMs, DiffusionShield (Cui et al., 2023) injects the ownership information into the image. Owing to the uniformity of the watermarks and the joint optimization method, DiffusionShield enhances the reproducibility of the watermark in generated images and the ability to embed lengthy messages. Feng et al. (Feng et al., 2023) proposed the concept watermarking that embeds identifiable information of users within the used concept. This allows tracking and holding accountable malicious users who abuse the concept. Liu et al. (Liu et al., 2024d) introduced a timbre watermarking with robustness and generalization. The timbre of the target individual can be embedded with a watermark. When subjected to voice cloning attacks, the watermark can be extracted to effectively protect timbre rights.

| Ref. | Year | Remarks | Limitations | |

| Access Control | (Yeh et al., 2021) | 2021 | Paradigm of nullifying generation | Lack of controllability |

| (Li et al., 2023) | 2023 | Paradigm of setting new identity | Weak generalizability | |

| (Zhu et al., 2023) | 2023 | Double protection | Poor visual quality | |

| (Shan et al., 2023) | 2023 | User-friendly, feasible user study | Weak stability and security | |

| (Van Le et al., 2023) | 2023 | Personalized defense, ensemble models | Complex setting | |

| (Liang et al., 2023) | 2023 | Theoretical framework, small perturbations | Weak robustness, inflexible | |

| (Liu et al., 2024c) | 2024 | Transferability, robustness | Additional noise layers | |

| Traceabi-lity | (Zhao et al., 2023) | 2023 | Pioneering watermarking for DMs | Low generation quality |

| (Fernandez et al., 2023) | 2023 | Invisibility, strong stability | Limited capacity, inexplicable | |

| (Xiong et al., 2023) | 2023 | Flexible embedding, additional security | Lack of noise robustness | |

| (Yao et al., 2024) | 2024 | Harmlessness, robustness, stealthiness | Accuracy drop for extreme cases | |

| (Yang et al., 2024b) | 2024 | Provable performance-lossless | Reliance on DDIM inversion | |

| (Zeng et al., 2023) | 2023 | Universality, carrying extra information | Limited capacity, weak security | |

| (Ma et al., 2023c) | 2023 | Subject-driven protection, practicability | Weak cross-model transferability | |

| (Feng et al., 2023) | 2023 | Paradigm of concept watermarking | Limited robustness and security | |

| (Liu et al., 2024d) | 2024 | Robustness and generalization in voice | Dependent on noise layers | |

| (Liu et al., 2024b) | 2024 | Trustworthy and reliable management | Resource-intensive, unpractical |

4.2.2. Blockchain

Distributed ledger-based blockchain can be used to explore a secure and reliable AIGC-generated content framework.

-

•

Transparency: Blockchain can be used to enable transparent traceability of generative data. Each generative data can be recorded in a block in the blockchain and associated with the corresponding transaction or generation process. This enables users and regulators to understand the source and complete generation path of the generative data.

-

•

Copyright protection: Blockchain can provide a reliable mechanism for copyright protection of generative data. By recording copyright information on the blockchain, it can be ensured that generative data is associated with a specific copyright owner and is available for verification. This can reduce unauthorized use and infringement and provide content creators with evidence of copyright protection.

-

•

Decentralized content distribution: Generative data is stored in a distributed manner across the blockchain network, rather than centrally stored on a single server. This improves the availability and security of generative data and reduces the risk of single points of failure and data loss.

-

•

Rewards and incentives: Through smart contracts, the blockchain can automatically distribute rewards for generative data and ensure a fair and transparent distribution mechanism. This can incentivize contributors to provide higher quality and more valuable generative content.

Du et al. (Liu et al., 2024b) proposed a blockchain-empowered framework to manage the lifecycle of AIGC-generated data. Firstly, a protocol to protect the ownership and copyright of AIGC is proposed, called Proof-of-AIGC, deregistering plagiarized generative data and protecting users’ copyright. Then, they designed an incentive mechanism with one-way incentives and two-way guarantees to ensure the legal and timely execution of AIGC ownership exchange funds between anonymous users. AIGC-Chain(Jiang et al., 2024) carefully records the entire life cycle of AIGC products, providing a transparent and reliable platform for copyright management.

4.3. Summary

In table 3, we summarize the solutions for the controllability of generative data in AIGC. We emphasize the discussion on the access control and traceability of generative data. By implementing them, we can protect the security and privacy of generative data, ensuring its credibility and reliability and providing robust support for the compliant use of the data. In addition, adversarial attacks can also have an impact on the controllability of generative data. On the one hand, adversarial attacks can attack the watermark remover to prevent the loss of controllable information, which achieves stable controllability of generative data. On the other hand, adversarial attacks can also attack the watermark extractor to prevent the extraction of controllable information, which exacerbates the controllability challenge on generative data.

5. Authenticity on Generative Data

5.1. Threats to Authenticity

Dramatic advances in generative models have made significant progress in generating realistic data, reducing the amount of expertise and effort required to generate fake content. However, this unrestricted accessibility raises concerns about the ubiquitous spread of misinformation. Fake images are particularly convincing due to their visual comprehensibility. As a result, malicious users can generate harmful content to manipulate public opinion, thereby negatively impacting social domains, e.g., politics and economics. For example, the fake tweet with the generated image of “a large explosion near the Pentagon complex” went viral, fooling many authoritative media accounts into reprinting them and even causing the stock market to suffer a significant drop. Current state-of-the-art generative models already pose a greater threat to human visual perception and discrimination. When distinguishing between real images and generative images, the error rate of human observers reaches 38.7% (Lu et al., 2023a).

Typically, Deepfake (Mirsky and Lee, 2021) possesses the capacity to fabricate visually realistic fake content by grafting the identity of one individual onto the image or video of another. Unfortunately, the open-source nature of the technology allows criminals to commit malicious forgeries without the need for significant expertise, thereby engendering a multitude of societal risks (Korshunov and Marcel, 2018; Aliman and Kester, 2022). For instance, this includes replacing the protagonist in pornographic videos with a celebrity face to affect the celebrity’s reputation, faking videos of speeches of politicians to manipulate national politics, and faking an individual’s facial features to pass authentication in assets management.

Many fake detection methods (Verdoliva, 2020) have been proposed to detect modified data by AI. However, these methods still have vulnerabilities and limitations (Hussain et al., 2021). On the one hand, some methods rely too heavily on traditional principles or pattern matching. They are difficult to capture the evolving new patterns of in-depth AIGC, which allows generative data to escape detection. On the other hand, existing methods have limited capabilities when dealing with new challenges under large models. Large models have higher generative power and creativity, making the generative data more difficult to distinguish. A recent study (Pegoraro et al., 2023) provided insights into the various methods used to detect ChatGPT-generated text. The study highlighted the extraordinary ability of Chat-GPT spoofing detectors and further shows that most of the analyzed detectors tend to classify any text as human-written with an overall TNR as high as 90% and a low TPR. Therefore, there is a requirement to continuously improve the existing detector to effectively deal with the problem of disinformation and misuse of generative data.

5.2. Countermeasures to Authenticity

In Fig. 7, existing countermeasures (Lin et al., 2024) mainly consider constructing a detector to distinguish between real data and generative data. Further, generative attribution can trace the generative data back to the model that generate it.

5.2.1. Generative Detection

Generative visual detection: The presence of artifacts in generative images is an important detection cue, which may derive from defects in the generation process or from a specific generative architecture. Corvi et al. (Corvi et al., 2023) gave a preliminary trial to the problem of detecting generative images produced by DMs. Their study showed that the hidden artifact features of images of DMs are partially similar to those observed in images of GANs. Both GANs and DMs leave artifacts on generative data, but only the artifacts are different. Due to the instability of the adversarial training process between the generator and the discriminator, GANs generate unnatural artifacts, e.g., blurred edges and inconsistent textures. While DMs retain small noise features during the stepwise denoising process, which can lead to more natural noise or detail distortion. Xi et al. (Xi et al., 2023) developed a robust dual-stream network consisting of a residual stream and a content stream to capture generic anomalies generated by AIGC. The residual stream utilizes the spatial rich model (SRM) to extract various texture information from images, while the content stream captures additional artifact traces at low frequencies, thus supplementing the residual stream with information that may have been missed. Sinitsa et al. (Sinitsa and Fried, 2024) presented a rule-based method that can achieve high detection accuracy by training a small number of generative images (less than 512). The method employs the inductive bias of CNNs to extract fingerprints of different generators from the training set and applies it to detect generative images of the same model and its fine-tuned versions. Joslin et al. (Joslin et al., 2024) introduced human factors to enhance generative detection. Detecting AI-synthesized faces by combining attentional learning methods with user annotations. They also created a crowdsourcing annotation method to systematically gather various user annotations to identify suspicious areas and extract artifact patterns. With the performance optimization that comes from a larger parametric model, the generative data will more closely mimic the original data while being able to circumvent artifacts, which increases the difficulty of generative detection.

Analyzing distinctive features of generated images is also a viable approach to consider. Interestingly, Wang et al. (Wang et al., 2023a) observed that the image generated by the DMs can be reconstructed by approximating the source model, while the real image cannot. Therefore, they proposed a novel image representation called diffusion reconstruction error (DIRE), which measures the distance between the input images and the reconstructed one. DIRE provides a reliable, simple, and generalized method to differentiate between real images and diffusion-generated images. SeDID (Ma et al., 2023a) leverage the unique properties of diffusion models, namely deterministic inverse and deterministic denoising computational error. In addition, its use of insights from member inference attacks to emphasize distributional differences between real and generative data enhances the understanding of the security and privacy implications of diffusion models. Zhong et al. (Zhong et al., 2023) focused on the inter-pixel correlation contrast between rich and poor texture regions within an image, and presented a universal detector which can generalize to various AI models, including GAN-based and DM-based models. Existing state-of-the-art detectors have a generalization of cross architectures, but the generalization of cross concepts is not considered. To this end, Dogoulis et al. (Dogoulis et al., 2023) proposed a sampling strategy that takes into account the image quality scores of the sampled training data, and can effectively improve the detection performance in the cross-concept setting.

Innovatively, Bi et al. (Bi et al., 2023) explored the invariance of real images, and proposed a method to map real images to a dense subspace in the feature space, while all generative images are projected outside this subspace. In this way, it can effectively address longstanding issues in generative detection, e.g., poor generalization, high training costs, and weak interpretability.

Generative text detection: Metric-based detection extracts distinguishable features from the generative text. Early on, GLTR (Gehrmann et al., 2019) was a tool to assist humans in detecting generated text. It employs a set of baseline statistical methods that can detect generation artifacts in common sampling schemes. In a human subject study, the annotation scheme provided by GLTR improved human detection of fake text from 54% to 72% without any prior training. Mitchell et al. (Mitchell et al., 2023) noticed that the texts sampled from LLMs tend to occupy negative curvature regions of the model’s log probability function. Based on this, DetectGPT is proposed to set a new curvature-based criterion for detection without additional training. Tulchinskii et al. (Tulchinskii et al., 2024) proposed a new distinguishable representation, the intrinsic dimension. Fluent texts in natural languages have an average intrinsic dimension of 9 or 7 in each language, while AI-generated texts have a lower average intrinsic dimension of 1.5 in each language. Detectors constructed on the basis of intrinsic dimensionality have strong generalizability to models and scenarios.

Regarding the model-based methods (Guo et al., 2023; Chen et al., 2023a), a classification model is usually trained using a corpus. Guo et al. (Guo et al., 2023) proposed a text detector for ChatGPT. The detector is based on the RoBERTa model, which is trained by plain answer text and question-answer text pairs respectively. Chen et al. (Chen et al., 2023a) trained two different text classification models using robustly optimized BERT pretraining approach (RoBERTa) and text-to-text Transfer Transformer (T5), respectively, and achieved significant performance on the test dataset with an accuracy of more than 97%.

| Ref. | Year | Remarks | Limitations | ||

|---|---|---|---|---|---|

| Genera-tive Detection | (Corvi et al., 2023) | 2023 | Pioneering detection for DMs | Lack of JPEG robustness | |

| (Sinitsa and Fried, 2024) | 2024 | Low budget, multi-model applicable | Lack of blurring robustness | ||

| (Wang et al., 2023a) | 2023 | Strong interpretability | High resource consumption | ||

| (Zhong et al., 2023) | 2023 | Universal to DMs and GANs | Poor results on special models | ||

| (Ma et al., 2023a) | 2023 | Use of DM distinct property | Not applicable to GANs | ||

| (Dogoulis et al., 2023) | 2023 | Generalizing cross-conceptes | Not high detection performance | ||

| (Bi et al., 2023) | 2023 | High generalization, low costs | Imperfect JPEG robustness | ||

| (Gehrmann et al., 2019) | 2019 | Training-free, numerically calculable | Unsatisfactory detection accuracy | ||

| (Mitchell et al., 2023) | 2023 | Human-readable, high-efficiency | Strong white-box assumption | ||

| (Tulchinskii et al., 2024) | 2024 | Generalization and robustness | Failure to small-sample languages | ||

| (Guo et al., 2023) | 2023 | Large-scale data, human evaluations | Resource-intensive, poorly explained | ||

| (Chen et al., 2023a) | 2023 | High accuracy, explicable | Not scalable, only for English | ||

| Genera-tive Attribution | (He et al., 2024) | 2024 | Systematic quantification | Medium attribution performance | |

| (Bui et al., 2022) | 2022 | Robust and practical attribution | Only ofr GANs, noise-irrobustness | ||

| (Yang et al., 2023) | 2023 | Open-Set model attribution | Moderate versatility and scalability | ||

| (Sha et al., 2023) | 2023 | Pioneering attribution for DMs | Lack of generalizability | ||

| (lor, 2023) | 2023 | Lightweight, superior performance | Difficult to scale to other models | ||

| (Guarnera et al., 2024) | 2024 | Hierarchical multi-level | Unrobust in compression and scaling | ||

| (Wang et al., 2023b) | 2023 | Paradigm of data attribution | Lack of strict proof | ||

| (Asnani et al., 2024) | 2024 | Paradigm of concept attribution | Requires predefined concepts |

5.2.2. Generative Attribution

He et al. (He et al., 2024) extended current detectors to the potential of text attribution to recognize the source model of a given text. The results show that all these detectors have certain attribution capabilities and still have room for improvement. Moreover, model-based detectors can significantly outperform metric-based detectors.

For visual generative data, a lot of attribution works on GANs (Yu et al., 2019; Bui et al., 2022; Yang et al., 2022; Girish et al., 2021; Yang et al., 2023) has been proposed. RepMix (Bui et al., 2022) is a GAN-fingerprinting technique based on representation mixing and a novel loss. It is able to determine from which structure of GAN a given image is generated. POSE (Yang et al., 2023) tackles an important challenge, i.e., open-set model attribution, which can simultaneously attribute images to seen and unseen models. POSE simulates open-set samples that keep the same semantics as closed-set samples but embed distinct traces.

.

Recent works have begun to focus on DMs. Sha et al. (Sha et al., 2023) constructed a multi-class (instead of binary) classifier to attribute fake images generated by DMs. Experiments showed that attributing fake images to their originating models can be achieved effectively, because different models leave unique fingerprints in their generated images. Lorenz et al. (lor, 2023) designed the multi-local intrinsic dimensionality (multiLID), which is effective in identifying the source diffusion model. Guarnera et al. (Guarnera et al., 2024) developed a novel multi-level hierarchical approach based on ResNet models, which can recognize the specific AI architectures (GANs/DMs). The experimental results demonstrate the effectiveness of the proposed approach, with an average accuracy of more than 97%.

Intriguingly, a new work (Wang et al., 2023b) can attribute generative data to the training data rather than the source model, necessitating the identification of a subset of training images that contribute most significantly to the generated data. In Fig. 8, we can query the generated data in the training set and evaluate their similarity, which can contribute to protecting the copyright of training data rather than models.

5.3. Summary

In table 4, we summarize the solutions for the authenticity of generative data. Generative data contains traces (referred to as fingerprints) left by generative models, allowing researchers to detect and attribute the data based on these fingerprints. However, as generative models undergo iterative optimization, these fingerprints also continuously evolve. Therefore, new detection methods must be updated in real time. In contrast, real data exhibits certain invariable features that remain unaltered over time or under varying circumstances. Future detection methods should be designed to effectively harness these invariable features, thereby enhancing the accuracy and robustness of detecting generated data. Furthermore, watermark-based methods have demonstrated notable potential in enhancing tasks related to detection and attribution. However, it’s important to note that such methods constitute an active defense strategy, necessitating preprocessing during data generation. In real-world scenarios, constraining adversaries to exclusively employ watermarked generative data may be not feasible. In addition, adversarial attacks can also have an impact on the controllability of generative data. On the one hand, adversarial attacks can attack generative models to prevent them from generating inauthentic content, which ensures the authenticity of generative data at the source. On the other hand, adversarial attacks can attack generative detectors to obfuscate their decisions, which exacerbates the authenticity challenge on generative data.

6. Compaliance on Generative Data

6.1. Requirements to Compaliance

The compliance of generative data refers to the requirement that such data must adhere to applicable laws, regulations, and ethical standards. With the rapid development and extensive utilization of AIGC technology, the compliance of generative data has become an important topic, encompassing various aspects, e.g., ethics, bias, and politics.

Countries and organizations around the world have initiated investigations and issued relevant policies and regulations regarding the regulation of generative data. The United States’ Blueprint for an AI Bill of Rights emphasizes generative data to ensure fairness, privacy protection, and accountability. European Parliament passed the Artificial Intelligence Act, which supplements the regulatory regime for generative models and requires that all generative data should be disclosed as derived from AI. China proposed an AIGC-specific regulation, i.e., the Interim Regulation on the Management of Generative Artificial Intelligence Services (int, 2023). This regulation encourages innovation of AIGC but requires a prohibition on the generation of toxicity, e.g., violence, bias, and obscene pornography, as well as an increase in the factuality of the generative data to avoid misleading the public.

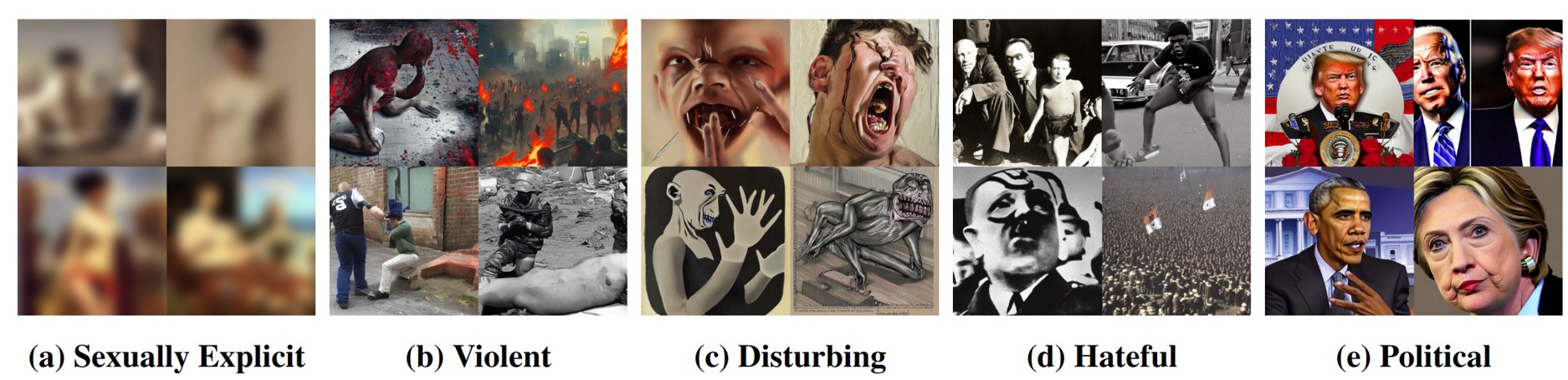

6.1.1. Non-toxicity

Toxicity presents in generative data involves incongruence with human values or bias directed at particular groups, which has the potential to harm societal cohesion and intensify divisions among different groups. Since the training of AIGC models is based on a large amount of unintervened data, toxicity in training data (Birhane et al., 2021) directly leads to the corresponding toxicity in generative data, which covers a variety of harmful topics, e.g., sexuality, hatred (Birhane et al., 2024), politicalization, violentism, and race bias. Some toxic generative examples are shown in Fig. 9.

Some works (Caliskan et al., 2017; Sheng et al., 2019) have found stronger associations between males and occupations in language models, verifying the gender bias in generative data. The investigations revealed that GPT-3 consistently and strongly exhibits biased views against the Muslim community (Abid et al., 2021). Stable Diffusion v1 was trained on the LAION-2B dataset, which contains images described only in English, making generative data biased towards white culture . Likewise, it was observed that DALLA-E displayed unfavorable biases towards minority groups.

Unlike GANs that only use random noise to generate data, DMs can be guided by additional textual prompts, which increases the risk of generating non-compliant content. As a result, researchers primarily focus on the compliance of data generated by DMs. Qu et. al (Qu et al., 2023) provided a comprehensive safety assessment concerning the generation of toxic images, particularly hateful memes from diffusion models. To quantitatively assess the safety of generative images, a safety classifier is developed to identify toxic images based on the predetermined criteria for unsafe content. Their findings indicated that the utilization of harmful prompts resulted in diffusion models producing a significant quantity of toxic images. Additionally, even when prompts are innocuous, the potential for generating toxic images persists. Overall, the danger of large-scale generation of toxic images is imminent.

6.1.2. Factuality

AI-generated data may be contrary to the facts (Wang et al., 2023c), which is harmful to the public through misleading cognition. For example, ChatGPT may produce responses that sound reasonable and authoritative but are factually incorrect or nonsensical. Even worse, AIGC often explains its generated responses. When AIGC fails to provide accurate responses to the queries, it not only delivers incorrect information but also supplements seemingly plausible explanations. This enhances the inclination of users to place greater trust in these erroneous contents. The United States news credibility assessment and research organization, NewsGuard, conducted a test on ChatGPT (nyt, 2023). Researchers posed questions to ChatGPT containing conspiracy theories and misleading narratives and found that it could adapt information within seconds, generating a substantial amount of persuasive yet unattributed content.

When used in important domains, such unfactual generative data will bring serious harmful impacts (Bender et al., 2021). In the healthcare domain, medical diagnosis requires interpretable and correct information. Once AI-generated diagnostic advice is factually incorrect, it will cause irreparable harm to the patient’s life and health. In the journalism domain, news that distorts the facts will mislead the public and undermine the credibility of the media. In the education domain, the dissemination of incorrect knowledge to students will confuse their minds, thus seriously hampering their academic growth and cognitive development.

6.2. Countermeasures to Compaliance

6.2.1. Countermeasures to Non-toxicity

Efforts to eliminate toxicity can be divided into four categories. The first one is dataset filtering. A non-toxic training dataset is key to ensuring the security of generative data. Some works (Schuhmann et al., 2022; DAL, 2022; Henderson et al., 2022) have implemented comprehensive processes to filter data contained toxic. OpenAI ensures that any violent or sexual content is removed from DALLA-E2 by carefully filtering (DAL, 2022). Henderson et. al (Henderson et al., 2022) demonstrated how to extract implicit sanitization rules from the Pile of Law, providing researchers with a pathway to develop more sophisticated data filtering mechanisms. However, large-scale dataset filtering also has unexpected side effects on the downstream performance (Nichol et al., 2021).

The second one is generation guidance. Ganguli et al. (Ganguli et al., 2022) identified and attempted to reduce the potentially harmful output of language models in a confrontational manner. They found that the reinforcement learning from human feedback is increasingly difficult to red team as they scale, and a flat trend with scale for the other model types. Brack et al. (Brack et al., 2023) investigated to instruct effectively diffusion models to suppress inappropriate content using the learned knowledge obtained about the world’s ugliness, thus producing safer and more socially responsible content. Similarly, safe latent diffusion (SLD) (Schramowski et al., 2023) extends the generative process via utilizing toxic prompts to guide the safe generation in an opposing direction.

The third one is model fine-tuning. Recently, a new term called the ablation concept or ablation forgetting (Gandikota et al., 2023; Kumari et al., 2023; Heng and Soh, 2024) has brought a novel direction to the elimination of toxic content in generative data. Gandikota et. al (Gandikota et al., 2023) studied the erasure of toxic concepts from diffusion model weights via model fine-tuning. The proposed method utilizes an appropriate style as a teacher to guide the ablation of the toxic concepts, e.g., sexuality and copyright infringement. Selective Amnesia (Heng and Soh, 2024) is a generalized continuous learning framework for concept ablation that applies to different model types and conditional scenarios. It also allows for controlled ablation of concepts that can be specified by the user. However, the ablation concept’s ability to explain the various definitions of toxic concepts remains limited. Inspired with social psychological principles, Xu et al. (Xu et al., 2024b) proposed a novel strategy to motivate LLM to integrate different human perspectives and self-regulate their responses.

Lastly, filtering the generated results is also a viable option. Stable Diffusion includes an after-the-fact safety filter to block toxic images. Unfortunately, the filter easily blocks any generated image that is too close to at least one of the 17 predefined sensitive concepts. Rando et. al (Rando et al., 2022) reverse-engineered this filter and then proposed a manual strategy that enables content not related to sensitive concepts to bypass the filter. In addition, existing toxicity detectors (Markov et al., 2023; Lu et al., 2023c) for real data may be able to be updated to be compatible with generative data. This self-correcting mechanism can significantly reduce toxicity and bias.

6.2.2. Countermeasures to Factuality

In order to constrain the non-factualness caused by AIGC “lying”, Evans et al. (Evans et al., 2021) first identified clear standards for AI truthfulness and explored potential ways to establish them.

| Ref. | Year | Remarks | Limitations | ||

|---|---|---|---|---|---|

| Non-toxicity | (Henderson et al., 2022) | 2022 | Legally guaranteed | Only for U.S. texts, perturbation-sensitive | |

| (Ganguli et al., 2022) | 2022 | The idea of constant confrontation | Time-consuming, difficult to scale | ||

| (Rando et al., 2022) | 2022 | Comprehensive toxicity concept | Takes a lot of labor, subjective definition | ||

| (Brack et al., 2023) | 2023 | World knowledge guidance | Need to modify the model | ||

| (Schramowski et al., 2023) | 2023 | Free-train, maintain quality | Lack of provability and ethical concern | ||

| (Heng and Soh, 2024) | 2024 | Controllable forgetting | Computing costly, non-automated | ||

| (Xu et al., 2024b) | 2024 | Self-regulate, low cost | Ignorance of sensitive vocabularies | ||

| Factuality | (Lee et al., 2022) | 2022 | Open-ended test, new metrics | Lack of moral consideration | |

| (Alaa et al., 2022) | 2022 | Three-dimensional metric | Divergence measures collapse | ||

| (Azaria and Mitchell, 2023) | 2023 | Simple and powerful | Difficult to interpret | ||

| (Du et al., 2023) | 2023 | Comprehensible, reasonable | Large resource consumption | ||

| (Gou et al., 2024) | 2024 | Practical and simple | Limited to syntactic correctness | ||

| (Chen et al., 2024a) | 2024 | Without extra models or human | Difficulty in controlling mistake | ||

| (Zhang et al., 2024a) | 2024 | Learned refusal ability | Lack of rigorous evaluation |

A reasonable assessment of content factuality is the critical step toward responsible generative data. Goodrich et al. (Goodrich et al., 2019) constructed relation classifiers and fact extraction models based on Wikipedia and Wikidata, by which the factual accuracy of generated text can be measured. Lee et al. (Lee et al., 2022) proposed a novel training method to enhance factuality by utilizing TOPIC PREFIX for better perception of facts and sentence completion as the training objective, which can significantly decrease the number of counterfactuals. They also study the factual accuracy of LLMs with parameter sizes ranging from 126M to 530B and find that the larger the model, the higher the accuracy. This is because the model has a large enough capacity to learn more generalized knowledge, thus reducing the occurrence of counterfactuals.

Alaa et al. (Alaa et al., 2022) designed a three-dimensional metric capable of characterizing the fidelity, diversity, and generalization of generative data from widely generative models in a wide range of applications. SAPLMA (Azaria and Mitchell, 2023) is a simple but powerful method, which only uses the hidden layer activation of LLM to discriminate the factuality of generated statements.

Interestingly, Du et al. (Du et al., 2023) prompted multiple language models to debate their viewpoints and reasoning processes over multiple rounds, and finally come up with a unitive answer. The results indicate that it enhances mathematical and strategic reasoning in the task while reducing the fallacious answers and illusions that modern models are prone to. CRITIC (Gou et al., 2024) requires LLMs to interact with appropriate tools for feedback learning, such as using a search engine for fact-checking or a code interpreter for debugging. The output of the LLM is modified incrementally by the evaluation results of the feedback by the tools. Chen et al. (Chen et al., 2024a) presented a new alignment framework designed to improve LLMs by converting flawed instruction-response pairs into useful alignment data through mistake analysis. By generating harmful responses and analyzing their own mistakes, the framework can improve the alignment with human values. To prevent LLMs from rambling without knowing the answer, Zhang et al. (Zhang et al., 2024a) taught LLMs the ability to refuse to answer. Specifically, they constructed datasets of refusal perception, and then adapted the model to avoid answering questions that were beyond its parametric knowledge.

6.3. Summary

In table 5, we summarize the solutions for toxicity and factuality in generative data. The first problem to be solved for the compliance of generative data is to define its standards. In addition, there should be different compliance standards for different application scenarios, rather than a blanket denial of generative content. For example, “a flying pig” may not be factual for a language model, but it is more creative for a visual model. After that, the generated content of AI can be made compliant with the specification within the constraints of the standard. In addition, adversarial attacks can also have an impact on the controllability of generative data. On the one hand, adversarial attacks can attack the generative model to prevent it from learning the non-compliant content in the real data, which enhances the compliance of generative data. On the other hand, adversarial attacks can also attack compliance detectors to evade their detection, which exacerbates the compliance challenge for generative data.

In conclusion, we emphasize the examination of toxicity and factuality issues within the context of compliance to ensure the responsible and ethical use of AI-generated content across various domains. Nevertheless, existing approaches exhibit certain limitations, nec essitating continuous efforts by researchers to address these shortcomings and better align with the practical requirements of real-world applications.

7. Benchmark and Statistical Analysis

7.1. Benchmark

7.1.1. Benchmark Dataset

The construction of generative datasets facilitates the design and evaluation of countermeasures, helping researchers to identify problems and improve techniques, thus advancing the progress of trustworthy generative data. Researchers have now released diverse datasets for utilization.

For text data, most datasets are domain-specific, e.g., Student Essays (Verma et al., 2023) for essays, TuringBench (Uchendu et al., 2021) for news, GPABenchmark (Liu et al., 2023e) for academic writing, SynSciPass (Rosati, 2022) for scientific text, TweepFake and (Fagni et al., 2021) for tweets. Representatively, HC3 (Guo et al., 2023) is a comprehensive ChatGPT generative text that contains tens of thousands of responses with human experts and covers a wide range of domains such as finance, healthcare, law, etc. The pioneering contributions of HC3 also make it a valuable research resource. More practically, MixSet (Zhang et al., 2024b) is the first mixed-text dataset involving both AIGC and human-generated content, covering a wide range of operations in real-world scenarios and bridging a gap in previous datasets.

For visual data, only some datasets are domain-specific mainly in faces such as IDiff-Face (Boutros et al., 2023) and GANDiffFace (Melzi et al., 2023), and most datasets contain general images such as CIFAKE (Bird and Lotfi, 2024) and AutoSplice (Jia et al., 2023). While, they have different limitations, e.g., targeting only a certain class of images or generators, and containing only a small amount of data. Subsequently, several million-scale datasets are released like GenImage (Zhu et al., 2024) and ArtiFact (Rahman et al., 2023), which have the richness of image content and adopt state-of-the-art generators. To the best of our knowledge, DiffusionDB (Wang et al., 2022a) represents the largest-scale visual generative dataset to date. It comprises 14 million images generated only by Stable Diffusion. This unprecedented scale and diversity offer exciting research opportunities for the study of generative image protection.

7.1.2. Benchmark Evaluation

The construction of benchmark evaluation provides a standardized baseline, which evaluates the effectiveness and innovativeness of new methods by enabling different methods to be fairly compared under the same testing conditions. Meanwhile, it can ensure the reproducibility of research and clearly identify the shortcomings of existing techniques, thus promoting technological progress. We identified the corresponding benchmarks for different information security properties.

For privacy, PrivLM-Bench (Li et al., 2024b) is a multi-perspective privacy evaluation benchmark that empirically and intuitively quantifies the privacy leakage of LLMs and reveals the neglected privacy of inference data in actual usage. Wu et al. (Wu et al., 2024) provided the first comprehensive privacy assessment of prompts learned via visual prompt learning from the perspectives of attribute inference and membership inference attacks.

For controllability, WaterBench (Tu et al., 2023) is the first comprehensive benchmark for watermarking LLMs, which encompasses 9 tasks and evaluates 4 open-source watermarking technologies. WAVES (An et al., 2024) is a comprehensive watermarking benchmark, which establishes a standardized evaluation protocol consisting of various pressure tests and covers advanced image distortion attacks.

For authenticity, DeepfakeBench (Yan et al., 2023) introduces the first all-encompassing benchmark for detecting deepfakes of generative data, addressing the problem of inconsistent standards and lack of uniformity in this area. Lu et al. (Lu et al., 2023b) comprehensively evaluated techniques used for generative detection and also measured the ability of human vision to discriminate between generative data.