SecureBoost+ : Large Scale and High-Performance Vertical Federated Gradient Boosting Decision Tree

Abstract.

Gradient boosting decision tree (GBDT) is an ensemble machine learning algorithm, which is widely used in industry, due to its good performance and easy interpretation. Due to the problem of data isolation and the requirement of privacy, many works try to use vertical federated learning to train machine learning models collaboratively with privacy guarantees between different data owners. SecureBoost is one of the most popular vertical federated learning algorithms for GBDT. However, in order to achieve privacy preservation, SecureBoost involves complex training procedures and time-consuming cryptography operations. This causes SecureBoost to be slow to train and does not scale to large scale data.

In this work, we propose SecureBoost+, a large-scale and high-performance vertical federated gradient boosting decision tree framework. SecureBoost+ is secure in the semi-honest model, which is the same as SecureBoost. SecureBoost+ can be scaled up to tens of millions of data samples easily. SecureBoost+ achieves high performance through several novel optimizations for SecureBoost, including ciphertext operation optimization, the introduction of new training mechanisms, and multi-classification training optimization. The experimental results show that SecureBoost+ is 6-35x faster than SecureBoost, but with the same accuracy and can be scaled up to tens of millions of data samples and thousands of feature dimensions.

1. Introduction

With the rapid development of information technology, a large number of valuable data have been produced in various industrial fields. Artificial intelligence technology, especially deep learning, has made amazing progress in mining massive amounts of data. However, data is also a key constraint on the application of AI. In a real-world scenario, data is stored in different organizations or different departments of the same enterprise, forming a phenomenon known as data isolation. With the increasing attention of the whole society to data privacy protection, most countries are developing laws and regulations related to data privacy protection. For example, the European Union has enacted the General Data Protection Regulation (GDPR) (Voigt and Von dem Bussche, 2017), which aims to strengthen the security of user data privacy. This trend brings new challenges to cross-organizational data sharing and AI applications.

Federated learning (FL) (McMahan et al., 2017; Yang et al., 2019b), a distributed collaborative machine learning paradigm, is a promising approach to deal with the data isolation challenge. It enables local models to benefit from all parties while keeping local data private (Kairouz et al., 2021). In addition, combined with various cryptography techniques, local data privacy can be effectively protected. Federated learning can be generally divided into vertical federated learning (Liu et al., 2024), horizontal federated learning, and federated transfer learning. Vertical federated learning on logistic regression (Hardy et al., 2017; Chen et al., 2021; Yang et al., 2019a), GBDT (Cheng et al., 2021), neural network (Zhang et al., 2018; Zhang and Zhu, 2020; Fu et al., 2022; Kang et al., 2022a, b), transfer learning (Liu et al., 2020), etc, have been previously studied.

Gradient boosting decision tree (GBDT) is an ensemble machine learning algorithm that is widely used in industry (Chen and Guestrin, 2016; Ke et al., 2017; Dorogush et al., 2018) due to its good performance and easy interpretation. For example, GBDT and its variants are usually used in recommendation (Ke et al., 2017; Shahbazi and Byun, 2019), fraud detection (Cao et al., 2019), and click-through rate prediction (Wang et al., 2018) tasks with large-scale data. Due to the advantage of GBDT, many works try to apply vertical federated learning to GBDT model (Cheng et al., 2021; Fu et al., 2021; Li et al., 2020). Our previous work, SecureBoost (Cheng et al., 2021), is one of the most popular vertical federated learning algorithms for GBDT. However, as far as we know, some previous studies have focused more on the security of algorithms and less on the gap between research and industrial applications. Some works are only tested on public experimental data sets instead of million-scale or high-dimensional data, which are common in real-world scenarios, and some works are tested on relatively larger data sets but can not be completed in a reasonable time. And according to our practical experiences, the performance of SecureBoost is still unsatisfactory.

In this work, we propose SecureBoost+, a large-scale and high-performance vertical federated gradient boosting decision tree framework. It is developed based on the work SecureBoost (Cheng et al., 2021), which has been integrated to FATE (Liu et al., 2021). The main contributions of SecureBoost+ are:

-

•

We propose a ciphertext operation optimization framework based on vertical federated gradient boosting decision tree methods, designed for Homomorphic Encryption(HE) (Paillier, 1999) encryption schema.

-

•

We propose two novel training mechanisms for SecureBoost+: Mix Tree mode and Layered Tree mode. The novel training mechanisms can significantly reduce the number of interactions and communication costs between parties.

-

•

We propose a new multi-classification mechanism named SecureBoost-MO to speed up multi-classification training.

-

•

We investigate the performance of SecureBoost+ on a large-instance and a high-dimension dataset. Experimental results demonstrate that SecureBoost+ is 6-35x faster than SecureBoost, but with the same accuracy, and can be scaled up to tens of millions of data samples and thousands of feature.

2. PRELIMINARIES

2.1. Gradient Boosting Decision Tree

GBDT is an ensemble machine learning algorithm that is widely used in industry(Chen and Guestrin, 2016; Ke et al., 2017). Let be a decision tree function, the prediction for an instance is given by the sum of all decision tree is obtained by:

| (1) |

When training an ensemble tree model, we add a new decision tree at iteration to minimize the following second-order approximation loss:

| (2) |

where is the regularization term and , are the first and second derivatives of with respect to .

By setting the second-order approximation function above, we derive the split gain function for splitting a node:

| (4) |

where , , and are the instances space of the left, right, and parent nodes, respectively. The leaf output of an arbitrary leaf put is given by:

| (5) |

2.2. Paillier Homomorphic Encryption

The Paillier Homomorphic Encryption (PHE)(Paillier, 1999) schema is an additive homomorphic cryptosystem, and it is the key component of many privacy-preserving ML algorithms(Cheng et al., 2021; Chai et al., 2020; Yang et al., 2019b). The core properties of PHE are homomorphic addition and scalar multiplication:

| (6) |

| (7) |

where denotes the encryption operator.

2.3. SecureBoost

In SecureBoost (Cheng et al., 2021), all parties conduct a privacy-preserving instance intersection at the beginning. After the intersection, we get as the final aligned data matrices distributed on each party. Each party holds a data matrix with instances and features. For ease of description, we use X to denote a federated instance whose features are distributed on multiple parties and use to represent the instance space. is the feature set on the -th party. Each party holds its unique features, for any two feature sets and from -th party and -th party, . We define two party roles: Active Party and Passive Party. The active party is the data provider who holds both a data matrix and the class label . The passive party is the data provider who only holds a data matrix and does not hold the class label.

The goal of SecureBoost is to train an ensemble gradient boosting tree model on the federated dataset and label y. SecureBoost uses a histogram-based split finding strategy similar to XGBoost (Chen and Guestrin, 2016). Therefore, before constructing the tree, every party uses the quantile binning method to transform their feature values into bin indices.

To ensure that the label information is not leaked through from and , the active party needs to apply homomorphic encryption on and and then send them to the passive party. With the additive property of homomorphic encryption on and , the passive party can calculate the ciphertext histogram and then construct split-info. To protect the feature information of the passive party, the passive party marks split-info with unique IDs, randomly shuffles this split-info, and then sends it to the active party. The global optimal split node using features of all parties can be calculated collaboratively through the above process. The active party can calculate the local split information and then receive the encrypted, split information from the passive party and decrypt it. The active party is able to find the optimal split node without leaking labels and knowing information about the passive party features. The party that holds the optimal split information will be responsible for splitting and assigning current node instances to child nodes. The result of instances assignment will be synchronized to all parties. Starting at the root node, We repeat the split finding layer-wise until the convergence condition is reached.

2.4. Performance Bottlenecks Analysis for SecureBoost

The most expensive parts of histogram-based gradient boosting algorithms are the histogram building and split node finding (Ke et al., 2017; Chen and Guestrin, 2016). Due to homomorphic encryption operations, these two parts are particularly expensive to compute in SecureBoost. From the review of SecureBoost, we have the following observations:

-

•

Encryption and Addition Operation Cost: Histogram calculation of the passive party will be especially time-consuming because it is calculated on the homomorphic ciphertext. Homomorphic addition to ciphertext usually involves multiplication and modular operations on large integers and is much more expensive than plaintext addition (Paillier, 1999).

-

•

Decryption Operation Cost: Since we know that the gradient and hessian are encrypted, we have to decrypt a batch of encrypted split information when we split the node. Decryption is expensive to train on large amounts of data.

-

•

Communication Cost: At the beginning of building each tree, we need to synchronize the encryption gradient and hessian to the passive parties. At the same time, a batch of encrypted split information needs to be sent to calculate the split node of each layer of the tree. This entails heavy communication overhead.

3. Proposed SecureBoost+ Framework

In this section, we propose our solution to improve the performance of SecureBoost in the following directions.

-

•

Ciphertext Operation Optimization: In order to reduce the computation cost of homomorphic ciphertext on passive parties, especially the cost of histogram computation and split node finding. We propose a ciphertext operation optimization framework. Main idea: Pack Plaintext reduces the amount of ciphertext in computation and transmission. The ciphertext of the passive parties is compressed to reduce decryption and communication costs.

-

•

Training Mechanism Optimization: At the level of training mechanism, two optimization methods of mix tree and layered tree are designed to reduce the number of interactions and communication cost between parties.

-

•

SecureBoost-MO Optimization: We propose a new multi-classification mechanism named SecureBoost-MO to speed up multi-classification training.

-

•

Other Optimization: We integrate common engineering optimization techniques into SecureBoost+. They directly reduce instances and data terms involved in boosting tree training. This is very useful when training on large-scale data.

3.1. Ciphertext Operation Optimization

3.1.1. Rough Estimate of Ciphertext Operation Cost

Suppose we train a binary-classification task, we have samples, features, every feature has bins, the tree depth is , and then there are nodes. We have a rough cost estimate for building a decision tree in SecureBoost:

-

•

The ciphertext computation cost: ciphertext histogram computation + bin cumsum:

(8) -

•

The encryption and decryption cost: encryption + split-info decryption:

(9) -

•

The communication cost: encrypted + split-infos batches:

(10)

3.1.2. GH Packing

We first introduce GH Packing. From SecureBoost, we notice that gradient and hessian have the same operations in the process of histogram construction and split-finding: the gradient and hessian of a sample will be encrypted at the same time, and the encrypted gradient and hessian will go to the same bucket, but be added to different indices. The gradient and hessian of a split-info will also be decrypted together.

The Paillier(Paillier, 1999) is a homomorphic encryption algorithm used in SecureBoost. During training, a key length of 1024 or 2048 is usually used. When 1024 key length is used, the upper bound on the positive integer allowed in encryption is usually 1023 bits in length. This upper bound is much larger than the gradient and hessian at fixed points. As a result, a lot of plaintext space is wasted in the encryption process. Inspired by recently proposed gradient quantization and gradient batching techniques (Zhang et al., 2020), we adopt a GH(gradient: and hessian: ) Packing method in our work. We bundle and using bit arithmetic: We move g to the left by a certain number of bits and then add h to it.

For example, in a binary-classification task, the range of is , and the range of is , . Considering that g might be negative, we can offset g to ensure that it is a positive number. In the classification case, the offset number for will be . In the split finding step, we can remove the offset number when recovering .

After offsetting , we adopt a fixed-point encoding strategy to encode and , which is shown in equation 12, where is usually 53. Then, we can pack and into one large positive number. To avoid overflowing aggregation results during histogram calculation, we need to reserve more bits for and . Assuming we have instances, we need to make sure that no cumulative gradients and hessians in the histogram will be larger than and in equation 11. Therefore, we assign bits for and bits for in equation 11:

| (11) | ||||

Finally, we calculate a large positive number and homomorphic encryption ciphertext as follow:

| (12) | ||||

Figure 1 shows details of the GH packing process. The active party synchronizes ciphertext to the passive party. The passive party only needs to calculate one ciphertext per sample when constructing the histogram, while in SecureBoost, it needs to calculate two ciphertexts per sample. From the GH Packing optimization, the whole ciphertext computation cost is reduced by half.

3.1.3. Ciphertext Compression

Although the GH packing algorithm uses a lot of plaintext space, the plaintext space is not fully utilized. Remind that we usually have a 1023 bit-length integer as the plaintext upper bound when using a 1024-bit key length in the Paillier (Paillier, 1999). Assuming instances number is , is , we will assign bits for and bits for , according to equation 11. The is 147, which is still less than 1023.

To fully use the plaintext space, we can perform ciphertext compression on the passive parties. This technique utilizes the property of our built-in HE algorithm, which is that an addition operation or a scalar multiplication operation is less costly than a decryption operation. We use addition and multiplication operations to compress several encrypted numbers in split-info into one encrypted number and make sure that the plaintext space is fully utilized. The idea of this method is the same as the GH Packing method: we multiply a ciphertext by , shifting its plaintext by bits to the left. Then, we can add another ciphertext to it, compressing the two ciphertexts into one. We can repeat this process until no more bits are left in the plaintext space to compress, as shown in equation 13. Once the active party receives an encrypted number, it only needs to decrypt one encrypted number to recover several statistical results and reconstruct split-info.

| (13) | ||||

In the case above, given a 1023 bit-length plaintext space and of 147, We are able to compress split points into 1. By using ciphertext compression, decryption operations and decryption time can be reduced by up to 6 times. On the other hand, the communication cost of encrypted split-info is also reduced by up to 6 times. Figure 2 illustrates the process of ciphertext compression.

3.1.4. Ciphertext Histogram Subtraction

Histogram subtraction is a classic optimization technique in the histogram-based tree algorithms. When splitting nodes, samples are distributed to either the left or right nodes. The parent histogram is obtained for each feature by adding the set of histograms of the left and right children nodes. To take advantage of this property, in SecureBoost+, both the active and passive parties cache the histogram of the previous layer. When growing from the current layer, the active and passive parties first calculate the histogram of nodes with fewer samples and finally obtain the histogram of sibling nodes by histogram subtraction with the previous layer cache information. Since the ciphertext histogram calculation for passive parties is always the largest overhead, the ciphertext histogram subtraction will greatly speed up the calculation, and at least half of the ciphertext calculation is reduced.

3.1.5. Re-estimate Cost after Ciphertext Operation Optimization

Let’s go back to cost estimates. In more detail, suppose we use paillier encryption algorithm with 1024 key length, we have 1 million instances and 2,000 features, tree depth is 5, bin number is 32, the precision parameter of equation 12 is 53.

With GH packing and histogram subtraction optimization, ciphertext histogram computation cost is reduced by 4 times and operations in bin cumsum is reduced by half.

The ciphertext computation cost is calcualted by:

| (14) |

We compare the values of the equation (8) and (14), the ciphertext computation cost is reduced by 75%.

Because we pack gradients and together, the encryption/decryption operation is reduced by half. Under current paillier encryption setting, we can compress ciphertexts into one ciphertext, then we get:

| (15) |

The communication cost is:

| (16) |

We compare the values of the equations (15), (16), and the equations (9), (10), the cost is reduced by 78%.

From the estimation, we can see that ciphertext operation optimization is theoretically effective.

3.2. Training Mechanism Optimization

At the training mechanism level, two optimization methods are proposed: mixed tree mode and layered tree mode. These two methods can significantly reduce the number of interactions and communication cost between parties.

3.2.1. Mix Tree Mode

In the Mix Tree Mode, every party will build a certain number of trees using their local features, and this procedure repeats until the max epoch is reached or the stop condition is met.

When the active party builds a decision tree, the active party simply builds the tree locally. When the tree is built in the passive party, the relevant passive party receives the encrypted g/h, assisted by the active party, to find the best split point. The structure of the tree and split point is retained on the passive party, while the leaf weight is retained on the active party. This mode is suitable for vertical federated learning under data balancing. In this way, the number of interactions and communication costs can be significantly reduced, thus speeding up training. Figure 3 demonstrates the mix tree training process.

3.2.2. Layered Tree Mode

The Layered Tree Mode is another optimization mechanism, which is designed for vertical federated learning under data balancing.

Similar to The Mix Tree Mode, the active and the passive party are responsible for different layers in the tree when building a decision tree. Every decision tree has in total layers. The passive party will be responsible for building the first layers, assisted by the active party. The active party will build the next layers. All trees will be built in this ’layered’ manner. In this way, the number of interactions and communication costs can be significantly reduced, thus speeding up training. Figure 4 demonstrates the layered tree training process.

3.3. SecureBoost-MO Optimization

In the traditional GBDT algorithm, the multi-classification task learning strategy is that each tree only learns one category separately. However, in the vertical federated learning scenario, this single-output multi-classification strategy has an obvious performance bottleneck: the computing cost and communication cost will increase significantly with the increase of the number of label categories.

Therefore, it is necessary to optimize the multi-classification task training in SecureBoost. Inspired by (Zhang and Jung, 2020), which introduces a general framework of multi-output gradient boosting tree, we propose a novel multi-output vertical federated boosting decision tree for multi-classification task. Leaves of multi-output tree give multi-dimension output, corresponding to every class. Instead of learning trees for every class separately, we only need to learn one tree at each epoch. Figure 5 tells the differences between traditional multi-classification trees and MO-trees.

3.3.1. Gain calculation of SecureBoost-MO

We propose a split gain function for multi-output decision tree. Given a label multi-classification task, we use g and h to denote the gradient/hessian vectors which have elements, each element corresponds to the / of each label. In a multi-output decision tree, every leaf obtains an output w, which also has elements. We rewrite the equation 3:

| (17) | ||||

where are ont-hot label vector and predict score vector, H is the hessian matrix. In SecureBoost-MO, we use cross-entropy as the multi-classification loss function, so H is a diagonal matrix. By minimizing , we get

| (18) |

as the weight function and the split gain function:

| (19) |

| (20) |

SecureBoost-MO is based on the above leaf weight and split gain function.

3.3.2. Split Finding of SecureBoost-MO

The splitting finding process of Multi-output boosting tree has basically the same as that of the standard GBDT trees, but there are two differences:

-

•

In standard GBDT trees bin values are scalar , values. When calculating the histogram in MO-trees, aggregated terms in the bin are g, h vectors.

- •

As a result, in the vertical federated learning scenario, we need to design a new structure that supports encryption, decryption of g, h vectors, and support operations in the histogram calculation, histogram subtraction, and split-info building.

To fulfill this requirement, we design a Multi-Class packing algorithms based on our ciphertext optimization framework. Remind that represent the plaintext bit length for a pair of , . For a HE schema that has a plaintext space of bits, and we have classes in total, we can pack:

| (21) |

classes in a ciphertext, and in total we need:

| (22) |

integers to pack gh vectors of an instance. With this strategy, we are able to pack g and h vectors of a sample into a small amount of integers and then we encrypt them to yiled a ciphertext vector. Details of the above process are shown in Algorithm 1.

Figure 6 gives an intuitive illustration of Multi-Class GH Packing.

Following, we can describe the split-finding procedure of SecureBoost-MO:

-

•

At the beginning, the active party calculates the multi-classification gradient and hessian vectors for each instances. The active party packs and encrypts them using Algorithm 1, and gets a matrix [GH] which contains encrypted ciphertext vectors.

-

•

The active party sends [GH] to all the passive parties. The passive parties calculate histograms and construct split-info directly on ciphertext vectors.

-

•

The active party decrypts split-info and recovers accumulated g, h vectors using Algorithm 2 and finds out global optimal split node.

3.4. Other Optimization

We also adopt two simple yet efficient engineering optimization methods in SecureBoost+ that are commonly used in the existing tree-boosting framework: GOSS (Ke et al., 2017) and Sparse Optimization.

4. Experiments

In this section, we conduct several experiments to verify the efficiency of SecureBoost+.

4.1. Setup

Baseline: We use Secureboost provided in FATE v1.5 as the federated learning algorithm baseline and XGBoost as non-federated algorithm baseline.

Environment: Our experiments are conducted on two machines which are active and passive party respectively. Each machine has 16 cores and 32GB RAM. They are deployed in intranet with a network speed of 1GBps.

Hyper-parameters: For all experiments, key hyper-parameters settings are uniform: We set , (In XGBoost the split method is ’hist’) and . For encryption parameters, we use as the encryption schema, the . For the efficiency of conducting experiments, our baseline methods will run trees. In our framework SecureBoost+, we use goss subsample, set the and the . In the Layered Tree Mode, set the and , and in the Mix Tree Mode every party is responsible for building tree.

Datasets: We evaluate our framework on seven open data sets to test model performance and training speed. The detail of datasets is listed in the Table 1. The data sets contain large-instances and high-dimensional data.

-

•

The first four data sets are for binary classification tasks:

Give-credit111https://github.com/FederatedAI/FATE/tree/master/examples/data: A bank credit binary-classification data set. It contains 150, 000 instances and 10 features. It is extracted from UCI Credit Card data set and is a standard data set in FATE.

Susy and Higgs: The data sets are to predict physics events. They have million scales of instances and are widely used in machine learning training speed evaluation (Chen and Guestrin, 2016)(Fu et al., 2019).

Epsilon: It is a binary data set from PASCAL Challenge 2008. It not only contains a large number of samples but also contains 2000 features. So It is an ideal data set to test the performance on the large-scale and high-dimensional modeling(Dorogush et al., 2018).

-

•

Three Multi-classification data sets are prepared for verifying the efficiency of SeucreBoost-MO:

Sensorless: It is for sensorless drive diagnosis.

Covtype: It is for predicting 7 types of forest covers.

SVHN: It is a street-view digits image classification task.

We choose these three multi-classification data sets because they have more classes of labels while they have relatively more instances.

We vertically and equally divide every data set into two to make data sets for the active and passive party.

| datasets | # instance | # features | # active feature | # passive features | # labels | task type |

|---|---|---|---|---|---|---|

| Give credit | 150,000 | 10 | 5 | 5 | 2 | binary classification |

| Susy | 5,000,000 | 18 | 4 | 14 | 2 | binary classification |

| Higgs | 11,000,000 | 28 | 13 | 15 | 2 | binary classification |

| Epsilon | 400,000 | 2000 | 1000 | 1000 | 2 | binary classification |

| Sensorless | 58,509 | 48 | 24 | 24 | 11 | multi-classification |

| Covtype | 581,012 | 54 | 27 | 27 | 7 | multi-classification |

| SVHN | 99,289 | 3072 | 1536 | 1536 | 10 | multi-classification |

4.2. Ciphertext Operation Optimization Evaluation

In this section, we will conduct experiments to compare SecureBoost+ with the Baseline algorithms in the setting, which ciphertext operation optimization is applied in the SecureBoost+. SecureBoost+_Ciphertext is defined as SecureBoost+ with ciphertext operation optimization.

4.2.1. Training Time

We conduct experiments to compare the training time of SecureBoost+ with ciphertext operation optimization, and SecreuBoost on the four binary classification data sets. We only consider the time spent on tree building and ignore the time spent on non-tree building, such as time spent on data I/O, feature engineering and evaluation. For both SecureBoost+ and SecreuBoost, we both build 25 trees, and calculate the average time spent on each tree. The results are showed in Figure 7. We can see that the proposed SecureBoost+ obviously outperforms SecureBoost: In the four datasets, Secureboost+ are 6.63x, 6.03x, 7.33x, 22x faster than SecureBoost. From the experimental results, we can find that with the increase of the number of instances and feature dimension, SecureBoost+ will have more obvious advantages.

4.2.2. Model Performance

In order to evaluate the impact of ciphertext operation optimization on the model performance, we compare the model performance of SecureBoost+, XGBoost and SecureBoost. We use AUC score as the model performance evaluation metric. The results are shown in Table 2. From the results, we can see that SecureBoost+ performs just as well as XGBoost and SecureBoost in this setting.

4.3. Training Mechanism Optimization Evaluation

In this section, we will conduct experiments to compare SecureBoost+ with the Baseline algorithms in the setting, which training mechanism optimizations are applied in the SecureBoost+. It should be noted that in our experiment, when we enable Mix Tree Mode or Layered Tree Mode in the SecureBoost+, we use ciphertext operation optimization in the SecureBoost+ by default. SecureBoost+_Mix is defined as SecureBoot+ with the mix tree mode, while SecureBoost+_Layered is defined as SecureBoot+ with layered tree mode.

4.3.1. Training Time

We conduct experiments to compare the training time of SecureBoost+ with two training mechanism optimizations, SecreuBoost+ with ciphertext operation optimization, and SecureBoost on the four binary classification datasets. The results are showed in Figure 7. It shows the average tree building time spent on each tree. It is no doubt that SecureBoost+ with the mix tree mode or layered tree mode will build tree faster than SecureBoost+ with ciphertext operation optimization only, and also faster than SecureBoost. This is because it skips global split-finding and inter-party communications with certain strategies. In the four datasets, SecureBoost+_Mix are 1.65x, 1.96x, 1.60x, 1.58x faster than SecureBoost+_Ciphertext, while SecureBoost+_Mix are 10.96x, 11.80x, 11.69x, 35.30x faster than SecureBoost. In the four datasets, SecureBoost+_Layered are 1.15x, 1.13x, 1.10x, 1.37x faster than SecureBoost+_Ciphertext., while SecureBoost+_Layered are 7.64x, 6.83x, 8.09x, 30.77x faster than SecureBoost.

4.3.2. Model Performance

We will pay much attention to the model performance of SecureBoost+ with the mix tree mode and the layered tree mode since their strategies intentionally skip the global split finding on every tree node. The results are shown in Table 2. SecureBoost+_Mix and SecureBoost+_Layered have slight performance loss compared to XGBoost and SecureBoost+_Ciphertext, but the losses are very small. According to our experimental analysis, the two mode sometimes require a few more trees to catch up with the performances of SecureBoost+_Ciphertext. But from the result of Epsilon data set, we believe that the two training mechanism can obviously accelerate training when the data set contains high dimensional features and features are balanced distributed.

| Dataset | XGBoost | SecureBoost | SecureBoost+_Ciphertext | SecureBoost+_Mix | SecureBoost+_Layered |

|---|---|---|---|---|---|

| Give-credit | 0.872 | 0.874 | 0.873 | 0.87 | 0.871 |

| Susy | 0.864 | 0.873 | 0.873 | 0.869 | 0.87 |

| Higgs | 0.808 | 0.806 | 0.8 | 0.795 | 0.796 |

| Epsilon | 0.897 | 0.897 | 0.894 | 0.894 | 0.894 |

4.4. SecureBoost-MO Optimization Evaluation

In this section, we will conduct multi-classification task experiments to evaluate SecureBoost+ with SecureBoost-MO optimization. We consider XGBoost and SecureBoost+_Ciphertext as baseline.

4.4.1. Training Time

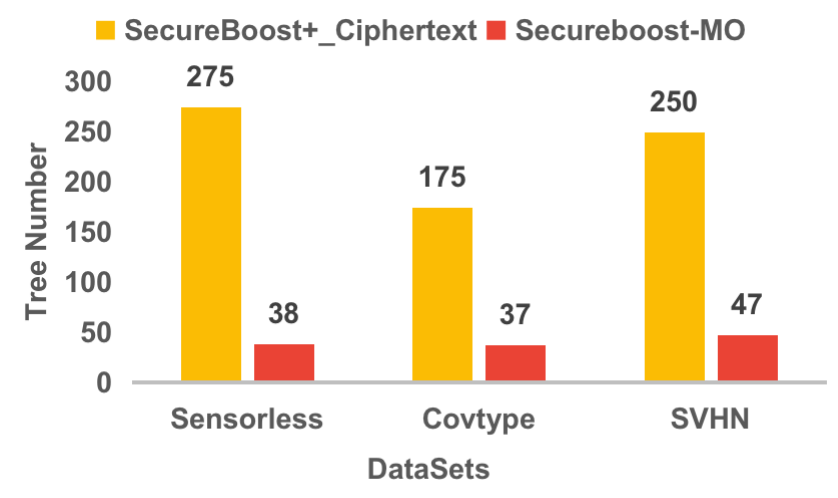

As shown in Figure 8, in the multi-classification training task with 25 epoches, SecureBoost+_Ciphertext needs to build 275, 175 and 250 trees respectively in the Sensorless, Covtype, SVHN datasets, while SecureBoost-MO only needs to build 38, 37, 47 trees respectively. The tree numbers of SecureBoost-MO is far less than SecureBoost+_Ciphertext.

We conduct experiments to compare the training time of SecureBoost-MO with SecureBoost+_Ciphertext on the three multi-classification datasets. It should be noted that in this experiment, the time is the total training time, not the training time for each tree. In the SecureBoost+_Ciphertext, we run 25 epoch, which has 275 trees in the Sensorless dataset, while in the SecureBoost-MO, we need to build 38 trees to achieve the similar model performance. The results are showed in Figure 9. We can see that SecureBoost-MO obviously outperforms SecureBoost+_Ciphertext: In the three datasets, SecureBoost-MO are 3.92x, 3.72x, 1.57x faster than SecureBoost+_Ciphertext.

4.4.2. Model Performance

We use accuracy scores as the model performance evaluation metric in the multi-classification tasks. The results are shown in Table 3. The results show that SecureBoost+_Ciphertext has relatively better accuracy scores than XGBoost in some datasets, and SecureBoost-MO performs as well as SecureBoost+_Ciphertext.

| Data set | XGBoost | SecureBoost+_CT | SecureBoost-MO |

|---|---|---|---|

| Sensorless | 0.999 | 0.992 | 0.994 |

| Covtype | 0.78 | 0.806 | 0.807 |

| SVHN | 0.686 | 0.686 | 0.685 |

5. Conclusion

In this work, we proposed SecureBoost+, a large-scale and high-performance vertical federated gradient boosting decision tree framework. Based on Secureboost, we propose several optimization schemes, including Ciphertext Operation Optimization, Training Mechanism Optimization and SecureBoost-MO Optimization. These optimization schemes cover binary and multi-classification task scenarios. The experimental results show that the speed of SecureBoost+ is significantly faster than that of SecureBoost, and the accuracy of SecureBoost+ is comparable to that of SecureBoost and XGBoost. With the increase of the number of instances and feature dimension, SecureBoost+ will have more obvious advantages.

References

- (1)

- Cao et al. (2019) Shaosheng Cao, XinXing Yang, Cen Chen, Jun Zhou, Xiaolong Li, and Yuan Qi. 2019. Titant: Online real-time transaction fraud detection in ant financial. arXiv preprint arXiv:1906.07407 (2019).

- Chai et al. (2020) Di Chai, Leye Wang, Kai Chen, and Qiang Yang. 2020. Secure federated matrix factorization. IEEE Intelligent Systems (2020).

- Chen et al. (2021) Chaochao Chen, Jun Zhou, Li Wang, Xibin Wu, Wenjing Fang, Jin Tan, Lei Wang, Alex X Liu, Hao Wang, and Cheng Hong. 2021. When homomorphic encryption marries secret sharing: Secure large-scale sparse logistic regression and applications in risk control. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 2652–2662.

- Chen and Guestrin (2016) Tianqi Chen and Carlos Guestrin. 2016. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining. 785–794.

- Cheng et al. (2021) Kewei Cheng, Tao Fan, Yilun Jin, Yang Liu, Tianjian Chen, Dimitrios Papadopoulos, and Qiang Yang. 2021. Secureboost: A lossless federated learning framework. IEEE Intelligent Systems 36, 6 (2021), 87–98.

- Dorogush et al. (2018) Anna Veronika Dorogush, Vasily Ershov, and Andrey Gulin. 2018. CatBoost: gradient boosting with categorical features support. arXiv preprint arXiv:1810.11363 (2018).

- Fu et al. (2019) Fangcheng Fu, Jiawei Jiang, Yingxia Shao, and Bin Cui. 2019. An experimental evaluation of large scale GBDT systems. arXiv preprint arXiv:1907.01882 (2019).

- Fu et al. (2021) Fangcheng Fu, Yingxia Shao, Lele Yu, Jiawei Jiang, Huanran Xue, Yangyu Tao, and Bin Cui. 2021. Vf2boost: Very fast vertical federated gradient boosting for cross-enterprise learning. In Proceedings of the 2021 International Conference on Management of Data. 563–576.

- Fu et al. (2022) Fangcheng Fu, Huanran Xue, Yong Cheng, Yangyu Tao, and Bin Cui. 2022. Blindfl: Vertical federated machine learning without peeking into your data. In Proceedings of the 2022 International Conference on Management of Data. 1316–1330.

- Hardy et al. (2017) Stephen Hardy, Wilko Henecka, Hamish Ivey-Law, Richard Nock, Giorgio Patrini, Guillaume Smith, and Brian Thorne. 2017. Private federated learning on vertically partitioned data via entity resolution and additively homomorphic encryption. arXiv preprint arXiv:1711.10677 (2017).

- Kairouz et al. (2021) Peter Kairouz, H Brendan McMahan, Brendan Avent, Aurélien Bellet, Mehdi Bennis, Arjun Nitin Bhagoji, Kallista Bonawitz, Zachary Charles, Graham Cormode, Rachel Cummings, et al. 2021. Advances and open problems in federated learning. Foundations and Trends® in Machine Learning 14, 1–2 (2021), 1–210.

- Kang et al. (2022a) Yan Kang, Yuanqin He, Jiahuan Luo, Tao Fan, Yang Liu, and Qiang Yang. 2022a. Privacy-preserving federated adversarial domain adaptation over feature groups for interpretability. IEEE Transactions on Big Data (2022).

- Kang et al. (2022b) Yan Kang, Yang Liu, and Xinle Liang. 2022b. FedCVT: Semi-supervised Vertical Federated Learning with Cross-view Training. ACM Trans. Intell. Syst. Technol. 13, 4 (2022). https://doi.org/10.1145/3510031

- Ke et al. (2017) Guolin Ke, Qi Meng, Thomas Finley, Taifeng Wang, Wei Chen, Weidong Ma, Qiwei Ye, and Tie-Yan Liu. 2017. Lightgbm: A highly efficient gradient boosting decision tree. Advances in neural information processing systems 30 (2017), 3146–3154.

- Li et al. (2020) Qinbin Li, Zeyi Wen, and Bingsheng He. 2020. Practical federated gradient boosting decision trees. In Proceedings of the AAAI conference on artificial intelligence, Vol. 34. 4642–4649.

- Liu et al. (2021) Yang Liu, Tao Fan, Tianjian Chen, Qian Xu, and Qiang Yang. 2021. FATE: An Industrial Grade Platform for Collaborative Learning With Data Protection. Journal of Machine Learning Research 22, 226 (2021), 1–6. http://jmlr.org/papers/v22/20-815.html

- Liu et al. (2020) Yang Liu, Yan Kang, Chaoping Xing, Tianjian Chen, and Qiang Yang. 2020. A secure federated transfer learning framework. IEEE Intelligent Systems 35, 4 (2020), 70–82.

- Liu et al. (2024) Yang Liu, Yan Kang, Tianyuan Zou, Yanhong Pu, Yuanqin He, Xiaozhou Ye, Ye Ouyang, Ya-Qin Zhang, and Qiang Yang. 2024. Vertical Federated Learning: Concepts, Advances, and Challenges. IEEE Transactions on Knowledge and Data Engineering (2024). https://doi.org/10.1109/TKDE.2024.3352628

- McMahan et al. (2017) Brendan McMahan, Eider Moore, Daniel Ramage, Seth Hampson, and Blaise Aguera y Arcas. 2017. Communication-efficient learning of deep networks from decentralized data. In Artificial intelligence and statistics. PMLR, 1273–1282.

- Paillier (1999) Pascal Paillier. 1999. Public-key cryptosystems based on composite degree residuosity classes. In International conference on the theory and applications of cryptographic techniques. Springer, 223–238.

- Shahbazi and Byun (2019) Zeinab Shahbazi and Yung-Cheol Byun. 2019. Product recommendation based on content-based filtering using XGBoost classifier. Int. J. Adv. Sci. Technol 29 (2019), 6979–6988.

- Voigt and Von dem Bussche (2017) Paul Voigt and Axel Von dem Bussche. 2017. The eu general data protection regulation (gdpr). A Practical Guide, 1st Ed., Cham: Springer International Publishing 10 (2017), 3152676.

- Wang et al. (2018) Xiang Wang, Xiangnan He, Fuli Feng, Liqiang Nie, and Tat-Seng Chua. 2018. Tem: Tree-enhanced embedding model for explainable recommendation. In Proceedings of the 2018 World Wide Web Conference. 1543–1552.

- Yang et al. (2019a) Kai Yang, Tao Fan, Tianjian Chen, Yuanming Shi, and Qiang Yang. 2019a. A quasi-newton method based vertical federated learning framework for logistic regression. arXiv preprint arXiv:1912.00513 (2019).

- Yang et al. (2019b) Qiang Yang, Yang Liu, Tianjian Chen, and Yongxin Tong. 2019b. Federated machine learning: Concept and applications. ACM Transactions on Intelligent Systems and Technology (TIST) 10, 2 (2019), 1–19.

- Zhang et al. (2020) Chengliang Zhang, Suyi Li, Junzhe Xia, Wei Wang, Feng Yan, and Yang Liu. 2020. Batchcrypt: Efficient homomorphic encryption for cross-silo federated learning. In 2020 USENIX Annual Technical Conference (USENIXATC 20). 493–506.

- Zhang et al. (2018) Qiao Zhang, Cong Wang, Hongyi Wu, Chunsheng Xin, and Tran V Phuong. 2018. GELU-Net: A Globally Encrypted, Locally Unencrypted Deep Neural Network for Privacy-Preserved Learning.. In IJCAI. 3933–3939.

- Zhang and Zhu (2020) Yifei Zhang and Hao Zhu. 2020. Additively homomorphical encryption based deep neural network for asymmetrically collaborative machine learning. arXiv preprint arXiv:2007.06849 (2020).

- Zhang and Jung (2020) Zhendong Zhang and Cheolkon Jung. 2020. GBDT-MO: Gradient-Boosted Decision Trees for Multiple Outputs. IEEE Transactions on Neural Networks and Learning Systems (2020).