Secure Watermark for Deep Neural Networks with Multi-task Learning

Abstract

Deep neural networks are playing an important role in many real-life applications. After being trained with abundant data and computing resources, a deep neural network model providing service is endowed with economic value. An important prerequisite in commercializing and protecting deep neural networks is the reliable identification of their genuine author. To meet this goal, watermarking schemes that embed the author’s identity information into the networks have been proposed. However, current schemes can hardly meet all the necessary requirements for securely proving the authorship and mostly focus on models for classification. To explicitly meet the formal definitions of the security requirements and increase the applicability of deep neural network watermarking schemes, we propose a new framework based on multi-task learning. By treating the watermark embedding as an extra task, most of the security requirements are explicitly formulated and met with well-designed regularizers, the rest is guaranteed by using components from cryptography. Moreover, a decentralized verification protocol is proposed to standardize the ownership verification. The experiment results show that the proposed scheme is flexible, secure, and robust, hence a promising candidate in deep learning model protection.

1 Introduction

Deep neural network (DNN) is spearheading artificial intelligence with broad application in assorted fields including computer vision [36, 58, 19], natural language processing [17, 53, 10], internet of things [30, 14, 41], etc. Increasing computing resources and improved algorithms have boosted DNN as a trustworthy agent that outperforms humans in many disciplines.

To train a DNN is much more expensive than to use it for inference. A large amount of data has to be collected, preprocessed, and fed into the model. Following the data preparation is designing the regularizers, tuning the (hyper)parameters, and optimizing the DNN structure. Each round of tuning involves thousands of epochs of backpropagation, whose cost is about 0.005$ averagely regarding electricity consumption.111Assume that one-kilowatt-hour electricity costs 0.1$. One epoch for training a DNN can consume over three minutes on four GPUs, each functions at around 300W. On the contrary, using a published DNN is easy, a user simply propagates the input forward. Such an imbalance between DNN production and deployment calls for recognizing DNN models as intellectual properties and designing better mechanisms for authorship identification against piracy.

DNN models, as other multi-media objects, are usually transmitted in public channels. Hence the most influential methods for protecting DNNs as intellectual properties is digital watermark [59]. To prove the possession of an image, a piece of music, or a video, the owner resorts to a watermarking method that encodes its identity information into the media. After compression, transmission, and slight distortion, a decoder should be able to recognize the identity from the carrier [4].

As for DNN watermarking, researchers have been following a similar line of reasoning [48]. In this paper, we use host to denote the genuine author of a DNN model. The adversary is one who steals and publishes the model as if it is the host. To add watermarks to a DNN, some information is embedded into the network along with the normal training data. After adversaries manage to steal the model and pretend to have built it on themselves, a verification process reveals the hidden information in the DNN to identify the authentic host. In the DNN setting, watermark as additional security insurance should not sacrifice the model’s performance. This is called the functionality-preserving property. Meanwhile, the watermark should be robust against the adversaries’ modifications to the model. Many users fine-tune (FT) the downloaded model on a smaller data set to fit their tasks. In cases where the computational resource is restricted (especially in the internet of things), a user is expected to conduct neuron pruning (NP) to save energy. A prudent user can conduct fine-pruning (FP) [31] to eliminate potential backdoors that have been inserted into the model. These basic requirements, together with other concerns for integrity, privacy, etc, make DNN watermark a challenge for both machine learning and security communities.

The diversity of current watermarking schemes originates from assumptions on whether or not the host or the notary has white-box access to the stolen model.

If the adversary has stolen the model and only provided an API as a service then the host has only black-box access to the possibly stolen model. In this case, the backdoor-based watermarking schemes are preferred. A DNN with a backdoor yields special outputs on specific inputs. For example, it is possible to train an image classification DNN to classify all images with a triangle stamp on the upper-left corner as cats. Backdoor-based watermark was pioneered by [59], where a collection of images is selected as the trigger set to actuate misclassifications. It was indicated in [3, 60] that cryptological protocols can be used with the backdoor-based watermark to prove the integrity of the host’s identity. For a more decent way of generating triggers, Li et al. proposed in [29] to adopt a variational autoencoder (VAE), while Le Merrer et al. used adversarial samples as triggers [26]. Li et al. proposed Wonder Filter that assigns some pixels to values in and adopted several tricks to guarantee the robustness of watermark embedding in [27]. In [57], Yao et al. illustrated the performance of the backdoor-based watermark in transfer learning and concluded that it is better to embed information in the feature extraction layers.

The backdoor-based watermarking schemes are essentially insecure given various methods of backdoor elimination [9, 32, 28]. Liu et al. showed in [33] that a heuristic and biomorphic method can detect backdoor in a DNN. In [44], Shafieinejad et al. claimed that it is able to remove watermarks given the black-box access of the model. Namba et al. proposed another defense using VAE against backdoor-based watermarking methods in [35]. Even without these specialized algorithms, model tuning such as FP [31, 47] can efficiently block backdoor and hence the backdoor-based watermark.

If the host can obtain all the parameters of the model, known as the white-box access, then the weight-based watermarking schemes are in favor. Although this assumption is strictly stronger than that for the black-box setting, its practicality remains significant. For example, the sponsor of a model competition can detect plagiarists that submit models slightly tuned from those of other contestants by examing the watermark. This legitimate method is better than checking whether two models perform significantly different on a batch of data, which is still adopted by many competitions.222http://host.robots.ox.ac.uk:8080/leaderboard/main_bootstrap.php As another example, the investor of a project can verify the originality of a submitted model from its watermark. Such verification prevents the tenderers from submitting a (modified) copy or an outdated and potentially backdoored model.

Uchida et al. firstly revealed the feasibility of incorporating the host’s identity information into the weights of a DNN in [48]. The encoding is done through a regularizer that minimizes the distance between a specific weight vector and a string encoding the author’s identity. The method in [16] is an attempt of embedding message into the model’s weight in a reversible manner so that a trusted user can eliminate the watermark’s influence and obtain the clean model. Instead of weights, Davish et al. proposed Deepsigns[12] that embeds the host’s identity into the statistical mean of the feature maps of a selected collection of samples, hence better protection is achieved.

So far, the performance of a watermarking method is mainly measured by the decline of the watermarked model’s performance on normal inputs and the decline of the identity verification accuracy against model fine-tuning and neuron pruning. However, many of the results are empirical and lack analytic basis [48, 12]. Most watermarking methods are only designed and examined for DNNs for image classification, whose backdoors can be generated easily. This fact challenges the universality of adopting DNN watermark for practical use. Moreover, some basic security requirements against adversarial attacks have been overlooked by most existing watermarking schemes. For example, the method in [59] can detect the piracy, but it cannot prove to any third-party that the model belongs to the host. As indicated by Auguste Kerckhoff’s principle [24], the security of the system should rely on the secret key rather than the secrecy of the algorithm. Methods in [59, 12, 48] are insecure in this sense since an adversary knowing the watermark algorithm can effortlessly claim the authorship. The influence of watermark overwriting is only discussed in [3, 27, 12]. The security against ownership piracy is only studied in [60, 27, 16].

In order to overcome these difficulties, we propose a new white-box watermarking model for DNN based on multi-task learning (MTL) [7, 43, 22]. By turning the watermark embedding into an extra task, most security requirements can be satisfied with well-designed regularizers. This extra task has a classifier independent from the backend of the original model, hence it can verify the ownership of models designed for tasks other than classification. Cryptological protocols are adopted to instantiate the watermarking task, making the proposed scheme more secure against watermark detection and ownership piracy. To ensure the integrity of authorship identification, a decentralized verification protocol is designed to authorize the time stamp of the ownership and invalid the watermark overwriting attack. The major contributions of our work are three-fold:

-

1.

We examine the security requirements for DNN watermark in a comprehensive and formal manner.

-

2.

A DNN watermarking model based on MTL, together with a decentralized protocal, is proposed to meet all the security requirements. Our proposal can be applied to DNNs for tasks other than image classification, which were the only focus of previous works.

-

3.

Compared with several state-of-the-art watermarking schemes, the proposed method is more robust and secure.

2 Threat Model and Security Requirements

It is reasonable to assume that the adversary possesses fewer resources than the host, e.g., the entire training data set is not exposed to the adversary, and/or the adversary’s computation resources are limited. Otherwise, it is unnecessary for the adversary to steal the model. Moreover, we assume that the adversary can only tune the model by methods such as FT, NP or FP. Such modifications are common attacks since the training code is usually published along with the trained model. Meanwhile, such tuning is effective against systems that only use the hash of the model as the verification. On the other hand, it is hard and much involved to modify the internal computational graph of a model. It is harder to adopt model extraction or distillation that demands much data and computation [23, 40], yet risks performance and the ability of generalization. Assume that the DNN model is designed to fulfil a primary task, , with dataset , data space , label space and a metric on .

2.1 Threat Model

We consider five major threats to the DNN watermarks.

2.1.1 Model tuning

An adversary can tune by methods including: (1) FT: running backpropagation on a local dataset, (2) NP: cut out links in that are less important, and (3) FP: pruning unnecessary neurons in and fine-tuning . The adversary’s local dataset is usually much smaller than the original training dataset for and fewer epochs are needed. FT and NP can compromise watermarking methods that encode information into ’s weight in a reversible way [16]. Meanwhile, [31] suggested that FP can efficiently eliminate backdoors from image classification models and watermarks within.

2.1.2 Watermark detection

If the adversary can distinguish a watermarked model from a clean one, then the watermark is of less use since the adversary can use the clean models and escape copyright regulation. The adversary can adopt backdoor screening methods [56, 50, 49] or reverse engineering [20, 5] to detect and possibly eliminate backdoor-based watermarks. For weight-based watermarks, the host has to ensure that the weights of a watermarked model do not deviate from that of a clean model too much. Otherwise, the property inference attack [15] can distinguish two models.

2.1.3 Privacy concerns

As an extension to detection, we consider an adversary who is capable of identifying the host of a model without its permission as a threat to privacy. A watermarked DNN should expose no information about its host unless the host wants to. Otherwise, it is possible that models be evaluated not by their performance but by their authors.

2.1.4 Watermark overwriting

Having obtained the model and the watermarking method, the adversary can embed its watermark into the model and declare the ownership afterward. Embedding an extra watermark only requires the redundancy of parameter representation in the model. Therefore new watermarks can always be embedded unless one proves that such redundancy has been depleted, which is generally impossible. A concrete requirement is: the insertion of a new watermark should not erase the previous watermarks.

For a model with multiple watermarks, it is necessary that an an incontrovertible time-stamp is included into ownership verification to break this redeclaration dilemma.

2.1.5 Ownership piracy

Even without tuning the parameters, model theft is still possible. Similar to [29], we define ownership piracy as attacks by which the adversary claims ownership over a DNN model without tuning its parameters or training extra learning modules. For zero-bit watermarking schemes (no secret key is involved, the security depends on the secrecy of the algorithm), the adversary can claim ownership by publishing a copy of the scheme. For a backdoor-based watermarking scheme that is not carefully designed, the adversary can detect the backdoor and claim that the backdoor as its watermark.

2.2 Formulating the Watermarking Scheme

We define a watermarking scheme with security parameters as a probabilistic algorithm WM that maps (the description of the task, together with the training dataset ), a description of the structure of the DNN model and a secret key denoted by key to a pair :

where is the watermarked DNN model and verify is a probabilistic algorithm with binary output for verifying ownership. To verify the ownership, the host provides verify and key. A watermarking scheme should satisfy the following basic requirements for correctness:

| (1) |

| (2) |

where reflects the security level. Condition (1) suggests that the verifier should always correctly identify the authorship while (2) suggests that it only accepts the correct key as the proof and it should not mistake irrelevant models as the host’s.

The original model trained without being watermarked is denoted by . Some researchers [16] define WM as a mapping from to , which is a subclass of our definition.

2.3 Security Requirements

Having examined the toolkit of the adversary, we formally define the security requirements for a watermarking scheme.

2.3.1 Functionality-preserving

The watermarked model should perform slightly worse than, if not as well as, the clean model. The definition for this property is:

| (3) |

which can be examined a posteriori. However, it is hard to explicitly incorporate this definition into the watermarking scheme. Instead, we resort to the following definition:

| (4) |

Although it is stronger than (3), (4) is a tractable definition. We only have to ensure that the parameters of does not deviate from those of too much.

2.3.2 Security against tuning

After being tuned with the adversary’s dataset , the model’s parameters shift and the verification accuracy of the watermark might decline. Let denotes a model obtained by tuning with . A watermarking scheme is secure against tuning iff:

| (5) |

To meet (5), the host has to simulate the effects of tuning and make insensitive to them in the neighbour of .

2.3.3 Security against watermark detection

According to [52], one definition for the security against watermark detection is: no PPT can distinguish a watermarked model from a clean one with nonnegligible probability. Although this definition is impractical due to the lack of a universal backdoor detector, it is crucial that the watermark does not differentiate a watermarked model from a clean model too much. Moreover, the host should be able to control the level of this difference by tuning the watermarking method. Let be a parameter within WM that regulates such difference, it is desirable that

| (6) |

where is the model returned from WM with .

2.3.4 Privacy-preserving

To protect the host’s privacy, it is sufficient that any adversary cannot distinguish between two models watermarked with different keys. Fixing the primary task and the structure of the model , we first introduce an experiment in which an adversary tries to identify the host of a model:

| Security requirement |

|

|

|

|

|

|

|

|

|

Ours. | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Functionality-preserving. | E | E | P | E | P | E | E | P | E | P | ||||||||||||||||||

| Security against tuning. | N | E | E | E | E | E | N | E | N | P | ||||||||||||||||||

|

N | N | N | N | N | P | P | N | N | P | ||||||||||||||||||

| Privacy-preserving. | N | N | N | N | N | N | N | N | N | P | ||||||||||||||||||

|

N | E | N | N | E | E | N | N | N | E | ||||||||||||||||||

|

III | II | I | I | II | III | II | I | III | III | ||||||||||||||||||

| P means the security requirement is claimed to be held by proof or proper regularizers. E means an empirical evaluation | ||||||||||||||||||||||||||||

| on the security was provided. N means not discussion was given or insecure. | ||||||||||||||||||||||||||||

Definition 1.

If for all PPT adversary , the probability that wins is upper bounded by , where is a negligible function, then WM is privacy-preserving.

The intuition behind this definition is: an adversary cannot identify the host from the model, even if the number of candidates has been reduced to two. Almost all backdoor-based watermarking schemes are insecure under this definition. In order to protect privacy, it is crucial that WM be a probabilistic algorithm and verify depend on key.

2.3.5 Security against watermark overwriting

Assume that the adversary has watermarked with another secret key using a subprocess of WM and obtained : . The overwriting watermark should not invalid the original one, formally, for any legal :

| (7) |

During which the randomness in choosing , generating , and computing verify is integrated out. A watermarking scheme meets (7) is defined to be secure against watermark overwriting. This property is usually examined empirically in the literature [3, 12, 27].

2.3.6 Security against ownership piracy

In an ownership piracy attack, the adversary pirate a model by recovering key and forging verify through querying (or verify if available). We define three levels of security according to the efforts needed to pirate a model.

-

1.

Level I: The adversary only needs to wrap or query it for a constant number of times. All zero-bit watermarking schemes belong to this level.

-

2.

Level II: The adversary has to query for a number of times that is a polynomial function of the security parameter. The more the adversary queries, the more likely it is going to succeed in pretending to be the host. The key and verify, in this case, is generally simple. For example, [12, 3] are of this level of security.

- 3.

Watermarking schemes of level I and II can be adopted as theft detectors. But the host can hardly adopt a level I/II scheme to convince a third-party about ownership. Using a watermarking scheme of level III, a host can prove to any third-party the model’s possessor. This is the only case that the watermark has forensics value.

The scheme in [26] is a zero-bit watermarking scheme. The method proposed by Zhang et al. in [59] adopts marked images or noise as the backdoor triggers. But only a few marks that are easily forgeable were examined. The protocol of Uchida et al. [48] can be enhanced into level III secure against ownership piracy only if an authority is responsible for distributing the secret key, e.g. [55]. But it lacks covertness and the privacy-preserving property.

The VAE adopted in [29] has to be used conjugately with a secret key that enhances the robustness of the backdoor. The adversary can collect a set of mistaken samples from one class, slightly disturb them, and claim to have watermarked the neural network. To claim the ownership of a model watermarked by Adi et al. [3], the adversary samples its collection of triggers from the mistaken samples, encrypts them with a key, and submits the encrypted pairs. The perfect security of their scheme depends on the model to perform nearly perfect in the primary task, which is unrealistic in practice. As for DeepSigns [12], one adversary can choose one class and compute the empirical mean of the output of the activation functions (since the outliers are easy to detect) then generate a random matrix as the mask and claim ownership.

The scheme in [60] is of level III secure against ownership piracy as proved in the original paper. So is the method in [27] since it is generally hard to guess the actual pattern of the Wonder Filter mask from a space with size , where is the number of pixels of the mask. The scheme by Guan et al. in [16] is secure but extremely fragile, hence is out of the scope of practical watermarking schemes.

A comprehensive summary of established watermarking schemes judged according to the enumerated security requirements is given in Table 1.

3 The Proposed Method

3.1 Motivation

It is difficult for the backdoor-based or weight-based watermarking methods to formally meet all the proposed security requirements. Hence, we design a new white-box watermarking method for DNN model protection using multiple task learning. The watermark embedding is designed as an additional task . A classifier for is built independent to the backend for . After training and watermark embedding, only the network structure for is published.

Reverse engineering or backdoor detection as [49] cannot find any evidence of the watermark. Since no trigger is embedded in the published model’s backend. On the other hand, common FT methods such as fine-tune last layer (FTLL) or re-train last layers (RTLL) [3] that only modifies the backend layers of the model have no impact to our watermark.

Under this formulation, the functionality-preserving property, the security against tuning, the security against watermark detection and privacy-preserving can be formally addressed. A decently designed ensures the security against ownership piracy as well, making the MTL-based watermarking scheme a secure and sound option for model protection.

To better handle the forensic difficulties involving overwritten watermark and key management, we introduce a decentralized consensus protocol to authorize the time stamp embedded with the watermarks.

3.2 Overview

The proposed model consists of the MTL-based watermarking scheme and the decentralized verification protocol.

3.2.1 The MLT-based watermarking scheme

The structure of our watermarking scheme is illustrated in Fig.1. The entire network consists of the backbone network and two independent backends: and . The published model is the backbone followed by . While is the watermarking branch for the watermarking task, in which takes the output of different layers from the backbone as its input. By having monitor the outputs of different layers of the backbone network, it is harder for an adversary to design modifications to invalid completely.

To produce a watermarked model, a host should:

-

1.

Generate a collection of samples using a pseudo-random algorithm with key as the random seed.

-

2.

Optimize the entire DNN to jointly minimize the loss on and . During the optimization, a series of regularizers are designed to meet the security requirements enumerated in Section 2.

-

3.

Publishes .

To prove its ownership over a model to a third-party:

-

1.

The host submits , and key.

-

2.

The third-party generates with key and combines with ’s backbone to build a DNN for .

-

3.

If the statistical test indicates that with ’s backbone performs well on then the third-party confirms the host’s ownership over .

3.2.2 The decentralized verification protocol

To enhance the reliability of the ownership protection, it is necessary to use a protocol to authorize the watermark of the model’s host. Otherwise any adversary who has downloaded can embed its watermark into it and pirate the model.

One option is to use an trusted key distribution center or a timing agency, which is in charge of authorizing the time stamp of the hosts’ watermarks. However, such centralized protocols are vulnerable and expensive. For this reason we resort to decentralized consensus protocols such as Raft [37] or PBFT [8], which were designed to synchronize message within a distributed community. Under these protocols, one message from a user is responded and recorded by a majority of clients within the community so this message becomes authorized and unforgeable.

Concretely, a client under this DNN watermarking protocol is given a pair of public key and private key. can publish a watermarked model or claim its ownership over some model by broadcasting:

Publishing a model: After finishing training a model watermarked withkey, obtains and . Then signs and broadcasts the following message to the entire community:

where denotes string concatenation, time is the time stamp, and hash is a preimage resistant hash function mapping a model into a string and is accessible for all clients. Other clients within the community verify this message using ’s public key, verify that time lies within a recent time window and write this message into their memory. Once is confirmed that the majority of clients has recorded its broadcast (e.g. when receives a confirmation from the current leader under the Raft protocol), it publishes .

Proving its ownership over a model : signs and broadcasts the following message:

where and are pointers to and . Upon receiving this request, any client can independently conduct the ownership proof. It firstly downloads the model from and examines its hash. Then it downloads and retrieves the Publish message from by . The last steps follow Section. 3.2.1. After finishing the verification, this client can broadcast its result as the proof for ’s ownership over the model in .

3.3 Security Analysis of the Watermark Task

We now elaborate the design of the watermarking task and analyze its security. For simplicity, is instantiated as a binary classification task, i.e., the output of the watermarking branch has two channels. To generate , key is used as the seed of a pseudo-random generator (e.g., a stream cipher) to generate , a sequence of different integers from the range , and a binary string of length , where .

For each type of data space , a deterministic and injective function is adopted to map each interger in into an element in . For example, when is the image domain, the mapping could be the QRcode encoder. When is the sequence of words in English, the mapping could map an integer into the -th word of the dictionary.333We suggest not to use function that encodes integers into terms that are similar to data in , especially to data of the same class. This increase the difficulty for to achieve perfect classification. Without loss of generality, let denotes the mapped data from the -th integer in . Both the pseudo-random generator and the functions that map integers into specialized data space should be accessible for all clients within the intellectual property protection community. Now we set:

where is the -th bit of l. We now merge the security requirements raised in Section 2 into this framework.

3.3.1 The correctness

To verify the ownership of a model to a host with key given , the process verify operates as Algo. 2.

If then has been trained to minimize the binary classification loss on , hence the test is likely to succeed in Algo. 2, this justifies the requirement from (1). For an arbitrary , the induced watermark training data can hardly be similar to . To formulate this intuition, consider the event where shares terms with , . With a pseudorandom generator, it is computationally impossible to distinguish from an sequence of randomly selected intergers. The same argument holds for and a random binary string of length . Therefore the probability of this event can be upper bounded by:

where . For an arbitrary , let then the probability that overlaps with with a portion of declines exponentially.

For numbers not appeared in , the watermarking branch is expected to output a random guess. Therefore if is smaller than a threshold then can hardly pass the statistical test in Algo.2 with big enough. So let

and be large enough would make an effective collision in the watermark dataset almost impossible. For simplicity, setting is sufficient.

In cases is replaced by an arbitrary model whose backbone structure happens to be consistent with , the output of the watermarking branch remains a random guess. This justifies the second requirement for correct verification (2).

To select the threshold , assume that the random guess strategy achieves an average accuracy of at most , where is a bias term which is assumed to decline with the growth of . The verification process returns 1 iff the watermark classifier achieves binary classification of accuracy no less than . The demand for security is that by randomly guessing, the probability that an adversary passes the test declines exponentially with . Let denotes the number of correct guessing with average accuracy , an adversary suceeds only if . By the Chernoff theorem:

where is an arbitrary nonnegative number. If is larger than by a constant independent of then is less than 1 with proper , reducing the probability of successful attack into negligibility.

3.3.2 The functionality-preserving regularizer

Denote the trainable parameters of the DNN model by w. The optimization target for takes the form:

| (8) |

where is the loss defined by and is a regularizer reflecting the prior knowledge on w. The normal training process computes the empirical loss in (8) by stochastically sampling batches and adopting gradient-based optimizers.

The proposed watermarking task adds an extra data dependent term to the loss function:

| (9) | ||||

where is the cross entropy loss for binary classification. We omitted the dependency of on key in this section for conciseness.

To train multiple tasks, we can minimize the loss function for multiple tasks (9) directly or train the watermarking task and the primary task alternatively [7]. Since is much smaller than , it is possible that does not properly converge when being learned simultaneously with . Hence we first optimize w according to the loss on the primary task (8) to obtain :

Next, instead of directly optimizing the network w.r.t. (9), the following loss function is minimized:

| (10) | ||||

where

| (11) |

By introducing the regularizer in (11), w is confined in the neighbour of . Given this constraint and the continuity of as a function of w, we can expect the functionality-preserving property defined in (4). Then the weaker version of functionality-preserving (3) is tractable as well.

3.3.3 The tuning regularizer

To be secure against adversary’s tuning, it is sufficient to make robust against tuning by the definition in (5). Although is unknown to the host, we assume that shares a similar distribution as . Otherwise the stolen model would not have the state-of-the-art performance on the adversary’s task. To simulate the influence of tuning, a subset of is firstly sampled as an estimation of : . Let w be the current configuration of the model’s parameter. Tuning is usually tantanmount to minimizing the empirical loss on by starting from w, which results in an updated parameter: . In practice, is obtained by replacing in (8) by and conducting a few rounds of gradient descents from w.

To achieve the security against tuning defined in (5), it is sufficient that the parameter w satisfies:

| (12) | ||||

The condition (12), Algo.1 together with the assumption that is similar to imply (5).

To exert the constraint in (12) to the training process, we design a new regularizer as follows:

| (13) |

Then the loss to be optimized is updated from (10) to:

| (14) |

defined by (13) can be understood as one kind of data augmentation for . Data augmentation aims to improve the model’s robustness against some specific perturbation in the input. This is done by proactively adding such perturbation to the training data. According to [45], data augmentation can be formulated as an additional regularizer:

| (15) |

3.3.4 Security against watermark detection

Consider the extreme case where . Under this configuration, the parameters of are frozen and only the parameters in are tuned. Therefore is exactly the same as and it seems that we have not insert any information into the model. However, by broadcasting the designed message, the host can still prove that it has obtained the white-box access to the model at an early time, which fact is enough for ownership verification. This justifies the security against watermark detection by the definition of (6), where casts the role of .

3.3.5 Privacy-preserving

Recall the definition of privacy-preserving in Section 2.3.4. We prove that, under certain configurations, the proposed watermarking method is privacy-preserving.

Theorem 1.

Let take the form of a linear classifier whose input dimensionality is . If then the watermarking scheme is secure against assignment detection.

Proof.

The VC-dimension of a linear classifier with channels is . Therefore for inputs with arbitrary binary labels, there exists one that can almost always perfectly classify them. Given and an arbitrary , it is possible forge such that with ’s backbone performs perfectly on . We only have to plug the parameters of into (14), set , and minimize the loss. This step ends up with a watermarked model and an evidence, , for . Hence for the experiment defined in Algo. 1, an adversary cannot identify the host’s key since evidence for both options are equally plausible. The adversary can only conduct a random guess, whose probability of success is . ∎

This theorem indicates that, the MTL-based watermarking scheme can protect the host’s privacy. Moreover, given , it is crucial to increase the input dimensionality of or using a sophiscated structure for to increase its VC-dimensionality.

3.3.6 Security against watermark overwriting

It is possible to meet the definition of the security against watermark overwriting in (7) by adding the perturbation of embedding other secret keys into . But this requires building other classifier structures and is expensive even for the host. For an adversary with insufficient training data, it is common to freeze the weights in the backbone layers as in transfer learning [38], hence (7) is satisfied. For general cases, an adversary would not disturb the backbone of the DNN too much for the sake of its functionality on the primary task. Hence we expect the watermarking branch to remain valid after overwriting.

We leave the examination of the security against watermark overwriting as an empirical study.

3.3.7 Security against ownership piracy

Recall that in ownership piracy, the adversary is not allowed to train its own watermark classifier. Instead, it can only forge a key given a model and a legal , this is possible if the adversary has participated in the proof for some other client. Now the adversary is to find a new key such that can pass the statistical test defined by the watermarking branch and . Although it is easy to find a set of intergers with half of them classified as and half by querying the watermarking branch as an oracle, it is hard to restore a legal from this set. The protocol should adopt a stream cipher secure against key recovery attack [42], which, by definition, blocks this sort of ownership piracy and makes the proposed watermarking scheme of level III secure against ownership piracy. If is kept secret then the ownership piracy is impossible. Afterall, ownership piracy is invalid when an authorized time stamp is avilable.

3.4 Analysis of the Verification Protocol

We now conduct the security analysis to the consensus protocal and solve the redeclaration dilemma.

To pirate a model under this protocol, an adversary must submit a legal key and the hash of a . If the adversary does not have a legal then this attack is impossible since the preimage resistance of hash implies that the adversary cannot forge such a watermark classifier afterwards. So this broadcast is invalid. If the adversary has managed to build a legal , compute its hash, but has not obtained the target model then the verification can hardly succeed since the output of with the backbone of an unknown network on the watermark dataset is random guessing. The final case is that the adversary has obtained the target model, conducted the watermark overwriting and redeclared the ownership. Recall that the model is published only if its host has successfully broadcast its Publish message and notarized its time. Hence the overwriting dilemma can be solved by comparing the time stamp inside contradictive broadcasts.

As an adaptive attack, one adversary participating in the proof of a host’s ownership over a model obtains the corresponding key and , with which it can erase weight-based watermarks [48, 55]. Embedding information into the outputs of the network rather than its weights makes the MTL-based watermark harder to erase. The adversary has to identify the decision boundary from and tune so samples drawn from key violates this boundary. This attack risks the model’s performance on the primary task, requires huge amont of data and computation resources and is beyond the competence of a model thief.

The remaining security risks are within the cryptological components and beyond the scope of our discussion.

4 Experiments and Discussions

4.1 Experiment Setup

To illustrate the flexibility of the proposed watermarking model, we considered four primary tasks: image classification (IC), malware classification (MC), image semantic segmentation (SS) and sentimental analysis (SA) for English. We selected four datasets for image classification, one dataset for malware classification, two datasets for semantic segmentation and two datasets for sentimental classification. The descriptions of these datasets and the corresponding DNN structures are listed in Table 2. ResNet [18] is a classical model for image processing. For the VirusShare dataset, we compiled a collection of 26,000 malware into images and adopted ResNet as the classifier [11]. Cascade mask RCNN (CMRCNN) [6] is a network architecture specialized for semantic segmentation. Glove [39] is a pre-trained word embedding that maps English words into numerical vectors, while bidirectional long short-term memory (Bi-LSTM) [21] is commonly used to analyze natural languages.

| Dataset | Description | DNN structure | ||||

| MNIST [13] | IC, 10 classes | ResNet-18 | ||||

|

IC, 10 classes | ResNet-18 | ||||

| CIFAR-10 [25] | IC, 10 classes | ResNet-18 | ||||

| CIFAR-100 [25] | IC, 100 classes | ResNet-18 | ||||

| VirusShare [1] | MC, 10 classes | ResNet-18 | ||||

|

SS, 2 classes |

|

||||

| VOC [2] | SS, 20 classes |

|

||||

| IMDb [34] | SA, 2 classes | Glove+Bi-LSTM | ||||

| SST [46] | SA, 5 classes | Glove+Bi-LSTM |

| Dataset | ’s performance | Regularizer configuration | |||||||||||||

| No regularizers. | and | ||||||||||||||

| MNIST | 99.6% |

|

|

|

|

||||||||||

|

93.3% |

|

|

|

|

||||||||||

| CIFAR-10 | 91.5% |

|

|

|

|

||||||||||

| CIFAR-100 | 67.7% |

|

|

|

|

||||||||||

| VirusShare | 97.4% |

|

|

|

|

||||||||||

|

0.79 | — |

|

— |

|

||||||||||

| VOC | 0.69 | — |

|

— |

|

||||||||||

| IMDb | 85.0% |

|

|

|

|

||||||||||

| SST | 75.4% |

|

|

|

|

||||||||||

| Dataset | Number of overwriting epochs | |||||

|---|---|---|---|---|---|---|

| 50 | 150 | 250 | 350 | |||

| MNIST | 1.0% | 1.5% | 1.5% | 2.0% | ||

|

2.0% | 2.5% | 2.5% | 2.5% | ||

| CIFAR-10 | 4.5% | 4.5% | 4.5% | 4.5% | ||

| CIFAR-100 | 0.0% | 0.5% | 0.9% | 0.9% | ||

| VirusShare | 0.0% | 0.5% | 0.5% | 0.5% | ||

|

0.5% | 1.0% | 1.0% | 1.0% | ||

| VOC | 1.3% | 2.0% | 2.1% | 2.1% | ||

| IMDb | 3.0% | 3.0% | 3.0% | 3.0% | ||

| SST | 2.5% | 3.0% | 3.0% | 2.5% | ||

For the first seven image datasets, was a two-layer perceptron that took the outputs of the first three layers from the ResNet as input. QRcode was adopted to generate . For the NLP datasets, the network took the structure in Fig. 2.

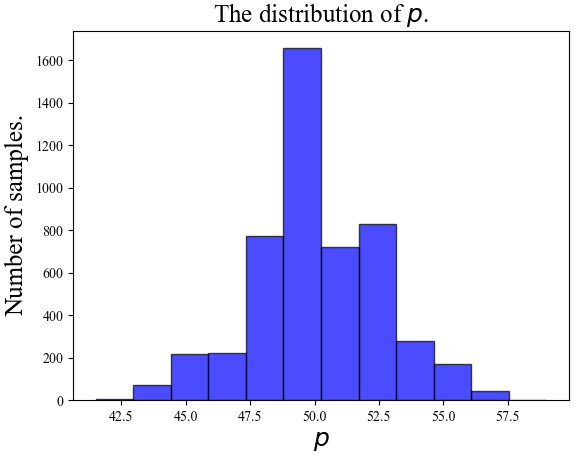

Throughout the experiments we set . To set the verification threshold in Algo. 2, we test the classification accuracy of across nine datasets over 5,000 s different from the host’s. The result is visualized in Fig. 3, from which we observed that almost all cases fell in . We selected so the probability of success piracy is less than with in the Chernoff bound.

We conducted three tuning attacks: FT, NP, FP, and the overwriting attack to the proposed watermarking framework.

4.2 Ablation Study

To examine the efficacy of and , we compared the performance of the model under different combinations of two regularizers. We are interested in four metrics: (1) the performance of on , (2) the performance of on after FT, (3) the performance of on after FP, and (4) the decline of the performance of on when NP made ’s accuracy on lower than . The first metric reflects the decline of a model’s performance after being watermarked. The second and the third metrics measure the watermark’s robustness against an adversary’s tuning. The last metric reflects the decrease of the model’s utility when an adversary is determined to erase the watermark using NP. The model for each dataset was trained by minimizing the MTL loss defined by (14), where we adopted FT, NP and FP for tuning and chose the optimal and by grid search. Then we attacked each model by FT with a smaller learning rate, FP [31] and NP. The results are collected in Table 3.

We observe that by using and , it is possible to preserve the watermarked model’s performance on the primary task and that on the watermarking task simultaneously. Therefore we suggest that whenever possible, the two regularizers should be incorporated in training the model.

4.3 Watermark Detection

As an illustration of the security against watermark detection, we illustrated the property inference attack [15]. The distributions of the parameters of a clean model, a model watermarked by our method and one weight-based method [12] for CIFAR-10 are visualized in Fig. 4 and Fig. 5.

In which we adopted . Unlike the weight-based watermarking method analyzed in [15], our method did not result in a significant difference between the distributions of parameters of the two models. Hence an adversary can hardly distinguish a model watermarked by the MTL-based method from a clean one.

| Dataset | Ours, and | Li et al. [27] | Zhu et al. [60] | ||||||

| Primary | FP | NP | Primary | FP | NP | Primary | FP | NP | |

| MNIST | 99.5% | 92.5% | 2.2% | 99.0% | 14.5% | 0.9% | 98.8% | 7.0% | 1.4% |

| Fashion-MNIST | 93.1% | 86.0% | 54.7% | 92.5% | 13.5% | 17.5% | 91.8% | 11.3% | 5.8% |

| CIFAR-10 | 88.8% | 91.5% | 50.3% | 88.5% | 14.5% | 13.6% | 85.0% | 10.0% | 17.1% |

| CIFAR-100 | 63.8% | 97.0% | 29.4% | 63.6% | 1.2% | 5.5% | 65.7% | 0.8% | 0.9% |

| VirusShare | 97.3% | 100% | 9.6% | 95.1% | 8.8% | 1.5% | 96.3% | 9.5% | 1.1% |

4.4 The Overwriting Attack

After adopting both regularizers, we performed overwriting attack to models for all nine tasks, where each model was embedded different keys. In all cases the adversary’s watermark could be successfully embedded into the model, as what we have predicted. The metric is the fluctuation of the watermarking branch on the watermarking task after overwriting, as indicated by (7). We recorded the fluctuation for the accuracy of the watermarking branch with the overwriting epoches. The results are collected in Table 4.

The impact of watermark overwriting is uniformly bounded by 4.5% in our settings. And the accuracy of the watermarking branch remained above the threshold . Combined with Table 3, we conclude that the MTL-based watermarking method is secure against watermark overwriting.

4.5 Comparision and Discussion

We implemented the watermarking methods in [60] and [27], which are both backdoor-based method of level III secure against ownership piracy. We randomly generated 600 trigger samples for [60] and assigned them with proper labels. For [27], we randomly selected Wonder Filter patterns and exerted them onto 600 randomly sampled images.

As a comparison, we list the performance of their watermarked models on the primary task, the verification accuracy of their backdoors after FP, whose damage to backdoors is larger than FT, and the decline of the performance of the watermarked models when NP was adopted to invalid the backdoors (when the accuracy of the backdoor triggers is under 15%) in Table. 5. We used the ResNet-18 DNN for all experiments and conducted experiments for the image classifications, since otherwise the backdoor is undefined.

We observe that for all metrics, our method achieved the optimal performance, this is due to:

-

1.

Extra regularizers are adopted to explicitly meet the security requirements.

-

2.

The MTL-based watermark does not incorporate backdoors into the model, so adversarial modifications such as FP, which are designed to eliminate backdoor, can hardly reduce our watermark.

-

3.

The MTL-based watermark relies on an extra module, , as a verifier. As an adversary cannot tamper with this module, universal tunings as NP have less impact.

Apart from these metrics, our proposal is better than other backdoor-based DNN watermarking methods since:

-

1.

Backdoor-based watermarking methods are not privacy-preserving.

-

2.

So far, backdoor-based watermarking methods can only be applied to image classification DNNs. This fact challenges the generality of backdoor-based watermark.

-

3.

It is hard to design adaptive backdoor against specific screening algorithms. However, the MTL-based watermark can easily adapt to new tuning operators. This can be done by incorporating such tuning operator into .

5 Conclusion

This paper presents a MTL-based DNN watermarking model for ownership verification. We summarize the basic security requirements for DNN watermark formally and raise the privacy concern. Then we propose to embed watermark as an additional task parallel to the primary task. The proposed scheme explicitly meets various security requirements by using corresponding regularizers. Those regularizers and the design of the watermarking task grant the MTL-based DNN watermarking scheme tractable security. With a decentralized consensus protocol, the entire framework is secure against all possible attacks.

We are looking forward to using cryptological protocols such as zero-knowledge proof to improve the ownership verification process so it is possible to use one secret key for multiple notarizations.

Acknowledgments

This work receives support from anonymous reviewers.

Availability

Materials of this paper, including source code and part of the dataset, are available at http://github.com/a_new_account/xxx.

References

- [1] Virusshare dataset. https://virusshare.com/.

- [2] Voc dataset. http://host.robots.ox.ac.uk/pascal/VOC/voc2012/.

- [3] Yossi Adi, Carsten Baum, Moustapha Cisse, Benny Pinkas, and Joseph Keshet. Turning your weakness into a strength: Watermarking deep neural networks by backdooring. In 27th USENIX Security Symposium (USENIX Security 18), pages 1615–1631, 2018.

- [4] M. Arnold, M. Schmucker, and S. Wolthusen. Digital Watermarking and Content Protection: Techniques and Applications. 2003.

- [5] Lejla Batina, Shivam Bhasin, Dirmanto Jap, and Stjepan Picek. CSINN: Reverse engineering of neural network architectures through electromagnetic side channel. In 28th USENIX Security Symposium (USENIX Security 19), pages 515–532, 2019.

- [6] Zhaowei Cai and Nuno Vasconcelos. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 6154–6162, 2018.

- [7] Rich Caruana. Multitask learning. Machine learning, 28(1):41–75, 1997.

- [8] Miguel Castro, Barbara Liskov, et al. Practical byzantine fault tolerance. In OSDI, volume 99, pages 173–186, 1999.

- [9] Xinyun Chen, Wenxiao Wang, Chris Bender, Yiming Ding, Ruoxi Jia, Bo Li, and Dawn Song. Refit: a unified watermark removal framework for deep learning systems with limited data. arXiv preprint arXiv:1911.07205, 2019.

- [10] Jen-Tzung Chien. Deep bayesian natural language processing. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics: Tutorial Abstracts, pages 25–30, 2019.

- [11] Qianfeng Chu, Gongshen Liu, and Xinyu Zhu. Visualization feature and cnn based homology classification of malicious code. Chinese Journal of Electronics, 29(1):154–160, 2020.

- [12] Bita Darvish Rouhani, Huili Chen, and Farinaz Koushanfar. Deepsigns: an end-to-end watermarking framework for ownership protection of deep neural networks. In Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, pages 485–497, 2019.

- [13] Li Deng. The mnist database of handwritten digit images for machine learning research [best of the web]. IEEE Signal Processing Magazine, 29(6):141–142, 2012.

- [14] Mohamed Elhoseny, Mahmoud Mohamed Selim, and K Shankar. Optimal deep learning based convolution neural network for digital forensics face sketch synthesis in internet of things (iot). International Journal of Machine Learning and Cybernetics, pages 1–12, 2020.

- [15] Karan Ganju, Qi Wang, Wei Yang, Carl A Gunter, and Nikita Borisov. Property inference attacks on fully connected neural networks using permutation invariant representations. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, pages 619–633, 2018.

- [16] Xiquan Guan, Huamin Feng, Weiming Zhang, Hang Zhou, Jie Zhang, and Nenghai Yu. Reversible watermarking in deep convolutional neural networks for integrity authentication. In Proceedings of the 28th ACM International Conference on Multimedia, pages 2273–2280, 2020.

- [17] Jian Guo, He He, Tong He, Leonard Lausen, Mu Li, Haibin Lin, Xingjian Shi, Chenguang Wang, Junyuan Xie, Sheng Zha, et al. Gluoncv and gluonnlp: Deep learning in computer vision and natural language processing. Journal of Machine Learning Research, 21(23):1–7, 2020.

- [18] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [19] Tong He, Zhi Zhang, Hang Zhang, Zhongyue Zhang, Junyuan Xie, and Mu Li. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 558–567, 2019.

- [20] Weizhe Hua, Zhiru Zhang, and G Edward Suh. Reverse engineering convolutional neural networks through side-channel information leaks. In 2018 55th ACM/ESDA/IEEE Design Automation Conference (DAC), pages 1–6. IEEE, 2018.

- [21] Zhiheng Huang, Wei Xu, and Kai Yu. Bidirectional lstm-crf models for sequence tagging. arXiv preprint arXiv:1508.01991, 2015.

- [22] Alex Kendall, Yarin Gal, and Roberto Cipolla. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 7482–7491, 2018.

- [23] Manish Kesarwani, Bhaskar Mukhoty, Vijay Arya, and Sameep Mehta. Model extraction warning in mlaas paradigm. In Proceedings of the 34th Annual Computer Security Applications Conference, pages 371–380, 2018.

- [24] Thorsten Knoll. Adapting kerckhoffs’ s principle. Advanced Microkernel Operating Systems, pages 93–97, 2018.

- [25] Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- [26] Erwan Le Merrer, Patrick Perez, and Gilles Trédan. Adversarial frontier stitching for remote neural network watermarking. Neural Computing and Applications, 32(13):9233–9244, 2020.

- [27] Huiying Li, Emily Willson, Haitao Zheng, and Ben Y Zhao. Persistent and unforgeable watermarks for deep neural networks. arXiv preprint arXiv:1910.01226, 2019.

- [28] Yige Li, Nodens Koren, Lingjuan Lyu, Xixiang Lyu, Bo Li, and Xingjun Ma. Neural attention distillation: Erasing backdoor triggers from deep neural networks. arXiv preprint arXiv:2101.05930, 2021.

- [29] Zheng Li, Chengyu Hu, Yang Zhang, and Shanqing Guo. How to prove your model belongs to you: a blind-watermark based framework to protect intellectual property of dnn. In Proceedings of the 35th Annual Computer Security Applications Conference, pages 126–137, 2019.

- [30] Ji Lin, Wei-Ming Chen, Yujun Lin, Chuang Gan, Song Han, et al. Mcunet: Tiny deep learning on iot devices. Advances in Neural Information Processing Systems, 33:1–12, 2020.

- [31] Kang Liu, Brendan Dolan-Gavitt, and Siddharth Garg. Fine-pruning: Defending against backdooring attacks on deep neural networks. In International Symposium on Research in Attacks, Intrusions, and Defenses, pages 273–294. Springer, 2018.

- [32] Xuankai Liu, Fengting Li, Bihan Wen, and Qi Li. Removing backdoor-based watermarks in neural networks with limited data. arXiv preprint arXiv:2008.00407, 2020.

- [33] Yingqi Liu, Wen-Chuan Lee, Guanhong Tao, Shiqing Ma, Yousra Aafer, and Xiangyu Zhang. Abs: Scanning neural networks for back-doors by artificial brain stimulation. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, pages 1265–1282, 2019.

- [34] Andrew Maas, Raymond E Daly, Peter T Pham, Dan Huang, Andrew Y Ng, and Christopher Potts. Learning word vectors for sentiment analysis. In Proceedings of the 49th annual meeting of the association for computational linguistics: Human language technologies, pages 142–150, 2011.

- [35] Ryota Namba and Jun Sakuma. Robust watermarking of neural network with exponential weighting. In Proceedings of the 2019 ACM Asia Conference on Computer and Communications Security, pages 228–240, 2019.

- [36] Niall O’Mahony, Sean Campbell, Anderson Carvalho, Suman Harapanahalli, Gustavo Velasco Hernandez, Lenka Krpalkova, Daniel Riordan, and Joseph Walsh. Deep learning vs. traditional computer vision. In Science and Information Conference, pages 128–144. Springer, 2019.

- [37] Diego Ongaro and John Ousterhout. In search of an understandable consensus algorithm. In 2014 USENIX Annual Technical Conference (USENIXATC 14), pages 305–319, 2014.

- [38] Sinno Jialin Pan and Qiang Yang. A survey on transfer learning. IEEE Transactions on knowledge and data engineering, 22(10):1345–1359, 2009.

- [39] Jeffrey Pennington, Richard Socher, and Christopher D Manning. Glove: Global vectors for word representation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), pages 1532–1543, 2014.

- [40] Antonio Polino, Razvan Pascanu, and Dan Alistarh. Model compression via distillation and quantization. arXiv preprint arXiv:1802.05668, 2018.

- [41] Han Qiu, Qinkai Zheng, Tianwei Zhang, Meikang Qiu, Gerard Memmi, and Jialiang Lu. Towards secure and efficient deep learning inference in dependable iot systems. IEEE Internet of Things Journal, page preprint, 2020.

- [42] Vladimir Rudskoy. On zero practical significance of" key recovery attack on full gost block cipher with zero time and memory". IACR Cryptol. ePrint Arch., 111:1–24, 2010.

- [43] Ozan Sener and Vladlen Koltun. Multi-task learning as multi-objective optimization. In Advances in Neural Information Processing Systems, pages 527–538, 2018.

- [44] Masoumeh Shafieinejad, Jiaqi Wang, Nils Lukas, Xinda Li, and Florian Kerschbaum. On the robustness of the backdoor-based watermarking in deep neural networks. arXiv preprint arXiv:1906.07745, 2019.

- [45] Connor Shorten and Taghi M Khoshgoftaar. A survey on image data augmentation for deep learning. Journal of Big Data, 6(1):60–107, 2019.

- [46] Richard Socher, Alex Perelygin, Jean Wu, Jason Chuang, Christopher D Manning, Andrew Y Ng, and Christopher Potts. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the 2013 conference on empirical methods in natural language processing, pages 1631–1642, 2013.

- [47] Frederick Tung, Srikanth Muralidharan, and Greg Mori. Fine-pruning: Joint fine-tuning and compression of a convolutional network with bayesian optimization. arXiv preprint arXiv:1707.09102, 2017.

- [48] Yusuke Uchida, Yuki Nagai, Shigeyuki Sakazawa, and Shin’ichi Satoh. Embedding watermarks into deep neural networks. In Proceedings of the 2017 ACM on International Conference on Multimedia Retrieval, pages 269–277, 2017.

- [49] B. Wang, Y. Yao, S. Shan, H. Li, B. Viswanath, H. Zheng, and B. Y. Zhao. Neural cleanse: Identifying and mitigating backdoor attacks in neural networks. In 2019 IEEE Symposium on Security and Privacy (SP), pages 707–723, 2019.

- [50] Bolun Wang, Yuanshun Yao, Shawn Shan, Huiying Li, Bimal Viswanath, Haitao Zheng, and Ben Y Zhao. Neural cleanse: Identifying and mitigating backdoor attacks in neural networks. In 2019 IEEE Symposium on Security and Privacy (SP), pages 707–723. IEEE, 2019.

- [51] Liming Wang, Jianbo Shi, Gang Song, and I-fan Shen. Object detection combining recognition and segmentation. In Asian conference on computer vision, pages 189–199. Springer, 2007.

- [52] Tianhao Wang and Florian Kerschbaum. Robust and undetectable white-box watermarks for deep neural networks. arXiv preprint arXiv:1910.14268, 2019.

- [53] Thomas Wolf, Julien Chaumond, Lysandre Debut, Victor Sanh, Clement Delangue, Anthony Moi, Pierric Cistac, Morgan Funtowicz, Joe Davison, Sam Shleifer, et al. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pages 38–45, 2020.

- [54] Han Xiao, Kashif Rasul, and Roland Vollgraf. Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms, 2017.

- [55] G. Xu, H. Li, Y. Zhang, X. Lin, R. H. Deng, and X. Shen. A deep learning framework supporting model ownership protection and traitor tracing. In 2020 IEEE 26th International Conference on Parallel and Distributed Systems (ICPADS), pages 438–446, 2020.

- [56] Ziqi Yang, Jiyi Zhang, Ee-Chien Chang, and Zhenkai Liang. Neural network inversion in adversarial setting via background knowledge alignment. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, pages 225–240, 2019.

- [57] Yuanshun Yao, Huiying Li, Haitao Zheng, and Ben Y Zhao. Latent backdoor attacks on deep neural networks. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, pages 2041–2055, 2019.

- [58] Dong Yin, Raphael Gontijo Lopes, Jon Shlens, Ekin Dogus Cubuk, and Justin Gilmer. A fourier perspective on model robustness in computer vision. Advances in Neural Information Processing Systems, 32:13276–13286, 2019.

- [59] Jialong Zhang, Zhongshu Gu, Jiyong Jang, Hui Wu, Marc Ph Stoecklin, Heqing Huang, and Ian Molloy. Protecting intellectual property of deep neural networks with watermarking. In Proceedings of the 2018 on Asia Conference on Computer and Communications Security, pages 159–172, 2018.

- [60] Renjie Zhu, Xinpeng Zhang, Mengte Shi, and Zhenjun Tang. Secure neural network watermarking protocol against forging attack. EURASIP Journal on Image and Video Processing, 2020(1):1–12, 2020.