Second-Order Convergent Collision-Constrained Optimization-Based Planner

Abstract

Finding robot poses and trajectories represents a foundational aspect of robot motion planning. Despite decades of research, efficiently and robustly addressing these challenges is still difficult. Existing approaches are often plagued by various limitations, such as intricate geometric approximations, violations of collision constraints, or slow first-order convergence. In this paper, we introduce two novel optimization formulations that offer provable robustness, achieving second-order convergence while requiring only a convex approximation of the robot’s links and obstacles. Our first method, known as the Explicit Collision Barrier (ECB) method, employs a barrier function to guarantee separation between convex objects. ECB uses an efficient matrix factorization technique, enabling a second-order Newton’s method with an iterative complexity linear in the number of separating planes. Our second method, referred to as the Implicit Collision Barrier (ICB) method, further transforms the separating planes into implicit functions of robot poses. We show such an implicit objective function is twice-differentiable, with derivatives evaluated at a linear complexity. To assess the effectiveness of our approaches, we conduct a comparative study with a first-order baseline algorithm across six testing scenarios. Our results unequivocally justify that our method exhibits significantly faster convergence rates compared to the baseline algorithm.

Index Terms:

Trajectory and Pose Optimization, Collision Constraint, Convex AnalysisI Introduction

The issue of robot safety stands as a paramount concern, imposing stringent constraints on motion planning algorithms. Among these constraints, collision avoidance requirements demand that the robot maintains a minimum distance from static or moving obstacles at all times. This paper addresses the challenge of generating optimal robot poses and trajectories while adhering to collision constraints and optimizing a user-defined cost function. Such a problem finds practical applications in (multi-)UAV trajectory planning [1], inverse kinematics [2], object settling [3], and dynamic simulations [4]. Ideally, an effective planning algorithm should possess several key attributes to cater to the diverse needs of these applications: (Scalability) handling a significant number of robot links and obstacles; (Efficacy) rapid convergence and low iterative and overall computational costs; (Robustness) guarantee to satisfy all collision constraints; (Generality) applying to arbitrary articulated robots with complex geometries. However, despite decades of dedicated research in pursuit of such algorithms, it remains a challenge to find a single solution that fully satisfies all these diverse requirements.

Existing trajectory generation algorithms are engineered to suit a set of specific applications. Take, for instance, (online) UAV trajectory generation, where computational efficiency is paramount. Existing algorithm [5] employs penalty methods to handle constraints, which can compromise robustness. In contrast, other algorithms such as [1, 6] offer guaranteed robustness and global optimality. Unfortunately, their applicability is limited to point robots and convex-decomposable freespace. When dealing with general articulated robots, the formulation of collision constraints becomes notably challenging. Many optimization-based algorithms, as seen in the works [7, 8], once again resort to less robust penalty-based techniques. Furthermore, these algorithms often exhibit limited efficacy, having only first-order convergence. Recent advancements [9, 10] have introduced interior point methods to address collision constraints with guaranteed robustness. However, [9] [9] necessitates the decomposition of robot geometry into a gazillion of triangles, which scales poorly with the complexity of the robot geometry. On the other hand, [10] [10] relies on the strict convexity of robot links, demanding intricate geometric modifications and suffering from first-order convergence limitations.

| Method | Scalability | Efficacy | Robustness | Generality |

|---|---|---|---|---|

| [5] | point-penalty | second-order | no | point |

| [1, 6] | convex | mixed-integer | yes | point |

| [9] | triangle | second-order | yes | linear motion |

| [10] | convex | first-order | yes | articulated |

| [3] | point | second-order | no | articulated |

| [7, 8] | point-penalty | first-order | no | articulated |

| [11] | convex | first-order | yes | articulated |

| Ours | convex | second-order | yes | articulated |

Main Results: We present two innovative optimization solvers designed for collision-constrained robot pose and trajectory planning. Our approach highlights a structural analysis of the barrier function governing collision constraints between pairs of convex hulls. We demonstrate that the Hessian matrix of this barrier function possesses a unique structure that enables efficient factorization using a Schur-complement solver, leading to our ECB method. Furthermore, by employing a generalized barrier function, we eliminate the need for a separating plane between convex hull pairs by representing it as an implicit function of robot poses, leading to our ICB method. Both of these advancements empower us to apply second-order convergent Newton’s methods with an iterative cost linear in the number of convex hull pairs.

Our method exhibits several advantageous properties that have not been simultaneously achieved by prior algorithms. First, we decompose the robot’s geometry into convex hulls rather than points [3] or triangles [9]. As a result, our method can scale to large, complex robot and environmental geometries using a moderate number of geometric primitives, leading to enhanced scalability. Second, in contrast to previous first-order convergent methods [12, 10], our approach enjoys faster second-order convergence. Finally, our method adopts the interior point algorithm that inherits the robustness established by [9, 10], i.e., our method ensures the collision-free properties of the optimize robot poses/trajectories. We have conducted evaluations of our method across six challenging robot planning scenarios. Our results unequivocally demonstrate that our approach achieves significantly faster convergence compared to the first-order baseline method.

II Related Work

We review related work on collision-constrained planning, collision constraint formulations, numerical optimization techniques, and semi-infinite collision constraints.

Collision-Constrained Planning: The algorithms summarized in Table I predominantly fall into the category of local optimization methods. These approaches leverage (high-order) derivatives of the objective/constraint functions to efficiently identify locally optimal plans. In contrast, optimal sampling-based methods [14, 15] pursue globally optimal plans by exploring the entire configuration space. However, a notable drawback of these methods is their limited scalability concerning the dimensionality of the configuration space [16]. An additional category of techniques includes the control barrier function [17], designed for dynamic systems to adhere to specific constraints. However, these algorithms primarily focus on constraint satisfaction and do not directly address the planning of robot motions that fulfill users’ requirements. We also acknowledge recent advancements [18, 19] that involve precomputing feasible sub-domains within the configuration space. Subsequently, mixed-integer second-order numerical solvers are employed to derive globally optimal motion plans restricted to these sub-domains. This approach represents a promising alternative to our method, which obviates the need for sub-domain precomputation or restriction.

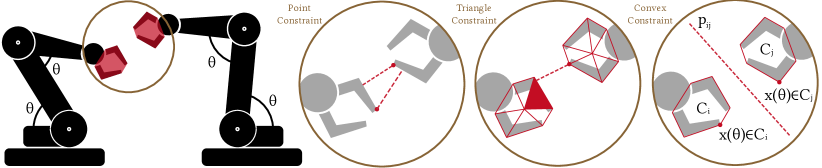

Collision-Constraint Formulations: The local optimization techniques outlined in Table I can be distinguished by the granularity at which they formulate collision constraints. Several methods [5, 3] opt to replace collision constraints with point constraints, due to their neat closed-form derivatives. However, this approach necessitates a considerable number of point constraints to ensure the safety of a robot with complex geometries. Recent advancements, as exemplified in [9], extend point constraints to collision constraints between pairs of triangles, benefiting from closed-form second-order derivatives. Nevertheless, even this approach requires a substantial number of triangles to represent intricate robot geometries, resulting in a high volume of collision constraints. As proposed in [13, 11], the number of geometric primitives can be significantly reduced if we can formulate collision constraints between general convex hulls. While [12] [12] demonstrated that the distance function between convex hulls possesses first-order derivatives, a comprehensive exploration of second-order differentiability and the efficient evaluation of convex constraints remains an area that requires further investigation.

Numerical Optimization Techniques: Methods from Table I harness the power of numerical optimization solvers to search for optimal plans. The majority of existing approaches [5, 1, 6] cast the problem in the form of a conventional (mixed-integer) Nonlinear Program (NLP) and subsequently employ off-the-shelf optimization software packages [20, 21]. These algorithms typically exhibit second-order convergence when an accurate Hessian matrix can be evaluated. However, they regress to first-order convergence when solely gradient information is accessible, as in [12]. Furthermore, customized optimization solvers have been devised to enhance robustness or efficiency. For instance, [9] [9] seamlessly integrated continuous collision checks into the line-search procedure to circumvent the challenge of tunneling. Meanwhile, methods like [3, 10] strategically sample collision constraints along the trajectory to approximate the solution of a Semi-Infinite Program (SIP). [11] [11] introduced a first-order ADMM approach that interleaves the optimization of robot trajectories and the separating planes between convex hulls to facilitate parallel computation.

Semi-Infinite Collision Constraints: As clarified in Section I, the necessity to satisfy collision constraints at infinitely many time instances inherently gives rise to SIP problem instances, a concept recently articulated by [3, 10]. While this paper primarily addresses a finite set of collision constraints, we emphasize that our methodology can be adapted to tackle SIP problems. Notably, existing SIP solvers propose to transform SIP into a standard NLP by either sampling [3] or discretizing [10] the constraints. In this context, we can readily substitute the underlying solver in [3, 10] with our approach to achieve second-order convergence when dealing with convex constraints. However, it is noteworthy that, while this enables second-order convergence in the NLP subproblem, the convergence speed of the original SIP problem remains an open question and warrants further exploration, which we leave as a subject for future work.

III Problem Statement

We address the challenge of collision-constrained optimization, focusing on the task of optimizing the pose/trajectory of a robot within the context of a twice-differentiable objective function , where represents the robot’s configuration parameter. The robot is subject to a set of collision constraints taking the following general form:

Here, and represent pairs of potentially contacting objects drawn from the set of contact pairs denoted as . can correspond to a link within an articulated robot or a static obstacle. For the sake of generality, we assume that is a function of the robot’s configuration. Additionally, we presume that possesses a (not necessarily strictly) convex shape, which is a widely adopted geometric representation for robots [12, 22, 18]. Using convex shapes can effectively reduce the number of constraints as compared with point-pair or triangle-pair constraints. Non-convex shapes can be further decomposed into convex primitives. Consequently, the primary optimization model addressed in this paper can be summarized as:

| (1) |

Equation 1 embraces a generalized formulation that encompasses both the optimization of a static robot pose and a dynamic robot trajectory. In the case of optimizing a static robot pose, corresponds to a single configuration, and is a finite set that enumerates all potential convex object pairs susceptible to collision. In contrast, when optimizing a robot trajectory, represents the parameterization of the robot’s trajectory, such as the control points of a spline curve [1, 23]. In this scenario, expands to an infinite set that encompasses all possible convex object pairs at infinitely many time instances across the trajectory. This extension leads to the formulation of SIP problem instances. In the latter case, can be further approached by sampling at finite time instances [3] or discretizing [10], thus unified with the former case.

IV Method

The effective resolution of Equation 1 poses practical challenges, particularly when dealing with convex collision constraints known for their non-differentiability [12, 3]. As illustrated in Figure 1, when complex environmental geometries are considered, the number of collision pairs can rapidly escalate, leading to sizable problem dimensions. In this study, we introduce two efficient optimization techniques, both featuring local second-order convergence and an iterative cost linear in the number of collision pairs. Our approach draws inspiration from recent research [13, 11], which introduced separating planes between pairs of convex objects, represented by the normal direction and offset . However, prior works [13, 11] employed an Alternating Optimization (AO) approach that interleaved the updates of and . Unfortunately, this approach is limited to at most first-order convergence speed [24]. Our primary contribution is the development of two quasi-Newton algorithms that jointly optimize , and without significantly increasing the computational overhead. To achieve this, we augment our objective function with a barrier energy designed to prevent the intersection of and . In our ECB method, we derive the Hessian matrix of the augmented objective function concerning both , and . Subsequently, we efficiently invert this large Hessian matrix using the Schur-complement lemma [25]. In our ICB method, we eliminate and by expressing them as implicit functions of and derive its Hessian using the implicit function theorem [26].

IV-A ECB Method

A convex object can be represented in two ways: the V-representation characterizes using a set of vertices, while the H-representation characterizes it using a set of separating planes. In this work, we consistently utilize the V-representation. Without any ambiguity, we denote as the set of vertices. We assume each vertex is a twice-differentiable function of , which holds generally for articulated robot with being the forward-kinematic function. To define a valid separating plane , it should ensure that the vertices of and are on opposite sides of it, i.e.:

We enforce each of these linear constraints by introducing a barrier energy function and augmenting the objective function as follows:

where we assume the barrier function is strictly convex, defined in the interval , and satisfies the condition . Consequently, our primary optimization problem Equation 1 is transformed into the following barrier-augmented optimization:

Note that we still need an additional constraint to ensure unit normal vectors for the separating planes. This method was previously proposed in [11]. However, they optimized and in separate sub-problems. In contrast, we introduce a joint optimization formulation for both and , achieving faster convergence. We refer to this method as the ECB method since all variables are explicitly treated as independent decision variables.

Our approach to optimizing the explicit formulation employs a standard Newton’s method. We calculate the joint Hessian matrix and then determine the joint update direction by solving the following KKT system:

| (8) |

where represents the Jacobian of the unit normal constraint , with each column of corresponding to the normal vector of a separating plane. However, this straightforward approach can encounter two main issues. Firstly, when dealing with complex environmental geometries, the number of separating planes can reach tens of thousands [10], resulting in large Hessian matrices. If we use standard direct factorization methods to invert such matrices, the computational overhead can compromise the advantages of second-order convergence. Additionally, the Hessian matrix can exhibit exceedingly large condition numbers, leading to numerical instability. Fortunately, we can leverage the unique structure of the Hessian matrix to develop an efficient and stable matrix factorization scheme with linear complexity in the number of separating planes.

We first notice that a separating plane only appears in a single term . Therefore, the Hessian matrix takes the following form:

We propose a Gauss elimination approach to solve the KKT system in Equation 8 and show that this procedure is well-defined. We first notice that can also be decomposed into , each related to one separating plane. The joint linear sub-system of takes the following form:

where we abuse notation and add a pending zero to when necessary. Suppose the coefficient matrix is invertible, we can solve for as:

| (9) | ||||

where we have used Gauss elimination to factor out assuming is invertible. Next, we plug all the into the first equation to yield the following Schur-complement system of alone:

| (10) | ||||

which is then solved for . Notably, the above procedure has a complexity linear in the number of hyper planes, which is much faster than using an off-the-shelf solver to factorize the large KKT matrix in Equation 8. However, the well-definedness of the above system relies on the inversion of the matrix and . Using the Schur-complement lemma [25], we have the following corollary (proved in appendix):

Corollary IV.1.

If , then the Schur-complementary solver is well-defined and .

In practice, however, the strict requirement of may not be met, and we introduce a diagonal perturbation to ensure well-definedness. Specifically, we employ an adjustment function that ensures all eigenvalues of to be greater than a specified . This adjustment is applied to both and . The primary challenge in this adjustment process is the eigen decomposition. Thankfully, this operation is computationally efficient due to the relatively small matrix sizes involved. Following the computation of , we utilize a line-search strategy to find a step size that strictly decreases the objective function. Finally, we re-normalize after the update. The algorithm pipeline for the ECB method is outlined in Algorithm 1.

IV-B ICB Method

The primary computational challenge encountered in the ECB method is the inversion of the large Hessian matrix. However, many variables correspond to separating planes, which are intermediary variables unrelated to the robot’s motion itself. Hence, it is advantageous to eliminate these variables, leaving only as the independent variable. In this section, we demonstrate that it is indeed possible to express as a well-defined, sufficiently smooth function of . For this reason, we refer to this method as the ICB method. To initiate this discussion, we observe that each plane only appears in the collision penalty terms. Therefore, the optimal should satisfy the following optimization problem:

However, the function defined above does not guarantee a well-defined because the solution may not be unique due to the additional non-convex unit-norm constraint. To obtain a well-defined function , we turn to an equivalent formulation based on the Support Vector Machine (SVM) [27]. This formulation replaces the non-convex constraint with a relaxed convex constraint , giving the following alternative definition:

It is evident that the above optimization is convex. However, convexity alone is insufficient to express as a function of because there may not be a unique minimizer. Even if a unique solution exists, the resulting implicit function may not be differentiable. As pointed out in [26], general convex optimization problems only have sub-gradients. Therefore, we introduce the following smoothed optimization amenable to differentiability, employing our log-barrier function to handle the additional convex constraint:

| (11) |

We have the several essential properties for Equation 11 as summarized below (proved in appendix):

Corollary IV.2.

Assuming and are closed set, or has non-zero volume, i.e. or , and is third-order differentiable, we have the following properties: 1) Equation 11 has a solution iff ; 2) is a well-defined function of ; 3) is twice-differentiable; 4) , with being the distance between convex hulls.

Corollary IV.2 ensures the differentiable structure of as long as one of the convex sets is non-degenerate. This limitation is not significant for robotic applications because robot links practically always have non-zero volume. With this structure, we can define an implicit optimization approach and solve the following optimization problem:

| (12) |

replacing the explicit variable with the implicit function . Corollary IV.2 3) ensures that Equation 12 has the twice-differentiable objective function and Corollary IV.2 4) ensures that the feasibility of Equation 12 implies the collision-free property. To evaluate such objective function at given , we need to solve Equation 11 for every pair of convex sets in close proximity. After the solution, we can evaluate the first- and second-order derivative of and (appendix). The computational complexity of these equations is linear with respect to the number of separating planes, similar to our explicit algorithm. However, solving Equation 11 for each pair of convex sets may initially appear computationally burdensome. Fortunately, we can expedite the process by employing a warm-start strategy, where we retain the solution from the previous iteration. If this warm-start approach fails (for instance, if the solution from the last iteration is infeasible), we can establish a feasible initial estimate by computing the closest pair of points on and . We then use this information to determine the initial separating plane, setting it as the middle sectioning plane. In practice, we can solve the subproblems in Equation 11 with just a few Newton iterations, and all of these subproblems can be solved in parallel. The algorithmic workflow of the ICB method is summarized in Algorithm 2. Since the Hessian matrix of remains relatively small, we can calculate its dense eigen-decomposition, which is essential for matrix inversion and performing the positive-definite adjustment. This adjustment ensures that the minimal eigenvalue of the Hessian matrix is at least .

V Evaluation

We conducted our experiments using C++ on a single desktop machine equipped with an 8-core AMD EPYC 7K62 CPU. All available cores were utilized to facilitate parallel computations related to separating planes, such as the computation of per-plane Schur-complements in the ECB method and the evaluation of implicit functions and their derivatives in the ICB method. We also incorporated the widely used acceleration technique of broadphase collision checks, similar to the one employed in prior works [9, 11]. This technique excludes distant pairs of convex hulls from consideration, introducing barrier terms only when the convex hulls are in close proximity. It is important to note that once inserted, these barrier terms are not removed, even if the pair of convex hulls later move apart. Throughout our analysis, we require our penalty function to be positive and strictly convex. One function satisfying our needs is the layered penalty function [28], which is used in our implementation. Finally, we use hyper-parameters and throughout our experiements.

Additionally, we integrated our solver with an implementation of the SIP solver from [10]. This allows us to solve SIP problem instances, ensuring collision-free trajectories at every continuous time interval, by leveraging their constraint discretization method. For comparison, both the ECB and ICB methods are compared with the baseline Alternating Optimization (AO) technique [11], which optimizes and in separate subproblems, where we also optimize subproblems in parallel. This is state-of-the-art optimization technique, allowing the optimization of arbitrary articulated robot poses under convex constraints, with the only difference being the convergence speed.

|

|

|

|

|

|

| ECB | ICB | AO | ||

|---|---|---|---|---|

| (a) | 54 | 50 | 49 | 50 |

| (b) | 108 | 716 | 570 | 714 |

| (c) | 24 | 1757 | 1024 | 1266 |

| (d) | 36 | 3099 | 3585 | 2444 |

| (e) | 54 | 6424 | 11122 | 6892 |

| (f) | 33 | 973 | 837 | 899 |

| ECB | ICB | AO | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Scenario | ||||||||||||

| (a) | 697 | 703 | 5816 | 26906 | 337 | 337 | 1823 | 21610 | 24182 | 48386 | N.A. | N.A. |

| (b) | 6898 | 9814 | 9906 | 10329 | 1470 | 2063 | 4946 | 13245 | 42000 | 71561 | 77179 | 79300 |

| (c) | 424 | 1128 | 1474 | 2356 | 132 | 305 | 368 | 525 | 371 | 935 | 2046 | N.A. |

| (d) | 1068 | 1479 | 1928 | 1987 | 406 | 528 | 576 | 623 | 1983 | 2941 | 4350 | 5010 |

| (e) | 1802 | 2383 | 2721 | 2936 | 1115 | 1277 | 1315 | 1396 | 2305 | 4808 | 5813 | 6773 |

| (f) | 219 | 435 | 604 | 1066 | 159 | 206 | 266 | 400 | 277 | 1896 | N.A. | N.A. |

| ECB | ICB | AO | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Scenario | ||||||||||||

| (a) | 0.04 | 0.04 | 0.36 | 1.70 | 0.02 | 0.02 | 0.10 | 1.44 | 1.14 | 2.32 | N.A. | N.A. |

| (b) | 3.58 | 5.15 | 5.20 | 5.40 | 0.74 | 1.07 | 2.73 | 7.54 | 20.63 | 34.88 | 37.59 | 38.56 |

| (c) | 2.58 | 11.20 | 17.29 | 38.05 | 0.76 | 2.37 | 3.17 | 5.51 | 1.78 | 6.19 | 23.09 | N.A. |

| (d) | 13.44 | 20.75 | 29.61 | 30.80 | 4.99 | 7.47 | 8.52 | 9.56 | 27.28 | 43.90 | 68.96 | 81.06 |

| (e) | 107.88 | 164.47 | 200.00 | 222.79 | 76.74 | 95.76 | 100.23 | 109.99 | 161.08 | 446.63 | 565.25 | 679.28 |

| (f) | 2.57 | 6.56 | 10.96 | 26.73 | 1.72 | 2.58 | 3.96 | 7.71 | 4.47 | 63.96 | N.A. | N.A. |

We assess the performance of our method using a series of six challenging benchmarks, as outlined in Figure 2. The first two benchmarks involve object settling problems, with bulky objects (a) and thin objects (b) dropped into a box. These problems require us to compute the static equilibrium poses of the objects. Since we optimize a single pose, these scenarios only involve a finite set of collision constraints. The remaining four scenarios focus on trajectory optimization problems, where we solve SIP problem instances by integrating both our methods and AO [11] into the SIP solver framework [10]. In Figure 2 (c), four robot arms reach various end-effector positions on a table, while avoiding obstacles. In Figure 2 (d), we optimize the trajectories of six UAVs with a giraffe-shaped obstacle in the middle. Figure 2 (e) extends this challenge with longer trajectories for the nine UAVs and multiple obstacles along their paths. Finally, Figure 2 (f) presents three fetch robots reaching 3 positions in a basket. In these scenarios, the trajectories for each robot’s configuration space are parameterized using high-order spline curves, with the number of degrees of freedom and separating planes summarized in Table II, which shows that our method can scale to complex high-DOF robots and environmental setups. We measure the number of iterations and computational costs required to reach different gradient norm values, and the results are summarized in Table III and Table IV, respectively.

Our results clearly demonstrate the superior convergence speed of our method compared to AO, especially when aiming for highly optimal motion plans with a small threshold value (). The key factor behind this superior performance is the significantly lower number of iterations required by our method, while maintaining a comparable per-iteration cost. Furthermore, it is worth noting that ICB exhibits faster convergence compared to ECB, despite both methods being locally second-order. This can be attributed to ICB’s approach of computing the optimal separating planes during each iteration. Although this leads to a slightly higher iterative cost, the overall reduction in the number of iterations more than compensates for this additional computational overhead. In summary, our method consistently achieves a remarkable reduction in computational time, with a speedup ranging from to for pose optimization (Figure 2a-b) and to for trajectory optimization (Figure 2c-f).

VI Conclusion & Limitations

We present innovative second-order optimization-based planners for both robot poses and trajectories, building upon the convex constraint formulation introduced by [13, 11]. A key distinguishing feature of our approach is the efficient application of Newton’s method to simultaneously optimize the separating planes and robot poses. Our work has paved the way for several promising avenues in future research. Although both of our methods outperform the AO baseline, they each come with their own advantages and disadvantages. On the one hand, ICB is more efficient than ECB, requiring even fewer iterations to converge. However, it demands a more complex implementation with an intricate inner loop of optimization and a careful treatment of second-order derivatives. The reliable evaluation of these derivatives under finite-precision floating-point formats requires further investigation. Further, due to the inherent complexity of the underlying problem, our method may still be time-consuming. \AtNextBibliography

References

- [1] Robin Deits and Russ Tedrake “Efficient mixed-integer planning for UAVs in cluttered environments” In 2015 IEEE international conference on robotics and automation (ICRA), 2015, pp. 42–49 IEEE

- [2] Yuanwei Chua, Keng Peng Tee and Rui Yan “Robust Optimal Inverse Kinematics with Self-Collision Avoidance for a Humanoid Robot” In 2013 IEEE RO-MAN, 2013, pp. 496–502 DOI: 10.1109/ROMAN.2013.6628553

- [3] Kris Hauser “Semi-infinite programming for trajectory optimization with non-convex obstacles” In The International Journal of Robotics Research 40.10-11 SAGE Publications Sage UK: London, England, 2021, pp. 1106–1122

- [4] Charles Khazoom, Daniel Gonzalez-Diaz, Yanran Ding and Sangbae Kim “Humanoid self-collision avoidance using whole-body control with control barrier functions” In 2022 IEEE-RAS 21st International Conference on Humanoid Robots (Humanoids), 2022, pp. 558–565 IEEE

- [5] Fei Gao, Yi Lin and Shaojie Shen “Gradient-based online safe trajectory generation for quadrotor flight in complex environments” In 2017 IEEE/RSJ international conference on intelligent robots and systems (IROS), 2017, pp. 3681–3688 IEEE

- [6] Sikang Liu et al. “Planning dynamically feasible trajectories for quadrotors using safe flight corridors in 3-d complex environments” In IEEE Robotics and Automation Letters 2.3 IEEE, 2017, pp. 1688–1695

- [7] Mrinal Kalakrishnan et al. “STOMP: Stochastic trajectory optimization for motion planning” In 2011 IEEE international conference on robotics and automation, 2011, pp. 4569–4574 IEEE

- [8] Matt Zucker et al. “Chomp: Covariant hamiltonian optimization for motion planning” In The International journal of robotics research 32.9-10 SAGE Publications Sage UK: London, England, 2013, pp. 1164–1193

- [9] Minchen Li et al. “Incremental potential contact: intersection-and inversion-free, large-deformation dynamics.” In ACM Trans. Graph. 39.4, 2020, pp. 49

- [10] Duo Zhang, Xifeng Gao, Kui Wu and Zherong Pan “Provably Robust Semi-Infinite Program Under Collision Constraints via Subdivision” In arXiv preprint arXiv:2302.01135, 2023

- [11] Ruiqi Ni, Zherong Pan and Xifeng Gao “Robust multi-robot trajectory optimization using alternating direction method of multiplier” In IEEE Robotics and Automation Letters 7.3 IEEE, 2022, pp. 5950–5957

- [12] Adrien Escande, Sylvain Miossec, Mehdi Benallegue and Abderrahmane Kheddar “A strictly convex hull for computing proximity distances with continuous gradients” In IEEE Transactions on Robotics 30.3 IEEE, 2014, pp. 666–678

- [13] Wolfgang Hönig et al. “Trajectory planning for quadrotor swarms” In IEEE Transactions on Robotics 34.4 IEEE, 2018, pp. 856–869

- [14] Sertac Karaman and Emilio Frazzoli “Sampling-based algorithms for optimal motion planning” In The international journal of robotics research 30.7 Sage Publications Sage UK: London, England, 2011, pp. 846–894

- [15] Lucas Janson, Edward Schmerling, Ashley Clark and Marco Pavone “Fast marching tree: A fast marching sampling-based method for optimal motion planning in many dimensions” In The International journal of robotics research 34.7 SAGE Publications Sage UK: London, England, 2015, pp. 883–921

- [16] Lucas Janson, Brian Ichter and Marco Pavone “Deterministic sampling-based motion planning: Optimality, complexity, and performance” In The International Journal of Robotics Research 37.1 SAGE Publications Sage UK: London, England, 2018, pp. 46–61

- [17] Aaron D Ames et al. “Control barrier functions: Theory and applications” In 2019 18th European control conference (ECC), 2019, pp. 3420–3431 IEEE

- [18] Alexandre Amice et al. “Finding and optimizing certified, collision-free regions in configuration space for robot manipulators” In International Workshop on the Algorithmic Foundations of Robotics, 2022, pp. 328–348 Springer

- [19] Tobia Marcucci, Mark Petersen, David Wrangel and Russ Tedrake “Motion planning around obstacles with convex optimization” In arXiv preprint arXiv:2205.04422, 2022

- [20] Philip E Gill, Walter Murray and Michael A Saunders “SNOPT: An SQP algorithm for large-scale constrained optimization” In SIAM review 47.1 SIAM, 2005, pp. 99–131

- [21] Lorenz T Biegler and Victor M Zavala “Large-scale nonlinear programming using IPOPT: An integrating framework for enterprise-wide dynamic optimization” In Computers & Chemical Engineering 33.3 Elsevier, 2009, pp. 575–582

- [22] Robin Deits and Russ Tedrake “Computing large convex regions of obstacle-free space through semidefinite programming” In Algorithmic Foundations of Robotics XI: Selected Contributions of the Eleventh International Workshop on the Algorithmic Foundations of Robotics, 2015, pp. 109–124 Springer

- [23] Boyu Zhou et al. “Robust and efficient quadrotor trajectory generation for fast autonomous flight” In IEEE Robotics and Automation Letters 4.4 IEEE, 2019, pp. 3529–3536

- [24] Yangyang Xu and Wotao Yin “A globally convergent algorithm for nonconvex optimization based on block coordinate update” In Journal of Scientific Computing 72.2 Springer, 2017, pp. 700–734

- [25] Stephen P Boyd and Lieven Vandenberghe “Convex optimization” Cambridge university press, 2004

- [26] Akshay Agrawal et al. “Differentiable convex optimization layers” In Advances in neural information processing systems 32, 2019

- [27] Pai-Hsuen Chen, Chih-Jen Lin and Bernhard Schölkopf “A tutorial on -support vector machines” In Applied Stochastic Models in Business and Industry 21.2 Wiley Online Library, 2005, pp. 111–136

- [28] David Harmon et al. “Asynchronous contact mechanics” In ACM SIGGRAPH 2009 papers, 2009, pp. 1–12

- [29] David Carlson “What are Schur complements, anyway?” In Linear Algebra and its Applications 74, 1986, pp. 257–275 DOI: https://doi.org/10.1016/0024-3795(86)90127-8

Appendix: Additional Proofs

Proof of Corollary IV.1.

is positive definite and so is all its principle submatrices. As a result, is invertible and so is well-defined. We further rewrite:

from which we immediately have . By Guttman rank additivity [29], we have the following matrix is positive definite:

Combined with the fact that , we have: . Note the above argument only requires . If we further have: , then we can apply the above argument to the perturbed matrix:

which immediately yields: . ∎

Proof of Corollary IV.2.

1) If Equation 11 is feasible, then because otherwise we have . But this implies and which is impossible. Therefore, we can verify that is a plane separating and . Conversely, if , then , i.e., we have a separating plane such that for all and for all . We can then choose sufficiently close to such that: for and for . And we can immediately verify that is a feasible solution of Equation 11.

2) This property essentially requires the uniqueness of minimizer. To establish uniqueness, we can write the Hessian of the objective function of Equation 11 as:

We show that must be a matrix of full-rank. Note that and due to the strong convexity of . Next, note that is a convex function because it is a composite of a convex function and a non-decreasing function [25]. Further, we have the top-left entry of Hessian taking the following form:

This implies the Hessian of is a positive semi-definite, non-zero matrix, so it has the following diagonalization:

where and is a orthogonal matrix. Putting all these facts together, we have:

Without a loss of generality, we can assume and . Then spans and hence the following vectors span :

Put together, we have spans and . Due to the arbitarity of , we have Equation 11 is a strictly convex optimization and is a unique minimizer.

3) Due to the strict convexity, is equivalently defined as the solution of:

Since has full-rank, we can then invoke the high-order implicit function theorem to see that is a twice-differentiable function of if the barrier is third-order differentiable.

4) Denote , then for every separating plane with , we have for some and for some because otherwise . Now the solution to Equation 11 can be denoted as a generalized separating plane with , so we have for some and for some . As a result we have: . ∎

Appendix: Derivatives

We use Einstein’s notation with scripts :

| (13) | ||||