Search-Based Path Planning among Movable Obstacles

Abstract

This paper investigates Path planning Among Movable Obstacles (PAMO), which seeks a minimum cost collision-free path among static obstacles from start to goal while allowing the robot to push away movable obstacles (i.e., objects) along its path when needed. To develop planners that are complete and optimal for PAMO, the planner has to search a giant state space involving both the location of the robot as well as the locations of the objects, which grows exponentially with respect to the number of objects. The main idea in this paper is that, only a small fraction of this giant state space needs to be explored during planning as guided by a heuristic, and most of the objects far away from the robot are intact, which thus leads to runtime efficient algorithms. Based on this idea, this paper introduces two PAMO formulations, i.e., bi-objective and resource constrained problems in an occupancy grid, and develops PAMO*, a search method with completeness and solution optimality guarantees, to solve the two problems. We then further extend PAMO* to hybrid-state PAMO* to plan in continuous spaces with high-fidelity interaction between the robot and the objects. Our results show that, PAMO* can often find optimal solutions within a second in cluttered environments with up to 400 objects.

I Introduction

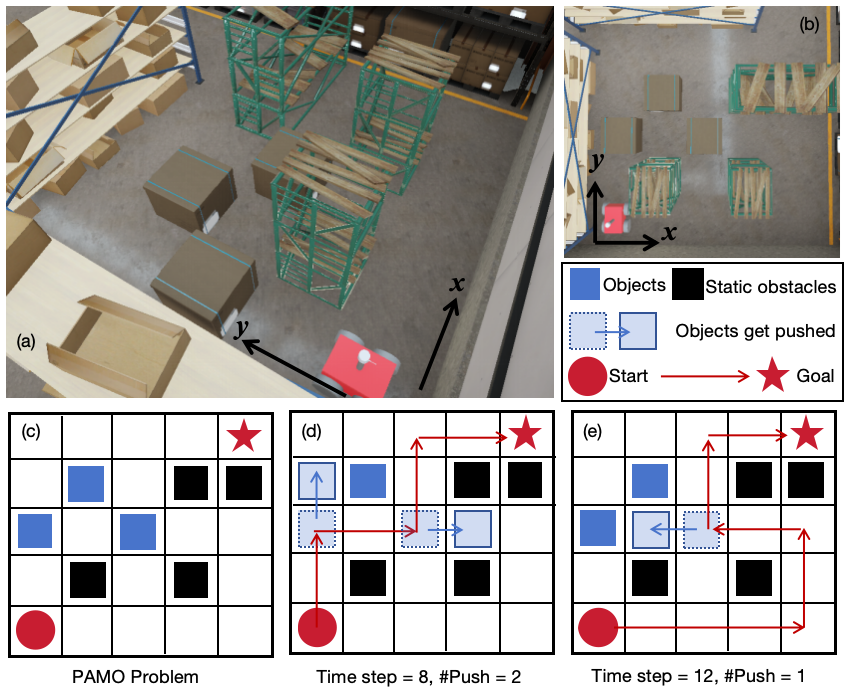

Path planning seeks a collision-free path from an initial state to a goal state while avoiding collision among static obstacles, which is of fundamental importance in robotics. This paper considers a problem called Path planning Among Movable Obstacles (PAMO) where obstacles consist of both movable obstacles (i.e., objects) and static (non-movable) obstacles, and the robot can interact with objects by pushing them away when needed. PAMO seeks a minimum-cost start-goal path for the robot where both the move and push actions of the robot incur costs, and there is no requirement on the ending poses of the objects. This problem was shown to be NP-hard [5, 20], and the challenge is to determine not only a start-goal path among static obstacles but also when and where to interact with the objects.

The goal of this paper is to develop runtime efficient planners for PAMO with completeness and solution optimality guarantees. For this purpose, we formulate PAMO problems as a search over a grid where robots and obstacles are represented by grid cells, and develop A*-like planners (Fig. 1). By doing so, the planner must search a giant state space that includes both the location of the robot and the locations of all objects, which thereby grows exponentially with respect to the number of objects. However, we take the view that, although the state space is huge, in practice, only a small fraction of the state space needs to be explored during planning as guided by a heuristic, and most of the objects that are “far away” from the robot are intact, which thus leads to runtime efficient algorithms.

Based on this idea, we first introduce a bi-objective PAMO formulation, which requires minimizing the numbers of both move and push actions simultaneously. Considering the move and push actions as two independent dimensions has the potential to avoid naively scalarizing two different types of actions into a single objective. With multiple objectives, there is often no single solution that optimizes all objectives at the same time, and the problem thus seeks a set of Pareto-optimal solutions, which is usually computationally heavy. We thus introduce another resource-constrained formulation, which seeks a shortest path while ensuring the number of push actions does not exceed a given limit. To address the problems, we develop an approach called PAMO*, which leverages the recent advances in multi-objective and resource-constrained search [14, 15], and leads to algorithms that can find all Pareto-optimal solutions for the bi-objective PAMO and an optimal solution for the resource-constrained PAMO. Finally, we seek to get rid of the grid representation by developing Hybrid-state PAMO* (H-PAMO*), which combines PAMO* with hybrid-state A* [6] and a robot-object interaction simulator based on Box2D [1] to plan in continuous spaces with high-fidelity, at the cost of losing completeness and solution optimality guarantees.

We test PAMO* in various grid maps. The results show that for the bi-objective problem, our planner can find Pareto-optimal solutions unveiling different ways to interact with the objects, trading off numbers of move and push. For the resource constrained problem, our planner can often find optimal solutions within a second in cluttered grids with up to 400 objects. We also verify our H-PAMO* in office-like and warehouse-like environments, and simulate the robot motion in Unity3D, where H-PAMO* plans paths for a robot with kinematic constraints amid rectangular objects that can both translate and rotate.

I-A Related Work

PAMO was formulated in different ways. When representing the workspace and obstacles using a grid, PushPush and Push-1 problems are NP-hard [5]. With polygonal obstacles, navigation among movable obstacles (NAMO) were proposed and shown to be NP-hard [20]. NAMO was studied in environments that are known [17], partially known [12], and unknown [8], with search-based [17], sampling-based [18], and learning-based [21] methods. This paper differs from these work by considering multi-objective and resource-constrained formulations of PAMO in a fully known grid, and developing planners with completeness and solution optimality guarantees.

Besides, box-pushing games, such as Sokoban, solve puzzles where an agent moves boxes (i.e., objects) from their initial to goal positions in a grid by push. These games were NP-hard [7] and has been addressed by heuristic search [10], Monte-Carlo Tree Search [4], Q-learning [19], to name a few. PAMO differs from these games since it seeks a start-goal path for the robot while these games move the boxes without imposing a goal location on the agent.

Other related work includes manipulation of multiple objects [16, 13], objects rearrangement [11], etc, where various more complicated interaction between the robot and the objects are allowed other than solely push.

Multi-objective search and resource-constrained search are two closely related topics, and a fundamental challenge in these problems is to quickly check paths for dominance, i.e., compare the vector cost of these paths [9, 15, 14, 3]. This paper leverages these search algorithms [15, 14] to solve the formulated PAMO problems.

II Problem Formulation

Let denote a 2D occupancy grid that represents the workspace, where each cell has coordinates indicating the column and row indices of the cell in the grid. The time dimension is discretized into time steps. At any cell , the possible actions of the robot move in one of the four cardinal directions and arrives at one of the neighboring cells . Each action takes a time step. Let denote the set of neighboring cells of . Each edge indicates the corresponding movement from cell to another cell .

At any time, all cells in are partitioned into three subsets: free space , static obstacles , and movable obstacles , i.e., . Movable obstacles are also referred to as objects. Each cell is obstacle-free and can be occupied by the robot. Each cell represents a static obstacle and cannot be occupied by the robot at any time. Each cell indicates an object. An object can be pushed by the robot to an adjacent cell if all of the following conditions hold: (i) the current cell occupied by the robot is next to ; (ii) the robot takes an action to reach ; and (iii) the object can be pushed into a neighboring cell that is in free space (i.e., ). Note that, Condition (iii) ensures an object cannot be pushed to if is occupied by another object. When the robot is adjacent to an object and moves to cell , the object at is pushed in the same direction as the robot simultaneously. In this case, the robot action is counted as a push (as opposed to a move). Each action, either move or push, takes a time unit.111The problem formulation and our method can easily adapt to the case where push takes a different amount of time than move. Push may take more fuel than move and is thus considered as a different type of action.

Let denote a path, which is a list of adjacent cells. Let denote the cost vector of , where the first element is the arrival time at the last cell in (i.e., the total number of move and push), and the second element is the number of push conducted along . Given two vectors and of the same length, dominates if every element in is no larger than the corresponding element in , and there exists at least one element in that is less than the corresponding element in . Otherwise, is non-dominated by . Given a set of vectors of the same length, there exists a subset of vectors such that for every vector , is non-dominated by any other vector in . Such a set is called the Pareto-optimal front.

Let and denote the start and the goal cells of the robot. Among all possible paths from to , let denote the Pareto-optimal set, which is the subset of all paths whose cost vector is within the Pareto-optimal front. A maximal subset of the Pareto-optimal set, where any two paths in this subset do not have the same cost vector is called a cost-unique Pareto-optimal set. This paper considers the following two formulation.

Problem 1 (BO-PAMO)

The Bi-Objective Path Planning Among Movable Obstacles (BO-PAMO) seeks a cost-unique Pareto-optimal set (of paths) from to .

Problem 2 (RC-PAMO)

The Resource-Constrained Path Planning Among Movable Obstacles (RC-PAMO) seeks a path from to such that , where is the maximum number of push the robot can conduct along any path, and reaches the minimum.

III PAMO*

III-A PAMO* Search

PAMO is the problem name while PAMO* (with *) is the method name. Let denote the number of objects, and let position vector denote the cells occupied by all objects. Let denote a search state, where is the cell occupied by the robot, and is an aforementioned position vector. In other words, the state space of the search is , which encodes the locations of both the robot and all objects.

Let denote the initial state, where the robot is at and all objects are at their original positions. Different from conventional A*, where the search only needs to record one optimal path (via parent pointers) from to any state , PAMO* have to store multiple non-dominated paths from to and differentiate between these paths. To do so, let denote a label, where is a state as aforementioned and is the cost vector of a path from to . Labels are compared based on their cost vectors and two labels are non-dominated by each other if their cost vectors are non-dominated by each other. We use to denote the state and the cost vector contained in respectively. We use to denote the cell occupied by the robot in state .

Let denote the frontier set at state , which is a set of labels, where any pair of labels in are non-dominated by each other. Similar to A*, let denote the cost-to-come, i.e., the cost vector of the path from to and let denote the heuristic vector of state that estimates the cost-to-go. Let denote the -vector of state , and let denote an open list of states, which is a priority queue that prioritize states based on their -vectors from the minimum to maximum in lexicographic order. Let denote the set of labels representing solution paths that are found during the search.

PAMO* (Alg. 1) begins by creating the initial label , which is added to . The frontier set is initialized as an empty set for any state. In a search iteration (Line 4-18), a label with the lexicographically minimum -vector in is popped, and is then checked for pruning (Line 6), which is elaborated later. If is not pruned, is used to update the frontier set , where is compared against any existing label . If is dominated by , is removed from . Then, is added to . As a result, always contains non-dominated labels.

After updating the frontier, PAMO* checks if reaches the goal (i.e., ). When solving RC-PAMO where only one optimal path is required, PAMO* terminates if and the path corresponding to label is guaranteed to be optimal. When solving MO-PAMO where all cost-unique Pareto-optimal paths are required, PAMO* skips the rest of the current search iteration and continues with the next search iteration if . When solving MO-PAMO, PAMO* terminates when depletes, i.e., all states in are popped and are either expanded or pruned.

If label does not reach the goal, then is expanded by finding successor states of (Line 13). Specifically, the procedure GetSuccessor returns the set of all reachable states from the given state , where each of the successor states update both the cell of the robot, and the cells of the objects when any object is pushed by the robot. Then, for each of the successor state , a corresponding label is created, where and the GetCost function returns either a cost vector if the robot moves without pushing any object, or if the robot moves while pushing an object. Afterwards, the new label is checked for pruning (Line 15). If is not pruned, the -vector and the parent pointer related to are updated and is added to for future search.

At the end of the search, each label in represents a solution path, which is reconstructed by iteratively tracking the parent pointers, and the solution path(s) are returned.

III-B Procedures in PAMO*

III-B1 GetSuccessors

The procedure FrontierCheck in PAMO* takes a state and returns its successor states. It considers all possible neighboring cells of the robot , and for each of them, there are three cases. First, the robot moves to a free cell, which yields a successor state where and the position vector remains the same as in . Second, the robot moves to a cell that is a static obstacle, which is not a valid move and yields no successor. Third, the robot moves to a cell that is occupied by an object (the -th element in the position vector ). In this case, the procedure further predicts the motion of the object and checks if the object can be pushed to an adjacent free cell . If so, a successor state is generated, where and the position vector is updated by first copying and then modifying to be . Otherwise (i.e., is pushed to a static obstacle or another object), no successor state is generated.

III-B2 Heuristic Computation

PAMO* calculates the heuristic vectors as follows. PAMO* first runs a pre-processing before the search starts, which invokes a Dijkstra search backwards from to all other vertices in the grid while ignoring any objects. By doing so, for each cell , we know the distance to along a shortest path among static obstacles. We then use as the heuristic vector for any label whose robot position is at , ignoring object positions. This heuristic is a lower bound on the true cost-to-go from to for the number of both move and push.

III-B3 FrontierCheck

The procedure FrontierCheck in PAMO* is the same when solving both RC-PAMO and MO-PAMO, where a given label is checked against any existing label for dominance. If the cost vector of any existing label is component-wise no larger than , then cannot lead to an optimal path and should be pruned, and FrontierCheck returns true. Otherwise, FrontierCheck returns false.

III-B4 SolutionCheck

The procedure SolutionCheck is different when solving RC-PAMO and MO-PAMO. When solving MO-PAMO, SolutionCheck compares the -vector of the given label against the -vector of any existing label . Note that each represents a solution path from to that is already found during the search, and since . If is component-wise no larger than , then cannot be a Pareto-optimal solution and should be pruned, and SolutionCheck returns true. Otherwise, SolutionCheck returns false.

When solving RC-PAMO, SolutionCheck compares , the second component of the -vector of the given label , against the limit on the number of push actions. If the limit is exceeded (), then cannot be a solution and is thus pruned, and SolutionCheck returns true. Otherwise, SolutionCheck returns false.

III-C Discussion

III-C1 Implicit State Generation

The state space is never created explicitly, i.e., allocate the memory for each state before the search starts. Instead, the state space is created implicitly, i.e., the states and the frontier sets are created only when the search generates the states. PAMO* only requires a GetSuccessors procedure to create successors out of a given state in , and never requires the full knowledge of .

III-C2 Giant State Space

PAMO* has a small constant branching factor, which is the number of successors returned by GetSuccessors procedure. Although the states space is extremely large and grows exponentially with respect to the number of objects. In practice, guided by the heuristic, PAMO* often needs to explore only a small fraction of before finding the (Pareto-)optimal solution(s), even if there are many objects. Intuitively, most of the objects that are far away from the robot’s path from to are never touched, and the corresponding element of them in the position vector are never changed during the search.

III-C3 Global and Local Checks

Intuitively, FrontierCheck can be regarded as a “local” check which compares a label against the existing non-dominated label at the same state. Correspondingly, SolutionCheck can be regarded as a global check which compares a label against “global” information, i.e., either the existing solution paths that have been found or the resource limit.

III-C4 Action Costs

In the problem formulation, both move and push take a time unit. Our method PAMO* can easily handle the case where push and move take different amount of time, or more generally speaking, incur various types of costs, as long as the costs can still be described by cost vectors and are still additive. The only place to be modified is Line 14 in Alg. 1 when calculating between two states and .

III-D Properties

Let RC-PAMO* (and MO-PAMO*) denote the version of PAMO* when using Alg. 1 to solve RC-PAMO problem (and MO-PAMO problem, respectively). The completeness and solution optimality of PAMO* are inherited from EMOA* [15] and ERCA* [14]. In particular, RC-PAMO* (and MO-PAMO*) can be regarded as applying ERCA* (and EMOA*) onto the new state space with the new way of successor generation. EMOA* is guaranteed to be complete and able to find all cost-unique Pareto-optimal solutions for a given graph with vector edge cost [15]. ERCA* is guaranteed to be complete and can find a min-cost solution subject to resource limits in a given graph where each edge incurs cost and resource consumption [14]. As a result, we have the following theorems.

Theorem 1

MO-PAMO* is complete and can find all cost-unique Pareto-optimal solutions for MO-PAMO.

Theorem 2

RC-PAMO* is complete and can find an optimal solution for RC-PAMO.

IV Hybrid-State PAMO*

Based on PAMO*, we further develop Hybrid-state PAMO* (H-PAMO*) by leveraging the idea in hybrid-state A* [6] to plan the robot motion in continuous space and time, handle kinematic constraints of robots, and consider more detailed interaction between the robot and the objects. Same as Hybrid-state A* for path planning in continuous space, our H-PAMO* loses completeness and solution optimality guarantees for PAMO in continuous space.

IV-A Environment and Robot

We consider a first order unicycle model, where the robot pose is and control is where is the linear velocity and is the angular velocity, and are subject to control limits. The robot satisfies the system dynamics . The workspace is a bounded 2D Euclidean space with a set of static rectangle obstacles . In the free space , there are rectangle objects. A search state now includes both the pose of the robot and the poses of all objects.

The robot-object interaction is described by a black-box function which takes (i) a state (i.e., the poses of the robot and the objects), (iii) a control of the robot, and (iv) a small amount of time , conducts a forward simulation and returns the ending poses of the robot and the objects. Here, only simulates the poses of the robot and the objects, and ignores the higher order terms (e.g. velocities).

IV-B H-PAMO* Search

H-PAMO* is similar to PAMO* with the following differences. First, to simplify the presentation, H-PAMO* is developed as a single-objective algorithm, where all actions (either move or push) incur an action time, and H-PAMO* seeks to minimize the arrival time at the goal. As a result, all cost vectors (Lines 1, 14, 17) becomes scalar values and the transition cost is now a scalar representing the action time from one state to another. Second, H-PAMO* plans in a continuous space, there is no notion of graphs or cells. To compare and prune paths (FrontierCheck on Lines 6, 15 and UpdateFrontier on Line 8), H-PAMO* uniformly discretizes the workspace into mutually exclusive cells of size , and every pose belongs to a unique cell. The state space is thus discretized into a grid , where each cell is of size . When two paths end with states that belong to the same cell in , H-PAMO* only stores the cheaper path and prunes the other. Here, is never created explicitly and any cell in is only created when a state in that cell is generated. Third, since H-PAMO* optimizes a single-objective, the arrival time, and there is no limit on the number of push actions, there is no SolutionCheck on Line 6, 15 in H-PAMO* any more. Fourth, to get successors, H-PAMO* consider a finite set of motion primitives, which are generated by sampling controls from the control space and running forward simulation for each of the sampled control for a short amount of time . When generating a successor, the interaction between the robot and the objects needs to be considered. In our implementation, we use Runge-Kutta 4th order method to integrate the to simulate the robot motion given the control, and use Box2D [1] to implement to predict the motion of the objects when pushed by the robot. Finally, H-PAMO* terminates when the search finds a path that reaches the goal pose within a given tolerance .

V Experimental Results

We test PAMO* in various grid maps from a public dataset [2]. Each grid map has static obstacles of different densities. We place objects randomly in the grid without overlapping with static obstacles or start and goal positions. We create 10 instances for each map, and the instances are not guaranteed to be feasible due to the randomly located objects. We set a 1 minute runtime limit for each instance. All tests are conducted on a MacBook laptop with a M2 Pro CPU and 16GB RAM.

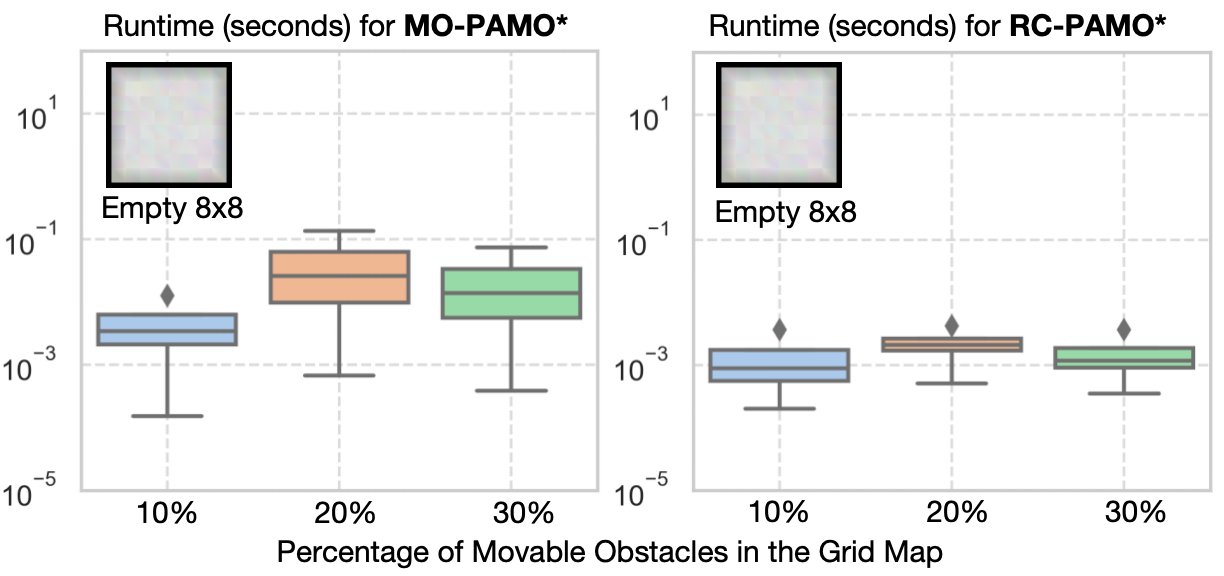

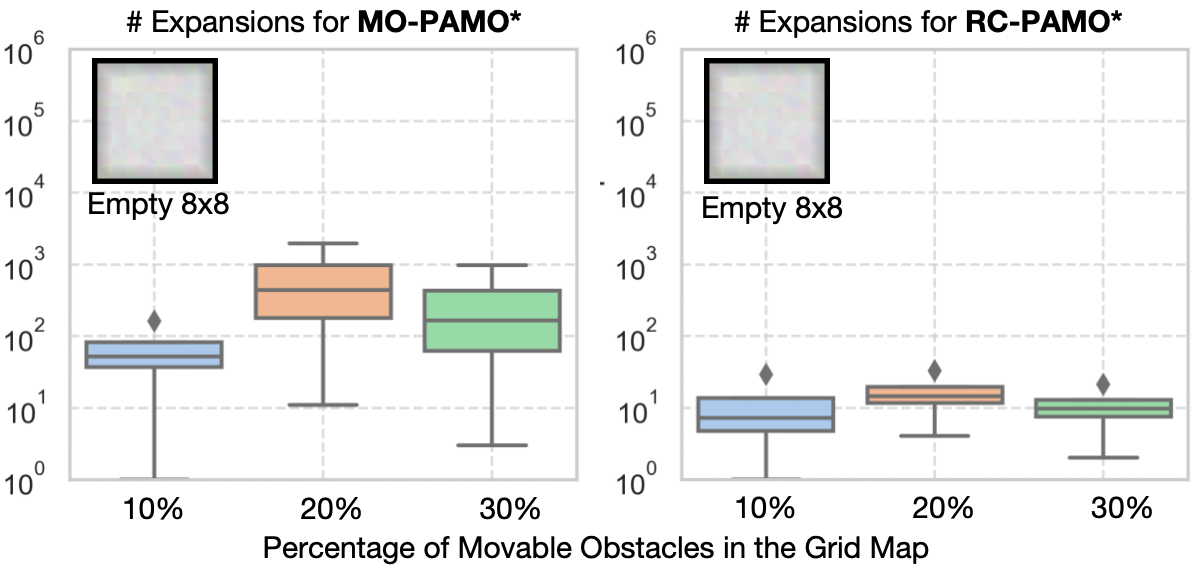

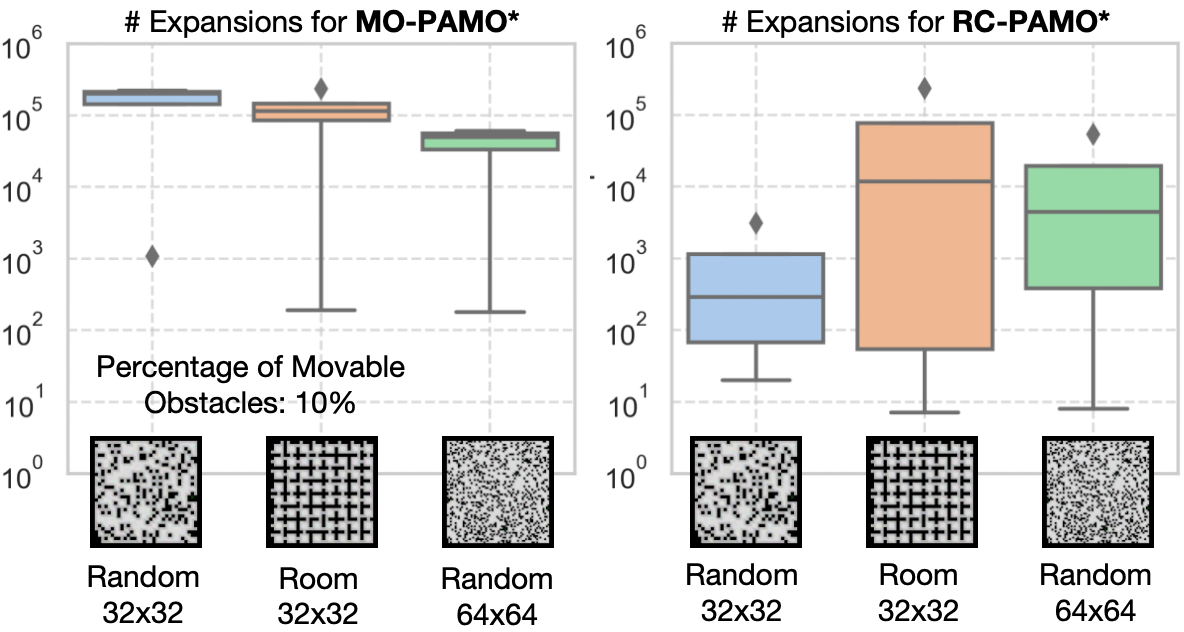

We first test MO-PAMO* and RC-PAMO* (with ) in a (fixed) 8x8 empty grid map with varying percentage of movable obstacles 10% (), 20% (), and 30% (). We then fix the percentage of movable obstacles to 10% and change the maps to a Random 32x32 (), Room 32x32 (), and Random 64x64 (). We report the runtime and number of expansions (i.e., Alg. 1 reaches Line 13).

V-A Results of PAMO*

As shown in Fig. 2, in the smaller Empty map, the increase in the number of movable obstacles slightly slows down the planning for both MO-PAMO* and RC-PAMO*. In the larger maps with much more movable obstacles (Fig. 4), the planning is slowed down obviously, where MO-PAMO* times out in many instances before finding the entire Pareto-optimal front, while RC-PAMO* is still able to solve most of the instances. This is expected since finding the entire Pareto-optimal front is usually much harder than finding a single optimal solution [15, 14].

Fig. 3 and 5 shows the number of expansions of MO-PAMO* and RC-PAMO*. Similar trends as the runtime can be observed for number of expansions. Besides, we observe from Fig. 4 and 5 that, in Random 32x32 and Random 64x64, as the map size and increases, the runtime for RC-PAMO* increases while the number of expansion decreases. The reason is, with a larger , each state has to encode the position of more movable obstacles and the processing time in each expansion increases correspondingly.

When considering the theoretic size of the entire state space , the number of expansion is usually much smaller than the size of . For example, in Empty 8x8 with , the size of the state space is while the number of expansions is usually less than for MO-PAMO* and for RC-PAMO*. It indicates that, guided by the heuristic, PAMO* only need to explore a small fraction of the state space to find optimal solutions.

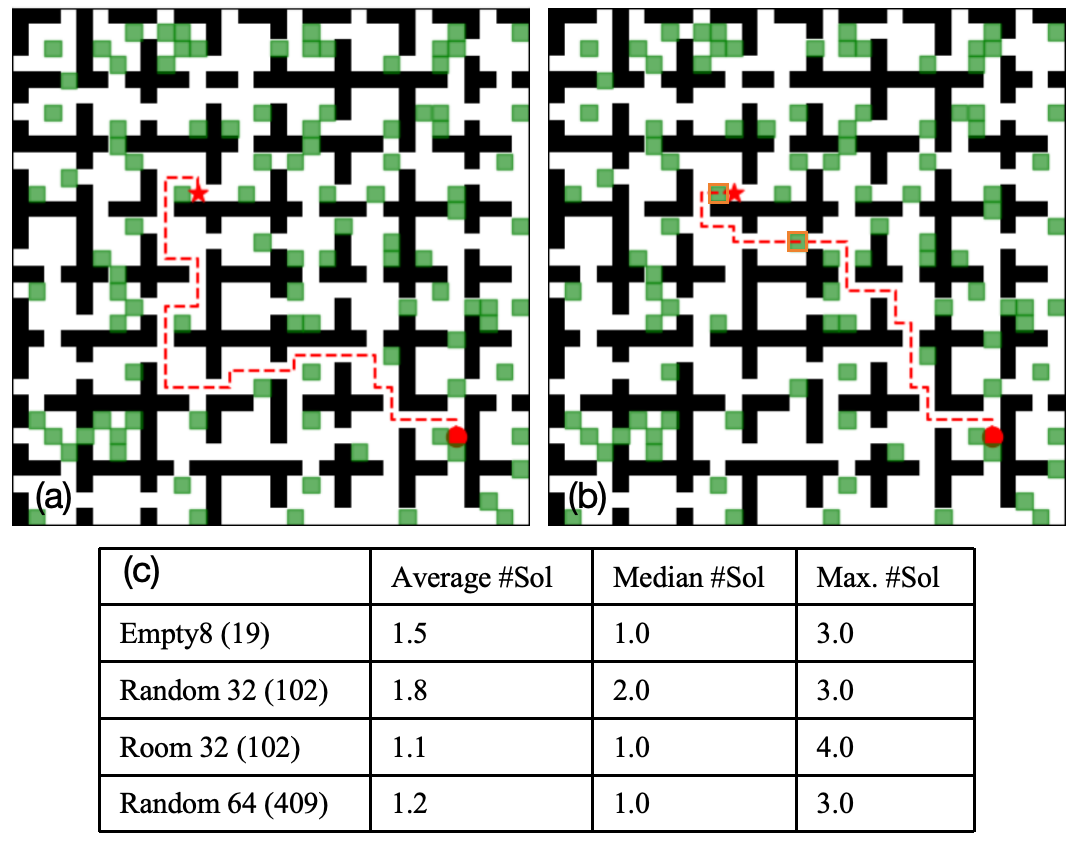

Finally, we show the number of solutions found by MO-PAMO* in Fig. 6. Within the time limit, the planner finds 1 or 2 solutions as shown by the average and median number, while for some instances, the planner finds 3 or 4 solutions. We pick an instance from Room 32x32 and shows the two different paths found by MO-PAMO*. Fig. 6(a) shows a longer path with no push action required, while Fig. 6(b) shows a shorter path with 7 push actions. These results can help determine which path to take based on the specific robotic platform where the push action is expensive or not.

V-B Results of H-PAMO*

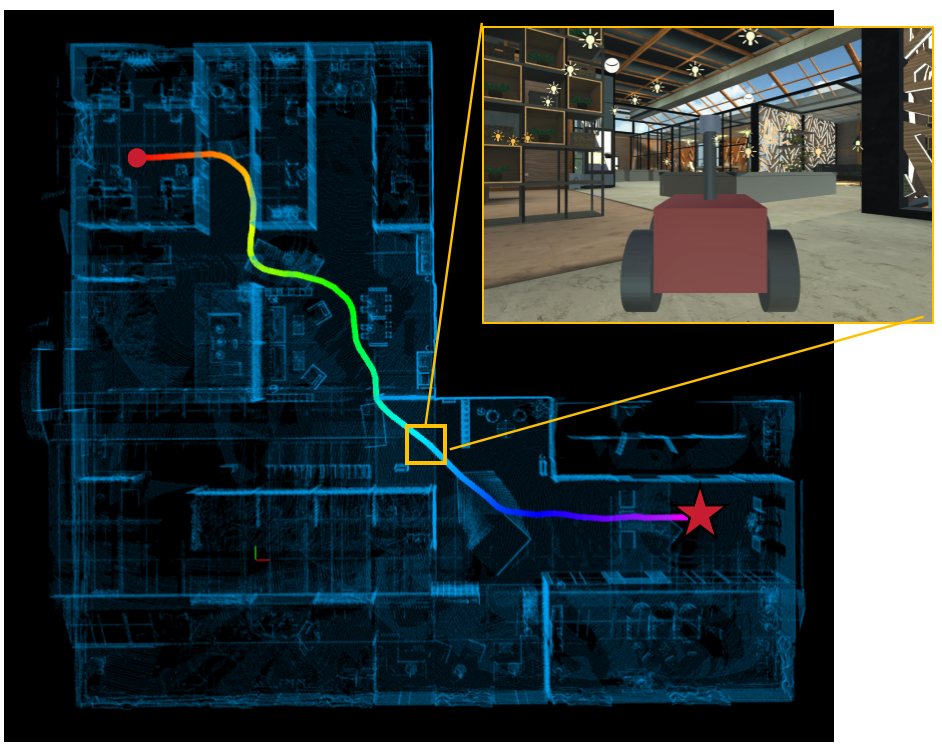

As shown in Fig. 7, we test H-PAMO* in three maps of sizes (a) 74x127 in an office, (b) 24x36 in part of an office, and (c) 90x102 in a warehouse, with rectangular objects of different sizes. The robot is a 1x1 square. The size of cells in for pruning is set to and the set of controls used are , , , , , , , . Fig. 7 shows the solution paths, the initial and ending pose of all objects. On all three instances, H-PAMO* terminates within 10 seconds and finds paths of length (a) 117.10 (b) 28.89 (c) 106.75 respectively. Fig. 8 shows the simulated motion of the robot for Fig. 7(a) in Unity3D.

VI Conclusion and Future Work

This paper investigates PAMO by formulating the problem as a multi-objective search and a resource constrained search over a grid, and develop planners with completeness and solution optimality guarantees. The results verify that, although the state space is huge, in practice, only a small fraction of the state space needs to be explored during planning as guided by a heuristic, and most of the objects that are far from the robot’s path are intact, which thus leads to algorithms that are often runtime efficient. This paper also seeks to get rid of the grid representation by developing Hybrid-state PAMO* (H-PAMO*) to plan in continuous spaces at the cost of losing completeness and solution optimality guarantees. We plan to consider the uncertainty of robot-object interaction in our future work.

References

- [1] https://box2d.org/.

- [2] https://www.movingai.com/benchmarks/mapf/index.html.

- [3] Saman Ahmadi, Guido Tack, Daniel Harabor, Philip Kilby, and Mahdi Jalili. Enhanced methods for the weight constrained shortest path problem. Networks, 2024.

- [4] Mattia Crippa, Pier Luca Lanzi, and Fabio Marocchi. An analysis of single-player monte carlo tree search performance in sokoban. Expert Systems with Applications, 192:116224, 2022.

- [5] Erik D Demaine, Martin L Demaine, and Joseph O’Rourke. Pushpush and push-1 are np-hard in 2d. In Proceedings of the 12th Canadian Conference on Computational Geometry, 2000.

- [6] Dmitri Dolgov, Sebastian Thrun, Michael Montemerlo, and James Diebel. Path planning for autonomous vehicles in unknown semi-structured environments. The international journal of robotics research, 29(5):485–501, 2010.

- [7] Dorit Dor and Uri Zwick. Sokoban and other motion planning problems. Computational Geometry, 13(4):215–228, 1999.

- [8] Botao He, Guofei Chen, Wenshan Wang, Ji Zhang, Cornelia Fermuller, and Yiannis Aloimonos. Interactive-far: Interactive, fast and adaptable routing for navigation among movable obstacles in complex unknown environments. arXiv preprint arXiv:2404.07447, 2024.

- [9] Carlos Hernández, William Yeoh, Jorge A Baier, Han Zhang, Luis Suazo, Sven Koenig, and Oren Salzman. Simple and efficient bi-objective search algorithms via fast dominance checks. Artificial intelligence, 314:103807, 2023.

- [10] Andreas Junghanns and Jonathan Schaeffer. Sokoban: Enhancing general single-agent search methods using domain knowledge. Artificial Intelligence, 129(1-2):219–251, 2001.

- [11] Athanasios Krontiris and Kostas E Bekris. Dealing with difficult instances of object rearrangement. In Robotics: Science and Systems, volume 1123, 2015.

- [12] Martin Levihn, Mike Stilman, and Henrik Christensen. Locally optimal navigation among movable obstacles in unknown environments. In 2014 IEEE-RAS International Conference on Humanoid Robots, pages 86–91. IEEE, 2014.

- [13] Zherong Pan, Andy Zeng, Yunzhu Li, Jingjin Yu, and Kris Hauser. Algorithms and systems for manipulating multiple objects. IEEE Transactions on Robotics, 39(1):2–20, 2023.

- [14] Zhongqiang Ren, Zachary B. Rubinstein, Stephen F. Smith, Sivakumar Rathinam, and Howie Choset. Erca*: A new approach for the resource constrained shortest path problem. IEEE Transactions on Intelligent Transportation Systems, 24(12):14994–15005, 2023.

- [15] Zhongqiang Ren, Richard Zhan, Sivakumar Rathinam, Maxim Likhachev, and Howie Choset. Enhanced multi-objective A* using balanced binary search trees. In Proceedings of the International Symposium on Combinatorial Search, volume 15, pages 162–170, 2022.

- [16] Dhruv Mauria Saxena, Muhammad Suhail Saleem, and Maxim Likhachev. Manipulation planning among movable obstacles using physics-based adaptive motion primitives. In 2021 IEEE International Conference on Robotics and Automation (ICRA), pages 6570–6576, 2021.

- [17] Mike Stilman and James Kuffner. Planning among movable obstacles with artificial constraints. The International Journal of Robotics Research, 27(11-12):1295–1307, 2008.

- [18] Jur Van Den Berg, Mike Stilman, James Kuffner, Ming Lin, and Dinesh Manocha. Path planning among movable obstacles: a probabilistically complete approach. In Algorithmic Foundation of Robotics VIII: Selected Contributions of the Eight International Workshop on the Algorithmic Foundations of Robotics, pages 599–614. Springer, 2010.

- [19] Ying Wang and Clarence W De Silva. Multi-robot box-pushing: Single-agent q-learning vs. team q-learning. In 2006 IEEE/RSJ international conference on intelligent robots and systems, pages 3694–3699. IEEE, 2006.

- [20] Gordon Wilfong. Motion planning in the presence of movable obstacles. In Proceedings of the fourth annual symposium on Computational geometry, pages 279–288, 1988.

- [21] Fei Xia, William B Shen, Chengshu Li, Priya Kasimbeg, Micael Edmond Tchapmi, Alexander Toshev, Roberto Martín-Martín, and Silvio Savarese. Interactive gibson benchmark: A benchmark for interactive navigation in cluttered environments. IEEE Robotics and Automation Letters, 5(2):713–720, 2020.