scpi: Uncertainty Quantification for Synthetic Control Methods

Abstract

The synthetic control method offers a way to quantify the effect of an intervention using weighted averages of untreated units to approximate the counterfactual outcome that the treated unit(s) would have experienced in the absence of the intervention. This method is useful for program evaluation and causal inference in observational studies. We introduce the software package scpi for prediction and inference using synthetic controls, implemented in Python, R, and Stata. For point estimation or prediction of treatment effects, the package offers an array of (possibly penalized) approaches leveraging the latest optimization methods. For uncertainty quantification, the package offers the prediction interval methods introduced by Cattaneo, Feng and Titiunik (2021) and Cattaneo, Feng, Palomba and Titiunik (2022). The paper includes numerical illustrations and a comparison with other synthetic control software.

Keywords: program evaluation, causal inference, synthetic controls, prediction intervals, non-asymptotic inference.

1 Introduction

The synthetic control method was introduced by Abadie and Gardeazabal (2003), and since then it has become a popular approach for program evaluation and causal inference in observational studies. It offers a way to study the effect of an intervention (e.g., treatments at the level of aggregate units, such as cities, states, or countries) by constructing weighted averages of untreated units to approximate the counterfactual outcome that the treated unit(s) would have experienced in the absence of the intervention. While originally developed for the special case of a single treated unit and a few control units over a short time span, the methodology has been extended in recent years to a variety of other settings with longitudinal data. See Abadie (2021) for a review on synthetic control methods, and Abadie and Cattaneo (2018) for a review on general methods for program evaluation.

Most methodological developments in the synthetic control literature have focused on either expanding the causal framework or developing new implementations for prediction/point estimation. Examples of the former include disaggregated data settings (Abadie and L’Hour, 2021) and staggered treatment adoption (Ben-Michael, Feller and Rothstein, 2022), while examples of the latter include employing different constrained estimation methods (see Table 3 below for references). Conceptually, implementation of the synthetic control method involves two main steps: first, treated units are “matched” to control units using only their pre-intervention data via (often constrained) regression methods and, second, prediction of the counterfactual outcomes of the treated units are obtained by combining the pre-intervention “matching” weights with the post-intervention data of the control units. As a result, the synthetic control approach offers a prediction or point estimator of the (causal) treatment effect for the treated unit(s) after the intervention was deployed.

Compared to prediction or estimation, considerably less effort has been devoted to develop principled uncertainty quantification for synthetic control methods. The most popular approach in practice is to employ design-based permutation methods taking the potential outcome variables as non-random (Abadie, Diamond and Hainmueller, 2010). Other approaches include methods based on large-sample approximations for disaggregated data under correctly specified factor-type models (Li, 2020), time-series permutation-based inference (Chernozhukov, Wüthrich and Zhu, 2021), large-sample approximations for high-dimensional penalization methods (Masini and Medeiros, 2021), and cross-sectional permutation-based inference in semiparametric duration-type settings (Shaikh and Toulis, 2021). A conceptually distinct approach to uncertainty quantification is proposed by Cattaneo, Feng and Titiunik (2021) and Cattaneo, Feng, Palomba and Titiunik (2022), who take the potential outcome variables as random and develop prediction intervals for the imputed (counterfactual) outcome of the treated unit(s) in the post-intervention period employing finite-sample probability concentration methods.

This article introduces the software package scpi for prediction and inference using synthetic control methods, implemented in Python, R, and Stata. For prediction or point estimation of treatment effects, the package offers an array of possibly penalized approaches leveraging the latest conic optimization methods (Domahidi, Chu and Boyd, 2013; Fu, Narasimhan and Boyd, 2020); see also Boyd and Vandenberghe (2004) for an introduction. For uncertainty quantification, the package focuses on the aforementioned prediction interval methods under random potential outcomes. The rest of the article focuses on the implementation of the software, but we briefly illustrate analogous functionalities for Python in Appendix A, and for Stata in Appendix B.

The R package scpi includes the following six functions:

-

•

scdata() and scdataMulti(). These functions take as input a DataFrame object and process it to prepare the data matrices used for point estimation/prediction and inference/uncertainty quantification. The function scdata() is specific to the single treated unit case, whereas scdataMulti() can be used with multiple treated units and/or when treatment is adopted in a staggered fashion. Both functions allow the user to specify multiple features of the treated unit(s) to be matched by the synthetic unit(s), as well as feature-specific covariate adjustment, and can handle both independent and identically distributed (i.i.d.) and non-stationary (cointegrated) data.

-

•

scest(). This function handles “scpi_data” objects produced with scdata() or “scpi_data_multi” objects produced with scdataMulti(), and then implements a class of synthetic control predictions/point estimators for quantification of treatment effects. The implementation allows for multiple features, with and without additional covariate adjustment, and for both stationary and non-stationary data. The allowed prediction procedures include unconstrained weighted least squares as well as constrained weighted least squares with simplex, lasso-type, ridge-type parameter space restrictions and combinations thereof (see Table 2 below).

-

•

scpi(). This function takes as input an “scpi_data” object produced with scdata() or an “scpi_data_multi” object produced with scdataMulti(), and then computes prediction intervals for a class of synthetic control predictions/point estimators for quantification of treatment effects. It relies on scest() for point estimation/prediction of treatment effects, and thus inherits the same functionalities of that function. In particular, scpi() is designed to be the main function in applications, offering both predictions/point estimators for treatment effects as well as inference/uncertainty quantification (i.e., prediction intervals) for synthetic control methods. The function also allows the user to model separately in-sample and out-of-sample uncertainty, offering a broad range of options for practice.

-

•

scplot() and scplotMulti(). These functions process objects whose class is either “scest” or “scpi”. These objects contain the results of the point estimation/prediction or uncertainty quantification methods, respectively. The commands build on the ggplot2 package in R (Wickham, 2016) to compare the time series for the outcome of the treated unit(s) with the outcome time series of the synthetic control unit, along with the associated uncertainty. The functions return a ggplot object that can be further modified by the user.

The objects returned by scest() and scpi() support the methods print() and summary(). In typical applications, the user will first prepare the data using the function scdata() or scdataMulti(), and then produce predictions/point estimators for treatment effects with uncertainty quantification using the function scpi(). The function scest() is useful in cases where only predictions/point estimators are of interest. Numerical illustrations are given in Section 5.

There are many R, Python, and Stata packages available for prediction/point estimation and inference using synthetic control methods; Table 1 compares them to the package scpi. As shown in the table, scpi is the first package to offer uncertainty quantification using prediction intervals with random potential outcomes for a wide range of different synthetic control predictors. The package is also one of the first to handle multiple treated units and staggered treatment adoption, offering a wider array of options in terms of predictors and inference methods when compared with the other packages currently available. Furthermore, the package includes misspecification-robust methods, employs the latest optimization packages for conic programs available, and offers automatic parallelization in execution whenever multi-core processors are present, leading to significant improvements in numerical stability and computational speed. Finally, scpi is the only package available in Python, R, and Stata, which gives full portability across multiple statistical software and programming languages, and also the only package employing directly native conic optimization via the ECOS solver (see Table 4 for details).

| Package | Statistical | Prediction | Inference | Multiple | Staggered | Misspecification | Automatic | Last |

|---|---|---|---|---|---|---|---|---|

| Name | Platform | Method | Method | Treated | Adoption | Robust | Parallelization | Update |

| ArCo | R | LA | Asym | ✓ | 2017-11-05 | |||

| pgsc | R | SC | Perm | ✓ | 2018-10-28 | |||

| MSCMT | R | SC | Perm | ✓ | 2019-11-14 | |||

| npsynth | St | SC | Perm | 2020-06-23 | ||||

| tidysynth | R | SC | Perm | 2021-01-27 | ||||

| \hdashrule[0.5ex]22.5cm1pt1pt | ||||||||

| microsynth | R | CA | Perm | ✓ | ✓ | 2021-02-26 | ||

| scinference | R | SC, LA | Perm | ✓ | 2021-05-14 | |||

| SCUL | R | LA | Perm | 2021-05-19 | ||||

| gsynth | R | FA | Asym | ✓ | ✓ | ✓ | 2021-08-06 | |

| Synth | Py | SC | Perm | 2021-10-07 | ||||

| \hdashrule[0.5ex]22.5cm1pt1pt | ||||||||

| treebased-sc | Py | TB | Perm | ✓ | 2021-11-01 | |||

| SynthCast | R | SC | Perm | 2022-03-08 | ||||

| sytnhdid | R | LS, RI | Asym | ✓ | ✓ | 2022-03-15 | ||

| allsynth | St | SC | Perm | ✓ | ✓ | 2022-05-07 | ||

| synth2 | St | SC | Perm | 2022-05-28 | ||||

| \hdashrule[0.5ex]22.5cm1pt1pt | ||||||||

| Synth | R, St | SC | Perm | 2022-06-08 | ||||

| SCtools | R | SC | Perm | ✓ | ✓ | 2022-06-09 | ||

| augsynth | R | SC, RI | Perm | ✓ | ✓ | 2022-08-02 | ||

| scul | St | LA | Perm | 2022-08-21 | ||||

| scpi | Py, R, St | SC, LA, RI, LS, + | PI, Asym, Perm | ✓ | ✓ | ✓ | ✓ | 2022-10-07 |

Note: Py = Python (https://www.python.org/); R = R (https://cran.r-project.org/); St = Stata (https://www.stata.com/); LA = Lasso penalty; CA = calibration; FA = factor-augmented models; LS = unconstrained least squares; RI = Ridge penalty; SC = canonical synthetic control; TB = tree-based methods; + = user-specified options (see Table 3 below for more details); Perm = permutation-based inference; Asym = asymptotic-based inference; PI = prediction intervals (non-asymptotic probability guarantees). The symbol ✓ means that the feature is available. The last column reports the date of last update as of October 7, 2022.

The rest of the article is organized as follows. Section 2 introduces the canonical synthetic control setup, and also briefly discusses extensions to multiple treated units with possibly staggered treatment adoption. Section 3 gives a brief introduction to the theory and methodology underlying the point estimation/prediction for synthetic control methods, discussing implementation details. Section 4 gives a brief introduction to the theory and methodology underlying the uncertainty quantification via prediction intervals for synthetic control methods, and also discusses the corresponding issues of implementation. Section 5 showcases some of the functionalities of the package using a real-world dataset, and Section 6 concludes. The appendices illustrate the Python (Appendix A) and Stata (Appendix B) implementations of scpi. Detailed instructions for installation, script files to replicate the analyses, links to software repositories, and other companion information can be found in the package’s website, https://nppackages.github.io/scpi/.

2 Setup

We first consider the canonical synthetic control framework with a single treated unit. The researcher observes units for periods of time. Units are indexed by , and time periods are indexed by . During the first periods, all units are untreated. Starting at , unit receives treatment but the other units remain untreated. Once the treatment is assigned at , there is no change in treatment status: the treated unit continues to be treated and the untreated units remain untreated until the end of the series, periods later. The single treated unit in our context could be understood as an “aggregate” of multiple treated units; see Section 2.1 below for more discussion.

Each unit at period has two potential outcomes, and , respectively denoting the outcome under treatment and the outcome in the absence of treatment. Two implicit assumptions are imposed: no spillovers (the potential outcomes of unit depend only on ’s treatment status) and no anticipation (the potential outcomes at depend only on the treatment status of the same period). Then, the observed outcome is

The causal quantity of interest is the difference between the outcome path taken by the treated unit, and the path it would have taken in the absence of the treatment:

We view the two potential outcomes and as random variables, which implies that is a random quantity as well, corresponding to the treatment effect on a single treated unit. This contrasts with other analysis that regards the treatment effect as a fixed parameter (see Abadie, 2021, for references).

The potential outcome of the treated unit is observed after the treatment. To recover the treatment effect , it is necessary to have a “good” prediction of the counterfactual outcome of the treated after the intervention. The idea of the synthetic control method is to find a vector of weights such that a given loss function is minimized under constraints, only using pre-intervention observations. Given the resulting set of constructed weights , the treated unit’s counterfactual (potential) outcome is calculated as for . The weighted average is often referred to as the synthetic control of the treated unit, as it represents how the untreated units can be combined to provide the best counterfactual for the treated unit in the post-treatment period. In what follows, we briefly describe different approaches for point estimation/prediction leading to , and then summarize the uncertainty quantification methods to complement those predictions.

2.1 Extensions

Building on the canonical synthetic control setup, we can consider other settings involving multiple treated units with possibly staggered treatment adoption. In particular, we briefly discuss three potential extensions of practical interest.

-

•

Multiple post-treatment periods. When outcomes are observed in multiple periods after the treatment, a researcher might be interested in the average treatment effect on the (single) treated unit across multiple post-treatment periods rather than the effect at a single period:

The analysis of this quantity can be accommodated by the framework above. For instance, given the predicted counterfactual outcome for each post-treatment period , the predicted average counterfactual outcome of the treated is given by

This construction is equivalent to regarding the post-treatment periods as a “single” period and defining the post-treatment predictors as averages of the corresponding predictors across post-treatment time periods.

-

•

Multiple treated units. The canonical single treated unit framework above can also be extended to the more general case of multiple treated units. For instance, suppose a researcher observes units for time periods, and let units be indexed by . Without loss of generality, the first to units are assumed to be treated and units from to to be untreated. Treated and untreated potential outcomes are, respectively, denoted by and for . The observed outcome of the th treated unit is given by .

In such setting, a researcher might be interested in the individual treatment effect

or in the average treatment effect on the treated across treated units

The first causal quantity, , can be predicted in the framework described above considering one treated unit at a time or, alternatively, by considering all treated units jointly.

To predict the second causal quantity, , one extra step is necessary. Define an aggregate unit “ave” whose observed outcome is , for . Other features of “unit 1” used in the synthetic control construction can be defined similarly as averages of the corresponding features across multiple treated units. The framework above can now be applied to the “new” average unit with outcome .

-

•

Staggered treatment adoption. Our framework can also be extended to the scenario where multiple treated units are assigned to treatment at different points in time, a staggered adoption design. In this case, one can understand the adoption time as a multivalued treatment assignment, and a large class of causal quantities can be defined accordingly. For example, let denote the adoption time of unit where means unit is never treated, and represents the potential outcome of unit at time that would be observed if unit had adopted the treatment at time . Suppose that the treatment effect on unit one period after the treatment, i.e., , is of interest. One can take all units that are treated later than to obtain the synthetic control weights and construct the synthetic control prediction of the counterfactual outcome accordingly. The methodology described below can be immediately applied to this problem.

The package scpi allows for estimation/prediction of treatment effects and uncertainty quantification via prediction intervals for the more general synthetic control settings discussed above. However, in order to streamline the exposition, the rest of this article focuses on the case of a single treated unit. See Cattaneo, Feng, Palomba and Titiunik (2022) for a formal treatment of more general staggered adoption problems, and its supplemental appendix for further details on how the package scpi can be used in settings with multiple treatment units and staggered treatment adoption. Our companion replication files illustrate both the canonical single treated unit framework and the generalizations discussed above.

3 Synthetic Control Prediction

We consider synthetic control weights constructed simultaneously for features of the treated unit, denoted by , with index . For each feature , there exist variables that can be used to predict or “match” the -dimensional vector . These variables are separated into two groups denoted by and , respectively. More precisely, for each , corresponds to the th feature of the th unit observed in pre-treatment periods and, for each , is another vector of control variables also possibly used to predict over the same pre-intervention time span. For ease of notation, we let .

The goal of the synthetic control method is to search for a vector of common weights across the features and a vector of coefficients , such that the linear combination of and “matches” as close as possible, during the pre-intervention period, for all and some convex feasibility sets and that capture the restrictions imposed. Specifically, we consider the following optimization problem:

| (3.1) |

where

and is a weighting matrix reflecting the relative importance of different equations and time periods.

From (3.1), we can define the pseudo-true residual as

| (3.2) |

where and denote the mean squared error population analog of and . As discussed in the next section, the proposed prediction intervals are valid conditional on some information set . Thus, and above are viewed as the (possibly constrained) best linear prediction coefficients conditional on . We do not attach any structural meaning to and : they are only (conditional) pseudo-true values whose meaning should be understood in context, and are determined by the assumptions imposed on the data generating process. In other words, we allow for misspecification when constructing the synthetic control weights , as this is the most likely scenario in practice.

Given the constructed weights and coefficients , the counterfactual outcome at the post-treatment period for the treated unit, , is predicted by

| (3.3) |

where is a vector of predictors for control units observed in time and is another set of user-specified predictors observed at time . Variables included in and need not be the same as those in and , but in practice it is often the case that and is excluded when is not specified.

The next section discusses implementation details leading to , including the choice of feasibility sets and , weighting matrix , and additional covariates .

3.1 Implementation

The function scdata() in scpi prepares the data for point estimation/prediction purposes. This function takes as input an object of class DataFrame and outputs an object of class scpi_data containing the matrices described above, and a matrix of post-treatment predictors . The user must provide a variable containing a unit identifier (id.var), a time variable (time.var), an outcome variable (outcome.var), the features to be matched (features), the treated unit (unit.tr), the control units (unit.co), the pre-treatment periods (period.pre), and the post-treatment periods (period.post). These options completely specify , and . The user can also control the form of in (3.1) or, equivalently, the form of , through the options cov.adj and constant. The former option allows the user to flexibly specify covariate adjustment feature by feature, while the latter option introduces a column vector of ones of size in . If , this is a simple constant term, but if it corresponds to an intercept which is common across features.

The use of the options cov.adj and constant is best explained through some examples. If the user specifies only one feature (), then cov.adj can be an unnamed list:

This particular choice includes a constant term and a linear time trend in . If instead multiple features () are used to find the synthetic control weights , then cov.adj allows for feature-specific covariate adjustment. For example, in a two-feature setting (), the code

specifies as a block diagonal matrix where the first block contains a constant term and a trend, while the second block contains only a trend. If the user wants all features to share the same covariate adjustment, then it is sufficient to input a list with a unique element:

This specification creates a block diagonal matrix with identical blocks. In the same example with , if constant <- TRUE and cov.adj <- NULL, then would not be block diagonal, but rather a column vector of ones of size .

Finally, if and form a cointegrated system, by setting the option cointegrated.data to TRUE in scdata(), the matrix is prepared in such a way that the function scpi() will properly handle in-sample and out-of-sample uncertainty quantification (see sections 4.3 and 4.3).

Once all the design matrices and have been created, we can proceed with point estimation/prediction of the counterfactual outcome of interest via the function scest().

The form of the feasibility set in (3.1) or, equivalently, the constraints imposed on the weights , can be set using the option w.constr. The package allows for the following family of constraints:

where the inequality constraint on the norm can be made an equality constraint as well. The user can specify the desired form for through a list to be passed to the option w.constr:

The four lines above create , , , and , respectively. In greater detail,

-

•

p chooses the constrained norm of among the options ‘no norm’, ‘L1’, ‘L2’, or ‘L1-L2’

-

•

dir sets the direction of the constraint and it can be either ‘==’, ‘<=’, or ‘==/<=’

-

•

Q is the size of the constraint and it can be set to any positive real number

-

•

lb sets a (common) lower bound on and it takes as input either 0 or -Inf

Popular constraints can be called explicitly using the option name in the list passed to w.constr. Table 2 gives prototypical examples of such constraints.

| Name | w.constr | |

|---|---|---|

| OLS | list(name = ‘ols’) | |

| simplex | list(name = ‘simplex’, Q = Q) | |

| lasso | list(name = ‘lasso’, Q = Q) | |

| ridge | list(name = ‘ridge’, Q = Q) | |

| L1-L2 | list(name = ‘L1-L2’, Q = Q, Q2 = Q2) |

In particular, specifying list(name = ‘simplex’, Q = 1) gives the standard constraint used in the canonical synthetic control method, that is, computing weights in (3.1) such that they are non-negative and sum up to one, and without including an intercept. This is the default in the function scest() (and scpi()). The following snippet showcases how each of these five constraints can be called automatically through the option name and manually through the options p, Q, Q2, lb, and dir. In the snippet, Q and Q2 are set to 1 for ridge and L1-L2 constraints, respectively, for simplicity, but to replicate the results obtained with the option name one should input the proper Q according to the rules of thumb described further below.

Using the option w.constr in scest() (or scpi()) and the options cov.adj and constant in scdata() appropriately, i.e., setting and in (3.1), many synthetic control estimators proposed in the literature can be implemented. Table 3 provides a non-exhaustive list of such examples.

Tuning parameter choices

We provide rule-of-thumb choices of the tuning parameter for Lasso- and Ridge-type constraints.

-

•

Lasso . Being Lasso similar in spirit to the “simplex”-type traditional constraint in the synthetic control literature, we propose as a rule of thumb.

-

•

Ridge . It is well known that the Ridge prediction problem can be equivalently formulated as an unconstrained penalized optimization problem and as a constrained optimization problem. More precisely, assuming is not used and for simplicity, the two Ridge-type problems are

where is a shrinkage parameter, and

where is the (explicit) size of the constraint on the norm of . Under the assumption of Gaussian errors, a risk-minimizing choice (Hoerl, Kannard and Baldwin, 1975) of the standard shrinkage tuning parameter is

where and are estimators of the variance of the pseudo-true residual and the coefficients based on least squares regression, respectively.

Since the two optimization problems above are equivalent, there exists a one-to-one correspondence between and . For example, assuming the columns of are orthonormal, the closed-form solution for the Ridge estimator is , and it follows that if the constraint on the -norm is binding, then .

However, if , does not exist, hence we cannot rely on the approach suggested above. Indeed, the proposed mapping between and is ill-defined and, also, we are unable to estimate . In this case, we first make the design low-dimensional by performing variable selection on with Lasso. Once we select the columns of whose Lasso coefficient is non-zero, we choose according to the rule of thumb described above.

If more than one feature is specified (), we compute the size of the constraint for each feature and then select as the tightest constraint to favor shrinkage of , that is .

Missing Data

In case of missing values, we adopt different strategies depending on which units have missing entries and when these occur.

-

•

Missing pre-treatment data. In this case we compute without the periods for which there is at least a missing entry for either the treated unit or one of the donors.

-

•

Missing post-treatment donor data. Suppose that the th donor has a missing entry in one of the features in the post-treatment period . It implies that the predictor vector has a missing entry, and thus the synthetic unit and the associated prediction intervals are not available.

-

•

Missing post-treatment treated data. Data for the treated unit after the treatment is only used to quantify the treatment effect , which then will not be available. However, prediction intervals for the synthetic point prediction of the counterfactual outcome , , can still be computed in the usual way as they do not rely on the availability of such data points.

4 Uncertainty Quantification

Following Cattaneo, Feng and Titiunik (2021) and Cattaneo, Feng, Palomba and Titiunik (2022), we view the quantity of interest within the synthetic control framework as a random variable, and hence we refrain from calling it a parameter. Building an analogy with the concept of estimand (or parameter of interest), we refer to as a predictand. Consequently, we prefer to call based on (3.3) a prediction of rather than an estimator of it, and our goal is to characterize the uncertainty of by building prediction intervals rather than confidence intervals. In practice, it is appealing to construct prediction intervals that are valid conditional on a set of observables. We let be an information set generated by all features of control units and covariates used in the synthetic control construction, i.e., , , , and .

We first decompose the potential outcome of the treated unit based on and introduced in (3.2):

| (4.1) |

where is defined by construction. In our analysis, and are assumed to be (possibly) random quantities around which and are concentrating in probability, respectively. Then, the distance between the predicted treatment effect on the treated and the target population one is

| (4.2) |

where is the out-of-sample error coming from misspecification along with any additional noise occurring at the post-treatment period , and the term is the in-sample error coming from the construction of the synthetic control weights. Our goal is to find probability bounds on the two terms separately to give uncertainty quantification: for some pre-specified levels , with high probability over ,

It follows that these probability bounds can be combined to construct a prediction interval for with conditional coverage at least : with high probability over ,

4.1 In-Sample Error

Cattaneo, Feng and Titiunik (2021) provide a principled simulation-based method for quantifying the in-sample uncertainty coming from . Let and be a non-negative diagonal (scaling) matrix of size , possibly depending on the pre-treatment sample size . Since solves (3.1), is the optimizer of the centered criterion function:

where , , and . Recall that the information set conditional on which our prediction intervals are constructed contains and . Thus, can be taken as fixed, and we need to characterize the uncertainty of .

We construct a simulation-based criterion function accordingly:

| (4.3) |

where is some estimate of and represents the normal distribution with mean and variance-covariance matrix . In practice, the criterion function can be simulated by simply drawing normal random vectors .

Since the original constraint set is infeasible, we need to construct a constraint set used in simulation that is close to . Specifically, define the distance between a point and a set by

where is a generic vector norm on with (e.g., Euclidean norm or norm). We require

| (4.4) |

where is an -neighborhood around zero for some . In words, every point in the infeasible constraint set has to be sufficiently close to the feasible constraint set used in simulation. We discuss below a principled strategy for constructing , which allows for both linear and non-linear constraints in the feasibility set. Section 4.3 provides details on how is constructed and implemented in the scpi package.

Given the feasible criterion function and constraint set , we let

conditional on the data. Under mild regularity conditions, for a large class of synthetic control predictands (3.1), with high probability over ,

up to some small loss of the (conditional) coverage probability. Importantly, this conclusion holds whether the data are stationary or non-stationary and whether the model is correctly specified (i.e., ) or not. If constraints imposed are non-linear, an additional adjustment to this bound may be needed to ensure the desired coverage.

4.2 Out-of-Sample Error

The unobserved random variable in (4.1) is a single error term in period , which we interpret as the error from out-of-sample prediction, conditional on . Naturally, in order to have a proper bound on , it is necessary to determine certain features of its conditional distribution . In this section, we outline principled but agnostic approaches to quantify the uncertainty introduced by the post-treatment unobserved shock . Since formalizing the validity of our methods usually requires strong assumptions, we also recommend a generic sensitivity analysis to incorporate out-of-sample uncertainty into the prediction intervals. See Section 4.5 and Section 5, in particular Figure 3 with the corresponding snippet of R code, for further clarifications on how to carry out sensitivity analysis on .

-

•

Approach 1: Non-Asymptotic bounds. The starting point is a non-asymptotic probability bound on via concentration inequalities. For example, suppose that is sub-Gaussian conditional on , i.e., there exists some such that a.s. for all . Then, we can take

In practice, the conditional mean and the sub-Gaussian parameter can be parameterized and/or estimated using the pre-treatment residuals.

-

•

Approach 2: Location-scale model. Suppose that with statistically independent of . This setting imposes restrictions on the distribution of , but allows for a much simpler tabulation strategy. Specifically, we can set the lower bound and upper bound on as follows:

where and are and quantiles of , respectively. In practice, and can be parametrized and estimated using the pre-intervention residuals, or perhaps tabulated using auxiliary information. Once such estimates are available, the appropriate quantiles can be easily obtained using the standardized (estimated) residuals.

-

•

Approach 3: Quantile regression. Another strategy to bound is to determine the and conditional quantiles of , that is,

Consequently, we can employ quantile regression methods to estimate those quantities using pre-treatment data.

Using any of the above methods, we have the following probability bound on :

4.3 Implementation

We now discuss the implementation details. The function scpi(), through various options, allows the user to specify different approaches to quantify in-sample and out-of-sample uncertainty based on the methods described above. Most importantly, scpi() permits modelling separately the in-sample error and the out-of-sample error . In addition, the user can provide bounds on them manually with the options w.bounds and e.bounds, respectively, which can be useful for sensitivity analysis in empirical applications.

Modelling In-Sample Uncertainty

In-sample uncertainty stems from the prediction of , and its quantification reduces to determining and . We first review the methodological proposals for constructing the constraint set used in simulation discussed in Cattaneo, Feng, Palomba and Titiunik (2022), and then present the main procedure for constructing bounds on the in-sample error.

Constructing . Our in-sample uncertainty quantification requires the centered and scaled constraint feasibility set to be locally identical to (or, at least, well approximated by) the constraint set used in simulation described in (4.3), in the sense of (4.4). Suppose that

where and and denote the th constraint in as . Given tuning parameters , , let be the set of indices for the inequality constraints such that . Then, we construct as

In practice, we need to choose possibly heterogeneous parameters , , for different inequality constraints. Our proposed choice of is

for some parameter where denotes the -norm. We estimate according to the following formula if :

where if the data are i.i.d. or weakly dependent, and if and form a cointegrated system, while is one of the following:

with as the default. is the estimated (unconditional) covariance between the pseudo-true residual and the feature of the th control unit , and and are the estimated (unconditional) standard deviation of, respectively, and . In the case of multiple features (), the package employs the same construction above after stacking the data.

Degrees-of-Freedom Correction. Our uncertainty quantification strategy requires an estimator of the conditional variance , which may rely on the effective degrees of freedom of the synthetic control method. In general, there exists no exact correspondence between the degrees of freedom and the number of parameters in a fitting model (Ye, 1998). Therefore, the estimated degrees of freedom are defined according to the chosen constraint sets for underlying the estimation procedure in (3.1):

-

•

OLS. .

-

•

Lasso. Following Zou, Hastie and Tibshirani (2007), an unbiased and consistent estimator of is where is the th element of the constructed weights .

-

•

Simplex. Following the discussion for Lasso, .

-

•

Ridge. Let be singular values of and be the complexity parameter of the corresponding Lagrangian Ridge problem, which satisfies . Then, following Friedman, Hastie and Tibshirani (2001), .

Main procedure. Given the constraint set , the main procedure for computing the upper and lower bounds on the in-sample error is as follows:

-

Step 1.

Estimation of conditional moments of . To estimate and to simulate the criterion function (4.3) we need an estimate of which, in turn, depends on the conditional moments of . To estimate such moments, the user needs to specify three things:

-

i)

whether the model is misspecified or not, via the option u.missp.

-

ii)

how to model , via the options u.order, u.lags, and u.design.

-

iii)

an estimator of , via the option u.sigma.

Given the constructed weights , define regularized weights with for the tuning parameter specified previously. Let , where denotes the matrix composed of the columns of with non-zero regularized weight only. If the option cointegrated.data in scdata() is set to be TRUE, rather than the columns of , we take the first difference of the columns of . If the user inputs u.missp = FALSE, then it is assumed that , whereas if u.missp = TRUE (default), then needs to be estimated.

The unknown conditional expectation is estimated using the fitted values of a flexible linear-in-parameters regression of on a design matrix , which can be provided directly with the option u.design or by specifying the lags of (u.lags) and/or the order of the fully interacted polynomial in (u.order).

For example, if the user specifies u.lags = 1 and u.order = 1, then the design matrix is , where indicates the first lag of . If, instead, u.order = 0 and u.lags = 0 are specified, then , where with , is a vector of ones, and denotes the Kronecker product.

The conditional variance of is estimated as

where , is a sequence of variance-correction constants, which can be chosen among the well-known family of heteroskedasticity-robust variance-covariance estimators through the option u.sigma. In particular, the package currently allows for five choices:

with being the -th diagonal entry of the leverage matrix , , and is a degrees-of-freedom correction factor, whose estimation has been explained before.

-

i)

-

Step 2.

Estimation of . The estimator of is .

-

Step 3.

Simulation. The criterion function in (4.3) is simulated by drawing i.i.d. random vectors from the Gaussian distribution , conditional on the data.

-

Step 4.

Optimization. Let denote the criterion function corresponding to the -th draw from . For each draw , we solve the following constrained problems:

(4.5) where is constructed as explained previously.

-

Step 5.

Estimation of and . Step 4 is repeated times, where can be specified with the option sims. Then, is the quantile of and is the quantile of . The level of can be chosen with the option u.alpha.

Execution Speed and Parallelization. Steps 3 and 4 of the procedure above are the most computationally intensive and we optimize them in two ways. First, to solve the optimization problem in (4.5), scpi relies on ECOS, an efficient solver for conic problems (Domahidi, Chu and Boyd, 2013; Fu, Narasimhan and Boyd, 2020). See Cattaneo, Feng, Palomba and Titiunik (2022) for more details on how to cast the different constrained SC methods into conic optimization problems. To give the reader a sense of the speed improvement, Table 4 compares the execution speed of the conic solver we rely on (first column) with other two popular optimizers in R. The first row of the table reports the median computation time of each optimizer, whereas the second row shows the inter-quartile range. On the one hand, using a conic solver in place of a solver for more generic optimization programs (like nloptr) makes our software 4 times faster. On the other hand, our software is tailored to rewrite the SC problem as a conic problem. This gives a 300-fold gain in speed when compared to CVXR, which relies on ECOS but is meant for prototyping generic optimization problems in conic form.

| ECOS | CVXR | nloptr | |

|---|---|---|---|

| Median | 1.411 | 308.823 | 5.734 |

| IQR | [1.387, 1.431] | [301.183, 315.901] | [5.534, 6.148] |

Notes: The underlying optimization problem is the minimization problem in , where is a simplex-type constraint and and are chosen to replicate the size of the empirical application in Section 5. We evaluate the performance of the function scpi through the R package microbenchmark. This simulation was run using an Apple M2 chip, RAM 8.00 GB.

Second, scpi can be sped up further by efficient parallelization of the tasks performed through the package parallel which assigns different simulations to different cores. If cores are used, the final execution time would be approximately , where is the execution time when a single core is used.

Modelling Out-of-Sample Uncertainty

To quantify the uncertainty coming from , we need to impose some probabilistic structure that allows us to model the distribution and, ultimately, estimate and . We discussed three different alternative approaches: (i) non-asymptotic bounds; (ii) location-scale model; and (iii) quantile regression. The user can choose the preferred way of modeling by setting the option e.method to either ‘gaussian’, ‘ls’, or ‘qreg’.

The user can also choose the information used to estimate (conditional) moments or quantiles of . Practically, we allow the user to specify a design matrix that is then used to run the appropriate regressions depending on the approach requested. By default, we set . Alternatively, the matrix can be provided directly through the option e.design or by specifying the lags of (e.lags) and/or the order of the fully interacted polynomial in (e.order). If the user specifies e.lags = 0 and e.order = 2, then contains , , and all the unique second-order terms generated by the interaction of the columns of . If instead e.order = 0 and e.lags = 0 are set, then and are estimated using the sample average and the sample variance of using the pre-intervention data. Recall that if the option cointegrated.data is set to TRUE, is formed using the first differences of the columns in . Finally, the user can specify with the option e.alpha.

4.4 Simultaneous Prediction Intervals

Up to this point, we focused on prediction intervals that possess high coverage for the individual treatment effect in each period. However, it may be desirable to have prediction intervals that have high simultaneous coverage for several periods, usually known as simultaneous prediction intervals in the literature. In other words, our final goal is to construct a sequence of intervals for some such that with high probability over ,

To construct such intervals, we need to generalize the procedures described above to quantify the in-sample error (Section 4.1) and the out-of-sample error (Section 4.2).

With regard to the in-sample uncertainty, we handle two separate cases. On the one hand, if the constraints in are linear (e.g., simplex or lasso), then

which guarantees that with high probability over

On the other hand, if includes non-linear constraints (e.g., constraints involving the norm), it is necessary to decrease the lower bound and increase the upper bound by some quantity for each . To give an example of what looks like, in the case of ridge-type constraints we have

and see Cattaneo, Feng, Palomba and Titiunik (2022) for more general cases. With regard to the out-of-sample uncertainty, our proposed strategy is a generalization of “Approach 1” in Section 4.2: find and such that with high probability over ,

Suppose that each , , is sub-Gaussian conditional on (not necessarily independent over ) with sub-Gaussian parameters for some . Then, we can take

We can see that, compared to what we had for “Approach 1”, there is an extra term, , which makes the simultaneous prediction intervals longer.

4.5 Sensitivity Analysis

While the three approaches for out-of-sample uncertainty quantification described in Section 4.2 are simple and intuitive, their validity requires potentially strong assumptions on the underlying data generating process that links the pre-treatment and post-treatment data. Such assumptions are difficult to avoid because the ultimate goal is to learn about the statistical uncertainty introduced by a single unobserved random variable after the treatment/intervention is deployed, that is, for some . Without additional data availability, or specific modelling assumptions allowing for transferring information from the pre-treatment period to the post-treatment period, it is difficult to formally construct and using data-driven methods.

We suggest approaching the out-of-sample uncertainty quantification as a principled sensitivity analysis, using the approaches above as a starting point. Given the formal and detailed in-sample uncertainty quantification described previously, it is natural to progressively enlarge the final prediction intervals by adding additional out-of-sample uncertainty to ask the question: how large does the additional out-of-sample uncertainty contribution coming from need to be in order to render the treatment effect statistically insignificant? Using the approaches above, or similar ones, it is possible to construct natural initial benchmarks. For instance, to implement Approach 1, one can use the pre-treatment outcomes or synthetic control residuals to obtain a “reasonable” benchmark estimate of the sub-Gaussian parameter and then progressively enlarge or shrink this parameter to check the robustness of the conclusion. Alternatively, in specific applications, natural levels of uncertainty for the outcomes of interest could be available, and hence used to tabulate the additional out-of-sample uncertainty. We illustrate this approach in Section 5.

5 Empirical Illustration

We showcase the features of the package scpi using real data. For comparability purposes, we employ the canonical dataset in the synthetic control literature on the economic consequences of the 1990 German reunification (Abadie, 2021), and focus on quantifying the causal impact of the German reunification on GDP per capita in West Germany. Thus, we compare the post-reunification outcome for West Germany with the outcome of a synthetic control unit constructed using 16 OECD countries from 1960 to 1990. Using the notation introduced above, we have and . The only feature we exploit to construct the synthetic control is yearly GDP per capita, and we add a constant term for covariate adjustment. Thus and , and . We explore the effect of the reunification from 1991 to 2003, hence . Finally, we treat the time series for West Germany and those countries in the donor pool as a cointegrating system. Given this information, the command scdata() prepares all the matrices needed in the synthetic control framework described above (, , and ), and returns an object that must be used as input in either scest() to predict , or scpi() to conduct inference on .

We first call scdata() to transform any data frame into an object of class “scpi_data”.

After having prepared the data, the next step involves choosing the desired constraint set to construct the vector of weights . We consider the canonical synthetic control method and thus search for optimal weights in . Such constraint set is the default in scest() and, consequently, in scpi(), as the latter internally calls the former to construct . The snippet below illustrates how to call scest() and reports the results displayed in the console with the summary() method.

The next step is uncertainty quantification using scpi(). In this case, we quantify the in-sample and out-of-sample uncertainty the same way, using and as the conditioning set in both cases. To do so, it suffices to set the order of the polynomial in to 1 (u.order <- 1 and e.order <- 1) and not include lags (u.lags <- 0 and e.lags <- 0). Furthermore, by specifying the option u.miss <- TRUE, we take into account that the conditional mean of might differ from 0. This option, together with u.sigma <- "HC1", specifies the following estimator of :

Finally, by selecting e.method <- "gaussian", we perform out-of-sample uncertainty quantification treating as sub-gaussian conditional on and . As a last step, we visualize the constructed synthetic control and compare it with the observed time series for the treated unit, taking advantage of the function scplot().

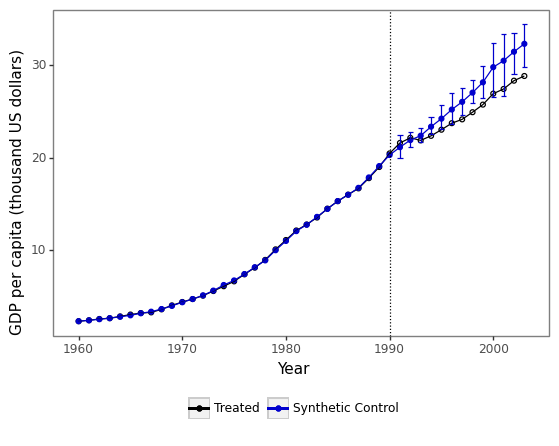

Figure 1 displays the plot resulting from the scplot call. The vertical bars are 90% prediction intervals, where the non-coverage error rate is halved between the out-of-sample and the in-sample uncertainty quantification, i.e. .

Notes: The black line shows the level of the outcome for the treated unit, , , whilst the blue line shows the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds.

We also conduct the same exercise using different choices of (see Table 2). In particular, we construct weights and compute prediction intervals using four other specifications: (i) a lasso-type constraint (Figure 2(a)), (ii) a ridge-type constraint (Figure 2(b)), (iii) no constraint (Figure 2(c)), and (iv) an L1-L2 constraint.

Notes: The black lines show the level of the outcome for the treated unit, , , whilst the blue lines show the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds. In panel (b), , whereas in panel (d) .

Section 4.5 clarified the need for some additional sensitivity analysis when it comes to out-of-sample uncertainty quantification. Figure 3 shows the impact of shrinking and enlarging on the prediction intervals for , , when we assume that is sub-Gaussian conditional on . As shown in the figure, the predicted treatment effect remains different from zero with high probability over even doubling .

Notes: The black horizontal line shows the level of the outcome for the treated unit in 1997, for . The blue bars report 95% prediction intervals for , , that only take into account in-sample uncertainty. The red dashed bars adds the out-of-sample uncertainty to obtain 90% prediction intervals.

Finally, the package offers the possibility to match the treated unit and the synthetic unit using multiple features and the possibility to compute simultaneous prediction intervals. If we want to match West Germany and the synthetic unit not only on GDP per capita but also on trade openness () and include joint prediction intervals, we can simply modify the object scpi_data as follows.

Results are reported in Figure 4, where blue shaded areas depict 90% simultaneous prediction intervals for periods from 1991 to 2004.

Notes: The black line shows the level of the outcome for the treated unit, , , whilst the blue line shows the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds. Blue shaded areas display 90% simultaneous prediction intervals. In panel (c), , whereas in panel (e) .

6 Conclusion

This article introduced the R software package scpi, which implements point estimation/prediction and inference/uncertainty quantification procedures for synthetic control methods. The package is also available in the Stata and Python statistical platforms, as described in the appendices. Further information can be found at https://nppackages.github.io/scpi/.

7 Acknowledgments

We thank Alberto Abadie and Bartolomeo Stellato for many insightful discussions. Cattaneo and Titiunik gratefully acknowledge financial support from the National Science Foundation (SES-2019432), and Cattaneo gratefully acknowledges financial support from the National Institute of Health (R01 GM072611-16).

References

- Abadie (2021) Abadie, A. (2021), “Using Synthetic Controls: Feasibility, Data Requirements, and Methodological Aspects,” Journal of Economic Literature, 59, 391–425.

- Abadie and Cattaneo (2018) Abadie, A., and Cattaneo, M. D. (2018), “Econometric Methods for Program Evaluation,” Annual Review of Economics, 10, 465–503.

- Abadie et al. (2010) Abadie, A., Diamond, A., and Hainmueller, J. (2010), “Synthetic control methods for comparative case studies: Estimating the effect of California’s tobacco control program,” Journal of the American Statistical Association, 105, 493–505.

- Abadie and Gardeazabal (2003) Abadie, A., and Gardeazabal, J. (2003), “The Economic Costs of Conflict: A Case Study of the Basque Country,” American Economic Review, 93, 113–132.

- Abadie and L’Hour (2021) Abadie, A., and L’Hour, J. (2021), “A Penalized Synthetic Control Estimator for Disaggregated Data,” Journal of the American Statistical Association, 116, 1817–1834.

- Amjad et al. (2018) Amjad, M., Shah, D., and Shen, D. (2018), “Robust Synthetic Control,” The Journal of Machine Learning Research, 19, 802–852.

- Arkhangelsky et al. (2021) Arkhangelsky, D., Athey, S., Hirshberg, D. A., Imbens, G. W., and Wager, S. (2021), “Synthetic Difference in Differences,” American Economic Review, 111, 4088–4118.

- Ben-Michael et al. (2022) Ben-Michael, E., Feller, A., and Rothstein, J. (2022), “Synthetic Controls with Staggered Adoption,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), 84, 351–381.

- Boyd and Vandenberghe (2004) Boyd, S., and Vandenberghe, L. (2004), Convex optimization, Cambridge university press.

- Cattaneo et al. (2022) Cattaneo, M. D., Feng, Y., Palomba, F., and Titiunik, R. (2022), “Uncertainty Quantification in Synthetic Controls with Staggered Treatment Adoption,” working paper.

- Cattaneo et al. (2021) Cattaneo, M. D., Feng, Y., and Titiunik, R. (2021), “Prediction Intervals for Synthetic Control Methods,” Journal of the American Statistical Association, 116, 1865–1880.

- Chernozhukov et al. (2021) Chernozhukov, V., Wüthrich, K., and Zhu, Y. (2021), “An Exact and Robust Conformal Inference Method for Counterfactual and Synthetic Controls,” Journal of the American Statistical Association, 116, 1849–1864.

- Domahidi et al. (2013) Domahidi, A., Chu, E., and Boyd, S. (2013), “ECOS: An SOCP solver for embedded systems,” in 2013 European Control Conference (ECC), IEEE, pp. 3071–3076.

- Ferman and Pinto (2021) Ferman, B., and Pinto, C. (2021), “Synthetic Controls with Imperfect Pretreatment Fit,” Quantitative Economics, 12, 1197–1221.

- Friedman et al. (2001) Friedman, J., Hastie, T., and Tibshirani, R. (2001), The Elements of Statistical Learning, Springer, New York.

- Fu et al. (2020) Fu, A., Narasimhan, B., and Boyd, S. (2020), “CVXR: An R Package for Disciplined Convex Optimization,” Journal of Statistical Software, 94, 1–34.

- Hoerl et al. (1975) Hoerl, A. E., Kannard, R. W., and Baldwin, K. F. (1975), “Ridge Regression: Some Simulations,” Communications in Statistics-Theory and Methods, 4, 105–123.

- Hsiao et al. (2012) Hsiao, C., Steve Ching, H., and Ki Wan, S. (2012), “A Panel Data Approach for Program Evaluation: Measuring the Benefits of Political and Economic Integration of Hong Kong with Mainland China,” Journal of Applied Econometrics, 27, 705–740.

- Li (2020) Li, K. T. (2020), “Statistical Inference for Average Treatment Effects Estimated by Synthetic Control Methods,” Journal of the American Statistical Association, 115, 2068–2083.

- Masini and Medeiros (2021) Masini, R., and Medeiros, M. C. (2021), “Counterfactual Analysis with Artificial Controls: Inference, High Dimensions and Nonstationarity,” Journal of the American Statistical Association, 116, 1773–1788.

- Shaikh and Toulis (2021) Shaikh, A. M., and Toulis, P. (2021), “Randomization Tests in Observational Studies With Staggered Adoption of Treatment,” Journal of the American Statistical Association, 116, 1835–1848.

- Wickham (2016) Wickham, H. (2016), ggplot2: Elegant Graphics for Data Analysis, Springer.

- Ye (1998) Ye, J. (1998), “On measuring and Correcting the Effects of Data Mining and Model Selection,” Journal of the American Statistical Association, 93, 120–131.

- Zou et al. (2007) Zou, H., Hastie, T., and Tibshirani, R. (2007), “On the “degrees of freedom” of the lasso,” The Annals of Statistics, 35, 2173–2192.

Appendix A Appendix: Python Illustration

This appendix section shows how to conduct the same analysis carried out in Section 5 using the companion Python package. Figure 5 shows the main results. The L1-L2 constraint is currently not implemented in the Python version of the scpi package due to technical difficulties with the optimizer nlopt. Replication files and data are available at https://nppackages.github.io/scpi/.

Case I:

Notes: The black line shows the level of the outcome for the treated unit, , whilst the blue line shows the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds. In panel (c), , whereas in panel (e) .

Case II:

Notes: The black line shows the level of the outcome for the treated unit, , , whilst the blue line shows the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds. Blue shaded areas display 90% simultaneous prediction intervals. In panel (c), , whereas in panel (e) .

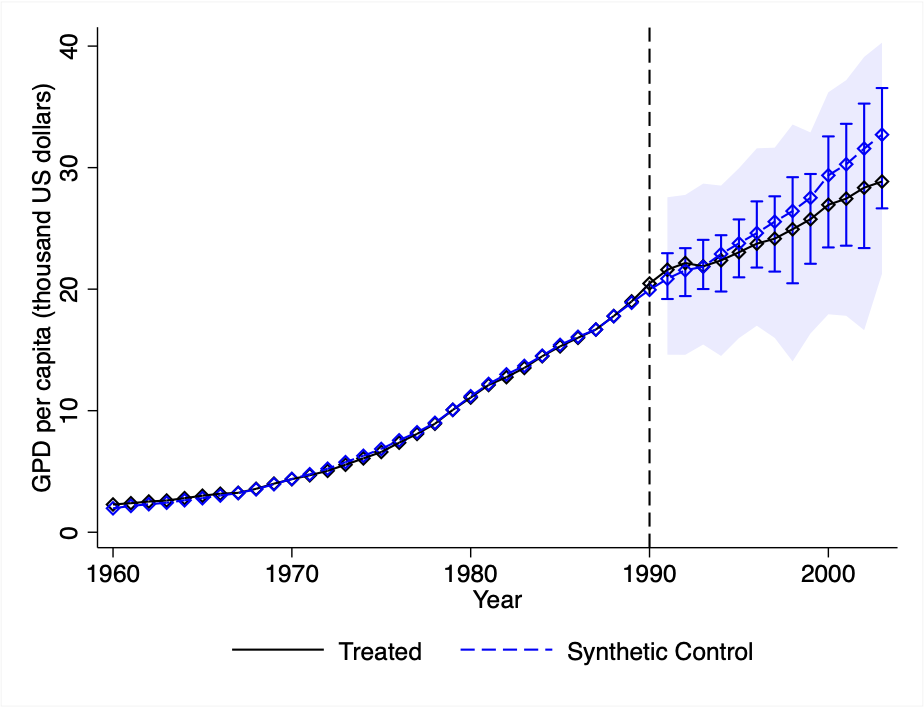

Appendix B Appendix: Stata Illustration

This appendix section replicates the analysis conducted in Section 5 for using the companion Stata package. Main results are shown in Figure 7. The L1-L2 constraint is currently not implemented in the Stata version of the scpi package due to technical difficulties with the optimizer nlopt. Replication files and data are available at https://nppackages.github.io/scpi/.

Case I:

Notes: The black line shows the level of the outcome for the treated unit, , , whilst the blue line shows the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds. In panel (c), , whereas in panel (e) .

Case II:

Notes: The black line shows the level of the outcome for the treated unit, , , whilst the blue line shows the level of the outcome for the synthetic control, , . The blue bars report 90% prediction intervals for . In-sample uncertainty is quantified by means of 1000 simulations of (4.5), whereas out-of-sample uncertainty is quantified through sub-Gaussian bounds. Blue shaded areas display 90% simultaneous prediction intervals. In panel (c), , whereas in panel (e) .