Scheduling Coflows for Minimizing the Makespan in Identical Parallel Networks

Abstract

With the rapid advancement of technology, parallel computing applications have become increasingly popular and are commonly executed in large data centers. These applications involve two phases: computation and communication, which are executed repeatedly to complete the work. However, due to the ever-increasing demand for computing power, large data centers are struggling to meet the massive communication demands. To address this problem, coflow has been proposed as a networking abstraction that captures communication patterns in data-parallel computing frameworks. This paper focuses on the coflow scheduling problem in identical parallel networks, where the primary objective is to minimize the makespan, which is the maximum completion time of coflows. It is considered one of the most significant -hard problems in large data centers. In this paper, we consider two problems: flow-level scheduling and coflow-level scheduling. In the flow-level scheduling problem, distinct flows can be transferred through different network cores, whereas in the coflow-level scheduling problem, all flows must be transferred through the same network core. To address the flow-level scheduling problem, this paper proposes two algorithms: a -approximation algorithm and a -approximation algorithm, where represents the number of network cores. For the coflow-level scheduling problem, this paper proposes a -approximation algorithm. Finally, we conduct simulations on our proposed algorithm and Weaver’s algorithm, as presented in Huang et al. (2020) in the 2020 IEEE International Parallel and Distributed Processing Symposium (IPDPS). We also validate the effectiveness of the proposed algorithms on heterogeneous parallel networks.

Key words: Coflow scheduling, identical parallel networks, makespan, data center, approximation algorithm.

1 Introduction

In recent years, the rapid growth in data volumes and the rise of cloud computing have revolutionized software systems and infrastructure. Numerous applications now dealing with large datasets sourced from diverse origins, presenting a formidable challenge in terms of efficient and prompt data handling. Consequently, the utilization of parallel computing applications has gained significant traction in large-scale data centers, as a means to tackle this pressing concern.

Data-parallel computation frameworks, including widely used ones like MapReduce [12], Hadoop [3], and Dyrad [17], offer the flexibility for applications to seamlessly transition between computation and communication stages. During the computation stage, intermediate data is generated and exchanged between sets of servers via the network. Subsequently, the communication stage involves the transfer of a substantial collection of flows, and the computation stage can only commence once all flows from the previous communication stage have been completed. Nevertheless, traditional networking approaches primarily prioritize optimizing flow-level performance rather than considering application-level performance metrics [18]. Notably, in application-level performance metrics, the completion time of a job is determined solely by the last flow to finish the communication phase, disregarding any flows that may have completed earlier within the same stage. To tackle this issue, Chowdhury and Stoica [9] introduced the concept of coflow abstraction, which takes into account application-level communication patterns for more comprehensive management and optimization.

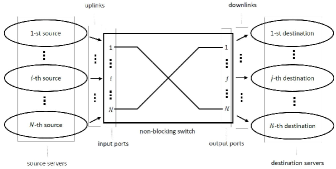

A coflow, as defined by Qiu et al. [18], represents a collection of parallel flows that share a common performance goal. The data center is modeled as a non-blocking switch, depicted in Figure 1, consisting of input and output ports, with the switch serving as a network core. The input ports facilitate data transfer from source servers to the network, while the output ports transfer data from the network to destination servers. Each coflow can be represented by an demand matrix, where each element denotes the data volume transferred from input to output for the corresponding flow . The capacity of all network links is assumed to be uniform, and capacity constraints apply to both input and output ports. The paper focuses on the coflow scheduling problem in identical parallel networks, aiming to minimize the makespan, which refers to the maximum completion time of all coflows. This problem is recognized as one of the most significant -hard problems encountered in large-scale data centers. To address this challenge, the paper introduces approximation algorithms for both flow-level scheduling and coflow-level scheduling problems and evaluates their performance against existing algorithms through simulations.

Previous studies on coflow scheduling problems [18, 20, 21] have predominantly focused on a single-core model. This model has been considered practical due to its utilization of topological designs such as Fat-tree or Clos networks [2, 14], which facilitate the construction of data center networks with full bisection bandwidth. However, as technology trends evolve and computation networks become more intricate, the single-core model becomes insufficient in meeting the evolving requirements. It has been observed that modern data centers often employ multiple generations of networks simultaneously [23] to bridge the gap in network speeds. As a result, our focus shifts towards identical parallel networks, where coflows can be transmitted through multiple identical network cores and processed in parallel. In this paper, the completion time of a coflow is defined as the time taken for the last flow within the coflow to complete. The objective of this paper is to schedule coflows in identical parallel networks, aiming to minimize the makespan, which represents the maximum completion time among all coflows.

This paper addresses the concept of coflow, which encompasses two distinct scheduling problems: flow-level scheduling and coflow-level scheduling. In the flow-level scheduling problem, individual flows can be distributed among multiple network cores for transmission. In contrast, the coflow-level scheduling problem limits the transmission of its constituent flows to a single network core. These two problems, representing different levels of granularity, capture the scheduling complexities associated with coflows.

1.1 Our Contributions

This paper addresses the coflow scheduling problem in identical parallel networks with the objective of minimizing the makespan. In the flow-level scheduling problem, we propose a -approximation algorithm as well as a -approximation algorithm, where represents the number of network cores. Additionally, for the coflow-level scheduling problem, we present a -approximation algorithm.

1.2 Organization

We structure the remaining sections as follows. Section 2 provides an overview of several related works. In Section 3, we present the fundamental notations and preliminaries used in this paper. Our main results are presented in Section 4 and Section 5, where we provide two approximation algorithms for flow-level scheduling in Section 4, and one approximation algorithm for coflow-level scheduling in Section 5. Subsequently, in Section 6, we conduct experiments to evaluate and compare the performance of our proposed algorithms with Weaver’s [16]. Finally, in Section 7, we present our conclusions.

2 Related Work

In the literature, numerous heuristic algorithms have been proposed to tackle coflow scheduling problems, such as those discussed in [10, 11, 15, 22]. Mosharaf et al. [11] introduced a Smallest-Effective-Bottleneck-First heuristic that allocates coflows greedily based on the maximum server loads. They then utilized the Minimum-Allocation-for-Desired-Duration algorithm to assign rates to the corresponding flows. Dian et al. [22] conducted simulations to address the joint problem of coflow scheduling and virtual machine placement. They proposed a heuristic approach aimed at minimizing the completion time of individual coflows. Additionally, Chowdhury et al. [10] presented a scheduler called Coflow-Aware Least-Attained Service, which operates without prior knowledge of coflows.

The concurrent open shop problem has been proven to be -complete to approximate within a factor of for any , in the absence of release times, as demonstrated in [19, 21]. Interestingly, it is worth noting that each concurrent open shop problem can be reduced to a coflow scheduling problem. Consequently, the coflow scheduling problem is also NP-complete to approximate within a factor of for any , when release times are not considered [1, 21].

In the context of minimizing the total weighted completion time in identical parallel networks, Chen [5] proposed several approximation algorithms for the coflow scheduling problem under different conditions. For the flow-level scheduling problem, Chen devised an algorithm that achieved a -approximation with release time and a -approximation without release time, where represents the number of network cores. This algorithm employed an iterative approach to schedule all flows based on the completion time order of coflows, computed using a linear program. Subsequently, the algorithm assigned each flow to the least loaded network core to minimize the flow’s completion time.

Regarding the coflow-level scheduling problem, Chen developed an algorithm that achieved a -approximation with release time and a -approximation without release time. Similar to the approach for divisible coflows, this algorithm also employed an iterative strategy to schedule flows based on the completion time order of coflows, computed using a linear program. Subsequently, the algorithm assigned each coflow to the least loaded network core to minimize the coflow’s completion time.

In the context of coflow scheduling problems with precedence constraints, Chen [6] also proposed two approximation algorithms for the aforementioned four conditions. In these cases, the approximation ratio for each condition, considering precedence constraints, is equal to the approximation ratio for each condition without precedence constraints, multiplied by a factor of . Here, represents the coflow number of the longest path in the precedence graph.

In the domain of coflow scheduling problems for minimizing the total weighted completion time in a single network core, several related works have been proposed [18, 20, 21]. Qiu et al. [18] introduced deterministic approximation algorithms achieving a -approximation and a -approximation with release time and without release time, respectively. Additionally, they obtained randomized approximation algorithms resulting in a -approximation and an -approximation with release time and without release time, respectively. The deterministic and randomized algorithms share a similar framework. They both approached the problem by relaxing it to a polynomial-sized interval-indexed linear program (LP), which provided an ordered list of coflows. Subsequently, the coflows were grouped based on their minimum required completion times from the ordered list. The algorithms scheduled coflows within the same time interval as a single coflow, utilizing matchings obtained through the Birkhoff-von Neumann decomposition theorem. The distinguishing factor between the deterministic and randomized algorithms lies in the selection of the time interval. The deterministic algorithm employed a fixed time point, while the randomized algorithm opted for a random time point. However, Ahmadi et al. [1] discovered that their approaches only yielded a deterministic -approximation algorithm with release time. On the other hand, Shafiee et al. [21] presented the best-known results in recent work, proposing a deterministic -approximation algorithm with release time and a -approximation algorithm without release time. The deterministic algorithm employed a straightforward list scheduling strategy based on the order of the coflows’ completion time, computed using a relaxed linear program that utilized ordering variables.

When addressing the coflow scheduling problem with the objective of minimizing the makespan in heterogeneous parallel networks, Huang et al. [16] proposed an -approximation algorithm called Weaver, where represents the number of network cores. The Weaver algorithm scheduled all flows iteratively based on their size in descending order. Subsequently, it classified the flows into two categories: critical and non-critical. For critical flows, the algorithm selected a network that minimizes the coflow completion time. On the other hand, for non-critical flows, it selected a network to balance the load.

Furthermore, Chen [4] further improved upon the previous results by introducing an -approximation algorithm. As a preprocessing step, Chen modified the makepan scheduling problem instance to contain only a small number of groups. In the first stage of preprocessing, any network cores that were at most times the speed of the fastest network core, where is the number of network cores, were discarded. The second stage of preprocessing involved dividing the remaining network cores into groups based on their similar speeds. Following the preprocessing step, Chen implemented the list algorithm to identify the least loaded network core and assigned flows to it. Additionally, Chen obtained an -approximation algorithm for minimizing the total weighted completion time.

3 Notation and Preliminaries

Our work focuses on the abstraction of identical parallel networks, which is an architecture consisting of identical network cores operating in parallel. We consider the identical parallel networks as a set of giant non-blocking switches, where each switch represents a network core. These switches have input ports and output ports. The input ports are responsible for transferring data from source servers to the network, while the output ports transfer data from the network to destination servers. In the network, there are source servers, where the -th source server is connected to the -th input port of each parallel network core. Similarly, there are destination servers, where the -th destination server is linked to the -th output port of each core. As a result, each source server has synchronized uplinks, and each destination server has synchronized downlinks. We model the network core as a bipartite graph, with the set representing the source servers on one side and the set representing the destination servers on the other side. Capacity constraints apply to both the input and output ports, allowing for the transfer of one data unit per one-time unit through each port. For simplicity, we assume that the capacity of all links within each network core is uniform, meaning that all links within a core have the same speed rate.

A coflow represents a collection of independent flows that share a common performance objective. Let denote the set of coflows. Each coflow can be represented as an demand matrix . It is important to note that each individual flow can be identified by a triple , where represents the source node, represents the destination node, and denotes the corresponding coflow. The size of the flow is denoted as , which corresponds to the -th element of the demand matrix . Each value represents the amount of data transferred by the flow from input to output . Furthermore, in our problem formulation, we assume that the sizes of flows are discrete and represented as integers. To simplify the problem, we consider all flows within a coflow to arrive simultaneously in the system, as described in [18].

Let denote the completion time of coflow . The completion time of a coflow is defined as the time when the last flow in the coflow finishes. Our objective is to schedule coflows in identical parallel networks to minimize the makespan , which represents the maximum completion time among all coflows. Table 1 provides an overview of the notation and terminology utilized in this paper.

| Symbol | Meaning |

|---|---|

| The number of network cores. | |

| The number of input/output ports. | |

| The number of coflows. | |

| The set of network cores. | |

| The source sever set. | |

| The destination server set. | |

| The set of coflows. | |

| The set of flows from all coflows . | |

| The demand matrix of coflow . | |

| The size of the flow to be transferred from input to output in coflow . | |

| is the total amount of data that coflow has to transfer through input port , and is the total amount of data that coflow has to transfer through output port . | |

| The speed factor of network core . | |

| The completion time of coflow . | |

| The makespan, the maximum of the completion time of coflows. |

4 Approximation Algorithm for flow-level Scheduling

In this section, we specifically address the scenario where coflows are considered in flow level, allowing for the transfer of individual flows through different network cores. Our focus is on a solution that operates at the flow level, prohibiting flow splitting. This means that data belonging to the same flow can only be assigned to a single network core (as discussed in [16]).

4.1 Algorithm

In this subsection, we introduce two algorithms for the flow-level scheduling problem. One is flow-list-scheduling (FLS) described in Algorithm 1, and the other is flow-longest-processing-time-first-scheduling (FLPT) described in Algorithm 2. The algorithm referred to as FLS (Algorithm 1) is outlined below. Let represent the set of flows obtained from all coflows within the coflow set . For each flow , our algorithm examines all flows that share congestion with and are scheduled prior to . Subsequently, flow is assigned to the core with the least workload, thereby minimizing the completion time of flow . Lines 6-11 determine the core with the minimum load and assign the flow to it. In terms of time complexity, Algorithm 1 (FLS) involves scanning each flow in (line 6 in Algorithm 1), which amounts to iterations. For each flow, a comparison is made among cores to identify the least loaded core (line 7 in Algorithm 1). Consequently, the time complexity of FLS is .

The algorithm known as FLPT (Algorithm 2) is presented below. It should be noted that Algorithm 2 is nearly identical to Algorithm 1. The key difference lies in line 6 of the algorithm. Specifically, lines 6-11 involve sorting the flows in non-increasing order based on the value of . Subsequently, the algorithm identifies the core with the least workload and assigns the flow to it. In terms of time complexity, Algorithm 2 (FLPT) begins by spending a runtime complexity of to sort the flows (line 6 in Algorithm 2). Afterwards, FLPT follows the same procedure as FLS. Consequently, the time complexity of FLPT can be expressed as .

4.2 Analysis

This subsection shows that Algorithm 1 achieves -approximation ratio and Algorithm 2 achieves -approximation ratio, where is the number of network cores. An intuitive lower bound on the optimal solution cost is

| (1) |

where , and . First, the following lemma for Algorithm 1 is obtained:

Lemma 1.

Let be the cost of an optimal solution, and let denote the makespan in the schedule found by FLS (Algorithm 1). Then,

Proof.

Let be the flow set of input port , be the flow set of output port . According to the lower bound (1), we know that

| (2) | |||||

| (3) | |||||

| (4) |

Assume that the last flow in the schedule of FLS is the flow , and the flow is sent via link . We have

| (5) | |||||

| (7) |

The concept of inequality (5) is similar to the proof of list scheduling in [24]. The inequality (4.2) is due to inequalities (2) and (3). The inequality (7) is based on the inequality (4), where . ∎

Theorem 1.

FLS (Algorithm 1) has an approximation ratio of , where is the number of network cores.

Next, this paper shows that Algorithm 2 has a better approximation ratio than Algorithm 1. We consider the worst case that one flow will affect other flows at input port and output port on the same core, then other affected flows will keep affecting others. This causes all flows on the same core can not be sent from input port to output port in parallel. In other words, the load of combining input port and output port of each core is the sum of all flows on core . Therefore, the following lemma for Algorithm 2 is obtained:

Lemma 2.

Let be the cost of an optimal solution, and let denote the makespan in the schedule found by FLPT (Algorithm 2). Then,

Proof.

Assume that the last flow in the schedule of FLPT is the flow . Considering the flows , they are sorted in non-increasing order of the size of flow, i.e., . Assume that all flows , they are sorted in non-increasing order of the size of flow, too, i.e., . Since flows do not change the value of , we can omit them. Therefore, flow is viewed as the latest and the smallest flow.

Based on the discussion above, our notations can be defined. Let be the set of flows , where is the latest and the smallest flow in the schedule of FLPT. Considering the worst case, let be the optimal time of the scheduling solution for all flows on input port and output port , where no two flows can be transmitted simultaneously on the same core. According Graham’s bound [13], we have

Let be the cost of an optimal solution only for port , and be the optimal solution only for port . Note that must be held, otherwise, we can construct a solution by using and , which is better than the optimal solution . Since , . Finally, we have

∎

Theorem 2.

FLPT (Algorithm 2) has an approximation ratio of , where is the number of network cores.

5 Approximation Algorithm for Coflow-level Scheduling

This section considers the coflow-level scheduling problem, where distinct flows in a coflow are allowed to be transferred through the same core only. Let be the total amount of data that coflow has to transfer through input port , and be the total amount of data that coflow has to transfer through output port .

5.1 Algorithm

This subsection introduces an algorithm for solving the coflow-level scheduling problem. The algorithm, called coflow-list-scheduling (CLS) and described in Algorithm 3, aims to assign each coflow to a core in order to minimize the completion time of coflow . Lines 6-11 of the algorithm identify the core with the minimum maximum completion time and assign the coflow to it.

In terms of time complexity, Algorithm 3 scans each coflow in (line 6), resulting in iterations. For each coflow, the algorithm compares cores to find the least loaded core (line 7). Additionally, for each core, pairs of input and output ports are compared to determine the maximum completion time (line 7). Consequently, the time complexity of CLS is .

5.2 Analysis

This section paper shows that Algorithm 3 achieves -approximation ratio, where is the number of network cores. First, the following lemma for Algorithm 3 is obtained:

Lemma 3.

Let be the cost of an optimal solution, and let denote the makespan in the schedule found by CLS (Algorithm 3). Then,

Proof.

Theorem 3.

CLS (Algorithm 3) has an approximation ratio of , where is the number of network cores.

6 Experiments

This section presents the simulation results and evaluates the performance of our proposed algorithms. Additionally, this paper compares the performance of our proposed algorithms for the flow-level scheduling problem with the algorithm Weaver proposed by Huang et al. [16]. Furthermore, the experiment demonstrates that our results align with the approximation ratios analyzed in sections 4 and 5.

6.1 Workload

We have implemented a flow-level simulator to track the assignment of each flow to various cores in both identical parallel networks and heterogeneous parallel networks. Our simulator is based on Mosharaf’s implementation [8], which originally simulates coflows on a single core. To track coflows assigned to cores, we have modified the code so that our simulator traces flows times for all cores. Additionally, we have incorporated Shafiee and Ghaderi’s algorithm [21] to ensure that all flows are transferred in a preemptible manner within each core. Moreover, each link in our simulator has a capacity of 128 MBps. We have chosen the time unit to be second (approximately 8 milliseconds) so that each link has a capacity of 1 MB per time unit.

In our study, all algorithms are simulated using both synthetic and real traffic traces. In synthetic traces, coflows are generated based on the number of coflows, denoted as , and the number of ports, denoted as . Each coflow is described by , where and , for all . Let represent the number of non-zero flows within each coflow. Then, , where and are randomly chosen from the set . Additionally, is randomly assigned to input links, and is randomly assigned to output links. The size of flow is randomly selected from . If the construction of a coflow is not explicitly specified in the synthetic traces, the default construction for all coflows follows a certain distribution of coflow descriptions: , , , and with percentages of , , , and respectively.

In real traces, coflows are generated from a realistic workload based on a Hive/MapReduce trace [7] obtained from Facebook, which was collected from a 3000-machine setup with 150 racks. These real traces have been widely used as benchmarks in various works, such as [11, 16, 18, 21]. The purpose of this benchmark is to provide realistic workloads synthesized from real-world data-intensive applications for the evaluation of coflow-based solutions. Since this paper does not consider release times, the release time for all coflows is set to 0.

In order to assess the performance of the algorithms, we calculate the approximation ratio. For identical parallel networks, the ratio is obtained by dividing the makespan achieved by the algorithms by the lower bound of the optimal value:

where represents the number of network cores. This lower bound value provides an estimate of the optimal makespan.

For heterogeneous parallel networks, the ratio of algorithms is calculated by dividing the makespan obtained from the algorithms by another lower bound of the optimal value:

In this case, represents the speed factor of core . In a heterogeneous parallel network with network cores, is randomly selected from the range , where is a heterogeneity factor. Higher values of indicate lower variance in network core speeds. This lower bound estimation takes into account the varying speeds of the cores.

By comparing the achieved makespan with these lower bound estimates, we can evaluate the performance of the algorithms in both identical and heterogeneous parallel networks.

6.2 Simulation Results in Identical Parallel Networks

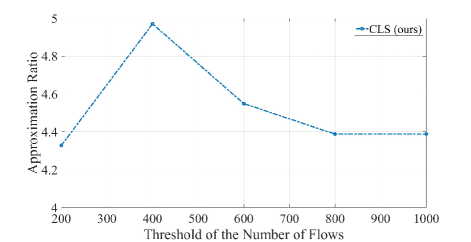

Figure 2 illustrates the algorithm ratios of FLS, FLPT, Weaver, and CLS for different thresholds of the number of flows in identical parallel networks. The real traces consist of 526 coflows distributed across network cores with input/output links. Among all the coflows, the maximum number of flows is 21170, while the minimum number is 1. Additionally, the maximum flow size is 2472 MB, and the minimum size is 1 MB. Similar to the approach described in [21], we set a threshold to filter coflows based on the number of non-zero flows. Coflows with a number of flows below the threshold are filtered out. We consider five collections filtered using the following thresholds: 200, 400, 600, 800, and 1000.

Subsequent experiments reveal that when there is a large number of coflows or sparse demand matrices, FLPT and Weaver demonstrate very similar performance. Consequently, in Figure 2(a), FLPT exhibits the same ratio as Weaver. Additionally, CLS matches the ratio of as depicted in Figure 2(b).

Figure 3 illustrates the algorithm ratios of FLS, FLPT, Weaver, and CLS for different numbers of network cores in identical parallel networks. In this synthetic trace, we consider coflows across 5 scenarios with varying numbers of network cores and input/output links. Each scenario represents a distinct number of cores, namely . For each scenario, we generate 100 sample traces and report the average performance of the algorithms.

The results demonstrate that FLPT consistently outperforms Weaver in terms of the ratio, as depicted in Figure 3(a). Furthermore, as the number of cores increases, the improvement provided by FLPT becomes more significant. Additionally, the ratio of CLS exhibits an increasing trend with the growing number of cores, as illustrated in Figure 3(b). This result aligns with the theoretical analysis.

Figure 4 illustrates the algorithm ratios of FLS, FLPT, Weaver, and CLS for different numbers of network coflows in an identical parallel network. In this synthetic trace, we consider cores with input/output links across 5 scenarios with varying numbers of coflows. Each scenario represents a distinct number of coflows: . For each scenario, we generate 100 sample traces and report the average performance of the algorithms.

The results demonstrate that FLPT outperforms Weaver in terms of the approximation ratio, as depicted in Figure 4(a). Furthermore, as the number of coflows increases, Weaver’s performance approaches that of FLPT. Moreover, the ratio of CLS decreases as the number of coflows increases, as shown in Figure 4(b). This indicates that the algorithm performs better with a larger number of coflows.

Figure 5 illustrates the approximation ratio of FLS, FLPT, Weaver, and CLS for dense and combined instances, as described in [21]. These instances are deployed in an identical parallel network. The synthetic trace consists of two sets of 25 coflows, each utilizing network cores and input/output links. One set represents a dense instance, while the other represents a combined instance. To create dense and combined instances, we define dense and sparse coflows. In a dense coflow, the coflow description is set to . On the other hand, a sparse coflow has the description . Therefore, in a dense instance, each coflow is dense, whereas in a combined instance, each coflow has an equal probability of being dense or sparse.

We generate 100 sample traces for each instance and present the average performance of the algorithms. The results show that FLPT outperforms Weaver in both dense and combined instances, as depicted in Figure 5(a). The improvement is more significant in the case of dense instances. Moreover, the ratio of dense instances is superior to that of combined instances, as shown in Figure 5(a) and Figure 5(b).

| FLS | FLPT | Weaver | CLS | |

|---|---|---|---|---|

| 1.4692 | 1.3310 | 1.4597 | 7.2222 | |

| 1.5671 | 1.4109 | 1.5655 | 7.7068 | |

| 1.6418 | 1.4956 | 1.6659 | 8.9244 | |

| Maximum | 1.9551 | 1.7989 | 2.0372 | 11.5818 |

| Minimum | 1.2529 | 1.1764 | 1.1679 | 5.8152 |

Figure 6 presents a box plot showing the performance of FLS, FLPT, Weaver, and CLS in an identical parallel network. The synthetic trace used for this analysis consists of coflows deployed in a network with cores and input/output links. We conducted 100 sample traces for each algorithm and represented the results using a box plot. The plot includes quartiles, maximum and minimum values for each algorithm. The findings demonstrate that FLPT not only achieves a superior ratio compared to Weaver but also exhibits a narrower interquartile range. This is evident in Figure 6 and summarized in Table 2. In Figure 6(b), it can be observed that the approximation ratio of CLS, as well as its interquartile range, increase as the number of cores grows.

Figure 7 displays the cumulative distribution function (CDF) of core completion time for FLS, FLPT, Weaver, and CLS in an identical parallel network. The synthetic trace used in this analysis involves 15 coflows operating in a network with cores and input/output links. The results indicate that FLPT achieves core completion before 29.320 seconds for all cores, while both FLS and Weaver achieve completion before 30.872 seconds, as illustrated in Figure 7(a). Additionally, CLS completes the core processing before 64.592 seconds for all cores, as depicted in Figure 7(b).

6.3 Simulation Results in Heterogeneous Parallel Networks

The algorithm proposed in this paper can be adapted for scheduling in heterogeneous parallel networks. The pseudocode of the algorithms is provided in the APPENDIX. The following are the simulation results of the algorithms in heterogeneous parallel networks.

Figure 8 illustrates the approximation ratio of FLPT and Weaver for different numbers of cores in heterogeneous parallel networks. The analysis is based on a synthetic trace comprising 25 coflows in five scenarios with varying numbers of network cores, while maintaining input/output links. In Figure 8(a), we set to 5, while in Figure 8(b), we set to 1. For each scenario, we generate 100 sample traces and report the average performance of the algorithms.

The findings demonstrate that as the number of cores increases, the approximation ratio also increases. Furthermore, as the number of cores increases, the performance gap between FLPT and Weaver widens. These observations align with the results observed in identical parallel networks. In Figure 8(b), the approximation ratio of CLS increases with the number of cores and decreases with the number of coflows. This result is also consistent with the findings observed in identical parallel networks.

Figure 9 illustrates a box plot displaying the performance of FLS, FLPT, Weaver, and CLS in heterogeneous parallel networks. The synthetic trace used in this analysis involves coflows operating in a network with cores and input/output links. For this scenario, we set as 5 and generated 100 sample traces for each algorithm. The box plot includes quartiles, maximum and minimum values for each algorithm. The results reveal that FLPT not only achieves a superior ratio compared to Weaver but also exhibits a narrower interquartile range. This is demonstrated in Figure 9(a) and summarized in Table 3. In Figure 9(b), it can be observed that the approximation ratio of CLS, as well as its interquartile range, increase as the number of cores grows. These results are consistent with those observed in identical parallel networks.

| FLPT | Weaver | CLS () | |

|---|---|---|---|

| 1.4556 | 1.5420 | 7.6049 | |

| 1.5486 | 1.6404 | 8.2986 | |

| 1.6340 | 1.8241 | 9.0896 | |

| Maximum | 1.8936 | 2.1244 | 11.1368 |

| Minimum | 1.3136 | 1.3510 | 5.9693 |

Figure 10 displays the approximation ratio of FLPT, Weaver, and CLS for different heterogeneity factors in heterogeneous parallel networks. The analysis is based on a synthetic trace comprising 25 coflows and cores with input/output links in five scenarios with varying heterogeneity factors.

For each scenario, we generated 100 sample traces and reported the average performance of the algorithms. When the heterogeneity increases (with ), FLPT and Weaver exhibit similar performance. However, when the heterogeneity decreases (with ), the performance gap between FLPT and Weaver widens. Overall, FLPT outperforms Weaver in terms of performance. Regarding CLS, there is no significant difference in performance based on heterogeneity factors. However, the number of cores has a greater impact on the performance of CLS.

7 Conclusion

This paper focuses on addressing the problem of coflow scheduling with the objective of minimizing the makespan of all network cores. We propose three algorithms that achieve approximation ratios of and for the flow-level scheduling problem, and an approximation ratio of for the coflow-level scheduling problem in identical parallel networks.

To evaluate the performance of our algorithms, we conduct experiments using both real and synthetic traffic traces, comparing them against Weaver’s algorithm. Our experimental results demonstrate that our algorithms outperform Weaver’s in terms of approximation ratio. Furthermore, we extend our evaluation to include heterogeneous parallel networks and find that the results align with those obtained in identical parallel networks.

As part of future work, we can explore additional constraints such as deadline constraints and consider alternative objectives such as tardiness objectives. Additionally, the problem of bandwidth allocation, which was not addressed in this paper, presents an interesting research direction. We anticipate further exploration of extended problems that arise from multiple parallel networks, as they play a crucial role in achieving quality of service.

Appendix A Algorithms for Heterogeneous Parallel Networks

In an extension of Algorithm 2, the FLPT algorithm can be adapted to handle heterogeneous parallel networks. The modified algorithm, referred to as FLPT-h (Algorithm 4), is outlined below. Let denote the speed factor of core . The load of flow on core is given by . Algorithm 4 closely resembles Algorithm 2, with the only difference occurring at lines 7, 9, and 10. In line 7, we identify a core that minimizes the completion time of flow . In lines 9-10, the values of and are updated with if flow is assigned to core .

In an extension of Algorithm 3, the CLS algorithm also can be adapted to handle heterogeneous parallel networks. The modified algorithm, referred to as CLS-h (Algorithm 5), is outlined below. The load of coflow on core is given by and for all and , respectively. In line 7, we identify a core that minimizes the completion time of coflow . In lines 9-10, the values of and are updated with and , respectively.

References

- [1] S. Ahmadi, S. Khuller, M. Purohit, and S. Yang, “On scheduling coflows,” Algorithmica, vol. 82, no. 12, pp. 3604–3629, 2020.

- [2] M. Al-Fares, A. Loukissas, and A. Vahdat, “A scalable, commodity data center network architecture,” ACM SIGCOMM computer communication review, vol. 38, no. 4, pp. 63–74, 2008.

- [3] D. Borthakur, “The hadoop distributed file system: Architecture and design,” Hadoop Project Website, vol. 11, no. 2007, p. 21, 2007.

- [4] C.-Y. Chen, “Scheduling coflows for minimizing the total weighted completion time in heterogeneous parallel networks,” arXiv preprint arXiv:2204.07799, 2022.

- [5] C. Chen, “Scheduling coflows for minimizing the total weighted completion time in identical parallel networks,” CoRR, vol. abs/2204.02651, 2022. [Online]. Available: https://doi.org/10.48550/arXiv.2204.02651

- [6] C.-Y. Chen, “Scheduling coflows with precedence constraints for minimizing the total weighted completion time in identical parallel networks,” arXiv preprint arXiv:2205.02474, 2022.

- [7] M. Chowdhury, “Coflow-benchmark,” https://github.com/coflow/coflow-benchmark.

- [8] ——, “Coflowsim,” https://github.com/coflow/coflowsim.

- [9] M. Chowdhury and I. Stoica, “Coflow: A networking abstraction for cluster applications,” in Proceedings of the 11th ACM Workshop on Hot Topics in Networks, 2012, pp. 31–36.

- [10] ——, “Efficient coflow scheduling without prior knowledge,” ACM SIGCOMM Computer Communication Review, vol. 45, no. 4, pp. 393–406, 2015.

- [11] M. Chowdhury, Y. Zhong, and I. Stoica, “Efficient coflow scheduling with varys,” in Proceedings of the 2014 ACM conference on SIGCOMM, 2014, pp. 443–454.

- [12] J. Dean and S. Ghemawat, “Mapreduce: simplified data processing on large clusters,” Communications of the ACM, vol. 51, no. 1, pp. 107–113, 2008.

- [13] R. L. Graham, “Bounds on multiprocessing timing anomalies,” SIAM Journal on Applied Mathematics, vol. 17, no. 2, pp. 416–429, 1969. [Online]. Available: https://doi.org/10.1137/0117039

- [14] A. Greenberg, J. R. Hamilton, N. Jain, S. Kandula, C. Kim, P. Lahiri, D. A. Maltz, P. Patel, and S. Sengupta, “Vl2: A scalable and flexible data center network,” in Proceedings of the ACM SIGCOMM 2009 conference on Data communication, 2009, pp. 51–62.

- [15] A. Hasnain and H. Karl, “Coflow scheduling with performance guarantees for data center applications,” in 2020 20th IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGRID). IEEE, 2020, pp. 850–856.

- [16] X. S. Huang, Y. Xia, and T. E. Ng, “Weaver: Efficient coflow scheduling in heterogeneous parallel networks,” in 2020 IEEE International Parallel and Distributed Processing Symposium (IPDPS). IEEE, 2020, pp. 1071–1081.

- [17] M. Isard, M. Budiu, Y. Yu, A. Birrell, and D. Fetterly, “Dryad: distributed data-parallel programs from sequential building blocks,” in Proceedings of the 2nd ACM SIGOPS/EuroSys European Conference on Computer Systems 2007, 2007, pp. 59–72.

- [18] Z. Qiu, C. Stein, and Y. Zhong, “Minimizing the total weighted completion time of coflows in datacenter networks,” in Proceedings of the 27th ACM symposium on Parallelism in Algorithms and Architectures, 2015, pp. 294–303.

- [19] S. Sachdeva and R. Saket, “Optimal inapproximability for scheduling problems via structural hardness for hypergraph vertex cover,” in 2013 IEEE Conference on Computational Complexity. IEEE, 2013, pp. 219–229.

- [20] M. Shafiee and J. Ghaderi, “Scheduling coflows in datacenter networks: Improved bound for total weighted completion time,” ACM SIGMETRICS Performance Evaluation Review, vol. 45, no. 1, pp. 29–30, 2017.

- [21] ——, “An improved bound for minimizing the total weighted completion time of coflows in datacenters,” IEEE/ACM Transactions on Networking, vol. 26, no. 4, pp. 1674–1687, 2018.

- [22] D. Shen, J. Luo, F. Dong, and J. Zhang, “Virtco: joint coflow scheduling and virtual machine placement in cloud data centers,” Tsinghua Science and Technology, vol. 24, no. 5, pp. 630–644, 2019.

- [23] A. Singh, J. Ong, A. Agarwal, G. Anderson, A. Armistead, R. Bannon, S. Boving, G. Desai, B. Felderman, P. Germano, et al., “Jupiter rising: A decade of clos topologies and centralized control in google’s datacenter network,” ACM SIGCOMM computer communication review, vol. 45, no. 4, pp. 183–197, 2015.

- [24] D. P. Williamson and D. B. Shmoys, Greedy Algorithms and Local Search. Cambridge University Press, 2011, p. 27–56.