
Scalar auxiliary variable approach for conservative/dissipative partial differential equations with unbounded energy

Abstract

In this paper, we present a novel investigation of the so-called SAV approach, which is a framework for constructing linearly implicit geometric numerical integrators for partial differential equations with variational structure. The SAV approach was originally proposed for gradient flows that have lower-bounded nonlinear potentials, such as the Allen-Cahn and Cahn-Hilliard equations, and this assumption on the energy was essential. In this paper, we propose a novel approach to address gradient flows with unbounded energy, such as the KdV equation, by a decomposition of energy functionals. Further, we show that the equation of the SAV approach, which is a system of equations with scalar auxiliary variables, can be expressed as another gradient system that inherits the variational structure of the original system. This expression allows us to construct novel higher-order integrators by a certain class of Runge-Kutta methods. We propose second and fourth order schemes for conservative systems in our framework and present several numerical examples.

1 Introduction

In this paper, we consider geometric numerical integration of Partial Differential Equations (PDEs) of the form

| (1) |

where is a skew-adjoint or negative semidefinite operator defined over an appropriate Hilbert space , is the Fréchet derivative of a functional , and denotes the temporal derivative. (The details of the setting will be described later.) The class of PDEs of the form (1) includes many physically important equations, e.g., the Korteweg–de Vries equation, the Camassa–Holm equation [7], the Cahn–Hilliard equation [5] and the Swift–Hohenberg equation [35]. This paper is devoted to a novel investigation of the SAV approach for these equations, which is a framework for constructing linearly implicit geometric numerical integrators recently proposed in [33].

A prominent property of the above PDEs is a conservation/dissipation law:

| (2) |

where denotes the inner-product of . Therefore, for these PDEs, countless numerical schemes inheriting the conservation/dissipation law have been considered in the literature.

In particular, there are some unified approaches to construct such schemes. The discrete gradient method [18, 30, 31] was originally devised for conservative/dissipative ODEs, and is now also used for PDEs of the form (1) [8]. On the other hand, Furihata [13] independently proposed the Discrete Variational Derivative Method (DVDM) (see also [15]) for variational PDEs, which is now recognized as a combination of the discrete gradient method and an appropriate spatial discretization. The schemes based on the discrete gradient method (or DVDM) are often superior to general-purpose methods, in particular for long-time computations or numerically tough problems. Thus, they have been applied to many PDEs (see [14] and references therein).

However, at the price of their superiority, these schemes are unavoidably fully implicit and thus computationally expensive. Therefore, several remedies have been discussed in the literature. Some researchers have constructed structure-preserving and linearly implicit schemes for specific PDEs; for example, Besse [3] and Zhang, Pérez-García, and Vázquez [39] devised such schemes for the nonlinear Schrödinger equation. Moreover, for polynomial energy functions, Matsuo and Furihata [28] proposed a multistep linearly implicit version of the DVDM (see also Dahlby and Owren [11]).

Most recently, for energy functions bounded below, Yang and Han [37] proposed an Invariant Energy Quadratization (IEQ) approach (see also [20, 36, 41]). A typical target of the IEQ approach is the Allen–Cahn equation [2]

| (3) |

where is a potential function. Since is non-negative, we can introduce an auxiliary function (: a positive constant), and rewrite the Allen–Cahn equation (3). The reformulated one has a dissipation law of the modified energy . Since the modified energy is quadratic, we can easily construct dissipative schemes (see, e.g., [16, 40]). For example, we can use the implicit midpoint rule and its extensions, Gauss methods (see, e.g., [19, Chapter IV]). Moreover, it is possible to construct linearly implicit schemes (see, e.g., [17, 20, 36, 37, 41]).

Though the IEQ approach is successful, it is still somewhat expensive due to the presence of the auxiliary function. To overcome this, Shen, Xu, and Yang [33] proposed a Scalar Auxiliary Variable (SAV) approach (see also [34]). There, instead of the auxiliary function in the IEQ approach, a scalar auxiliary variable is introduced, where . Then the Allen–Cahn equation (3) can be rewritten in the form

| (4) |

Again, since the modified energy is quadratic, we can construct dissipative schemes in several ways. The SAV approach often provides quite efficient numerical schemes: in addition to yielding a smaller system than the IEQ approach, the principal cost of each step is solving two linear equations with the same constant coefficient matrix.
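To make the cost claim concrete, the following is a minimal sketch of a first-order SAV time stepper for a one-dimensional Allen–Cahn equation. Several choices here are assumptions that the text above does not fix: the equation is taken as u_t = eps^2 u_xx - F'(u) with F(u) = (u^2 - 1)^2 / 4, space is discretized by periodic second differences, and the names `sav_allen_cahn`, `eps`, and `c0` are purely illustrative. Each step performs two solves with the same matrix I + dt L and combines them with the Sherman–Morrison formula.

```python
import numpy as np

def sav_allen_cahn(u0, eps, dt, steps, length=2 * np.pi, c0=1.0):
    """First-order SAV steps for u_t = eps^2 u_xx - F'(u), F(u) = (u^2 - 1)^2 / 4.

    Assumed setting: periodic grid, scalar variable r = sqrt(E1(u) + c0) with
    E1(u) = h * sum(F(u)), modified energy 0.5 * (u, L u) + r^2.
    """
    n = u0.size
    h = length / n
    eye = np.eye(n)
    # Periodic second-difference matrix; L = -eps^2 * Laplacian is symmetric PSD.
    lap = (np.roll(eye, 1, axis=0) - 2.0 * eye + np.roll(eye, -1, axis=0)) / h**2
    L = -eps**2 * lap
    A = eye + dt * L                      # constant coefficient matrix
    ip = lambda a, b: h * np.dot(a, b)    # discrete L^2 inner product

    u = u0.astype(float).copy()
    r = np.sqrt(h * np.sum((u**2 - 1.0) ** 2 / 4.0) + c0)
    energies = [0.5 * ip(u, L @ u) + r**2]
    for _ in range(steps):
        e1 = h * np.sum((u**2 - 1.0) ** 2 / 4.0)
        b = (u**3 - u) / np.sqrt(e1 + c0)          # F'(u^n) / sqrt(E1(u^n) + c0)
        g = u - dt * r * b + 0.5 * dt * ip(b, u) * b
        p = np.linalg.solve(A, g)                  # first solve
        q = np.linalg.solve(A, b)                  # second solve, same matrix
        bu = ip(b, p) / (1.0 + 0.5 * dt * ip(b, q))  # (b, u^{n+1})
        u_new = p - 0.5 * dt * bu * q
        r = r + 0.5 * (bu - ip(b, u))              # r^{n+1} - r^n = (b, u^{n+1} - u^n) / 2
        u = u_new
        energies.append(0.5 * ip(u, L @ u) + r**2)
    return u, r, np.array(energies)
```

In this sketch the modified energy stored in `energies` is non-increasing for any step size, while the scalar `r` is only an approximation of sqrt(E1(u) + c0) after the first step.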

Due to its attractive properties, the SAV approach has been intensively studied in recent years. It has been applied to many PDEs, for example, the two-dimensional sine-Gordon equation [6], the fractional nonlinear Schrödinger equation [12], the Camassa–Holm equation [21], and the imaginary time gradient flow [42]. Shen and Xu [32] and Li, Shen and Rui [22] conducted a convergence analysis of SAV schemes. Akrivis, Li, and Li [1] devised and analyzed linear and high order SAV schemes. Moreover, the SAV approach has been extended in several ways, for example, multiple SAV [10] and generalized Positive Auxiliary Variable (gPAV) [38].

Nevertheless, at least two issues remain with the SAV approach. First, the essential assumption “the energy functional is bounded below” is restrictive. In particular, when we deal with conservative PDEs, we often encounter an unbounded energy functional: for example, the KdV equation

| (5) |

Second, the scalar auxiliary variable in the modified equation (4) seems to be introduced in an unnatural way. As a consequence of this unclear derivation, the construction of resulting structure-preserving schemes is ad hoc.

There are some attempts to resolve the first problem [4, 9, 23, 24, 25, 26, 27]. The strategy of these studies is to change the definition of the scalar auxiliary variable. For example, in some of them, the authors replace the square-root function in the definition of with another function, such as the exponential function. Then, the auxiliary variable is always well-defined and thus the assumption on the energy functional can be removed. Although these studies successfully overcome the first problem, the second issue, namely the natural derivation of the SAV schemes, still remains.

The aim of this paper is twofold. First, we propose a novel approach to deal with unbounded energy functionals by a decomposition of the energy functional. Second, we present a new interpretation of the SAV approach using a gradient system expression. Furthermore, combining the above two results, we propose several numerical schemes in the framework of the Crank-Nicolson method and the Runge-Kutta methods.

In order to address general energy functionals, we assume that the energy is expressed as

| (6) |

for some linear operator and lower-bounded functions and (see Assumption 1). We then introduce two auxiliary variables ( or ) by

| (7) |

for some real numbers . In fact, the above decomposition of is always possible (Remark 1), and thus we can address general (possibly unbounded) energy functionals by this approach.

We then address the second problem: what is a natural way to construct SAV schemes? To answer this question, we begin with the modified energy

| (8) |

which is a quadratic function. The gradient of is then . With these notations, we will show that the equation of the SAV approach (such as (4)) is expressed as the gradient system for the modified functional . Further, this modified system inherits the conservation/dissipation property (i.e., variational structure) of the original gradient system (1) (see Lemma 1). From this point of view, existing SAV schemes are interpreted as a kind of discrete gradient method.

It is advantageous to keep the modified energy quadratic for the conservative case. Indeed, it is known that a certain class of Runge-Kutta methods preserves quadratic invariants [19]. Therefore, it is easy to construct higher-order time marching methods in the framework of Runge-Kutta methods and the SAV approach, with the aid of our gradient system expression.

The remainder of this paper is organized as follows. In Section 2, we present the novel gradient system expression of the SAV approach, and show that the novel gradient system inherits the variational structure of the original system. Then, we propose second and fourth order schemes in the framework of our expression in Section 3. Finally, in Section 4, we present some numerical examples of our scheme to confirm the efficiency and we conclude this paper in Section 5.

2 Gradient system expression of SAV

In this section, we present a novel interpretation of the SAV approach, namely, a gradient system expression. We state our result in an abstract setting.

Let be a Hilbert space over equipped with an inner-product , where or , and be its dual. Let be a -valued function defined on some open subset of , which is possibly unbounded, and be the Fréchet derivative of at . We further let be a skew-adjoint or negative semidefinite linear operator on (not necessarily bounded). Then, we consider the gradient system of the form

| (9) |

where is an unknown function and . We assume that all of the terms in (9) are well-defined.

We present the SAV approach for the general gradient flow (9). To begin with, we make an assumption on the energy functional .

Assumption 1.

The energy functional can be decomposed into three parts as

| (10) |

where is a linear, self-adjoint, and positive semidefinite operator on , and both and are lower-bounded functionals.

Remark 1.

Any function can be decomposed as in the above assumption. Indeed, letting , obviously we can see

| (11) | ||||

| (12) | ||||

| (13) | ||||

| (14) |

Therefore, Assumption 1 is merely a matter of notation. Note that the decomposition is not unique.

We now introduce two scalar auxiliary variables and by

| (15) |

where or and are real constants. Then we define a modified energy functional by

| (16) |

which is quadratic, and thus . Differentiating the scalar auxiliary variables, we have

| (17) |

where

| (18) |

Although depends on , we abbreviate the dependency to simplify the notation. With this notation, the time derivative of the modified energy functional becomes

| (19) |

According to the above observation, we consider the following equation

| (20) |

We show that the system (20) can be expressed as a gradient system. For each time , define a linear operator by . The adjoint operator of is a multiplication operator . Then, (20) becomes

| (21) |

where is the identity operator on . Since it is easy to see that

| (22) |

we obtain

| (23) |

Hence, letting , , and

| (24) |

we can write (23) as

| (25) |

which is nothing but a gradient system in . In fact, the system (25) inherits the structure of the original gradient system (9).

Lemma 1.

If is skew-symmetric, then is as well. If is negative semidefinite, then is as well.

Proof.

The statement is obvious by the definition of . ∎
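The following sketch illustrates why the statement is immediate in finite dimensions. It assumes, as one reading of (21)–(24), that the extended operator is a congruence of the original one, that is, of the form B G B^T where B stacks the identity on top of the two row vectors representing the auxiliary functionals. The exact blocks, signs, and scalings of (24) are not reproduced here, and the names `B`, `b1`, `b2`, and `extend` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5
M = rng.standard_normal((n, n))

G_skew = M - M.T        # a skew-symmetric operator
G_nsd = -(M @ M.T)      # a symmetric negative semidefinite operator

# Hypothetical stand-ins for the vectors defining the auxiliary variables.
b1 = rng.standard_normal(n)
b2 = rng.standard_normal(n)

# B maps u to (u, <b1, u>, <b2, u>); its transpose plays the role of the adjoint.
B = np.vstack([np.eye(n), b1, b2])      # shape (n + 2, n)

def extend(G):
    """Congruence transform: the extended operator on (u, r1, r2)."""
    return B @ G @ B.T
```

Since (B G B^T)^T = B G^T B^T, skew-symmetry carries over, and since z^T B G B^T z = (B^T z)^T G (B^T z), negative semidefiniteness carries over as well.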

Remark 2.

Remark 3.

The system (25) is easily obtained by substituting the first equation of (20) into the second and third ones. However, it is not clear from this naive calculation that the resulting equation inherits the variational structure of the original equation. Hence, what matters is not the system (25) itself but the above derivation, which reveals the variational structure of (20).

3 Proposed schemes

The new expression (25) gives insight into the construction of structure-preserving schemes for the gradient system (9). In this section, we propose a unified approach to construct numerical schemes for gradient systems based on (25). Let be the time increment, , , and . We use the same notation as in the previous section.

3.1 Second order scheme

Let . Then, the usual Crank-Nicolson scheme for (25) is

| (26) |

which is a nonlinear scheme. We then replace the first by any vector that is (locally) -approximation of , and obtain a Crank-Nicolson-type SAV scheme.

Scheme 1.

For given , find that satisfies

| (27) |

where is any (locally) -approximation of .

For example, we can choose

| (28) |

as in the literature. Alternatively, we can determine by the forward Euler or exponential Euler method with time increment . Regardless of the choice of , Scheme 1 has the energy dissipation/preservation property for the modified discrete energy functional defined by

| (29) |

where is the solution of (27).

Lemma 2.

Let be the solution of (27). If is skew-symmetric, then the modified energy is preserved, namely, . If is negative semidefinite, then the modified energy is dissipative, namely, .

Proof.

We show that it is essentially sufficient to solve a partial differential equation with constant coefficients three times to get the solution of Scheme 1 at each time step, as in the original SAV schemes. It is clear that (27) is equivalent to

| (33) |

where . This yields

| (34) |

Assume that the operator is invertible. Then, multiplying the last equation by the matrix

| (35) |

from the left, we have

| (36) |

which is just block Gauss elimination. Therefore, we can decompose the equation into two parts. The first one is the two-dimensional linear system

| (37) |

which is equivalent to

| (38) |

The second one is

| (39) |

which is, in this case, equivalent to

| (40) |

We can summarize the above procedure as the following algorithm.

For the first step, we should solve the equation of the form three times. Therefore, it is sufficient to solve a partial differential equation with constant coefficients to get the solution of Scheme 1 at each time step.
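As an illustration of a Crank-Nicolson-type SAV step and of the conservation property stated in Lemma 2, here is a minimal sketch for the Kepler problem. It rests on assumptions that may differ from the paper's choices: the energy is split as E(u) = 0.5 <u, L u> - E2(u) with E2 = 1/|q|, a single auxiliary variable r = sqrt(E2(u) + a) is used, the modified energy is 0.5 <u, L u> - r^2, the predictor is a forward Euler half step, and the small linear system is solved densely rather than by block Gauss elimination. All function names are hypothetical.

```python
import numpy as np

G = np.block([[np.zeros((2, 2)), np.eye(2)], [-np.eye(2), np.zeros((2, 2))]])
L = np.diag([0.0, 0.0, 1.0, 1.0])   # kinetic part: 0.5 * |p|^2 = 0.5 * <u, L u>

def e2(u):
    return 1.0 / np.hypot(u[0], u[1])

def grad_e2(u):
    d = np.hypot(u[0], u[1])
    return np.array([-u[0] / d**3, -u[1] / d**3, 0.0, 0.0])

def modified_energy(u, r):
    return 0.5 * u @ L @ u - r**2

def sav_cn_step(u, r, dt, a):
    """One Crank-Nicolson-type SAV step; linear in the unknowns (u_new, r_new)."""
    # Predictor: forward Euler half step for u' = G (L u - grad E2(u)).
    u_bar = u + 0.5 * dt * G @ (L @ u - grad_e2(u))
    b = grad_e2(u_bar) / (2.0 * np.sqrt(e2(u_bar) + a))
    Gb = G @ b
    n = u.size
    K = np.zeros((n + 1, n + 1))
    K[:n, :n] = np.eye(n) - 0.5 * dt * G @ L
    K[:n, n] = dt * Gb
    K[n, :n] = -b                    # r_new - r = <b, u_new - u>
    K[n, n] = 1.0
    rhs = np.empty(n + 1)
    rhs[:n] = (np.eye(n) + 0.5 * dt * G @ L) @ u - dt * r * Gb
    rhs[n] = r - b @ u
    z = np.linalg.solve(K, rhs)
    return z[:n], z[n]

def run_kepler(dt, steps, ecc=0.6, a=1.0):
    u = np.array([1.0 - ecc, 0.0, 0.0, np.sqrt((1.0 + ecc) / (1.0 - ecc))])
    r = np.sqrt(e2(u) + a)
    energies = [modified_energy(u, r)]
    for _ in range(steps):
        u, r = sav_cn_step(u, r, dt, a)
        energies.append(modified_energy(u, r))
    return np.array(energies), u, r
```

In this sketch the modified energy is conserved up to rounding for any admissible predictor, because the update direction is G applied to the averaged gradient of the quadratic modified energy. The original energy is conserved only approximately, since `r` drifts away from sqrt(E2(u) + a).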

3.2 Fourth order scheme for conservative systems

In this subsection, we focus on conservative systems, namely, we suppose that is skew-adjoint. According to the expression (25), we can construct higher order schemes via Runge-Kutta methods. In the present paper, we present a fourth order SAV scheme. A general theory for constructing SAV Runge-Kutta schemes will be presented elsewhere.

Let us consider a two-stage Runge-Kutta method for (25) as follows:

| (41) |

where and . The Runge-Kutta method (41) is called canonical if

| (42) |

and it is known that a canonical Runge-Kutta method preserves the energy functional if it is quadratic (cf. [19, Theorem IV.2.2]).

The typical example is the Runge-Kutta-Gauss-Legendre method with the Butcher tableau

| (43) |

where and . Here, is unused because (25) is autonomous. Although this method is fourth order, it is implicit and thus a nonlinear scheme. In order to construct a linear scheme, we replace in by another explicitly determined vector as follows:

| (44) |

Since is quadratic and the Runge-Kutta method is canonical, the modified method (44) also preserves the energy functional .
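The role of the canonical condition can be checked numerically. The sketch below assumes that (42) is the standard condition b_i a_ij + b_j a_ji = b_i b_j from [19] and that (43) is the usual two-stage Gauss-Legendre tableau; both are assumptions about equations not reproduced here. It applies the method to a linear system z' = S z with a fixed skew-symmetric matrix S, which mimics a step in which the operator has been frozen at predetermined vectors, and it confirms that the quadratic quantity |z|^2 is preserved. The helper names are hypothetical.

```python
import numpy as np

s3 = np.sqrt(3.0)
# Standard two-stage Gauss-Legendre coefficients (order four).
A = np.array([[0.25, 0.25 - s3 / 6.0],
              [0.25 + s3 / 6.0, 0.25]])
b = np.array([0.5, 0.5])

def canonical_defect(A, b):
    """Matrix with entries b_i a_ij + b_j a_ji - b_i b_j; zero for canonical methods."""
    BA = b[:, None] * A
    return BA + BA.T - np.outer(b, b)

def gauss2_linear_step(S, z, h):
    """One step of the two-stage method for z' = S z with a constant matrix S."""
    n = z.size
    # Stage equations Z_i = z + h * sum_j a_ij S Z_j, written as one linear system.
    K = np.eye(2 * n) - h * np.kron(A, S)
    Z = np.linalg.solve(K, np.tile(z, 2)).reshape(2, n)
    return z + h * sum(b[i] * (S @ Z[i]) for i in range(2))
```

Because the stage equations are linear once the operator is fixed, a single linear solve per step suffices in this toy setting.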

Lemma 3.

Proof.

The proof is the same as in [19, Theorem IV.2.2]. ∎

Now, it is necessary to find the predetermined vectors . Since in (41) is locally -approximation of , it is required that approximates the same vector with the same order. To construct such vectors, we consider two methods.

The first is to use another explicit five-stage Runge-Kutta method for the original equation (9), which is deduced from the framework of partitioned Runge-Kutta methods. That is, define by

| (45) |

where

| (46) |

Then, and are expected to fulfill the requirements. This can be proved in a general framework, which will be presented elsewhere.

The second choice is to utilize the three-stage exponential Runge-Kutta method. This strategy is efficient for the PDE case. Assume that the original equation (9) can be written as

| (47) |

where is a linear operator on and is some nonlinear function. Then, an exponential Runge-Kutta method for (9) is described as follows:

| (48) |

where and are functions defined as follows:

| (49) |

with

| (50) |

Let us denote this integrator by . Then, () is locally -approximation of , which is what we desired.

In summary, we obtain the fourth order SAV-Runge-Kutta scheme as follows.
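For readers unfamiliar with exponential integrators, the simplest member of the family is sketched below. It is not the three-stage method (48): it is the exponential Euler method, which uses only the function phi_1(z) = (e^z - 1)/z. Whether (49)–(50) involve exactly this function is an assumption, as are the diagonal linear part `lam` and the names `phi1` and `exponential_euler_step`.

```python
import numpy as np

def phi1(z):
    """phi_1(z) = (exp(z) - 1) / z elementwise, with the limit value 1 at z = 0."""
    z = np.asarray(z)
    safe = np.where(z == 0, 1.0, z)          # avoid 0/0 in the masked branch
    return np.where(z == 0, 1.0, np.expm1(safe) / safe)

def exponential_euler_step(u, lam, nonlinear, h):
    """One step for u' = lam * u + nonlinear(u), where lam is a diagonal linear part."""
    return np.exp(h * lam) * u + h * phi1(h * lam) * nonlinear(u)
```

The step is exact whenever the nonlinear term is constant, which is a convenient sanity check. For a spectral discretization the diagonal entries of `lam` would be the Fourier symbols of the linear operator.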

Scheme 2.

For given , find that satisfies (44), where is determined by the above methods.

4 Numerical Experiments

4.1 Kepler problem

We first consider, as a toy problem, the Kepler ODE model

| (51) |

where . The corresponding energy functional is

| (52) |

and we can rewrite equation (51) in the form (9) on with

| (53) |

where and are the identity and zero matrices in , respectively. Let . We decompose as

| (54) |

which corresponds to Assumption 1 with .

We then consider three schemes: the Crank-Nicolson-type scheme (Scheme 1) with determined by the extrapolation (SAV-CN-ext) and the forward Euler method with time increment (SAV-CN-Euler); the fourth order SAV-Runge-Kutta scheme (Scheme 2) with determined by the explicit five-stage Runge-Kutta method with coefficients (46) (SAV-RK). Throughout the experiments, we set , which implies the solution is periodic with period , and .

4.1.1 Numerical results

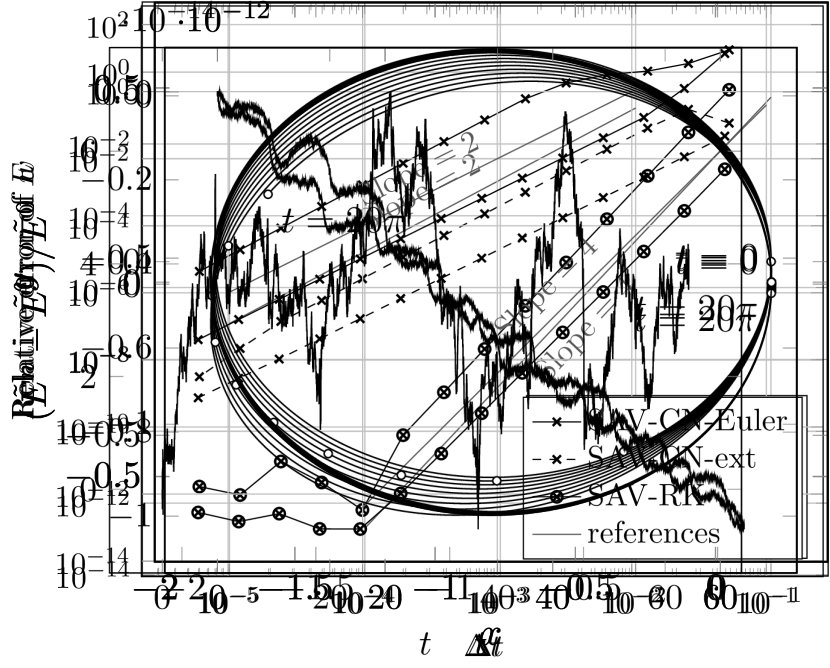

We first observe that the proposed schemes work well. We set and computed steps (ten periods) for each scheme. The results are plotted in Figures 1, 2 and 3. For each result, the left figure shows the orbit until , where small circles express the solution at for , and the right one shows the relative error of the modified energy functional .

Figures 1(a) and 2(a) show the orbits of the numerical solutions of the second order Crank-Nicolson-type schemes. This result suggests that the choice of the predetermined vector in (27) may affect the accuracy. Figure 3(a) shows the orbit of the numerical solution of the fourth order scheme, and the result suggests that this scheme has good accuracy. Furthermore, Figures 1(b)–3(b) show that the modified energy functional is numerically conserved, which supports Lemmas 2 and 3.

4.1.2 Convergence rates

We now consider the convergence rates numerically. We set for , and computed the relative error

| (55) |

for the solution of each scheme. We plotted the results in Figure 4(a). We also computed the original energy functional and plotted the relative error

| (56) |

in Figure 4(b). These results suggest that, for both the solution and the energy functional, the convergence rate is for the second order schemes and for the fourth order scheme, as expected. Moreover, one can see that the error of of SAV-CN-ext is about times larger than that of SAV-CN-Euler, which reflects the situation observed in Figures 1(a) and 2(a).
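Observed convergence rates of this kind are usually extracted from the slope of the error on a log-log scale. The sketch below shows the computation on a toy problem, not on the experiments reported here: the implicit midpoint rule applied to a harmonic oscillator, for which the expected order is two. The function names and the test problem are illustrative assumptions.

```python
import numpy as np

def observed_orders(dts, errs):
    """Estimate the order p from consecutive pairs, assuming err is about C * dt^p."""
    dts = np.asarray(dts, dtype=float)
    errs = np.asarray(errs, dtype=float)
    return np.log(errs[:-1] / errs[1:]) / np.log(dts[:-1] / dts[1:])

def midpoint_error(dt, t_end=1.0):
    """Relative error at t_end of the implicit midpoint rule for z' = S z."""
    S = np.array([[0.0, 1.0], [-1.0, 0.0]])
    n = int(round(t_end / dt))
    # For a linear system the implicit midpoint step is a fixed matrix.
    step = np.linalg.solve(np.eye(2) - 0.5 * dt * S, np.eye(2) + 0.5 * dt * S)
    z = np.array([1.0, 0.0])
    for _ in range(n):
        z = step @ z
    exact = np.array([np.cos(t_end), -np.sin(t_end)])
    return np.linalg.norm(z - exact) / np.linalg.norm(exact)
```

Calling `observed_orders` on step sizes that are successively halved gives one estimate per consecutive pair, which makes it easy to see whether the asymptotic regime has been reached.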

4.2 Korteweg–de Vries equation

We next consider the Korteweg–de Vries (KdV) equation

| (57) |

with the periodic boundary condition. The corresponding energy functional is

| (58) |

We first discretize the equation spatially by the spectral difference method [29]. Namely, for , we set , , and , and define the operator by

| (59) |

where

| (60) |

is the discrete Fourier transform and

| (61) |

Although and are complex matrices, it is easy to see that is a real matrix. Then, we discretize the KdV equation (57) spatially as

| (62) |

where and is the Hadamard product. Then, the functional

| (63) |

is conserved.
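The structural facts used above, that the spectral difference operator is real and skew-symmetric, can be checked directly. The sketch below builds a Fourier differentiation matrix of the form F^{-1} diag(i k) F on an odd number of periodic grid points. The exact definitions (59)–(61) are not reproduced here, so the odd grid size, the wavenumber ordering, and the name `spectral_diff_matrix` are assumptions.

```python
import numpy as np

def spectral_diff_matrix(n, length):
    """First-derivative matrix F^{-1} diag(i k) F on n periodic points (n odd)."""
    if n % 2 == 0:
        raise ValueError("this sketch assumes an odd number of grid points")
    # Angular wavenumbers 2 * pi * m / length in FFT ordering.
    k = 2.0 * np.pi * np.fft.fftfreq(n, d=length / n)
    F = np.fft.fft(np.eye(n), axis=0)       # DFT matrix: F @ v equals fft(v)
    Finv = np.fft.ifft(np.eye(n), axis=0)   # inverse DFT matrix
    return Finv @ np.diag(1j * k) @ F
```

The returned matrix is complex in floating point, but its imaginary part is at rounding level, so its real part can be used. Odd powers of a skew-symmetric matrix are again skew-symmetric, which is the property a conservative semi-discretization relies on.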

Now, let us define and by

| (64) |

and introduce the functionals

| (65) |

for . Then, we have the decomposition

| (66) |

which satisfies Assumption 1, and thus, for , , and with the inner product , the spatially discretized KdV equation (62) is expressed by the gradient system (9). Hence we obtain the system (25) with some parameters and , and we can apply our schemes that conserve the modified energy functional

| (67) |

We here consider three schemes: the Crank-Nicolson-type scheme (Scheme 1) with determined by the extrapolation (SAV-CN-ext) and the exponential Euler method with time increment (SAV-CN-Euler); the fourth order SAV-Runge-Kutta scheme (Scheme 2) with determined by the three-stage exponential Runge-Kutta method with coefficients (49) (SAV-RK).

Throughout the experiments, we set the initial function by

| (68) |

for , , and so that the solution is the cnoidal wave

| (69) |

which is periodic in space with and in time with period . Here, is one of the Jacobi elliptic functions and is the complete elliptic integral of the first kind. We also set the length of the spatial interval by , the frequency of the spectral difference method by , and the parameters for the auxiliary variables by .

4.2.1 Conservation law

We first observe that the proposed schemes conserve the modified energy functional . We set and computed one period for each scheme. The relative error of the modified energy functional (67) is plotted in Figure 5, and one can observe that (67) is conserved numerically, which supports Lemmas 2 and 3.

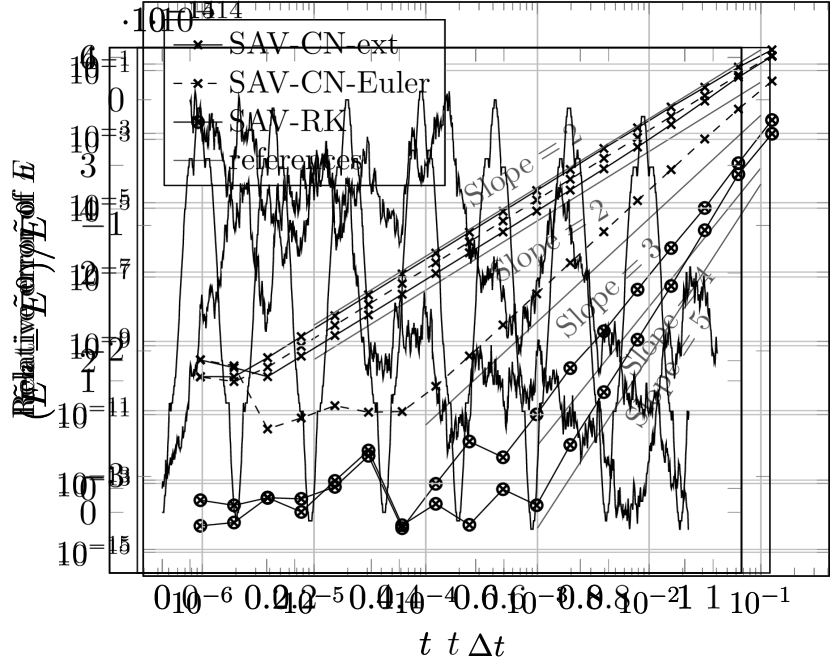

4.2.2 Convergence rate

We observe the convergence rate numerically. We set for , and computed the relative error

| (70) |

for the solution of each scheme. We plotted the results in Figure 6(a). This result suggests that the convergence rate for the solution is for the second order schemes and for the fourth order scheme, as expected.

We also computed the spatially discretized original energy functional defined by (29) and plotted the relative error

| (71) |

in Figure 6(b). In contrast to the case of , the convergence rates of for SAV-CN-Euler and SAV-RK are better than expected. Although it is not clear why these phenomena occur, we can expect that the upper bound for the convergence rate is or for each scheme.

5 Concluding Remarks

In this paper, we considered the SAV approach for the general gradient flow (9) whose energy functional is possibly unbounded below. We decomposed the energy functional into three parts as in (10) and introduced two auxiliary variables. Then, we obtained the gradient system expression of the SAV approach (25), which inherits the structure of the original equation. According to this expression, we proposed the second and fourth order SAV schemes. In particular, the fourth order scheme is based on the canonical Runge-Kutta method and thus the novel expression (25) plays an essential role. We finally presented some numerical experiments that support the theoretical results.

However, we did not discuss the well-posedness of the schemes, even for the second order scheme. Indeed, it is not clear that the linear equation (38), which appears in the procedure of the block Gauss elimination, is solvable. Furthermore, for the fourth order scheme, it is not clear whether the equations for the solution and the auxiliary variables () can be separated, unlike the second order schemes, for which the separation is possible by the block Gauss elimination. Finally, the convergence rates of the schemes should be analyzed theoretically. We leave these studies for future work.

Acknowledgments

The first author was supported by JSPS Grant-in-Aid for Early-Career Scientists (No. 19K14590). The second author was supported by JSPS Grant-in-Aid for Research Activity Start-up (No. 19K23399).

References

- [1] Georgios Akrivis, Buyang Li, and Dongfang Li. Energy-Decaying Extrapolated RK–SAV Methods for the Allen–Cahn and Cahn–Hilliard Equations. SIAM J. Sci. Comput., 41(6):A3703–A3727, 2019.

- [2] Samuel M. Allen and John W. Cahn. A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metallurgica, 27(6):1085–1095, 1979.

- [3] Christophe Besse. A relaxation scheme for the nonlinear Schrödinger equation. SIAM J. Numer. Anal., 42(3):934–952, 2004.

- [4] Yonghui Bo, Yushun Wang, and Wenjun Cai. Arbitrary high-order linearly implicit energy-preserving algorithms for Hamiltonian PDEs. arXiv, 2011.08375, 2020.

- [5] J. W. Cahn and J. E. Hilliard. Free energy of a non-uniform system. I. interfacial free energy. J. Chem. Phys., 28:258–267, 1958.

- [6] Wenjun Cai, Chaolong Jiang, Yushun Wang, and Yongzhong Song. Structure-preserving algorithms for the two-dimensional sine-Gordon equation with Neumann boundary conditions. J. Comput. Phys., 395:166–185, 2019.

- [7] Roberto Camassa and Darryl D. Holm. An integrable shallow water equation with peaked solitons. Phys. Rev. Lett., 71(11):1661–1664, 1993.

- [8] E. Celledoni, V. Grimm, R. I. McLachlan, D. I. McLaren, D. O’Neale, B. Owren, and G. R. W. Quispel. Preserving energy resp. dissipation in numerical PDEs using the “average vector field” method. J. Comput. Phys., 231(20):6770–6789, 2012.

- [9] Qing Cheng. The generalized scalar auxiliary variable approach (G-SAV) for gradient flows. arXiv, 2002.00236, 2020.

- [10] Qing Cheng and Jie Shen. Multiple scalar auxiliary variable (MSAV) approach and its application to the phase-field vesicle membrane model. SIAM J. Sci. Comput., 40(6):A3982–A4006, 2018.

- [11] M. Dahlby and B. Owren. A general framework for deriving integral preserving numerical methods for PDEs. SIAM J. Sci. Comput., 33(5):2318–2340, 2011.

- [12] Yayun Fu, Wenjun Cai, and Yushun Wang. A structure-preserving algorithm for the fractional nonlinear Schrödinger equation based on the SAV approach. arXiv, 1911.07379, 2019.

- [13] D. Furihata. Finite difference schemes for ∂u/∂t = (∂/∂x)^α δG/δu that inherit energy conservation or dissipation property. J. Comput. Phys., 156(1):181–205, 1999.

- [14] D. Furihata and T. Matsuo. Discrete variational derivative method–A structure-preserving numerical method for partial differential equations. CRC Press, Boca Raton, 2011.

- [15] D. Furihata and M. Mori. General derivation of finite difference schemes by means of a discrete variation (in Japanese). Trans. Japan Soc. Indust. Appl., 8(3):317–340, 1998.

- [16] Yuezheng Gong, Jia Zhao, and Qi Wang. Arbitrarily high-order unconditionally energy stable SAV schemes for gradient flow models. Comput. Phys. Commun., page 107033, 2019.

- [17] Yuezheng Gong, Jia Zhao, and Qi Wang. Arbitrarily high-order linear energy stable schemes for gradient flow models. J. Comput. Phys., 419:109610, 20, 2020.

- [18] O. Gonzalez. Time integration and discrete Hamiltonian systems. J. Nonlinear Sci., 6(5):449–467, 1996.

- [19] E. Hairer, C. Lubich, and G. Wanner. Geometric numerical integration, Structure-preserving algorithms for ordinary differential equations, volume 31 of Springer Series in Computational Mathematics. Springer, Heidelberg, 2010.

- [20] Daozhi Han, Alex Brylev, Xiaofeng Yang, and Zhijun Tan. Numerical analysis of second order, fully discrete energy stable schemes for phase field models of two-phase incompressible flows. J. Sci. Comput., 70(3):965–989, 2017.

- [21] Chaolong Jiang, Yuezheng Gong, Wenjun Cai, and Yushun Wang. A linearly implicit structure-preserving scheme for the Camassa-Holm equation based on multiple scalar auxiliary variables approach. J. Sci. Comput., 83(1):Paper No. 20, 20, 2020.

- [22] Xiaoli Li, Jie Shen, and Hongxing Rui. Energy stability and convergence of SAV block-centered finite difference method for gradient flows. Math. Comp., 88(319):2047–2068, 2019.

- [23] Zhengguang Liu. Efficient invariant energy quadratization and scalar auxiliary variable approaches without bounded below restriction for phase field models. arXiv, 1906.03621, 2019.

- [24] Zhengguang Liu. Energy stable schemes for gradient flows based on novel auxiliary variable with energy bounded above. arXiv, 1907.04142, 2019.

- [25] Zhengguang Liu and Xiaoli Li. Efficient modified techniques of invariant energy quadratization approach for gradient flows. Appl. Math. Lett., 98:206–214, 2019.

- [26] Zhengguang Liu and Xiaoli Li. Step-by-step solving schemes based on scalar auxiliary variable and invariant energy quadratization approaches for gradient flows. arXiv, 2001.00812, 2019.

- [27] Zhengguang Liu and Xiaoli Li. The exponential scalar auxiliary variable (E-SAV) approach for phase field models and its explicit computing. SIAM J. Sci. Comput., 42(3):B630–B655, 2020.

- [28] T. Matsuo and D. Furihata. Dissipative or conservative finite-difference schemes for complex-valued nonlinear partial differential equations. J. Comput. Phys., 171(2):425–447, 2001.

- [29] T. Matsuo, M. Sugihara, D. Furihata, and M. Mori. Spatially accurate dissipative or conservative finite difference schemes derived by the discrete variational method. Japan J. Indust. Appl. Math., 19:311–330, 2002.

- [30] Robert I. McLachlan, G. R. W. Quispel, and Nicolas Robidoux. Unified approach to Hamiltonian systems, Poisson systems, gradient systems, and systems with Lyapunov functions or first integrals. Phys. Rev. Lett., 81(12):2399–2403, 1998.

- [31] Robert I. McLachlan, G. R. W. Quispel, and Nicolas Robidoux. Geometric integration using discrete gradients. R. Soc. Lond. Philos. Trans. Ser. A Math. Phys. Eng. Sci., 357(1754):1021–1045, 1999.

- [32] Jie Shen and Jie Xu. Convergence and error analysis for the scalar auxiliary variable (SAV) schemes to gradient flows. SIAM J. Numer. Anal., 56(5):2895–2912, 2018.

- [33] Jie Shen, Jie Xu, and Jiang Yang. The scalar auxiliary variable (SAV) approach for gradient flows. J. Comput. Phys., 353:407–416, 2018.

- [34] Jie Shen, Jie Xu, and Jiang Yang. A new class of efficient and robust energy stable schemes for gradient flows. SIAM Rev., 61(3):474–506, 2019.

- [35] J. Swift and P. C. Hohenberg. Hydrodynamic fluctuations at the convective instability. Phys. Rev. A, 15:319–328, 1977.

- [36] Xiaofeng Yang. Linear, first and second-order, unconditionally energy stable numerical schemes for the phase field model of homopolymer blends. J. Comput. Phys., 327:294–316, 2016.

- [37] Xiaofeng Yang and Daozhi Han. Linearly first- and second-order, unconditionally energy stable schemes for the phase field crystal model. J. Comput. Phys., 330:1116–1134, 2017.

- [38] Zhiguo Yang and Suchuan Dong. A roadmap for discretely energy-stable schemes for dissipative systems based on a generalized auxiliary variable with guaranteed positivity. Journal of Computational Physics, 404:109121, 2020.

- [39] Fei Zhang, Víctor M. Pérez-García, and Luis Vázquez. Numerical simulation of nonlinear Schrödinger systems: a new conservative scheme. Appl. Math. Comput., 71(2-3):165–177, 1995.

- [40] Hong Zhang, Xu Qian, and Songhe Song. Novel high-order energy-preserving diagonally implicit Runge-Kutta schemes for nonlinear Hamiltonian ODEs. Appl. Math. Lett., 102:106091, 9, 2020.

- [41] Jia Zhao, Qi Wang, and Xiaofeng Yang. Numerical approximations for a phase field dendritic crystal growth model based on the invariant energy quadratization approach. Internat. J. Numer. Methods Engrg., 110(3):279–300, 2017.

- [42] Qingqu Zhuang and Jie Shen. Efficient SAV approach for imaginary time gradient flows with applications to one- and multi-component Bose-Einstein condensates. Journal of Computational Physics, 396:72 – 88, 2019.