Scalable privacy-preserving cancer type prediction with homomorphic encryption

Abstract.

Machine Learning (ML) alleviates the challenges of high-dimensional data analysis and improves decision making in critical applications like healthcare. Effective cancer/tumor classification from high-dimensional genetic mutation data can be useful for cancer diagnosis and treatment, if the distinguishable patterns between cancer/tumor types are identified. At the same time, analysis of high-dimensional data is computationally expensive and is often outsourced to cloud services. Privacy concerns in outsourced ML, especially in the field of genetics, motivate the use of encrypted computation, like Homomorphic Encryption (HE). But restrictive overheads of encrypted computation deter its usage. In this work, we explore the challenges of privacy preserving cancer detection using a real-world dataset consisting of more than 2 million genetic information for several cancer types. Since the data is inherently high-dimensional, we explore smaller ML models for cancer prediction to enable fast inference in the privacy preserving domain. We develop a solution for privacy preserving cancer inference which first leverages the domain knowledge on somatic mutations to efficiently encode genetic mutations and then uses statistical tests for feature selection. Our logistic regression model, built using our novel encoding scheme, achieves 0.98 micro-average area under curve with 13% higher test accuracy than similar studies. We exhaustively test our model’s predictive capabilities by analyzing the genes used by the model. Furthermore, we propose a fast matrix multiplication algorithm that can efficiently handle high-dimensional data. Experimental results show that, even with 40,000 features, our proposed matrix multiplication algorithm can speed up concurrent inference of multiple individuals by approximately 10x and inference of a single individual by approximately 550x, in comparison to standard matrix multiplication.

1. Introduction

The improvement in computing power and an overwhelming wealth of data have enabled the viability of statistical learning methods and have achieved unprecedented performance in several fields of science, engineering, and finance. Artificial Intelligence (AI)-based techniques are also being incorporated by federal agencies to make critical infrastructure safe, secure, and robust (ai_secure, 1) and healthcare critical infrastructure is not an exception. From first generation rule-based healthcare AIs, the sector is slowly moving towards ML-based methods to investigate, understand, and use complex data patterns for clinical diagnosis (ai_health, 2). ML-based healthcare predictions are currently far from having generalizable diagnostic abilities. For example, trained ML models for disease prediction are extremely data dependent, and cannot be seamlessly transferred as in the case of image classification tasks. One such disease prediction application, with profound impact, is cancer (tumor) detection. An early/fast diagnosis of cancer (cancer inference) by a trained ML model can save precious time in prognosis and treatment of a patient.

Precision medicine is the process of tailoring the right diagnosis and treatment for the right person at the right place. One of the biggest components of precision medicine is to incorporate patients’ genetic information to the diagnosis, treatment, and decision making (precision, 3). In the context of precision cancer medicine, distinguishability between genetic mutations of normal and malignant tissues is the crux of cancer genomics. These genetic changes developed during a person’s life in malignant tumor cells are called somatic (or acquired) changes and are accountable for more than of cancer cases. Somatic Single-Nucleotide Variation (SNV) and Copy-Number Variation (CNV) on protein-coding genes, especially on oncogenes, tumor suppressors and cell cycle regulators are known to cause tumor formation and progress. However, the heterogeneity in various levels makes it difficult to understand precisely which gene is involved in which cancer type. Somatic mutation rate is different across cancer types; even within a single type, this rate is different across patients (mut_rate, 4). Conversely, origins of distinct cancer types have been found to share similarities, thus making distinguishability even harder (similar_origin, 5). It is important to find explainable relationships between the somatic mutations, the genes that they affect and the type of cancer. We explore the problem of cancer genomics using a real-world dataset consisting of more than 2 million CNV and SNV information of 11 different cancer types (idash20, 6).

While the use of genomics in cancer detection seems promising for comprehensive understanding of the disease and its treatment, there are major privacy-related concerns. Genomic data is extremely characterizing and identifying, and the data may pin-point to the exact patient. It is also permanent and cannot be changed like other private data (passwords, credit cards, etc.). A partial leak of genomic data may reveal important information about the individual and may also be used to reconstruct their genome (genome_attack, 7). A patient, waiting for their cancer diagnosis using a server-hosted state-of-the-art ML-based predictive models, should not be subjected to such privacy risks.

High privacy risks of genomic data leakage calls for data to be always encrypted during inference. Homomorphic Encryption (HE) is an encryption scheme which allows for encrypted computation, i.e. the encrypted genomic data can be sent to the ML model in the server and the patient can receive the encrypted diagnosis. The first Fully HE (FHE) scheme for arbitrary computation proposed by Gentry et. al. (gentry, 8) had prohibitive computational overheads for real-world applications like ML-based inference. Since the non-linear activation functions were the major bottleneck for private ML-based inference, researchers mainly focused on approximating the non-linear function using square function (cryptonets, 9), first terms of Taylor expansion (access_imputation, 10), or piece-wise linearization (minionn, 11), for fast private inference. A possible solution for this problem is to bypass the non-linear function using small models like logistic regression (as we show in section 5.2.4). But inherent high-dimensionality of genomic data makes the encrypted linear operation impractical for privacy preserving genomics. Using an ML model on the raw cancer dataset would require multiplication of matrices with millions of columns, which is extremely expensive in HE. The intuitive solution towards faster private inference is to reduce the number of computations. This translates to reducing the number of features using feature selection, as can be seen in other HE applications in genomics (access_imputation, 10, 12).

For dimensionality reduction, we develop a feature engineering methodology involving feature (gene) selection and genetic (mutation) information encoding, and is based on a combination of biological intuition and statistical tests. Trivially using statistical scores may result in overfitting especially for genomic datasets with the number of predictors several times larger than the number of samples i.e. . Domain knowledge is increasingly being used for feature engineering in healthcare predictive models (domain_healthcare, 13) because such methods are not only more interpretable but also integrate years of medical research and case-studies. Healthcare ML models need to be interpretable for sustainability (healthcare_explainability, 14). Therefore, it is sustainable to use explainable models (like SVM, logistic regression, etc.) than Deep Neural Networks (DNN) that are essentially a black-box. This practically eliminates the automatic feature engineering capabilities of DNNs. Using our methodology of somatic mutation encoding, we reduce the dimensionality of the task (from over 2 million mutations to 43K features), but still the genomic data remains high-dimensional as compared to benchmark ML datasets (like MNIST with image size of features or CIFAR with image size of features). Since our application needs several thousands of features for accurate predictions, not only our time budget is completely exhausted by the linear operation, but also standard matrix multiplication does not offer the performance needed. Another drawback of current HE-based implementations of private inference is that they are designed to maximize throughput, computing on thousands of inputs together to improve efficiency in a cumulative way. However, they suffer in latency, i.e. the algorithms would take the same time to compute on just one input as it would take for thousands of inputs. But the real-world application we consider in this work benefits from improved latency, as it a common use case to have to analyze the genome of only a single patient and not wait for thousands of patients to have their tests done. In summary, to enable practical real-world private inference, we need the ability to compute on high-dimensional data in the encrypted domain with low latency and high throughput.

In a nutshell, for a private cancer detection solution to be practicable, it must have three properties: 1) comprehensive high-dimensional genomic analysis for high detection accuracy with a focus on explainability, 2) cryptographically secure privacy guarantees, 3) practical inference time and high throughput. In developing the privacy-preserving tumor prediction model, we list our contributions as follows:

-

(1)

We develop a three-step privacy-friendly feature engineering methodology and demonstrate the importance of feature selection and encoding based on biological significance of genetic alterations and statistical tests. We discuss the predictive genes from our findings and compare it to Gene Ontology enrichment analysis to understand the significance of certain genes in predicting a cancer type.

- (2)

-

(3)

We propose a fast matrix multiplication algorithm for high-dimensional matrices, specifically designed to implement HE-based privacy-preserving logistic regression model to ensure high performance.

-

(4)

We optimize our private cancer prediction methodology for low latency as well as high throughput.

-

(5)

We implement our privacy-preserving cancer-prediction model using BFV, an FHE scheme, and demonstrate that we can perform privacy-preserving cancer prediction for 500 individuals under 1 minute and a single prediction in approximately 1 second. We compare the performance of our privacy-preserving model with an ML model implemented using standard matrix multiplication.

-

(6)

We open-source our methodology and implementation to be used as-a-service.

We first discuss the related work on genomics-based disease detection and privacy preserving ML inference in section 2 and then the background information about the dataset and HE in section 3. We describe our complete methodology including plaintext model development using encoding scheme and fast matrix multiplication in section 5 and the experiments on performance and comparison in section 6. We finally conclude in section 7.

2. Related work

The study of genomic data to understand the changes that led to cancer or the genomic alterations that happened as a result of cancer is of utmost importance for initial diagnosis, predicting stage, cancer growth, metastasis, treatment, drug response, and planning a path to recovery. Therefore, there have been several studies focused genomic dynamics on individual cancer types. Researchers from TCGA have delved into genomic and molecular characterization studies for several individual cancer types: Glioblastoma (glioblastoma, 17), ovarian carcinoma (ovarian_carcinoma, 18), lung (lung, 19), endometrial carcinoma (endometrial, 20), renal cell carcinoma (renal, 21), urothelial carcinoma (urothelial, 22). In 2012, TCGA launched a pan-cancer dataset collection i.e. coherent dataset collection over 12 tumor types each profiled using 6 different platforms: Reverse-phase protein arrays measuring protein and phosphoprotein abundance (RPPA), DNA methylation, microarray-based measurement of copy number, single-nucleotide and structural variants mutation using whole exome sequencing, microRNA sequencing, and RNA sequencing and microarray gene expression analysis (pan_cancer, 23). This opened up several new avenues of cancer analysis using genomic data like identification of genes that drive carcinogenesis (driver_genes, 24), studies on the metastatic nature of cancer types (metastatis, 25), and using genomics for precision medicine (precision, 3). All of these studies that focus on detecting the cancer type analyze the genomic data to help develop biological intuition towards understanding a tumor type. Therefore, we also perform the encoding of genomic mutation data based on biological intuition to retain this wealth of semantic information.

Prediction of cancer type using machine learning on genomic data has been of interest in the last few years because of the possibility of computation on the huge volume of genomic data. Researchers have aimed at finding information about cell of origin for all the 33 different tumor types (similar_origin, 5). Jiao et. al. propose a deep learning-based framework to predict 24 tumors of unknown primary site by analyzing somatic passenger mutations. Machine learning has also been used to analyze genomic data for immunotherapy for pan-cancer datasets (inhibition, 26). Deep learning has also been used for the prediction of tumor type using gene filtering (gdl, 16) and mutation frequency, sparsity reduction, and cluster gene filtering (deep_gene, 15) as pre-processing steps. Another approach to finding/understanding useful features is through auto-encoders, as proposed for individual cancer detection (encoder_liver, 27, 28) or for classification of 40 different cancer types achieving an area under curve between 0.54-0.97 for individual types (auto, 29). Our work also revolves around efficient tumor detection but we observe that a combination of biological intuition and statistical tests is required for a higher performance in tumor type detection.

Amidst the growing privacy concerns for sensitive data, private inference has been a topic of interest in recent years. Cryptonets (cryptonets, 9), one of the earlier research articles on generic HE-based private inference, implemented DNN by approximating non-linear layers with some lower order polynomial terms. Cryptonets, like many HE algorithms, are also optimized for throughput, predicting class labels for 8192 images together. The performance was improved by several other solutions with Gazelle (gazelle, 30), a low latency framework, being able to achieve state-of-the-art performance of 800 ms for one inference. HE-based ML/DNN implementations focus on lowering the cost of non-linear functions like ReLUs as the feature space (and thus, the cost of matrix multiplication) is smaller. For example, the largest inputs considered by the solutions is from the CIFAR dataset, with 3K features, whereas our model needs a minimum of 30K features. In this work, we focus on a different problem, where we require high number of features, high throughput, as well as low latency.

Private inference on genomic data is a challenging problem because of the nature of data. Several challenges in HE-based genome privacy were developed in iDash (integrating Data for Analysis, ’anonymization,’ and SHaring) like private statistic calculation (idash15, 31), privacy-preserving querying (idash16, 32), and logistic regression training on a dataset of 18 features and 2 labels (idash17, 33), each focusing on a different bottleneck of genome privacy road-map. iDash19 and iDash20 focused on private inference challenges. We observe that rigorous feature engineering is often performed on the original data to reduce the number of features to a bare minimum, from 16K features to 10-40 features (access_imputation, 10, 12). Authors have performed genomic analysis using several different libraries and types of FHE like CKKS, BFV, and TFHE using different models like SVM, LR, shallow DNNs (ultrafast, 12), and also using partial homomorphic encryption (access_imputation, 10). Naturally, as the number of features are reduced by three orders, there is a reduction in test accuracy, which is justified with performance vs accuracy trade-off. On a different application (genome wide association studies involving a large number of individuals), researchers have also explored approximation techniques in logistic regression algorithms using semi-parallel implementation (idash18, 34) improving evaluation time by 30% ( 6 hours on a single node). Our privacy preserving inference methodology is specifically directed to problems where a large number of features is indeed required.

3. Preliminaries

3.1. Dataset

We use the cancer classification dataset from iDASH 2020 competition Task I (idash20, 6) that was collected for private tumor classification. This data is curated from a centralized database The Cancer Genome Atlas (TCGA) (TCGA, 35) using patients from 11 different cancer types. As noted in section 2, several subsets of TCGA resulted in different types of studies. In our work, we study the impact of somatic mutations on prediction of cancer. Our dataset consists of two types of somatic alteration information (maybe considered as two subsets of features): Single-Nucleotide Variations (SNVs) and Copy Number Variations (CNVs) on protein-coding genes. In the SNV subset, four different characteristics are given for each somatic SNV of a gene. These characteristics represent the chromosome location, denotes whether the mutation is a single-nucleotide polymorphism, and the effect of the mutation (using two different measures). The effect of the mutation is calculated using Ensembl Variant Effect Predictor (VEP) (vep, 36) and is reported in two ways: 1) A mutation can be considered as one of the following categorical values; high, moderate, modifier, and low, followed by a real number denoting the impact of the mutation. 2) A mutation can qualitatively be denoted as tolerated or deleterious, based on Sorting Intolerant from Tolerant (SIFT) pathogenicity prediction. All of this information reflects the importance of a mutation, i.e. VEP scores help transform an observation of a mutation to its possible impact in development of the tumor. VEP scores help in developing the biological intuition for our feature engineering methodology, which is required as this subset of SNV features contains 2,044,328 somatic mutation rows. In the copy number subset, each gene for each sample (patient) is given a copy number value depending on whether there has been a change from their parents’ genes: 0 for no alteration, 1 or 2 for duplication, and -1 or -2 for deletion from one or both the parents, respectively. For each sample, there are 25,128 genes, and thus, there are 25,128 features for each sample. The dataset comes from 2713 patients belonging to 11 different cancer types. The dataset is unbalanced with the maximum number of patients belonging to Bronchus and Lung (). We randomly select of the dataset for training and the rest is reserved for testing maintaining the distribution of data, i.e. the distribution of training and test data for each label remains the same.

3.2. Homomorphic encryption

Homomorphic Encryption (HE) is a type of encryption that allows for computation on encrypted data without decryption. Let us consider a function, operating on plaintext operands , and the equivalent function operating on the corresponding ciphertexts , such that , and , where is the encryption function. Then, the computation of the function on plaintext operands is the decryption of computation of the function on ciphertexts, i.e. using HE, we can say that , where is the decryption function. Depending on the type of computation possible in an encryption scheme, there are several types of HE schemes.

For linear models with unencrypted weights, Partial Homomorphic Encryption (PHE) schemes like Paillier (Paillier, 37) can be used. Nevertheless, encryption and decryption operations, which consist of modular exponentiations, hinders the performance of ML models with larger inputs or outputs. In addition, although it is possible to encode several plaintext into a ciphertext in Paillier for certain applications, the density of plaintexts per ciphertext is much lower than in Somewhat Homomorphic Encryption (SHE) or Fully Homomorphic Encryption (FHE).

A better approach comes from using SHE/FHE schemes like BFV (Brakerski/Fan-Vercauteren) (bfv, 38) or CKKS (Cheon, Kim, Kim, Song) (ckks, 39). CKKS enables fixed-point arithmetic and it is the standard choice for ML applications. During computation, CKKS drops the lower bits of the plaintext after each operation, reducing the precision of the result. Conversely, BFV works on integers (modular arithmetic), where we can emulate a fixed-point arithmetic by scaling up the double-precision floating-point number into integers. Similarly to CKKS, there is a limitation for how much precision a BFV ciphertext can provide. However, since it computes on modular arithmetic, we can use the Chinese Remainder Theorem (CRT) to break our values into several smaller values, each one under unique modulus coprime to all other moduli. Each smaller value is then encrypted under a different key.

4. Threat model

In this work we focus on privacy-preserving inference. In our threat mode, the training dataset is public and is comprised of individuals who have agreed to share their data. The Cancer Genome Atlas database is one such example (TCGA, 35). This database catalogues genomic information, prognosis, diagnosis, on top of personal information like age, gender, race, and ethnicity of the individuals who are identified as case numbers. We do not aim to protect the training dataset, rather use it to build models for cancer prediction. However, when a new patient wants to test for cancer using their genome, their data must be protected. In our threat model, thus, the training is not privacy-preserving, but the inference is private. Fig. 1 summarizes our threat model.

5. Methodology

Since computations in the encrypted domain are expensive, private inference on any type of should prioritize towards less number of computations. This translates to a low number of features and smaller ML models (for example, SVM or logistic regression instead of deep networks). Our methodology can be divided into two parts. In the first part of our methodology (subsection 5.1), we focus on making our dataset compact encoding mutations and reducing the number of features with biological intuitions and statistical tests. This reduces the number of features from over 2 million to 43K. In the second part of our methodology (subsection 5.2), we propose a matrix multiplication algorithm , particularly catered towards implementing a faster version of privacy-preserving logistic regression-based cancer inference.

5.1. Somatic mutation encoding

For the cancer prediction to be correlated to both CNV and SNV information, the CNV and SNV features can be concatenated together, which can be used to train an ML model. CNV subset has 25K features. But the SNV subset corresponds to over 2 million mutation rows, which may equate to over 2 million features if each of these mutations is analyzed separately. The concatenated dataset, thus, consists of more than 2 million features. Therefore, instead of representing a mutation as a feature, we represent a gene as a feature with encoded mutation as the value of that feature. However, this approach faces the challenge of compacting mutation information of over 2 million data-points to 25K data-points (corresponding to 25K genes).

5.1.1. Step 1: Gene/feature selection using SNV frequency

Previous studies (deep_gene, 15, 16) on cancer detection using somatic mutations observed that the frequency of mutation of a gene correlates to higher prediction accuracy. The genes with higher number of mutations are more likely to develop a cancerous tumor. This makes sense because if there are more mutations in a gene, then the expression of that gene is likely disrupted through the mutations. There is also a correlation between the frequency of mutations in a patient cohort and the likelihood of observing the cancer in that cohort. This also makes sense because if a mutation is observed in a large number of patients with same cancer type diagnosis, then that mutation is likely either correlated or causal to that cancer type. Therefore, as the first step of feature selection, we choose genes with higher number of mutations. But each cancer type corresponds to a higher mutation in a different gene. We first rank the genes based on their SNV frequency in the patient cohort for each cancer type by also taking into consideration genes with more than one SNVs on them. We then combine the ranked genes from different cancer types and finally select the top 10,000 genes with highest SNV frequency for each cancer type as our features. This step also ensures removal of genes with low SNV frequency and reduce the dimensionality of our feature space. With all cancer types combined, we have a total of 18,606 genes.

5.1.2. Step 2: Encoding scheme

Encoding techniques, both for the feature vectors and the target variables, ease in training towards better accuracy. Efficient encoding techniques have been proven to result in better performance in genomic classification tasks as well (encoding, 40). As mentioned in the dataset section, each SNV on a gene is represented by multiple characteristics. This information need to be meaningfully merged with the CNV information of each gene. We explore the following encoding schemes based on a biological intuition as explained below:

-

(1)

Using presence of an SNV on a gene: The genes selected using frequency are merged for all cancer types. In this encoding we aim to study if a particular gene (mutation) is highly correlated to a cancer type. We assign a binary value to each of genes of a patient to denote the presence or absence of one or more SNVs. This allows us to encode SNV information in a categorical manner. This binary value per gene is used as a feature.

-

(2)

Using the type and impact of an SNV: As denoted in subsection 3.1, the impact of mutation of a gene is calulated using VEP For each SNV in the dataset, we have two types of measure: 1- a qualitative measure indicating whether an SNV has a tolerated or deleterious effect; 2- a quantitative real-value measure () representing the strength of the impact and the qualitative confidence with which the mutation can be attributed to the diagnosis. is the strength of the SNV in patient . Each of the encoding scheme are given equidistant real values between 0 to 1. We also experimented with different ranges but did not find any improvement in test accuracy. We think that both of these measures are useful in classifying the tumor type. We first encode the first measure by assigning values for [deleterious, deleterious (low confidence), tolerated (low confidence), tolerated] as [1.0, 0.75, 0.5, 0.25] for each . The reason for this encoding is that a detrimental effect is given the highest value in cancer prediction and similarly a tolerated effect is given the lowest feature value. Either of the effects, when estimated with lower confidence are given lower effect. is the effect of the SNV in patient . We then combine this encoding with the second measure as . The final effect values of SNV impact of a gene is the summation of the impacts of all the SNVs on that gene, if there is more than one SNVs. The resulting value per gene is used as a feature.

In addition to the strength () of an SNV, the qualitative confidence of the effect of an SNV on a gene is also as a categorical variable with values as [high, moderate, modifier, low]. We encode them as [1, 0.4, 0.7, 0.1] since intuitively we want to assign a higher importance to a high mutation effect. A gene with no mutation is assigned 0 effect. Similar to the previous feature, for a gene with multiple SNVs at different locations, the values are added to finally represent the effective value of all the SNVs in a gene. The resulting value per gene is used as a feature (Figure 1).

-

(3)

Using CNV of a gene CNV of a gene is represented as integers between -2 and 2, indicating if both, one, or no copy of the gene is deleted or duplicated. We scale these values to integers between 0 and 4 as statistical feature selection methods (for example test) often require positive values. Similarly, the resulting value per gene is used as a feature.

Overall, the top 10,000 genes per cancer type are merged into a total of 18,606 genes. For each patient, 18,606 genes with their associated SNV encoding are concatenated with 25,128 genes with their CNV information. In total, we have 43,734 features which undergo the following statistical tests.

5.1.3. Step 3: Feature selection using test

The previous steps of feature selection incorporate biological intuition. In this step, we explore statistical tests for evaluating feature importance. Statistical methods like test do not only inform the feature importance but also help reduce dimensionality of the feature space enabling faster computation (both during training and inference). It has previously been used in genetic information based disease prediction studies (chi_cancer, 41). We choose test as a feature selection metric since we achieve the best possible accuracy when compared to feature selection with mutual information or f-score statistics (as reported in Section 6). To decide if a feature is independent of the target label (i.e type of cancer), we perform the test where we calculate value of each feature with respect to the target variable. The value of a feature is given as where represents the expected value, represents the actual output and represents each instance of a test between a feature and a target. Note here that, the expected values of a variable is calculated using the distribution of feature values.

We run the test on all genes (CNV and SNV concatenated together) and sort the features decreasing order of values. The top features are selected and are used to train a classification model. We also use this step to analyze the selected genes and their relative importance in cancer type prediction in Section 6.1.5.

5.2. Privacy-preserving cancer inference

5.2.1. Model selection for cancer prediction

For the selection of the ML model that can correlate encoded somatic mutations to cancer type, we train a variety of ML models, while considering the difficulty of their implementation in HE domain. In plaintext, we experiment with Support Vector Machine (SVM) (with radial basis function, polynomial and linear kernels), logistic regression, and Deep Neural Networks (DNNs with two fully connected hidden layers with relu activation) possible classification models. We start with top 1,000 features selected by test and increase the number of features by 1,000 in each iteration. In our search for best-performing model, we train models using different number of features, different statistical tests for feature selection, different kernels (if applicable), with several regularization techniques, and with different optimization techniques cross-validated over 5 folds. We report the best performing models in Section 6.

Measures to reduce overfitting: We find that the logistic regression model performs best for several encoding schemes with the best test accuracy of . In logistic regression, the probability that a sample belongs to class is given by where is the linear combination of coefficients of the form . However, the number of features is much larger than the number of samples since each sample has effectively 43,000 features from CNV and SNV, whereas we have a total of 2,173 samples. Therefore, to avoid over-fitting in this high-dimensional setting, we introduce a Lasso () penalty (lasso, 42) to the logistic loss function during training such that the features that are unlikely to contribute to the prediction are penalized and weighted zero. Therefore, if the logistic loss function is given by where represents the coefficients of the features, the loss function after Lasso penalty in the Lagrangian form becomes which is minimized during training. Further, we use k-fold cross-validation on the training set () as another way to prevent the model from over-fitting to the training data. We iterate for 10,000 epochs to converge to a prediction model.

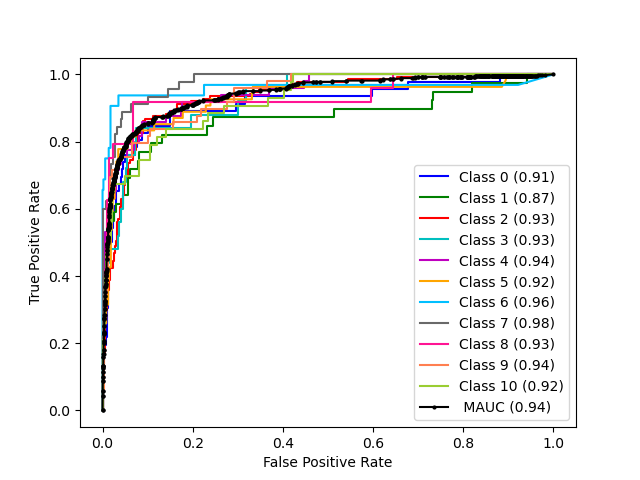

Metrics to detect problems of unbalanced data: High test accuracy on unbalanced datasets (with a higher percentage of samples from a particular label) can give a false sense of performance as a random guess (of the label with the highest number of samples) may also result in a high accuracy. For a holistic performance evaluation of our classifiers, we plot Receiver Operating Characteristics (ROC) Curve and report the individual area under curve for each class and the Micro-average Area Under Curve (MAUC) for the classifier. Although our dataset is unbalanced for 2 classes, still we report both test accuracy and ROCs for all the classes.

Binary models: Our cancer type prediction model uses the somatic genetic information to predict the cancer type from 11 classification labels. We also build models to predict each cancer type separately (like specific models in (gdl, 16)). These models are supplementary to our main prediction model and focus on one type of cancer. The features for this single cancer type model are chosen following the steps described above and the classifier is trained using a binary label: 0 for the all of the other cancer types and 1 for the cancer type of interest. We create separate models for each of the 11 cancer types and call these models as binary models since the prediction is converted into a binary classification task.

It should be noted here that the binary classification ability represented by the ROC curves of individual diseases (in our main prediction model) and the binary models for individual diseases are different because of the feature selection steps. In our binary models, the genes important to a specific disease are selected. However, for our main prediction model, the genes which are cumulatively important for all the 11 labels, are selected. Hence, we report both the analyses in section 6.

From analyzing the nature of genomic mutation data and the trends in accuracy (details in section 6), we observe that regardless of the ML model selected, the matrix multiplication would involve high-dimensional matrices (Dot product between weights and several thousands of features is common for all the ML models explored in our work). Therefore, following standard matrix multiplications would require a large number of multiplications corresponding to this high-dimensional dataset. The private cancer prediction methodology is characterized by a private inference protocol proposed in subsection 5.2.2 and then the fast matrix multiplication methodology, crux of the private ML algorithm, is proposed in subsection 5.2.3.

5.2.2. Private inference protocol

Fig. 2 shows the overview of our inference protocol. It consists of input encoding and encryption, weight and bias encoding, computation, decryption, and decoding. The client starts with a matrix containing the input values represented with double-precision floating-point numbers, where is the number of inputs and is the number of features. The values of matrix are multiplied by a scaling factor in order to be converted into integers, a requirement of the BFV encryption scheme. This effectively converts our HE operations into fixed-point arithmetic. The scaled matrix of inputs is then encoded into a matrix of polynomial plaintexts , where each polynomial contains coefficients. We pack features of each row into a polynomial. This leads to an encoded matrix of dimensions .

It is worth noting that our model requires more precision than what can be represented in the plaintext modulus . From experimental results, we determined that our inputs and weights require 14 bits of precision. Since our inputs are in the interval , we set to represent the inputs in 14 bits. Meanwhile, weights are in the interval , which leads to . Due to the fixed-point arithmetic, the biases must be scaled by . After the computation, the client will receive outputs that are scaled by a factor of , like the biases, but that require bits of precision for representation. For , that translates to 44 bits. This is above of what a secure BFV ciphertext with enough noise budget for our computation can support. To cope with that without hindering the accuracy of our model, we used the Chinese Remainder Theorem (CRT) to break our plaintext into a pair of smaller plaintexts, each one under its own modulus. We define our plaintext moduli as , which provides 30 bits of precision, and , offering 16 bits. This means that for every features encoded into a polynomial, we are actually encoding into a pair of polynomials, one with coefficient modulus , and another with coefficient modulus . For simplicity, we refer to this pair as plaintext polynomial.

The encoded matrix of inputs is then encrypted with the client’s public key . The encrypted matrix is sent to the server together with public values . Afterwards, the server scales and encodes the transpose of the matrix of weights , which has dimensions , where is the number of outputs. The transposition is a requirement of our computation. It packs several feature weights of an output in a plaintext polynomial, leading to an encoded matrix of weights of dimensions . Biases are encoded differently, each bias is encoded into a plaintext polynomial, filling all slots with its value. Finally, the server performs the matrix multiplication of encrypted inputs by encoded weights followed by addition of encoded biases . The resulting matrix is returned to the client together with public value . The client simply decrypts, decodes, and descale with its secret key and obtains the result of the inference in plaintext.

5.2.3. Matrix multiplication algorithm

Our privacy-preserving matrix multiplication algorithm, optimized for implementation in HE, is displayed in Algorithm 1. It receives three arguments: The encrypted matrix of inputs , encoded matrix of weights , and polynomial degree . Each row of represents an input, while each row of represents one output. The computation of the dot product for each input is independent, making this algorithm highly parallelizable. We start the dot product by performing the column-wise multiplication of each row of with the each row of and append the result for each row-row pair into a vector (lines 5-11). Next, we add together all elements of the resulting vector (line 13) and execute ciphertext rotations and additions to finalize the dot product (lines 14-17). This results in a ciphertext where all its slots contain the result of the dot product of the row-row pair. In order to save memory and reduce communication time, we aim at packing several dot product results into a single ciphertext. For this, first we need to clear the ciphertext slots in all but one carefully chosen position. We do it by multiplying the resulting ciphertext by a plaintext polynomial with one at that specific position and zero in the remaining slots (lines 18-22). Finally, we can compress the dot product results by adding them together (lines 25-42). If there are more dot product results than slots in a ciphertext, i.e., , then ciphertexts are appended to the output vector . Lastly, we return the result (line 43). We provide the mathematical representation of the algorithm in Appendix

5.2.4. Approximation of non-linear function in private inference

Tumor prediction is a classification problem which we address using multinomial Logistic Regression (LR). An LR model is trained by reducing the logistic loss function. During inference, the probability that an input , with features, belongs to a class is given by where , is the weight matrix, and is the bias. The predicted class is the class with the highest probability, i.e. where . This non-linear logistic function is computationally expensive in HE; thus, we perform the following approximation for building an ML model that can be used for encrypted inference. Since the logistic function is a monotonically increasing function, we can say that for a class is higher if is higher, and since the predicted label depends on the relative probability values, the predicted label can also be calculated using . Therefore, during inference, a test input needs to be multiplied with the weight matrix to get the final prediction, i.e. the predicted class . Effectively, for efficient inference, the matrix multiplication between the test inputs and the weight matrix must be fast. Please note here that the size of is dependent on the number of features, i.e. the higher the dimension of an input, the larger is the matrix, and the more time-consuming is the matrix multiplication.

| Feature type | #features | Model type | Feature selection | Accuracy | MAUC | Precision | Recall | F-score |

|---|---|---|---|---|---|---|---|---|

| SNV presence | 15,000 | Logistic regression | 66.85 | 0.928 | 0.670 | 0.668 | 0.660 | |

| CNV only | 17,000 | Logistic regression | 71.27 | 0.940 | 0.718 | 0.712 | 0.711 | |

| CNV + encoded SNV | 13,000 | SVM (linear) | 68.13 | 0.942 | 0.685 | 0.681 | 0.680 | |

| CNV + encoded SNV | 37,000 | SVM (rbf) | 64.82 | 0.949 | 0.685 | 0.648 | 0.634 | |

| CNV + encoded SNV | 34,000 | SVM (polynomial) | 69.98 | 0.954 | 0.712 | 0.699 | 0.698 | |

| CNV + encoded SNV | 43,000 | DNN | 63.16 | 0.925 | 0.658 | 0.631 | 0.628 | |

| CNV + encoded SNV | 34,000 | Logistic regression | 83.61 | 0.976 | 0.834 | 0.836 | 0.834 | |

| CNV + encoded SNV | 32,000 | Logistic regression | MI | 81.95 | 0.972 | 0.822 | 0.819 | 0.818 |

| CNV + encoded SNV | 40,000 | Logistic regression | f-score | 82.68 | 0.974 | 0.827 | 0.826 | 0.824 |

|

Accuracy | |||

|---|---|---|---|---|

| Class 0 (Bladder) | 9.76 | 78.26 | ||

| Class 1 (Breast) | 7.46 | 74.35 | ||

| Class 2 (Bronchus and lung) | 23.08 | 91.24 | ||

| Class 3 (Cervix uteri) | 5.71 | 64.0 | ||

| Class 4 (Colon) | 9.53 | 87.75 | ||

| Class 5 (Corpus uteri) | 7.6 | 85.18 | ||

| Class 6 (Kidney) | 5.39 | 90.625 | ||

|

6.63 | 93.33 | ||

| Class 8 (Ovary) | 5.85 | 70.83 | ||

| Class 9 (Skin) | 9.44 | 87.75 | ||

| Class 10 (Stomach) | 9.49 | 65.11 |

6. Experimental evaluation

The evaluation of private cancer prediction methodology is dependent on the precision of the plaintext model and performance of the private inference protocol. We report the evaluation of somatic mutation encoding towards accurate cancer prediction in plaintext in subsection 6.1 and the performance of the ML model (based on our matrix multiplication methodology) in subsection 6.2.

6.1. Evaluation of plaintext cancer prediction

6.1.1. Using only presence of an SNV on a gene

We report different performance metrics and the information on the features used for different models tested in Table 1. We observe that our model achieves a test accuracy of and a micro-average area under curve of with top 15,000 features. We also plot an Receiver Operating Characteristics (ROC) curve (Fig. 3) for each class and observe that skin cancer (class 9) detection has the highest area under the curve of while stomach cancer (class 10) prediction has the lowest area under the curve of . Although on a slightly different dataset, other ML-based cancer prediction achieved a similar test accuracy of (deep_gene, 15) and of (gdl, 16). These methods also used the SNV frequency to prune the number of features.

6.1.2. Using only CNV of the genes

We also experiment with just the copy number information for all the 25,128 genes and run a test to select the top genes. We achieve a slightly higher test accuracy of with 17,000 features. From Fig. 3, we observe that the micro average area also improves to with the lowest area under curve for detection of breast cancer (class 1) at (from ). Higher test accuracy and MAUC show that CNVs have more distinguishing power on the type of cancers than SNVs, when considered individually.

| Accuracy | MAUC | Precision | Recall | F-score | |||

|---|---|---|---|---|---|---|---|

| Class 0 (Bladder) | 95.58 | 0.982 | 0.952 | 0.955 | 0.952 | ||

| Class 1 (Breast) | 94.65 | 0.983 | 0.942 | 0.946 | 0.944 | ||

| Class 2 (Bronchus and lung) | 91.34 | 0.974 | 0.911 | 0.913 | 0.912 | ||

| Class 3 (Cervix uteri) | 97.42 | 0.989 | 0.972 | 0.974 | 0.973 | ||

| Class 4 (Corpus uteri) | 97.42 | 0.997 | 0.974 | 0.974 | 0.974 | ||

| Class 5 (Colon) | 96.86 | 0.991 | 0.967 | 0.968 | 0.968 | ||

| Class 6 (Kidney) | 97.97 | 0.996 | 0.979 | 0.979 | 0.979 | ||

|

95.39 | 0.992 | 0.952 | 0.953 | 0.953 | ||

| Class 8 (Ovary) | 97.60 | 0.993 | 0.974 | 0.976 | 0.975 | ||

| Class 9 (Skin) | 97.42 | 0.995 | 0.973 | 0.974 | 0.973 | ||

| Class 10 (Stomach) | 96.13 | 0.982 | 0.958 | 0.961 | 0.958 |

6.1.3. Final model: Using both SNV and CNV information

We select top features using both SNV and CNV information as described above and train several models to evaluate different machine learning algorithms for the tumor classification task. SVM with linear, rbf, and polynomial kernels achieve the best test accuracy of , , and with 13,000, 37,000, and 34,000 features respectively. Therefore, SVM does not show much improvement from our baseline that used only the presence of SNVs as features. Logistic regression model with Lasso penalty shows the best performance across all performance metrics. We achieve a test accuracy of with 34,000 features thereby also reducing the number of features. We also observe an improvement in the micro average area to when compared with models using just CNV or just presence of SNV. ROC for individual classes also have higher scores with the lowest area under curve of for cervical cancer (class 3). All the classes achieve an ROC area under curve of more than . This experiment also shows that although CNVs are more informative, using both CNV and SNVs result in the highest prediction accuracy. We also test our logistic regression model using mutual information and f-score as feature selection methods but we achieve lower test accuracy of with 32,000 features, and with 40,000 features, respectively (Table1).

6.1.4. Binary models

We built 11 specific models for each cancer type. We use both CNV and SNV information to select the top 34,000 features. In these experiments, we train 11 different models, where each one detects a particular tumor. We report the performance (test accuracy and micro-average score) in Table 3. We can see that all of the individual models have a test accuracy of more than and a micro-average area under curve of more than . The best performing classifier is for kidney with a test accuracy of and a micro-average score of . Even the worst performing binary model (for bronchus and lung) has a test accuracy of and micro-average score of . Binary models have also been evaluated in other somatic genetic information-based cancer classification tasks (gdl, 16) where the authors also achieve high performance for individual tasks but suffer in performance in cancer prediction using all labels.

6.1.5. Predictive genes

We discuss our findings on the top genes selected. 17,962 genes are selected for CNV data and 16,038 are selected for SNV data as the most informative features. However, more than of these genes are common, i.e. 11,133 genes are selected based on both CNV and SNV information. Considering the scores, we also observe that the top 1,030 genes come from using SNV information. We plot histograms of statistic scores in Fig. 4 and observe that the genes selected based on SNVs is more flat i.e. there are more genes with higher scores. We also verified that the highest score of a gene selected based on SNVs is higher than the highest value of a gene selected based on CNVs. Therefore, from feature selection perspective using test, SNV data on the selected genes are statistically more important than their CNV counterparts. But CNV information improves the test accuracy by adding potentially more relevant biological information.

When we investigated the top 10 most informative genes based on the SNV information (Table 4), we found “PTEN” are “APC” genes, which are known tumor suppressors; “MUC16” gene, which is a biomarker for ovarian cancer; “ZFHX3” gene, which is implicated in prostate cancer; “CCDC168” gene, which is known to be associated with Prostate Carcinoma and Uterine Body Mixed Cancer. The other 5 genes in this list are also implicated in important cellular activities that could potentially be related to cancer. These genes and their corresponding Gene Ontology (GO) term enrichment are depicted in Table 4.

|

Go enrichment analysis | ||

|---|---|---|---|

| RB1 |

|

||

| CDKN2A | transcription factor binding | ||

| LINC00441 | NA | ||

| DGKH |

|

||

| RCBTB2 | Ran guanyl-nucleotide exchange factor activity | ||

| CDKN2B-AS1 | NA & Intracranial Aneurysm and Periodontitis | ||

| LPAR6 | G protein-coupled receptor activity | ||

| AKAP11 |

|

||

| CDKN2B |

|

||

| ITM2B | amyloid-beta binding | ||

|

Go enrichment analysis | ||

| TTN | nucleic acid binding and identical protein binding | ||

| PTEN | protein kinase binding and magnesium ion binding | ||

| APC | microtubule binding | ||

| MUC16 | metabolism | ||

| DST | calcium ion binding and actin binding | ||

| ZFHX3 |

|

||

| CCDC168 | NA | ||

| ATRX | chromatin binding and helicase activity | ||

| DNAH5 | ATPase activity and microtubule motor activity | ||

| PIK3R1 | GTP binding and transcription factor binding |

The first gene in the top 10 most informative genes based on the CNV information (Table 4) is the first ever known tumor suppressor “RB1”. Similarly, “CDKN2A” and “CDKN2B” genes are also known tumor suppressors. “RCBTB2” gene is known to be repressed in prostate cancer. The “CDKN2B-AS1” gene has the silencing power of many other genes in the genome and strongly implicated in various cancer types. The other 5 genes in this list are also implicated in important cellular activities that could potentially be related to cancer.

6.1.6. Insights

The first insight is that biological intuition combined with high-dimensional data analysis methods can together achieve high accuracy (MAUC) while reducing the effective number of features. We further validate, using other studies, that our ML model indeed selects features that are biologically relevant. Using domain expertise, our ML model achieved a high performance of 0.98 MAUC with logistic regression ML model.

We further plot the variation of test accuracy (of a model trained using best hyper-parameters) with the number of features in Fig. 5 and observe that the test accuracy clearly rises when the number of features is lower than the number of samples and begins to saturate only after 30K features. From Fig. 5 we see that to achieve a test accuracy of more than , we need at least 20K features, and to achieve the highest test accuracy, we need more than 30K features. Therefore, private genomic analysis is one such application where extremely high-dimensional must be processed. The second insight motivates the development of matrix multiplication algorithm, which when implemented using BFV, can result in fast yet private cancer prediction.

6.2. Privacy-preserving model evaluation

We evaluate our privacy-preserving cancer prediction model on AMD Ryzen Threadripper 3960X 24-Core Processor with 128 GB RAM using 24 threads running Ubuntu 20.04 LTS. Encryption and computation operations are threaded, while decryption runs on single core. We implement our model using the E3 framework (e3, 43) with the underlying Microsoft SEAL library (seal, 44) and encryption parameters set as: polynomial degree , and plaintext moduli and , with a required security level of 128-bits. The cancer prediction model is hosted in the server and the client sends the encrypted genomic data to the server. As a use case, we privately compute cancer label for 543 patients, which constitutes of the dataset. We compare our private logistic regression model with private logistic regression model implemented using standard matrix multiplication (dubbed as standard LR). Please note all private models are implemented using BFV scheme with E3 framework.

6.2.1. Timing evaluation

We report the encryption, decryption, and computation time required for private cancer prediction in Fig. 6. The time taken to calculate the final cancer label, which is effectively the result of matrix multiplication , is denoted by computation. Computation, understandably, is the most costly operation in private cancer prediction. We observe that even if the number of features increase from 16K to more than 40K (), the computation time only increases from 33.44 seconds to 35.52 seconds (), which corresponds to increase in test accuracy. Therefore, the matrix multiplication is not the bottleneck for private cancer prediction. The time needed for encryption of the test samples increases with the number of features, with 3.87 seconds for 16K features to 10.40 seconds for 40K features () which indicates a linear increase in the encryption time as a function of number of features. Decryption is the least expensive operation (less than 1 second) as compared to encryption and computation; the values for decryption time are labelled in Fig. 6. The maximum total time for private inference of the entire test dataset is required when processing 40,960 features, and is 46.77 seconds.

6.2.2. Latency

As mentioned in section 1 private computations using HE are generally designed for high throughput, since popular FHE schemes support batching. For our application, we also prioritize latency, i.e. evaluation of a single sample. We report our findings in Fig. 7. From the figure we observe that the total amount of time to privately compute cancer label for a sample is 1.08 seconds and there is a linear increase in time with the number of samples.

6.2.3. Comparison to standard LR

In order to accurately quantify the performance benefits of our proposed methodology, we implement a privacy-preserving version of standard matrix multiplication using BFV. For the experiment we generate synthetic data in the same format (CNVs and SNVs) for 8192 individuals and measure time for encryption, computation, decryption operations. We plot the timing results in a log-log graph in Fig. 8. We observe that the total time required for private inference implemented using standard matrix multiplication for similar number of individuals as the test set is approximately 10 minutes, approximately more than our methodology. Also, the total time required for private inference on 1 individual is 598.25 seconds (similar time required for thousands of individuals), which is more than the time required by our algorithm. Therefore, as compared to standard matrix multiplication, commonly used for implementation of ML models, our algorithm has lower latency, and higher throughput.

6.2.4. Generalizing high-dimensional private inference

Healthcare models are difficult to port trivially across datasets (as discussed in section 1). Cancer detection ML model is no exception. However, our matrix multiplication algorithm is not dependent on input data or weight values (like quantization-based DNN design techniques (qnn, 45)) and thus, can be reused for datasets requiring HE-based high-dimensional inference. The transferability of our private inference algorithm across applications is an added advantage.

7. Conclusion

Current solutions for HE-based privacy preserving inference suffer from impractical overheads; which are further aggravated when dealing with high-dimensional genomic data. In this work we develop a solution for privacy preserving cancer inference on genomic data. We first leverage biological intuition to structure the mutation data and reduce the dimensionality to a practicable limit. For our privacy preserving ML model, we propose a matrix multiplication algorithm to implement logistic regression model, optimized for high throughput and low latency. Our analysis on a real-world genomic dataset shows that our solution achieves cancer prediction MAUC of 0.98 on test dataset and can be computed on encrypted genomic data at 1 second/patient.

References

- (1) Kate Macri “AI is Helping Secure Critical Infrastructure” URL: https://governmentciomedia.com/ai-helping-secure-critical-infrastructure

- (2) Kun-Hsing Yu, Andrew L Beam and Isaac S Kohane “Artificial intelligence in healthcare” In Nature biomedical engineering 2.10 Nature Publishing Group, 2018, pp. 719–731

- (3) Jianfang Liu et al. “An integrated TCGA pan-cancer clinical data resource to drive high-quality survival outcome analytics” In Cell 173.2 Elsevier, 2018, pp. 400–416

- (4) Christopher Greenman al. “Patterns of somatic mutation in human cancer genomes” In Nature 446, 2007

- (5) Katherine A Hoadley et al. “Cell-of-origin patterns dominate the molecular classification of 10,000 tumors from 33 types of cancer” In Cell 173.2 Elsevier, 2018, pp. 291–304

- (6) iDash “IDASH PRIVACY & SECURITY WORKSHOP 2020 - secure genome analysis competition” URL: http://www.humangenomeprivacy.org/2020/competition-tasks.html

- (7) Kerem Ayoz, Erman Ayday and A Ercument Cicek “Genome reconstruction attacks against genomic data-sharing beacons” In arXiv preprint arXiv:2001.08852, 2020

- (8) Craig Gentry “Fully Homomorphic Encryption Using Ideal Lattices” In Proceedings of the Forty-First Annual ACM Symposium on Theory of Computing, STOC ’09 Bethesda, MD, USA: Association for Computing Machinery, 2009, pp. 169–178 DOI: 10.1145/1536414.1536440

- (9) Nathan Dowlin et al. “CryptoNets: Applying Neural Networks to Encrypted Data with High Throughput and Accuracy” Microsoft Research, 2016

- (10) Esha Sarkar et al. “Fast and Scalable Private Genotype Imputation Using Machine Learning and Partially Homomorphic Encryption” In IEEE Access 9 IEEE, 2021, pp. 93097–93110

- (11) Jian Liu, Mika Juuti, Yao Lu and N. Asokan “Oblivious Neural Network Predictions via MiniONN Transformations” In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security New York, NY, USA: Association for Computing Machinery, 2017, pp. 619–631 URL: https://doi.org/10.1145/3133956.3134056

- (12) Miran Kim et al. “Ultrafast homomorphic encryption models enable secure outsourcing of genotype imputation” In Cell Systems Elsevier, 2021

- (13) Yun Liu “Applying domain knowledge to clinical predictive models”, 2016

- (14) Julia Amann et al. “Explainability for artificial intelligence in healthcare: a multidisciplinary perspective” In BMC Medical Informatics and Decision Making 20.1 Springer, 2020, pp. 1–9

- (15) Yuchen Yuan et al. “DeepGene: an advanced cancer type classifier based on deep learning and somatic point mutations” In BMC Bioinformatics 17, 2016

- (16) Yingshuai Sun et al. “Identification of 12 cancer types through genome deep learning” In Scientific Reports 9(1):17256. PMID:31754222, 2019

- (17) Cancer Genome Atlas Research Network “Comprehensive genomic characterization defines human glioblastoma genes and core pathways” In Nature 455.7216 Nature Publishing Group, 2008, pp. 1061

- (18) Cancer Genome Atlas Research Network “Integrated genomic analyses of ovarian carcinoma” In Nature 474.7353 Nature Publishing Group, 2011, pp. 609

- (19) Cancer Genome Atlas Research Network “Comprehensive genomic characterization of squamous cell lung cancers” In Nature 489.7417 Nature Publishing Group, 2012, pp. 519

- (20) Douglas A Levine “Integrated genomic characterization of endometrial carcinoma” In Nature 497.7447 Nature Publishing Group, 2013, pp. 67–73

- (21) Cancer Genome Atlas Research Network “Comprehensive molecular characterization of clear cell renal cell carcinoma” In Nature 499.7456 Nature Publishing Group, 2013, pp. 43

- (22) Cancer Genome Atlas Research Network “Comprehensive molecular characterization of urothelial bladder carcinoma” In Nature 507.7492 NIH Public Access, 2014, pp. 315

- (23) John N Weinstein et al. “The cancer genome atlas pan-cancer analysis project” In Nature genetics 45.10 Nature Publishing Group, 2013, pp. 1113–1120

- (24) David Tamborero et al. “Comprehensive identification of mutational cancer driver genes across 12 tumor types” In Scientific reports 3.1 Nature Publishing Group, 2013, pp. 1–10

- (25) Dan R Robinson et al. “Integrative clinical genomics of metastatic cancer” In Nature 548.7667 Nature Publishing Group, 2017, pp. 297–303

- (26) Maurizio Polano et al. “A Pan-Cancer Approach to Predict Responsiveness to Immune Checkpoint Inhibitors by Machine Learning” In Cancers 11.10, 2019 DOI: 10.3390/cancers11101562

- (27) Kumardeep Chaudhary, Olivier B Poirion, Liangqun Lu and Lana X Garmire “Deep learning–based multi-omics integration robustly predicts survival in liver cancer” In Clinical Cancer Research 24.6 AACR, 2018, pp. 1248–1259

- (28) Yang Guo, Xuequn Shang and Zhanhuai Li “Identification of cancer subtypes by integrating multiple types of transcriptomics data with deep learning in breast cancer” In Neurocomputing 324 Elsevier, 2019, pp. 20–30

- (29) Martin Palazzo, Pierre Beauseroy and Patricio Yankilevich “A pan-cancer somatic mutation embedding using autoencoders” In BMC bioinformatics 20.1 Springer, 2019, pp. 1–10

- (30) Chiraag Juvekar, Vinod Vaikuntanathan and Anantha Chandrakasan “GAZELLE: A low latency framework for secure neural network inference” In 27th USENIX Security Symposium (USENIX Security 18), 2018, pp. 1651–1669

- (31) Yuchen Zhang et al. “Foresee: Fully outsourced secure genome study based on homomorphic encryption” In BMC medical informatics and decision making 15.5, 2015, pp. 1–11 Springer

- (32) Gizem S Çetin et al. “Private queries on encrypted genomic data” In BMC medical genomics 10.2 Springer, 2017, pp. 1–14

- (33) Andrey Kim et al. “Logistic regression model training based on the approximate homomorphic encryption” In BMC medical genomics 11.4 BioMed Central, 2018, pp. 23–31

- (34) Marcelo Blatt, Alexander Gusev, Yuriy Polyakov and Shafi Goldwasser “Secure large-scale genome-wide association studies using homomorphic encryption” In Proceedings of the National Academy of Sciences 117.21 National Acad Sciences, 2020, pp. 11608–11613

- (35) The Cancer Genome Atlas Research Network et al. “The Cancer Genome Atlas Pan-Cancer analysis project” In Nature Genetics 45, 2013

- (36) William McLaren et al. “The ensembl variant effect predictor” In Genome biology 17.1 BioMed Central, 2016, pp. 1–14

- (37) Pascal Paillier “Public-Key Cryptosystems Based on Composite Degree Residuosity Classes” In Advances in Cryptology — EUROCRYPT ’99 Berlin, Heidelberg: Springer Berlin Heidelberg, 1999, pp. 223–238

- (38) Junfeng Fan and Frederik Vercauteren “Somewhat Practical Fully Homomorphic Encryption” https://ia.cr/2012/144, Cryptology ePrint Archive, Report 2012/144, 2012

- (39) Jung Hee Cheon, Andrey Kim, Miran Kim and Yongsoo Song “Homomorphic Encryption for Arithmetic of Approximate Numbers” In Advances in Cryptology – ASIACRYPT 2017 Cham: Springer International Publishing, 2017, pp. 409–437

- (40) Florian Mittag, Michael Römer and Andreas Zell “Influence of Feature Encoding and Choice of Classifier on Disease Risk Prediction in Genome-Wide Association Studies” In PLOS ONE 10.8 Public Library of Science, 2015, pp. 1–18 DOI: 10.1371/journal.pone.0135832

- (41) Xin Jin, Anbang Xu, Rongfang Bie and Ping Guo “Machine Learning Techniques and Chi-Square Feature Selection for Cancer Classification Using SAGE Gene Expression Profiles” In Data Mining for Biomedical Applications Berlin, Heidelberg: Springer Berlin Heidelberg, 2006, pp. 106–115

- (42) Robert Tibshirani “Regression Shrinkage and Selection via the Lasso” In Journal of the Royal Statistical Society. Series B (Methodological) 58.1 [Royal Statistical Society, Wiley], 1996, pp. 267–288 URL: http://www.jstor.org/stable/2346178

- (43) Eduardo Chielle, Oleg Mazonka, Nektarios Georgios Tsoutsos and Michail Maniatakos “E3: A Framework for Compiling C++ Programs with Encrypted Operands” Online: https://eprint.iacr.org/2018/1013, GitHub repository: https://github.com/momalab/e3, Cryptology ePrint Archive, Report 2018/1013, 2018

- (44) “Microsoft SEAL (release 3.3.2)” Microsoft Research, Redmond, WA., https://github.com/Microsoft/SEAL, 2019

- (45) Kun Huang, Bingbing Ni and Xiaokang Yang “Efficient quantization for neural networks with binary weights and low bitwidth activations” In Proceedings of the AAAI Conference on Artificial Intelligence 33.01, 2019, pp. 3854–3861

Appendix

Here we describe the matrix multiplication of , where is the encrypted input matrix (encoded genomic data) and is the encoded matrix of LR weights. The polynomial degree is , is the number of inputs, is the number of outputs, and is the number of features. The operator stands for the standard matrix multiplication, while represents our algorithm, is modular reduction over , and the intervals and represent elements packed in a ciphertext. When , there is a rotation of the elements of the ciphertext. Function is the element-wise addition of all rotations of a ciphertext, and function represents the compression part of the algorithm, where one slot of ciphertexts is selected and combined into a new ciphertext.