monthyeardate\monthname[\THEMONTH] \THEDAY, \THEYEAR

Scalable Detection and Tracking

of Geometric Extended Objects

Abstract

Multiobject tracking provides situational awareness that enables new applications for modern convenience, public safety, and homeland security. This paper presents a factor graph formulation and a particle-based sum-product algorithm (SPA) for scalable detection and tracking of extended objects. The proposed method dynamically introduces states of newly detected objects, efficiently performs probabilistic multiple-measurement to object association, and jointly infers the geometric shapes of objects. Scalable extended object tracking (EOT) is enabled by modeling association uncertainty by measurement-oriented association variables and newly detected objects by a Poisson birth process. Contrary to conventional EOT methods, a fully particle-based approach makes it possible to describe different geometric object shapes. The proposed method can reliably detect, localize, and track a large number of closely-spaced extended objects without gating and clustering of measurements. We demonstrate significant performance advantages of our approach compared to the recently introduced Poisson multi-Bernoulli mixture filter. In particular, we consider a simulated scenarios with up to twenty closely-spaced objects and a real autonomous driving application where measurements are captured by a lidar sensor.

Index Terms:

Extended object tracking, data association, factor graphs, sum-product algorithm, random matrix theoryI Introduction

Emerging sensing technologies and innovative signal processing methods will lead to new capabilities for autonomous operation in a wide range of problems such as applied ocean sciences and ground navigation. A technology that enables new capabilities in this context is EOT. EOT methods make it possible to detect and track an unknown number of objects with unknown shapes by using modern sensors such as high-resolution radar, sonar, and lidar [1].

Due to the high resolution of these sensors, the point object assumption used in conventional multiobject tracking algorithms [2, 3, 4] is no longer valid. Objects have an unknown shape that must be inferred together with their kinematic state. In addition, data association is particularly challenging due to the overwhelmingly large number of possible association events [5]. Even if only a single extended object is considered, the number of possible measurement-to-object association events scales combinatorially in the number of measurements. In the more realistic case of multiple extended objects, this “combinatorial explosion” of possible events is further exacerbated.

I-A State of the Art

An important aspect of EOT is the modeling and inference of object shapes. The shape of an object determines how the measurements that originate from the object are spatially distributed around its center. An important and widely used model [6, 7, 8, 5, 9] based on random matrix theory has been introduced in [10]. This model considers elliptically shaped objects. Here, unknown reflection points of measurements on extended objects are modeled by a random matrix that acts as the covariance matrix in the measurement model. This random extent state is estimated sequentially together with the kinematic state. The approach proposed in [10] introduces a closed-form update step for linear dynamics. It exploits that the Gaussian inverse Wishart distribution is a conjugate prior for linear Gaussian measurement models.

A major drawback of the original random matrix-based tracking method is that the driving noise variance in the state transition function of the kinematic state must be proportional to the extent of the object [10]. Furthermore, the orientation of the random matrix is constant. These limitations have been addressed by improved and refined random matrix-based extent models introduced in [11, 12, 13] and particle-based methods [14]. A significant number of additional application-dependent object extent models have been proposed recently (see [1] and references therein). The state-of-the-art algorithm for tracking an unknown number of objects with elliptical shape is the Poisson multi-Bernoulli mixture (PMBM) filter [5]. The PMBM filter is based on a model [15] where objects that have produced a measurement are described by a multi-Bernoulli probability distribution and objects that exist but have not yet produced a measurement yet are described by a Poisson distribution. This PMBM model is a conjugate prior with respect to the prediction and update steps of the extended object tracking problem [5].

Important methods for the tracking of point objects include joint probabilistic data association (JPDA) [2], multi-hypothesis tracking (MHT) [16, 17, 18], and approaches based on random finite sets (RFS) [3, 19, 20, 21, 15]. Many of these point object tracking methods have been adapted for extended object tracking. In [22], a probabilistic data association algorithm for the tracking of a single object that can produce more than one measurement has been introduced. A multiple-measurement JPDA filter for the tracking of multiple objects has been independently developed in [23] and [24]. The method in [6] combines a random matrix extent model with multisensor JPDA for the tracking of multiple extended objects. The computational complexity of these methods scales combinatorially in the number of objects and the number of measurements. They rely on gating, a suboptimal preprocessing technique that excludes unlikely data association events. Thus, these methods are only suitable in scenarios where objects produce few measurements and no more than four objects come into close proximity at any time [25].

Another widely used approach to limit computational complexity for data association with extended objects is to perform clustering of spatially close measurements. Here, based on the assumption that all measurements in a cluster correspond to the same object, the majority of possible data association events is discarded in a suboptimal preprocessing step. Based on this approach, tracking methods for objects that produce multiple measurements have been developed in the MHT [26] and the JPDA [27, 28] frameworks. Practical implementations of the update step of RFS-based methods for EOT [7, 8, 5, 9] including the one of the PMBM filter [5] also rely on gating and clustering and suffer from a reduced performance if objects are in close proximity. An alternative approach is to perform data association with extended objects using sampling methods [29, 30]. Here, in scenarios with objects that are in proximity, a better estimation performance can be achieved compared to methods based on clustering. These methods based on sampling, however, also discard the majority of possible data association events, and thus eventually fail if the number of relevant events becomes too large [31].

An innovative approach to high-dimensional estimation problems is the framework probabilistic graphical models [32]. In particular, the SPA [33, 34, 32] can provide scalable solutions to high-dimensional estimation problems. The SPA performs local operations through “messages” passed along the edges of a factor graph. Many traditional sequential estimation methods such as the Kalman filter, the particle filter, and JPDA can be interpreted as instances of SPA. In addition, SPA has led to a variety of new estimation methods in a wide range of applications [35, 36, 37]. For probabilistic data association (PDA) with point objects, an SPA-based method referred to as the sum-product algorithm for data association (SPADA) is obtained by executing the SPA on a bipartite factor graph and simplifying the resulting SPA messages [38, 4, 31]. The computational complexity of this algorithm scales as the product of the number of measurements and the number of objects. Sequential estimation methods that embed the SPADA to reduce computational complexity have been introduced for multiobject tracking (MOT) [15, 39, 40, 41, 42, 43], indoor localization [44, 45, 46, 47], as well as simultaneous localization and tracking [48, 49, 50].

SPA methods for probabilistic data association based on a bipartite factor graph have recently been investigated for the tracking of extended objects [51, 25, 52]. Here, the number of measurements produced by each object is modeled by an arbitrary truncated probability mass function (PMF). An SPA for data association with extended objects with a computational complexity that scales as the product of the number of measurements and the number of objects has been introduced [51, 25]. This method can track multiple objects that potentially generate a large number of measurements but was found unable to reliably determine the probability of existence of objects detected for the first time. An alternative method for scalable data association with extended objects has been developed independently and has been presented in [53, 54]. These methods however either assume that the number of objects are known, or that a certain prior information of new object locations is available. They are thus unsuitable for scenarios with spontaneous object birth.

I-B Contributions and Notations

In this paper, we introduce a Bayesian particle-based SPA for scalable detection and tracking of an unknown number of extended objects. Object birth and the number of measurements generated by an object are described by a Poisson PMF. The proposed method is derived on a factor graph that depicts the statistical model of the extended object tracking problem and is based on a representation of data association uncertainty by means of measurement-oriented association variables. Contrary to the factor graph used in [51], it makes it possible to reliably determine the existence of objects by means of the SPA. In our statistical model, the object extent is modeled as a basic geometric shape that is represented by a positive semidefinite extent state. Contrary to conventional EOT algorithms with a random-matrix model, the proposed fully particle-based method is not limited to elliptical object shapes. A fully particle-based approach also makes it possible to consider a uniform distribution of measurements on the object extent. Furthermore, our method has a computational complexity that scales only quadratically in the number of objects and the number of measurements. It can thus accurately detect, localize, and track multiple closely-spaced extended objects that generate a large number of measurements. The contributions of this paper are as follows.

-

•

We derive a new factor graph for the problem of detection and tracking of multiple extended objects and introduce the corresponding message passing equations.

-

•

We establish a particle-based scalable message passing algorithm for the detection and tracking of an unknown number of extended objects.

-

•

We demonstrate performance advantages in a challenging simulated scenario and by using real lidar measurements acquired by an autonomous vehicle.

This paper advances over the preliminary account of our method provided in the conference publication [55] by (i) simplifying the representation of data association uncertainty, (ii) including object extents in the statistical model, (iii) presenting a detailed derivation of the factor graph, (iv) establishing a particle-based implementation of the proposed method, (v) discussing scaling properties, (vi) demonstrating performance advantages compared to the recently proposed Poisson multi-Bernoulli mixture filter [5] using synthetic and real measurements.

The probabilistic model established in this paper is closely related to the probabilistic model employed by the PMBM filter (cf. [4]). Our focus is on obtaining a factor graph formulation for which the SPA scales well, and on exploiting the accuracy and flexibility of a particle-based implementation.

Notation: Random variables are displayed in sans serif, upright fonts, while their realizations are in serif, italic fonts. Vectors and matrices are denoted by bold lowercase and uppercase letters, respectively. For example, a random variable and its realization are denoted by and ; a random vector and its realization by and ; and a random matrix and its realization are denoted by and . Furthermore, denotes the transpose of vector ; indicates equality up to a normalization factor; denotes the probability density function (PDF) of random vector . denotes the Gaussian PDF (of random vector ) with mean and covariance matrix , denotes the uniform PDF (of random vector ) with support , and denotes the Wishart distribution (of random matrix ) with degrees of freedom and mean . The determinant of matrix is denoted . Finally, denotes the block diagonal matrix that consists of submatrices .

II System Model

At time , object is described by a kinematic state and an extent state. The kinematic state consists of the object’s position and possibly further parameters such as velocity or turn rate. The extent state is a symmetric, positive semidefinite random matrix that can either model an ellipsoid or a cube. Example realizations of and corresponding object shapes are shown in Fig. 1. Formally, we also introduce the vector notation of extent state , which is the concatenation of diagonal and unique off-diagonal elements of , e.g., in a 2-D tracking scenario the vector notation of extent matrix is given by . Note that the support of corresponds to all positive-semidefinite matrices in . In what follows, we will use extent matrix and its vector notation interchangeably.

As in [40, 50, 4], we account for the time-varying and unknown number of extended objects by introducing potential objects . The number of POs is the maximum possible number of actual objects that have produced a measurement so far [4]. (Note that increases with time .) The existence/non-existence of PO is modeled by the binary existence variable in the sense that PO represents an actual object if and only if . Augmented PO states are denoted as .

Formally, PO is also considered if it is non-existent, i.e., if . The states of non-existent POs are obviously irrelevant and have no impact on the estimation solution. Therefore, all hybrid continuous/discrete PDFs defined on augmented PO states, , are of the form , where is an arbitrary “dummy PDF” and is the probability that the PO does not exist.111Note that representing objects states and existence variables by this type of PDFs is analogous to a multi-Bernoulli formulation in the RFS framework [15, 4, 5]. For every PO state at previous times, there is one “legacy” PO state at current time . The number of legacy objects and the joint legacy PO state at time are introduced as and , respectively.

II-A State-Transition Model

The state-transition pdf for legacy PO state factorizes as

| (1) |

where the single-object augmented state-transition pdf is given as follows. If PO does not exist at time , i.e., , then it does not exist at time either, i.e., , and thus its state pdf is . This means that

| (2) |

On the other hand, if PO exists at time , i.e., , then the probability that it still exists at time , i.e., , is given by the survival probability , and if it still exists at time , its state is distributed according to a single-object state-transition pdf . Thus,

| (3) |

It is assumed that at time , the prior distributions are statistically independent across POs . If no prior information is available, we have .

A single-object state-transition pdf that was found useful in many extended object tracking scenarios is given by [12]

| (4) |

where is the state transition function of the kinematic state , is the kinematic driving noise covariance matrix, and is a rotation matrix. The degrees of freedom of the Wishart distribution determine the uncertainty of object extent prediction. A small implies a large state-transition uncertainty.

II-B Measurement Model

At time , a sensor produces measurements , . The joint measurement vector is denoted as . (Note that the total number of measurements is random.) Each measurement is either generated by a single object or is a false alarm.

Let be a measurement generated by the th PO.222The fact that the PO with index generates a measurement implies . In particular, let be the relative position (with respect to ) of the reflection point that generated . The considered general nonlinear measurement model is now given by

| (5) |

where is an arbitrary nonlinear function and is the measurement noise. (Note that the subspace remains unobserved.) This measurement model directly determines the conditional PDF .

We consider three geometric object shape models. The extent state either defines (i) the covariance matrix of a Gaussian PDF, i.e., ; (ii) the elliptical support of a uniform PDF, i.e., ; or (iii) the cubical support of a uniform PDF, i.e., .333The cubical support and the elliptical support are defined by the eigenvalues and eigenvectors of as shown in Fig 1. For example, let us consider a 3-D scenario and let be the eigenvalues of the symmetric, positive-semidefinite matrix . Length, width, and height of the cube are given by , , and , respectively. Similarly, the lengths of the three principal semi-axes of the ellipsoid , are given by , , and The orientation of and is determined by the eigenvectors ,, and . The eigenvalues , , and are assumed distinct. This assumption is always valid for real sensor data and objects of practical interest. The considered object shape model directly determines the conditional PDF . The PDF of measurement vector conditioned on and can now be obtained as

| (6) |

An important special case of this model is the additive-Gaussian case, i.e.,

| (7) |

For extent model (i), in this case, there exist closed-form expressions for (6). In particular, the likelihood function can be expressed as . Similarly, for models (ii) and (iii), there exists a simple approximation discussed in [56, Section 2], that also results in a closed-form of (6).

If PO exists () it generates a random number of object-originated measurements , which are distributed according to the conditional PDF discussed above. The number of measurements it generates is Poisson distributed with mean . For example, this Poisson distribution can be determined by a spatial measurement density related to the sensor resolution and by the volume or surface area of the object extent, i.e., . False alarm measurements are modeled by a Poisson point process with mean and strictly positive PDF .

II-C New POs

Newly detected objects, i.e., actual objects that generated a measurement for the first time, are modeled by a Poisson point process with mean and PDF . Following [15, 4, 5], newly detected objects are represented by new PO states , . Each new PO state corresponds to a measurement ; means that measurement was generated by a newly detected object. Since newly detected objects can produce more than one measurement, we define a mapping from measurements to new POs by the following rule.444A detailed derivation and discussion is provided in the supplementary material [56]. At time , if multiple measurements with are generated by the same newly detected object, we have for and for all . As will be further discussed in Section II-D, with this mapping every association event related to newly detected objects can be represented by exactly one configuration of new existence variables , . We also introduce representing the joint vector of all new PO states. Note that at time , the total number of POs is given by .

Since new POs are introduced as new measurements are incorporated, the number of PO states would grow indefinitely. Thus, for the development of a feasible method, a suboptimal pruning step is employed. This pruning step removes unlikely POs and will be further discussed in Section III-A.

II-D Data Association Uncertainty

Tracking of multiple extended objects is complicated by the data association uncertainty: it is unknown which measurement originated from which object . To reduce computational complexity, following [38, 40, 4] we use measurement-oriented association variables

and define the measurement-oriented association vector as . This representation of data association makes it possible to develop scalable SPAs for object detection and tracking. Note that the maximum value in the support of is . (As discussed above, a measurement with index cannot be associated to a new PO with index , i.e., the event in which measurements and are generated by the same newly detected object is represented through the new PO .) This is a direct result of the mapping introduced in Section II-C. In what follows, we write short for .

For a better understanding of new POs and measurement-oriented association vectors, we consider simple examples for fixed and . The event where all three measurements are generated by the same newly detected object, is represented by , , , and . Furthermore, the event where all three measurements are generated by three different newly detected objects, is represented by , , , and . Finally, the event where measurements are generated by the same newly detected object and measurement is a false alarm, is represented by , , , and . Note how every event related to newly detected objects is represented by exactly one configuration of new existence variables , and association vector .

III Problem Formulation and Factor Graph

In this section, we formulate the detection and estimation problem of interest and present the joint posterior pdf and factor graph underlying the proposed EOT method.

III-A Object Detection and State Estimation

We consider the problem of detecting legacy and new POs based on the binary existence variables , as well as estimation of the object states and extents from the observed measurement history vector . Our Bayesian approach aims to calculate the marginal posterior existence probabilities and the marginal posterior pdfs . We perform detection by comparing with a threshold , i.e., if [57, Ch. 2], PO is considered to exist. Estimates of and are then obtained for the detected objects by the minimum mean-square error (MMSE) estimator [57, Ch. 4]. In particular, an MMSE estimate of the kinematic state is obtained as

Similarly, an MMSE estimate of the extent state is obtained by replacing with in (LABEL:eq:mmse).

We can compute the marginal posterior existence probability needed for object detection as discussed above, from the marginal posterior pdf, , as

| (9) |

Note that is also needed for the suboptimal pruning step discussed in Section V-D.

Similarly, we can calculate the marginal posterior pdf underlying MMSE state estimation (see (LABEL:eq:mmse)) from according to

For this reason, the fundamental task is to compute the pdf . This pdf is a marginal density of , which involves all the augmented states and measurement-oriented association variables up to the current time .

The main problem to be solved is to find a computationally feasible recursive (sequential) calculation of marginal posterior PDFs . By performing message passing by means of the SPA rules [33] on the factor graph that represents our statistical model discussed in Section II, approximations (“beliefs”) of this marginal posterior pdfs can be obtained in an efficient way for all legacy and new POs.

III-B The Factor Graph

We now assume that the measurements are observed and thus fixed. By using common assumptions [2, 3, 4, 5, 1], the joint posterior PDF of and , conditioned on is given by555A detailed derivation of this joint posterior PDF is provided in [56, Section 1].

| (11) |

where we introduced the functions , , , and that will be discussed next.

The pseudo likelihood functions and are given by

| (12) |

and as well as

| (13) |

and . Note that the second line in (13) is zero because, as discussed in Section II-D, the new PO with index exists () if and only if it produced measurement .

Furthermore, the factors containing prior distributions for new POs , are given by

and the pseudo transition functions (cf. (2) and (3)) for legacy POs are given by and .

A detailed derivation of this factor graph is provided in the supplementary material [56]. The factor graph representing factorization (11) is shown in Fig. 2. An interesting observation is that this factor graph has the same structure as a conventional multi-scan tracking problem [4, 58] with scans. (Here, every measurement is considered an individual scan.) In what follows, we consider a single time step and remove the time index for the sake of readability.

IV The Proposed Sum-Product Algorithm

Since our factor graph in Fig. 2 has cycles, we have to decide on a specific order of message computation [33]. We choose this order according to the following rules: (i) messages are not sent backward in time666This is equivalent to a joint filtering approach with the assumption that the PO states are conditionally independent given the past measurements. [40, 4]; and (ii) at each time step messages are computed and processed in parallel. With these rules, the generic message passing equations of the SPA [33] yield the following operations at each time step. The corresponding messages are shown in Fig. 2.

IV-A Prediction Step

First, a prediction step is performed for all legacy POs . Based on SPA rule [33, Eq. (6)], we obtain

| (14) |

where is the belief that was calculated at the previous time step. Recall that the integration in (14) is performed over the support of which corresponds to all positive-semidefinite matrices .

Next, we use the expression for as introduced in Section III-B and in turn (2) and (3) for . In this way, we obtain the following expressions for (14)

| (15) |

and with

| (16) |

We note that approximates the probability of non-existence of legacy PO at the previous time step. After the prediction step, the iterative message passing is performed. For future reference, we also introduce .

IV-B Iterative Message Passing

At iteration , the following operations are computed for all legacy and new POs.

IV-B1 Measurement Evaluation

The messages , , sent from factor nodes to variable nodes can be calculated as discussed next. First, by using the SPA rule [33, Eq. (6)], we obtain

| (17) |

Note that for , we set , and for we use (represented by and ) calculated in Section IV-B4. By using the expressions for introduced in Section III-B, (17) can be further simplified, i.e.,

| (18) |

and with . After multiplying these two expressions by , the message is given by777Multiplying SPA messages by a constant factor does not alter the resulting approximate marginal posterior PDFs [33].

| (19) |

and . This final normalization step makes it possible to perform data association and measurement update discussed in the next two sections more efficiently. For future reference, we introduce the short notation for all , .

The messages , , sent from factor nodes to variable nodes can be obtained similarly. In particular, by replacing and in (19) by and , respectively, we obtain . Furthermore, we have . Note that for we set and for we again calculate as discussed in Section IV-B4.

Finally, the messages , sent from factor nodes to variable nodes are calculated by also replacing in (17) by and performing similar simplification steps. In particular, we get for and a result equal to (18) (with replaced by and replaced by ) for . After multiplying both expressions by , the message finally reads

and . For future reference, we again introduce the short notation for all ,

IV-B2 Data Association

IV-B3 Measurement Update

Next, the messages sent from factor nodes , , to variable nodes are calculated as [33, Eq. (6)]

| (23) |

By using again the expression for introduced in Section III-B, these messages can be further simplified as

| (24) |

By using the simplification of (21) discussed in the previous Section IV-B2, we can rewrite (24) as

| (25) |

where we introduced the sum

| (26) |

A similar result can be obtained for the messages sent from factor nodes , , to variable nodes . In particular, these messages are obtained by replacing in (23) the functions and by and as well as by performing the same simplification step discussed above.

By performing similar steps, we can then also obtain the messages , sent from to new PO state as

| (27) |

The calculation of , , and , is based on the sum

IV-B4 Extrinsic Information

Finally, updated messages used in the next message passing iteration for legacy POs are obtained as [33, Eq. (5)]

| (28) |

Note that for non-existent objects, i.e., , the messages have the form .

Similarly for new POs , we obtain

| (29) |

for and for . Again, for , the messages have the form .

IV-C Belief Calculation

After the last iteration , the belief of legacy PO state can be calculated as the normalized product of all incoming messages [33], i.e.,

| (30) |

where the normalization constant (cf. (16) and (25)) reads

| (31) |

Similarly, the belief of augmented new PO state , is given by

| (32) |

Here, is again the normalization constant that guarantees that (32) is a valid probability distribution.

IV-D Computational Complexity and Scalability

In the prediction step, (18) has to be performed times. Thus, its computational complexity scales as . The computational complexity related to each message passing iteration is discussed next. In the measurement evaluation step, for legacy PO , a total of messages have to be calculated. Similarly, for every new PO , a total of messages has to be obtained. Thus, the total number of messages is . The computational complexity related to calculating each individual message is constant in and . Also in the data association, measurement update, and extrinsic information steps, a total of messages have to be calculated at each of the three steps. The computational complexity related to the calculation of the individual messages is again constant in and . For the data association and measurement update steps, this constant computational complexity is obtained by precomputing the sums for each . Similarly, for the extrinsic information step, this constant computational complexity is obtained by precomputing the products , and , .

For fixed, it thus has been verified that the overall computational complexity only scales as . (Here we have omitted since the notation does not track constants.) Recalling that is the total number of legacy and new POs, we can equivalently write . Conservatively, we consider this to be quadratic in the number of measurements and objects, since existing objects are also expected to contribute measurements. We observed that increasing the number of message passing iterations beyond , does not significantly improve performance in typical EOT scenarios. Note that the computational complexity can be further reduced by preclustering of measurements (see, e.g., [7, Section IV]) and censoring of messages (see, e.g., [55, Section IV]). Preclustering combines the measurements to a smaller number of joint “hyper measurements” and replaces the single measurement ratios in (12) and (13) by the corresponding product of ratios. Censoring aims to omit messages related to new POs that are unlikely to represent an actual object.

V Particle-Based Implementation

For general state evolution and measurement models, the integrals in (15)–(18) as well as the message products in (28)–(32) typically cannot be evaluated in closed form. Therefore, we next present an approximate particle-based implementation of these operations that can be seen as a generalization of the particle-based implementation introduced in [40] to EOT. Pseudocode for the presented particle-based implementation is provided in [56, Section 3]. Each belief is represented by a set of particles and corresponding weights . More specifically, is represented by , and is given implicitly by the normalization property of , i.e., . Contrary to conventional particle filtering [59, 60] and as in [40], the particle weights , do not sum to one; instead,

| (33) |

Note that since approximates the posterior probability of existence for the object, it follows that the sum of weights is approximately equal to the posterior probability of existence.

V-A Prediction

The particle operations discussed in this section are performed for all legacy POs in parallel. For each legacy PO , particles and weights representing the previous belief were calculated at the previous time as described further below. Weighted particles representing the message in (15) are now obtained as follows. First, for each particle , , one particle is drawn from . Next, corresponding weights , are obtained as

| (34) |

Note that the proposal distribution [59, 60] underlying (34) is for . A particle-based approximation of in (16) is now obtained as

| (35) |

where . Finally, a particle approximation of introduced in Section IV-A is given by

V-B Measurement Evaluation

Let the weighted particles and the scalar be a particle-based representation of . For , we set and ; for this representation is calculated as discussed in Section V-C. An approximation of the message value in (19), can now be obtained as

Here, is the Monte Carlo integration [60] of in (17). This Monte Carlo integration is based on the proposal distribution . Similarly, an approximation of the message values related to new POs can be obtained. Here, for Monte Carlo integration and further particle-based processing, it was found useful to use a proposal distribution that is calculated from the measurement and its uncertainty characterization, e.g., by means of the unscented transformation [61].

Note that calculation of these messages relies on the likelihood function introduced in (6), which involves the integration . For general nonlinear and non-Gaussian measurement models, evaluation of the likelihood function can potentially also be performed by means of Monte Carlo integration [60]. Alternatively, if the measurement model is invertible in the sense that we can reformulate (5) as

| (36) |

then an approximated linear-Gaussian measurement model (7) can be obtained. In particular, the PDF of (for observed ) is approximated by a Gaussian with mean and covariance matrix . This approximation can be obtained, e.g., by linearizing or by applying the unscented transformation [61]. As a result, closed-form expressions for discussed in Section II-B and [56, Section. 2] can be used.

V-C Measurement Update, Belief Calculation, and Extrinsic Informations

The approximate measurement evaluation messages discussed in Section V-B are used for the subsequent approximation of and (cf. (26)) required in the measurement update step (cf. (25) and (27)). The calculation of the weighted particles that represent the legacy PO belief in (30) is discussed next. Weighted particles representing new PO beliefs (32) and extrinsic information messages in (28) and (29) can be obtained by performing similar steps.

The measurement update step (25) and the belief calculation step (30) are implemented by means of importance sampling [59, 60]. To that end, we first rewrite the belief in (30) by using (15), (16), and (25), i.e.,

| (37) | ||||

Here, we also replaced introduced in (26) by its particle-based approximation (cf. Section V-B), even though we do not indicate this additional approximation in our notation .

We now calculate nonnormalized weights corresponding to (37) for each particle as

| (38) |

Note that here we perform importance sampling with proposal density . This proposal density is represented by the weighted particles . Similarly, we calculate a single nonnormalized weight corresponding to (37) as

| (39) |

in which has been calculated in (35).

Next, weighted particles representing the belief are obtained by reusing the particles and calculating the corresponding new weights as

| (40) |

Here, is a particle-based approximation of the normalization constant in (31). We recall that .

Next, we discuss the calculation of the particle representations of the extrinsic information messages in (28) and (29). For example, weighted particles representing can be obtained as follows. The particle locations and extents are again reused and the corresponding new weights are obtained by replacing in (38) with and with . This is equal to plugging (25) into (28) and evaluating (28) at the particles . Here, normalization of the weights in (40) can be avoided and the constant is obtained as (cf. (39)).

V-D Detection, Estimation, Pruning, and Resampling

The weighted particles can now be used for object detection and estimation. First, for each (legacy or new) PO , an approximation of the existence probability is calculated from the particle weights as in (33). PO is then detected (i.e., considered to exist) if is above a threshold (cf. Section III-A). For the detected objects , an approximation of the MMSE estimate of the kinematic state in (LABEL:eq:mmse) is calculated according to

| (41) |

Similarly, an MMSE estimate of the extent state can be obtained by replacing in (41) by .

VI Numerical Results

Next, we report simulation results evaluating the performance of our method and comparing it with that of the PMBM filter implementation in [1]. Note that a performance comparison with other data association algorithms based on the SPA has been presented in [55].

VI-A Simulation Scenario

We simulated ten extended objects whose states consist of two-dimensional (2-D) position and velocity, i.e., . The objects move in a region of interest (ROI) defined as and according to the nearly constant-velocity motion model, i.e., , where and are chosen as in [62, Section 6.3.2] with s, and with is an independent and identically distributed (iid) sequence of 2-D Gaussian random vectors.

We considered a challenging scenario where the ten object tracks intersect at the ROI center. The object tracks were generated by first assuming that the ten objects start moving toward the ROI center from positions uniformly placed on a circle of radius m about the ROI center, with an initial speed of m/s, and then letting the objects start to exist (i.e., generate measurements) in pairs at times . Object tracks intersect at the ROI center at around time 40 and disappear in pairs at times . The extent of each object is obtained by drawing a sample from the inverse Wishart distribution with mean matrix and degrees of freedom. The extent state of objects does not evolve in time, i.e., it remains unchanged for all time steps. The survival probability is . Example realizations of object tracks and extents are shown in Fig. 3(a).

Since the PMBM filter is based on the Gaussian inverse Wishart model [5], we consider elliptical object shapes and a linear-Gaussian measurement model. In particular, a measurement that was originated by object , is modeled as

| (42) |

where is the measurement noise with m. In addition, is the random relative position of the reflection point with determined by the extent state. The mean of the number of measurements is for all objects and the mean number of false alarm measurements is . The false alarm PDF is uniform on the ROI.

The performance of all simulated methods is measured by the optimal subpattern assignment (OSPA) [63] and the generalized OSPA (GOSPA) [64] metrics. Both metrics are based on the Gaussian-Wasserstein distance with parameters and . The threshold for object declaration is and the threshold for pruning POs is . These parameters are used for all simulated methods.

VI-B Performance Comparison with the PMBM Filter

For the proposed SPA-based method we use the following settings. Newly detected objects are modeled as , with uniformly distributed on the ROI, following a zero-mean Gaussian PDF with covariance matrix , and distributed according to an inverse Wishart distribution with mean matrix and degrees of freedom. The proposed method uses the state transition model in (4) with given by and . The mean number of newly detected objects is set to . To facilitate track initialization, we perform message censoring and ordering of measurements as discussed in [55]. The number of SPA iterations was set to and the number of particles was set to . The resulting parameter combinations are denoted as “SPA-2-1000”, “SPA-3-1000”, “SPA-2-10000”, and “SPA-3-10000”, respectively.

For the PMBM filter, we set the Poisson point process that represents object birth consistent with the newly detected object representation introduced above. Furthermore, the probability of detection is set to . The Gamma distribution has an a priori mean of and a variance of . Its parameters remain unchanged at all time steps. The transformation matrix and maneuvering correlation constant (see [5, Table III]) used for extent prediction are set to and , respectively. The PMBM implementation described in [1] relies on measurement gating and clustering as well as pruning of global association events [5]. The gate threshold is chosen such that the probability that an object-oriented measurement is in the gate is .

Clusters of measurements and likely association events are obtained by using the density-based spatial clustering (DBSCAN) and Murthy’s algorithm, respectively. We simulated two different settings for measurement clustering and event pruning. Coarse clustering “PMBM-C” calculates measurement partitions by using the different distance values equally spaced between and as well as a maximum number of assignments for each partition of measurements. Fine clustering “PMBM-F” clusters with different distance values equally spaced between and as well as uses a maximum number of assignments for each partition of measurements. Clustering is performed for each distance value individually. All resulting clusters are then combined into one joint set of clusters. In this way, a diverse set of overlapping clusters is obtained. We also simulated variants of the PMBM that perform recycling of pruned Bernoulli components [65]. These variants are denoted as “PMBM-CR” and “PMBM-FR”.

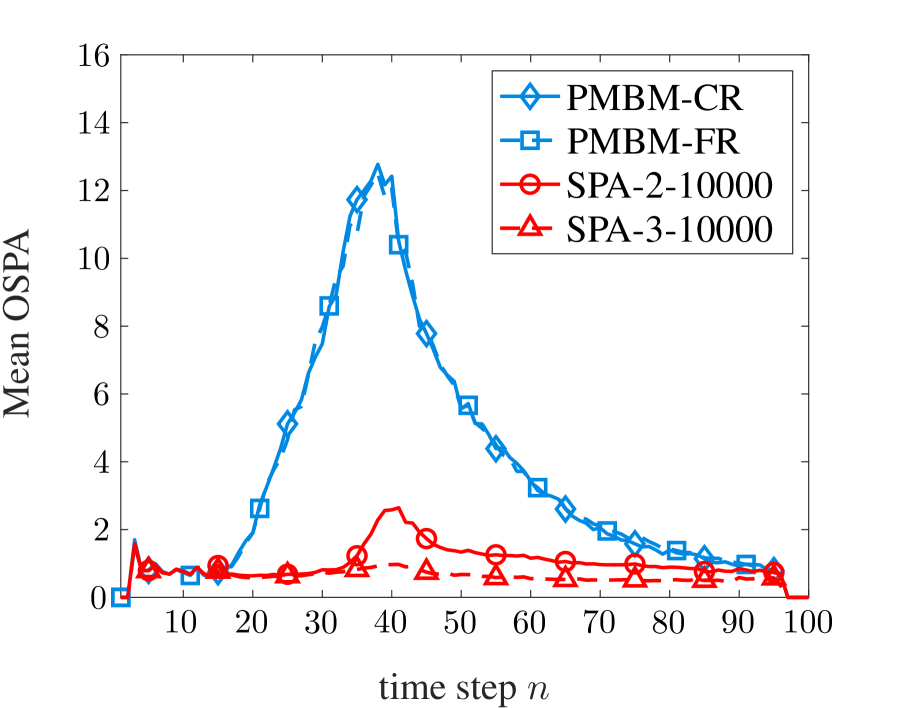

Fig. 3(b) and (c) shows estimation results of SPA-10000 and PMBM-FR. By comparing Fig. 3(c) with Fig. 3(a), it can be seen that PMBM-FR is unable to accurately estimate the state of objects that are in close proximity. Fig. 4 shows the mean OSPA error and its state and cardinality error contributions—averaged over 1200 simulation runs—of four simulated methods versus time. It can be seen that the proposed SPA-based methods outperform the PMBM at those time steps where objects are in close proximity. This can be explained by the fact that, due to its excellent scalability, the proposed SPA-based method can avoid clustering as performed by the PMBM filter implementations. In Fig. 4(c), it is shown that the main reason for the increased OSPA error of PMBM is an increased cardinality error. This is because large clusters that consist of measurements generated by multiple objects are associated to a single object. Thus, to certain other objects, no measurements are assigned, their probability of existence is reduced, and they are declared to be non-existent. The reduced state error of the PMBM methods compared to the proposed SPA method around time step 40 in Fig. 4(b) can be explained as follows. Since in this scenario PMBM methods tend to underestimate the number of objects, the optimum assignment step performed for OSPA calculation tends to find a solution with a lower state error. Table I shows the mean GOSPA error and corresponding individual error contributions as well as runtimes per time step for MATLAB implementations on a single core of an Intel Xeon Gold 5222 CPU. Notably, despite not using gating, measurement clustering, and pruning of association events, the proposed SPA method has a runtime that is comparable with the PMBM filter.

| Method | Total | State | Missed | False | Runtime |

|---|---|---|---|---|---|

| SPA-2-1000 | |||||

| SPA-2-10000 | |||||

| SPA-3-1000 | |||||

| SPA-3-10000 | |||||

| PMBM-FR | |||||

| PMBM-F | |||||

| PMBM-CR | |||||

| PMBM-C |

VI-C Size, Orientation, and Scalability

To investigate the capability of the methods to estimate size and orientation, we considered a scenario similar to that discussed in Section VI-A, changing the prior distribution of object extent to an inverse Wishart distribution with mean . Estimated object sizes are calculated from estimated extent states as the area of the represented ellipses, i.e., as . Similarly, orientation is obtained by restricting the larger of the two eigenvectors of to the upper half plane and calculating its angle. To compute mean size errors and mean orientation errors, we use the optimum assignments of the OSPA [63] metric calculated as discussed in Section VI-A for each time steps and each of the 120 simulation runs. Then, we use these optimum assignments to calculate mean size errors and mean orientation errors. Fig. 5 shows the resulting mean errors of the four simulated methods versus time. It can be seen that the proposed particle-based SPA method can estimate size and orientation more reliably than the PMBM filter when objects are in close proximity

To demonstrate scalability, we furthermore simulated the same scenario discussed in Section VI-A but with the number of objects increased to 20. Fig. 6 shows the mean OSPA error—averaged over 120 simulation runs—of four simulated methods versus time. Also in this larger scenario the performance advantages of the SPA methods over the PMBM methods are comparable to the smaller scenario with 10 objects. Plots for individual error contributions are similar to the ones for the scenario with 10 objects and are thus omitted. The average runtimes per time step for MATLAB implementations on a single core of an Intel Xeon Gold 5222 CPU were measured as 48.1s for SPA-2-10000, 76.0s for SPA-3-10000, 6.7s for PMBM-CR, and 171.4s for PMBM-FR. We emphasize that this simulation is an extreme case, where all 20 objects come into close proximity at the mid-point in time. As previously discussed, additional, well-known techniques can be utilized to limit the growth in complexity for well-spaced objects to linear by decoupling the joint EOT problem into smaller separate ones. The unique benefit of the proposed method is its ability to scale in problems where object proximity precludes trivial decoupling.

VII Real-Data Processing

To validate our method, we present results in an urban autonomous driving scenario. The observations are part of the nuScenes datasets [66] and were collected by a lidar sensor mounted on the roof of an autonomous vehicle. The dataset consists of 850 scenes. Every scene has a duration of s and consists of approximately 400 sets of lidar observations (or “point clouds”) and corresponding ground truth annotations for vehicles and pedestrians. A single lidar measurement is given by the 3-D Cartesian coordinates of a reflecting obstacle in the environment. Annotations are 3-D cubes defined by a position, dimensions, and heading.

We focused on tracking of vehicles in the environment. To extract measurement points related to reflections on vehicles, we used the supervised learning method presented in [67]. In particular, we employed the point clouds and annotations of 700 scenes for the training of the deep neural network (see [67] for details). After training, measurement extraction provides cubes that indicate vehicle locations and corresponding confidence scores on . We only used lidar observations inside vehicle-related detected cubes that have a confidence score larger than as measurements for the EOT methods. Measurements and ground truth annotations are converted to a global reference frame. The ROI covers an area of m2. The four scenes S1–S4888 These four scenes can be identified by their tokens 8b31aa5cac3846a6 a197c507523926f9, 9e79971671284ff482c63a1f658e703d, ee 4979d9ef9e417f9337d09d62072692, and 06b1e705b90641ce83 500b71c0dbf037, respectively (see [66] for details). considered for performance evaluation, have not been used for the training.

We represent the extent state of vehicles by using the 2-D version of the cubical model introduced in Section II-B and [56, Section 2]. The kinematic states of vehicles is modeled by their 2-D position and velocity, as well as their turn rate and their mean number of generated measurements , i.e., . Since the number of measurements that a vehicle generates strongly depends on its distance to the lidar sensor, the mean number of measurements is part of the random vehicle state, i.e., . The mean number of measurements follows the state transition model in [5, Table III] with parameter . The kinematic vehicle state and the extent state follow the state transition model in (4) with and as given in [12]. The parameters of the state transition model are , , and . The survival probability is . In addition, the linear-Gaussian measurement model in (42) with m was used. Extracted lidar observations are voxelized with a resolution of m and their z-coordinate is ignored. We model newly detected vehicles as , with uniformly distributed on the ROI and following an inverse Wishart distribution with mean matrix and degrees of freedom. In addition, we set where is zero-mean Gaussian distributed with covariance matrix and follows a Gamma distribution with mean and variance . The number of SPA iterations is and the number of particles was again either set to or to (denoted as SPA-1000 and SPA-10000, respectively). All the other parameters are set as described in Section VI-B.

Fig. 7 shows the lidar observations, true vehicle positions, true vehicle extents, true vehicle tracks, estimated vehicle positions, estimated vehicle extents, and estimated vehicle tracks for time step of scene S4. It can seen that all five actual vehicles are detected and tracked reliably. Videos of estimation results are available on fmeyer.ucsd.edu.

We use the PMBM filter based on the same system model described in Section VI-A as a reference method. The driving noise standard deviation of the PMBM was set to . Furthermore, the transformation matrix and maneuvering correlation constant (see [5, Table III]) used for extent prediction were set to and respectively. All other model parameters were chosen as for the proposed method. The parameters for measurement clustering, event pruning, and recycling were set as discussed in Section VI-B (denoted as PMBM-C, PMBM-F, PMBM-CR, and PMBM-FR).

As performance metric we use the generalized OSPA (GOSPA) [64] based on the 2-norm with parameters and . Only the kinematic state was used for the evaluation of the GOSPA. The mean GOSPA for the individual methods—averaged over the four considered scenes and all time steps—as well as runtimes per time step on a single core of an Intel Xeon Gold 5222 CPU are summarized in Table II. It can again be seen that the proposed SPA-based method outperforms the PMBM in terms of mean GOSPA and mean individual error contributions. These performance advantages of the proposed SPA-based method are related to its more accurate system model as well as its particle-based implementation. Interestingly, all PMBM variants perform very similarly.

| Method | Total | State | Missed | False | Runtime |

|---|---|---|---|---|---|

| SPA-1000 | |||||

| SPA-10000 | |||||

| PMBM-FR | |||||

| PMBM-F | |||||

| PMBM-CR | |||||

| PMBM-C |

VIII Conclusion

Detection, localization, and tracking of multiple objects is a key task in a variety of applications including autonomous navigation and applied ocean sciences. This paper introduced a scalable method for EOT. The proposed method is based on a factor graph formulation and the SPA. It dynamically introduces states of newly detected objects, and efficiently performs probabilistic data association, allowing for multiple measurements per object. Scalable inference of object tracks and their geometric shape is enabled by modeling association uncertainty by measurement-oriented association variables and newly detected objects by a Poisson birth process. The fully particle-based approach can represent the extent of objects by different geometric shapes. In addition, it yields a computational complexity that only scales quadratically in the number of objects and the number of measurements. This excellent scalability translates to an improved EOT performance compared to existing methods because it makes it possible to (i) generate and maintain a very large number of POs and (ii) avoid clustering of measurements, allowing problems with closely-spaced extended objects to be addressed.

Simulation results in a challenging scenario with intersecting object tracks showed that the proposed method can outperform the recently introduced Poisson multi-Bernoulli (PMBM) filter despite yielding a reduced computational complexity. The application of the proposed method to real measurement data captured by a lidar sensor in an urban autonomous driving scenario also demonstrated performance advantages compared to the PMBM filter. For the extraction of vehicle-related lidar measurements, supervised learning based on a deep neural network was used. This motivates future research on multiobject tracking methods that closely integrate neural networks and iterative message passing. Other promising directions for future research are the development of highly parallelized variants of the proposed method that exploit particle flow [68] and are suitable for real-time implementations on graphical processing units (GPUs).

Acknowledgment

The authors would like to thank Dr. E. Leitinger and W. Zhang for carefully reading the manuscript.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c2ac9e25-d454-4d02-9709-ce2408de753a/x14.png) |

Florian Meyer (S’12–M’15) received the Dipl.-Ing. (M.Sc.) and Ph.D. degrees (with highest honors) in electrical engineering from TU Wien, Vienna, Austria in 2011 and 2015, respectively. He is an Assistant Professor with the University of California San Diego, La Jolla, CA, jointly between the Scripps Institution of Oceanography and the Electrical and Computer Engineering Department. From 2017 to 2019 he was a Postdoctoral Fellow and Associate with Laboratory for Information & Decision Systems at Massachusetts Institute of Technology, Cambridge, MA, and from 2016 to 2017 he was a Research Scientist with NATO Centre for Maritime Research and Experimentation, La Spezia, Italy. Dr. Meyer was a keynote speaker at the IEEE Aerospace Conference in 2020. He served on the technical program committees of several IEEE conferences and as a co-chair of the IEEE Workshop on Advances in Network Localization and Navigation at the IEEE International Conference on Communications in 2018, 2019, and 2020. He is an Associate Editor for the IEEE Transactions on Aerospace and Electronic Systems and the ISIF Journal of Advances in Information Fusion as well as an Erwin Schrödinger Fellow. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c2ac9e25-d454-4d02-9709-ce2408de753a/JasonWilliams.png) |

Jason L. Williams (S’01–M’07–SM’16) received degrees of BE(Electronics)/BInfTech from Queensland University of Technology, MSEE from the United States Air Force Institute of Technology, and PhD from Massachusetts Institute of Technology. He is currently a Senior Research Scientist in Robotic Perception at the Robotics and Autonomous Systems Group of Commonwealth Scientific and Industrial Research Organisation, Brisbane, Australia. His research interests include SLAM, computer vision, multiple object tracking and motion planning. He previously worked in sensor fusion and resource management as a Senior Research Scientist at the Defence Science and Technology Group, Australia, and in electronic warfare as an engineering officer in the Royal Australian Air Force. |

References

- [1] K. Granström, M. Baum, and S. Reuter, “Extended object tracking: Introduction, overview and applications,” J. Adv. Inf. Fusion, vol. 12, no. 2, pp. 139–174, Dec. 2017.

- [2] Y. Bar-Shalom, P. K. Willett, and X. Tian, Tracking and Data Fusion: A Handbook of Algorithms. Storrs, CT: Yaakov Bar-Shalom, 2011.

- [3] R. Mahler, Statistical Multisource-Multitarget Information Fusion. Norwood, MA: Artech House, 2007.

- [4] F. Meyer, T. Kropfreiter, J. L. Williams, R. A. Lau, F. Hlawatsch, P. Braca, and M. Z. Win, “Message passing algorithms for scalable multitarget tracking,” Proc. IEEE, vol. 106, no. 2, pp. 221–259, Feb. 2018.

- [5] K. Granström, M. Fatemi, and L. Svensson, “Poisson multi-Bernoulli mixture conjugate prior for multiple extended target filtering,” IEEE Trans. Aerosp. Electron. Syst., vol. 56, no. 1, pp. 208–225, Feb. 2020.

- [6] M. Schuster, J. Reuter, and G. Wanielik, “Probabilistic data association for tracking extended targets under clutter using random matrices,” in Proc. FUSION-15, Washington DC, Jul. 2015, pp. 961–968.

- [7] K. Granström, C. Lundquist, and U. Orguner, “Extended target tracking using a Gaussian-mixture PHD filter,” IEEE Trans. Aerosp. Electron. Syst., vol. 48, no. 4, pp. 3268–3285, Oct. 2012.

- [8] M. Beard, S. Reuter, K. Granström, B. Vo, B. Vo, and A. Scheel, “Multiple extended target tracking with labeled random finite sets,” IEEE Trans. Signal Process., vol. 64, no. 7, pp. 1638–1653, Apr. 2016.

- [9] Y. Xia, K. Granström, L. Svensson, Ángel F. García-Fernández, and J. L. Williams, “Extended target Poisson multi-Bernoulli mixture trackers based on sets of trajectories,” in Proc. FUSION-19, Ottawa, Canada, Jul. 2019.

- [10] J. W. Koch, “Bayesian approach to extended object and cluster tracking using random matrices,” IEEE Trans. Aerosp. Electron. Syst., vol. 44, no. 3, pp. 1042–1059, Jul. 2008.

- [11] C.-Y. Feldmann, D. Fränken, and J. W. Koch, “Tracking of extended objects and group targets using random matrices,” IEEE Trans. Signal Process., vol. 59, no. 4, pp. 1409–1420, Apr. 2011.

- [12] K. Granström and U. Orguner, “New prediction for extended targets with random matrices,” IEEE Trans. Aerosp. Electron. Syst., vol. 50, no. 2, pp. 1577–1589, 2014.

- [13] S. Yang and M. Baum, “Tracking the orientation and axes lengths of an elliptical extended object,” IEEE Trans. Signal Process., vol. 67, no. 18, pp. 4720–4729, Sep. 2019.

- [14] G. Vivone, K. Granström, P. Braca, and P. Willett, “Multiple sensor measurement updates for the extended target tracking random matrix model,” IEEE Trans. Aerosp. Electron. Syst., vol. 53, no. 5, pp. 2544–2558, 2017.

- [15] J. L. Williams, “Marginal multi-Bernoulli filters: RFS derivation of MHT, JIPDA and association-based MeMBer,” IEEE Trans. Aerosp. Electron. Syst., vol. 51, no. 3, pp. 1664–1687, Jul. 2015.

- [16] D. B. Reid, “An algorithm for tracking multiple targets,” IEEE Trans. Autom. Control, vol. 24, no. 6, pp. 843–854, Dec. 1979.

- [17] T. Kurien, “Issues in the design of practical multitarget tracking algorithms,” in Multitarget-Multisensor Tracking: Advanced Applications, Y. Bar-Shalom, Ed. Norwood, MA: Artech-House, 1990, pp. 43–83.

- [18] S. Coraluppi and C. Carthel, “Distributed tracking in multistatic sonar,” IEEE Trans. Aerosp. Electron. Syst., vol. 41, no. 3, pp. 1138–1147, Jul. 2005.

- [19] B.-N. Vo, S. Singh, and A. Doucet, “Sequential Monte Carlo methods for multitarget filtering with random finite sets,” IEEE Trans. Aerosp. Electron. Syst., vol. 41, no. 4, pp. 1224–1245, Oct. 2005.

- [20] B.-T. Vo, B.-N. Vo, and A. Cantoni, “Analytic implementations of the cardinalized probability hypothesis density filter,” IEEE Trans. Signal Process., vol. 55, no. 7, pp. 3553–3567, Jul. 2007.

- [21] B.-N. Vo, B.-T. Vo, and D. Phung, “Labeled random finite sets and the Bayes multi-target tracking filter,” IEEE Trans. Signal Process., vol. 62, no. 24, pp. 6554–6567, Dec. 2014.

- [22] B. K. Habtemariam, R. Tharmarasa, T. Kirubarajan, D. Grimmett, and C. Wakayama, “Multiple detection probabilistic data association filter for multistatic target tracking,” in Proc. FUSION-11, Jul. 2011.

- [23] L. Hammarstrand, L. Svensson, F. Sandblom, and J. Sorstedt, “Extended object tracking using a radar resolution model,” IEEE Trans. Aerosp. Electron. Syst., vol. 48, no. 3, pp. 2371–2386, Jul. 2012.

- [24] B. Habtemariam, R. Tharmarasa, T. Thayaparan, M. Mallick, and T. Kirubarajan, “A multiple-detection joint probabilistic data association filter,” IEEE J. Sel. Topics Signal Process., vol. 7, no. 3, pp. 461–471, Jun. 2013.

- [25] F. Meyer and M. Z. Win, “Scalable data association for extended object tracking,” IEEE Trans. Signal Inf. Process. Netw., vol. 6, pp. 491–507, May 2020.

- [26] S. P. Coraluppi and C. A. Carthel, “Multiple-hypothesis tracking for targets producing multiple measurements,” IEEE Trans. Aerosp. Electron. Syst., vol. 54, no. 3, pp. 1485–1498, Jun. 2018.

- [27] G. Gennarelli, G. Vivone, P. Braca, F. Soldovieri, and M. G. Amin, “Multiple extended target tracking for through-wall radars,” IEEE Trans. Geosci. Remote Sens., vol. 53, no. 12, pp. 6482–6494, Dec. 2015.

- [28] G. Vivone and P. Braca, “Joint probabilistic data association tracker for extended target tracking applied to X-band marine radar data,” IEEE J. Ocean. Eng., vol. 41, no. 4, pp. 1007–1019, Oct. 2016.

- [29] K. Granström, L. Svensson, S. Reuter, Y. Xia, and M. Fatemi, “Likelihood-based data association for extended object tracking using sampling methods,” IEEE Trans. Intell. Veh., vol. 3, no. 1, pp. 30–45, 2018.

- [30] J. Böhler, T. Baur, S. Wirtensohn, and J. Reuter, “Stochastic partitioning for extended object probability hypothesis density filters,” in Proc. SDF-19, Bonn, Germany, 2019.

- [31] T. Kropfreiter, F. Meyer, and F. Hlawatsch, “A fast labeled multi-Bernoulli filter using belief propagation,” IEEE Trans. Aerosp. Electron. Syst., vol. 56, no. 3, pp. 2478–2488, Jun. 2020.

- [32] D. Koller and N. Friedman, Probabilistic Graphical Models: Principles and Techniques. Cambridge, MA: MIT Press, 2009.

- [33] F. R. Kschischang, B. J. Frey, and H.-A. Loeliger, “Factor graphs and the sum-product algorithm,” IEEE Trans. Inf. Theory, vol. 47, no. 2, pp. 498–519, Feb. 2001.

- [34] J. Yedidia, W. Freemand, and Y. Weiss, “Constructing free-energy approximations and generalized belief propagation algorithms,” IEEE Trans. Inf. Theory, vol. 51, no. 7, pp. 2282–2312, July 2005.

- [35] M. P. C. Fossorier, M. Mihaljevic, and H. Imai, “Reduced complexity iterative decoding of low-density parity check codes based on belief propagation,” IEEE Trans. Commun., vol. 47, no. 5, pp. 673–680, May 1999.

- [36] T. J. Richardson and R. L. Urbanke, “The capacity of low-density parity-check codes under message-passing decoding,” IEEE Trans. Inf. Theory, vol. 47, no. 2, pp. 599–618, Feb. 2001.

- [37] F. Meyer, B. Etzlinger, Z. Liu, F. Hlawatsch, and M. Z. Win, “A scalable algorithm for network localization and synchronization,” IEEE Internet Things J., vol. 5, no. 6, pp. 4714–4727, Dec. 2018.

- [38] J. L. Williams and R. Lau, “Approximate evaluation of marginal association probabilities with belief propagation,” IEEE Trans. Aerosp. Electron. Syst., vol. 50, no. 4, pp. 2942–2959, Oct. 2014.

- [39] T. Kropfreiter, F. Meyer, and F. Hlawatsch, “Sequential Monte Carlo implementation of the track-oriented marginal multi-Bernoulli/Poisson filter,” in Proc. FUSION-16, Jul. 2016, pp. 972–979.

- [40] F. Meyer, P. Braca, P. Willett, and F. Hlawatsch, “A scalable algorithm for tracking an unknown number of targets using multiple sensors,” IEEE Trans. Signal Process., vol. 65, no. 13, pp. 3478–3493, Jul. 2017.

- [41] G. Soldi, F. Meyer, P. Braca, and F. Hlawatsch, “Self-tuning algorithms for multisensor-multitarget tracking using belief propagation,” IEEE Trans. Signal Process., vol. 67, no. 15, pp. 3922–3937, Aug. 2019.

- [42] R. A. Lau and J. L. Williams, “A structured mean field approach for existence-based multiple target tracking,” in Proc. FUSION-16, Heidelberg, Germany, Jul. 2016, pp. 1111–1118.

- [43] W. Zhang and F. Meyer, “Graph-based multiobject tracking with embedded particle flow,” in Proc. IEEE RadarConf-21, Atlanta, GA, USA, May 2021.

- [44] E. Leitinger, F. Meyer, P. Meissner, K. Witrisal, and F. Hlawatsch, “Belief propagation based joint probabilistic data association for multipath-assisted indoor navigation and tracking,” in Proc. ICL-GNSS-16, Barcelona, Spain, Jun. 2016.

- [45] E. Leitinger, F. Meyer, F. Hlawatsch, K. Witrisal, F. Tufvesson, and M. Z. Win, “A belief propagation algorithm for multipath-based SLAM,” IEEE Trans. Wireless Commun., vol. 18, no. 11, pp. 5613–5629, Nov. 2019.

- [46] R. Mendrzik, F. Meyer, G. Bauch, and M. Z. Win, “Enabling situational awareness in millimeter wave massive MIMO systems,” IEEE J. Sel. Topics Signal Process., vol. 13, no. 5, pp. 1196–1211, Sep. 2019.

- [47] X. Li, E. Leitinger, A. Venus, and F. Tufvesson, “Sequential detection and estimation of multipath channel parameters using belief propagation,” 2021.

- [48] F. Meyer and M. Z. Win, “Joint navigation and multitarget tracking in networks,” in Proc. IEEE ICC-18, Kansas City, MO, May 2018.

- [49] F. Meyer, O. Hlinka, H. Wymeersch, E. Riegler, and F. Hlawatsch, “Distributed localization and tracking of mobile networks including noncooperative objects,” IEEE Trans. Signal Inf. Process. Netw., vol. 2, no. 1, pp. 57–71, Mar. 2016.

- [50] F. Meyer, Z. Liu, and M. Z. Win, “Network localization and navigation using measurements with uncertain origin,” in Proc. FUSION-18, Cambridge, UK, Jul. 2018, pp. 2237–2243.

- [51] ——, “Scalable probabilistic data association with extended objects,” in Proc. IEEE ICC-19, Shanghai, China, May 2019.

- [52] Y. Li, P. Wei, Y. Chen, Y. Wei, and H. Zhang, “Message passing based extended objects tracking with measurement rate and extension estimation,” in Proc. IEEE RadarConf-21, Atlanta, GA, USA, May 2021.

- [53] S. Yang, K. Thormann, and M. Baum, “Linear-time joint probabilistic data association for multiple extended object tracking,” in Proc. IEEE SAM-18, Sheffield, UK, Feb. 2018, pp. 6–10.

- [54] S. Yang, L. M. Wolf, and M. Baum, “Marginal association probabilities for multiple extended objects without enumeration of measurement partitions,” in Proc. FUSION-20, Pretoria, South Africa, Jul. 2020.

- [55] F. Meyer and J. L. Williams, “Scalable detection and tracking of extended objects,” in Proc. ICASSP-20, Barcelona, Spain, May 2020, pp. 8916–8920.

- [56] F. Meyer and J. L. Williams, “Scalable detection and tracking of geometric extended objects: Supplementary material,” 2021, arXiv:2103.11279.

- [57] H. V. Poor, An Introduction to Signal Detection and Estimation, 2nd ed. New York: Springer-Verlag, 1994.

- [58] J. L. Williams and R. A. Lau, “Multiple scan data association by convex variational inference,” IEEE Trans. Signal Process., vol. 66, no. 8, pp. 2112–2127, Apr. 2018.

- [59] M. S. Arulampalam, S. Maskell, N. Gordon, and T. Clapp, “A tutorial on particle filters for online nonlinear/non-Gaussian Bayesian tracking,” IEEE Trans. Signal Process., vol. 50, no. 2, pp. 174–188, Feb. 2002.

- [60] A. Doucet, N. de Freitas, and N. Gordon, Sequential Monte Carlo Methods in Practice. Springer, 2001.

- [61] S. J. Julier and J. K. Uhlmann, “Unscented filtering and nonlinear estimation,” Proc. IEEE, vol. 92, no. 3, pp. 401–422, Mar. 2004.

- [62] Y. Bar-Shalom, T. Kirubarajan, and X.-R. Li, Estimation with Applications to Tracking and Navigation. New York, NY, USA: Wiley, 2002.

- [63] D. Schuhmacher, B.-T. Vo, and B.-N. Vo, “A consistent metric for performance evaluation of multi-object filters,” IEEE Trans. Signal Process., vol. 56, no. 8, pp. 3447–3457, Aug. 2008.

- [64] A. S. Rahmathullah, A. F. Garcia-Fernandez, and L. Svensson, “Generalized optimal sub-pattern assignment metric,” in Proc. FUSION-17, Xi’an, China, Jul. 2017.

- [65] J. L. Williams, “Hybrid Poisson and multi-Bernoulli filters,” in Proc. FUSION-12, Singapore, Jul. 2012, pp. 1103–1110.

- [66] The nuScenes Dataset. [Online]. Available: https://www.nuscenes.org/

- [67] B. Zhu, Z. Jiang, X. Zhou, Z. Li, and G. Yu, “Class-balanced grouping and sampling for point cloud 3D object detection,” arXiv:1908.09492, 2019.

- [68] Y. Li and M. Coates, “Particle filtering with invertible particle flow,” IEEE Trans. Signal Process., vol. 65, no. 15, pp. 4102–4116, Aug. 2017.