44email: [email protected]; [email protected]

SAMConvex: Fast Discrete Optimization for CT Registration using Self-supervised Anatomical Embedding and Correlation Pyramid

Abstract

Estimating displacement vector field via a cost volume computed in the feature space has shown great success in image registration, but it suffers excessive computation burdens. Moreover, existing feature descriptors only extract local features incapable of representing the global semantic information, which is especially important for solving large transformations. To address the discussed issues, we propose SAMConvex, a fast coarse-to-fine discrete optimization method for CT registration that includes a decoupled convex optimization procedure to obtain deformation fields based on a self-supervised anatomical embedding (SAM) feature extractor that captures both local and global information. To be specific, SAMConvex extracts per-voxel features and builds 6D correlation volumes based on SAM features, and iteratively updates a flow field by performing lookups on the correlation volumes with a coarse-to-fine scheme. SAMConvex outperforms the state-of-the-art learning-based methods and optimization-based methods over two inter-patient registration datasets (Abdomen CT and HeadNeck CT) and one intra-patient registration dataset (Lung CT). Moreover, as an optimization-based method, SAMConvex only takes s ( with instance optimization) for one paired images.

Keywords:

Medical Image Registration Large Deformation.1 Introduction

Deformable image registration [21], a fundamental medical image analysis task, has traditionally been approached as a continuous optimization [19, 1, 2, 9, 24] problem over the space of dense displacement fields between image pairs. The iterative process always leads to inefficiency. Recent learning-based approaches that use a deep network to predict a displacement field [3, 30, 18, 16, 25, 17, 6], yield much faster runtime and have gained huge attention. However, they often struggle with versatile applicability due to the fact that they require training per registration task. Moreover, gathering enough data for the training is not a trivial task in practice. In addition, both optimization and learning-based methods rely on similarity measures computed over the intensity, which prevents the methods to utilize the anatomy correspondence. Several works [8, 10] use feature descriptors that provide modality and contrast invariant information but they still can only represent local information and do not contain the global semantic information. Thus, they face challenges in settings with large deformations or complex anatomical differences (e.g., inter-patient Abdomen).

To address this issue, we incorporate a Self-supervised Anatomical eMbedding (SAM) [27] into registration. SAM generates a unique semantic embedding for each voxel in CT that describes its anatomical structure, thus, providing semantically coherent information suitable for registration. SAME [15] enhances learning-based registration with SAM embeddings, but it suffers the applicability issue as the other learning-based methods even when the SAM embedding is pre-trained and ready to use out of the box for multiple anatomical regions.

Registration has also been formulated as a discrete optimization problem [23, 5, 26, 7, 20] that employs a dense set of discrete displacements, called cost volume. The main challenge of this category of approach is the massive size of the search space, as millions of voxels exist in a typical 3D CT scan and each voxel in the moving scan can be reasonably paired with thousands of points in the other scan, leading to a high computational burden. To obtain fast registration with discrete optimization, Heinrich et al. [11] prunes the search space by constructing the cost volume only within the neighborhood of each voxel. However, the magnitude range of the deformation it can solve is limited by the size of the neighborhood window, leading to reliance on an accurate pre-alignment.

We propose SAMConvex, a coarse-to-fine discrete optimization method for CT registration. Specifically, SAMConvex presents two main components: (1) a discriminative feature extractor that encodes global and local embeddings for each voxel; (2) a lightweight correlation pyramid that constructs multi-scale 6D cost volume by taking the inner product of SAM embeddings. It enjoys the strengths of state-of-the-art accuracy (Sec. 3.2), good generalization (Sec. 3.2 and Sec. 3.3) and fast runtime (Sec. 3.2).

2 SAMConvex Method

2.1 Problem Formulation

Given a source image and a target image within a spatial domain , our goal is to find the spatial transformation . We aim at minimizing the following energy:

| (1) |

where the transformation model is the displacement vector field (DVF) with being the identity transformation, the is the similarity term measuring the similarity between and warped image , is the diffusion regularizer that advocates the regularity of . weights between the data matching term and the regularization term.

2.2 Decoupling to Convex Optimizations

We conduct the optimization scheme proposed in [4, 22, 29, 11] and we give a brief review here. By introducing an extra term into Eq. 1

| (2) |

the optimization then can be decomposed into two sub-optimization problems111For better illustration, we substitute and with in the two equations in Eq. 3, respectively.

| (3) |

with each can be solved via global optimizations. By alternatively optimizing the two subproblems and progressively reducing during the alternative optimization, we get and obtain the solution of Eq. 1 as . To be noted, the first problem in Eq. 3 can be solved point-wise because the spatial derivatives of is not involved. We can search over the cost volume of each point to obtain the optimal solution of the first problem in Eq. 3. For the second problem, we are inspired by mean-field inference [14] and process using average pooling.

2.3 using Global & Local Semantic Embedding

Presumably, handling complex registration tasks rely on: (1) distinct features that are robust to inter-subject variation, organ deformation, contrast injection, and pathological changes, and (2) global/contextual information that can benefit the registration accuracy in complex deformation. To achieve these goals, we adopt self-supervised anatomical embedding (SAM) [27] based feature descriptor that encodes both global and local embeddings. The global embeddings memorize the 3D contextual information of body parts on a coarse level, while the local embeddings differentiate adjacent structures with similar appearances, as shown on the left of Fig. 1. The former helps the latter to focus on small regions to distinguish with fine-level features.

To be specific, given an image , we utilize a pre-trained SAM model that outputs a global embedding and a local embedding . We resample the global embedding with bilinear interpolation to match the shape of to and concatenate them to get . We normalize and before concatenation and adopt the dot product as the similarity measure in the following part, with a higher value indicating better alignment.

With , we construct as the cost volume in the SAM feature space within a neighborhood of , where represents searching radius. Given two images and , the resulting can then be formulated as

| (4) |

where and are the SAM embedding of and , respectively. is any voxel in the image domain and is the voxel displacement within the neighborhood. has the shape of .

2.4 Coarse-to-fine Optimization Strategy

Since is computed within the neighborhood, the magnitude of deformation is bounded by the size of the neighborhood. Our approach to addressing this issue is based on a surprisingly simple but effective observation: performing registration based on a coarse-to-fine scheme with a small search range at each level instead of a large search range at one resolution benefits to 1) significantly improve the efficiency such as low computation burden and fast running time; 2) enlarge receptive field and improve registration accuracy.

We first build an image pyramid of and . At each resolution, we warp the source image with the composed deformation computed from all the previous levels (starting from the coarsest resolution), transform the warped image and target image to the SAM space, and conduct the decoupling to convex optimizations strategy to obtain the deformation between the warped image and target at the current resolution. With such a coarse-to-fine strategy, we estimate a sequence of displacement fields from a starting identity transformation. The final field is computed via the composition of all the displacement fields [30].

To be noted, the cost volume at each level is computed by taking the inputs of resolution. Adding one coarser level consumes less computation than doubling the size of the neighborhood at the fine resolution but yields the same search range.

2.5 Implementation Detail

We conduct the registration in 3 levels of resolutions. At each level, we solve Eq. 3 via five alternative optimizations with . To further improve the registration performance, we append a SAM-based instance optimization after the coarse-to-fine registration with a learning rate of for iterations. It solves an instance-specific optimization problem [3] in the SAM feature space. The optimization objective consists of similarity (dot product between SAM feature vectors on the highest resolution) and diffusion regularization terms. All the experiments are run on the CPU of Intel Xeon Platinum 8163 with 16 cores of 2.50GHz and the GPU of NVIDIA Tesla V100. Code will be available at https://github.com/Alison-brie/SAMConvex.

3 Experiments

3.1 Datasets and Evaluation Metrics

We split Abdomen and HeadNeck into a training set and test set to accommodate the requirement of the training dataset of comparing learning-based methods. Lung dataset contains 35 CT pairs, which is not sufficient for developing learning-based methods. Hence, it is only used as a testing set for optimization-based methods. All the methods are evaluated on the test set. The SAM is pre-trained on NIH Lymph Nodes dataset [27]. All 3 datasets in this paper are not used for pre-training.

Inter-patient task on Abdomen: The Abdomen CT dataset [12] contains 30 abdominal scans with 20 for training and 10 for testing. Each image has 13 manually labeled anatomical structures: spleen, right kidney, left kidney, gall bladder, esophagus, liver, stomach, aorta, inferior vena cava, portal and splenic vein, pancreas, left adrenal gland, and right adrenal gland. The images are resampled to the same voxel resolution of 2 mm and spatial dimensions of .

Inter-patient task on HeadNeck: The HeadNeck CT dataset [28] contains 72 subjects with 13 organs labeled. The manually labeled anatomical structures include the brainstem, left eye, right eye, left lens, right lens, optic chiasm, left optic nerve, right optic nerve, left parotid, right parotid, left and right temporomandibular joint, and spinal cord. We split the dataset into 52, 10, and 10 for training, validation, and test set. For testing, we construct 90 image pairs for registration, with each image, resampled to an isotropic resolution of 2 mm and cropped to .

Intra-patient task on Lung: The images are acquired as part of the radiotherapy planning process for the treatment of malignancies. We collect a lung 4D CT dataset containing 35 patients, each with inspiratory and expiratory breath-hold image pairs, and take this dataset as an extra test set. Each image has labeled malignancies, and we try to align two phases for motion estimation of malignancies. The images are resampled to a spacing of mm and cropped to . All images are used as testing cases.

Evaluation metrics: We use the average Dice score (DSC) to evaluate the accuracy and compute the standard deviation of the logarithm of the Jacobian determinant (SDlogJ) to evaluate the plausibility of deformation fields, also comparing running time () on the same hardware.

| Method | Abdomen CT | HeadNeck CT | Lung CT | ||||||

| DSC13 | SDlogJ | DSC13 | SDlogJ | DSC1 | SDlogJ | ||||

| Initial | 32.64 | - | - | 33.91 | - | - | 67.06 | - | - |

| NiftyReg | 34.98 | 0.22 | 186.54 s | 57.66 | 0.21 | 168.39 s | 74.59 | 0.02 | 85.50 s |

| Deeds | 46.52 | 0.44 | 45.21 s | 61.32 | 1.07 | 42.05 s | 81.53 | 0.49 | 227.59 s |

| ConvexAdam | 44.44 | 0.74 | 6.06 s | 61.45 | 0.77 | 3.12 s | 79.26 | 1.17 | 2.20 s |

| LapIRN | 46.44 | 0.72 | 0.07 s | 60.16 | 0.58 | 0.03 s | - | - | - |

| SAME | 47.12 | 0.90 | 6.88 s | 61.35 | 1.00 | 3.34 s | - | - | - |

| Ours | 48.30 | 0.86 | 2.12 s | 61.88 | 0.79 | 1.88 s | 80.13 | 0.37 | 1.86 s |

| Ours + IO | 51.17 | 0.86 | 5.14 s | 63.72 | 0.88 | 4.59 s | 81.61 | 0.21 | 4.34 s |

3.2 Registration Results

We compare with five widely-used and top-performing deformable registration methods, including NiftyReg [24], Deeds [9], top-ranked ConvexAdam [20] in Learn2Reg Challenge [12], and SOTA learning-based methods LapIRN [18] and SAME [15]. All results are based on the SAM-affine [15] pre-alignment.

As shown in Table. 1, SAMConvex outperforms the widely-used NiftyReg [24] across the three datasets with an average of Dice score improvement. Compared with the best traditional optimization-based method Deeds [9], SAMConvex performs better or comparatively on the three datasets with approximately times faster runtime. We also see a better performance of SAMConvex over ConvexAdam [20]. To be noted, the performance gap between SAMConvex and ConvexAdam is greater on Abdomen CT than the other two datasets. This may be because the deformation is more complex in Abdomen and the anatomical differences between inter-subjects make the registration more challenging. With a coarse-to-fine strategy, SAMConvex can better bear these issues. Although LapIRN [18] has the fastest inference time, it has slightly inferior registration accuracy as compared to SAMConvex. Moreover, it needs training when being applied to a new dataset. Equipped with SAM, SAME achieves the overall 2nd best performance in two inter-patient registration tasks but with a notably higher folding rate overall. Moreover, it also requires individual training for a new dataset and struggles with the intra-patient lung registration task (small dataset and no available training data). In summary, our SAMConvex achieves the overall best performance as compared to other methods with a fast inference time and comparable deformation field smoothness.

To better understand the performance of different deformable registration methods, we display organ-specific results of the inter-patient registration task of abdomen CT in Fig. 2 where large deformations and complex anatomical differences exist. As shown, SAMConvex is consistently better than the second-best and third-best registration methods on most examined abdominal organs, in 11 out of 13 organs.

3.3 Ablation Study on SAMConvex

Loss landscape of SAM

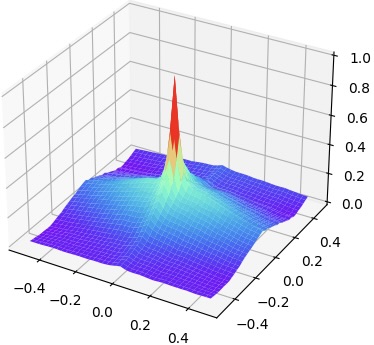

We explore the loss landscapes of SAM-global, SAM-local, SAM (SAM-global and SAM-local), and MIND via varying the transformation parameters. We conduct experiments on the abdomen dataset 222Refer to Appendix 0.A for more experiment results.. Fig. 3 shows the comparison of landscapes when we vary the rotation along two axes. The loss landscape falls to flat quickly when the rotation is greater than degrees and degrees for SAM-local and MIND, respectively. The flattened area indicates where the similarity measure loses the capability to guide the registration because multiple transformation parameters yield the same similarity value. Compared with SAM-local and MIND, SAM-global does not have the flattened area within , meaning it can give correct measure over a larger capture range. However, it does not have the fast converging area shown as a spike for the loss landscapes of MIND and SAM-local. When combining SAM-global and SAM-local together, SAM shows a greater capture range meanwhile fast convergence when the transformation is small.

|

|

|

|

|

|

|

|

| (a) MIND | (b) SAM global | (c) SAM local | (d) SAM |

Robustness to pre-alignment.

We study the robustness of SAMConvex over different affine pre-alignment. Elastix-Affine [13] and SAM-Affine are used as the pre-alignment and results are notated as Initiale and Initiala in Tab. 3.3, respectively. Compared to ConvexAdam, our method SAMConvex is less affected by the performance of the Affine pre-alignment. This is in line with our expectations. The global information contained in SAM provides a greater capture range than features that contain local information only. Thus, SAMConvex can register images with larger transformation, leading to less reliance on the performance of the pre-alignment.

Ablation on coarse-to-fine.

We study how the number of coarse-to-fine layers and how the size of the neighborhood window affect the registration result on the Abdomen dataset. From Tab. 3.3, we can conclude that performing registration with a small search range with a coarse-to-fine scheme instead of a cost volume with a large search range can help to improve registration accuracy and computation efficiency.

| Method | DSC13 | |

|---|---|---|

| Initiale | Initiala | |

| Initial | 25.88 | 32.64 |

| ConvexAdam | 34.42 | 44.44 |

| SAMConvex | 45.38 | 48.30 |

| Method | DSC13 | SDlogJ | |

|---|---|---|---|

| Pym1 | 40.51 | 0.59 | 1.69 s |

| Pym1 | 44.22 | 1.62 | 7.84 s |

| Pym2 | 47.80 | 0.76 | 2.03 s |

| Pym3 | 48.30 | 0.86 | 2.12 s |

Discussion about the differences with ConvexAdam [20].

Apart from the SAM extraction module, first, we introduce a cost volume pyramid to the convex optimization framework to reduce the intensive computation burdens. To be specific, with the coarse-to-fine strategy, one can sparsely search on a coarser level with a smaller search radius in each iteration, reaching the same search range as the complete search with less computational complexity. Second, we explicitly validate the robustness of the SAM feature against geometrical transformations and integrate the SAM feature into an instance-specific optimization pipeline. Our SAMConvex is less sensitive to local minimal, achieving superior performance with comparable running time. Ablation study on pyramid designs (Tab. 3.3) and leading accuracy on large deformation registration tasks (Tab. 1) further support our claims.

4 Conclusion

We present SAMConvex, a coarse-to-fine discrete optimization method for CT registration. It extracts per-voxel semantic global and local features and builds a series of lightweight 6D correlation volumes, and iteratively updates a flow field by performing lookups on the correlation volumes. The performance on two inter-patient and one intra-patient registration datasets demonstrates state-of-the-art accuracy, good generalization, and high computation efficiency of SAMConvex.

References

- [1] Ashburner, J.: A fast diffeomorphic image registration algorithm. NeuroImage 38(1), 95–113 (2007)

- [2] Avants, B.B., Epstein, C.L., Grossman, M., Gee, J.C.: Symmetric diffeomorphic image registration with cross-correlation: evaluating automated labeling of elderly and neurodegenerative brain. Medical image analysis 12(1), 26–41 (2008)

- [3] Balakrishnan, G., Zhao, A., Sabuncu, M.R., Guttag, J.V., Dalca, A.V.: Voxelmorph: A learning framework for deformable medical image registration. IEEE Transactions on Medical Imaging 38(8), 1788–1800 (2019)

- [4] Chambolle, A.: An algorithm for total variation minimization and applications. Journal of Mathematical imaging and vision 20, 89–97 (2004)

- [5] Chen, Q., Koltun, V.: Full flow: Optical flow estimation by global optimization over regular grids. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 4706–4714 (2016)

- [6] Fan, X., Li, Z., Li, Z., Wang, X., Liu, R., Luo, Z., Huang, H.: Automated learning for deformable medical image registration by jointly optimizing network architectures and objective functions. arXiv p. 2203.06810 (2022)

- [7] Heinrich, M.P.: Closing the gap between deep and conventional image registration using probabilistic dense displacement networks. In: International Conference on Medical Image Computing and Computer-Assisted Intervention (2019)

- [8] Heinrich, M.P., Jenkinson, M., Bhushan, M., Matin, T.N., Gleeson, F., Brady, M., Schnabel, J.A.: MIND: modality independent neighbourhood descriptor for multi-modal deformable registration. Medical Image Analysis 16(7), 1423–1435 (2012)

- [9] Heinrich, M.P., Jenkinson, M., Brady, M., Schnabel, J.A.: Globally optimal deformable registration on a minimum spanning tree using dense displacement sampling. In: Medical Image Computing and Computer-Assisted Intervention. vol. 7512, pp. 115–122. Springer (2012)

- [10] Heinrich, M.P., Jenkinson, M., Papiez, B.W., Brady, M., Schnabel, J.A.: Towards realtime multimodal fusion for image-guided interventions using self-similarities. In: Medical Image Computing and Computer-Assisted Intervention. vol. 8149, pp. 187–194 (2013)

- [11] Heinrich, M.P., Papież, B.W., Schnabel, J.A., Handels, H.: Non-parametric discrete registration with convex optimisation. In: Biomedical Image Registration: 6th International Workshop, WBIR 2014, London, UK, July 7-8, 2014. Proceedings 6. pp. 51–61. Springer (2014)

- [12] Hering, A., Hansen, L., Mok, T.C., Chung, A.C., Siebert, H., Häger, S., Lange, A., Kuckertz, S., Heldmann, S., Shao, W., et al.: Learn2reg: comprehensive multi-task medical image registration challenge, dataset and evaluation in the era of deep learning. IEEE Transactions on Medical Imaging (2022)

- [13] Klein, S., Staring, M., Murphy, K., Viergever, M.A., Pluim, J.P.W.: elastix: A toolbox for intensity-based medical image registration. IEEE Transactions on Medical Imaging 29(1), 196–205 (2010)

- [14] Krähenbühl, P., Koltun, V.: Efficient inference in fully connected crfs with gaussian edge potentials. In: Advances in Neural Information Processing Systems. pp. 109–117 (2011)

- [15] Liu, F., Yan, K., Harrison, A.P., Guo, D., Lu, L., Yuille, A.L., Huang, L., Xie, G., Xiao, J., Ye, X., Jin, D.: SAME: deformable image registration based on self-supervised anatomical embeddings. In: Medical Image Computing and Computer Assisted Intervention. vol. 12904, pp. 87–97. Springer (2021)

- [16] Liu, R., Li, Z., Fan, X., Zhao, C., Huang, H., Luo, Z.: Learning deformable image registration from optimization: Perspective, modules, bilevel training and beyond. IEEE Transactions on Pattern Analysis Machine Intelligence 44(11), 7688–7704 (2022)

- [17] Liu, R., Li, Z., Zhang, Y., Fan, X., Luo, Z.: Bi-level probabilistic feature learning for deformable image registration. In: Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence. pp. 723–730 (2020)

- [18] Mok, T.C.W., Chung, A.C.S.: Large deformation diffeomorphic image registration with laplacian pyramid networks. In: Medical Image Computing and Computer Assisted Intervention. vol. 12263, pp. 211–221. Springer (2020)

- [19] Shen, D., Davatzikos, C.: Hammer: hierarchical attribute matching mechanism for elastic registration. IEEE transactions on medical imaging 21(11), 1421–1439 (2002)

- [20] Siebert, H., Hansen, L., Heinrich, M.P.: Fast 3d registration with accurate optimisation and little learning for learn2reg 2021. In: Biomedical Image Registration, Domain Generalisation and Out-of-Distribution Analysis - MICCAI 2021 Challenges: MIDOG 2021, MOOD 2021, and Learn2Reg 2021. vol. 13166, pp. 174–179 (2021)

- [21] Sotiras, A., Davatzikos, C., Paragios, N.: Deformable medical image registration: A survey. IEEE Transactions on Medical Imaging 32(7), 1153–1190 (2013)

- [22] Steinbrücker, F., Pock, T., Cremers, D.: Large displacement optical flow computation withoutwarping. In: 2009 IEEE 12th International Conference on Computer Vision. pp. 1609–1614. IEEE (2009)

- [23] Steinbrücker, F., Pock, T., Cremers, D.: Large displacement optical flow computation withoutwarping. In: 2009 IEEE 12th International Conference on Computer Vision. pp. 1609–1614 (2009). https://doi.org/10.1109/ICCV.2009.5459364

- [24] Sun, W., Niessen, W.J., Klein, S.: Free-form deformation using lower-order b-spline for nonrigid image registration. In: Medical Image Computing and Computer Assisted Intervention. pp. 194–201 (2014)

- [25] Tian, L., Greer, H., Vialard, F.X., Kwitt, R., Estépar, R.S.J., Niethammer, M.: Gradicon: Approximate diffeomorphisms via gradient inverse consistency. arXiv preprint arXiv:2206.05897 (2022)

- [26] Xu, J., Ranftl, R., Koltun, V.: Accurate optical flow via direct cost volume processing. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition. pp. 5807–5815 (2017)

- [27] Yan, K., Cai, J., Jin, D., Miao, S., Guo, D., Harrison, A.P., Tang, Y., Xiao, J., Lu, J., Lu, L.: Sam: Self-supervised learning of pixel-wise anatomical embeddings in radiological images. IEEE Transactions on Medical Imaging (2022)

- [28] Ye, X., Guo, D., Ge, J., Yan, S., Xin, Y., Song, Y., Yan, Y., Huang, B.s., Hung, T.M., Zhu, Z., et al.: Comprehensive and clinically accurate head and neck cancer organs-at-risk delineation on a multi-institutional study. Nature Communications 13(1), 6137 (2022)

- [29] Zach, C., Pock, T., Bischof, H.: A duality based approach for realtime tv-l 1 optical flow. In: Pattern Recognition: 29th DAGM Symposium, Heidelberg, Germany, September 12-14, 2007. Proceedings 29. pp. 214–223. Springer (2007)

- [30] Zhao, S., Lau, T., Luo, J., Eric, I., Chang, C., Xu, Y.: Unsupervised 3d end-to-end medical image registration with volume tweening network. IEEE journal of biomedical and health informatics 24(5), 1394–1404 (2019)

Appendix 0.A Supplementary Material of SAMConvex

|

|

|

|

|

|

|

|

| (a) MIND | (b) SAM global | (c) SAM local | (d) SAM |

|

|

|

|

| Target | Source | Deeds | SAMConvex |