Salient Image Matting

Abstract

In this paper, we propose an image matting framework called Salient Image Matting to estimate the per-pixel opacity value of the most salient foreground in an image. To deal with the large amount of semantic diversity in images, a trimap is conventionally required as it provides important guidance about object semantics to the matting process. However, creating a good trimap is often expensive and time-consuming. The SIM framework simultaneously deals with the challenge of learning on a wide range of semantics and salient object types in a fully automatic and end to end manner. Specifically, our framework is able to produce accurate alpha mattes for a wide range of foreground objects and cases where the foreground class, such as human, appears in a very different context than the train data directly from an RGB input. This is done by employing a salient object detection model to produce a trimap of the most salient object in the image in order to guide the matting model about higher level object semantics. Our framework leverages large amounts of coarse annotations coupled with a heuristic trimap generation scheme to train the trimap prediction network so it can produce trimaps for arbitrary foregrounds. Moreover, we introduce a multi-scale fusion architecture for the task of matting to better capture finer, low level opacity semantics. With high level guidance provided by the trimap network, our framework requires only a fraction of expensive matting data as compared to other automatic methods while being able to produce alpha mattes for a diverse range of inputs. We demonstrate our framework on a range of diverse images and experimental results show our framework compares favorably against state of art matting methods without the need for a trimap.

Index Terms:

Natural Image Matting1 Introduction

Image matting refers to the problem of accurately estimating the foreground object opacity in images and video sequences. It serves as a prerequisite for a broad set of applications, such as film production, digital image editing and live streaming as it allows for realistic compositions of the foreground on novel backgrounds. Matting also plays an important role in making creative compositions of human and product images on a variety of backgrounds for creative marketing and design campaigns. Such images have diverse foregrounds and must meet high quality standards as they are usually used for commercial purposes. To easily process such images, a fully automatic matting system is desired.

Deep learning has shown tremendous advancements in a number of classical computer vision areas. Xu et al. [1] were the first to use deep learning to solve the matting problem. Their solution required a user generated trimap and the lower level semantics required to predict opacity was learned via a deep neural network. Several methods [2, 3, 4] have been proposed hence which make use of a user trimap . A trimap contains the values of 0,255 and 128 which represent the absolute background, absolute foreground and the uncertain region in which the opacity must be predicted, respecitively. Recently, a promising line of work towards the aim of automatic matting has seen methods [5, 6, 7, 8] been proposed that go directly going from an RGB input to alpha matte. These methods attempt to solve the under constraint matting equation:

| (1) |

where I, , F and B stand for an image, the alpha map, the foreground image and background image respectively, without the help of a trimap. Without the trimap the network must estimate alpha across the whole image rather than only the unknown region which is a more challenging task. This task is more challenging as it requires knowledge of low level and higher level semantics. In non-automatic methods knowledge of high level semantics is carried by the trimap in absolute background and foreground regions.

Capturing the high level semantic features in an image consistently requires a model to be trained on a large quantity of data due to the large variations in image content. Also, in real-world use cases the foreground object can appear in a very different setting from the training data, for example a fashion model may appear with different accessories or in different poses. The foreground object may also not be pre-specified from before. In e-commerce for example, new products are constantly added to the catalogue.

Previous automatic methods largely rely on expensive alpha annotations to learn such variations. Such annotations require skilled data annotators and are extremely expensive and time consuming to produce. Most current automatic methods either focus solely on humans or have trouble dealing with unseen object classes as well. Checking if an image is appropriate creates an additional layer of human involvement to either reject the image or create a trimap for it. Learning low level features on the other hand is more sample efficient can be further promoted by a better training set-up.

Motivated by these challenges, we propose a framework that can leverage cheap low quality annotations to learn robust semantic features and a fraction of high quality annotations used by other methods for learning of low-level features. Our simple yet powerful framework called SIM(Salient Image Matting) uses a novel saliency trimap network, inspired from salient object detection, that is able to produce trimaps of the most salient object in the image. The Salient Trimap Network(STN) is trained on trimaps generated from coarse annotations and a simple trimap generation scheme. This training allows the trimap network to accurately produce trimaps of a large variety of foregrounds and be robust to large semantic variations in natural images. The output of the STN is then fed into a matting network for refinement of lower level semantics. By decoupling the learning of these features we are able to provide guidance to the matting network of semantic information without a user generated trimap for arbitrary foreground objects. High quality data is used to finetune these models in an end-end fashion using the framework provided by Chen et al [6] . Further, for the task of image matting we propose a novel architecture with superior multi-scale feature representation than the common encoder-decoder architectures used for matting to learn the low level feature more efficiently.

Our framework can be viewed as an extension of existing matting models allowing them to handle large structural diversity and arbitrary foreground objects without user inputs. By using cheap coarse annotations we are able to achieve this aim in a very cost effective manner. We empirically evaluate the effectiveness of our method and achieve comparable results with the state-of-the-art interactive matting methods [1, 9, 4, 2] and outperform current automatic methods [5] . We also provide many examples of diverse images from the Internet to demonstrate our model’s performance on real world use cases.

We list our contributions as follows:

-

•

To the best of our knowledge, we are the first to combine the tasks of object saliency detection with image matting to create an end to end framework which can produce high fidelity alpha mattes of arbitrary foreground objects in an image. This framework allows matting models to be used in more diverse settings that previously suggested .

-

•

We propose a novel Salient Trimap Network which can generate trimaps for arbitrary foreground objects which can then be passed into a matting model for further refinement. We introduce a simple trimap generating scheme to effectively leverage coarsely annotated data for its pre-training.

-

•

We introduce a novel architecture based on better multi-scale feature representation and fusion for the task of image matting that achieves superior performance to the common encoder-decoder architectures used for matting.

2 Related Work

Automatic Trimap Generation: There have been a number of techniques that attempt to generate accurate trimaps for matting. [10, 11, 12] propose automatic trimap generation schemes that depends on depth information captured using special cameras. Hsieh et al. [13] generated automatic trimaps from an input image along with its segmentation mask. Singh & Jalal [14] propose a method based on edge detection and thresholding to generate fine trimaps. Gupta & Raman [15] use super pixels and three saliency detection techniques to find the object of interest and then use a uniform erosion dilation scheme. Henry et al. [16] use saliency detection to identify the foreground of an image, erode the boundaries and use lazy snapping and fuzzy c- means to identify the unknown pixels. [6] [17] used deep neural networks to generate trimaps.

Image Matting: Conventional image matting approaches include sampling based and affinity based methods. Sampling based approaches [18, 19, 20, 21, 22, 23] use color information of sampled pixels to estimate the alpha values of the unknown pixels in an image. Such methods build foreground and background color models to exploit natural image statistics. Affinity-based approaches [9], [24, 25, 26, 27] on the other hand propagates the alpha values of the known foreground and background regions to the unknown region. The predicted alpha map is determined by the affinity scores.

Recently deep learning has shown impressive results for matting. These methods adapt image segmentation encoder-decoder architectures to predict the alpha mattes from an RGB image input and an extra channel containing the user defined trimap. IndexNet Matting [3] employs learnable index pooling and un-pooling for the encoder and decoder branch respectively. Context Aware Matting [4] uses dual decoders to predict the alpha and foreground map. GCA Matting [2] uses deep learning to exploit the user generated trimap by using a trimap guided attention mechanism. [28] tries to make matting work for a higher diversity of scenarios by taking in a background image as additional support for the matting network. [29] uses a discriminator to make the alpha matte for realistic.

Automatic Matting Methods that only require a single RGB image input to generate a alpha matte provide great convenience in practical applications. Automatic soft segmentation was introduced in [30]. [31, 32] used segmentation masks as guidance for matting. SHM [6] introduced a novel fusion method to fuse their trimap and matting prediction to make an end to end trainable network. HAttMatting [7] tries to use attention mechanism in one network to train separate low and high level processing branches. SHM [6] and HAttMattin [7] introduced a fusion layer and hierarchical attention to carefully fuse low and high level feature. SHM also predict intermediate trimap while HAttMatting uses attention to highlight low level features in a different branch. LFM [5] uses a dual decoder architecture to predict foreground and background and fuse via weighted fusion. [33] makes use of coarse annotations to learn strong human semantics which are then supplied to their matting model after processing by a quality unification network. It does not produce a trimap but a coarse mask and a QN network for high and low quality data fusion. [34] used instance segmentation to identify objects in the image and then applied erosion dilation before matting. Their network was not end to end.

Multi-Scale Feature Representation Deep image matting architectures have conventionally been encoder- decoder based . Recent advancements in multi-scale feature representation and fusion are especially relevant for dense predication tasks like image matting. Unet [35] and FPN [36] were the first to introduce skip connection from an encoder to a decoder for fusion of low and high level features. PANet [37] added an extra bottom-up path aggregation pathway on top of FPN for further reconsideration and refinement of low level features. M2det [38] tries to fuse multi-scale feature using a U-shape module. EfficientDet [39] uses a repeating bi-pyramidal block with weighted attention for better muti-scale feature fusion and achieves high accuracy at a low compute budget. HRNet [40] emphases the importance of high resolution feature processing by maintaining several parallel processing streams. [41] provides for a nice survey of this area.

3 Method

3.1 Overview

In order to capture high level and low level features separately we utilise two sub-networks in our SIM framework, a Salient Trimap Network (STN) and a matting network. This disentanglement allows us to use large amount of coarsely annotated data for training semantic features in the STN. The details on trimap generation from coarse data is described in section 3.2. The STN generates a three-channel output representing the background, unknown region and foreground respectively. Details about the STN are described in section 3.3. The matting network then takes the intrinsic trimap from the STN along with the original input and predicts a one channel alpha matte image. The outputs of the two sub-networks are then fused following the method of Chen et al. to produce the final alpha matte. Our SIM workflow can be seen inFigure 3 . We also introduce a multi-scale layer, DensePN, that acts on a feature pyramid from an encoder. More details about our matting architecture are described in section 3.4. In the following subsections, we describe these in details.

3.2 Adaptive Trimap Generation Scheme

In order to train our STN we require trimap ground truths, however these are expensive to produce. Instead, we collect coarsely annotated segmentation masks and then construct a scheme to best generate trimaps from such coarse masks. Coarsely annotated data can be collected from a variety of sources including PNGs or DeepLabV3+ [42] outputs of internet images. The choice of content in the coarse mask is important as it influences semantic knowledge of the STN, thus we source 20,856 images of humans and common objects, similar to those in the DUTS dataset.

As our collection base is large, there is large variation in the size of images and the size of the foreground object. The colors of the foreground and background regions also resemble each other closely at times. As a result, common rule-based trimap generation schemes such as erosion-dilation and ones based on color information often results in inaccurate uncertainty regions. These methods also do not pay attention to common traits in coarse annotations. As show inFigure 4 , we observe that coarse annotation around hair regions often under or over estimate foreground areas. For furry objects such as animals or soft toys we notice a similar pattern but less pronounced.

We develop a simple but robust trimap generation scheme that takes into account the size of the object and object features such as hair and fur to generate trimaps from such coarse masks. We do so by classifying the boundary pixels of the coarse mask into three categories: hair, fur and solid and then dilating each separately. The classification is done as follows:

-

•

For hair pixels, we apply a state of art human parsing network [43] on the image, to get mask of hair and body regions. The mask is then converted to only 2 classes - hair and non-hair. Each edge pixel in the segmentation mask is associated to the closest class in the parsed mask. As a result, edge pixels closer to the hair class of the parsed mask are classified as hair pixels. The remaining boundary pixels are marked as regular pixels.

-

•

In images with animals or stuffed toys, all the boundary pixels are marked as fur pixels.

-

•

If a pixel is neither detected as hair or fur then it is marked as a solid pixel.

To determine the rate of dilation of each pixel type in an image we define a metric over the coarse image mask as a measure of the size of the salient object. The hair, fur and solid pixels are dilated by 3.5%, 2.5%, and 1.5% of respectively. In our paper, we calculate as the maximum value of the object’s distance map. Figure 4 shows our proposed adaptive trimap generation scheme as compared to the conventional erosion-dilation based method. Figure 4 e,f also shows examples of internet PNG images along with their masks. While the opacity values in such images is not precise and often uncalibrated, accurate trimaps can yet be generated from these masks using standard erosion and dilation methods.

3.3 Salient Trimap Network

Images of interest for alpha matting usually contain large semantic diversity. We cannot expect to learn these semantic features from matting data as it is expensive and usually limited. Instead of relying on an external input, we propose a Salient Trimap Network (STN) that predicts a trimap of the most salient foreground region. The STN’s output is a 3 channel classification output that is a probabilistic estimate of the absolute background, unknown region and absolute foreground regions. The matting network need only to focus on learning low level details from high quality data. The STN can be based on any saliency object detection architecture; we choose to use an architecture based on U2Net [44] due to its ability to capture accurate semantics efficiently. The nested U-structure of U2Net with the residual U-blocks enables the network to obtain multi-scale features without significantly degrading the feature map resolution, which helps STN to better classify the semantics among foreground, background and unknown region.

3.4 Matting Network

Network Design Encoder-decoder architectures only have one bottom up pathway which limits information flow from rich low level features and deep semantic features. High resolution representations and multi-scale feature fusion are important for matting as they promote accurate localization and provide low level features with better context for opacity prediction. We crate a repeatable pyramid layer, called DensePN, which has parallel multi-resolution streams that are enriched with the other multi-resolution features. As shows in Figure 5, each stream is a DenseBlock [45] followed by fusion layer which brings all streams to the same resolution and performs a 1x1 convolution. The repeated convolution and fusion block allows for rich multi-scale feature enrichment at each resolution level. Finally, all stream are merged at the final prediction head to predict the alpha matte. We use ResNet34 [46] as our encoder. More deals of architecture can be found in supplementary materials.

Fusion The matting network produces an alpha matte with refined values only in the uncertain region suggested by the intrinsic trimap. As the matting network is trained only to refine low level details, it does not provide accurate values in the absolute foreground and background regions where semantic knowledge is necessary. The outputs of the STN and matting model are fused using the fusion technique proposed by Chen et al. Specifically if , and denote the foreground, background and unknown region probability maps predicted by the STN and the output from the matting network then

This fusion allows for coarse semantics of the STN and fine details of the matting network to be combined effectively. Note that fusion is only required in the joint end-end training phase.

3.5 Loss Functions

STN Loss The STN is trained for 3 way classification of the background, unknown region and foreground using the standard cross-entropy loss over each pixel.

Matting Loss For the matting network pre-training, following [1] we apply a combination of alpha prediction loss and composition loss. We also apply Laplacian Loss, proposed in [47]to further improve the performance of our network:

| (3) |

where is the ’th level of the laplacian pyramid of the alpha map. Our overall matting loss is defined as:

Joint Loss Theoretically, we could use the matting loss computed over the whole image for joint training as well, however we observed that STN forgets the semantic knowledge it learned from the coarse trimaps. To prevent this, we simultaneously pass in a batch of our trimap data along with the high quality matting data and apply a constraint over only the foreground regions of the coarse annotation. The joint loss is thus

where FS is the groundtruth foreground map and is the indicator function. This soft constraint allows the joint network to optimise for delicate low and high level feature fusion between two models and also prevents the STN from forgetting its semantically rich features.

3.6 Implementation Details

SIM is trained in three phases — STN pre-training, Matting Network pre-training and end-to-end training.

3.6.1 STN Pre-training

The STN is pre-trained on trimaps generated from coarse segmentation masks generated by our proposed trimap generation scheme. In total, we have 20,856 coarse segmentation masks of which 8,919 are from the DUTS dataset [48]. These images include humans and animals in a variety of poses and many foreground objects such as those in DUTS dataset for the network to learn robust saliency detection. We also include trimaps generated by convention erosion-dilation for Adobe DIM data’s [1] alpha annotations so the network can jointly optimize for both sets during this stage.

Following [44] , we apply deep supervision over all the six side outputs of the U2Net with equal weight in this phase. Pre-trained weights of the U2Net are used to initialize the network. The inputs are resized to 320x320 followed by random cropping of 288x288. Learning rate warmup and cosine annealing are used to train the network from a max learning rate of 8e-5 to 5e-6 with a batch size of 14.

3.6.2 Matting Network Pre-training

The matting network is pre-trained with one hot encoded trimaps generated from standard erosion and dilation of the ground-truth alphas. Large kernels are randomly applied to make the matting network resistant to noisy trimaps. Following standard practice, random crops of 320x320,480x480 and 640x640 are taken centered around unknown regions and resized to 320x320. is only computed over the trimap region. All weights in are set to 1. Learning rate warmup and cosine annealing are used to train the network from a max learning rate of 1e-4 to 1e-5 with a batch size of 8.

Data augmentation Data augmentation is important for matting due to the limited number of unique high quality alphas. Flipping and color-jitter are applied randomly. Additionally, synthetic images composed between low quality COCO [49] images and high quality matting foreground suffer from discrepancies in texture, noise, color quality and resolution in the foreground and background regions. The matting network can exploit these discrepancies and this can hinder its generalisability to real world images. To combat the domain gap of training on synthetic composed images, CAM [4] used re-JPEGing and Gaussian blur and [28] added gamma correction and random noise to background images before compositing. To circumvent the disparity in quality between foreground and background regions we create a dataset of HD backgrounds with non-salient foreground objects and use it for composition during training. Additionally, we also use Places365 Dataset [50] to increase the texture and resolution diversity of the backgrounds.

Additionally, for wild images the STN may classify certain thin structures within an object entirely into the unknown region like purse straps and chair legs. To better deal with such cases we add segmentation masks of solid object such as jewellery, purses and chairs obtained from internet images to the matting training data so the matting network learns to segment out such structures.

3.6.3 End to End Training

For the joint training only high definition solid DIM images are used. The STN and Matting network are initialized with the best models in the pre-training stages. The output of the STN is resized to the original image size, to keep the matting net focused on low level features we continue the pre-training strategy for the matting network i.e random crops of 320x320,480x480 and 640x640 are taken and resized to 320x320, except the crop is now taken over the probabilistic trimap output from STN. Learning rate of 1e-5 and batch size of 4 is used to fit within the GPU memory. The batch-norm parameters of both networks are frozen in this stage due to the small batch size.

4 Experiments

4.1 Datasets

For training of our matting architecture in the pre-training and end to end training phase, we use 227 training images of solid objects from the DIM dataset composed on backgrounds taken from COCO, Places356 and our HD background dataset equally to form a total training set of around 30,645 images. Results of our architecture with a trimap input are shown in Table I .

We compare our method against state of art trimap dependent methods on 1000 synthetic composed images made from compositing 50 unique foregrounds on 20 images from our HD dataset. Our test set contains 23 solid foreground images from the DIM dataset and 27 diverse high quality alpha mattes to evaluate the robustness of our framework. Our HD dataset does not contain any salient objects in the foreground as this may lead to ambiguity in detecting the most salient object by the STN.

Our SIM model is trained and tested on a subset of DIM dataset excluding transparent and non-salient objects such as tree branches and wires. The 227 train images are composed on backgrounds from COCO, Places356 and our HD dataset not containing salient objects in their foreground. This is an important measure to prevent foreground ambiguity for the Salient Trimap Network.

4.2 Results

We use the four commonly used metrics to evaluate the effectiveness of our proposed framework: Sum of Absolute Difference (SAD), Mean Square Error(MSE), Gradient Error and Connectivity Error as proposed in [51].

4.2.1 Trimap Based Matting Models

In the first phase of evaluation, we compare the effectiveness of our matting architecture using ground truth trimaps against six state of the art trimap based matting models. We compare our matting model to a few conventional and state-of-art deep learning methods.Table I shows the results of this comparison. While KNN [9], CFM [27], DIM [1], GCA [2] and IndexNet [3] predict only the alpha matte, Context Aware matting [4] simultaneously predicts the alpha matte as well as the foreground. In all cases except for DIM, we use the official public code repository. Since DIM does not have a publicly available model, we use a model trained by the authors of IndexNet as the accuracy closely matches what was reported in the paper. Our network obtains superior performance as compared to other methods due to the ability of multi-scale feature fusion blocks to perceive fine-grained alpha mattes from adaptive semantics.

| Model | SAD | MSE (x10-2) | Gradient Error | Connectivity Error |

|---|---|---|---|---|

| KNN | 15.9 | 3.4 | 12.4 | 17.8 |

| CFM | 12.3 | 2.5 | 9.5 | 14.7 |

| DIM | 8.9 | 1.7 | 7.1 | 9.9 |

| IndexNet | 7.8 | 1.1 | 6.3 | 7.1 |

| GCA | 9.4 | 2.3 | 7.2 | 13.5 |

| CAM | 7.9 | 1.2 | 6.5 | 8.5 |

| Res34-DensePN(Ours) | 7.7 | 1.1 | 6.2 | 7.9 |

-

•

All metrics computed are computed only over the transition region following the methods proposed in [1]

4.2.2 End to End Models

Of the few automatic methods, SHM and [33] are designed exclusively for human images. HAttMatting [7] and LFM are the algorithms that most closely match our use case.

Since the training code of only LFM was available for us, we compare our end to end model on our test set. Table II compares our STN+DensePN without and with(STN) end to end finetuning against LFM.

| Model | SAD | MSE (x10-2) | Gradient Error | Connectivity Error |

|---|---|---|---|---|

| LFM | 69.9 | 3.4 | 28.9 | 23.5 |

| STN+DensePN | 47.9 | 2.0 | 33.0 | 34.2 |

| SIM (Ours) | 32.4 | 1.5 | 22.3 | 35.4 |

-

•

All metrics computed are computed over the entire image

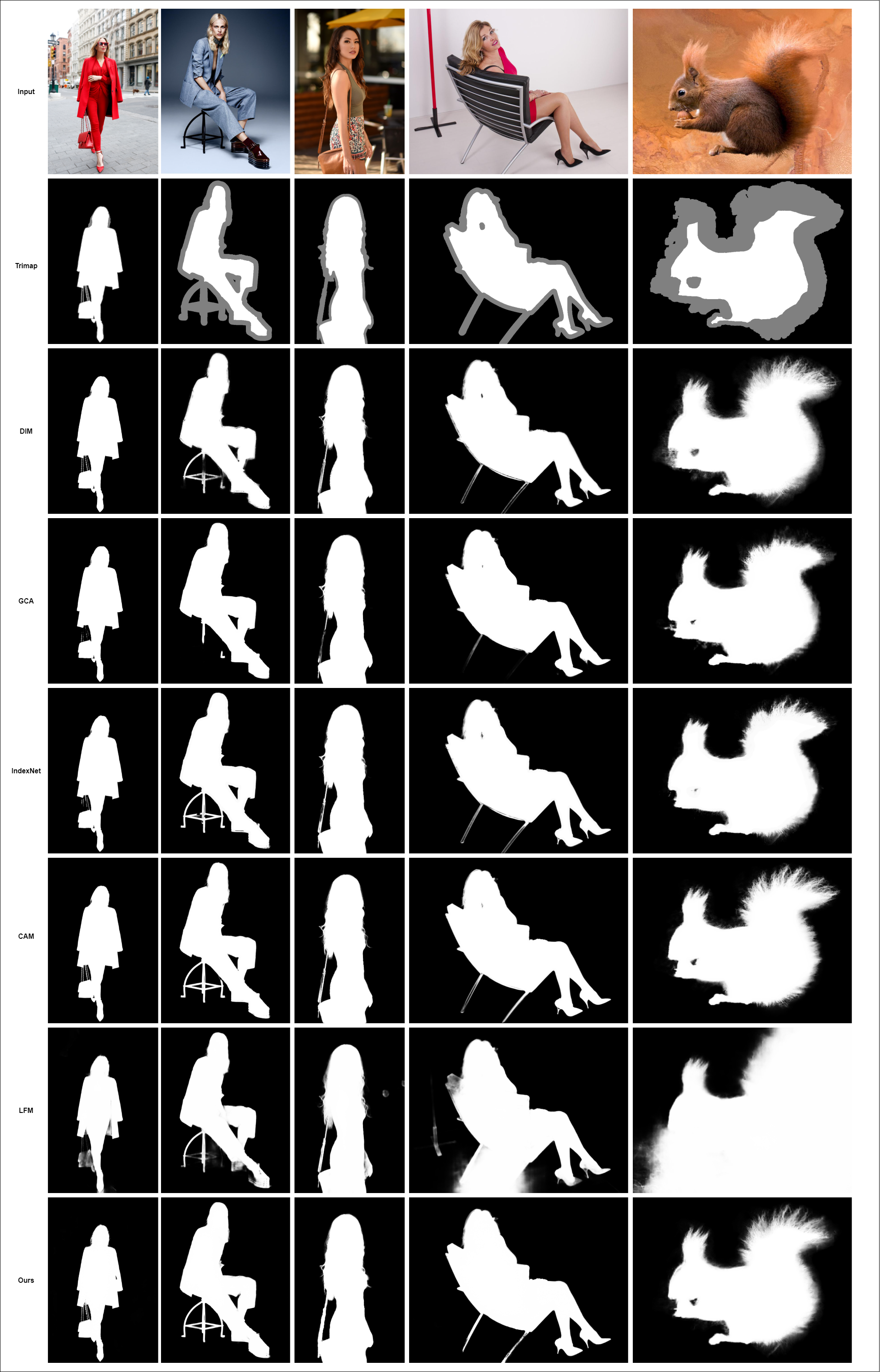

Figure 7 shows the results on some images on trimap based and automatic methods. We see that LFM is not able to accurately capture object semantics due to relying on its limited alpha dataset while ours is able to identify and capture accurate semantics. We compare favorably to trimap based matting models without the need for user generated trimap. Our STN is able to accurately capture diverse semantics and pass on its guidance to our matting network for low level refinement.

5 Conclusion

In this paper we propose an automatic, end to end model for salient image matting. The proposed model does not require any additional user inputs and produces high quality, alpha mattes for a variety of objects including humans, animals and more. Our testing shows demonstrates the robustness of our model on natural images. We achieve state-of-the-art results for automatic end to end matting and comparable results to other state of the art interactive methods which require externally provided trimaps along with the input image. This can be attributed to our two main sub networks — the STN which efficiently picks up the high level semantic details of the salient object in the image and the multi scale fusion matting network that learns the fine, low level features. We believe our framework will serve as a strong baseline for future automatic matting methods which are not restricted to just human or animal images but on a much wider range of objects.

References

- [1] N. Xu, B. Price, S. Cohen, and T. Huang, “Deep image matting,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2970–2979.

- [2] Y. Li and H. Lu, “Natural Image Matting via Guided Contextual Attention,” arXiv preprint arXiv:2001.04069, 2020.

- [3] H. Lu, Y. Dai, C. Shen, and S. Xu, “Indices matter: Learning to index for deep image matting,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 3266–3275.

- [4] Q. Hou and F. Liu, “Context-aware image matting for simultaneous foreground and alpha estimation,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 4130–4139.

- [5] Y. Zhang, L. Gong, L. Fan, P. Ren, Q. Huang, H. Bao, and W. Xu, “A late fusion cnn for digital matting,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7469–7478, 2019.

- [6] Q. Chen, T. Ge, Y. Xu, Z. Zhang, X. Yang, and K. Gai, “Semantic human matting,” Proceedings of the ACM International Conference on Multimedia, pp. 618–626, 2018.

- [7] Y. Qiao, Y. Liu, X. Yang, D. Zhou, M. Xu, Q. Zhang, and X. Wei, “Attention-Guided Hierarchical Structure Aggregation for Image Matting,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 13 673–13 682. [Online]. Available: 10.1109/CVPR42600.2020.01369

- [8] J. Liu, Y. Yao, W. Hou, M. Cui, X. Xie, C. Zhang, and X. sheng Hua, “Boosting Semantic Human Matting with Coarse Annotations,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 8563–8572.

- [9] Q. Chen, D. Li, and C.-K. Tang, “KNN matting,” IEEE transactions on pattern analysis and machine intelligence, vol. 35, no. 9, pp. 2175–2188, 2013.

- [10] O. Wang, J. Finger, Q. Yang, J. Davis, and R. Yang, “Automatic Natural Video Matting with Depth,” 15th Pacific Conference on Computer Graphics and Applications (PG’07, 2007.

- [11] D. Cho, S. Kim, Y.-W. Tai, and I. S. Kweon, “Automatic Trimap Generation and Consistent Matting for Light-Field Images,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 8, pp. 1504–1517, 2017. [Online]. Available: 10.1109/tpami.2016.2606397;https://dx.doi.org/10.1109/tpami.2016.2606397

- [12] T. Lu and S. Li, “Image matting with color and depth information,” Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), 2012.

- [13] M. Hsieh and Lee, “Automatic trimap generation for digital image matting,” in 2013 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference, 2013, pp. 1–5.

- [14] S. Singh and A. S. Jalal, “Automatic generation of trimap for image matting,” International Journal of Machine Intelligence and Sensory Signal Processing, vol. 1, no. 3, pp. 232–232, 2014. [Online]. Available: 10.1504/ijmissp.2014.066425;https://dx.doi.org/10.1504/ijmissp.2014.066425

- [15] V. Gupta and S. Raman, “Automatic trimap generation for image matting,” 2016 International Conference on Signal and Information Processing, 2016.

- [16] C. Henry and S.-W. Lee, “Automatic trimap generation and artifact reduction in alpha matte using unknown region detection,” Expert Systems with Applications, vol. 133, pp. 242–259, 2019. [Online]. Available: 10.1016/j.eswa.2019.05.019;https://dx.doi.org/10.1016/j.eswa.2019.05.019

- [17] X. Xiaoyongshen, Hongyungao, Chaozhou, and Jia, “Deep automatic portrait matting,” European Conference on Computer Vision, vol. 3, pp. 6–6, 2016.

- [18] A. Karacan, E. Erdem, and Erdem, “Image matting with kl-divergence based sparse sampling,” ICCV, 2015.

- [19] C. Rhemann and Rother, “A global sampling method for alpha matting,” CVPR, 2011.

- [20] Y. Y. Chuang, B. Curless, D. H. Salesin, and R. Szeliski, “A bayesian approach to digital matting,” CVPR, 2003.

- [21] X. Feng, X. Liang, and Z. Zhang, “A cluster sampling method for image matting via sparse coding,” ECCV, 2016.

- [22] E. Shahrian, D. Rajan, B. Price, and S. Cohen, “Improving image matting using comprehensive sampling sets,” CVPR, 2013.

- [23] J. Wang and M. F. Cohen, “Optimized color sampling for robust matting,” CVPR, 2007.

- [24] P. Lee and Y. Wu, “Nonlocal matting,” CVPR, 2011.

- [25] Y. Aksoy, M. T. O. Aydin, and Pollefeys, “Designing effective inter-pixel information flow for natural image matting,” CVPR, 2017.

- [26] L. Grady, T. Schiwietz, A. Shmuel, and R. Westermann, “Random walks for interactive alpha-matting,” Proceedings of VIIP, 2005.

- [27] A. Levin, D. Lischinski, and Y. Weiss, “A closed-form solution to natural image matting,” IEEE transactions on pattern analysis and machine intelligence, vol. 30, no. 2, pp. 228–242, 2007.

- [28] S. Sengupta, V. Jayaram, B. Curless, S. M. Seitz, and I. Kemelmacher-Shlizerman, “Background Matting: The World is Your Green Screen,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 2291–2300.

- [29] S. Lutz, K. Amplianitis, and A. Smolic, “AlphaGAN: Generative adversarial networks for natural image matting,” CoRR, vol. abs/1807.10088, 2018.

- [30] Y. Aksoy, T.-H. Oh, S. Paris, M. Pollefeys, and W. Matusik, “Semantic soft segmentation,” ACM Transactions on Graphics, vol. 37, no. 4, pp. 1–13, 2018. [Online]. Available: 10.1145/3197517.3201275;https://dx.doi.org/10.1145/3197517.3201275

- [31] X. Shen, X. Tao, H. Gao, C. Zhou, and J.-A. Jia, “Deep automatic portrait matting,” European Con- ference on Computer Vision, vol. 2, pp. 3–3, 2016.

- [32] B. Zhu, Y. Chen, J. Wang, S. Liu, B. Zhang, and M. Tang, “Fast deep matting for portrait anima- tion on mobile phone,” Proceedings of the 25th ACM inter- national conference on Multimedia, pp. 297–305, 2017.

- [33] J. Liu, Y. Yao, W. Hou, M. Cui, X. Xie, C. Zhang, and X.-S. Hua, “Boosting Semantic Human Matting With Coarse Annotations,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), June 2020.

- [34] G. Hu and J. J. Clark, “Instance Segmentation based Semantic Matting for Compositing Applications,” CoRR, vol. abs/1904.05457, 2019.

- [35] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional Networks for Biomedical Image Segmentation,” CoRR, vol. abs/1505.04597, 2015.

- [36] T.-Y. Lin, P. Dollár, R. B. Girshick, K. He, B. Hariharan, and S. J. Belongie, “Feature Pyramid Networks for Object Detection,” CoRR, vol. abs/1612.03144, 2016.

- [37] S. Liu, L. Qi, H. Qin, J. Shi, and J. Jia, “Path Aggregation Network for Instance Segmentation,” CoRR, vol. abs/1803.01534, 2018.

- [38] Q. Zhao, T. Sheng, Y. Wang, Z. Tang, Y. Chen, L. Cai, and H. Ling, “M2Det: A Single-Shot Object Detector based on Multi-Level Feature Pyramid Network,” CoRR, vol. abs/1811.04533, 2018.

- [39] M. Tan, R. Pang, and Q. V. Le, “EfficientDet: Scalable and Efficient Object Detection,” CoRR, vol. abs/1911.09070, 2019.

- [40] J. Wang, K. Sun, T. Cheng, B. Jiang, C. Deng, Y. Zhao, D. Liu, Y. Mu, M. Tan, X. Wang, W. Liu, and B. Xiao, “Deep High-Resolution Representation Learning for Visual Recognition,” CoRR, vol. abs/1908.07919, 2019.

- [41] L. Liu, W. Ouyang, X. Wang, P. W. Fieguth, J. Chen, X. Liu, and M. Pietikäinen, “Deep Learning for Generic Object Detection: A Survey,” CoRR, vol. abs/1809.02165, 2018.

- [42] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation,” CoRR, vol. abs/1802.02611, 2018.

- [43] P. Li, Y. Xu, Y. Wei, and Y. Yang, “Self-Correction for Human Parsing,” CoRR, vol. abs/1910.09777, 2019.

- [44] X. Qin, Z. Zhang, C. Huang, M. Dehghan, O. R. Zaiane, and M. Jagersand, “U2-Net: Going deeper with nested U-structure for salient object detection,” Pattern Recognition, vol. 106, p. 107404, 2020.

- [45] G. Huang, Z. Liu, and K. Q. Weinberger, “Densely Connected Convolutional Networks,” CoRR, vol. abs/1608.06993, 2016.

- [46] K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition,” CoRR, vol. abs/1512.03385, 2015.

- [47] S. Niklaus and F. Liu, “Context-aware synthesis for video frame interpolation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 1701–1710.

- [48] L. Wang, H. Lu, Y. Wang, M. Feng, D. Wang, B. Yin, and X. Ruan, “Learning to Detect Salient Objects with Image-Level Supervision,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 3796–3805. [Online]. Available: 10.1109/CVPR.2017.404

- [49] T.-Y. Lin, M. Maire, S. J. Belongie, L. D. Bourdev, R. B. Girshick, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft COCO: Common Objects in Context,” CoRR, vol. abs/1405.0312, 2014.

- [50] B. Zhou, A. Lapedriza, A. Khosla, A. Oliva, and A. Torralba, “Places: A 10 million Image Database for Scene Recognition,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2017.

- [51] C. Rhemann, C. Rother, J. Wang, M. Gelautz, P. Kohli, and P. Rott, “A perceptually motivated online benchmark for image matting,” in 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, pp. 1826–1833. [Online]. Available: 10.1109/CVPR.2009.5206503