Faculty of Engineering and Technology,

SRM Institute of Science and Technology

Kattankulathur, Tamil Nadu, 603203, India

Email: [email protected], [email protected]

S3Simulator: A benchmarking Side Scan Sonar Simulator dataset for Underwater Image Analysis


Abstract

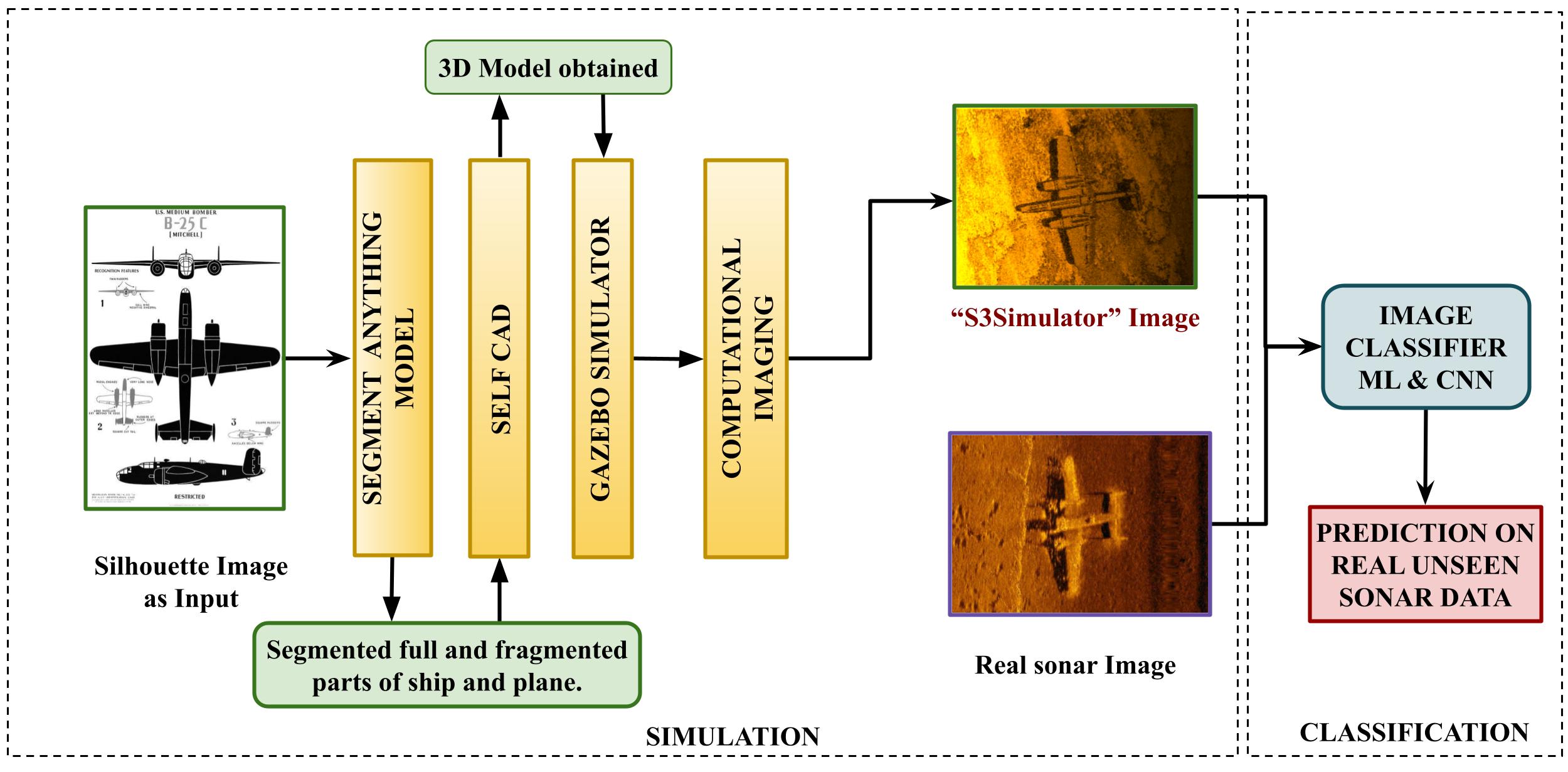

Acoustic sonar imaging systems are widely used for underwater surveillance in both civilian and military sectors. However, acquiring high-quality sonar datasets for training Artificial Intelligence (AI) models faces challenges such as limited data availability, financial constraints, and data confidentiality. To overcome these challenges, we propose a novel benchmark dataset of Simulated Side-Scan Sonar images, which we term the ‘S3Simulator dataset’. Our dataset creation uses advanced simulation techniques to accurately replicate underwater conditions and produce diverse synthetic sonar imagery. In particular, the state-of-the-art AI segmentation tool Segment Anything Model (SAM) is leveraged to isolate and segment object images, such as ships and planes, from real scenes. Further, the Computer-Aided Design tool SelfCAD and the simulation software Gazebo are employed to create the 3D models and to visualize them within realistic environments, respectively. A range of computational imaging techniques is then applied to improve the quality of the data, enabling AI models to analyze the sonar images. Extensive analyses are carried out on S3Simulator as well as on real sonar datasets to validate the performance of AI models for underwater object classification. Our experimental results highlight that the S3Simulator dataset can serve as a promising benchmark dataset for research on underwater image analysis. The project is available at https://github.com/bashakamal/S3Simulator.

Keywords: Sonar imagery, Side-scan sonar, Simulated dataset, Segmentation, SelfCAD, Gazebo, Underwater object classification

1 Introduction

SONAR, which stands for Sound Navigation and Ranging, plays a crucial role in various underwater applications [20]. Because sonar systems use sound waves, they overcome the limitations of optical devices in dark and turbid water. Sonar has found applications in various civilian and defence sectors. The detection and classification of underwater objects in sonar images remain among the most challenging tasks in marine applications such as underwater rescue operations, seabed mapping, and coastal management [20].

Traditionally, sonar imagery is inspected manually by human operators, a task that is time-consuming and requires domain expertise [17]. To automate this process, the integration of Artificial Intelligence (AI) has emerged as a promising solution. However, publicly accessible, high-quality sonar datasets for training AI models are scarce. This paucity of sonar datasets is mainly due to high acquisition costs, the domain expertise required for labeling, limited resources, and security, data-sensitivity, and confidentiality constraints. Furthermore, the quality of the available sonar datasets is often suboptimal because of the complexity of the underwater environment, which introduces various kinds of distortion, underwater noise, speckle noise, small objects, and poor visibility [26].

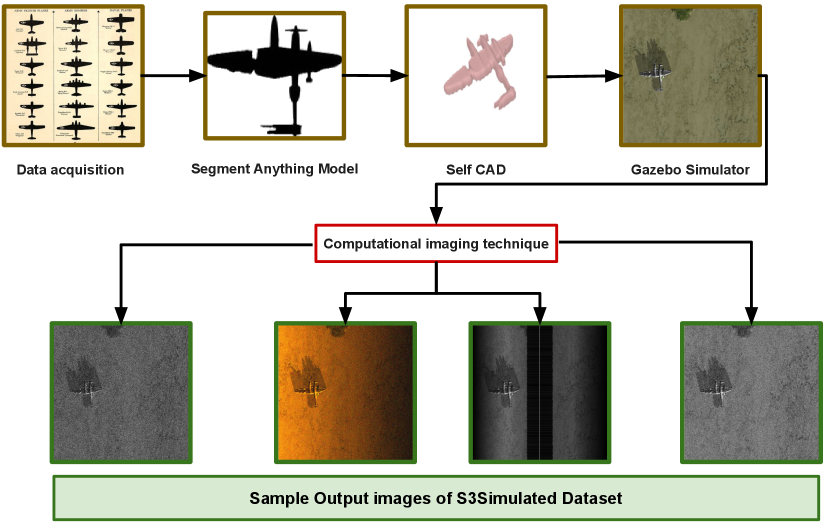

To address the aforementioned challenges, i.e., the scarcity of publicly available sonar data and the low quality of sonar images, we propose a synthetic approach for generating a side-scan sonar simulator dataset named the "S3Simulator" dataset. Currently, the S3Simulator dataset consists of 600 images of ships and 600 images of planes, which have been meticulously segmented and simulated to replicate real-world sonar conditions. A novel framework that combines an advanced AI segmentation model, the Segment Anything Model (SAM) [11], with the SelfCAD computer-aided design tool and the 3D dynamic simulator Gazebo is leveraged to create the S3Simulator dataset. The dataset is further refined with computational imaging techniques to provide heterogeneous images that replicate characteristics of real-world sonar imagery such as highlights, shadows, and seafloor reverberation.

The proposed S3Simulator dataset is developed in five stages. First, silhouette images of military and civilian planes and ships are acquired in their raw format. In the second stage, the state-of-the-art segmentation model SAM is used to segment the object of interest (e.g., shipwrecks, plane wreckage, and their fragments) from the rest of the image based on the provided prompts. In the third stage, we employ the SelfCAD tool to reconstruct the 2D segmented images into 3D models, adjusting model properties such as shape, size, and texture. In the fourth stage, these 3D objects are deployed in the Gazebo simulator, which renders the SelfCAD models to generate simulated replicas of the real-world objects. This environment simulates complex sonar characteristics such as noise, shadows, object complexity, and diverse seabed terrain. Finally, we employ a range of computational imaging techniques, including pixel value clipping, linear gradient integration, and nadir zone mask generation, to enhance data quality and replicate the characteristics of sonar images.

Extensive analysis is carried out on real and S3Simulator datasets to validate the performance of AI models for underwater image analysis. In particular, we investigate the application of AI models to sonar image classification. To this end, benchmark classical Machine Learning (ML) approaches, namely Support Vector Machine (SVM), Random Forest, and K-Nearest Neighbors (KNN), and Deep Learning (DL) models, including VGG16, VGG19, MobileNetV2, InceptionResNetV2, InceptionV3, ResNet50, and DenseNet121, are trained using augmentation and transfer learning techniques and tested on unseen real data. The key contributions of the paper are as follows:

- Proposal of a novel ‘S3Simulator dataset’ consisting of simulated side-scan sonar imagery to tackle the scarcity of publicly available sonar data and low-quality sonar images.
- Integration of the Gazebo simulator and SelfCAD 3D modeling with the advanced AI segmentation model SAM, augmented by computational imaging refinement.
- Incorporation of a realistic environment comprising images with a nadir zone, shadows, and object rendering, alongside diverse seabed compositions.
- Extensive evaluation of AI models through classical ML and DL methodologies for sonar image classification in both real-world and simulated scenarios.

The rest of the paper is organized as follows. Related works are described in Section 2. The overall architecture and methodology of the proposed multi-stage S3Simulator approach are presented in Section 3. Sections 4 and 5 discuss sonar image classification on the S3Simulator dataset and the experimental setup, respectively. Section 6 discusses the experimental results in detail. Finally, Section 7 concludes the paper and outlines future work.

2 Related Works

2.1 Sonar Image Dataset

In marine exploration and underwater object detection, researchers have made notable progress in creating sonar image datasets. In one of the earliest studies of side-scan sonar datasets, Huo et al. [9] developed Seabed Objects-KLSG, a side-scan sonar dataset obtained from real sonar equipment, featuring 385 wrecks, 36 drowning victims, 62 airplanes, 129 mines, and 578 seafloor images. The AI4Shipwrecks dataset of Sethuraman et al. [18] comprises 286 high-resolution side-scan sonar images obtained from autonomous underwater vehicles (AUVs) and labeled in consultation with specialist marine archaeologists. Another dataset, the Sonar Common Target Detection Dataset (SCTD) [27], consists of 57 images of planes, 266 images of shipwrecks, and 34 images of drowning victims, with varying image dimensions.

Because of these real-world dataset limitations, synthetic sonar images play a significant role in advancing underwater exploration research. Shin et al. [19] proposed a method that uses Unreal Engine (UE) to generate synthetic sonar images with various seabed conditions and objects such as cubes, cylinders, and spheres. Sung et al. [21] synthesized realistic sonar images using ray tracing and a generative adversarial network (GAN). Liu et al. [15] proposed CycleGAN-based generation of realistic image datasets for forward-looking sonar. Yang et al. [25] proposed a side-scan sonar image synthesis method based on a diffusion model. Xi et al. [23] trained a sonar target detector on optical images augmented with simulated stain-like noise and shadow enhancement, under zero-shot learning. Lee et al. [13] simulated realistic sonar images of divers by applying the StyleBankNet image synthesis scheme to images captured by an underwater simulator.

2.2 Sonar Image Classification

With the extensive development of sonar imaging technology, underwater image classification has emerged as a crucial area of ocean development. Li et al. [14] used a Support Vector Machine (SVM) classifier to accurately recognize small, dim diver targets, selecting five main diver characteristics (average scale, velocity, shape, direction, and angle) and achieving an accuracy of 94.5%. After feature extraction, Karine et al. [10] applied the k-nearest neighbor (KNN) and SVM algorithms to classify seafloor images recorded by side-scan sonar. Zhu et al. [28] combined a kernel-based extreme learning machine (KELM) with principal component analysis (PCA) for side-scan sonar image classification. Du et al. [7] compared the prediction accuracy of different CNN models and found that transfer learning brought only small improvements for AlexNet and VGG-16 but substantial improvements for GoogLeNet and ResNet101, with GoogLeNet reaching the highest prediction accuracy of 94.27%. Chungath et al. [5] fine-tuned pre-trained deep neural networks on limited data and found ResNet-34 and DenseNet-121 to be the best-performing models for underwater sonar image classification.

In contrast to the aforementioned synthetic sonar images, which are expensive and time-consuming to produce because they are recreated from real data, our S3Simulator dataset offers an economical and time-efficient solution built on simulator technology and advanced AI techniques. To the best of our knowledge, the S3Simulator dataset is the first publicly accessible and extensive compilation of side-scan sonar images of ship and plane objects.

3 S3Simulator Dataset

This section explains the workflow for generating the S3Simulator dataset. The overall architecture of the proposed S3Simulator pipeline is depicted in Fig. 1. It consists of the following modules: the Segment Anything Model (SAM), SelfCAD, Gazebo, computational imaging, the simulated output image, the real sonar image, and classification using Machine Learning (ML) and Deep Learning (DL) techniques. The modules are detailed below.

3.1 Data acquisition

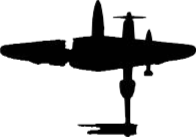

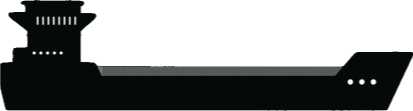

To replicate the sonar imagery of realistic objects such as ships and planes, silhouette images are collected from two sources. The aircraft silhouettes come from the Royal Observer Corps Club’s third-grade exam; the collection contains a total of 62 unique aircraft, each depicted as a black silhouette in side and plan views [4]. The U.S. ship silhouettes show the relative sizes of the various classes of aircraft carriers, battleships, cruisers, and destroyers [3]. (The images are provided in the supplementary material for reference.)

For the AI investigation and classification, the Seabed Objects-KLSG dataset mentioned in Section 2.1 is utilized. (Sample images of the Seabed Objects-KLSG dataset are given in the supplementary material for reference.) This dataset serves as the basis for testing the S3Simulator dataset against real sonar data.

3.2 Segmentation with Segment Anything Model (SAM)

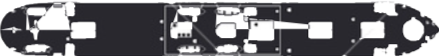

The Segment Anything Model (SAM) [11] is a state-of-the-art model for promptable image segmentation. SAM is designed to identify and isolate an object of interest within an image in response to user-provided prompts. A prompt can be a single point or a set of points, a bounding box, a rough mask, or text. Even when the prompt is ambiguous, the model still generates a valid segmentation mask, as shown in Fig. 2. It also performs well in the zero-shot regime, i.e., it can segment types of objects it has never encountered before without further training. SAM consists of a Vision Transformer (ViT) image encoder, a flexible prompt encoder, and a fast Transformer-based mask decoder. The image encoder is run once per image, before the model is prompted. Dense prompts (masks) are embedded with convolutions and summed element-wise with the image embedding. The mask decoder then efficiently maps the image embedding, the prompt embeddings, and an output token to a mask.

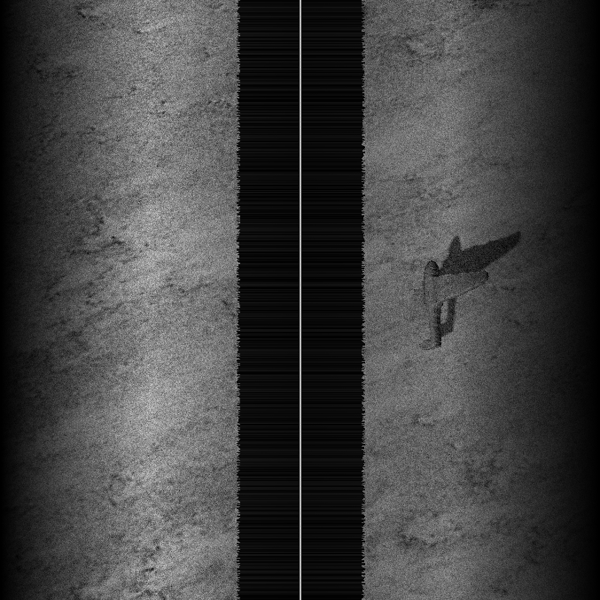

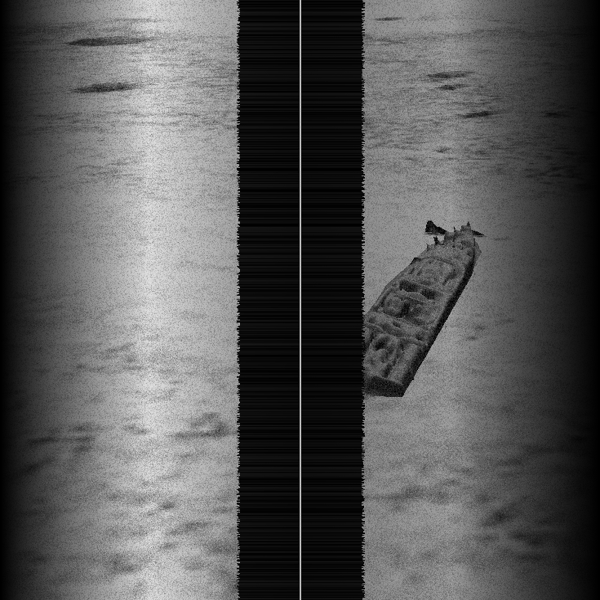

In this work, SAM is used to segment and mask the plane and ship objects in the raw silhouette images, as shown in Fig. 2.
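A minimal sketch of point-prompted segmentation of a silhouette image follows, assuming the official `segment_anything` Python package and a single foreground click on the object. The paper does not state which prompt type or checkpoint was used, and the helper names `load_sam_predictor`, `segment_with_point` and `cut_out` are hypothetical. With `multimask_output=True`, SAM returns several candidate masks for an ambiguous prompt, and the sketch keeps the one with the highest predicted score.

```python
import numpy as np


def load_sam_predictor(checkpoint_path: str, model_type: str = "vit_h"):
    """Build a SamPredictor from the official `segment_anything` package.

    The import is deferred so the helpers below can also be used with any
    object that exposes the same `set_image` / `predict` methods.
    """
    from segment_anything import SamPredictor, sam_model_registry

    sam = sam_model_registry[model_type](checkpoint=checkpoint_path)
    return SamPredictor(sam)


def segment_with_point(predictor, image_rgb: np.ndarray, point_xy) -> np.ndarray:
    """Segment the object under one foreground click; return a boolean H x W mask."""
    predictor.set_image(image_rgb)  # the image encoder runs once per image
    masks, scores, _ = predictor.predict(
        point_coords=np.array([point_xy], dtype=np.float32),  # (x, y) pixel coordinates
        point_labels=np.array([1]),  # 1 = foreground point, 0 = background point
        multimask_output=True,  # ambiguous prompt -> several candidate masks
    )
    return masks[int(np.argmax(scores))].astype(bool)  # keep the highest-scoring mask


def cut_out(image_rgb: np.ndarray, mask: np.ndarray, background: int = 0) -> np.ndarray:
    """Copy the masked object onto a uniform background."""
    out = np.full_like(image_rgb, background)
    out[mask] = image_rgb[mask]
    return out
```

Typical use would be `predictor = load_sam_predictor("sam_vit_h_4b8939.pth")` followed by `segment_with_point(predictor, image, (x, y))`; the checkpoint path and click coordinates are placeholders. Passing the predictor in keeps the mask-selection logic independent of how the model is loaded.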

3.3 3D Model Generation in SelfCAD

SelfCAD [2] is a computer-aided design (CAD) application that enables users to modify pre-existing designs as well as to generate 3D models from 2D images. Its tools allow users to create, sculpt, and slice objects with little effort. In our work, SelfCAD is employed to generate 3D models from the segmented 2D images shown in Fig. 2. We refined the 3D models by applying sculpting techniques, improving resolution, modifying tolerances, and manipulating size and shape. These modifications optimize the final 3D models so that they resemble the real-world objects, as shown in Fig. 3.
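SelfCAD is an interactive tool and its image-to-3D internals are not described here, so the following is only a naive stand-in that illustrates the idea of lifting a 2D segmentation mask into a 3D shape: every foreground pixel is extruded into a box of fixed height, and the result is written as an ASCII STL mesh, a format Gazebo can reference from a model's `<mesh>` element. The function and parameter names are hypothetical, and the mesh keeps the internal faces between neighbouring pixels, so it is far cruder than a model refined by sculpting and resolution adjustments.

```python
import numpy as np

# The 12 triangles of a box, as indices into its 8 corners, wound so that
# the right-hand normal of each triangle points out of the box.
_BOX_FACES = [
    (0, 2, 1), (0, 3, 2),  # bottom (-z)
    (4, 5, 6), (4, 6, 7),  # top (+z)
    (0, 1, 5), (0, 5, 4),  # front (-y)
    (1, 2, 6), (1, 6, 5),  # right (+x)
    (2, 3, 7), (2, 7, 6),  # back (+y)
    (3, 0, 4), (3, 4, 7),  # left (-x)
]


def _box_corners(x0, y0, x1, y1, z1):
    return np.array([
        [x0, y0, 0.0], [x1, y0, 0.0], [x1, y1, 0.0], [x0, y1, 0.0],  # z = 0
        [x0, y0, z1], [x1, y0, z1], [x1, y1, z1], [x0, y1, z1],      # z = height
    ])


def extrude_mask_to_stl(mask, height=1.0, pixel_size=1.0, name="object"):
    """Extrude each True pixel of a 2D mask into a box and return ASCII STL text."""
    lines = [f"solid {name}"]
    n_rows = mask.shape[0]
    for r, c in zip(*np.nonzero(mask)):
        # Flip the row index so the model is not mirrored (image rows grow downwards).
        x0, y0 = c * pixel_size, (n_rows - 1 - r) * pixel_size
        corners = _box_corners(x0, y0, x0 + pixel_size, y0 + pixel_size, height)
        for face in _BOX_FACES:
            tri = corners[list(face)]
            normal = np.cross(tri[1] - tri[0], tri[2] - tri[0])
            normal /= np.linalg.norm(normal)  # STL facets carry a unit normal
            lines.append("  facet normal {:.6e} {:.6e} {:.6e}".format(*normal))
            lines.append("    outer loop")
            for vertex in tri:
                lines.append("      vertex {:.6e} {:.6e} {:.6e}".format(*vertex))
            lines.append("    endloop")
            lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"
```

For example, `extrude_mask_to_stl(mask, height=5.0, pixel_size=0.1)` turns a SAM mask into a 5-unit-thick slab whose outline follows the silhouette.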

3.4 Deployment to the Gazebo Simulator

Gazebo [1] is an open-source robotics simulator that simplifies the development of high-performance applications. Its primary users are robot designers, developers, and educators. In our work, Gazebo is employed to simulate sonar images by rendering 3D objects and their shadows on various seabeds, as shown in Fig. 5. The generated 3D model is integrated into a Gazebo world. Objects are rendered by adjusting their poses, i.e., their positions along the x, y, and z axes and their roll, pitch, and yaw rotations. Additionally, the visual texture of the 3D model can be fine-tuned with RGB values from the link inspector available in Gazebo. To make the simulated images resemble real sonar images more closely, we add the sensor noise model provided by Gazebo, which adds a Gaussian-sampled disturbance independently to each pixel.
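Gazebo applies this noise inside the simulated camera sensor, configured through a `<noise>` element with `<type>gaussian</type>`, `<mean>` and `<stddev>`. As a rough offline equivalent, the sketch below adds an independent Gaussian sample to every pixel of an already-rendered image, assuming intensities normalised to [0, 1] and clamped afterwards. The function name and the default standard deviation of 0.007 are illustrative assumptions, not values reported in the paper.

```python
import numpy as np


def add_gaussian_pixel_noise(image: np.ndarray, mean: float = 0.0, stddev: float = 0.007,
                             rng=None) -> np.ndarray:
    """Add i.i.d. Gaussian noise to every pixel (and channel) of a uint8 image.

    Works in the normalised [0, 1] range and clamps the result, in the spirit of
    the additive per-pixel noise model of Gazebo camera sensors.
    """
    rng = np.random.default_rng() if rng is None else rng
    x = image.astype(np.float64) / 255.0
    noisy = x + rng.normal(mean, stddev, size=x.shape)  # independent sample per pixel
    return (np.clip(noisy, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

Passing a seeded `np.random.default_rng(seed)` makes the noise reproducible across dataset builds.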

3.5 Computational Imaging

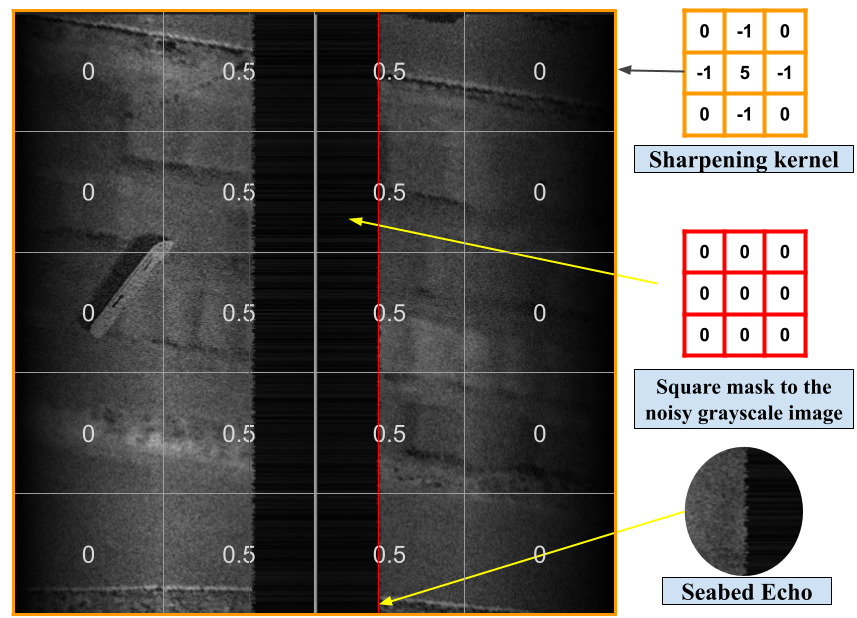

This process applies a series of computational imaging techniques to convert data from the Gazebo simulator into a visual representation that closely resembles sonar imagery. The key techniques are pixel value clipping, linear gradient integration, and nadir zone generation.

3.5.1 Image clipping and integration of Linear Gradient

In a sonar image, dark colours represent deeper areas and bright colours represent shallower areas. To mimic these real-world conditions, a linear gradient is applied to the simulated sonar images by splitting each image into two halves and applying a gradient to both sides, as shown in Fig. 6. The gradient for the image function is given by:

| (1) |

Gradient for quarter-based intensity mapping in a simulated sonar image:

$$G(x) = \begin{cases} 0, & 0 \le x < W/4 \\ 0.5, & W/4 \le x < W/2 \\ 0.9, & W/2 \le x < 3W/4 \\ 0, & 3W/4 \le x \le W \end{cases} \qquad (2)$$

In this representation:

- $W$ is the width of the image.
- $G(x)$ is the gradient intensity at horizontal position $x$ in the image.
- The gradient takes different values depending on $x$: it is 0 in the first quarter of the width, 0.5 in the second, 0.9 in the third, and 0 in the last.
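The exact forms of Eqs. (1) and (2), and how the gradient is combined with the image, are not fully recoverable from the text, so the following is a sketch under stated assumptions: each profile is treated as a per-column weight that multiplies the image before pixel values are clipped. `quarter_profile` reproduces the quarter-based values listed above, while `symmetric_linear_profile` is a hypothetical reading of splitting the image at its centre and applying a gradient on both sides, ramping linearly from the centre column to the outer edges. All names and default values are illustrative.

```python
import numpy as np


def quarter_profile(width: int) -> np.ndarray:
    """Per-column values as listed in the text: 0, 0.5, 0.9, 0 over the four quarters."""
    x = np.arange(width)
    g = np.zeros(width)
    g[(x >= width / 4) & (x < width / 2)] = 0.5
    g[(x >= width / 2) & (x < 3 * width / 4)] = 0.9
    return g


def symmetric_linear_profile(width: int, near: float = 1.0, far: float = 0.3) -> np.ndarray:
    """Split the swath at the centre column and ramp linearly on each side:
    `near` at the centre (nadir) down to `far` at both outer edges."""
    if width < 2:
        raise ValueError("width must be at least 2")
    x = np.arange(width)
    centre = (width - 1) / 2
    dist = np.abs(x - centre) / centre  # 0 at the centre, 1 at each edge
    return near + (far - near) * dist


def apply_profile(image: np.ndarray, profile: np.ndarray, lo: float = 0, hi: float = 255) -> np.ndarray:
    """Scale every column by its profile weight, then clip pixel values to [lo, hi]."""
    shape = (1, -1) + (1,) * (image.ndim - 2)  # broadcast over rows (and channels)
    out = image.astype(np.float64) * profile.reshape(shape)
    return np.clip(out, lo, hi).astype(np.uint8)
```

For instance, `apply_profile(img, symmetric_linear_profile(img.shape[1]))` darkens the far range on both sides while leaving the centre column unchanged; the clipping step keeps weights above 1 from overflowing the 8-bit range.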

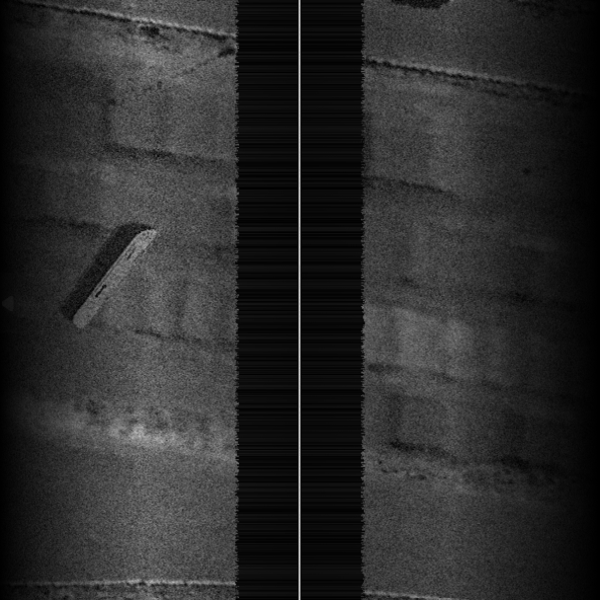

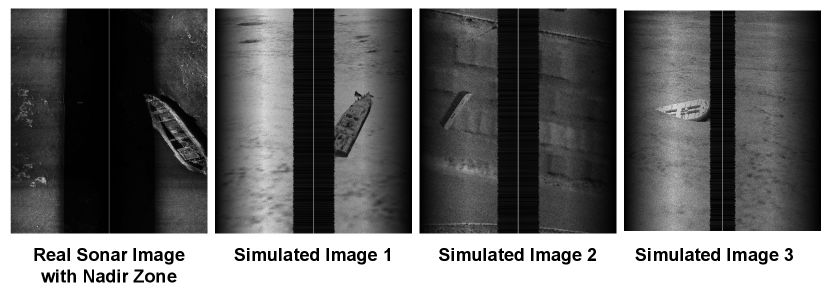

3.5.2 Generation of nadir zone

The nadir zone in a sonar image lies directly beneath the sonar sensor and appears as a dark band with a white line running through it. To mimic this, we combine clipping and a linear gradient to mask the nadir zone and the thin white line inside it.

| (3) |

where:

- the threshold is a chosen value, and
- the resulting mask has a value of 1 where the pixel is part of the nadir zone and 0 where it is not.
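One possible way to combine a linear gradient with a threshold, as described above, is sketched below under explicit assumptions: a V-shaped gradient that is 0 at the centre column and 1 at both edges is thresholded to obtain the mask (1 inside the nadir zone, 0 outside), the masked columns are set to a dark value, and a thin bright line is drawn along the centre. The threshold, intensity values and function names are hypothetical, and Eq. (3) may threshold a different quantity.

```python
import numpy as np


def nadir_mask(width: int, threshold: float) -> np.ndarray:
    """1 for columns inside the nadir zone, 0 elsewhere.

    A linear gradient rising from 0 at the centre column to 1 at both edges is
    thresholded: columns whose gradient value is below `threshold` form the zone.
    """
    if width < 2:
        raise ValueError("width must be at least 2")
    x = np.arange(width)
    centre = (width - 1) / 2
    ramp = np.abs(x - centre) / centre
    return (ramp < threshold).astype(np.uint8)


def add_nadir_zone(image: np.ndarray, threshold: float = 0.08, dark: int = 10,
                   line: int = 255, line_px: int = 1) -> np.ndarray:
    """Darken the nadir columns and draw a thin bright line down the middle."""
    out = image.copy()
    cols = nadir_mask(image.shape[1], threshold).astype(bool)
    out[:, cols] = dark
    start = image.shape[1] // 2 - line_px // 2
    out[:, start:start + line_px] = line
    return out
```

The threshold sets the width of the dark band as a fraction of the half-swath, so the same value scales with image width.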

3.6 Image Augmentation

Image augmentation techniques [24] are used to improve the robustness and classification performance of the models trained on both the synthetic sonar simulation dataset and the real sonar dataset. The augmentation pipeline consists of horizontal flips and random crops. These transformations introduce variation into the training images, helping the models learn robust features and mitigating overfitting.
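A minimal NumPy sketch of the two augmentations named above (a random horizontal flip followed by a random crop of fixed size) is given below. The paper trains with TensorFlow but does not list the exact calls; `tf.image.random_flip_left_right` and `tf.image.random_crop` are the closest built-in counterparts. The function name, the flip probability of 0.5 and the crop size are assumptions.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, crop_size, flip_prob: float = 0.5) -> np.ndarray:
    """Randomly flip an image left-right, then take a random crop of size (height, width)."""
    h, w = image.shape[:2]
    ch, cw = crop_size
    if ch > h or cw > w:
        raise ValueError("crop is larger than the image")
    # For side-scan images a left-right flip swaps the port and starboard sides.
    out = image[:, ::-1] if rng.random() < flip_prob else image
    top = rng.integers(0, h - ch + 1)  # upper bound is exclusive
    left = rng.integers(0, w - cw + 1)
    return out[top:top + ch, left:left + cw].copy()
```

Because a left-right flip keeps each shadow on the far side of its object relative to the nadir, the augmented image stays geometrically plausible.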

4 Sonar image classification on S3Simulator dataset

To showcase the effectiveness of the proposed S3Simulator, the developed dataset is used to benchmark computer vision applications such as image classification. Image classification is a fundamental computer vision task that involves assigning an image to one or more predefined classes [12]. This study explores two primary families of image classification techniques: classical Machine Learning (ML) approaches, such as k-nearest neighbors (KNN), Random Forest, and Support Vector Machines (SVM), which are suitable for smaller datasets; and deep learning (DL) models, such as Convolutional Neural Networks (CNNs), which automatically learn intricate patterns from large datasets and typically provide better results.

The k-nearest neighbors (KNN) [8] algorithm is a simple approach that classifies a new sample by computing its distance to the training samples and assigning the majority label among its k nearest neighbors. Random Forest [6] is an ensemble learning technique that combines the predictions of multiple decision trees, each trained on a random subset of the data. SVM [14] uses a hyperplane in the feature space to separate the classes. Formally, the SVM decision function can be written as

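$$\hat{y}(\mathbf{x}) = \operatorname{sign}\left(\sum_{i=1}^{N} \alpha_i\, y_i\, K(\mathbf{x}_i, \mathbf{x}) + b\right) \qquad (4)$$

where $\hat{y}(\mathbf{x})$ is the predicted label for the input $\mathbf{x}$, $\alpha_i$ are the Lagrange multipliers obtained from the SVM optimization process (only the support vectors have $\alpha_i > 0$), $\mathbf{x}_i$ is the feature vector of the $i$-th of the $N$ training samples, $y_i \in \{-1, +1\}$ is its class label, $K(\mathbf{x}_i, \mathbf{x})$ is the kernel function that measures the similarity between the input vector $\mathbf{x}$ and the support vectors $\mathbf{x}_i$, and $b$ is the bias that determines the offset of the decision boundary.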
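Eq. (4) can be evaluated directly once the support vectors, the multipliers α_i, the labels y_i and the bias b are known. The sketch below assumes an RBF kernel K(x_i, x) = exp(-γ‖x_i - x‖²), since the paper does not name the kernel, and maps a score of exactly 0 to the positive class; the function names are illustrative.

```python
import numpy as np


def rbf_kernel(a: np.ndarray, b: np.ndarray, gamma: float) -> np.ndarray:
    """K[i, j] = exp(-gamma * ||a_i - b_j||^2) for every row pair of a and b."""
    sq_dist = (np.sum(a ** 2, axis=1)[:, None] + np.sum(b ** 2, axis=1)[None, :]
               - 2.0 * a @ b.T)
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))  # clamp tiny negative round-off


def svm_predict(x, support_vectors, alphas, labels, bias, gamma=1.0):
    """Eq. (4): sign(sum_i alpha_i * y_i * K(x_i, x) + b) for each row of x."""
    k = rbf_kernel(support_vectors, np.atleast_2d(x), gamma)  # shape (n_sv, n_inputs)
    scores = (alphas * labels) @ k + bias
    return np.where(scores >= 0, 1, -1)  # a score of exactly 0 is assigned to +1
```

If the model is trained with scikit-learn's `SVC`, its fitted attributes `dual_coef_` (the products α_i y_i), `support_vectors_` and `intercept_` supply the same quantities.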

Deep Learning (DL) is widely used in pattern recognition and is more effective than traditional machine learning approaches for image classification [16]. In particular, Convolutional Neural Networks (CNNs) are used to classify images. In our study, we leverage transfer learning [22], in which knowledge learned by one model is transferred to another, to train deep neural networks with comparatively little data. Mathematically, the classification output of the network can be represented as follows.

$$\hat{y} = \sigma(\mathbf{W}\mathbf{h} + \mathbf{b}) \qquad (5)$$

where $\hat{y}$ is the predicted label, $\sigma$ is the activation function, $\mathbf{W}$ denotes the weights of the newly added fully-connected layer for binary classification, $\mathbf{h}$ is the output of the pre-trained model (usually the last layer before the final classification layer of the backbone), and $\mathbf{b}$ denotes the biases of the newly added fully-connected layer.
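For a single-output binary head, Eq. (5) reduces to a dot product followed by a sigmoid. The sketch below assumes that the features h come from the frozen backbone (after any added dense layers), that W is a weight vector with a scalar bias b, and that a probability of at least 0.5 maps to class 1; which of "ship" or "plane" is class 1 is a hypothetical choice, as are the function names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def head_predict(h: np.ndarray, W: np.ndarray, b: float, threshold: float = 0.5):
    """Eq. (5) for a single-output binary head: y_hat = sigma(W h + b).

    h: (n, d) features from the pre-trained backbone; W: (d,) weights; b: scalar bias.
    Returns the class-1 probabilities and the 0/1 decisions.
    """
    prob = sigmoid(h @ W + b)
    return prob, (prob >= threshold).astype(int)
```

During transfer learning only W and b (and any other newly added layers) are updated, while the backbone that produces h can stay frozen.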

5 Experimental Setup

This section explains the experimental details used to develop, train, and evaluate the sonar image classifiers.

5.1 Dataset

In the generation of the S3Simulator dataset, a 3D object model is generated from each silhouette image. To enhance realism, the silhouette images are acquired for army fighter planes, army bombers, naval planes, and battleships, as mentioned in Section 3.1.

For the AI investigation, we use the simulated S3Simulator dataset together with the real Seabed Objects-KLSG sonar dataset [9]. The KLSG dataset comprises 578 seafloor images, 385 wreck images, 36 drowning victim images, 62 aircraft images, and 129 mine images accumulated over a period of more than ten years. It was made possible with the generous assistance of numerous sonar equipment suppliers, including Lcocean, Hydro-tech Marine, Klein Marine, Tritech, and EdgeTech. Its images were obtained directly from the original large-scale side-scan sonar images without any preprocessing.

5.2 Evaluation protocol

In the evaluation of the sonar image classification task, standard classification metrics, namely accuracy and the confusion matrix, are used. Accuracy measures the fraction of predictions that a model gets right. Mathematically, accuracy (ACC) is formulated as

$$\mathrm{ACC} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (6)$$

where:

- TP (True Positives) is the number of images correctly classified as positive.
- TN (True Negatives) is the number of images correctly classified as negative.
- FP (False Positives) is the number of images incorrectly classified as positive.
- FN (False Negatives) is the number of images incorrectly classified as negative.

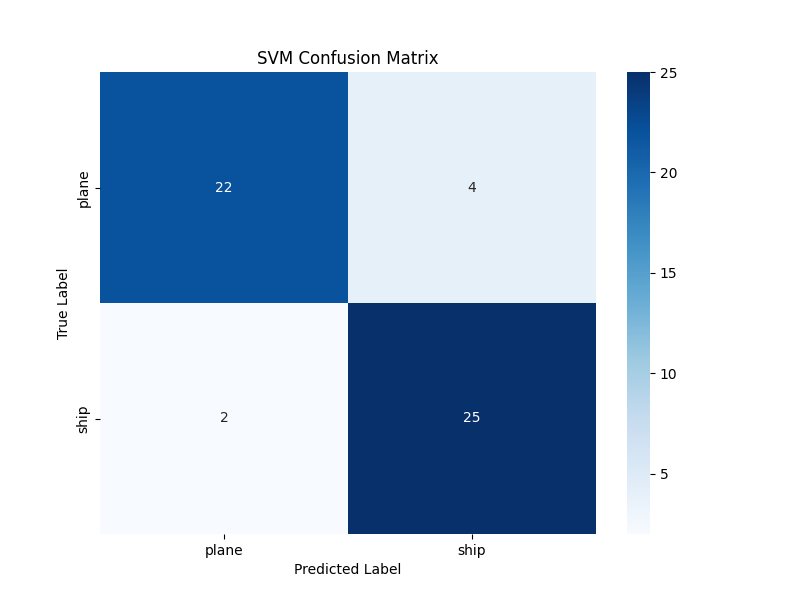

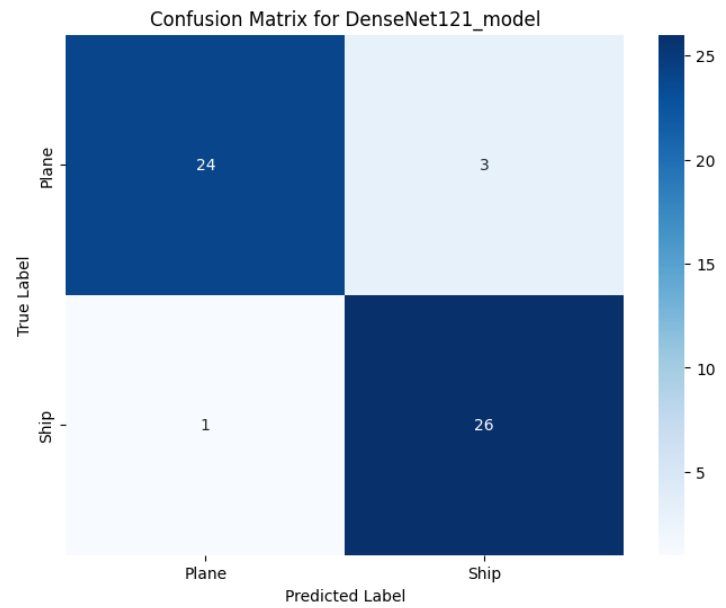

A confusion matrix displays the counts of True Positives, False Positives, True Negatives, and False Negatives produced by a model, as shown in Fig. 9. These counts provide the values needed to compute the accuracy of the model.
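The relationship between the confusion matrix and Eq. (6) can be sketched in a few lines: the diagonal holds the correct predictions (TP and TN in the binary case), so accuracy is the trace divided by the total count. The row-normalised diagonal gives one common definition of class-wise accuracy (per-class recall); that this is how the class-wise figures reported later were computed is an assumption, and the function names are illustrative.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes: int) -> np.ndarray:
    """Rows = true class, columns = predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)  # count each (true, pred) pair
    return cm


def accuracy(cm: np.ndarray) -> float:
    """Eq. (6): correct predictions (the diagonal) over all predictions."""
    return float(np.trace(cm) / cm.sum())


def per_class_accuracy(cm: np.ndarray) -> np.ndarray:
    """Fraction of each true class that is predicted correctly (row-normalised diagonal)."""
    return np.diag(cm) / cm.sum(axis=1)
```

For five test images with labels `[0, 0, 1, 1, 1]` and predictions `[0, 1, 1, 1, 0]`, the matrix is `[[1, 1], [1, 2]]` and the accuracy is 3/5 = 0.6.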

5.3 Implementation details

In this investigation, the models VGG16, VGG19, MobileNetV2, InceptionResNetV2, InceptionV3, ResNet50, and DenseNet121, pre-trained on the ImageNet dataset, are extended with two fully-connected layers of 1024 and 512 neurons. A dropout layer with a rate of 0.25 and a batch normalization layer are added to improve the model’s robustness. For training, we used the Adam optimizer with a learning rate of 0.0001 and a batch size of 16. We employed model checkpointing and early stopping to monitor training progress and prevent overfitting. In Gazebo, models are described in Simulation Description Format (.sdf) files that detail their physical characteristics, properties, visual appearance, collision geometry, etc. We used Ubuntu 22.04.4 LTS and the Gazebo multi-robot simulator, version 11.10.2, for the Gazebo simulation. Image classification is implemented with Google’s TensorFlow framework on Google Colab, using a T4 GPU with an allocation of 15 GB of RAM.
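A minimal sketch of generating such an .sdf file with Python's standard library is shown below, assuming a static model with a single link whose visual and collision geometry share one mesh, a pose given as x, y, z, roll, pitch, yaw, and ambient/diffuse material colours set from RGBA values. The model name, mesh URI and colour are hypothetical placeholders, SDF version 1.6 is chosen because Gazebo 11 accepts it, and the authors' actual model files may differ.

```python
import xml.etree.ElementTree as ET


def _sub(parent, tag, text=None, **attrs):
    """Append a child element with optional text and attributes."""
    el = ET.SubElement(parent, tag, attrs)
    if text is not None:
        el.text = text
    return el


def make_model_sdf(name, mesh_uri, pose=(0, 0, 0, 0, 0, 0), rgba=(0.5, 0.5, 0.5, 1.0)) -> str:
    """Build a static SDF model: one link whose visual and collision share a mesh.

    pose = (x, y, z, roll, pitch, yaw) in metres / radians; rgba = material colour.
    """
    sdf = ET.Element("sdf", version="1.6")
    model = _sub(sdf, "model", name=name)
    _sub(model, "static", "true")  # the wreck does not move on the seabed
    _sub(model, "pose", " ".join(f"{v:g}" for v in pose))
    link = _sub(model, "link", name="link")
    for kind in ("visual", "collision"):
        el = _sub(link, kind, name=kind)
        mesh = _sub(_sub(el, "geometry"), "mesh")
        _sub(mesh, "uri", mesh_uri)
        if kind == "visual":
            material = _sub(el, "material")
            colour = " ".join(f"{c:g}" for c in rgba)
            _sub(material, "ambient", colour)
            _sub(material, "diffuse", colour)
    return ET.tostring(sdf, encoding="unicode")
```

Changing only the `pose` tuple between renders is one way to obtain the varied object orientations described in Section 3.4.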

6 Experimental Results

6.1 S3Simulator dataset results

Following the overall architecture described in Section 3, the final pipeline for generating simulated sonar images is shown in Fig. 7. The pipeline consists of data acquisition, SAM, SelfCAD, the Gazebo simulator, and the computational imaging techniques employed to generate the S3Simulator dataset. Sample S3Simulator images are shown in Fig. 8.

6.2 Sonar Image classification

Referring to Section 4, a benchmark study of classification on the S3Simulator dataset is carried out using both Machine Learning (ML) and Deep Learning (DL) classifiers. Extensive analysis is conducted using simulated data, real data, and real + simulated data. The performance of the ML and DL classifiers is shown in Table 1 and Table 2, respectively. From Table 1, it can be observed that with simulated data, SVM performs best, with a test accuracy of 92%. On real data, the Random Forest classifier performs best, with a test accuracy of 83%. When training on real + simulated data and testing on real data, which represents a realistic deployment scenario in the wild, the SVM classifier performs best, with 88% accuracy. Overall, SVM provides the best performance in two of the three settings.

Table 1. Train and test accuracy of the classical ML classifiers.

| Training Data | Testing Data | Classifier | Train Accuracy | Test Accuracy |
|---|---|---|---|---|
| Simulated | Simulated | SVM | 1.00 | 0.92 |
| | | Random Forest | 1.00 | 0.69 |
| | | KNN | 0.75 | 0.56 |
| Real | Real | SVM | 1.00 | 0.77 |
| | | Random Forest | 1.00 | 0.83 |
| | | KNN | 0.83 | 0.72 |
| Real + Simulated | Real | SVM | 1.00 | 0.88 |
| | | Random Forest | 1.00 | 0.63 |
| | | KNN | 0.79 | 0.65 |

Table 2. Test accuracy of the DL models trained on real data and on real + simulated data.

| Model | Test accuracy (trained on real data) | Test accuracy (trained on real + simulated data) | Improvement (percentage points) |
|---|---|---|---|
| VGG_16 | 0.90 | 0.94 | +4 |
| VGG_19 | 0.87 | 0.92 | +5 |
| ResNet50 | 0.64 | 0.70 | +6 |
| InceptionV3 | 0.91 | 0.94 | +3 |
| DenseNet121 | 0.92 | 0.96 | +4 |
| MobileNetV2 | 0.89 | 0.94 | +5 |
| InceptionResNetV2 | 0.91 | 0.95 | +4 |

Analogous to the ML classifiers, the performance of the DL classifiers described in Section 5.3 is also investigated. Table 2 reports the test accuracy of the different models trained on real data and on real + simulated data. When training with real data only, DenseNet121 performs best, with an accuracy of 92%, followed by InceptionV3 and InceptionResNetV2 at 91%. When training with real + simulated data, DenseNet121 achieves the best performance, with a test accuracy of 96%. The accuracy of every model improves by 3 to 6 percentage points when simulated data are added to the real training data. This highlights the benefit of augmenting the training process with synthetic data that increases the number of images while replicating the quality of realistic sonar data and recreating real-world scenarios.
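The gains in Table 2 are absolute differences in test accuracy, i.e., percentage points rather than relative improvements. The short calculation below, which uses only the tabulated values, makes the distinction explicit; the dictionary and function names are illustrative.

```python
# Test accuracies copied from Table 2: (trained on real, trained on real + simulated).
TABLE_2 = {
    "VGG_16": (0.90, 0.94),
    "VGG_19": (0.87, 0.92),
    "ResNet50": (0.64, 0.70),
    "InceptionV3": (0.91, 0.94),
    "DenseNet121": (0.92, 0.96),
    "MobileNetV2": (0.89, 0.94),
    "InceptionResNetV2": (0.91, 0.95),
}


def improvements(real: float, combined: float):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    return round((combined - real) * 100, 2), round((combined - real) / real * 100, 2)


for model, (real, combined) in TABLE_2.items():
    pp, rel = improvements(real, combined)
    print(f"{model:18s} +{pp:.0f} pp  (+{rel:.1f}% relative)")
```

Every model gains 3 to 6 points; in relative terms the gains are larger, for example about 9.4% for ResNet50 (0.64 to 0.70).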

The confusion matrices of the best-performing ML (SVM) and DL (DenseNet121) models are depicted in Fig. 9. Both the overall and the class-wise accuracy are analysed in the test scenario. The accuracies of the best ML and DL classifiers are 88% and 96%, respectively. The accuracy of the "plane" class improves from 84% with the ML model to 88% with the DL model, and the accuracy of the "ship" class increases from 92% to 96%.

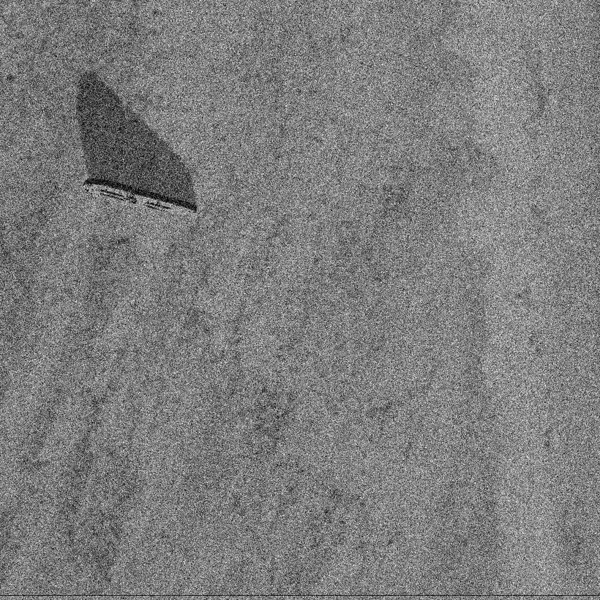

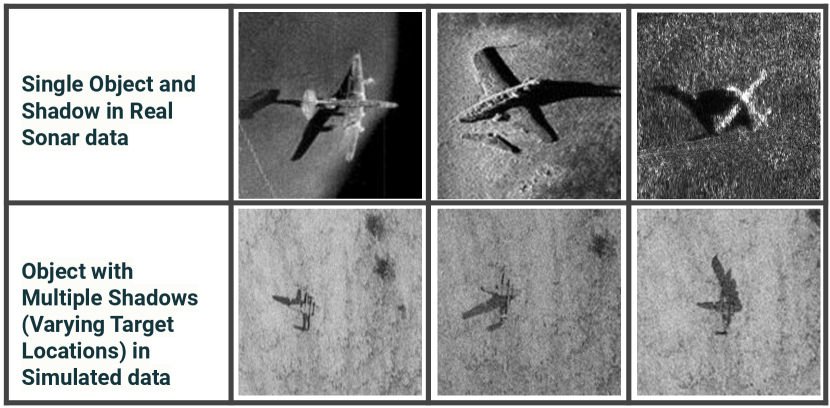

6.3 Visualization: Highlights & Shadows

In sonar image analysis, both the shadow and highlight regions are crucial for accurate classification and detection, as they provide complementary information about the objects’ shape and size. In many cases, the highlight area of an object in a sonar image may not be clearly visible, but its shadow can be distinctly observed as shown in Fig. 10(a). By emphasizing the shadow information, the "S3Simulator" dataset addresses an important gap in existing sonar image datasets and provides a valuable resource for researchers to advance the field of sonar image analysis.

The shadow characteristics in publicly available datasets are typically determined by the fixed position of the sonar relative to the object. The "S3Simulator" dataset overcomes this limitation by allowing sonar images with varying shadow angles to be generated for a given object. This is achieved through the flexibility of the simulation process, in which the position and orientation of the sonar device can be adjusted to create images with different shadow characteristics, as shown in Fig. 10(b). Furthermore, real-world side-scan sonar data contain a nadir zone, a crucial feature often missing in synthetic datasets. The "S3Simulator" dataset uniquely incorporates the nadir zone, enhancing the realism of the simulated sonar imagery, as shown in Fig. 11.
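Why the sonar's position changes the shadow can be illustrated with textbook flat-seabed geometry, which is an assumption here rather than the simulator's renderer: with the sonar at altitude H, an object of height h at horizontal range r casts a shadow of length r*h / (H - h), because the ray grazing the object's top reaches the seabed at r*H / (H - h). Moving the sonar (changing r or H) therefore lengthens or shortens the shadow. The function name is illustrative.

```python
def shadow_length(ground_range: float, object_height: float, sonar_altitude: float) -> float:
    """Length of the acoustic shadow cast on a flat seabed behind an object.

    The ray from the sonar (altitude H) grazing the object's top (height h at
    horizontal range r) reaches the seabed at r * H / (H - h), so the shadow
    extends r * h / (H - h) beyond the object.
    """
    if not 0 <= object_height < sonar_altitude:
        raise ValueError("object must be lower than the sonar")
    return ground_range * object_height / (sonar_altitude - object_height)
```

For example, an object 2 m tall at 10 m range below a sonar flying at 12 m casts a 2 m shadow; raising the sonar to 22 m halves it to 1 m.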

7 Conclusion and Future Works

In this work, we presented a novel benchmarking side-scan sonar simulator dataset, named the "S3Simulator dataset", for underwater sonar image analysis. By employing a systematic methodology that encompasses the collection of real-world images, reconstruction of 3D models, simulation, and computational imaging techniques, we have generated a comprehensive dataset that resembles real-world sonar images. The effectiveness of our methodology is demonstrated by benchmarking image classification results obtained from several machine learning and deep learning techniques applied to both simulated and real sonar datasets. Future enhancements aim to increase reliability and broaden usability by integrating 3D models of humans, mines, and marine life into diverse environmental settings for richer information. Additionally, incorporating advanced techniques such as diffusion models and generative AI could introduce greater diversity and improve the representativeness of the generated datasets. Addressing these limitations, namely the restricted range of object classes, environments, and image diversity, will be crucial for enhancing the reliability and scalability of simulation-based approaches in sonar image analysis. We also envisage extending our framework to multi-object detection and tracking. We anticipate that the S3Simulator dataset will significantly advance AI technology for marine exploration and surveillance by offering valuable sonar imagery for research purposes.

8 Acknowledgments

This work was partially supported by the Naval Research Board (NRB), DRDO, Government of India under grant number: NRB/505/SG/22-23.

References

- [1] Gazebo homepage. https://gazebosim.org/home, accessed: 3 Apr 2024

- [2] SelfCAD homepage. https://www.selfcad.com/, accessed: 3 Apr 2024

- [3] Admin, L.S.: U.S. Navy ship silhouettes. https://www.lonesentry.com/blog/u-s-navy-ship-silhouettes.html, accessed: 21 Aug 2024

- [4] Archive, I.D.: Silhouettes of British, American and German aircraft. https://ibccdigitalarchive.lincoln.ac.uk/omeka/collections/document/22384, accessed: 21 Aug 2024

- [5] Chungath, T.T., Nambiar, A.M., Mittal, A.: Transfer learning and few-shot learning based deep neural network models for underwater sonar image classification with a few samples. IEEE Journal of Oceanic Engineering (2023)

- [6] Cutler, A., Cutler, D., Stevens, J.: Random Forests, vol. 45, pp. 157–176 (01 2011). https://doi.org/10.1007/978-1-4419-9326-7_5

- [7] Du, X., Sun, Y., Song, Y., Sun, H., Yang, L.: A comparative study of different CNN models and transfer learning effect for underwater object classification in side-scan sonar images. Remote Sensing 15(3), 593 (2023)

- [8] Guo, G., Wang, H., Bell, D., Bi, Y., Greer, K.: kNN model-based approach in classification. In: On The Move to Meaningful Internet Systems 2003: CoopIS, DOA, and ODBASE: OTM Confederated International Conferences, CoopIS, DOA, and ODBASE 2003, Catania, Sicily, Italy, November 3-7, 2003. Proceedings. pp. 986–996. Springer (2003)

- [9] Huo, G., Wu, Z., Li, J.: Underwater object classification in sidescan sonar images using deep transfer learning and semisynthetic training data. IEEE Access 8, 47407–47418 (2020)

- [10] Karine, A., Lasmar, N., Baussard, A., El Hassouni, M.: Sonar image segmentation based on statistical modeling of wavelet subbands. In: 2015 IEEE/ACS 12th International Conference of Computer Systems and Applications (AICCSA). pp. 1–5. IEEE (2015)

- [11] Kirillov, A., Mintun, E., Ravi, N., Mao, H., Rolland, C., Gustafson, L., Xiao, T., Whitehead, S., Berg, A.C., Lo, W.Y., et al.: Segment anything. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). pp. 4015–4026 (2023)

- [12] Lai, Y.: A comparison of traditional machine learning and deep learning in image recognition. In: Journal of Physics: Conference Series. vol. 1314, p. 012148. IOP Publishing (2019)

- [13] Lee, S., Park, B., Kim, A.: Deep learning from shallow dives: Sonar image generation and training for underwater object detection. arXiv preprint arXiv:1810.07990 (2018)

- [14] Li, K., Li, C.L., Zhang, W.: Research of diver sonar image recognition based on support vector machine. Advanced Materials Research 785, 1437–1440 (2013)

- [15] Liu, D., Wang, Y., Ji, Y., Tsuchiya, H., Yamashita, A., Asama, H.: CycleGAN-based realistic image dataset generation for forward-looking sonar. Advanced Robotics 35(3-4), 242–254 (2021)

- [16] O’Shea, K., Nash, R.: An introduction to convolutional neural networks. arXiv preprint arXiv:1511.08458 (2015)

- [17] Rutledge, J., Yuan, W., Wu, J., Freed, S., Lewis, A., Wood, Z., Gambin, T., Clark, C.: Intelligent shipwreck search using autonomous underwater vehicles. In: International Conference on Robotics and Automation (ICRA). pp. 6175–6182 (2018)

- [18] Sethuraman, A.V., Sheppard, A., Bagoren, O., Pinnow, C., Anderson, J., Havens, T.C., Skinner, K.A.: Machine learning for shipwreck segmentation from side scan sonar imagery: Dataset and benchmark. arXiv preprint arXiv:2401.14546 (2024)

- [19] Shin, J., Chang, S., Bays, M.J., Weaver, J., Wettergren, T.A., Ferrari, S.: Synthetic sonar image simulation with various seabed conditions for automatic target recognition. In: OCEANS 2022, Hampton Roads. pp. 1–8. IEEE (2022)

- [20] Steiniger, Y., Kraus, D., Meisen, T.: Survey on deep learning based computer vision for sonar imagery. Engineering Applications of Artificial Intelligence 114, 105157 (2022)

- [21] Sung, M., Kim, J., Lee, M., Kim, B., Kim, T., Kim, J., Yu, S.C.: Realistic sonar image simulation using deep learning for underwater object detection. International Journal of Control, Automation and Systems 18(3), 523–534 (2020)

- [22] Weiss, K., Khoshgoftaar, T.M., Wang, D.: A survey of transfer learning. Journal of Big Data 3, 1–40 (2016)

- [23] Xi, J., Ye, X.: Sonar image target detection based on simulated stain-like noise and shadow enhancement in optical images under zero-shot learning. Journal of Marine Science and Engineering 12(2), 352 (2024)

- [24] Xu, M., Yoon, S., Fuentes, A., Park, D.S.: A comprehensive survey of image augmentation techniques for deep learning. Pattern Recognition 137, 109347 (2023)

- [25] Yang, Z., Zhao, J., Zhang, H., Yu, Y., Huang, C.: A side-scan sonar image synthesis method based on a diffusion model. Journal of Marine Science and Engineering 11(6), 1103 (2023)

- [26] Zhang, F., Zhang, W., Cheng, C., Hou, X., Cao, C.: Detection of small objects in side-scan sonar images using an enhanced YOLOv7-based approach. Journal of Marine Science and Engineering 11(11), 2155 (2023)

- [27] Zhang, P., Tang, J., Zhong, H., Ning, M., Liu, D., Wu, K.: Self-trained target detection of radar and sonar images using automatic deep learning. IEEE Transactions on Geoscience and Remote Sensing 60, 1–14 (2021)

- [28] Zhu, M., Song, Y., Guo, J., Feng, C., Li, G., Yan, T., He, B.: PCA and kernel-based extreme learning machine for side-scan sonar image classification. In: 2017 IEEE Underwater Technology (UT). pp. 1–4. IEEE (2017)