S2WAT: Image Style Transfer via Hierarchical Vision Transformer

Using Strips Window Attention

Abstract

Transformer’s recent integration into style transfer leverages its proficiency in establishing long-range dependencies, albeit at the expense of attenuated local modeling. This paper introduces Strips Window Attention Transformer (S2WAT), a novel hierarchical vision transformer designed for style transfer. S2WAT employs attention computation in diverse window shapes to capture both short- and long-range dependencies. The merged dependencies utilize the “Attn Merge” strategy, which adaptively determines spatial weights based on their relevance to the target. Extensive experiments on representative datasets show the proposed method’s effectiveness compared to state-of-the-art (SOTA) transformer-based and other approaches. The code and pre-trained models are available at https://github.com/AlienZhang1996/S2WAT.

Introduction

Background. Image style transfer imparts artistic characteristics from a style image to a content image, evolving from traditional (Efros and Freeman 2001) to iterative (Gatys, Ecker, and Bethge 2015, 2016) and feed-forward methods (Johnson, Alahi, and Fei-Fei 2016; Chen et al. 2017). Handling multiple styles concurrently remains a challenge, addressed by Universal Style Transfer (UST) (Park and Lee 2019; Kong et al. 2023; Li et al. 2022). This sparks innovative approaches like attention mechanisms for feature stylization (Yao et al. 2019; Deng et al. 2020; Chen et al. 2021), the Flow-based method (An et al. 2021) for content leakage, and Stable Diffusion Models (SDM) for creative outcomes (Zhang et al. 2023). New neural architectures, notably the transformer, show remarkable potential. (Deng et al. 2022) introduces StyTr2, leveraging transformers for SOTA performance. However, StyTr2’s encoder risks losing information due to one-time downsampling, impacting local details with global MSA (multi-head self-attention).

Challenge. To enhance the transformer’s local modeling capability, recent advancements propose the use of window-based attention computation, exemplified by hierarchical structures like Swin-Transformer (Liu et al. 2021). However, applying window-based transformers directly for feature extraction in style transfer can lead to grid-like patterns, as depicted in Fig. 1 (c). This arises due to the localized nature of window attention, termed the locality problem. While window shift can capture long-range dependencies (Liu et al. 2021), it necessitates deep layer stacks, introducing substantial model complexity for style transfer, particularly with high-resolution samples.

Motivation and Technical Novelty.Diverging from current transformer-based approaches, we introduce a novel hierarchical transformer framework for image style transfer, referred to as S2WAT (Strips Window Attention Transformer). This structure meticulously captures both local and global feature extraction, inheriting the efficiency of window-based attention. In detail, we introduce a distinct attention mechanism (Strips Window Attention, SpW Attention) that amalgamates outputs from multiple window attentions of varying shapes. These diverse window shapes enhance the equilibrium between modeling short- and long-range dependencies, and their integration is facilitated through our devised “Attn Merge” technique.

In this paper, we formulate the SpW Attention in a simple while effective compound mode, which encompasses three window types: horizontal strip-like, vertical strip-like, and square windows. The attention computations derived from strip windows emphasize long-range modeling for extracting non-local features, while the square window attention focuses on short-range modeling for capturing local features.

Furthermore, the “Attn Merge” method combines attention outputs from various windows by computing spatial correlations between them and the input. These calculated correlation scores serve as merging weights. In contrast to static merge strategies like summation and concatenation, “Attn Merge” dynamically determines the significance of different window attentions, thus enhancing transfer effect.

Contributions. Extensive quantitative and qualitative experiments are conducted to prove the effectiveness of the proposed framework, including a large-scale user study. The main contributions of our work include:

-

•

We introduce a pioneering image style transfer framework, S2WAT, founded on a hierarchical transformer. This framework adeptly undertakes both short- and long-range modeling concurrently, effectively mitigating the challenge of locality issues.

-

•

We devise a novel attention computation within the transformer for style transfer, termed SpW Attention. This mechanism intelligently merges outputs from diverse window attentions using the “Attn Merge” approach.

-

•

We extensively evaluate our proposed S2WAT on well-established public datasets, demonstrating its state-of-the-art performance for the style transfer task.

Related Work

Image Style Transfer. Style transfer methods can fall into single-style (Ulyanov, Vedaldi, and Lempitsky 2016), multiple-style (Chen et al. 2017), and arbitrary-style (UST) (Zhang et al. 2022; Kong et al. 2023; Ma et al. 2023) categories based on their generalization capabilities. Besides models based on CNNs, recent works include Flow-based ArtFlow (An et al. 2021), transformer-based StyTr2 (Deng et al. 2022), and SDM-based InST (Zhang et al. 2023). ArtFlow, with Projection Flow Networks (PFN), achieves content-unbiased results, while IEST (Chen et al. 2021) and CAST (Zhang et al. 2022) use contrastive learning for appealing effects. InST achieves creativity through SDM. Models like (Wu et al. 2021b; Zhu et al. 2023; Hong et al. 2023) use transformers to fuse image features, and (Liu et al. 2022; Bai et al. 2023) encode text prompts for text-driven style transfer. StyTr2 leverages transformers as the backbone for pleasing outcomes. Yet, hierarchical transformers remain unexplored in style transfer.

Hierarchical Vison Transformer. Lately, there has been a resurgence of interest in hierarchical architectures within the realm of transformers. Examples include LeViT (Graham et al. 2021) & CvT (Wu et al. 2021a), which employ global MSA; PVT (Wang et al. 2021) & MViT (Fan et al. 2021), which compress the resolution of K & V. However, in these approaches, local information is not adequately modeled. While Swin effectively captures local information through shifted windows, it still gives rise to the locality problem when applied to style transfer (see Fig. 1). Intuitive attempts, such as inserting global MSA (see Section Pre-Analysis) or introducing Mix-FFN (Xie et al. 2021) by convolutions (see appendix), are powerless for locality problem. In the context of style transfer, a promising avenue involves advancing further with a new transformer architecture that encompasses both short- and long-range dependency awareness and possesses the capability to mitigate the locality problem.

Differences with Other Methods While the attention mechanism in certain prior methods may share similarities with the proposed SpW Attention, several key distinctions exist. 1) The fusion strategy stands out: our proposed “Attn Merge” demonstrates remarkable superiority in image style transfer. 2) In our approach, all three window shapes shift based on the computation point, and their sizes dynamically adapt to variations in input sizes. Detailed differentiations from previous methods, such as CSWin, Longformer, and iLAT have been outlined in the Appendix.

Pre-Analysis

Our preliminary analysis aims to unveil the underlying causes of grid-like outcomes (locality problem) that arise when directly employing Swin for style transfer. Our hypothesis points towards the localized nature of window attention as the primary factor. To validate this hypothesis, we undertake experiments across four distinct facets as discussed in this Section. The details of the models tested in this part can be found in Appendix.

Global MSA for Locality Problem

The locality problem should be relieved or excluded when applying global MSA instead of window or shifted window attention, if the locality of window attention is the culprit. In the Swin-based encoder, we substitute the last one or two window attentions with global MSA, configuring the window size for target layers at 224 (matching input resolution). Fig. 2 (a) presents the experiment results, highlighting grid-like textures at a window size of 7 (column 3) and block-like formations when the last window attention is swapped with global MSA (column 4). While replacing the last two window attentions with global MSA effectively alleviates grid-like textures, complete exclusion remains a challenge. This series of experiments substantiates that the locality problem indeed stems from the characteristics of window attention.

Influence of Window Size for Locality Problem

The window size in window attention, akin to the receptive field in CNN, delineates the computational scope. To examine the impact of window size, assuming the locality of window attention causes the locality problem, we investigate three scenarios: window sizes of 4, 7, and 14 for the last stage. The outcomes of these experiments are depicted in Fig. 2 (b). Notably, relatively small blocks emerge with a window size of 4 (column 4), while a shifted window’s rough outline materializes with a window size of 14 (column 5). This series of experiments underscores the pivotal role of window size in the locality problem.

Locality Phenomenon in Feature Maps

In previous parts, we discuss the changes in external factors, and we will give a shot at internal factors in the following parts. Since the basis of the transformer-based transfer module is the similarity between content and style features, the content features should leave clues if the stylized images are grid-like. For this reason, we check out all the feature maps from the last layer of the encoder and list some of them (see Fig. 3), which are convincing evidence to prove that features from window attention have strongly localized.

Locality Phenomenon in Attention Maps

To highlight the adverse impact of content feature locality on stylization, we analyze attention maps from the first inter-attention (Cheng, Dong, and Lapata 2016) in the transfer module (see Fig. 4). Five points, representing corners (p1: top-left in red, p2: top-right in green, p3: bottom-left in blue, p4: bottom-right in black), and the central point (p5: white) are selected from style features to gauge their similarity with content features. These points, extracted from specific columns of attention maps and reshaped into squares, mirror content feature shapes. The similarity map of p1 reveals pronounced responses aligned with red blocks in the stylized image. Conversely, p2, p3, and p5 exhibit robust responses in areas devoid of red blocks. As for p4’s similarity map, responses are distributed widely. These outcomes underline the propagation of window attention’s locality from content features within the encoder to the attention maps of the transfer module. This influence significantly disrupts the stylization process, ultimately culminating in the locality problem. To address this issue, we present the SpW Attention and S2WAT solutions.

Method

Fig. 5 (c) presents the workflow of proposed S2WAT.

Strips Window Attention

As illustrated in Fig. 5 (b), SpW Attention comprises two distinct phases: a window attention phase and a fusion phase.

Window Attention. Assuming input features possess a shape of and denotes the strip width, the first phase involves three distinct window attentions: a horizontal strip-like window attention with a window size of , a vertical strip-like window attention with a window size of , and a square window attention with a window size of (where ). A single strip-like window attention captures local information along one axis while accounting for long-range dependencies along the other. In contrast, the square window attention focuses on the surrounding information. Combining the outputs of these window attentions results in outputs that consider both local information and long-range dependencies. Illustrated in Fig. 6, the merged approach gathers information from a broader range of targets, striking a favorable balance between computational complexity and the ability to sense global information.

In computing square window attention, we follow (Liu et al. 2021) to include relative position bias to each head in computing the attention map, as

| (1) |

where are the query, key, and value matrices; is the dimension of query/key, is the number of patches in the window, and denotes multi-head self-attention using window in shape of . We exclusively apply relative position bias to square window attention, as introducing it to strip-like window attention did not yield discernible enhancements.

Attn Merge. Following the completion of the window attention phase, a fusion module named “Attn Merge” is engaged to consolidate the outcomes with the inputs. Illustrated in Fig. 7, “Attn Merge” comprises three core steps: first, tensor stacking; second, similarity computation between the first tensor and the rest at every spatial location; third, weighted summation based on similarity. The computational efficiency of “Attn Merge” is noteworthy, as

| (2) | ||||

where are input tensors and is the outputs; Stack denotes the operation to collect tensors in a new dimension and represents the operation to add or subtract a dimension of tensor.

Strips Window Attention Block. We now provide an overview of the comprehensive workflow of the SpW Attention block. The structure of the SpW Attention block mirrors that of a standard transformer block, except for the substitution of MSA with a SpW Attention (SpW-MSA) module. As depicted in Fig. 5 (b), a SpW Attention block comprises a SpW-MSA module, succeeded by a two-layer MLP featuring GLUE as the non-linear activation in between. Preceding each SpW-MSA module and MLP, a LayerNorm (LN) operation is applied, and a residual connection is integrated after each module. The computation process of a SpW Attention block unfolds as follows:

| (3) | ||||

where “” means “Attn Merge”, , and denote the outputs of MLP, “Attn Merge”, and W-MSA for block , respectively; denotes multi-head self-attention using window in shape of . As shown in (3), the SpW Attention block primarily consists of two parts: SpW Attention (comprising W-MSA and “Attn Merge”) and an MLP.

Computational Complexity. To make the cost of computation in SpW Attention clear, we compare the computational complexity of MSA, W-MSA, and the proposed SpW-MSA. Supposing the window size of W-MSA and the strip width of SpW-MSA are equal to and is the dimension of inputs, the computational complexity of a global MSA, a square window based one, and a Strips Window based one on an image of patches are:

| (4) | ||||

| (5) | ||||

| (6) |

As shown in Eqs. (4)-(6), MSA is quadratic to the patch number , and W-MSA is linear when is fixed. And the proposed SpW-MSA is something in the middle.

Overall Architecture

In contrast to StyTr2 (Deng et al. 2022), which employs separate encoders for different input domains, we adhere to the conventional encoder-transfer-decoder design of UST. This architecture encodes content and style images using a single encoder. An overview is depicted in Fig. 5.

Encoder. Like Swin, S2WAT’s encoder initially divides content and style images into non-overlapping patches using a patch partition module. These patches serve as “tokens” in transformers. We configure the patch size as , resulting in patch dimensions of . Subsequently, a linear embedding layer transforms the patches into a user-defined dimension ().

After embedding, the patches proceed through a series of consecutive SpW Attention blocks, nestled between padding and un-padding operations. Patches are padded to achieve divisibility by twice the strip width and cropped (un-padded) after SpW Attention blocks, preserving the patch count. Notably, patch padding employs reflection to mitigate potential light-edge artifacts that can arise when using constant 0 padding. These SpW Attention blocks uphold the patch count () and, in conjunction with the patch embedding layer and padding/un-padding operations, constitute “Stage 1”.

To achieve multi-scale features, gradual reduction of the patch count is necessary as the network deepens. Swin introduces a patch merging layer as a down-sample module, extracting elements with a two-step interval along the horizontal and vertical axes. By concatenating groups of these features in the channel dimension and reducing channels from to through linear projection, a 2x downsampling result is obtained. Subsequent application of SpW Attention blocks, flanked by padding and un-padding operations, transforms the features while preserving a resolution of . This combined process is designated as “Stage 2”. This sequence is reiterated for “Stage 3”, yielding an output resolution of . Consequently, the encoder’s hierarchical features in S2WAT can readily be employed with techniques like FPN or U-Net.

Transfer Module. A multi-layer transformer decoder replaces the transfer module, similar to StyTr2 (Deng et al. 2022). In our implementation, we maintain a close resemblance to the original transformer decoder (Vaswani et al. 2017), with two key distinctions from StyTr2: a) The initial attention module of each transformer decoder layer is MSA, whereas StyTr2 employs MHA (multi-head attention); b) LayerNorm precedes the attention module and MLP, rather than following them. The structure is presented in Fig. 5 (e) and more details can be found in codes.

Network Optimization

Similar to (Huang and Belongie 2017), we formulate two distinct perceptual losses for gauging the content dissimilarity between stylized images and content images , along with the style dissimilarity between stylized images and style images . The content perceptual loss is defined as:

| (7) |

where the overline denotes mean-variance channel-wise normalization; represents extracting features of layer from a pre-trained VGG19 model; is a set consisting of and in the VGG19. The style perceptual loss is defined as:

| (8) | ||||

where and denote mean and variance of features, respectively; and is a set consisting of , , and in the VGG19.

We also adopt identity losses (Park and Lee 2019) to further maintain the structure of the content image and the style characteristics of the style image. The two different identity losses are defined as:

| (9) | |||

| (10) |

where (or ) denotes the output image stylized from two same content (or style) images and is a set consisting of , , and in the VGG19. Finally, our network is trained by minimizing the loss function defined as:

| (11) |

where , , , and are the weights of different losses. We set the weights to 2, 3, 50, and 1, relieving the impact of magnitude differences.

Experiments

MS-COCO (Lin et al. 2014) and WikiArt (Phillips and Mackintosh 2011) are used as the content dataset and the style dataset respectively. Other implementation details are available in Appendix and codes.

Style Transfer Results

In this Section, we compare the results between the proposed S2WAT and previous SOTAs, including AdaIN (Huang and Belongie 2017), WCT (Li et al. 2017), SANet (Park and Lee 2019), MCC (Deng et al. 2021), ArtFlow (An et al. 2021), IEST (Chen et al. 2021), CAST (Zhang et al. 2022), StyTr2 (Deng et al. 2022) and InST (Zhang et al. 2023).

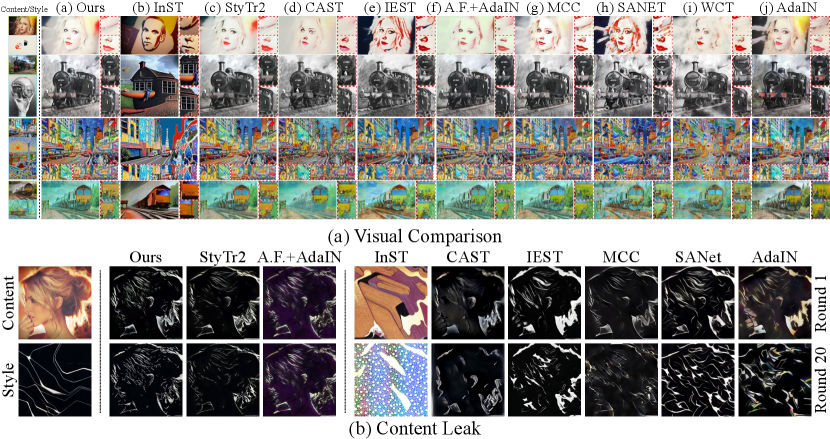

Qualitative Comparison. In Fig. 8 (a), we present visual outcomes of the compared algorithms. AdaIN, relying on mean and variance alignment, fails to capture intricate style patterns. While WCT achieves multi-level stylization, it compromises content details. SANet, leveraging attention mechanisms, enhances style capture but may sacrifice content details. MCC, lacking non-linear operations, faces overflow issues. Flow-based ArtFlow produces content-unbiased outcomes but may exhibit undesired patterns at borders. CAST retains content structure through contrastive methods but may compromise style. InST’s diffusion models yield creative results but occasionally sacrifice consistency. StyTr2 and proposed S2WAT strike a superior balance, with S2WAT excelling in preserving content details (e.g., numbers on the train, the woman’s glossy lips, and letters on billboards), as highlighted in dashed boxes in Fig. 8 (a). Additional results are available in the Appendix.

Quantitative Comparison. In this section, we follow a methodology akin to (Huang and Belongie 2017; An et al. 2021; Deng et al. 2022) utilizing losses as indirect metrics. Style, content, and identity losses serve as metrics, evaluating style quality, content quality, and input information retention, respectively. Additionally, inspired by (An et al. 2021), the Structural Similarity Index (SSIM) is included to gauge structure preservation. As shown in Table 1, S2WAT achieves the lowest content and identity losses, while SANet exhibits the lowest style loss. StyTr2 and S2WAT show comparable loss performance, emphasizing style and content, respectively. Due to its content-unbiased nature, ArtFlow registers identity losses of 0, signaling an unbiased approach. While ArtFlow is unbiased, S2WAT outperforms it in style and SSIM. S2WAT attains the highest SSIM, indicating superior content structure retention. It excels in preserving both content input structures and artistic style characteristics simultaneously.

| Method | Ours | InST | StyTr2 | CAST | IEST | ArtFlow-AdaIN | ArtFlow-WCT | MCC | SANet | WCT | AdaIN |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Content Loss | 1.66 | 3.73 | 1.83 | 2.07 | 1.81 | 1.93 | 1.73 | 1.92 | 2.16 | 2.56 | 1.71 |

| Style Loss | 1.74 | 29.98 | 1.52 | 4.33 | 2.72 | 1.90 | 1.89 | 1.70 | 1.11 | 2.23 | 3.50 |

| Identity Loss 1 | 0.16 | 0.71 | 0.26 | 1.94 | 0.91 | 0.00 | 0.00 | 1.07 | 0.81 | 3.01 | 2.54 |

| Identity Loss 2 | 1.38 | 134.23 | 3.10 | 18.72 | 7.16 | 0.00 | 0.00 | 7.72 | 6.03 | 21.88 | 17.97 |

| SSIM | 0.651 | 0.401 | 0.605 | 0.619 | 0.551 | 0.578 | 0.612 | 0.578 | 0.448 | 0.364 | 0.539 |

Content Leak

Content leak problem occur when applying the same style image to a content image repeatedly, especially if the model struggles to preserve content details impeccably. Following (An et al. 2021; Deng et al. 2022), We investigate content leakage in the stylization process, focusing on S2WAT and comparing it to ArtFlow, StyTr2, CNN-based, and SDM-based methods. Our experiments, detailed in Fig. 8 (b), reveal S2WAT and StyTr2, both transformer-based, exhibit minimal content detail loss over 20 iterations, surpassing CNN and SDM methods known for noticeable blurriness. While CAST alleviates content leak partially, the stylized effect remains suboptimal. In summary, S2WAT effectively mitigates the content leak issue.

InST occasionally underperforms, especially when content and style input styles differ significantly, potentially due to overfitting in the Textual Inversion module during single-image training. More details are available in the Appendix.

Ablation Study

Attn Merge. In order to showcase the effectiveness and superiority of “Attn Merge”, we undertake experiments where “Attn Merge” is replaced by fusion strategies such as the concatenation operation (as employed by CSWin) or the sum operation. The outcomes are depicted in Fig. 9. Stylized images generated using the sum operation are extensively corrupted, indicating a failure in model optimization. On the other hand, outputs obtained through concatenation relinquish a substantial portion of information from input images, particularly the style images. An intuitive rationale for this phenomenon lies in the optimization challenges posed by straightforward fusion operations. Comprehensive explanations are available in the Appendix. The proposed “Attn Merge”, however, facilitates smooth information transmission, allowing the model to undergo normal training.

Strips Window. To verify the demand to fuse outputs from window attention of various sizes, we carry out experiments employing window attention with distinct window sizes independently. As illustrated in Fig. 10, the utilization of horizontal or vertical strip-like windows in isolation yields corresponding patterns. Applying square windows alone results in grid-like patterns. However, the incorporation of “Attn Merge” to fuse outcomes leads to pleasing stylized images, surpassing the results obtained solely from window attention. Further details regarding the ablation study for Swin and Swin with Mix-FFN can be found in the Appendix.

User Study

In comparing virtual stylization effects between S2WAT and the aforementioned SOTAs like StyTr2, ArtFlow, MCC, and SANet, user studies were conducted. Using a widely-employed online questionnaire platform, we created a dataset comprising 528 stylized images from 24 content images and 22 style images. Participants, briefed on image style transfer and provided with evaluation criteria, assessed 31 randomly selected content and style combinations. Criteria emphasized preserving content details and embodying artistic attributes. With 3002 valid votes from 72 participants representing diverse backgrounds, including high school students and professionals in computer science, art, and photography, our method achieved a marginal victory in the user study, as reflected in Table 2. Additional details including an example questionnaire page can be found in the Appendix.

| Method | Ours | StyTr2 | ArtFlow | MCC | SANet |

|---|---|---|---|---|---|

| Percent(%) | 25.4 | 23.6 | 13.3 | 19.4 | 18.3 |

Conclusion

In this paper, we introduce S2WAT, a pioneering image style transfer framework founded upon a hierarchical vision transformer architecture. S2WAT’s prowess lies in its capacity to simultaneously capture local and global information through SpW Attention. The SpW Attention mechanism, featuring diverse window attention shapes, ensures an optimal equilibrium between short- and long-range dependency modeling, further enhanced by our proprietary “Attn Merge”. This adaptive merging technique efficiently gauges the significance of various window attentions based on target similarity. Furthermore, S2WAT mitigates the content leak predicament, yielding stylized images endowed with vibrant style attributes and intricate content intricacies.

Acknowledgement

This work was supported by the National Key R&D Program of China (2021YFB3100700), the National Natural Science Foundation of China (62076125, U20B2049, U22B2029, 62272228), and Shenzhen Science and Technology Program (JCYJ20210324134408023, JCYJ20210324134810028).

References

- An et al. (2021) An, J.; Huang, S.; Song, Y.; Dou, D.; Liu, W.; and Luo, J. 2021. Artflow: Unbiased image style transfer via reversible neural flows. In CVPR.

- Bai et al. (2023) Bai, Y.; Liu, J.; Dong, C.; and Yuan, C. 2023. ITstyler: Image-optimized Text-based Style Transfer. arXiv.

- Beltagy, Peters, and Cohan (2020) Beltagy, I.; Peters, M. E.; and Cohan, A. 2020. Longformer: The long-document transformer. arXiv.

- Cao et al. (2021) Cao, C.; Hong, Y.; Li, X.; Wang, C.; Xu, C.; Fu, Y.; and Xue, X. 2021. The image local autoregressive transformer. Advances in Neural Information Processing Systems, 34: 18433–18445.

- Chen et al. (2017) Chen, D.; Yuan, L.; Liao, J.; Yu, N.; and Hua, G. 2017. Stylebank: An explicit representation for neural image style transfer. In CVPR.

- Chen et al. (2021) Chen, H.; Wang, Z.; Zhang, H.; Zuo, Z.; Li, A.; Xing, W.; Lu, D.; et al. 2021. Artistic style transfer with internal-external learning and contrastive learning. In NeurIPS.

- Cheng, Dong, and Lapata (2016) Cheng, J.; Dong, L.; and Lapata, M. 2016. Long short-term memory-networks for machine reading. In EMNLP.

- Deng et al. (2021) Deng, Y.; Tang, F.; Dong, W.; Huang, H.; Ma, C.; and Xu, C. 2021. Arbitrary video style transfer via multi-channel correlation. In AAAI.

- Deng et al. (2022) Deng, Y.; Tang, F.; Dong, W.; Ma, C.; Pan, X.; Wang, L.; and Xu, C. 2022. StyTr2: Image Style Transfer with Transformers. In CVPR.

- Deng et al. (2020) Deng, Y.; Tang, F.; Dong, W.; Sun, W.; Huang, F.; and Xu, C. 2020. Arbitrary style transfer via multi-adaptation network. In ACM MM.

- Dong et al. (2022) Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; and Guo, B. 2022. Cswin transformer: A general vision transformer backbone with cross-shaped windows. In CVPR.

- Efros and Freeman (2001) Efros, A. A.; and Freeman, W. T. 2001. Image quilting for texture synthesis and transfer. In Proceedings of the 28th Annual Conference on Computer Graphics and Interactive Techniques.

- Fan et al. (2021) Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; and Feichtenhofer, C. 2021. Multiscale vision transformers. In ICCV.

- Gatys, Ecker, and Bethge (2015) Gatys, L.; Ecker, A. S.; and Bethge, M. 2015. Texture synthesis using convolutional neural networks. In NeurIPS.

- Gatys, Ecker, and Bethge (2016) Gatys, L. A.; Ecker, A. S.; and Bethge, M. 2016. A neural algorithm of artistic style. In Vision Sciences Society.

- Graham et al. (2021) Graham, B.; El-Nouby, A.; Touvron, H.; Stock, P.; Joulin, A.; Jégou, H.; and Douze, M. 2021. Levit: a vision transformer in convnet’s clothing for faster inference. In ICCV.

- Hong et al. (2023) Hong, K.; Jeon, S.; Lee, J.; Ahn, N.; Kim, K.; Lee, P.; Kim, D.; Uh, Y.; and Byun, H. 2023. AesPA-Net: Aesthetic Pattern-Aware Style Transfer Networks. In ICCV.

- Huang and Belongie (2017) Huang, X.; and Belongie, S. 2017. Arbitrary style transfer in real-time with adaptive instance normalization. In ICCV.

- Johnson, Alahi, and Fei-Fei (2016) Johnson, J.; Alahi, A.; and Fei-Fei, L. 2016. Perceptual losses for real-time style transfer and super-resolution. In ECCV.

- Kingma and Ba (2015) Kingma, D. P.; and Ba, J. 2015. Adam: A method for stochastic optimization. In ICLR.

- Kong et al. (2023) Kong, X.; Deng, Y.; Tang, F.; Dong, W.; Ma, C.; Chen, Y.; He, Z.; and Xu, C. 2023. Exploring the Temporal Consistency of Arbitrary Style Transfer: A Channelwise Perspective. IEEE Transactions on Neural Networks and Learning Systems.

- Li et al. (2022) Li, G.; Cheng, B.; Cheng, L.; Xu, C.; Sun, X.; Ren, P.; Yang, Y.; and Chen, Q. 2022. Arbitrary Style Transfer with Semantic Content Enhancement. In The 18th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry.

- Li et al. (2017) Li, Y.; Fang, C.; Yang, J.; Wang, Z.; Lu, X.; and Yang, M.-H. 2017. Universal style transfer via feature transforms. In NeurIPS.

- Lin et al. (2014) Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; and Zitnick, C. L. 2014. Microsoft coco: Common objects in context. In ECCV.

- Liu et al. (2021) Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; and Guo, B. 2021. Swin transformer: Hierarchical vision transformer using shifted windows. In ICCV.

- Liu et al. (2022) Liu, Z.-S.; Wang, L.-W.; Siu, W.-C.; and Kalogeiton, V. 2022. Name your style: An arbitrary artist-aware image style transfer. arXiv.

- Ma et al. (2023) Ma, Y.; Zhao, C.; Li, X.; and Basu, A. 2023. RAST: Restorable Arbitrary Style Transfer via Multi-Restoration. In WACV.

- Park and Lee (2019) Park, D. Y.; and Lee, K. H. 2019. Arbitrary style transfer with style-attentional networks. In CVPR.

- Paszke et al. (2017) Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; and Lerer, A. 2017. Automatic differentiation in pytorch. In NeurIPS.

- Phillips and Mackintosh (2011) Phillips, F.; and Mackintosh, B. 2011. Wiki Art Gallery, Inc.: A case for critical thinking. Issues in Accounting Education.

- Ulyanov, Vedaldi, and Lempitsky (2016) Ulyanov, D.; Vedaldi, A.; and Lempitsky, V. 2016. Instance normalization: The missing ingredient for fast stylization. arXiv.

- Vaswani et al. (2017) Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A. N.; Kaiser, Ł.; and Polosukhin, I. 2017. Attention is all you need. In NeurIPS.

- Wang et al. (2021) Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; and Shao, L. 2021. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In ICCV.

- Wu et al. (2021a) Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; and Zhang, L. 2021a. Cvt: Introducing convolutions to vision transformers. In ICCV.

- Wu et al. (2021b) Wu, X.; Hu, Z.; Sheng, L.; and Xu, D. 2021b. Styleformer: Real-time arbitrary style transfer via parametric style composition. In ICCV.

- Xie et al. (2021) Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J. M.; and Luo, P. 2021. SegFormer: Simple and efficient design for semantic segmentation with transformers. Advances in Neural Information Processing Systems, 34: 12077–12090.

- Xiong et al. (2020) Xiong, R.; Yang, Y.; He, D.; Zheng, K.; Zheng, S.; Xing, C.; Zhang, H.; Lan, Y.; Wang, L.; and Liu, T. 2020. On layer normalization in the transformer architecture. In ICML.

- Yao et al. (2019) Yao, Y.; Ren, J.; Xie, X.; Liu, W.; Liu, Y.-J.; and Wang, J. 2019. Attention-aware multi-stroke style transfer. In CVPR.

- Zhang et al. (2023) Zhang, Y.; Huang, N.; Tang, F.; Huang, H.; Ma, C.; Dong, W.; and Xu, C. 2023. Inversion-based style transfer with diffusion models. In CVPR, 10146–10156.

- Zhang et al. (2022) Zhang, Y.; Tang, F.; Dong, W.; Huang, H.; Ma, C.; Lee, T.-Y.; and Xu, C. 2022. Domain Enhanced Arbitrary Image Style Transfer via Contrastive Learning. In SIGGRAPH.

- Zhu et al. (2023) Zhu, M.; He, X.; Wang, N.; Wang, X.; and Gao, X. 2023. All-to-key Attention for Arbitrary Style Transfer. In ICCV.

This is the arxiv version for the paper.

| Supplementary Material (Appendix) |

Appendix A Net Architecture

Encoder

. The default dimension of the different stages in the encoder is 192, 384, and 768. The default strip width of the three stages is set to 2, 4, and 7. And the number of attention heads in the three stages is 3, 6, and 12.

Transfer Module

. The default dimension of the Transfer Module is 768 and the default number of attention heads is 8. And the default number of layers is three.

Decoder

. The decoder upscales the stylized features gradually, with the number of channels declining from 768 to 256 to 128 to 64, and finally downgrading the number of channels to 3. As shown in Fig. 11, given the stylized features in shape of , the patch reverse layer will reshape it to . Considering a , a , and a as a basic processing unit (denotes as ), the mirrored VGG upsamples the features in three stages. Stage one adopts a series of operations as while stages two and three take the same structure but subtract the last two . Each stage upsamples features but subtracts channels. Finally, the features in shape of will be processed by a and a to reduce the channels to 3. Then comes the output image.

Appendix B Differences from other methods

CSWin

. In CSwin (Dong et al. 2022), the strip-like window attention is used, while the fusion strategy is only concatenation which can not work in style transfer. Its shortcoming is described in the Section Experiments (First part) of Appendix. The necessity of the combination of multiple-shape window attentions and attn merge is also proved in the ablation study in main paper.

Longformer and iLAT

. First, the fusion strategies for attention in Longformer (Beltagy, Peters, and Cohan 2020) and iLAT (Cao et al. 2021) differ from our “Attn Merge”. Second, their attention computation manners are also varying from ours. In Longformer, the global information is computed only from “pre-selected input locations”, while in SpW attention, each pixel can adaptively capture the information from both global (strip window) and local (square window) locations. Moreover, To achieve autoregressive generation, the attention of iLAT is limited in a fixed region (for the paper example 3x3+t[s]) and computes the strip-like global information with a mask. In contrast, SpW does not constrain the fixed regions to calculate attention, and mask information is not required.

Appendix C Model Details in Pre-Analysis

The models tested in Pre-Analysis of main paper adopt the encoder-transfer-decoder architecture, where the encoder is based on a Swin, which has 3 stages, and each stage has 2 successive Swin transformer blocks (we call it layer hereafter). When a layer is replaced by global MSA (multi-head self-attention), the window won’t be shifted if it was. The transfer module and decoder are the same as those of S2WAT.

Appendix D Implementation Details

Dataset

We use MS-COCO (Lin et al. 2014) and WikiArt (Phillips and Mackintosh 2011) as the content dataset and the style dataset respectively. In the stage of training, the images are resized to 512 on the shorter side first and then randomly cropped to . In the stage of testing, images of any size are accepted.

Training Information

We use Pytorch (Paszke et al. 2017) framework to implement S2WAT and train it for 40000 iterations with a batch size of 4. An Adam optimizer (Kingma and Ba 2015) and the warmup learning rate adjustment strategy (Xiong et al. 2020) are applied with the initial learning rate of 1e-4. Our model is trained on a single Tesla V100 GPU for approximately 10 hours.

Appendix E Additional Experiments

Why do operations like sum and concatenation fail in the style transfer?

The drawback of simple fusion operations, e.g., sum and concatenation, is that they will treat attention computation results from different windows equally. This characteristic may change the local content structure critically, thus hindering the optimization of content loss and leading to a monotonous style (as shown in Fig. 9 of the main paper). E.g., assuming there are two features from different window attentions (one of them may focus on pixels of the sky and the other on the grassland), the outputs may not make sense when applying the sum or concatenation operation (a sky with grass?).

In contrast, our “attn merge” can adaptively decide features to merge from different attentions. Thus, the structure of inputs can be reserved, and the local/global style information is consistent with the style reference image.

Analysis for results of InST

InST is a method of image style transfer based on Stable Diffusion Models (SDMs). InST mainly consists of three parts: the Textual Inversion module to transform style image inputs to the corresponding latent vectors , the pre-trained SDM to denoise content noises to stylized images in condition of the latent vectors , and the Stochastic Inversion module to predict noises from content inputs (the content noises are the results of adding the predict noises to the content images).

During training, training data is a single style image (the content and style inputs are the same), which probably results in an overfitting problem. To avoid this problem, the dropout strategy is applied but it seems that the model still can not work well all the time. As presented in Fig. 12, InST performs normally when the style images are the same or similar to the content ones, while the results are unsatisfying if not. In the results of column 4, the style characteristics like colors fail to be transferred to the content image. Another possible reason may be the limited ability of the Textual Inversion module to encode specific style images, causing the loss of style information.

Comparison with Swin-based Encoder

In this section, we conduct experiments to compare results between the Swin-based model and S2WAT as part of the ablation study.

Qualitative Comparison. As depicted in Fig. 14 (column 3), the results from the Swin-based model suffer from grid-like textures severely which can not be tolerated by users. For the results from the proposed S2WAT, this problem is fixed perfectly with smooth strokes and natural textures.

Quantitative Comparison. We also conduct a quantitative comparison for the Swin-based model as once done on the previous state-of-the-art methods. The results are presented in Table 3. Surprisingly, S2WAT achieves the four best out of five metrics, which proves that S2WAT has delightful performance not only on the qualitative examination but also on the quantitative examination.

| Model | Content Loss | Style Loss | Identity Loss 1 | Identity Loss 2 | SSIM |

|---|---|---|---|---|---|

| Swin-based | 2.33 | 1.40 | 0.17 | 1.40 | 0.567 |

| S2WAT | 1.66 | 1.74 | 0.16 | 1.38 | 0.651 |

Comparison with Swin+Mix-FFN Encoder

Mix-FFN (Xie et al. 2021) is a technique to make attention positional-encoding-free, which may be helpful to erase the grid-like texture. However, it seems that Mix-FFN can not work ideally in image style transfer. The results of combining Swin transformers with Mix-FFN are presented in Fig. 13 (column 5). As shown in Fig. 13, blocky (or even strip-like) artifacts still appear on outputs, which proves that the local smooth of convolution can not erase the significant blocky artifacts.

Experiments with Attn Merge of only the Strip-based Attentions

The model solely reliant on strip-based attentions (referred to as “Strips Only”) is insufficient due to two observed phenomena in our experiments: 1) Negative impact on quantitative metrics, especially the style loss values (see Table 4); 2) Compromised generative quality, as demonstrated in Fig. 15.

High Resolution Generation

After completing training with a relatively larger strip width, S2WAT can afford higher resolution (up to on a 24G A5000 GPU) by reducing the strip width, with only a minor impact on performance. The first row of Table 4 and the last column of Fig. 15 displays the results obtained by reducing the strip width of the 3-layer S2WAT from 2, 4, 7 to 1, 1, 1 (denoting as “Strips Trans”).

| Method | C-Loss | S-Loss | Id1 | Id2 | SSIM |

|---|---|---|---|---|---|

| Strips Trans | 1.69 | 1.85 | 0.16 | 1.39 | 0.647 |

| Strips Only | 1.71 | 2.00 | 0.16 | 1.39 | 0.647 |

| Ours | 1.66 | 1.74 | 0.16 | 1.38 | 0.651 |

Appendix F More Example of Style Transfer Results

The pictures presented in Fig. 16 and Fig. 18 are all in a resolution of and the ones in Fig. 17 are in a resolution of .

Qualitative Comparison

Due to the length limitation of 7 pages in the main body of the article, some of the results are removed in qualitative comparison (Section Style Transfer Results). As shown in Fig. 16, we add an additional set of results in which the undesired patterns on the border from the results of ArtFlow (An et al. 2021) are more clear (rows 10 and 11 in Fig. 16). And more examples of the overflow issue from the results of MCC (Deng et al. 2021) could be found in row 5 and 10 of Fig. 16. To compare the style transfer ability between S2WAT and StyTr2, we also mark some areas of outputs with colored boxes which show that S2WAT is more capable of preserving content details.

Content Leak

As shown in Fig. 17, an additional group of results under the content leak problem is provided (Section Content Leak). Some content details though S2WAT will lose under a 20-round process, the results of S2WAT are obviously clear than that of CNN-based methods.

Appendix G Details of User Study

In conducting the user study, we leverage a widely-used online questionnaire platform employed by over 30 thousand corporations. A randomized selection of 31 content and style combinations, along with their corresponding stylized images, was presented to participants via a questionnaire. Before proceeding, participants were briefed on image style transfer and provided with evaluation criteria for an optimal outcome. These criteria encompassed two main aspects: a) a favorable outcome should diligently preserve content structure and details from the content image; b) a commendable outcome should effectively embody artistic attributes from the style image. Each participant was granted a 30-second timeframe to cast one or two votes, with all responses denoted in capital letters (omitting method names). After excluding outlier samples and instances where all votes were for a single method, we accumulated 3002 valid votes from 72 participants, including a diverse array of individuals ranging from high school students to professionals and experts spanning fields like computer science, art, and photography. The results of user study can be found in the Table 2 of the main paper.

We also provide an example questionnaire page we used in this part. As shown in Fig. 20, all the method names are covered with capitals as A, B, C, D, and E, where participants can not infer the corresponding relationship between results and methods.

Appendix H Limitation

Content Leak

. As discussed on the performance under the content leak in Section Content Leak of the main body of the article, S2WAT cannot achieves completely content-unbiased results. In some cases, the results may not be satisfying (as shown in Fig. 19). The reason why S2WAT is incompetent at producing completely content-unbiased results is that content details will be lost more or less from operations like downsampling. In the words of (An et al. 2021), reconstruction errors will be accumulated by the biased modules or operations. However, compared to CNN-based methods, results from S2WAT have a clear advantage.

Computational Complexity

. Another limitation of S2WAT is the computational complexity of SpW Attention, which is also the next goal in our schedules. As discussed in Section Strips Window Attention of the main body of the article, S2WAT cannot achieve linear computational complexity to the patch number. We try to replace the continuous sampling of window attention with discrete sampling to address this limitation in future work.