RotEqNet: Rotation-Equivariant Network for Fluid Systems with Symmetric High-Order Tensors

Abstract

In the recent application of scientific modeling, machine learning models are largely applied to facilitate computational simulations of fluid systems. Rotation symmetry is a general property for most symmetric fluid systems. However, in general, current machine learning methods have no theoretical guarantee of Rotation symmetry. By observing an important property of contraction and rotation operation on high order symmetric tensors, we prove that the rotation operation is preserved via tensor contraction. Based on this theoretical justification, in this paper, we introduce Rotation-Equivariant Network (RotEqNet) to guarantee the property of rotation-equivariance for high order tensors in fluid systems. We implement RotEqNet and evaluate our claims with four case studies on various fluid systems. The property of error reduction and rotation-equivariance is verified in these case studies. Results are showing the high superiority of RotEqNet compared to traditional machine learning methods.

keywords:

machine learning, tensor analysis, rotation-equivariant, fluid systems1 Introduction

With recent developments in data science and computational tools, machine learning algorithms have been increasingly applied in different engineering and science areas to model physical phenomena. The data from physical experiments and numerical simulations are a source of knowledge about the physical world, on which data-driven methods could be performed to extract new physical laws [1, 2, 3, 4, 5, 6]. For example, in turbulence RANS modeling in fluid mechanics, traditional modeling methods have failed in many flow scenarios. A unified RANS model that can successfully describe complex flows, including boundary layer, a strong rotation, separation still does not exist according to the author’s knowledge [7, 8]. On the other hand, advanced measurement and direct numerical simulations provide plenty of data that could be utilized to establish and validate new models. With the above argument, data-driven methods are particularly suitable for turbulence modeling and some other areas in physics and engineering. There have been many attempts to discover new turbulence models using machine learning methods. Milano and Koumoutsakos [9] reconstruct near-wall flow applying neural networks and compared their results with linear methods (POD). Zhang and Duraisamy [10] used Gaussian process regression combined with an artificial neural network to predict turbulent channel flow and bypass transition. Beck, Flad, and Munz [11] applied residual neural network for Large Eddy Simulation. Chen et. al. proposed an ODE network to generally learn differential equations [12].

The physical laws often appear in the form of tensorial equalities which inherently obey certain types of symmetry. For example, the constitution laws in fluid and solid mechanics should obey translation and rotation invariance [13]. The turbulence RANS model is local tensorial equality between mean velocity gradient and Reynolds stress. The turbulence RANS models should also be rotation invariant [14, 15]. However, machine learning methods for RANS modeling do not automatically guarantee rotation invariance, if we use Cartesian components of tensors as input and output of training data. This problem has been addressed by [16, 5]. In [16, 17], Reynolds stress is expressed as a general expansion of nonlinear integrity basis multiplied by scalar functions of invariants of strain rate and rotation rate tensors. Machine learning is performed to find these scalar functions of tensor invariants of strain rate and rotation rate tensors. Mathematically this expansion comes from an application of the Caylay-Hamilton theory. The special case used in [16, 17] is derived by S.B.Pope in [15]. Although such construction is general and possible for higher-order tensors and tensor tuples containing multiple tensors, the number of this basis and the derivation complexity will grow exponentially and become prohibitive for real applications [18, 19].

Why would this problem of rotation-equivariance be hard to solve? At first glance, if a system has the property of rotation-equivariance, one has more information for this system. Therefore, this added property of rotation-equivariance would lower the performance of a learner. More specifically, adding this new rule of rotation symmetry in a system will require the machine learning algorithm to extract more rules from existing data [20]. In this case, the property of rotation-equivariance could be considered as a continuous group action. There is limited research in the field of deep learning that considers the preservation of symmetries under continuous group actions for physical systems. To address our second point, continuous information is hard to be absorbed. If we consider a machine learning algorithm as an information compression process from input to output [21], a continuous transformation as rotation will be difficult for learning algorithms to absorb.

Given the universal approximation theorem by [22], it would seem that the application of neural networks, especially deep neural networks could solve any problem. As formulated by [23, 24, 25, 26], advanced machine learning methods, especially deep neural networks [27], seem to provide a new opportunity for physical equations approximation. However, in this case of rotation symmetry, if we use a multiple layer perceptron to learn the relation , then most likely does not preserve rotation-equivariance. Generally, the neural network function classes do not satisfy rotation equivariance.

There have been previous works considering group-equivariance with convolutional neural networks in image recognition. A general method has been proposed using group convolution [28, 29, 30]. Based on the idea of using convolution, several methods composed a steerable filter for rotation-equivariance in convolutional neural networks [31, 32, 33, 34]. However, these works cannot be applied in physical systems as well. One of the most important reasons is that the rotation operation on the image is different from rotation operation on physical systems. Consider a rotation operation on a specific image. We are thinking of a transformation from polar coordinates centering at a certain point [35]. This kind of transformation is different from rotation operation on tensors. Additionally, these methods have a strong restriction that this model must be built on convolutional neural networks. Yet, considering physical systems, convolutional neural networks might not be the best choice since they are designed for image processing.

The problem of rotation-equivariance is also quite impossible to be simply solved by data augmentation and preprocessing. Mentioned by previous works [16], a typical solution is to apply the technique of data augmentation. However, the method of data augmentation fails to have a theoretical guarantee of obtaining the property of rotation-equivariance with finite sample set. Data augmentation method has a theoretical foundation that at infinite sample limit it will asymptotically reach rotation equivariance. However, such a dataset is not only difficult to obtain but also requires much higher computation power while training the model. In the case of using naive preprocessing methods, the problem is that there are limited theoretical tools to deal with high-order tensors, and only limited methods to use for low order tensors. It is hard to apply specific techniques, such as diagonalization, in the case of high-order tensors. Since naive data preprocessing methods are impossible to apply, a more complex method with a theoretical guarantee should be proposed in order to solve this problem.

In this paper, we establish Rotation-Equivariant Network (RotEqNet), a new data-driven framework, which guarantees rotation-equivariance at a theoretical level. Different from previous methods, we first find a method to preserve rotation operation via tensor contraction. In our proposed position standardization algorithm, it could properly link a high-order tensor to a low order tensor with the same rotation operation. By applying mathematical tools for low order matrices (diagonalization and QR factorization), a desired standard position could be derived by the rotation matrix from the previous step. Standard position algorithm is proven to be rotation-invariant in Theorem 3.4, i.e. two tensors differ by a rotation would have the same standard position. Therefore, the learning rules based on standard position are forming a quotient space of the original rules in random rotated plural position [31, 36]. In this way, RotEqNet lowers the training difficulty of a randomly positioned dataset. Further, RotEqNet is also proven to be rotation-equivariant, as we have shown in Theorem 3.14. These advantages of RotEqNet would result in an observable error reduction compared to previously introduced data-driven methods. We applied RotEqNet into four different case studies ranging from second-order, third-order, and fourth-order. These case studies are designed based on Newtonian fluids, Large-eddy simulations, and Electrostriction. Improved performances could be observed for using RotEqNet. The error is reduced for 99.6%, 15.62%, and 54.63% for second, third, forth-order case studies, respectively. Our contribution in this paper is three-fold:

-

1.

We showed an important property of contraction operation on tensors. Contraction operation will preserve rotation operation on tensor with arbitrary order. This is stated in Lemma 2.11.

-

2.

We propose a properly designed RotEqNet with a position standardization algorithm to guarantee the property of rotation-equivariant. We proved the property of rotation-invariant of position standardization algorithm in Theorem 3.4 and the property of rotation-equivariant of RotEqNet rigorously in Theorem 3.14.

-

3.

We implement our proposed algorithm and the architecture of RotEqNet. We further conduct case studies to show its credibility in design and superiority compared to baseline methods.

To provide a general architecture of our paper, in Section 2 we introduce basic definitions of rotation for arbitrary order tensor (tuples) and related concepts. In Section 2.3 we formulate rotation invariance (equivariance) on supervised learning methods. The RotEqNet and main algorithm is presented in Section 3, and numerical results are shown in Section 4.

2 Preliminaries and Problem Description

2.1 Tensor and its operations

In this section, we first introduce an abstract way of defining tensor. One reason for us to introduce the more abstract way to think about tensors is that it provides a convenient formalism for the operations we will do on the tonsorial data discussed in the previous section. The operations are

-

1.

Linear transformation

-

2.

Contraction

The formalism helps us to prove that these two operations commute which lays theoretical ground for the computation of a representative of rotationally-relatated tensors. We will call this representative standard position

2.1.1 Abstract definition of tensors

Following [37], fix a vector space of dimension over . A tensor product is a vector space with the property that -bilinear maps are in natural one-to-one correspondence with -linear maps .

The tensor product can be constructed as the quotient vector space , where is generated by vectors of the following types

| (2.1) |

where and are vectors in and is a scalar in . This means any element in can be written as a linear combination of vectors of the above form. is not necessarily a vector space of finite dimension. But the quotient space is. Let be the natural projection map, then we use to denote the image of under .

Let be a basis of , then for and form a basis of . This means any vector can be written as

| (2.2) |

for some .

Here are some relations of tensors which come directly as a consequence of the relations generating :

| (2.3) |

| (2.4) |

The representation of a tensor in is similar to the representation of a linear map , i.e. a matrix. In fact, there is a natural way to think of a tensor as a linear map:

For each element in the basis of , we can think of it as a linear map by defining , where is the natural inner product on . Extend the definition linearly to every element in , we obtain a way to identify as the space of linear map . In fact, the tensor corresponds to the linear map represented by the matrix .

We have defined the tensor product over . The definition/construction of order tensor follows the same course. We will denote order tensor by .

The basis of is given by , where and . With respect to this basis, any order tensor can be written as . Analogous to the order 2 case, we can think of an order tensor as a -dimensional matrix, the typical way a tensor in physical experiments are represented.

We will use to denote a tensor of order , i.e. a vector in . is called the rank of the tensor.

2.1.2 Rotation on tensors: a linear transformation

A linear transformation on higher-order tensor is a generalization of a linear transformation on the first-order tensor, i.e. a vector.

Let be a linear transformation. Use the basis of , we can represent this expression with the equation

| (2.5) |

Let denote the matrix representation of with respect to the basis . Then

| (2.6) |

i.e. the transpose of the matrix

The map naturally induces a map on . On the basis element , the action of is defined as

| (2.7) |

For any tensor , we will use to denote the extension of on

There is a convenient way to represent a linear transformation of 2-tensor as matrix multiplication.

For a 2-tensor , use be the matrix whose term is .

Lemma 2.1.

Rotation operation by matrix on second-order tensor (matrix) is a change of basis operation.

| (2.8) |

where here means the usual matrix multiplication.

Remark 2.2.

Rotation operation by matrix on first-order tensor (vectors) could be viewed as

| (2.9) |

Lemma 2.8 and remark 2.9 will be used in the proof of Theorem 3.4. As we have shown in this subsection, one could use a matrix form of rotation operation with certain rules of matrix multiplication to perform a rotation on the tensor. In the following proofs of this paper, we applied this idea to perform rotation operation on tensors via matrix multiplication.

2.1.3 Contraction on tensors: reduction of order

Let be the standard inner product on . Using this inner product, we can define the contraction of a tensor. It ”merges” vectors on the specified axes using the inner product and reduces the rank of the tensor by 2. Formally, let denote the contraction along axis- and axis-. Here, the axis means the ordinal of in . For example, axis- refers to the first copy of in .

On the element , acts on it by pairing and via the inner product , i.e.

| (2.10) |

where means is not present.

We can then define on by extending linearly. When , contraction is nothing other than taking the trace of the corresponding matrix.

Lemma 2.3.

Let be a rotation. Let , then

| (2.11) |

Lemma 2.11 shows an interesting connection between rotation operation and contraction. To understand this lemma, it represents that the contraction of a tensor is compatible with a linear transformation if this linear transformation is a rotation. This is an important lemma which is the foundation of the entire analysis in this paper. We would further utilize this lemma for extracting its rotation operation from higher (arbitrary) orders. We show the proof in 5.

2.2 Supervised learning setup

In our problem, given data set . The data set contains input-output pairs . The input here is a tensor tuple:

| (2.12) |

is the length of . Normally, we only have one output.

Generally speaking, following the definition of [38, 39], parametric supervised learning can be viewed as a type of a model composed from two parts. The first part is a predictor. Given parameter , we have:

| (2.13) |

, where is the prediction output of learning model , is the parameter of . As stated, it predicts value based on input .

The second part is an optimizer, which updates the parameter based on a loss function. For a regression model, a typical loss function would be defined as:

| (2.14) |

where represents 2-norm.

2.3 Obtaining rotation-equivariance properties in systems using supervised learning

Group equivariance is an important property for most physical systems. Typical examples of group equivariance could be rotation group equivariance, scaling group equivariance, and translation group equivariance. Mathematically, group equivariance is a property of a mapping to commute from to under rotation group actions. Specifically, let be a rotation action. is rotation-equivariant if

| (2.16) |

As a special case of rotation-equivariant, a function is rotation-invariant if:

| (2.17) |

Since supervised learning models could be considered as functions, name a machine learning model as . For a rotation operation , we hope to obtain the property that:

| (2.18) |

For analysis below in Sec. 3.3, we prove the rotation-equivariance property following the definition stated here in Equ. 2.18. In other words, if a system would satisfy the property in Equ. 2.18, then this system is rotation-equivariant.

2.4 Modeling symmetric fluid systems via supervised learning

The machine learning approach to the fluid dynamics modeling involves training a supervised learning model using as features and as label.

In our case, the underlying space of the fluid dynamic system is complete with respect to rotation. This means for all rotation , for all . The objects we want to model via machine learning are rotation-equivariant tensorial fields on .

Let be a tensorial field on , for any point , we use to denote the tensor at (for example, pressure at a particular point in a fluid dynamics system). is said to be rotation-equivariant if for all point and all rotation

Suppose one has tensorial fields on such that and are related by some unknown physical law such that

Supervised machine learning methods can be used here to learn a function that approximates such that generalizes well on new data.

Suppose those tensorial fields are rotation-equivariant, then naturally the model as well its proxy

3 Rotation Equivariant Network

In this section, we would like to propose Rotation Equivariant Network (RotEqNet) to solve rotation problems for high order tensors in fluid systems. RotEqNet is based on the position standardization algorithm, as we would further discuss in Section 3.2. We first provide a general description of the whole architecture in 3.1.

3.1 Architecture

As shown in Figure1, RotEqNet generally goes through three important steps: position standardization, prediction of kernel predictor, and position resetting. To be specific, the position standardization is an algorithm to transfer incoming tensor to its standard position. In Figure1, the ’even order standardization’ and ’odd order standardization’ sections denote this algorithm in position standardization. Then, is considered as a standard position of input tensor , and is an extracted rotation operation to transfer between standard position and original position. The output of kernel predictor is only dealing with standard positions. This will result the output in its standard position as well. Finally, apply to output will be our final prediction. A general mathematical description of this process could be described as:

| (3.1) |

How would this process help to solve rotation problems for high order tensors?

An important reason is related to a reduced function space for learning. When a learning model is only training with the standard position, it would no longer still have to deal with the entire group action causing a group-equivariant, but only need to focus on the pattern by the related physical equation. Name the rotation group as G, and consider a full function space . As mentioned in [31], instead of performing regression on , RotEqNet is essentially exploring a much smaller space . The reduction of input-output dimensionality makes the training easier. With the same number of samples, the pattern for learning requires a far smaller space to explore. The second reason is RotEqNet could provide a theoretical guarantee of the property of rotation-equivariant. Utilizing rotation symmetry as a strong prior for most physical systems, RotEqNet have a better generalized result learning from limited amount of data.

3.2 Position standardization algorithm for High Order Tensors

Let denote our data set. The first stage of RotEqNet is to find a good representative of all tensors that are related to each other by rotation. We will call this representative the sample in ”standard position,” and we will denote it by . We will use to denote the position standardization algorithm and to mean reducing to its standard position.

has the following property that and all rotation operation ,

| (3.2) |

This means, produces exactly the same output no matter how is rotated, i.e. it is rotation-invariant.

Intuitively, for a tensor , we are selecting a representative on the orbit , (where ), as the rotation invariant of a [42]. In our algorithm, we initially perform a tensor contraction to higher-order tensors, reducing the dimension to obtain a lower order tensor. Then using diagonalization for even cases and QR factorization for odd cases, the algorithm could obtain a rotation operation acting on . Finally, it could get a tensor in standard position by rotating the original tensor with the inverse of the obtained rotation matrix.

This operation is compatible with the theoretical result shown in Lemma 2.11.

3.2.1 Tensor of even order

Given a symmetric tensor of even order ( is even). Let denote a sequence of contraction along the first two axes until we reach a second-order tensor. Applying to we get:

| (3.3) |

| (3.4) |

Then we find the orthonormal eigen-vectors of and use them to form the orthonormal matrix that diagonalize

| (3.5) |

Since is an orthonormal matrix, we have

| (3.6) |

We will call the standard position of . We write to shorten the notation

Since contraction and rotation are compatible by Theorem 2.11. We can apply to before we apply contraction, and we will have

| (3.7) |

For the even tensor , we define

| (3.8) |

3.2.2 Tensor of odd order

Why would it be different for even order and odd order? Since odd order cannot directly reduce its dimension to 2 by contraction. Due to the fact that each contraction will reduce the dimensionality by 2, the reduced dimension will also be an odd number, which cannot be 2. Involving in this problem, this would further be impossible to extract the rotation matrix, which is impossible to rotate the tensor into a standard position. The following described is the method that we use to solve the problem.

Given a symmetric tensor of an odd order tensor ( is odd). Let denote a sequence of contraction along the first two axes until we reach a third-order tensor. Applying to we get:

| (3.9) |

After we obtain , we could obtain three different order one tensors by contracting it. Name the contracted results, which are first-order tensors i.e. vectors, . We could get an order two tensor by concatenating them.

| (3.10) |

Then, we could perform the a similar process as before. We perform QR factorization to obtain rotation matrix .

| (3.11) |

For odd tensor, we define:

| (3.12) |

The pseudocode of our proposed algorithm is documented in Algorithm 1. We evaluate our method of position standardization algorithm in Section 4.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/4a110dd2-fc33-4f2b-b921-4745fa290b5e/x4.png)

3.3 Theoretical Analysis of Rotation-equivariant property

In our analysis, we aim to show the rotation-equivariant property of RotEqNet. As an important first step, we need to analyze the property of rotation invariant (standard position) derived by the position standardization algoriTheorem We hope to show Equ. 3.2 is true. Once Equ. 3.2 is proved true, RotEqNet will automatically be rotation-equivariant based on its architecture.

To sketch an outline about theorems below, Lemma 2.11 would serve as an important fact for preserving rotation information after contraction. Our algorithm has been analyzed by Theorem 3.4.

3.3.1 Main theorems and proofs

Theorem 3.4.

is rotation invariant, i.e. for all rotation and symmetric tensor

| (3.13) |

We provide a proof in Appendix 5.4. We call the standard position of

Using Theorem 3.4 we can automatically have the result on tensor with arbitrary order that the standard position, derived by position standardization algorithm, is rotation invariant.

Theorem 3.5.

RotEqNet, , is rotation-equivariant, i.e. for all rotation and tensor

| (3.14) |

Proof.

Name RotEqNet as , kernel classifier as . Consider a input pair . Suppose the result of standardize position algorithm would have , where denote a rotation operation.

First, consider the process of RotEqNet is defined as:

| (3.15) |

Consider another rotation operation in the matrix form acting on , using Theorem. 3.4 we know that:

| (3.16) |

where is an identity matrix.

Then, consider the process of RotEqNet is defined as:

| (3.17) |

We know that from Equ. 3.16. Therefore, we have . Substitute back into previous equation,

| (3.18) |

To simplify, for rotation operation on input , we have

| (3.19) |

This is showing that is rotation-equivariant by definition. Therefore, RotEqNet is rotation-equivariant. ∎

it has shown that Algorithm 1 is able to preserve rotation information for low dimension, and further extract using diagonalization for matrices. This part is a theoretical guarantee of our position standardization algoriTheorem

4 Case studies

In this section, a series of cases are provided to show the performance of RotEqNet. In the following subsections, cases are included from second-order, third-order, and fourth-order. We specifically investigate second-order cases with detailed studies on linear, and nonlinear test equations since, in current applications, second-order physical systems are widely used. Generally, we reported two properties of RotEqNet in every case study. The first property is a loss reduction property. We apply RotEqNet in each test physical equation and compared it to the baseline models (Neural Networks and Random Forests). Another one is the rotation-invariant property. We examine this property by letting RotEqNet and baseline models to perform prediction on rotations of randomly selected data. We report detailed information for these case studies in every subsection below. The interpretation of experimental results is also included in each subsection.

4.1 Case study from Newtonian fluid: a second-order linear case

4.1.1 Problem statement

Newtonian fluid is a type of fluid such that its viscous stress changes based on its flow. In this experiment, we aim to use simulation data to demonstrate this rule of Newtonian fluid. This would serve as a case study with second-order linear equations. Let be stress tensor, pressure and strain rate. The rule of Newtonian fluid is an second-order physical equation which satisfies the following condition [43]:

| (4.1) |

Another definition of the equation for Newtonian fluid would use the velocity vector field, defined as . could be expressed as a matrix. Using this definition, the equation of Newtonian fluid could also be written as:

| (4.2) |

This could also be considered as the definition of strain rate Based on this definition, we could observe that , and it is symmetric since . Since is symmetric and is an identity matrix, is also symmetric. Therefore, defining an arbitrary rotation matrix , this system is equipped with the property of rotation-equivariant that .

To quantify the stress for Newtonian fluid simulation, it would be useful to be able to predict the Newtonian fluid stress, given the simulation of pressure and velocity vector field. Based on this scenario, in this subsection, we provide a case study for the machine learning model on inputting the shear of Newtonian fluid and prediction of the stress.

4.1.2 Data generation and model description

Based on Eqn.4.2, we first generate random data to obtain and . The generation of random numbers in follows a normal distribution from range . Derived from generated and , we could obtain from Eqn.4.2. Denote the dataset as . To form a proper dataset with elements for a machine learning model for Newtonian fluid, the input is set up to be a vector where . Specifically, is composed by and flattened in Eqn.4.1. The output is a vector which is the flattened result of matrix derived by and . The dataset would set up in the description above. To compare the difference of our method to the baseline method, we trained two models with the same hyper-parameter using different amounts of training data, ranging from . of generated data is used for training and of data is used for testing. A rotation set with 10,000 random rotation matrices is also generated for evaluating the property of rotation-equivariant, denoted by .

The machine learning model we apply here is neural networks and random forests because of the ability of these two models to approximate arbitrary functions. For Neural Networks, in our implementation, the logistic activation function is used as an activation function for every neuron. The number of neurons for two layers is 512 and 4, respectively. Adam optimizer [44] is applied as the algorithm for optimization, and the learning is set up to be . We also set the batch size to be 64. For random forests, 100 estimators are set up with mean squared error as the criterion. The maximum depth of random forests is 3 to lower the chance of overfitting. We used Sklearn for implementation [45]. The computation environment of this experiment is CPU.

4.1.3 Results

There are two properties to evaluate, including error reduction and rotation-equivariant of RotEqNet. The effect of error reduction is evaluated for the first. A kernel predictor is trained by standard positions derived from training data. Then, the prediction algorithm is applied to both training and testing set to obtain the training and testing performances. The validation error is defined as the Mean Squared Loss using the formulation that:

| (4.3) |

In Eqn. 4.3, is the number of data in dataset , is the trained machine learning model, is the derived parameter from model , and describes input-label pair of the dataset. This evaluation represents the expected error of model with dataset .

| Kernel predictor | Training Error Reduction | Testing Error Reduction |

|---|---|---|

| Neural Networks | 99.56% | 99.60% |

| Random Forests | 99.56% | 99.72% |

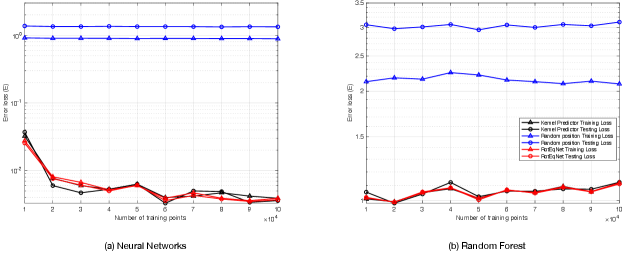

Fig. 4(a) shows the error reduction property of RotEqNet. This plot consists of three groups of experimental groups. The first experiment group focuses on the accuracy of the baseline model, a single feed-forward Neural Network, on raw data with random rotated positions. As shown in Fig. 4(a) with blue curves, triangle curve represents training error and circle curve represents testing error. The second experiment group is RotEqNet with Neural Network as the kernel predictor. As shown in Fig. 4(a) in red curves, triangle curve represents training error, and the circle curve represents testing error. For 100,000 training samples, the testing error of RotEqNet is 0.0037, and the testing error of the baseline method is 1.333. We could observe a huge error reduction, 99.56% in training, and 99.60% in testing, for RotEqNet compared to the error of using the baseline model. For the last experiment group, as performances marked as black curves in the figure, it reports the performance of kernel predictor with standard position only. This experiment would explain why RotEqNet would improve performance since training with standard positions would be an easier task compared to raw data.

Further, Fig. 4(b) shows the error reduction effect of RotEqNet using Random Forest as a kernel predictor. Similarly, as shown in Fig. 4(b) with blue curves, it represents the performance of the baseline method (Random Forests). The second experiment group is RotEqNet with Random Forests as the kernel predictor. As shown in Fig. 4(b) in red curves, triangle curve represents training error and the circle curve represents testing error. We could observe a huge error reduction, 99.56% in training and 99.72% in testing, for RotEqNet compared to the error of using only the Random Forest predictor. For the last experiment group, as performances marked as black curves in the figure, trains the kernel predictor with standard position only. As stated before, this could also serve as a reason for the error reduction effect for RotEqNet on random forests.

According to the reported results, RotEqNet has a good generalization result without overfitting. For cases training with raw data for baseline models, the testing error is typically higher compared to training error. For example, the difference is training and testing errors are 0.44 for Neural Networks, for Random forests when . This represents that for both Neural Networks and Random Forests would be easy to overfit this task on Newtonian Fluid. By contrast, RotEqNet would help to reduce this difference in training and testing error. As we could observe from the training and testing error of RotEqNet, the errors are much lower. When , there are only for Neural Networks and for Random Forests. In the case of linear second-order equations, the application of RotEqNet would result in better-generalized results in learning.

Another important property to evaluate for RotEqNet is rotation-equivariant. The experiment is designed on the definition of rotation-equivariant mentioned in Eqn. 2.18. First, we pick a randomly generated data . Then we apply the rotation set with 10,000 random rotation matrices to . To fully investigate the property of rotation-equivariant, we apply an error evaluation method here to evaluate the error compared to real data, which is defined as:

| (4.4) |

| Model | Baseline (NN) | RotEqNet (NN) | Baseline (RF) | RotEqNet (RF) |

| 0.6362 | 0.0013 | 3.1334 | 1.5513 |

This error evaluation method () focuses more on the model’s error on real data for all rotations. As shown in Tab. 2, for both baseline methods, using neural networks and random forests, there are large errors for and . The baseline methods have no theoretical guarantee that it has the property of rotation-equivariant. However, there is an error reduction for both machine learning models when applying with RotEqNet’s architecture. Especially for RotEqNet with Neural Networks as kernel predictor, we could observe that with of error reduction. This could guarantee the rotation-equivariant property of RotEqNet.

4.2 Case study from large eddy simulation: a second-order nonlinear case

4.2.1 Problem statement

In this case, we consider the subgrid model of large eddy simulation (LES) of turbulent flow by Kosovic [46]. In this case study, as formulated previously in [47, 48], we hope to obtain a learned model by simulation data from LES. This would serve as a case study with second-order non-linear equations. LES is defined as:

| (4.5) |

Here is subgrid stress, which is a symmetric traceless 2nd order tensor. and are symmetric and anti-symmetric parts of velocity gradient tensor , where . Further, , , , are all constants. The configuration of constants above are reported in the next subsection.

In order to qualify the subgrid stress for LES, this study aims to predict the subgrid stress, given the simulation of velocity gradient tensor. This case study for the machine learning model on inputting the velocity gradient tensor.

4.2.2 Data generation and model description

Based on Eqn. 4.5, we first generate random data to obtain a simulated velocity gradient tensor . The generation of random numbers follows a normal distribution from range , and is obtained from a random matrix by subtracting from diagonal position. This would keep . and could be obtained from by getting its symmetric and anti-symmetric parts. For the setup of constants, . is computed from the above setting with Eqn. 4.2. Denote the dataset as, . To form a proper dataset with elements for a machine learning model for Newtonian fluid, the input is set up to be a vector where . Specifically, is composed by flattened . The output is a vector, which is the flattened result of matrix derived by and other constants. To compare the difference of our method to the baseline method, we trained two models with the same hyper-parameter using different amounts of training data, ranging from . of generated data is used for training, and of data is used for testing. A rotation set with 10,000 random rotation matrices is also generated for evaluating the property of rotation-equivariant, denoted by . The model setup is the same compared to Sec. 4.1.2.

4.2.3 Results

The effect of error reduction is evaluated for the first. The validation error is defined as the Mean Squared Loss using the formulation in Eqn. 4.3. This evaluation represents the expected error of model with dataset .

Fig. 5(a) shows the error reduction effect of RotEqNet with Neural Network as a kernel predictor for second-order nonlinear cases with three groups of experimental groups. The first experiment group focuses on the accuracy of the baseline method on raw data with random rotated positions. As shown in Fig. 5 with blue curves, triangle curve represents training error and the circle curve represents testing error. The second experiment group is RotEqNet, with Neural Network as a kernel predictor. As shown in Fig. 5(a) in red curves. For 100,000 training samples, the testing error of RotEqNet is 0.1391, and the testing error of the baseline method is 0.2946, with 52.77% of error reduction. The performances of the last experiment group are marked as black curves in the figure, with only standard position trained for kernel predictor.

Based on the experimental results, firstly, RotEqNet could reach a better learning performance compared to simply applying Neural Networks (baseline method). Training with standard positions could lower the training difficulty, and therefore RotEqNet could obtain better performance. Further, Fig. 5(b) shows the error reduction effect of RotEqNet using Random Forest as a kernel predictor. The general performance of using Random Forests as a kernel predictor is relatively worse compared to using Neural Networks as a kernel predictor. In Fig. 5(b), blue curves represent the performance of training with raw data by Random Forests (baseline method); red curves represent the performance of RotEqNet; black curves represent the performance of kernel predictor trained with standard positions. We could observe an error reduction for 36.63% in training and 57.58% in testing for RotEqNet with Random Forests.

Moreover, RotEqNet has a good generalization result without overfitting. Applying raw data in learning directly on baseline models, the testing error is much higher compared to the training error. For example, the difference is training and testing errors are for Neural Networks, for Random forests when . It is also observable in Fig. 5(a) that the training error of the baseline model with raw data is the lowest, while the testing error of the baseline model is the highest. In this case study, Neural Networks are worse for the sake of overfitting compared to Random Forests. By contrast, introducing the architecture RotEqNet would help to reduce this difference in training and testing error. As we could observe from the training and testing error of RotEqNet, the errors are much lower. When , there are only for Neural Networks and for Random Forests. In this case study of LES, the application of RotEqNet would result in better-generalized results in learning.

| Kernel predictor | Training Error Reduction | Testing Error Reduction |

|---|---|---|

| Neural Networks | -98.44% | 52.77% |

| Random Forests | 36.63% | 57.58% |

To evaluate the rotation-equivariant property of RotEqNet for second-order nonlinear cases, our experimental process is close to the one stated in Sec. 4.1.3. First, we pick a randomly generated data . Then we apply the rotation set with 10,000 random rotation matrices to . This error evaluation method (), as defined in Eqn. 4.4, focuses more on the model’s error on real data for all rotations. As shown in Tab. 4, for both baseline methods, using neural networks and random forests, there are large error for . The baseline methods have no theoretical way to guarantee that it has the property of rotation-equivariant. However, there is an error reduction for both machine learning models when applying with RotEqNet’s architecture. Especially for RotEqNet with Neural Networks as kernel predictor, it is observable that with error reduction. This could guarantee the rotation-equivariant property of RotEqNet for nonlinear second-order cases.

| Model | Baseline (NN) | RotEqNet (NN) | Baseline (RF) | RotEqNet (RF) |

| 0.0945 | 0.0025 | 0.1912 | 0.0084 |

4.3 Case study from testing Newtonian Fluid equation: a third-order case

4.3.1 Problem statement

In this section, we study the performance of RotEqNet for tensor with odd order. In this case, we specifically set a third-order test equation. We used a test equation here revised from Newtonian fluid equation from Eqn. 4.2. We name this testing equation as ’testing Newtonian fluid equation’ for simplicity. The testing equation revised from Newtonian fluid equation can be described as:

| (4.6) |

where is testing stress, is testing pressure, and is testing velocity field. is the identity third-order tensor.

Based on this testing equation, we could observe that . Since is symmetric, and is symmetric, we have is also symmetric. Therefore, defining an arbitrary rotation matrix , this system is equipped with the property of rotation-equivariant that .

In order to qualify stress for testing the Newtonian fluid equation, this study aims to predict the stress, given the simulation of pressure and velocity gradient tensor. This case study for the machine learning model on inputting the pressure and velocity gradient tensor.

4.3.2 Data generation and model description

Based on Eqn. 4.6, we first generate random data to obtain and . The generation of random numbers in follows a normal distribution from range . could be obtained using the Eqn.4.6, derived from generated and . Denote the dataset as , . To form a proper dataset with elements for a machine learning model for Newtonian fluid, the input is set up to be a vector where . Specifically, is composed by and flattened in Eqn.4.6. The output is a vector which is the flattened result of matrix . The dataset would set up in the description above. To compare the difference of our method to the baseline method, we trained two models with the same hyper-parameter using different amounts of training data, ranging from . of generated data is used for training and of data is used for testing. A rotation set with 10,000 random rotation matrices is also generated for evaluating the property of rotation-equivariant, denoted by . The model setup is the same as Sec. 4.1.2.

4.3.3 Results

Fig. 6(a) shows the error reduction effect of RotEqNet with Neural Network as a kernel predictor for third-order cases with three groups of experimental groups. The first experiment group focuses on the accuracy of the baseline model (Neural Network) on raw data with random rotated positions as shown in Fig. 6(a) with blue curves. The second experiment group is RotEqNet, with Neural Network as kernel predictor as shown in Fig. 6(a) in red curves. For 100,000 training samples, the testing error of RotEqNet is 1.8759 and the testing error of baseline method is 2.2232 with 15.62% of error reduction. The performances of the last experiment group are marked as black curves in the figure, with only standard position trained for kernel predictor.

Based on the experimental results, for the third-order testing equation, RotEqNet could reach a better learning performance compared to the baseline method. Training with RotEqNet could lower the training difficulty, and therefore RotEqNet could obtain better performance. Moreover, RotEqNet has good generalization capability without overfitting. As shown in the blue curves of Fig. 6, if we apply raw data in learning directly on baseline models, the testing error is much higher compared to the training error. In this case study, introducing the architecture RotEqNet would help to reduce this difference in training and testing error. As we could observe from the training and testing error of RotEqNet, the errors are much lower. When , there are only for Neural Networks and for Random Forests. In this case study of testing the Newtonian fluid equation, the application of RotEqNet would result in better-generalized results in learning.

Further, Fig. 6(b) shows the error reduction effect of RotEqNet using Random Forest as a kernel predictor. The general performance of using Random Forests as a kernel predictor is relatively worse compared to using Neural Networks as a kernel predictor. In Fig. 5(b), blue curves represent the performance of training with raw data by Random Forests (baseline method); red curves represent the performance of RotEqNet; black curves represent the performance of Random Forest trained with standard positions. For the first point, we could observe an error reduction for 0.90% in training and 6.84% in testing for RotEqNet with Random Forests. As another point, RotEqNet is also obtaining a better-learned model for the model’s capability in generalization. The testing error of the baseline method is observably higher than testing error, while the training and testing performance of RotEqNet is approximately the same. As suggested in Fig. 6(a), in second-order nonlinear cases, RotEqNet could reach a generalized learning result with remarkably lower error compared to baseline methods.

| Kernel predictor | Training Error Reduction | Testing Error Reduction |

|---|---|---|

| Neural Networks | 9.42% | 15.62% |

| Random Forests | 0.90% | 6.84% |

| Model | Baseline (NN) | RotEqNet (NN) | Baseline (RF) | RotEqNet (RF) |

| 2.8454 | 2.6992 | 3.0788 | 3.1068 |

To evaluate the rotation-equivariant property of RotEqNet for this third-order case, we designed an experimental process that is close to the one stated in Sec. 4.1.3. As shown in Tab. 6, for baseline method using neural networks, the error is relatively large for compared to RotEqNet. In our experiment, we reached an error reduction of . We would further discuss this result in Section 4.4.3.

4.4 Case study from Electrostriction: a fourth-order case

4.4.1 Problem statement

This case study focuses on a linear relationship of fourth-order tensor. Nye [49] has introduced a fourth-order tensor in modeling elastic compliances and stiffnesses, which has been investigated using machine learning methods [50, 51]. Generally, in the study of the properties of a crystalline and anisotropic elastic medium, a fourth-order tensor coefficient will typically be applied to model the relationship between two symmetric second-order tensors [52]. In this case, we study Electrostriction, a property causing all electrical non-conductors to change their shape under the application of an electric field. The relationship is described as:

| (4.7) |

Here is a symmetric traceless second-order strain tensor. where and are first-order electric polarization density. Note here is symmetric. is the electrostriction coefficient.

Based on the formulation above, this system is symmetric. Since is symmetric, . This could guarantee that is also symmetric. Due to the face that the system is symmetric, applying a random rotation matrix , .

In order to qualify strain for study on Electrostriction, we aim to predict the strain, given the simulation of electrostriction coefficient and electric polarization density.

4.4.2 Data generation and model description

Based on Eqn. 4.7, we first generate random data to obtain simulated electrostriction coefficient tensor and electric polarization density tensor . The generation of random numbers follows a normal distribution. is computed from above setting using and . Denote the dataset as, . To form a proper dataset with elements for a machine learning model for the study on Electrostriction, the input is set up to be a vector where . Specifically, is composed by flattened and . The output is a vector, which is the flattened result of second-order tensor . To compare the difference of our method to the baseline method, we trained two models with the same hyper-parameter using different amounts of training data, ranging from . of generated data is used for training, and of data is used for testing. A rotation set with 10,000 random rotation matrices is also generated for evaluating the property of rotation-equivariant, denoted by . The model setup is the same compared to Sec. 4.1.2. We use Numpy to generate this simulated dataset by generating a random symmetric fourth-order tensor , and second-order tensor . is computed from generated and by Eqn. 4.7.

4.4.3 Results

The effect of error reduction is evaluated for the first. The validation error is defined as the Mean Squared Loss using the formulation in Eqn. 4.3. This evaluation represents the expected error of model with dataset . Fig. 7 shows the performance of Neural Networks and Random Forests as kernel predictor separately. It is observable that in high-order cases, Neural Networks have huge superiority to Random Forests. Details will be demonstrated in the following paragraphs.

We are starting with Neural Networks, Fig. 7(a) shows the error reduction effect of RotEqNet with Neural Network as a kernel predictor. As shown in blue curves, the first experiment group focuses on the accuracy of the baseline model on raw data with random rotated positions. The second experiment group is RotEqNet marked with red curves. As shown in black curves, it shows the performance of the kernel predictor trained by standard position. For 10,000 training samples, the testing error of RotEqNet is 4.0106 and the testing error of baseline model is 8.6458 with 53.61% of error reduction. The testing performance of the kernel predictor is only evaluated on the testing set with only standard positions. It will be helpful to explain the reason for the improved performance of RotEqNet.

To interpret the experimental results, firstly, RotEqNet could reach a better learning performance compared to simply applying Neural Networks (baseline method). A dataset with only standard positions has lower training difficulty compared to random positions. This claim is supported by black curves in Fig. 7(a), the performance of the kernel predictor is much better than the baseline model. RotEqNet could obtain better performance for utilizing rotation symmetry as a prior, and training kernel predictor with only standard positions. Moreover, RotEqNet has a good generalization result without clear overfitting. The training error and testing error of RotEqNet is considerably close to each other, and sometimes, the testing error of RotEqNet is even slightly better than its training error. By contrast, applying raw data in learning directly on would result in an overfitted model. The testing error is much higher compared to the training error. To demonstrate the improved learning result in generalization, for example, when , the difference between training and testing errors for RotEqNet is only while the difference of the baseline method is . As a quick conclusion, for Neural Networks as a kernel predictor, the application of RotEqNet would be better compared to the baseline method.

| Kernel predictor | Training Error Reduction | Testing Error Reduction |

|---|---|---|

| Neural Networks | 18.93% | 54.63% |

| Random Forests | 0.58% | 2.96% |

Further, Fig. 7(b) shows the error reduction effect of RotEqNet using Random Forest as a kernel predictor. At first glance, we could find that the curves for Random Forests are quite messy without certain patterns like Fig. 7(a). The general performance of using Random Forests as a kernel predictor is worse in both aspects of performance and generalization. In Tab. 7, we could observe a training error reduction for 0.58% and testing error reduction of 2.96%. Even if we could still see the general error of RotEqNet seems to be slightly lower than the baseline method. This result is not comparable to the error reduction performance with setting Neural Networks as a kernel predictor. As another point, selecting Random Forests as a kernel predictor fails to extract learning rules with the standard position. As we could observe the black curves in Fig. 7(b) is not showing an improved performance as good as using Neural Networks. Finally, the learned model of RotEqNet is also not getting a model with better generalization capability. There is no significant reduction of overfitting error compared to the baseline method.

| Model | Baseline (NN) | RotEqNet (NN) | Baseline (RF) | RotEqNet (RF) |

| 3.9290 | 2.7960 | 4.8976 | 4.8740 |

To evaluate the rotation-equivariant property of RotEqNet for this fourth-order case, we designed an experimental process as stated in Sec. 4.1.3. The error evaluation measurement (), as defined in Eqn. 4.4, focuses more on the model’s error on real data for all rotations. As shown in Tab. 8, when using neural networks, baseline method has large error for . RotEqNet helps in keeping the rotation-equivariant property as observing error reduction in for . Considering the case using Random Forests as a kernel predictor, as shown in the previous paragraph, because of the reason that Random Forests are relatively bad in learning fourth-order data, the performance of is still affected, which results in a large error in the prediction of RotEqNet with Random Forest.

The large error reduction observed in case studies raised new opportunities in solving the problem of the physical system with rotation symmetry. Most physical systems have the property of rotation symmetry, and currently, there exist few works that could provide a theoretical guarantee to this property for machine learning methods. A key point in this problem is to design a properly defined algorithm to obtain rotation invariant for high-order tensors. This paper has shown RotEqNet with theoretical and experimental results aiming to solve the problem of rotation symmetry.

We first define a standard position as rotation invariant, which is compatible for high-order tensors. It allows us to extract the rotation invariant of high-order tensors using a contraction, diagonalization, and QR factorization. The theoretical guarantee is shown in Thm. 3.14, and the algorithm is shown in Alg. 3.2.2. RotEqNet is built on Alg. 3.2.2 with a kernel predictor which only deals with standard positions (rotation invariants). By setting kernel predictor with Neural Networks and Random Forests, these two methods are compared with baseline methods in four different case studies focusing on second-order linear, second-order nonlinear, third-order linear, and fourth-order linear cases. There are three important points to address from the observation of case studies.

First, the definition of the standard position is successful. The definition of the standard position is not unique. We aim to define a proper version of the standard position to simplify the learning task by removing the effect of rotation symmetry. In our case, the standard position satisfies the definition of rotation-invariants, which selects a representative point from the orbit of an element via diagonalization (or QR factorization). The experimental results are compatible with this definition of the standard position. We could observe in most of the cases, training kernel predictors with only rotation invariants could reach the lowest error. The reduced error means that the rotation invariant in our definition could lower the difficulty of this learning task as we previously discussed the reason in Sec. 1.

Second, RotEqNet is equipped with the property of Rotation-equivariant. As we could observe from the results of case studies, the rotation error is typically low compared to baseline methods. The perseverance of the property of Rotation-equivariant shows the successful design of RotEqNet and the correctness of Thm. 3.14. Operating with Alg. 3.2.2, the property of Rotation-equivariant of RotEqNet could be held if and only if Thm. 3.14 is correct. Further, this fact would cause an error reduction for RotEqNet. As stated in the previous paragraph, training with rotation invariants will result in a lower error. Under this situation, adding with the property of Rotation-equivariant, this would cause RotEqNet could process this system with any rotation.

The two reasons above are the main reasons that are causing the error reduction for RotEqNet. There is also one point to mention is the selection of kernel predictor. The model selection of kernel predictor will affect the learning results significantly since the kernel predictor is essential in learning the physical system without the effect of rotation symmetry. Neural Networks is the best model in the design of the data-driven method for physical systems because of its flexibility to approximate arbitrary functions. We only reported the performance of Neural Networks and Random Forests as previous work by Ling [16]. As described in Sec. 4.4.3, the performance of Random Forests is limited compared to Neural Networks. Also, as a general trend in previous experiments, Neural Networks are usually reaching better performance compared to Random Forests. As a quick conclusion, we believe the application of Neural Networks as a kernel predictor has a series of advantages than other machine learning models.

We wish to further discuss about another error evaluation method of rotation-equivariance property that we do not mention in case studies. Consider a type of error evaluation, evaluating rotation error of model itself, the error is defined as:

| (4.8) |

The evaluation of this error is actually trivial since we have already rigorously provided a proof in Theorem 3.14 showing the rotation-equivariance property of RotEqNet. We applied this evaluation in first two case studies, and the estimated error is around for all these cases.

For future work, there are three directions to this paper: a better definition of standard position, application to other groups, and generalization to non-symmetric systems. For the first direction, for the current definition, the rotation invariant of odd-order tensors is not reaching equivalent performance as even-order tensors. It would be a good work for revising the definition of standard position for odd tensors. Second, besides rotation symmetries, there are also physical systems with other group-equivariant properties such as scaling and transaction. This work could provide a method in solving problems with other groups, but the detailed design of an algorithm should differ from case to case. Third, current work could only deal with the symmetric system. However, for a general case, if the system is not symmetric, there are certain methods to use RotEqNet in a symmetric system for solving a non-symmetric system. A good trick to consider, for example, is to deal with , where is a matrix. This is a great intuition to extend our current work into non-symmetric physical systems.

Acknowledgments

G. Lin would like to acknowledge the support from National Science Foundation (DMS-1555072 and DMS-1736364).

5 Appendix

5.1 Lemma 2.1

Proof.

We will use column vector convention to represent vectors in . Let and be vectors in . Then

| (A-1) |

Then,

| (A-2) | ||||

| (A-3) | ||||

| (A-4) | ||||

| (A-5) |

Therefore,

| (A-6) |

∎

5.2 Lemma 2.2

Proof.

We will use column vector convention to represent vectors in . Let be vector in . Then

| (A-7) |

Therefore,

| (A-8) |

∎

5.3 Lemma 2.3

Proof.

Since both and are linear, we may assume that is of the form .

| (A-9) | ||||

| (A-10) |

Since is a rotation, it preserves the inner product i.e.

| (A-11) |

So

| (A-12) | ||||

| (A-13) | ||||

| (A-14) |

∎

5.4 Proof of Theorem 1

Proof.

Since the position standardization algorithm defines standard position differently for even and odd orders. We show our proof on even and odd cases separately.

Suppose has even order.

Let be the sequence of contraction along the first two axes such that , where is a second-order tensor as described in the algorithm.

Given arbitrary even high order tensor , we could perform contraction to a second order tensor via first two indices:

| (A-15) |

For , using Lemma 2.8, there exists a rotation such that:

| (A-16) |

where . is diagonalizable because it is symmetric. Since is represented by a orthonormal matrix, therefore .

Based on Lemma 2.11, we know rotation commutes with contraction. Therefore, based on the standard position is defined as

| (A-17) |

Consider a rotation operation in its matrix form. When we act on we obtain a new tensor . For this new tensor, applying contraction we could have:

| (A-18) |

For , since Equ. A-16, applying Lemma 2.8,

| (A-19) |

For its standard position we have:

| (A-20) |

To simplify, for a rotation operation acting on an even high order tensor ,

| (A-21) |

This satisfy the definition of rotation invariant. Therefore, for even cases, the standard position is a rotation invariant.

Suppose has odd order.

Let be the sequence of contraction along the first two axes such that , where is a third-order tensor as described in the algorithm.

| (A-22) |

Let be vectors of contraction operation on via different axes, i.e.,

| (A-23) |

Based on , we have

| (A-24) |

In this case,

| (A-25) |

Consider any rotation operation acting on . We have,

| (A-26) |

Using QR-factorization,

| (A-27) |

The standard position of will be defined as:

| (A-28) |

Using Remark 2.9, we could obtain

| (A-29) |

Considering and , for the same reason, we could know that

| (A-30) |

By reorganizing A-24, A-27, and A-30,

| (A-31) |

Since QR-factorization is unique [53], we should have that . Therefore,

| (A-32) |

Plugging A-32 into A-28, comparing the result of A-25 we have:

| (A-33) |

Here, we shown that given any rotation operation on ( is odd). By position standarization algorithm , we will always have:

| (A-34) |

This satisfy the definition of rotation invariant. Therefore, for odd cases, the standard position is a rotation invariant.

Combining with the proof on even and odd cases, we have shown is rotation-invariant. ∎

References

- [1] Z. Huang, Y. Tian, C. Li, G. Lin, L. Wu, Y. Wang, H. Jiang, Data-driven automated discovery of variational laws hidden in physical systems, Journal of the Mechanics and Physics of Solids (2020) 103871.

- [2] M. Raissi, P. Perdikaris, G. E. Karniadakis, Physics informed deep learning (part i): Data-driven solutions of nonlinear partial differential equations, arXiv preprint arXiv:1711.10561.

- [3] G. Carleo, I. Cirac, K. Cranmer, L. Daudet, M. Schuld, N. Tishby, L. Vogt-Maranto, L. Zdeborová, Machine learning and the physical sciences, Reviews of Modern Physics 91 (4) (2019) 045002.

- [4] J. N. Kutz, Deep learning in fluid dynamics, Journal of Fluid Mechanics 814 (2017) 1–4.

- [5] J.-X. Wang, J.-L. Wu, H. Xiao, Physics-informed machine learning approach for reconstructing reynolds stress modeling discrepancies based on dns data, Physical Review Fluids 2 (3) (2017) 034603.

- [6] Y. Li, T. Zhang, S. Sun, X. Gao, Accelerating flash calculation through deep learning methods, Journal of Computational Physics 394 (2019) 153–165.

- [7] P. A. Durbin, Some recent developments in turbulence closure modeling, Annual Review of Fluid Mechanics 50 (2018) 77–103.

- [8] J. Ling, J. Templeton, Evaluation of machine learning algorithms for prediction of regions of high reynolds averaged navier stokes uncertainty, Physics of Fluids 27 (8) (2015) 085103.

- [9] M. Milano, P. Koumoutsakos, Neural network modeling for near wall turbulent flow, Journal of Computational Physics 182 (1) (2002) 1–26.

- [10] Z. J. Zhang, K. Duraisamy, Machine learning methods for data-driven turbulence modeling, in: 22nd AIAA Computational Fluid Dynamics Conference, 2015, p. 2460.

- [11] A. Beck, D. Flad, C.-D. Munz, Deep neural networks for data-driven les closure models, Journal of Computational Physics 398 (2019) 108910.

- [12] T. Q. Chen, Y. Rubanova, J. Bettencourt, D. K. Duvenaud, Neural ordinary differential equations, in: Advances in neural information processing systems, 2018, pp. 6571–6583.

- [13] G. T. Mase, R. E. Smelser, G. E. Mase, Continuum mechanics for engineers, CRC press, 2009.

- [14] S. B. Pope, Turbulent flows (2001).

- [15] S. Pope, A more general effective-viscosity hypothesis, Journal of Fluid Mechanics 72 (2) (1975) 331–340.

- [16] J. Ling, R. Jones, J. Templeton, Machine learning strategies for systems with invariance properties, Journal of Computational Physics 318 (2016) 22–35.

- [17] J. Ling, A. Kurzawski, J. Templeton, Reynolds averaged turbulence modelling using deep neural networks with embedded invariance, Journal of Fluid Mechanics 807 (2016) 155–166.

- [18] R. W. Johnson, Handbook of fluid dynamics, Crc Press, 2016.

- [19] G. Smith, On isotropic integrity bases, Archive for rational mechanics and analysis 18 (4) (1965) 282–292.

- [20] M. Mohri, A. Rostamizadeh, A. Talwalkar, Foundations of machine learning, MIT press, 2018.

- [21] A. M. Saxe, Y. Bansal, J. Dapello, M. Advani, A. Kolchinsky, B. D. Tracey, D. D. Cox, On the information bottleneck theory of deep learning, Journal of Statistical Mechanics: Theory and Experiment 2019 (12) (2019) 124020.

- [22] K. Hornik, M. Stinchcombe, H. White, et al., Multilayer feedforward networks are universal approximators., Neural networks 2 (5) (1989) 359–366.

- [23] S. Müller, M. Milano, P. Koumoutsakos, Application of machine learning algorithms to flow modeling and optimization, Annual Research Briefs (1999) 169–178.

- [24] J. Weatheritt, R. D. Sandberg, J. Ling, G. Saez, J. Bodart, A comparative study of contrasting machine learning frameworks applied to rans modeling of jets in crossflow, in: ASME Turbo Expo 2017: Turbomachinery Technical Conference and Exposition, American Society of Mechanical Engineers Digital Collection, 2017.

- [25] T. Qin, K. Wu, D. Xiu, Data driven governing equations approximation using deep neural networks, Journal of Computational Physics 395 (2019) 620–635.

- [26] J. Han, L. Zhang, E. Weinan, Solving many-electron schrödinger equation using deep neural networks, Journal of Computational Physics 399 (2019) 108929.

- [27] Y. LeCun, Y. Bengio, G. Hinton, Deep learning, nature 521 (7553) (2015) 436.

- [28] T. Cohen, M. Welling, Group equivariant convolutional networks, in: International conference on machine learning, 2016, pp. 2990–2999.

- [29] C. Esteves, Theoretical aspects of group equivariant neural networks, arXiv preprint arXiv:2004.05154.

- [30] C. Esteves, Y. Xu, C. Allen-Blanchette, K. Daniilidis, Equivariant multi-view networks, in: Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 1568–1577.

- [31] M. Weiler, F. A. Hamprecht, M. Storath, Learning steerable filters for rotation equivariant cnns, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 849–858.

- [32] X. Cheng, Q. Qiu, R. Calderbank, G. Sapiro, Rotdcf: Decomposition of convolutional filters for rotation-equivariant deep networks, arXiv preprint arXiv:1805.06846.

- [33] M. Finzi, S. Stanton, P. Izmailov, A. G. Wilson, Generalizing convolutional neural networks for equivariance to lie groups on arbitrary continuous data, arXiv preprint arXiv:2002.12880.

- [34] L. Gao, H. Li, Z. Lu, G. Lin, Rotation-equivariant convolutional neural network ensembles in image processing, in: Adjunct Proceedings of the 2019 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2019 ACM International Symposium on Wearable Computers, 2019, pp. 551–557.

- [35] J. D. Foley, F. D. Van, A. Van Dam, S. K. Feiner, J. F. Hughes, J. HUGHES, E. ANGEL, Computer graphics: principles and practice, Vol. 12110, Addison-Wesley Professional, 1996.

- [36] Y. Zhou, C. Barnes, J. Lu, J. Yang, H. Li, On the continuity of rotation representations in neural networks, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 5745–5753.

- [37] M. L. Curtis, Abstract linear algebra, Springer Science & Business Media, 2012.

- [38] C. M. Bishop, Pattern recognition and machine learning, springer, 2006.

- [39] D. Tao, X. Li, W. Hu, S. Maybank, X. Wu, Supervised tensor learning, in: Fifth IEEE International Conference on Data Mining (ICDM’05), IEEE, 2005, pp. 8–pp.

- [40] D. F. Specht, et al., A general regression neural network, IEEE transactions on neural networks 2 (6) (1991) 568–576.

- [41] A. Liaw, M. Wiener, et al., Classification and regression by randomforest, R news 2 (3) (2002) 18–22.

- [42] C. C. Pinter, A book of abstract algebra, Courier Corporation, 2010.

- [43] C. K. Batchelor, G. Batchelor, An introduction to fluid dynamics, Cambridge university press, 2000.

- [44] D. P. Kingma, J. Ba, Adam: A method for stochastic optimization, arXiv preprint arXiv:1412.6980.

- [45] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, et al., Scikit-learn: Machine learning in python, the Journal of machine Learning research 12 (2011) 2825–2830.

- [46] B. KOSOVIĆ, Subgrid-scale modelling for the large-eddy simulation of high-reynolds-number boundary layers, Journal of Fluid Mechanics 336 (1997) 151–182.

- [47] H. Pitsch, Large-eddy simulation of turbulent combustion, Annu. Rev. Fluid Mech. 38 (2006) 453–482.

- [48] R. Matai, Les of flow over bumps and machine learning augmented turbulence modeling.

- [49] J. F. Nye, et al., Physical properties of crystals: their representation by tensors and matrices, Oxford university press, 1985.

- [50] K. Yang, X. Xu, B. Yang, B. Cook, H. Ramos, N. A. Krishnan, M. M. Smedskjaer, C. Hoover, M. Bauchy, predicting the young’s modulus of silicate glasses using high-throughput molecular dynamics simulations and machine learning, Scientific reports 9 (1) (2019) 1–11.

- [51] Z. Liu, C. Wu, M. Koishi, A deep material network for multiscale topology learning and accelerated nonlinear modeling of heterogeneous materials, Computer Methods in Applied Mechanics and Engineering 345 (2019) 1138–1168.

- [52] L. Walpole, Fourth-rank tensors of the thirty-two crystal classes: multiplication tables, Proceedings of the Royal Society of London. A. Mathematical and Physical Sciences 391 (1800) (1984) 149–179.

- [53] G. H. Golub, C. F. Van Loan, Matrix computations, Vol. 3, JHU press, 2012.