RoSSBi3D: a 3D and bi-fluid code for protoplanetary discs

Abstract

Context. The diversity of structures recently observed in protoplanetary discs (PPDs) with the new generation of high-resolution instruments have made the challenging questions that planet-formation models have to answer more acute. The challenge is on the theoretical side and in the numerical one, with the need to significantly improve the codes’ performances and stretch the limit of PPD simulations. Multi-physics, fast, accurate, high-resolution, modular and reliable 3D codes are needed to understand the shape of PPD and the observed features as well as to explore the mechanisms at work.

Aims. We present RoSSBi3D as the 3D extension of the 2D code Rotating-System Simulations for Bi-fluids (RoSSBi), a pure hydrodynamic code, which was specifically developed to study the evolution of PPDs. Being described in detail, this is a new code, even if based on the 2D version. We explain its architecture and specificity but also its performances against test cases.

Methods. This grid-based, FORTRAN code solves the fully compressible inviscid continuity, Euler and energy conservation equations for an ideal gas in non-homentropic conditions and for pressureless particles in a fluid approximation. It is a finite volume code which is second order in time and accounts for discontinuities thanks to an exact Riemann solver. The spatial scheme accounts for the equilibrium solution and is improved thanks to parabolic interpolation. The code is developed in 3D and structured for high-performance parallelism.

Results. The optimised version of the code works on high-performance computers with excellent scalability. We checked its reliability against a 2D analogue of the sod shock tube test and a series of tests. We release this code under the terms of the CeCILL2 Licence and make it publicly available.

Key Words.:

Protoplanetary discs – 3D simulations – Parallel programming – High-performance Computing – Vortices1 Introduction

Protoplanetary discs (PPDs) are mainly composed of molecular gas in which are embedded solid particles of different sizes. An accurate description of both phases’ dynamics could help to understand the aspect of PPDs at different wavelengths (Stapelfeldt et al., 1999; Villenave et al., 2020, 2022) and the observed substructures (Pérez et al., 2018a; Dong et al., 2018; Pérez et al., 2018b) with, as an ultimate goal, the will to unravel a global picture of planet formation. Analytical tools remain fruitful in this quest since they led to the discovery of complex phenomenons possibly occurring in PPDs, such as the streaming instability (Youdin & Goodman, 2005; Youdin & Johansen, 2007; Johansen & Youdin, 2007), vertical shear instability (VSI) (Nelson et al., 2013; Stoll & Kley, 2014; Manger et al., 2020, 2021), gaseous vortices generation (Lovelace et al., 1999a), dust trapping by vortices (Barge et al., 2005), baroclinic instabilities (Klahr & Bodenheimer, 2003; Klahr, 2004) etc. However, these tools reach their limits when accounting for a fine time evolution and global description of PPDs where multi-physic aspects are at interplay. Therefore numerical experiments have become increasingly important in PPDs studies, and more generally in Astrophysics. Progress in this field was made possible thanks to the development of accurate numerical methods, increasingly optimised algorithms, and the advent of multi-core machines, which have allowed access to unprecedented detail and multi-physics through the development of high-performance computing (HPC).

RoSSBi3D is the result of the wish to develop a dedicated numerical tool suited to study the evolution of PPDs and adapted to their specifics. Among them, shearing by the gravitational potential of the star, vertical stratification and the intricate coupling of dust and gas. In this code, we assumed that the gas, compressible and inviscid, is coupled through aerodynamic forces to solid particles which are described statistically as a fluid (or a family of different fluids) in which the intrinsic pressure is neglected. This approximation remains relevant as far as dilution is strong, otherwise the inelastic collision between particles should be taken into account contributing to a pressure term. Thus, in the limit of a diluted medium hypothesis, the problem is to solve a system of Euler’s equations for the gas and the particles which are coupled to one another and to the energy equation. This 3D extension of RoSSBi (Surville, 2013) came from the necessity to provide a full description of the evolution of PPDs which requires to account for the disc thickness. It is indeed important to describe at best the physical evolution of the PPDs avoiding the constraining 2D approximation that could either enhance problems raised by the thin disc approximation, such as vortices destruction by dust back-reaction (Raettig et al., 2021), or neglect physical aspects raised by vertical stratification, such as the VSI.

After assessing the three main discretisation methods used in fluid mechanics, we choose to keep the finite volume method (FVM), used in prior prototype versions, to solve the set of hyperbolic equations governing the evolution of an inviscid PPD. Indeed, the finite difference method (FDM), well adapted for solving the partial differential equations related to Euler’s equations, could provide incorrect results in the presence of rarefaction and shock waves, which could be encountered in PPDs 111But this could be circumvent introducing an artificial bulk viscosity as proposed by Von Neumann & Richtmyer (1950).. In order to account for discontinuities without introducing an artificial viscosity, the equations have to be solved under their most primitive form, which makes both the finite element method (FEM) and the FVM the natural choices for solving numerically the equations governing gas dynamics. Indeed, both methods are well adapted for solving equations written in a conservative form and, as a consequence, are preferred choices for fluid dynamics problems. The difficulty of the variational formalism and the problem reformulation under the weak form, intrinsic to FEM, lead us to naturally prefer the FVM. The FVM has the advantage to be easier to understand and implement, and it requires only to evaluate fluxes at cells boundaries, which makes it a robust method for non-linear conservation laws (Wendt et al., 2009; Toro, 2009) .

The goal of this paper is to present the new 3D RoSSBi3D code, highlight its improvements in terms of performance, and grant this code with a proper reference for future scientific publications. We attach great importance to the reliability of this code, thanks to a validation procedure with relevant test cases absent in previous versions and its scalability, which permits an efficient use of computational resources. Even if it could not be reflected in this paper, the code was cleared, simplified, restructured, and highly documented. RoSSBi3D is part of a long story of 2D prototype codes, which led to a number of publications (Inaba et al., 2005; Inaba & Barge, 2006; Richard et al., 2013; Surville, 2013; Richard et al., 2016; Barge et al., 2016, 2017; Rendon Restrepo & Barge, 2022). The detailed legacy and numerical scheme evolution of these prototypes, on which several people have worked, is summarised in the code wiki. This last version is based on the 2D code RoSSBi, implemented by Surville (2013), which was then upgraded in 2018, by Centre de donnéeS Astrophysiques de Marseille (CeSAM), with an elementary parallelisation using the MPI standard. This code is written in the FORTRAN 90 programming language and requires the use of HDF5 and FFTW3 libraries. RoSSBi3D is open source and released under the terms of the CeCILL2 Licence. The code can be downloaded from its public repository, [email protected]:srendon/rossbi3d.git. The visualisation tools are not available in the repository but could be happily shared upon request.

The paper is organised in the following way: in Sect. 2, we explain the structure of the code, the numerical scheme, the choice of the boundary conditions, and the current supported options. In Sect. 3, we discuss in detail the performances of RoSSBi3D. Sect. 4 is devoted to benchmark RoSSBi3D numerical scheme with test cases. In Sect. 5, we discuss additional steps in the development of our code and possible ways to improve it. Finally, in Sect. 6 we conclude. Appendices mainly concerns aspects of the 3D stationary solution, required for balancing the numerical scheme, the conservative form of different terms, and the visualisation tools.

2 Numerical resolution and code structure

This section is in the continuation of Surville (2013, in french) work and devoted to introduce the numerical methods used in RoSSBi3D. We begin by recalling the description of the integral equations governing the gas dynamics.

2.1 Conservative form of the equations

Our code was developed assuming the gas is completely inviscid to conserve the equilibrium solution, to treat compressibility at best, and to respect the supersonic character of the Keplerian flow. These conditions correspond to the ones expected in an ideal dead zone of PPDs. Thus, in this outline, the equations governing gas dynamics in a 3D PPD are Euler’s equation coupled with the conservation of mass and energy; they read:

| (1) |

where is the gas energy and are all volume forces applied to the gas. refers to source or sink terms in the continuity equation while stands for heat transfer by conduction, radiation, or cooling. The variable is the surface enclosing the volume . In current version of RoSSBi3D, the volume forces applied to the gas are the central’s object gravitation, , aerodynamic forces with solid particles, self-gravity (SG)222SG is not yet supported in the 3D version and the indirect potential due to a shift of the barycenter of mass, since the frame is centered on the star’s position. The equations governing dust dynamics are slightly different, and it was preferred to devote the entire Sect. 2.9 to solid particles. The conservative form provided by Eqs. 1 is attractive if the solution is discontinuous, which occurs when the flow is inviscid. This is one of the primary reasons that led us to use the FVM that we describe in the next section.

2.2 Numerical scheme: finite volume method

We summarise the set of Eqs. 1 under the compact form:

| (2) |

where , , and are the component of the Euler variables, flux, and source vectors. The expression of these quantities in cylindrical coordinates is provided in Appendix B. We use a cell-centred formulation that is variables are defined by their mean value over finite volumes. This implies that triple integrals are simply approximated on elementary volumes as follows: where is the mean value of function in the elementary cell of respective volume . For conciseness, we will use the notation throughout the rest of this paper. On the other hand, the incoming fluxes are decomposed as elementary fluxes in each cell surface element. Considering the above statements, the flux and source terms of Eq. 2 can be rewritten as:

| (3) |

where is the outward unit normal vector to surface . Accounting for the above approximations, the conservative form of the evolution Eqs. 2 can be simply written:

| (4) |

The 2D and 3D versions of RoSSBi3D solve the above equations in a polar and cylindrical coordinate system, respectively.

For the sake of conciseness, in next sections we will only treat the 1D case, which is easily generalised to three dimensions.

2.3 Mesh

In the current version, we use a uniform structured grid in the azimuth and vertical directions while logarithmic in the radial direction. The choice of a logarithmic mesh in the radial direction stems from the 2D code. Indeed, a logarithmic mesh is necessary for computing SG thanks to Fast Fourier methods (Baruteau & Masset, 2008; Surville, 2013; Rendon Restrepo & Barge, 2023). Even if the logarithmic cutting is unnecessary in the 3D version, we kept it in order to save the effort during the coding stage.

2.4 Boundary conditions and ghost cells

In our code, we connected the numerical domain to the stationary flow in isothermal equilibrium (see Eq. 25) thanks to a set of 2 ghost cells in each direction. A sudden change in the flow at the domain boundaries could lead to waves reflection, which could affect the computation domain, and even instabilities can arise from an improper choice of BC. Therefore, in the radial direction, we apply wake-killing and sheared BC in order to avoid (i) spurious reflections which can perturb the computation domain and (ii) the triggering of Papaloizou-Pringle like instabilities in the disc inner edge (Papaloizou & Pringle, 1984; Goldreich & Narayan, 1985). We naturally use periodic BC in the azimuthal direction to close the physical domain. Finally, motivated by the work of Meheut et al. (2012b); Lin (2013), we implemented two different kinds of BC for the vertical direction: outflow BC or sheared damped BC.

2.5 Time evolution and scheme stability

In order to match high accuracy and reasonable computation effort, we use an explicit, second order Runge-Kutta Method (RKM2) time-stepping scheme for time integration. Crudely, this RKM2 is based on the first prediction of a differential equation solution which permits performing a correction and thus increases the accuracy of the final solution. The most general form of the explicit RKM2 applied to our problem is written:

| (5) |

where is the time step and is the time at step . For our calculations, we used , , and .

In order to ensure the scheme stability during the time integration, a CFL-like condition must be respected at each time step (Courant et al., 1928; Toro, 2009, chapter 6.3). In the absence of magnetic fields and viscosity, this condition is fulfilled as long as the time step is less than:

| (6) |

where is the sound speed in the center of the cell and is a constant parameter, which is equaled to in order to improve stability. Physically, the quantity is the highest wave speed in the whole computational domain which allows interpreting the CFL requirement as a condition for avoiding multiple wave overlap within cell when the Riemann problem is solved. Indeed, only two solutions of the Riemann problem can affect cell : the solution coming from left cell and the solution coming from the right cell (Toro, 2009, chapter 6.1).

2.6 Numerical fluxes and exact Riemann solver

From the intrinsic definition of FVM, a numerical solution possesses discontinuities at inter-cells positions, which forces the fluxes at cell boundaries to be computed. Indeed, flux terms are step functions, which are not continuous from one cell to the other. At each time step, , a correct evaluation of these fluxes is done thanks to the solution of the following initial value problem (IVP):

| (7) |

This corresponds to a local Riemann problem, the solution of which is executed numerically thanks to an exact Riemann solver which has been taken from Toro (2009, chapter 4). Even if contains source terms, which does not pose calculation problems, the Riemann solver is more sensitive to the scheme permitting to estimate fluxes. Indeed, a difficulty is that Euler variables are only defined on cell’s centers, but flux terms need to be computed on cell’s frontiers. A workaround is to improve the FVM with a more advanced spatial scheme, which could permit better estimation of fluxes between cells and, thus, obtaining accurate solutions even in the presence of shocks. Therefore, for this version of RoSSBi3D, we use (1) a three-point parabolic interpolation of Euler variables, which allows accurate computation of flux terms at cell’s boundaries, combined with (2) a MinMod flux limiter in order to avoid undesired oscillations.

All steps of this section are summarised below (for cell ). First, we perform the (1) Euler variables interpolation at the cell frontiers. For instance, the left and right Euler variables at frontier , , and , respectively, are estimated thanks to the parabolic interpolation described in Sect. 2.7. Then, (2) fluxes are evaluated in the right and left side of each frontier. Still for frontier , we get:

| (8) | |||

| (9) |

Finally, we proceed to the (3) Riemann problem resolution. When fluxes are estimated for the first time at frontiers and , the exact Riemann solver allows computing exactly the Euler variables at frontiers and then reevaluating fluxes:

| (10) |

where Riemann stands for the solver. We highlight that the choice of the exact Riemann solver is justified by the absence of viscosity in the simulation box. Indeed, this solver was specially designed for the treatment of Euler’s equations.

2.7 Method improvement thanks to the stationary solution and a parabolic interpolation

The equilibrium solution presented in Eq. 25 could be used to improve the numerical scheme described in Sect. 2.6. First, we rewrite the Euler variables under the form:

| (11) |

where are the stationary solution Euler variables. stands for deviations to the steady axisymmetric flow: for the stationary solution, we get . Thanks to this formulation, the Euler variables at cell frontiers are:

| (12) |

Such formulation has two advantages. First, the stability of the stationary solution is improved since we use the exact value of the Euler variables at the cell frontiers and subsequently the exact flux terms. Second, the accuracy of flux terms is improved thanks to a more accurate interpolation of Euler’s variables described in 2.6. Within this maneuver a parabolic interpolation on permits obtaining the left and right Euler’s variables at cell’s frontiers:

| (13) |

where:

| (14) |

In order to avoid undesired oscillations, we use a MinMod flux limiter which, when necessary, reduces the curvature of the interpolation function (Surville, 2013, Appendix).

2.8 Balanced scheme

Top: Unbalanced

Bottom: Balanced

One sensitive issue in PPD is that the stationary solution is not in a numerical equilibrium. Indeed, the scheme induces residual terms, which affect the stationary solution during the time evolution steps. For instance, the evolution of the radial velocity, written in terms of Euler variables is:

| (15) |

where the right hand side stems from the computation of flux and source terms (see Eq. 4 and Appendix B). For an ideal infinite mesh resolution, the r.h.s term of above equation vanishes, but in practise it is not the case. Indeed, this term depends on the accuracy of computation, which introduces a small residual term affecting the stationary solution after few tens of orbits.

At 2D, Surville (2013, Chapter III.2.1) corrected this problem using an interpolation of Euler variables and performing the exact computation of the stationary solution source terms. However, for the current 3D code, this is a more difficult task since the same method would imply the analytic integration of source terms in the radial and vertical directions. For above reason, we adopted another technique for the 3D code proposed by Hervé Guillard, which consists on performing an empty run which allows to compute the r.h.s residual term for the equilibrium solution. This residual term is then subtracted at each time step from the right hand side of Eq. 15. Such strategy was also adopted by Meheut et al. (2010, Sect. 4.4). Other sophisticated techniques exist, but they do not show significant improvements while the present one has the advantage to be easy and rapid to implement. In Fig. 1, we report the error in different physical quantities for the equilibrium solution explored in Sect. 4.2, depending on whether the numerical scheme is balanced or not. The effectiveness of the exposed method is confirmed: the errors of the unbalanced scheme are 5 orders of magnitude higher than the balanced scheme at least.

2.9 Aerodynamic forces and evolution of the solid phase

In PPDs, particles interact with the gas mainly through friction forces that look like a headwind depending on the size of the particles and the gas free mean path. From the kinetic theory of gases, the mean-free-path of a gas molecule, , is defined as the length travelled between two successive collisions:

| (16) |

where , , and are the atomic mass, the number of atoms of the gas, and the molecule cross-section, respectively. The two regimes describing drag forces are the Epstein and Stokes regime. The former describes particles with sizes smaller than the gas mean-free-path interacting with the gas through molecular collisions and is equivalent to a ram pressure force. The latter deals with particles with a size being greater than the gas mean-free-path and experiencing a viscous fluid friction. For ideal spherical particles, the laws of friction expressions are well known (Weidenschilling, 1977; Youdin, 2010); the norm of the volume drag force is with:

| (17) |

where , , and are the radius, the internal density of particles, and particles velocity relative to the gas, respectively.

Solid material is considered as a pressureless fluid interacting with gas through the aerodynamic forces introduced in the above paragraph. Therefore the equations governing dust dynamics are similar to the ones of gas except that the pressure term vanishes, which implies that there is no energy equation to be solved for this phase. The absence of pressure implies that the Riemann solver should be adapted to solid particles, but essentially it is almost the same as for gas.

2.10 Self-gravity and indirect-gravity

The current version of RoSSBi3D supports SG for 2D simulations and accounts for the correction and generalisation of the smoothing length method to bi-fluids proposed by Rendon Restrepo & Barge (2023). On the other hand, the 3D version lacks SG calculation. Indeed, this is a long-term task requiring care in the theoretical and programming stages. Yet, we already thought about it and we provide a discussion on different possibilities for future versions in Sect. 5.3.

The presence of a disc asymmetry can lead to an offset of the mass barycenter (Zhu & Baruteau, 2016; Regály & Vorobyov, 2017). We have taken this effect into account through the gradient of the indirect gravity potential, which can be read in cylindrical coordinates:

| (18) |

where and . We assumed that the density vertical stratification is symmetric with respect to the plane that permits the vertical component of the indirect gravity to be cancelled.

2.11 Numerical resolution summary

| Version | 2D | 3D |

|---|---|---|

| Box | yes | yes |

| Parallelism in all directions | yes | yes |

| Dust drag | yes | yes |

| Dust back-reaction | yes | yes |

| SG | yes | no |

| Indirect-Gravity | yes | yes |

| Viscosity | no | no |

| Magnetic fields | no | no |

We presented the new bi-fluid RoSSBi3D code, which is the natural extension of RoSSBi to 3D. This 3D version is based on a FVM scheme, is third order in space, second order in time, stable, balanced, and accounts for inherent boundary instabilities, which could arise in PPDs simulations. The current version of RoSSBi3D supports the options gathered in Table 1. In the next section, we show how we succeeded in making a fast and scalable 2D and 3D code well-suitable for HPC.

3 Improving performances on parallel architectures

Parallelism in this code is implemented using an MPI programming model (Message Passing Interface Forum, 2021). It is a standardised protocol that provides a communication among processes running on parallel computers, typically consisting of many nodes with distributed memory model.

3.1 Parallel decomposition of the regular grid

The subdomains of same colour are not treated by the same processor (It permits only to differentiate subdomains from each other).

| 1D | 2D | |

|---|---|---|

Domain decomposition is one key to reach scalability and high-performance at high resolutions. As an instructive example, let us consider a 2D square computational box containing cells in each direction where the computational effort (workload) is shared among processors. In a crude approach, the workload computed by a processor is proportional to the volume of data the assigned cells contain () while communication (the data to be transferred among processors) is proportional to their surface (). One may use the communication-to-computation ratio, 333In numerical code metrics, we often use granularity defined by where and are the measured computation and communication times, respectively., which helps to roughly estimate the bandwidth requirements. Since the ratio between the available bandwidth and the computing power of the modern parallel HPC systems is typically low, it is also important to keep the ratio low during the algorithm design. For distributing the processors in this regular grid, we consider 1D and 2D domain decompositions the features of which are gathered in Table 2. The ratio is lower in the 2D decomposition compared to the 1D decomposition since it grows more slowly with . Therefore, communication costs are smaller in the 2D case and more suitable for architectures with low bandwidth. For the reasons above, it was decided to (1) change the 1D grid decomposition to a 2D grid decomposition in the 2D code and (2) use a 3D grid decomposition in the 3D code. Fig. 2 illustrates how the computational grid could be decomposed and distributed among 128 processors.

3.2 Performance analysis and optimisations

| Version | original | updated |

|---|---|---|

| Number of processes (total) | 576 | 576 |

| Number of processes (X, Y, Z) | (8, 36, 2) | (8, 18, 4) |

| Elapsed time (sec) | 7.59 | 1.56 |

| Efficiency | 0.18 | 0.95 |

| Speedup | 2.85 | 15.13 |

| Average IPC | 1.05 | 0.92 |

| Average frequency (GHz) | 2.52 | 3.25 |

Many bottlenecks intrinsically related to the structure of the code may suppress the potential of parallelisation. Therefore, we took advantage of the offer by Performance Optimisation and Productivity CoE (POP) that helps users and developers to optimise parallel software and understand performance issues. The initial performance assessment of the RoSSBi3D code was requested to identify areas of improvement in large resolution simulations. The assessment was conducted using Barbora cluster at IT4Innovations, National Supercomputing Center at VSB – Technical University of Ostrava. The cluster consists of 192 powerful x86-64 compute nodes, each equipped with 2x 18-core Intel Cascade Lake 6240 at 2.6 GHz processors and 192 GB of DDR4 memory, interconnected by high-speed InfiniBand HDR100 network. As the vast majority of the current supercomputers, Barbora is operated by a Linux OS, specifically Red Hat Enterprise Linux 7.9. To build and install the RoSSBi3D code, the GCC 9.3.0 toolchain together with OpenMPI 4.0.3 was used. In addition, the BSC performance toolset including Extrae 3.8.3, Paraver 4.9.0, Dimemas 5.4.2, and Basic Analysis 0.3.9 was employed to collect and analyse performance data.

The scaling of the RoSSBi code was evaluated on 1 – 16 compute nodes, i.e 36 – 576 cores, respectively. During the initial stage of the analysis, it turned out that full-length simulations comprising millions of time steps produce an unmanageable amount of collected performance data. Using high-level techniques for detecting the computation structure, it was proved that the performance characteristics do not evolve in time significantly. Therefore, further analysis was done with the simulation duration being reduced to the order of low hundreds of time steps. The detailed computation structure analysis showed that the code had issues with computation granularity that were indicated by the very short computation bursts – the sequences of user-code instructions outside the MPI library runtime. Most of the bursts were shorter than 500 s. The scalability tests showed rapid speedup degradation on more than 2 nodes as depicted in Fig. 3 (original version).

The following investigation revealed significant growth of the communication phase that consisted of a sequence of loops with many short, mostly collective, MPI calls. Moreover, the loops overhead also caused the increased number of instructions issued. Each MPI call or user instruction causes an additional latency that increases the total runtime and effectively degrades the scalability. To narrow this limiting factor, the code was refactored in the way of fusing the loops and collapsing the thousands of the small MPI messages transferred in each time step into only tens of larger messages. This enhancement significantly improved efficiency of the inter-node communication through the network and also reduced the instruction overhead. Another important optimisation was improving the insufficient parallelisation of 3D domains in all directions (see Sect. 3.1). Indeed, the original version of RoSSBi3D was only parallelised in the azimuthal direction while the updated version used a 3D domain decomposition.

The follow-on performance assessment demonstrated that the applied optimisations significantly improved the scaling as shown in Fig. 3 (updated version). During the analysis, we found that also the MPI process configuration and domain decomposition, as explained in Sect. 3.1, has a non-negligible impact on performance. For example, we obtained the lower speedup value (13.62) of the updated version in Fig. 3 with the 8x36x2 configuration of processes, while the higher value (15.13) using the 8x18x4 configuration. Table 3 reports up to 4.9x speedup on the same largest test case. Also increased average CPU frequency was observed. For future scaling to even larger test cases, some optimisation opportunities in the communication pattern were also identified. The single-core performance might be enhanced by analysing and optimising the vectorisation opportunities.

We also compared the performances of RoSSBi3D when compiled with OpenMPI library + GCC compiler and with IntelMPI library + ifort compiler for a standard test on 64 processors in CeSAM. The test reports a 1.86 speedup when compiled with Intel compilers that we recommend for Intel architectures. This is particularly interesting since Intel® compilers are free to use now.

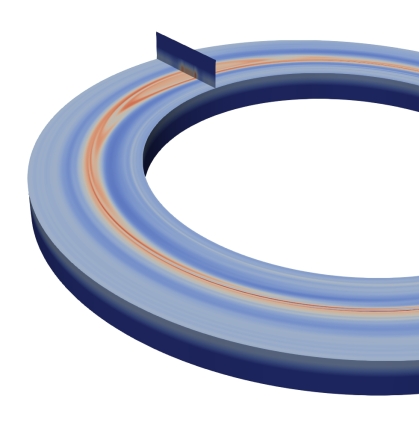

Outline: Column Gaussian vortex evolution.

Barbora: 2 Intel Cascade Lake, 18 cores, 2.6 GHz (namely 36 cores/node). Infiniband HDR 200 Gb/s

4 Test cases

This section is devoted to retrieve common results on PPDs literature thanks to the RoSSBi3D code. For the sake of clarity in this whole section, and stand for the orbital period and the pressure scale height, respectively, at the center of the simulation box (). We start assessing our numerical method with the 2D explosion test.

4.1 Cylindrical explosion test

| Top left: density | Top right: radial velocity |

| Bottom left: pressure | Bottom right: internal energy |

The explosion test is a 2D analogue of the sod shock-tube test which permits both the accuracy of a numerical scheme and its ability to keep the cylindrical symmetry of the numerical solution to be assessed (Toro, 2009, chap. 17). This test consists of a 2D Riemann problem in which, as an initial state, a high pressure region and a low pressure region are separated by a diaphragm:

| (19) |

For this test, we take the ratio of specific heats , and the number of cells in the radial and azimuthal direction is . In Fig. 4, we show this test results at , which are in good agreement with the reference solution of Toro (2009, Fig. 17.4).

4.2 Equilibrium solution

4.3 Rossby wave instability

| Top left: mode ; | Top right: mode ; |

| Bottom left: mode ; | Bottom right: mode ; |

| row: | Left: Normalised density - | Right: Normalised pressure - |

|---|---|---|

| row: | Left: Rossby number - | Right: Normalised deviation to azimutal velocity - |

with and .

PPDs are subject to instabilities, such as the RWI (Lovelace et al., 1999b; Li et al., 2000). Numerical and theoretical work was already considered at 3D for this instability by few authors and in this section, we aim to retrieve Meheut et al. (2010, 2012a) results. As an initial state, we introduced a radial Gaussian overdensity bump in the stationary solution provided by Eq. 25

| (20) |

To facilitate the trigger of the unstable modes we introduced a small perturbation in the radial velocity field:

| (21) |

where and is the perturbation mode. We ran 5 simulation tests for the outline of which is the same as (Meheut et al., 2012b). In Fig. 5 we depicted the exponential growth rate obtained for each mode. Fig. 6 shows the perturbed density evolution in the region for the mode up to instability saturation and in Fig. 7, we illustrated in detail the vortex generated by the mode and its vertical distribution. We found that the growth rate values and the curve trend are in agreement with Meheut et al. (2012b, Fig. 7).

4.4 Dust trapping by 3D gaseous Vortices

| row: | Left: whole disc | Right: zoom |

|---|---|---|

| row: | Left: whole disc | Right: zoom |

| row: | Left: whole disc | Right: zoom |

In all these graphics we rescaled the vertical direction by a factor 20.

Vortices are gaseous structures whose main interest is their strong ability to capture solid particles with nearly optimal Stokes numbers (Barge & Sommeria, 1995; Tanga et al., 1996). Here we propose to find this trapping mechanism in a 3D vortex using RoSSBi3D.

For this test, we use the vortex obtained after RWI saturation in the mode of Sect. 4.3. Fig. 7 shows this 3D gaseous vortex used as an input for this test. Then we introduce uniformly dust in the radial and azimutal direction but with a vertical Gaussian distribution:

| (22) |

where and . We chose particles interacting with the gas only through aerodynamic forces (friction and dust back-reaction) and with a nearly optimal Stokes number (). In Fig. 8, the dust density is exhibited at different times. We do not show the gaseous vortex evolution since it is nearly unaffected by the dust back-reaction.

In the early stages, we observe that dust settles in the midplane and starts to be captured by the vortex. After orbits almost all dust lies exactly in the midplane: the settling time is consistent with the theoretical prediction . Because of the absence of viscosity the dust scale vanishes (at the limit of the vertical resolution). The gaseous vortex collects dust during the whole simulation and after 110 orbits the dust density is increased by a factor 2000 in the vortex core which is in agreement with the theoretical expectations.

5 Next steps and possible development

Despite a good scalability, RoSSBi3D may need improvements to tackle challenging simulations, such as 2D and 3D SG at high resolutions, and at the same time, more physics options are needed to describe PPDs in a more realistic way. Therefore, in the following sections, we discuss possible improvements to our code.

5.1 Can GPU improve the performance of RoSSBi3D ?

Outline: Column Gaussian vortex evolution.

Jean Zay: 2 Intel Cascade Lake 6248, 20 cores, 2.5 GHz (40 cores/node)

Barbora: 2 Intel Cascade Lake, 18 cores, 2.6 GHz (36 cores/node). Infiniband HDR 200 Gb/s

AMU Mesocentre: Intel®Xeon® Gold 6142 (SkyLake), 32 cores, 2.6 GHz. Nodes Dell PowerEdge C6420

According to Gheller et al. (2015) and Wei et al. (2020), GPU technology is promising for solving Euler’s equations. However, the current code architecture and the observed workload on processors in tests conducted do not permit confirming yet if RoSSBi3D performance would benefit notably from GPU acceleration. Another audit or proof of concept analysis would be necessary to elucidate this question, but this is not a current priority. Furthermore, the parallelisation philosophy in the CUDA language is different from MPI and this would imply a significant rewriting of the code. Alternatively, the OpenACC language could be employed for prototyping GPU acceleration since it is way less intrusive than CUDA and can potentially reach similar performances. Finally, the metrics and scalability of the code illustrated in Table 3 and Fig. 9 are satisfactory, and for the current use there is no real need to migrate to GPU.

5.2 Optimisation of 2D self-gravity

Outline: Self-gravitating Gaussian vortex evolution.

Parameters: , , , . Resolution: . Simulation box: , .

Jean Zay cluster: 2 Intel Cascade Lake 6248, 20 cores, 2.5 GHz (namely 40 cores/node)

Currently the calculation of 2D SG is based on convolution products computed thanks to fast Fourier transforms. In Fig. 10, we compare the scalability of the 2D version of RoSSBi3D with and without SG with respect to the number of processors. We found that with SG, the scalability starts to show limitations from 16 nodes (576 processors), whereas such behaviour is not observed without SG. This suggests that SG calculation is responsible for the loss of performances for a large number of nodes. Possible improvements that permit alleviating this issue consists in performing in-place transforms, which is particularly interesting if each processor has to treat large amounts of data. Another idea is to carrying out a single transform on a unique multidimensional array instead of doing successive transforms on multiple arrays. This could be beneficial for bi-fluid simulations where 6 Fourier transforms need to be performed at each time step, compared to 2 for a single fluid simulation (Rendon Restrepo & Barge, 2023).

5.3 Implementation of 3D self-gravity

SG is a rather scarce option in 3D hydrodynamic codes. There are only a few codes with this option, e.g.: the PENCIL Code (Pencil Code Collaboration et al., 2021), RAMSES (Teyssier, 2002), NIRVANA (Ziegler, 2004) or FLASH (Fryxell et al., 2000). The scarcity mainly comes from computational costs and trade offs between accuracy and computational efficiency. In the next paragraph, we summarise few of the main methods for computing SG and identify those that could help us.

The numerical computation of the SG potential and the associated forces are handled by two main approaches. The first one is called the difference method and is based on the resolution of the Poisson’s equation:

| (23) |

where is the gravitational potential. This equation discretization results in a linear system which is usually solved using iterative multigrid methods as it was done by Ziegler (2005) in NIRVANA. Nonetheless, this requires evaluating the SG potential at the numerical domain boundaries which could be done by multipole expansion. The second approach called integral method relies on the equivalent integral form:

| (24) |

This summation could be performed using a multipole expansion of , which is explained in detail by Wongwathanarat (2019). This second formulation is also compatible and appealing for some Adaptive Mesh Refinement (AMR) codes where one can take advantage of the tree structure: in a crude way, the self-gravity calculation is refined in the neighbourhood of a cell or regions of strong density gradients while long distance and slow varying density regions are averaged. This method is very efficient numerically and is used by Wünsch et al. (2018). Finally, fast Fourier methods could also be used for both formulations and different types of geometries (Müller & Chan, 2019; Moon et al., 2019; Krasnopolsky et al., 2021).

5.4 A possible multi-fluid approach

This is a development often considered to address the complex evolution of PPDs. Numerical simulations with two phase codes generally describe the solid material as a single population of spherical and solid particles. This is of course a major draw-back which biases the estimate of dust-gas friction and, consequently, the description of the disc evolution. A natural possibility to overcome this problem is to use a multi-fluid approach as, for example, the ones implemented by Hanasz et al. (2010), Benítez-Llambay et al. (2019) and Huang & Bai (2022).

However, in a view of a more realistic evolution, it is also important to describe the exchanges of mass and energy between the various fluids of the dispersed phase and between the two phases, gas and solids. This refers to the formation of planetesimals, a problem in which binary collisions and gas drag must be taken into account and result in coagulation/fragmentation or condensation/evaporation processes (Weidenschilling, 1980; Voelk et al., 1980; Weidenschilling & Cuzzi, 1993; Brauer et al., 2008; Birnstiel et al., 2011). An appropriate way to implement a multi-fluid approach could be to use a sectional method (Laurent & Massot, 2001; Laurent, 2002), which is well known in the context of the dispersed droplet evolution (Dufour & Villedieu, 2005) and can be interpreted in terms of the kinetic theory of gases (Tambour, 1985; Laurent & Massot, 2001). The advantages of this method are to ensure conservation of moment transfer between the various fluids and, possibly, to rely on the large developments of the numerical simulation of reactive flows (Drui, 2017).

5.5 Other possible improvements

In order to explain the angular momentum transport in PPDs, it is common to associate local turbulence, generated in various regions of the disc, with a pseudo-viscosity (Shakura & Sunyaev, 1973). Promising mechanisms generating turbulence include the magneto rotational instability (MRI) (Balbus & Hawley, 1991) and the VSI (Nelson et al., 2013; Stoll & Kley, 2014), among others. Such mechanisms encourage to include viscosity in the RoSSBi3D code for a realistic description of PPD. However, accounting viscous effects modifies the nature of the equations to be solved: Euler’s equations are hyperbolic whereas Navier-Stokes equations are parabolic. The presence of diffusion causes the Riemann solver to be inadequate for solving the Navier-Stokes equations, forcing an alternative numerical strategy. A possible solution could be to use an explicit Runge-Kutta-Legendre method (Meyer et al., 2014).

The confrontation of numerical models with dust thermal emission (Andrews, 2015) is key since it allows discriminating between physical processes operating on PPDs. Such comparison is made possible thanks to dust radiative transfer codes, see (Steinacker et al., 2013, and references therein). Therefore, an important step is to include radiative transfer in the post-processing pipeline from another existing code. We think that the best option is to use a free, robust, documented, and up to date existing code: the most attractive solutions seem to be RADMC-3D 2.0 or MCFOST.

6 Conclusions

In this paper, we presented the new RoSSBi3D code specifically designed for PPDs studies at 2D and 3D.

This FORTRAN 90 code solves the fully compressible inviscid Euler, continuity and energy conservation equations in polar coordinates for an ideal gas orbiting a central object.

Solid particles are treated as a pressureless fluid and interact with the gas through aerodynamic forces in the Epstein or Stokes regimes.

In 2D, particles can also interact with gas via SG.

The main features implemented in this code are:

Numerical methods

-

Finite volume scheme adapted to non-linear conservation laws and naturally to computational fluid dynamics problems

-

Second order Runge Kutta method for the time integration

-

An exact Riemann solver

Scheme improvement and stability

-

Sheared BC at radial boundaries for avoiding waves reflection and instabilities. Outflow BC in the vertical extents

-

A CFL-like condition respected in the whole numerical domain which ensures time integration stability

-

A balanced scheme adapted to PPDs

-

A parabolic interpolation based on deviations to the equilibrium solution

-

A MinMod flux limiter allowing to handle drastic density gradients

An optimised parallelism

-

A 3D domain decomposition for 3D calculations

-

An optimised code thanks to POP audit and good MPI practices

-

A satisfactory scalability up to 1280 processors (2D with SG) and 2560 processors (2D and 3D without SG)

We performed test cases and we retrieved expected results, which validated RoSSBi3D reliability. As future improvements, we propose the following implementations:

-

Viscosity at 2D and 3D

-

A 3D SG option based on the integral method and Fast Fourier Transforms

-

Output files compatible with RADMC-3D 2.0 or MCFOST to include radiative transfer

For 3D calculations, the code is accompanied by a visualisation tool based on Paraview. Finally, RoSSBi3D is open source, released under the terms of the CeCILL2 Licence, and accessible from its public repository: [email protected]:srendon/rossbi3d.git.

Acknowledgements.

S.R.R acknowledges Clément Baruteau for discussions and suggestions for a future coding of SG at 3D, Jean M. Favre for writing the Python programmable source used for 3D data visualisation in Paraview, and Jean-Charles Lambert for his technical support during the whole coding phase of the 3D version and optimisation of the 2D version. We would like to warmly acknowledge Stéphane Le Dizès for fruitfull discussions during the preparation of the paper and also for his help to find funding for part of the project. P.B thanks Serguei Rodionov at LAM for his help in initiating the first systematic treatments of the data using Python subroutines. This work was granted access to the HPC resources of IDRIS under the allocation A0090411982 made by GENCI. Centre de Calcul Intensif d’Aix-Marseille is acknowledged for granting access to its high performance computing resources. This research has made use of computing facilities operated by CeSAM data center at LAM, Marseille, France. This work was supported by the POP2 project - the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 824080 and by the Ministry of Education, Youth and Sports of the Czech Republic through the e-INFRA CZ (ID:90140). Co-funded by the European Union (ERC, Epoch-of-Taurus, 101043302). Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Research Council. Neither the European Union nor the granting authority can be held responsible for them.References

- Ahrens et al. (2005) Ahrens, J., Geveci, B., & Law, C. 2005, Visualization Handbook

- Andrews (2015) Andrews, S. M. 2015, PASP, 127, 961

- Balbus & Hawley (1991) Balbus, S. A. & Hawley, J. F. 1991, ApJ, 376, 214

- Barge et al. (2005) Barge, P., Inaba, S., Daniel, E., & Guillard, H. 2005, in SF2A-2005: Semaine de l’Astrophysique Française, ed. F. Casoli, T. Contini, J. M. Hameury, & L. Pagani, 767

- Barge et al. (2017) Barge, P., Ricci, L., Carilli, C. L., & Previn-Ratnasingam, R. 2017, A&A, 605, A122

- Barge et al. (2016) Barge, P., Richard, S., & Le Dizès, S. 2016, A&A, 592, A136

- Barge & Sommeria (1995) Barge, P. & Sommeria, J. 1995, A&A, 295, L1

- Baruteau & Masset (2008) Baruteau, C. & Masset, F. 2008, ApJ, 678, 483

- Benítez-Llambay et al. (2019) Benítez-Llambay, P., Krapp, L., & Pessah, M. E. 2019, ApJS, 241, 25

- Birnstiel et al. (2011) Birnstiel, T., Ormel, C. W., & Dullemond, C. P. 2011, A&A, 525, A11

- Brauer et al. (2008) Brauer, F., Dullemond, C. P., & Henning, T. 2008, A&A, 480, 859

- Courant et al. (1928) Courant, R., Friedrichs, K., & Lewy, H. 1928, Mathematische Annalen, 100, 32

- Dong et al. (2018) Dong, R., Liu, S.-y., Eisner, J., et al. 2018, ApJ, 860, 124

- Drui (2017) Drui, F. 2017, Theses, Université Paris-Saclay

- Dufour & Villedieu (2005) Dufour, G. & Villedieu, P. 2005, http://dx.doi.org/10.1051/m2an:2005041, 39

- Fryxell et al. (2000) Fryxell, B., Olson, K., Ricker, P., et al. 2000, ApJS, 131, 273

- Gheller et al. (2015) Gheller, C., Wang, P., Vazza, F., & Teyssier, R. 2015, in Journal of Physics Conference Series, Vol. 640, Journal of Physics Conference Series, 012058

- Goldreich & Narayan (1985) Goldreich, P. & Narayan, R. 1985, MNRAS, 213, 7P

- Hanasz et al. (2010) Hanasz, M., Kowalik, K., Wóltański, D., Pawłaszek, R., & Kornet, K. 2010, in EAS Publications Series, Vol. 42, EAS Publications Series, ed. K. Gożdziewski, A. Niedzielski, & J. Schneider, 281–285

- Huang & Bai (2022) Huang, P. & Bai, X.-N. 2022, arXiv e-prints, arXiv:2206.01023

- Inaba & Barge (2006) Inaba, S. & Barge, P. 2006, ApJ, 649, 415

- Inaba et al. (2005) Inaba, S., Barge, P., Daniel, E., & Guillard, H. 2005, A&A, 431, 365

- Johansen & Youdin (2007) Johansen, A. & Youdin, A. 2007, ApJ, 662, 627

- Klahr (2004) Klahr, H. 2004, ApJ, 606, 1070

- Klahr & Bodenheimer (2003) Klahr, H. H. & Bodenheimer, P. 2003, ApJ, 582, 869

- Krasnopolsky et al. (2021) Krasnopolsky, R., Martínez, M. P., Shang, H., Tseng, Y.-H., & Yen, C.-C. 2021, ApJS, 252, 14

- Laurent (2002) Laurent, F. 2002, Theses, Université Claude Bernard - Lyon I

- Laurent & Massot (2001) Laurent, F. & Massot, M. 2001, Combustion Theory and Modelling, 5, 537

- Li et al. (2000) Li, H., Finn, J. M., Lovelace, R. V. E., & Colgate, S. A. 2000, ApJ, 533, 1023

- Lin (2013) Lin, M.-K. 2013, MNRAS, 428, 190

- Lovelace et al. (1999a) Lovelace, R. V. E., Li, H., Colgate, S. A., & Nelson, A. F. 1999a, ApJ, 513, 805

- Lovelace et al. (1999b) Lovelace, R. V. E., Li, H., Colgate, S. A., & Nelson, A. F. 1999b, ApJ, 513, 805

- Manger et al. (2020) Manger, N., Klahr, H., Kley, W., & Flock, M. 2020, MNRAS, 499, 1841

- Manger et al. (2021) Manger, N., Pfeil, T., & Klahr, H. 2021, MNRAS, 508, 5402

- Meheut et al. (2010) Meheut, H., Casse, F., Varniere, P., & Tagger, M. 2010, A&A, 516, A31

- Meheut et al. (2012a) Meheut, H., Keppens, R., Casse, F., & Benz, W. 2012a, A&A, 542, A9

- Meheut et al. (2012b) Meheut, H., Yu, C., & Lai, D. 2012b, MNRAS, 422, 2399

- Message Passing Interface Forum (2021) Message Passing Interface Forum. 2021, MPI: A Message-Passing Interface Standard Version 4.0

- Meyer et al. (2014) Meyer, C. D., Balsara, D. S., & Aslam, T. D. 2014, Journal of Computational Physics, 257, 594

- Moon et al. (2019) Moon, S., Kim, W.-T., & Ostriker, E. C. 2019, ApJS, 241, 24

- Müller & Chan (2019) Müller, B. & Chan, C. 2019, ApJ, 870, 43

- Nelson et al. (2013) Nelson, R. P., Gressel, O., & Umurhan, O. M. 2013, MNRAS, 435, 2610

- Papaloizou & Pringle (1984) Papaloizou, J. C. B. & Pringle, J. E. 1984, MNRAS, 208, 721

- Pencil Code Collaboration et al. (2021) Pencil Code Collaboration, Brandenburg, A., Johansen, A., et al. 2021, The Journal of Open Source Software, 6, 2807

- Pérez et al. (2018a) Pérez, L. M., Benisty, M., Andrews, S. M., et al. 2018a, ApJ, 869, L50

- Pérez et al. (2018b) Pérez, L. M., Benisty, M., Andrews, S. M., et al. 2018b, ApJ, 869, L50

- Raettig et al. (2021) Raettig, N., Lyra, W., & Klahr, H. 2021, ApJ, 913, 92

- Regály & Vorobyov (2017) Regály, Z. & Vorobyov, E. 2017, A&A, 601, A24

- Rendon Restrepo & Barge (2022) Rendon Restrepo, S. & Barge, P. 2022, A&A, 666, A92

- Rendon Restrepo & Barge (2023) Rendon Restrepo, S. & Barge, P. 2023, A&A

- Richard et al. (2013) Richard, S., Barge, P., & Le Dizès, S. 2013, A&A, 559, A30

- Richard et al. (2016) Richard, S., Nelson, R. P., & Umurhan, O. M. 2016, MNRAS, 456, 3571

- Shakura & Sunyaev (1973) Shakura, N. I. & Sunyaev, R. A. 1973, A&A, 24, 337

- Stapelfeldt et al. (1999) Stapelfeldt, K. R., Watson, A. M., Krist, J. E., et al. 1999, ApJ, 516, L95

- Steinacker et al. (2013) Steinacker, J., Baes, M., & Gordon, K. D. 2013, ARA&A, 51, 63

- Stoll & Kley (2014) Stoll, M. H. R. & Kley, W. 2014, A&A, 572, A77

- Surville (2013) Surville, C. 2013, PhD thesis, Aix Marseille Université, thèse de doctorat dirigée par Barge, Pierre Astrophysique et Cosmologie Aix-Marseille 2013

- Tambour (1985) Tambour, Y. 1985, Combustion and Flame - COMBUST FLAME, 60, 15

- Tanga et al. (1996) Tanga, P., Babiano, A., Dubrulle, B., & Provenzale, A. 1996, Icarus, 121, 158

- Teyssier (2002) Teyssier, R. 2002, A&A, 385, 337

- Toro (2009) Toro, E. 2009, Riemann Solvers and Numerical Methods for Fluid Dynamics: A Practical Introduction (Springer Berlin, Heidelberg), XXIV, 724

- Villenave et al. (2020) Villenave, M., Ménard, F., Dent, W. R. F., et al. 2020, A&A, 642, A164

- Villenave et al. (2022) Villenave, M., Stapelfeldt, K. R., Duchêne, G., et al. 2022, ApJ, 930, 11

- Voelk et al. (1980) Voelk, H. J., Jones, F. C., Morfill, G. E., & Roeser, S. 1980, A&A, 85, 316

- Von Neumann & Richtmyer (1950) Von Neumann, J. & Richtmyer, R. D. 1950, Journal of Applied Physics, 21, 232

- Wei et al. (2020) Wei, F., Jin, L., Liu, J., Ding, F., & Zheng, X. 2020, Journal of the Brazilian Society of Mechanical Sciences and Engineering, 42, 250

- Weidenschilling (1977) Weidenschilling, S. J. 1977, MNRAS, 180, 57

- Weidenschilling (1980) Weidenschilling, S. J. 1980, Icarus, 44, 172

- Weidenschilling & Cuzzi (1993) Weidenschilling, S. J. & Cuzzi, J. N. 1993, in Protostars and Planets III, ed. E. H. Levy & J. I. Lunine, 1031

- Wendt et al. (2009) Wendt, J., Jr, J., Degroote, J., et al. 2009, Computational Fluid Dynamics: An Introduction (Springer Berlin, Heidelberg), 1–332

- Wongwathanarat (2019) Wongwathanarat, A. 2019, ApJ, 875, 118

- Wünsch et al. (2018) Wünsch, R., Walch, S., Dinnbier, F., & Whitworth, A. 2018, MNRAS, 475, 3393

- Youdin & Johansen (2007) Youdin, A. & Johansen, A. 2007, ApJ, 662, 613

- Youdin (2010) Youdin, A. N. 2010, in EAS Publications Series, Vol. 41, EAS Publications Series, ed. T. Montmerle, D. Ehrenreich, & A. M. Lagrange, 187–207

- Youdin & Goodman (2005) Youdin, A. N. & Goodman, J. 2005, ApJ, 620, 459

- Zhu & Baruteau (2016) Zhu, Z. & Baruteau, C. 2016, MNRAS, 458, 3918

- Ziegler (2004) Ziegler, U. 2004, Journal of Computational Physics, 196, 393

- Ziegler (2005) Ziegler, U. 2005, A&A, 435, 385

Appendix A Stationary gas flow at equilibrium

Assuming the flow of gas is axisymmetric, in Keplerian equilibrium in the radial direction, in hydrostatic equilibrium in the vertical direction and inviscid, we get the equilibrium solution:

| (25) |

where :

| (26) |

Appendix B Conservative form variables

Below we present the Euler variables, flux terms and source terms.

Euler Variables

| (27) |

Flux terms

| (28) |

Source terms

| (29) |

with:

| (30) |

where is a volume force.

Appendix C Data treatment and visualisation tools

RoSSBi3D produces data for meshes containing up to few millions of points which require efficient visualisation tools. At the early stages of development of this code, the interactive visualisation program GLnemo2444https://projets.lam.fr/projects/glnemo2/wiki was used. Despite many advantages it was later decided to resort to another visualisation software. The decision was based mostly on the need to make visualisation runs remotely and in parallel but also with the need to display up to 15 different physical variables, which are not native in GLnemo2.

In order to satisfy above requirements, it was decided to use Paraview (Ahrens et al. 2005).

This visualisation application allows data to be visualised remotely using the GPU/CPU resources of computing clusters, which improves rendering since the graphic card of the host station is not used.

Currently, the visualisation works thanks to a programmable source based on a Python script.

In order to fully benefit from Paraview capabilities, it would be necessary in the future to write the output files of the RoSSBi3D code as VTK files.