Robustness of Practical Perceptual Hashing Algorithms to Hash-Evasion and Hash-Inversion Attacks

Abstract

Perceptual hashing algorithms (PHAs) are widely used for identifying illegal online content and are thus integral to various sensitive applications. However, due to their hasty deployment in real-world scenarios, their adversarial security has not been thoroughly evaluated. This paper assesses the security of three widely utilized PHAs—PhotoDNA, PDQ, and NeuralHash—against hash-evasion and hash-inversion attacks. Contrary to existing literature, our findings indicate that these PHAs demonstrate significant robustness against such attacks. We provide an explanation for these differing results, highlighting that the inherent robustness is partially due to the random hash variations characteristic of PHAs. Additionally, we propose a defense method that enhances security by intentionally introducing perturbations into the hashes.

1 Introduction

Perceptual hashing, also known as robust hashing, is a content fingerprinting technique that generates similar binary hashes for perceptually similar contents and different hashes for different contents [1]. Perceptual hashing is useful in various applications such as content moderation, authentication, and detection. Numerous perceptual hash algorithms (PHAs) have been developed or deployed for practical applications [2]. Early examples include the algorithm used on YouTube to identify copyrighted material [3] and algorithms used in online image search engines of Google, Microsoft Bing, etc [4].

Recently, PHAs have gained more critical importance, particularly in responding to the requiremnt of reporting illicit content. For instance, in cases involving Child Sexual Abuse Media (CSAM), US media service providers are legally obliged to examine suspected illegal content and report it to a “Cyber Tip Line” operated by the National Center for Missing and Exploited Children (NCMEC). Microsoft’s PhotoDNA and Meta’s PDQ were immediately deployed for this purpose [5][6][7].

With a growing emphasis on user privacy, technologies like end-to-end encryption have become prevalent. This shift has led to the deployment of PHAs on the client side before data encryption [8]. For example, Apple has proposed to deploy NeuralHash on all its iOS devices [9]. However, this client-side deployment has raised significant concerns among the client users about their data privacy, which inadvertently arouses a widespread criticism regarding the security and privacy-preserving capabilities of PHAs [10]. Criticism has been further fueled by recent studies highlighting the vulnerability of PHAs to adversarial attacks and the potential for information leakage through hash bits [4][11][12].

Given the critical role PHAs play in sensitive applications, it is essential to ensure their security. Unfortunately, PHAs are often deployed hastily without sufficient security evaluation. This paper systematically assesses the adversarial robustness of three widely used PHAs—PhotoDNA, PDQ, and NeuralHash—against two types of attacks: hash-evasion attacks, aimed at enabling illicit content to evade detection, and hash-inversion attacks, aimed at reconstructing the original secret content from hashes. To support this evaluation, we introduce two new attack algorithms: a query-efficient black-box adversarial attack algorithm and a data-efficient hash inversion algorithm.

The major contributions of this paper are as follows.

-

•

This study demonstrates that PHAs exhibit notable robustness against blackbox adversarial evasion attacks when realistic constraints on image distortion, query budget, and hash difference are applied. This finding challenges previous studies that overlooked these constraints, leading to unjustified criticisms of PHAs.

-

•

This paper confirms the sufficient privacy-preserving capabilities of hash bits, countering claims from some existing studies that suggested hash bits disclose significant information about the content of the original images. The key point is how diverse the images are.

-

•

This research reveals that the security of PHAs is partially due to the unique property of random hash variations. Based on this observation, a defense method is proposed to enhance security by intentionally randomizing hash bits.

The paper is organized as follows: Section 2 provides a literature review, Section 3 formulates the attack and defense algorithms, Section 4 presents the experiment results, and Section 5 concludes the paper. More detailed experiment data can be found in the Appendix. The source code of the experiments, including ways to access binary executables of the PHAs, can be found at https://github.com/neddamj/nnhash.

2 Literature Review

Among the numerous PHA’s [2], this paper focuses on the following three major ones due to their widespread deployment in practice:

- •

- •

-

•

NeuralHash, released by Apple in 2021 with the goal of identifying CSAM [9], creates a more concise 96-bit hash for each input image.

The adversarial robustness of PhotoDNA, PDQ, and NeuralHash to either hash-evasion or hash-inversion attacks has not been thoroughly investigated, primarily due to their relative novelty and the absence of publicly available source code. For hash-evasion, [16] was the first to explore the robustness of NeuralHash, revealing its vulnerability to whitebox attacks, assuming the adversary has full access to the PHA. Additionally, [17] examined PDQ and demonstrated how adversarial images could be generated in the whitebox context. Furthermore, [18] utilized linear interpolation between two images to create adversarial examples.

Regarding blackbox hash-evasion attacks, where the adversary only has access to the input and output of the algorithm, [17] examined PDQ. It concluded that it was not robust against untargeted blackbox attacks. Similarly, [19] evaluated both PhotoDNA and PDQ, finding that they lacked robustness against both targeted and untargeted attacks. While these studies are closely related to the current paper, they overlooked the importance of adversaries’ constraints on image distortion, query budget, as well as hash difference levels. By all means, unconstrained blackbox attacks may always be successful, particularly in the untargeted setting.

Regarding hash-inversion attacks or privacy-preserving capabilities of PHAs, Microsoft initially claimed that the hashes generated by PhotoDNA were irreversible and could not be used to reconstruct the original images [20]. However, a blog post [12] challenged this assertion, suggesting that PhotoDNA hashes might contain enough information to synthesize images from the hashes. To the best of our knowledge, [12] is the only work addressing the reconstruction of original images from hashes, and it specifically focuses on PhotoDNA, which uses long hashes. Another method for investigating information leakage involves training classifiers to identify image content from hashes. Studies such as [16] and [18] demonstrated that NeuralHash hashes could indeed reveal information about their source images, such as identifying the object’s class. These findings contrast with those of [21], which examined PhotoDNA hashes and concluded that they were resistant to such classification attacks.

3 Attack Algorithms and Defense Method

In a perceptual hash system, a service provider scans a client’s unencrypted content, extracts hashes for each image using a perceptual hashing algorithm (PHA) and compares these hashes with a database of known illicit hashes. If a match is found, an alarm is raised or the unencrypted content is forwarded for further analysis [8]. In this context, we consider two types of adversaries: the client adversary, who aims to evade hash matching, and the service provider (or third-party) adversary, who seeks to reconstruct the client’s original images from collected hashes. This paper evaluates the security of three widely used PHAs—PhotoDNA, PDQ, and NeuralHash—against two specific types of attacks: blackbox hash-evasion attacks, which are employed by the client adversary, and hash-inversion attacks, which are utilized by the service provider adversary. Additionally, we propose a defense mechanism that can be integrated into the perceptual hashing system.

3.1 A Query-Efficient Adversarial Blackbox Attack Algorithm for Hash Evasion

This study focuses exclusively on blackbox adversarial attacks, rather than whitebox attacks, to reflect the practical scenario where PHA implementations are proprietary. In many cases, the details of PHA architectures and model weights are not publicly available due to their commercial nature. For example, Microsoft enforces non-disclosure agreements for PhotoDNA users, providing only an emulation of the algorithm. Similarly, although [22] released weights for the first-generation NeuralHash, Apple has since updated the algorithm, and the details of the latest version remain confidential.

Consider the original image with the hash value . The objective of the client adversary is to generate a very similar image whose new hash is sufficiently different from in untargeted attacks or sufficiently similar to some target hash in targeted attacks. We use normalized Hamming distance to evaluate the similarity of two hashes, and use per-pixel normalized norm to evaluate the similarity of two images.

Because the output of PHAs is hash bits, not logits or class decisions, we propose a joint soft-label hard-label attack (JSHA) algorithm that can drastically improve blackbox attack query efficiency. JSHA consists of two steps. The first step is to apply a soft-label blackbox attack to generate an initial adversarial image so that its hash is far away from for untargeted attacks or close to for targeted attacks. This step is formulated as the optimization

| (1) |

The optimization is conducted iteratively. In each iteration, the adversary queries the PHAs with its adversarial input to get the hash, and uses the hash to optimize the loss. After reaching the query number threshold or the hash distance threshold , this first step stops with output .

The second step applies a hard-label blackbox attack to reduce the image distortion while maintaining the hash distance obtained in the first step. Specifically, this step solves the following optimization

| (2) |

Starting from , the adversary queries the PHAs to get the hash and uses the queried hash to optimize (2) until the query number threshold or the threshold of is reached.

One of major advantages of this algorithm is high query efficiency. The first step is an unconstrained optimization and a large learning rate can be applied to get an initial adversarial sample quickly. In the second step, since we start from which is only a noisy version of , the convergence is fast.

(a) (b)

3.2 A Data-Efficient Hash-Inversion Attack Algorithm

GAN (generative adversarial network) was trained in [12] to invert CelebA images. However, the adversary may need more data-efficient hash-inversion attack algorithms because the training data may not be abundant. For this, we has developed new and more data-efficient generative models to reconstruct the original input images from the hashes. We designed a decoder-style deep model, similar to the Fast Style Transfer [23], to convert the -bit hashes to images. This model is mainly a combination of residual blocks depicted in Fig. 1(b), with fractionally-strided convolutions/deconvolutions for learned upsampling. The entire architecture is described in Fig. 1(a), where the left network is for the MNIST and CelebA dataset while the right network is used for the STL-10 dataset.

For a given training dataset, we first compute the hashes of each image offline and store the image-hash pairs. We then train the model outlined above to reconstruct the images as closely as possible by minimizing the mean-square-error (MSE) loss between the model output and the image from which the hash was generated.

3.3 A Defense Method based on Random Hash Perturbation

The adversaries rely on gradient estimation to solve the optimization problems. It is well known that hash bits of PHAs change slightly and randomly with respect to slight changes in input images. Based on existing work on random perturbation defense [24][25], we have the following proposition:

Proposition 1. Gradient estimation based on queried hash is not reliable due to the random variation of hash bits.

An outline of the proof is in the Appendix A.1. The random variation of hash bits makes the adversaries’ optimization struggle to converge, which will be demonstrated in the next section. Furthermore, considering this observation, we propose a defense method where the PHAs randomly invert -portion of the hash bits to guarantee sufficient hash randomness, where .

4 Experiment

4.1 Performance Metrics

We use the attack success rate for assessing the robustness against blackbox adversarial hash-evasion attacks. Given a set of original images with their corresponding hashes , the adversary generates adversarial images with hashes . The attack success rate is defined as

| (3) |

where is the indicator function, is the pre-set hash distance threshold and is the pre-set image distortion threshold. For the operator “”, is used for untargeted attacks, is for targeted attacks. Small or zero ASR() means a PHA is robust to an attack.

For the thresholds, we set . On the other hand, we should apply large enough to evaluate untargeted attacks because should be different from . It was found in [11] that most pairs of distinct images had . Therefore, we primarily use in (3) to determine the success of untargeted attacks. In contrast, we should use small enough for targeted attacks to make sure is sufficiently close to . Prior studies [11] indicate that many normal image edits would change an image’s hashes to the level of . Therefore, we mainly use in (3) for assessing the success of targeted attacks. This important parameter was unfortunately neglected by most existing adversarial robustness studies.

4.2 Experiment on Hash-Evasion Attack and Defense

In implementing our proposed JSHA algorithm, we modified three different soft-label attack algorithms: SimBA [28], NES [29], and ZOsignSGD [30], to use in Step One, and modified the hard-label attack algorithm HSJA [31] to use in Step Two. For instance, “SimBA+HSJA” means our JSHA with a modified SimBA as Step One and a modified HSJA as Step Two. Modifications included removing/changing constraints and adjusting hyper-parameters, etc. We compared the proposed JSHA with the direct application of the original SimBA, NES, and ZOsignSGD algorithms. We also compared it with existing works [17] and [19].

The experiments were conducted on 100 randomly selected images from the ImageNet validation dataset. Step One was executed for a total of 3000 queries unless the early stopping criterion was met. Step Two was run for an additional 2000 queries unless meeting the early stopping criterion. The main challenge for us was the CPU-based non-parallel PHA executables, which limited the number of images or queries we could process. In comparison, [19] only utilized 30 images in experiments.

For targeted hash-evasion attacks, experiment results are presented in Table 1 and Appendix A.3. With a focus on ASR(0.1), we observed that all targeted attacks failed, yielding ASR. To our knowledge, no literature has ever reported non-zero ASR, suggesting that all the PHAs are robust against blackbox targeted hash-evasion attacks.

| ZO- | ZOsign | |||||

| SimBA | NES+ | sign- | -SGD | |||

| ASR | SimBA | +HSJA | NES | HSJA | SGD | +HSJA |

| PH ASR(.1) | 0% | 0% | 0% | 0% | 0% | 0% |

| PD ASR(.1) | 0% | 0% | 0% | 0% | 0% | 0% |

| NH ASR(.1) | 0% | 0% | 0% | 0% | 0% | 0% |

| [19] PH ASR(.3) () | 83% | ( queries) | ||||

| [19] PD ASR(.3) () | 100% | ( queries) | ||||

For untargeted attacks, experiment results are presented in Table 2 and Appendix A.4. With a focus on ASR(0.3), we see that PDQ was robust against all adversarial attacks, with a maximum ASR of 1% only. It’s worth noting that although [17] achieved a much higher attack success rate against PDQ, it required significantly more queries. The results also demonstrated that our defense method was effective because it drastically reduced ASR(0.3) and made the PHAs robust.

| ZO- | ZOsign | Defense | Defense | |||||

| SimBA | NES+ | sign- | -SGD | +SimBA | +NES | |||

| ASR | SimBA | +HSJA | NES | HSJA | SGD | +HSJA | +HSJA | +HSJA |

| PH ASR(.3) | 92% | 27% | 21% | |||||

| PD ASR(.3) | 0% | 0% | 0% | 1% | 0% | 0% | 0% | 0% |

| NH ASR(.3) | 0% | 14% | 0% | 28% | 0% | 2% | 0% | 2% |

| [19] PH ASR(.3) 90%, (, HSJA with queries) | ||||||||

| [17] PD ASR(.3) 73%, (, up to queries) | ||||||||

4.3 Experiment on Hash-Inversion Attack and Defense

Experiments were conducted over three datasets: MNIST, CelebA, and STL-10. For each hashing algorithm and dataset, we trained the inversion network depicted in Fig. 1 for 50 epochs, with a batch size of 64 and an initial learning rate of 0.005. We utilized the MSE loss function with the AdamW optimizer and the cosine annealing learning rate scheduler. While MNIST and CelebA models were relatively easy to train, we encountered substantial challenges when training STL-10 models. We had to degrade STL-10 images to black-and-white in order to get meaningful reconstructed images.

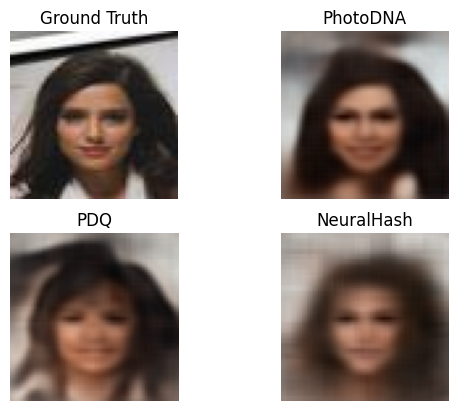

Some sample images are shown in Fig. 2 whereas major experiment results are left to Appendix A.5 to save space. In general, hash-inversion failed over STL-10 while some level of success could be seen in MNIST and CelebA over PhotoDNA hash only. The reason is that the MNIST and CelebA images have similar or regular formation. The reconstructed images tend to converge to such common formation when the hash is long enough. However, even in this case, the reconstructed CelebA images could not be used to recognize the original face.

The effectiveness of random hash perturbation defense can be clearly seen in Fig. 2(b). Even the slightest perturbation made the reconstructed image quite different from the original image.

(a) STL-10 image samples with NeuralHash, without defense

(b) CelebA image samples with PhotoDNA, under various levels of defense

5 Conclusions

This paper evaluates the security of three widely used perceptual hashing algorithms (PHAs): PhotoDNA, PDQ, and NeuralHash. Contrary to many existing studies, our findings show that these PHAs demonstrate notable robustness against both adversarial blackbox hash-evasion attacks and hash-inversion attacks. We attribute this robustness, in part, to the random variations inherent in the hash generation process. Building on this insight, we propose enhancing security by introducing additional randomization to the hash bits.

References

- [1] Mauro Barni, Patrizio Campisi, Edward J Delp, Gwenaël Doërr, Jessica Fridrich, Nasir Memon, Fernando Pérez-González, Anderson Rocha, Luisa Verdoliva, and Min Wu, “Information forensics and security: A quarter-century-long journey,” IEEE Signal Processing Magazine, vol. 40, no. 5, pp. 67–79, 2023.

- [2] Hany Farid, “An overview of perceptual hashing,” Journal of Online Trust and Safety, vol. 1, no. 1, 2021.

- [3] Sivic and Zisserman, “Video google: A text retrieval approach to object matching in videos,” in Proceedings ninth IEEE international conference on computer vision. IEEE, 2003, pp. 1470–1477.

- [4] Qingying Hao, Licheng Luo, Steve TK Jan, and Gang Wang, “It’s not what it looks like: Manipulating perceptual hashing based applications,” in Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, 2021, pp. 69–85.

- [5] Wayne A Logan, “Citizen searches and the duty to report,” Ohio St. LJ, vol. 83, pp. 939, 2022.

- [6] Tracy Ith, “Microsoft’s photodna: Protecting children and businesses in the cloud,” Retrieved from Microsoft News Center: https://news. microsoft. com/features/microsofts-photodna-protecting-children-and-businesses-in-the-cloud, 2015.

- [7] Antigone Davis and Guy Rosen, “Open-sourcing photo-and video-matching technology to make the internet safer,” Facebook Newsroom, 2019.

- [8] Anunay Kulshrestha and Jonathan Mayer, “Identifying harmful media in End-to-End encrypted communication: Efficient private membership computation,” in 30th USENIX Security Symposium (USENIX Security 21), 2021, pp. 893–910.

- [9] Jennifer Cobbe, “Data protection, eprivacy, and the prospects for apple’s on-device csam detection system in europe,” SocArXiv, 2021.

- [10] Harold Abelson, Ross Anderson, Steven M Bellovin, Josh Benaloh, Matt Blaze, Jon Callas, Whitfield Diffie, Susan Landau, Peter G Neumann, Ronald L Rivest, et al., “Bugs in our pockets: The risks of client-side scanning,” Journal of Cybersecurity, vol. 10, no. 1, pp. tyad020, 2024.

- [11] Sean McKeown and William J Buchanan, “Hamming distributions of popular perceptual hashing techniques,” Forensic Science International: Digital Investigation, vol. 44, pp. 301509, 2023.

- [12] Anish Athalye, “Inverting photodna,” https://anishathalye.com/inverting-photodna/, 2021.

- [13] Gannon Burgett, “Photodna lets google, fb and others hunt down child pornography without looking at your photos,” PetaPixel, August, 2014.

- [14] Charles Arthur, “Twitter to introduce photodna system to block child abuse images,” The Guardian, vol. 22, 2013.

- [15] Evan Klinger and David Starkweather, “phash: The open source perceptual hash library,” 2013.

- [16] Lukas Struppek, Dominik Hintersdorf, Daniel Neider, and Kristian Kersting, “Learning to break deep perceptual hashing: The use case neuralhash,” in Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, 2022, pp. 58–69.

- [17] Shubham Jain, Ana-Maria Cre\textcommabelowtu, and Yves-Alexandre de Montjoye, “Adversarial detection avoidance attacks: Evaluating the robustness of perceptual hashing-based client-side scanning,” in 31st USENIX Security Symposium (USENIX Security 22), 2022, pp. 2317–2334.

- [18] Jagdeep Singh Bhatia and Kevin Meng, “Exploiting and defending against the approximate linearity of apple’s neuralhash,” arXiv preprint arXiv:2207.14258, 2022.

- [19] Jonathan Prokos, Neil Fendley, Matthew Green, Roei Schuster, Eran Tromer, Tushar Jois, and Yinzhi Cao, “Squint hard enough: attacking perceptual hashing with adversarial machine learning,” in 32nd USENIX Security Symposium (USENIX Security 23), 2023, pp. 211–228.

- [20] Robert Grimm, “Putting the count back into accountability: An audit of social media transparency disclosures, focusing on sexual exploitation of minors,” arXiv preprint arXiv:2402.14625, 2024.

- [21] Muhammad Shahroz Nadeem, Virginia NL Franqueira, and Xiaojun Zhai, “Privacy verification of photodna based on machine learning,” in ,. 93y42, 2019.

- [22] A. Ygvar, “Apple neural hash to onnx,” https://github.com/AsuharietYgvar/ AppleNeuralHash2ONNX, 2021.

- [23] Justin Johnson, Alexandre Alahi, and Li Fei-Fei, “Perceptual losses for real-time style transfer and super-resolution,” in Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part II 14. Springer, 2016, pp. 694–711.

- [24] Zeyu Qin, Yanbo Fan, Hongyuan Zha, and Baoyuan Wu, “Random noise defense against query-based black-box attacks,” Advances in Neural Information Processing Systems, vol. 34, pp. 7650–7663, 2021.

- [25] Manjushree B Aithal and Xiaohua Li, “Mitigating black-box adversarial attacks via output noise perturbation,” IEEE Access, vol. 10, pp. 12395–12411, 2022.

- [26] Alain Hore and Djemel Ziou, “Image quality metrics: Psnr vs. ssim,” in 2010 20th international conference on pattern recognition. IEEE, 2010, pp. 2366–2369.

- [27] Richard Zhang, Phillip Isola, Alexei A Efros, Eli Shechtman, and Oliver Wang, “The unreasonable effectiveness of deep features as a perceptual metric,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 586–595.

- [28] Chuan Guo, Jacob Gardner, Yurong You, Andrew Gordon Wilson, and Kilian Weinberger, “Simple black-box adversarial attacks,” in International Conference on Machine Learning. PMLR, 2019, pp. 2484–2493.

- [29] Andrew Ilyas, Logan Engstrom, Anish Athalye, and Jessy Lin, “Black-box adversarial attacks with limited queries and information,” in International conference on machine learning. PMLR, 2018, pp. 2137–2146.

- [30] Sijia Liu, Pin-Yu Chen, Xiangyi Chen, and Mingyi Hong, “signsgd via zeroth-order oracle,” in International Conference on Learning Representations, 2018.

- [31] Jianbo Chen, Michael I Jordan, and Martin J Wainwright, “Hopskipjumpattack: A query-efficient decision-based attack,” in 2020 ieee symposium on security and privacy (sp). IEEE, 2020, pp. 1277–1294.

- [32] Martin Steinebach, “An analysis of photodna,” in Proceedings of the 18th International Conference on Availability, Reliability and Security, 2023, pp. 1–8.

Appendix A Appendix / supplemental material

A.1 Proof of Proposition 1 of Section 3.3

Without loss of generality, consider the optimization problem (1). A common approach involves utilizing randomly perturbed images to query the PHAs and then using the queried hashes to estimate the gradient as follows

| (4) |

where represents random perturbation [29]. Firstly, due to the nature of PHAs, overly small perturbations may not induce any change in hash. Hence, relatively large is required, but this renders the gradient estimation unreliable. Secondly, the hash randomly varies with the small perturbation . The random variation can be modeled as a noise term with variance . Following the analysis of [25], this noise is amplified by , leading to the gradient estimate being affected by a noise power , hence making it unreliable.

Due to the random nature of PHAs, it is almost impossible to limit the change of hash to just one or a few hash bits by perturbations. Practical is usually around . may be around . As per [25], the signal-to-noise-ratio (SNR) of the gradient estimation can fall well below dB, which makes the adversary’s optimization not convergent or converge extremely slowly. This implies that PHAs are theoretically robust to blackbox attacks under the query budget constraint.

A.2 Robustness Problem of PHAs to Normal Image Editing

One of the special issue of PHAs is that they seem not robust to some normal imaging editing methods, for which [32] examined PhotoDNA, while [11] investigated PDQ and NeuralHash. Both studies demonstrated that PHAs were robust to certain edits like compression but vulnerable to others like cropping. While such prior works have already extensively investigated image editing on PHAs, a new evaluation is necessary due to ongoing algorithm updates. Notably, NeuralHash has received at least one update since its first release. This study addresses the gap in knowledge by evaluating the robustness of the updated algorithms and, more importantly, by providing the first comparison of the three PHAs.

Assume the original input to the PHA is an image , and the true output is an -bit hash , where denotes the hashing algorithm. The client adversary applies standard image editing operations over to obtain an adversarial image with a new -bit hash . A successful attack means is sufficiently different from .

We experimented with four image editing operations that can keep the original high image quality: JPEG compression with random quality factors, resizing images with random scaling factors, filtering images with the vignette filter, or rotating images to random degrees. We calculated the hashes and of the images before and after the editing, and derived their distance . Using 1000 images from the ImageNet validation dataset, we obtained ASR for various based on (3), wherein the condition was removed by setting to infinity.

Experiment results are presented in Tables 3. The data for ASR(.3) is highlighted in black because is the threshold we suggested to focus on. It is evident that all three PHAs were robust to compression and resizing, but not to filtering and rotation. In conjunction with the findings in [11][16], the PHAs are robust to many standard image editing operations except the following:

-

•

PhotoDNA is not robust to: filtering, rotating, resizing, mirroring, bordering, cropping;

- •

-

•

NeuralHash is not robust to: filtering, rotating, mirroring [11].

NeuralHash was the most robust among the three. Surprisingly, the newest edition of NeuralHash that we experimented with was still not robust to filtering, rotating, and mirroring, similar to the first edition experimented in [11][16]. Nevertheless, we believe this robustness problem can be mitigated by training the model to be invariant to such standard operations with data augmentations.

| ASR | Compress | Resize | Filter | Rotate |

| PhotoDNA ASR(.1) | 3% | 99% | ||

| PhotoDNA ASR(.2) | 55% | |||

| PhotoDNA ASR(.3) | 0% | 0% | 94% | 100% |

| PhotoDNA ASR(.4) | 0% | |||

| PDQ ASR(.1) | 0% | 27% | 88% | 100% |

| PDQ ASR(.2) | 0% | 0% | 67% | 100% |

| PDQ ASR(.3) | 0% | 0% | 44% | 100% |

| PDQ ASR(.4) | 0% | 0% | 27% | 100% |

| NeuralHash ASR(.1) | 0% | 22% | 96% | 100% |

| NeuralHash ASR(.2) | 0% | 0% | 82% | 100% |

| NeuralHash ASR(.3) | 0% | 0% | 57% | 96% |

| NeuralHash ASR(.4) | 0% | 0% | 12% | 31% |

A.3 Extra Experiment Data of Targeted Hash-Evasion Attacks in Section 4.2

Besides the data shown in Section 4.2 and Table 1, more experiment data of targeted attacks are presented in Table 4. We find that the robustness is extremely strong because of ASR across all . The only exception was NeuralHash, which exhibited ASR(0.4) of 43% with SimBA. However, this isn’t indicative of successful adversarial attacks; rather, it’s due to numerous hashes generated by NeuralHash having as low as 0.3. Under NeuralHash, many images are inherently adversarial when evaluated at .

Therefore, contrary to the prior claims that PHAs were not robust against targeted attacks [19], our findings demonstrate the strong robustness of all PHAs against targeted blackbox attacks. This is significant to the practical application of PHAs because the client adversary needs targeted attacks to ensure that its adversarial hashes do not inadvertently match illicit hashes in the database. The strong robustness means it is hard for the adversary to undertake either untargeted or targeted attacks without being caught.

| ZO- | ZOsign | |||||

| SimBA | NES+ | sign- | -SGD | |||

| ASR | SimBA | +HSJA | NES | HSJA | SGD | +HSJA |

| PH ASR(.1) | 0% | 0% | 0% | 0% | 0% | 0% |

| PH ASR(.2) | 0% | 0% | 0% | 0% | 0% | 0% |

| PH ASR(.3) | 0% | 0% | 0% | 0% | 0% | 0% |

| PH ASR(.4) | 1% | 2% | 0% | 0% | 0% | 0% |

| PD ASR(.1) | 0% | 0% | 0% | 0% | 0% | 0% |

| PD ASR(.2) | 0% | 0% | 0% | 0% | 0% | 0% |

| PD ASR(.3) | 0% | 0% | 0% | 0% | 0% | 0% |

| PD ASR(.4) | 0% | 0% | 0% | 0% | 0% | 0% |

| NH ASR(.1) | 0% | 0% | 0% | 0% | 0% | 0% |

| NH ASR(.2) | 0% | 0% | 0% | 0% | 0% | 0% |

| NH ASR(.3) | 0% | 0% | 0% | 0% | 0% | 0% |

| NH ASR(.4) | 43% | 11% | 0% | 0% | 0% | 0% |

| [19] PH ASR(.3) () | 83% | ( queries) | ||||

| [19] PD ASR(.3) () | 100% | ( queries) | ||||

A.4 Extra Experiment Data of Untargeted Blackbox Hash-Evasion Attacks in Section 4.2

A.4.1 Experiment data and sample images of untargeted attacks without defense

Besides the experiment data shown in Section 4.2 and Table 2, extra experiment data are presented in Table 5. Sample adversarial images generated with our proposed JSHA with various pre-set threshold are depicted in Fig. 3 and Fig. 4, with their corresponding distortion metrics listed in Table 6. Notably, the images with were deemed invalid adversarial images as their distortion was not under the threshold , resulting in extremely noisy images. Similarly, for PDQ, the adversarial images with and were also invalid.

Therefore, contrary to previous studies suggesting that PHAs were not robust against untargeted attacks, we found that some PHAs like PDQ and NeuralHash could be considered as robust if realistic constraints regarding image distortion and query budget are taken into account.

| ZO- | ZOsign | |||||

| SimBA | NES+ | sign- | -SGD | |||

| ASR | SimBA | +HSJA | NES | HSJA | SGD | +HSJA |

| PH ASR(.1) | ||||||

| PH ASR(.2) | ||||||

| PH ASR(.3) | 92% | |||||

| PH ASR(.4) | ||||||

| PD ASR(.1) | 0% | 45% | 0% | 64% | 0% | 8% |

| PD ASR(.2) | 0% | 1% | 0% | 10% | 0% | 0% |

| PD ASR(.3) | 0% | 0% | 0% | 1% | 0% | 0% |

| PD ASR(.4) | 0% | 0% | 0% | 0% | 0% | 0% |

| NH ASR(.1) | 4% | 88% | 0% | 99% | 0% | 94% |

| NH ASR(.2) | 2% | 23% | 0% | 77% | 0% | 42% |

| NH ASR(.3) | 0% | 14% | 0% | 28% | 0% | 2% |

| NH ASR(.4) | 0% | 0% | 0% | 0% | 0% | 0% |

| [19] PH ASR(.3) 90%, (, HSJA with queries) | ||||||

| [17] PD ASR(.3) 73%, (, up to queries) | ||||||

| Measure | Threshold | PhotoDNA | PDQ | NeuralHash |

|---|---|---|---|---|

| 0.02 | 0.07 | 0.02 | ||

| 0.02 | 0.11 | 0.04 | ||

| 0.05 | 0.14 | 0.07 | ||

| 0.17 | 0.19 | 0.19 |

(a) Original image .

PhotoDNA PDQ NeuralHash

(b) Adversarial images .

PhotoDNA PDQ NeuralHash

A.4.2 Experiment data of untargeted attacks under proposed defense

Observing that some untargeted attack algorithms such as SimBA+HSJA achieved relataively high ASR(0.3) over some PHAs such as PhotoDNA, we experimented with the hash perturbation defense method proposed in Section 3.3. The level of our random hash perturbation was denoted as , where , which stand for the ratio of the hash bits that were randomly flipped.

Besides the experiment results presented in Section 4.2 and Table 2, more experiment data are presented in Tables 7, 8, and 9. It can be seen that random perturbation reduced ASR() drastically, which means the attacks became much less successful or even failed. Even a slight perturbation with could prevent most of the attacks.

| ZOsign | |||

| SimBA | NES+ | -SGD | |

| ASR | +HSJA | HSJA | +HSJA |

| PH q =0.1 | 100% | ||

| PH q =0.2 | 100% | ||

| PH q =0.3 | 100% | ||

| PD q =0.1 | 0% | 0% | 0% |

| PD q =0.2 | 0% | 0% | 0% |

| PD q =0.3 | 0% | 0% | 0% |

| NH q =0.1 | 21% | 18% | 15% |

| NH q =0.2 | 20% | 18% | 15% |

| NH q =0.3 | 21% | 18% | 15% |

| ZOsign | |||

| SimBA | NES+ | -SGD | |

| ASR | +HSJA | HSJA | +HSJA |

| PH q =0.1 | 98% | 99% | 95% |

| PH q =0.2 | 98% | 99% | 95% |

| PH q =0.3 | 98% | 99% | 95% |

| PD q =0.1 | 0% | 0% | 0% |

| PD q =0.2 | 0% | 0% | 0% |

| PD q =0.3 | 0% | 0% | 0% |

| NH q =0.1 | 5% | 5% | 2% |

| NH q =0.2 | 5% | 6% | 3% |

| NH q =0.3 | 5% | 6% | 2% |

| ZOsign | |||

| SimBA | NES+ | -SGD | |

| ASR | +HSJA | HSJA | +HSJA |

| PH q =0.1 | 27% | 21% | 5% |

| PH q =0.2 | 27% | 21% | 5% |

| PH q =0.3 | 27% | 21% | 5% |

| PD q =0.1 | 0 | 0% | 0% |

| PD q =0.2 | 0% | 0% | 0% |

| PD q =0.3 | 0% | 0% | 0% |

| NH q =0.1 | 0% | 2% | 0% |

| NH q =0.2 | 0% | 2% | 0% |

| NH q =0.3 | 0% | 0% | 0% |

A.5 Extra Experiment Results of Hash-Inversion Attacks in Section 4.3

A.5.1 Experiment data and sample images of hash-inversion attacks without defense

Besides the experiment sample images presented in Section 4.3 and Fig. 2, a complete set of experiment data of image reconstruction quality are in Table 10. In addition, Fig. 5 shows sample images of MNIST dataset reconstruction, Fig. 6 shows sample images of STL-10 dataset reconstruction, whereas Fig. 7 shows sample images of CelebA dataset reconstruction. All these experiment results were obtained without the defense being applied.

Generally, the hash-inversion attacks worked with some success when there was a lot of regularity in the dataset (e.g., MNIST and CelebA). In this case, the hash-inversion algorithm gave an image of such regularity only. The longer hash values leaked more information and allowed better hash-inversion. Nevertheless, even in the best case, it was still impossible to match the reconstructed human faces with the true faces in the CelebA images.

When the dataset was diverse (e.g., STL-10), we were not able to learn any meaningful inversions. This demonstrated that the PHAs were robust against hash-inversion attacks in practical applications. In addition, PHAs with smaller number of output bits were always preferable because natural images were not very standardized, so the smaller number of bits could help to prevent information leakage/hash inversion.

The reason for [12] to draw the false conclusion of successful hash-inversion was because only CelebA images were used over the long PhotoDNA hashes, and the authors overlooked the fact that the faces can not be discriminated based on the reconstructed images.

| Dataset | Hash | SSIM | LPIPS | |

|---|---|---|---|---|

| PH | ||||

| MNIST | PD | - | - | - |

| NH | ||||

| PH | ||||

| CelebA | PD | |||

| NH | ||||

| PH | ||||

| STL-10 | PD | |||

| NH |

(a) PhotoDNA. Left: (.14, .83, .05). Right: (.12, .86, .07)

(b) NeuralHash. Left: (.26, .61, .10). Right: (.34, .52, .14)

(a) Using PhotoDNA Hash bits

(b) Using PDQ Hash bits

(c) Using NeuralHash hash bits

(a) Our model. {, SSIM, LPIPS} {.14, .65, .34} (PhotoDNA),

{.15, .60, .36} (PDQ), {.20, .40, .50} (NeuralHash)

(b) Our model. {, SSIM, LPIPS} {.17, .63, .34} (PhotoDNA),

{.21, .50, .34} (PDQ), {.20, .47, .45} (NeuralHash)

(c) Compare images generated by our model and [12].

A.5.2 Experiment data hash-inversion attacks under the proposed defense

To mitigate the potential hash inversion attacks over regular images, we experimented with the proposed random hash perturbation defense algorithm. Some sample images were shown in Fig. 2(b) and explained briefly in Section 4.3. Extra experiment data are shown in Tables 11, 12 and 13.

Compare these data with those in Table 10, it is clear that as we increased the level of perturbation to the hash bits, the quality of reconstructed images decreased as expected. Therefore, the random perturbation defense does help to defend against hash-inversion attacks.

| Dataset | Hash | SSIM | LPIPS | |

|---|---|---|---|---|

| PH | ||||

| STL-10 | PD | |||

| NH |

| Dataset | Hash | SSIM | LPIPS | |

|---|---|---|---|---|

| PH | ||||

| STL-10 | PD | |||

| NH |

| Dataset | Hash | SSIM | LPIPS | |

|---|---|---|---|---|

| PH | ||||

| STL-10 | PD | |||

| NH |