Robust Optimization for Quantum Reinforcement Learning Control using Partial Observations

Abstract

The current quantum reinforcement learning control models often assume that the quantum states are known a priori for control optimization. However, full observation of quantum state is experimentally infeasible due to the exponential scaling of the number of required quantum measurements on the number of qubits. In this paper, we investigate a robust reinforcement learning method using partial observations to overcome this difficulty. This control scheme is compatible with near-term quantum devices, where the noise is prevalent and predetermining the dynamics of quantum state is practically impossible. We show that this simplified control scheme can achieve similar or even better performance when compared to the conventional methods relying on full observation. We demonstrate the effectiveness of this scheme on examples of quantum state control and quantum approximate optimization algorithm. It has been shown that high-fidelity state control can be achieved even if the noise amplitude is at the same level as the control amplitude. Besides, an acceptable level of optimization accuracy can be achieved for QAOA with noisy control Hamiltonian. This robust control optimization model can be trained to compensate the uncertainties in practical quantum computing.

I Introduction

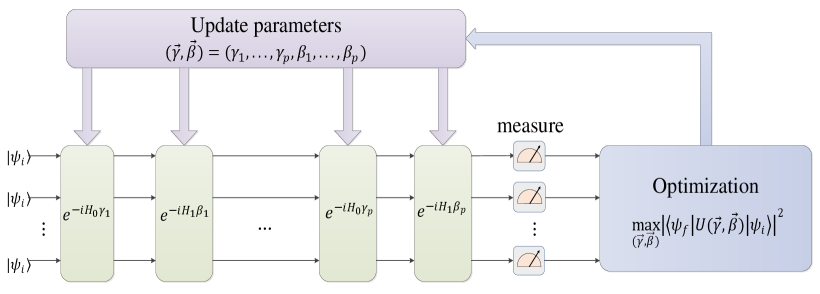

Quantum control is fundamental for quantum communication and scalable quantum computation[1, 2]. State steering of quantum systems [3, 4, 5, 6, 7, 8] and Quantum Approximate Optimization Algorithm (QAOA) [9, 10, 11] are two major applications of quantum control. These control tasks are often achieved via the application of a series of unitary transformations driven by control Hamiltonians. For example, QAOA switches between two noncommuting Hamiltonians to ensure the universal controllability of quantum states, and the control durations are used to parameterize the quantum control actions (Fig. 1).

However, noise exists in all parts of a quantum system [12], which may have an negative impact on control precision. One of the most common types of noise is the Hamiltonian uncertainty, which means the Hamiltonian may be perturbed as , with being the unknown perturbing Hamiltonian and being the magnitude of perturbation. Previous works have studied the robust control against this kind of uncertainty, such as the gradient-based methods with robust optimization [13, 14, 15]. Alternatively, Reinforcement Learning (RL) algorithms have also been applied to solve the robust quantum control problem [3, 4, 5, 6, 12, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26]. During the training process, the agent learns to maximize the reward by measuring the state of the quantum system during, or at the end of the process and taking corresponding control actions.

All the aforementioned algorithms have made use of the full information of the quantum states, which is not available in practice unless a large number of quantum measurements are made. Most of the existing works have obtained the states for optimization by classically simulating the system dynamics, which is clearly not scalable. In essence, the RL control model for noisy quantum systems is expected to be trained and implemented without knowing the underlying dynamical model of the system and noise, which makes it scalable and adaptive to noise. To do this, RL has to rely on measurements of the state which requires huge amount of resources. Therefore, a simplified measurement scheme will hasten the application of RL control on near-term quantum devices. Although a few works [27, 24] have considered using partial observations to reduce the resource requirement for the implementation of RL in quantum control tasks, the rewards in these models are still defined in terms of the state fidelity or the expectation of a target Hamiltonian, which still requires an enormous number of quantum measurements to determine the intermediate states. Besides, partial observability can also stem from noisy measurements of the quantum states, which is similar to noisy sensor readings in the classical case. For example, the RL-enhanced QAOA discussed in [27] made use of noisy measurements on the full set of observables and achieved approximation ratios between 0.8 and 0.93, which are taken as acceptable solutions.

Inspired by classical RL algorithms based on Partially Observable Markov Decision Process (POMDP) [28, 29, 30, 31, 32], we propose an RL scheme that uses only partial observations for control decision making and in the meantime avoids using any reward function that employs additional quantum measurements. During the control process, the agent receives partial observations about the current state of the quantum system, and then an optimal action is determined solely based on these observations. As a result, there is no need to estimate the reward with additional quantum measurements, which significantly reduces the measurement and computational cost. We demonstrate this algorithm on two illustrating quantum robust control problems, by imposing noise perturbation to the control Hamiltonian.

This paper is organized as follows. Section II provides a brief introduction to the control model and the definition of partial observations. Section III introduces the quantum RL algorithm with partial observations. Section IV presents the numerical results on single-qubit and multi-qubit systems. Section V concludes the paper.

II Preliminaries

II.1 Control Model

Consider the Hilbert space . The -qubit pure quantum state is defined as a complex-valued unit vector in , where is the number of qubits. Starting from an initial state , we apply the following control sequence

| (1) |

where and are noncommuting control Hamiltonians and is the control depth. For quantum state transfer problem, the objective is to choose the correct control actions to steer the state from to a target . The existing quantum RL control schemes use the following state fidelity

| (2) |

to define the reward at each intermediate step , which requires a huge amount of quantum measurements or accurate simulation of the system dynamics. In contrast, the intermediate states will only be partially observed for the implementation of RL in this paper.

For QAOA, the control scheme is similar as shown in Fig. 1, except that the objective function may be slightly different.

II.2 Bloch Sphere Representation of Quantum States

Since the pure quantum state is represented by a complex-valued vector in , the space of quantum states can be visualized as a generalized unit sphere called Bloch sphere. Consequently, the coordinates for each state can be calculated by projecting the state vector onto an orthogonal basis. Denote the single-qubit Pauli operators as

| (3) |

and the basis states of a single qubit as

| (4) |

It is clear that the Pauli operators span the space of one-qubit states, and thus the coordinates of a state vector on the Bloch sphere can be derived by

| (5) |

with . It should be noted that Eq. (5) is the mathematical formulation of quantum measurements, with the Pauli operators being the measurement operations and being the state to be measured. are the corresponding measurement results which are obtained by averaging the measured outcomes on a large number of copies of . The quantum state is fully observable if all three observations in Eq. (5) can be made.

The coordinates of multi-qubit states can be defined in a similar way. Denote . The coordinates of a multi-qubit state are given by

| (6) |

except for . Hence, the dimension of an -qubit pure state vector is . In this paper, partial observations are made on a subset of these generalized Pauli operators.

III Quantum RL Algorithm with Partial Observation

III.1 Model Description

First, we introduce the quantum RL control scheme of this paper.

-

•

State. The quantum state is defined by the coordinate vector on the Bloch sphere. Due to the noise and uncertain environments, the agents may only receive partial observations of the current state.

To be more specific, according to the control model (1), the quantum state evolves as

(7) Due to the unknown perturbations and , the quantum state at each time step is hidden to the agent unless a full observation is conducted.

-

•

Partial Observation. The quantum observation is made by applying quantum measurements to the states with respect to a subset of generalized Pauli operators, which will give the estimates for only a part of the coordinates of the state. Alternatively, the full set of coordinates may be observed, with a fraction of the observations being single-shot or extremely noisy.

-

•

Reward. As depicted in Fig. 2, the reward for optimizing the quantum control action is defined as the reduced distance between the partially observed coordinates of the current state and target state on the Bloch sphere, which is calculate by .

-

•

Action. The action is chosen from a continuous interval, representing the values of the control durations (or angles) . Similar to the classical RL, the actor generates the action through an actor-critic framework given the observations as input. After that, the action is used to parameterize the next unitary control.

The general framework for the quantum RL control is depicted in Fig. 3. At the time step , the agent receives the partial observation which allows us to calculate the reward . Here we use a done flag to mark the end of each trajectory. If , the done flag is set to 1, otherwise it is set to 0. After taking the action , the experience is sent into the replay buffer which records the historical data. The parameters of the agent are optimized by sampling mini-batches from the replay buffer. More details on POMDP can be found in Appendix. A.

III.2 The Implementation of Robust Control using Memory-based TD3 framework

Any established RL-based frameworks can be used to train the agent as defined in Fig. 3. Here we adopt the TD3 deep neural networks from [33]. TD3 is based on the commonly-used actor-critic structure as depicted in Fig. 4, which involves a total of two actor networks and four critic networks. TD3 is said to be able to solve the problem of overestimating the Q-value. During the training process, each critic in the predict network is optimized to minimize the mean-square-error between the predicted and the estimated target . The pseudo-code that implements the quantum robust control using TD3 is shown in Algorithm III.2.

Algorithm 1 Pseudo-code for Quantum Robust Control with Partial Observations

Since any RL-based frameworks can be readily used to implement the proposed algorithm of this paper as an alternative, we do not intend to expand on the design of the deep neural network structures of TD3. Further details on the exact implementation of the neural networks can be found in Appendix. B and [33].

In particular, step 6 in Algorithm III.2 will be replaced by real quantum control in practical implementation on quantum hardware. In that case, Algorithm III.2, which is solely based on partial measurements, can still work with unknown perturbations and noises. However, if prior knowledge on the source of the perturbation and noise is available, the RL control model can be pretrained with classical simulations, which will provide significant cost reduction since experimenting on quantum hardware is very expensive at present.

IV Numerical Experiments

In this section, the performances of the partially observed RL algorithm are first demonstrated on the robust state control problem of single- and multi-qubit systems. Then we also test the algorithm on multi-qubit QAOA.

IV.1 Single-qubit Case

For single-qubit robust control, the number of control steps is chosen to be 20. We adopt the experimental setup from [16], in which the initial and target states are given by

| (8) |

and the control Hamiltonians are given by

| (9) |

The perturbations and to and are randomly generated for each trajectory of training. That is, at the beginning of each trajectory of training, we randomly generate the diagonal elements of and from a uniform distribution . The real and imaginary parts of the elements of the upper triangle of and are sampled from as well. The lower triangle is then generated as the complex conjugate of the upper triangle to guarantee that the perturbed Hamiltonian is Hermitian. is a positive parameter that controls the magnitude of perturbation. The action values are confined within , and the initial values of actions for each trajectory is uniformly sampled from . The mini-batch size is set as and the maximal length of history is set as for the simulations. The parameters of the agent are optimized using the Adam optimizer with a learning rate of for actor and critics. Also, we set in the reward function. We assume that the partial observations are made on the observables and . Therefore, the observations obtained at time step are calculated by .

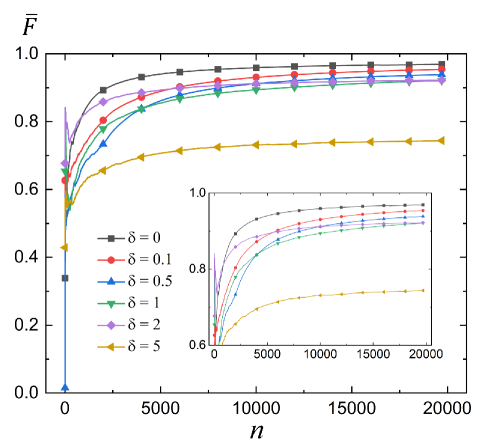

The average fidelities between the initial and target states during the training are shown in Fig. 5(a). The model is trained for 20000 times, corresponding to 20000 complete trajectories. As increases, the average

| Algorithms | Average Fidelity | Standard Deviation |

| SGD () | 0.950333 | 0.072376 |

| Krotov () | 0.989746 | 0.021458 |

| Deep Q Learning () | 0.991249 | 0.081046 |

| Policy Gradient () | 0.999562 | 0.002871 |

| Our Method () | 0.998972 | 0.001112 |

| Our Method () | 0.989171 | 0.014606 |

| Our Method () | 0.979764 | 0.039399 |

| Our Method () | 0.973757 | 0.088222 |

fidelities show an obvious decrease due to the random uncertainties in the control Hamiltonian. Particularly, the fidelities averaged over a local epoch of trajectories are more useful to demonstrate the final performance of the algorithm. In Fig. 5(b), the fidelities are calculated after every 200 trajectories, and we can see that the average fidelity can reach above 0.98 when or . The ultimate fidelity with is still higher than 0.96. If we increase to , which makes the amplitude of perturbation approximately equal to the amplitude of control Hamiltonians, the algorithm can achieve an average fidelity between 0.93 and 0.94. It should be noted that this noise level is already much larger than the actual noise level in quantum computing systems.

Next, we perform additional tests to demonstrate the generalizability of our method. In Fig. 6(a), the agents are trained with , respectively. Then the parameters of the agents are fixed, and the performances of the models are tested with different on the same initial and target states. An interesting observation is that models trained with higher level of noise () show better generalizability than models trained with little or no noise (). For example, when , the test fidelity can still reach above 0.9 with any that is larger than 0.3. In contrast, when , the test fidelity quickly drops below 0.9 before . This observation suggests that adding a certain amount of noise during training is beneficial for improving the robustness and generalizability of the model.

We have also tested the performance of the trained model on different initial and target states. In Fig. 6(b), the agent is trained with , and pairs of initial and target states are randomly generated for test by

| (10) |

with

| (11) |

The test experiments are still conducted with . It is clearly that the trained model works well with unseen initial and target states, with more than 32500 pairs of states having achieved a final fidelity above 0.95.

Our method is then compared to other commonly-used algorithms in Table 1 under the same experimental setup with full observability. These algorithms consist of two gradient-based methods: stochastic gradient descent (SGD) [34], Krotov algorithm [35] and two commonly-used RL algorithms: deep Q-learning [4] and deep policy gradient [12, 19]. SGD is one of the simplest gradient-based optimization algorithms, in which the control is updated using the approximated gradient of the cost function based on random batches. In Krotov algorithm, the initial state is firstly propagated forward to obtain the evolved state. Then the evolved state is projected to the target state, defining a co-state that encapsulates the mismatch between the two. Finally, the co-state is propagated backward to the initial state, during which the controls are updated. As this process converges, the co-state becomes identical to the target state. For comparison, each algorithm has been run 100 times to calculate the average fidelity without assuming any noise or Hamiltonian uncertainty, with codes adopted from [19]. The achieved fidelity of our method () is higher than those of other algorithms including deep Q-Learning when noise is not taken into consideration. If noise is added to the control Hamiltonian of our method, e.g. or , the performance of our method is still better than the conventional SGD and Krotov methods and similar to deep Q-learning and policy gradient method, which did not consider any noise effect. These numerical results demonstrate that our method can at least achieve the same level of performance in comparison with the state-of-the-art algorithms using only partial observations while being adaptive to noise.

It should be noted that we have , or that the control landscape is a unit sphere. As a result, the estimates of and can be used to jointly determine the value of which is unobserved. The RL model may have learned to infer this hidden relation with partial observations to promote the control precision.

IV.2 Two-qubit Case

Consider the commonly-used spin-chain example for multi-qubit control [24], in which the control Hamiltonians are defined as

| (12) |

and

| (13) |

where is the coupling strength. Then initial state is given by

| (14) |

Without loss of generality, we let and for the demonstration. In this case, the control Hamiltonians and initial state are written as

| (15) |

and

| (16) |

| Algorithms | Average Fidelity | Standard Deviation |

| Krotov () | 0.978592 | 0.068521 |

| Deep Q Learning () | 0.990445 | 0.060376 |

| Policy Gradient () | 0.998939 | 0.003715 |

| Our Method () | 0.984726 | 0.018958 |

| Our Method () | 0.981503 | 0.019187 |

| Our Method () | 0.965358 | 0.022574 |

| Our Method () | 0.936025 | 0.027871 |

Here the target state is chosen as the ground state of

| (17) |

The observations at time step are made as follows

| (18) |

The numerical results in Fig. 7 show that the RL algorithm with 6 observations can achieve high-fidelity control against a low level of perturbations to the Hamiltonian. As the amplitude of the perturbations increases, the average fidelities will decrease. In particular, the fluctuations in the ultimate fidelities have become more severe with , which can be seen from Fig. 7(b). This may be due to the fact that the number of randomly perturbed elements of the control Hamiltonians is growing exponentially with the number of qubits, which indicates an exponential growth in the uncertainties of the Hamiltonians. In addition, the number of partial observations for two qubits is only 6, while the dimension of the state space is . In contrast, 3 observations are enough for the complete determination of a single-qubit state, and thus partial observation with 2 observables will make the single-qubit algorithm more tolerant of a large .

We also test the trained agents with different noise levels, as shown in Fig. 8. Since the number of observations is too few to counteract the increased effects of noise in the two-qubit system, the agents suffer from an abrupt performance deterioration if is much larger than . Similar to the single-qubit example, the trained model with the largest has the most stable performance during the test under a small variation of the noise amplitude.

As can be seen from Table 2, our method does not always outperform the state-of-the-art reinforcement learning algorithms. However, with partial observations, our method can at least achieve the same level of performance in comparison with the state-of-the-art algorithms. SGD is not studied in the two-qubit case since it has been proved to be an inefficient method for solving many-body problems [19].

Next, we train the agents with 4 different configurations of observations as follows

| (19) |

| (20) |

| (21) |

| (22) |

Here the noise level is set as . As shown in Fig. 9, the performance of the algorithm continues to improve as the number of observations increases. However, the achieved fidelity with 8 observations is worse than that with 6 observations. This may be because the reward becomes overly large with too many observations. In particular, is set as 3.5 in the reward function for all the experiments. Reducing may improve the performance of the algorithm with more observations.

IV.3 6-qubit QAOA

We consider a 6-qubit example which uses QAOA to solve the combinatorial optimization problem [36]. The target problem-based Hamiltonian is defined as

| (23) |

whose ground states are the solutions to a specific combinatorial optimization problem. The control Hamiltonian is given by

| (24) |

Then initial state is set as

| (25) |

The goal of QAOA is to minimize . It has been argued that even if the optimization process may not be able to reach the ground states, at least an approximate solution can be found using the quantum-classical algorithm. We let , and randomly generate the weights from a uniform distribution for illustration.

The full set of observations are given by

| (26) |

which can be used for the complete determination of the expectation with respect to . In the numerical experiment, only of the observations are made at each time step to calculate the reward, while the other observations are just generated as random noise from . This configuration is proposed to demonstrate the model performance under the extreme condition that of the observations are pure noise, which is also an instance of partially observable process as aforementioned. The real observations are made as follows

| (27) |

Although the other noisy observations are not used to calculate the reward, they are still contained in to train the agent. It should be noted that only in the last step, the reward is calculated using the full set of real observations. To be more precise, the rewards for the intermediate steps are calculated as

| (28) |

while the reward for the last step is calculated as

| (29) |

This definition of reward function is slightly different from that of the state transfer problem, as the target state is unknown in the current case. Here, the purpose of the reward function is to encourage the decrease of on the partially observed subsystem.

The optimization performance is shown in Fig. 10, where we have used the following approximation ratio[24]

| (30) |

as the metric to evaluate the accuracy of the QAOA algorithm with partial observation. Here and are the lowest and highest values of . The control depth is set as , and we let . The numerical result demonstrates that the algorithm with only accurate observations can still achieve a good-enough approximation ratio when . In particular, the approximation ratio can reach above , which may yield an acceptable solution to the combinatorial optimization problem, as optimizing the approximation ratio beyond a desired value has been proven to be NP-hard with classical algorithms [37].

| Algorithms | Accuracy | Optimized Coefficients | Total Duration |

| Our method () | 0.924059 | 0.47231, 0.26109, -0.00375, 0.00312, 0.0155, 0.00016, 0.00896, 0.00691, 0.00445, 0.00101 | 0.77726 |

| Trotterized quantum annealing () | 0.966693 | 3.27237, 3.05595, -1.36772, 2.79921, 0.99586, -3.32931, -3.74419, -1.04337, -0.88244, -2.95511 | 23.44553 |

| Trotterized quantum annealing () | 0.983772 | 2.78629, -0.37299, 0.33541, -2.06054, -0.93116, 0.79423, 0.37143, -3.00828, 3.42862, 2.18688, -1.48824, -2.03968, -1.7566, 0.12666 | 21.68701 |

| Trotterized quantum annealing () | 0.984729 | -1.57822, 0.83495, -2.48816, -0.99136, -2.66433, -0.12738, -1.2762, 0.25729, 1.34711, 1.75152, -0.93297, 0.98766, -0.76968, -0.36198, 0.79805, 1.27066, 0.04574, -2.47126 | 20.95452 |

We have compared our method with Trotterized quantum annealing control protocol by increasing as . Part of the numerical results with optimized coefficients are shown in Table 3, and the complete results are given in Supplementary Materials. The Trotterized quantum annealing algorithm is executed using PyQPanda without assuming any Hamiltonian uncertainties, and the total durations of control are initialized with the same value. Under the same control depth, the accuracy of the Trotterized quantum annealing algorithm is higher than our method. Considering that our method additionally deals with the robust control problem with Hamiltonian noise and only uses of full observations, such accuracy is acceptable for QAOA. It should also be noted that our strategy achieves a relatively high accuracy in the first few control steps in order to reduce the cumulative effect of Hamiltonian noise, which greatly reduces the total duration of control as compared to Trotterized quantum annealing. In practice, the control depth cannot be increased to infinity due to robustness considerations.

V Conclusion

In this paper, we have proposed a quantum RL control algorithm whose reward function is calculated solely based on the partial observations. In comparison with the existing methods relying on the classical simulation and fidelity to define the reward or objective function, the proposed algorithm does not need any information other than the measurement results of partial observations, which provides an advantage for the practical implementation of control algorithms on near-term quantum devices. We show that the algorithm can accomplish high-fidelity control tasks with a significant reduction in the resource requirement for quantum measurements.

The algorithm is robust to generic noise in the control Hamiltonians and observations. In particular, the RL algorithm with partial observation can achieve similar performance under the practical level of noise when compared to the commonly-used algorithms which did not even consider the noise effect. The trained model can also work with different level of noise and different initial and target states.

The proposed framework may also find applications in the practical implementation of noisy QAOA for the demonstration of near-term quantum computing, which only requires QAOA to give an optimization result that is good enough instead of the best result. In this case, the control precision of RL method with partial observability may be high enough for achieving an acceptable level of optimization accuracy.

The optimization process of POMDP proposed in this paper may be computationally more demanding than the conventional gradient-based methods, mainly because deep RL models are more computationally demanding than conventional gradient descent. Nevertheless, since the scale of the neural network often grows linearly with the number of qubits, the computational resource required by the deep RL models will not constitute the major obstacle for scalability. The scalability issue in quantum systems comes from the exponential grow of the size of quantum state with the number of qubits. As a result, the conventional gradient-based methods still face the same scalability issues as the partially-observation method does. In certain cases, the number of partial observations only grows linearly with the number of qubits. For example, [24] has successfully carried out the simulation on the Ising chain of spins using a deep RL scheme. For Ising chain, only the adjacent pair of qubits are measured. Therefore, the computation scalability can be significantly enhanced.

Further procedures can be incorporated into the partial observation scheme to improve the robustness and reduce the measurement cost. For example, Ref. [38] proposed a robust quantum control protocol against systematic errors by combining Short-cuts-To-Adiabatic (STA) and deep RL methods, which has been verified in the trapped-ion system [39]. Moreover, weak measurements can be implemented to reduce the measurement cost more significantly [40, 41], which have been successfully applied to the double-well and dissipative qubit systems, respectively. Since the state of the qubit is not destroyed but slightly perturbed via weak measurement, these protocols no longer need to record the historical data for repetitive state preparation, and thus may bring significant resource savings in practical implementations.

References

References

- Kay [2010] A. Kay, Perfect, efficient, state transfer and its application as a constructive tool, Int. J. Quantum Inf. 8, 641 (2010).

- Christandl et al. [2004] M. Christandl, N. Datta, A. Ekert, and A. J. Landahl, Perfect state transfer in quantum spin networks, Phys. Rev. Lett. 92, 187902 (2004).

- Chen et al. [2014] C. Chen, D. Dong, H.-X. Li, J. Chu, and T.-J. Tarn, Fidelity-based probabilistic Q-learning for control of quantum systems, IEEE Trans. Neural Netw. Learn. Syst. 25, 920 (2014).

- Bukov et al. [2018] M. Bukov, A. G. Day, D. Sels, P. Weinberg, A. Polkovnikov, and P. Mehta, Reinforcement learning in different phases of quantum control, Phys. Rev. X 8, 031086 (2018).

- Bukov [2018] M. Bukov, Reinforcement learning for autonomous preparation of Floquet-engineered states: Inverting the quantum Kapitza oscillator, Phys. Rev. B 98, 224305 (2018).

- Albarrán-Arriagada et al. [2018] F. Albarrán-Arriagada, J. C. Retamal, E. Solano, and L. Lamata, Measurement-based adaptation protocol with quantum reinforcement learning, Phys. Rev. A 98, 042315 (2018).

- Mabuchi and Khaneja [2005] H. Mabuchi and N. Khaneja, Principles and applications of control in quantum systems, Int. J. Robust Nonlin. Control 15, 647 (2005).

- Machnes et al. [2011] S. Machnes, U. Sander, S. J. Glaser, P. de Fouquieres, A. Gruslys, S. Schirmer, and T. Schulte-Herbrüggen, Comparing, optimizing, and benchmarking quantum-control algorithms in a unifying programming framework, Phys. Rev. A 84, 022305 (2011).

- Farhi et al. [2014] E. Farhi, J. Goldstone, and S. Gutmann, A quantum approximate optimization algorithm, preprint arXiv:1411.4028 (2014).

- Kusyk et al. [2021] J. Kusyk, S. M. Saeed, and M. U. Uyar, Survey on quantum circuit compilation for noisy intermediate-scale quantum computers: Artificial intelligence to heuristics, IEEE Trans. Quantum Eng. 2, 1 (2021).

- Pan et al. [2022] Y. Pan, Y. Tong, and Y. Yang, Automatic depth optimization for a quantum approximate optimization algorithm, Phys. Rev. A 105, 032433 (2022).

- Yao et al. [2020] J. Yao, M. Bukov, and L. Lin, Policy gradient based quantum approximate optimization algorithm, in Proc. 1st Mathematical and Scientific Machine Learning (PMLR, 2020) pp. 605–634.

- Ding and Wu [2019] H.-J. Ding and R.-B. Wu, Robust quantum control against clock noises in multiqubit systems, Phys. Rev. A 100, 022302 (2019).

- Ge et al. [2020] X. Ge, H. Ding, H. Rabitz, and R.-B. Wu, Robust quantum control in games: An adversarial learning approach, Phys. Rev. A 101, 052317 (2020).

- Dong et al. [2020] Y. Dong, X. Meng, L. Lin, R. Kosut, and K. B. Whaley, Robust control optimization for quantum approximate optimization algorithms, IFAC-PapersOnLine 53, 242 (2020).

- Zhang et al. [2018] X.-M. Zhang, Z.-W. Cui, X. Wang, and M.-H. Yung, Automatic spin-chain learning to explore the quantum speed limit, Phys. Rev. A 97, 052333 (2018).

- Niu et al. [2019] M. Y. Niu, S. Boixo, V. N. Smelyanskiy, and H. Neven, Universal quantum control through deep reinforcement learning, NPJ Quantum Inf. 5, 1 (2019).

- Fösel et al. [2018] T. Fösel, P. Tighineanu, T. Weiss, and F. Marquardt, Reinforcement learning with neural networks for quantum feedback, Phys. Rev. X 8, 031084 (2018).

- Zhang et al. [2019] X.-M. Zhang, Z. Wei, R. Asad, X.-C. Yang, and X. Wang, When does reinforcement learning stand out in quantum control? A comparative study on state preparation, NPJ Quantum Inf. 5, 1 (2019).

- Sørdal and Bergli [2019] V. B. Sørdal and J. Bergli, Deep reinforcement learning for robust quantum optimization, preprint arXiv:1904.04712 (2019).

- An and Zhou [2019] Z. An and D. Zhou, Deep reinforcement learning for quantum gate control, EPL (Europhys. Lett.) 126, 60002 (2019).

- Chen and Xue [2019] J.-J. Chen and M. Xue, Manipulation of spin dynamics by deep reinforcement learning agent, preprint arXiv:1901.08748 (2019).

- Porotti et al. [2019] R. Porotti, D. Tamascelli, M. Restelli, and E. Prati, Coherent transport of quantum states by deep reinforcement learning, Commun. Phys. 2, 1 (2019).

- Wauters et al. [2020] M. M. Wauters, E. Panizon, G. B. Mbeng, and G. E. Santoro, Reinforcement-learning-assisted quantum optimization, Phys. Rev. Res. 2, 033446 (2020).

- Schäfer et al. [2020] F. Schäfer, M. Kloc, C. Bruder, and N. Lörch, A differentiable programming method for quantum control, Machine Learning: Sci. Technol. 1, 035009 (2020).

- Li et al. [2020] J.-A. Li, D. Dong, Z. Wei, Y. Liu, Y. Pan, F. Nori, and X. Zhang, Quantum reinforcement learning during human decision-making, Nature Human Behaviour 4, 294 (2020).

- McKiernan et al. [2019] K. A. McKiernan, E. Davis, M. S. Alam, and C. Rigetti, Automated quantum programming via reinforcement learning for combinatorial optimization, preprint arXiv:1908.08054 (2019).

- Braziunas [2003] D. Braziunas, POMDP solution methods, Univ. Toronto, Canada, Tech. Rep. (2003).

- Foka and Trahanias [2007] A. Foka and P. Trahanias, Real-time hierarchical POMDPs for autonomous robot navigation, Rob. Auton. Syst. 55, 561 (2007).

- Candido and Hutchinson [2011] S. Candido and S. Hutchinson, Minimum uncertainty robot navigation using information-guided POMDP planning, in Proc. IEEE Int. Conf. Robot. Automat. (IEEE, May. 2011) pp. 6102–6108.

- Liu et al. [2015] W. Liu, S.-W. Kim, S. Pendleton, and M. H. Ang, Situation-aware decision making for autonomous driving on urban road using online POMDP, in Proc. IEEE Intell. Veh. Symp. (IEEE, 2015) pp. 1126–1133.

- Wang et al. [2019] C. Wang, J. Wang, Y. Shen, and X. Zhang, Autonomous navigation of UAVs in large-scale complex environments: A deep reinforcement learning approach, IEEE Trans. Veh. Technol. 68, 2124 (2019).

- Meng et al. [2021] L. Meng, R. Gorbet, and D. Kulić, Memory-based deep reinforcement learning for pomdps, in Proceedings of the International Conference on Intelligent Robots and Systems (IEEE, New York, 2021) pp. 5619–5626.

- Ferrie [2014] C. Ferrie, Self-guided quantum tomography, Phys. Rev. Lett. 113, 190404 (2014).

- Morzhin and Pechen [2019] O. V. Morzhin and A. N. Pechen, Krotov method for optimal control of closed quantum systems, Russ. Math. Surv. 74, 851 (2019).

- Farhi et al. [2015] E. Farhi, J. Goldstone, and S. Gutmann, A quantum approximate optimization algorithm applied to a bounded occurrence constraint problem, preprint arXiv:1412.6062 (2015).

- Zhou et al. [2020] L. Zhou, S.-T. Wang, S. Choi, H. Pichler, and M. D. Lukin, Quantum approximate optimization algorithm: Performance, mechanism, and implementation on near-term devices, Phys. Rev. X 10, 021067 (2020).

- Ding et al. [2021a] Y. Ding, Y. Ban, J. D. Martín-Guerrero, E. Solano, J. Casanova, and X. Chen, Breaking adiabatic quantum control with deep learning, Phys. Rev. A 103, L040401 (2021a).

- Ai et al. [2022] M.-Z. Ai, Y. Ding, Y. Ban, J. D. Martín-Guerrero, J. Casanova, J.-M. Cui, Y.-F. Huang, X. Chen, C.-F. Li, and G.-C. Guo, Experimentally realizing efficient quantum control with reinforcement learning, Sci. China Phys. Mech. Astron. 65, 1 (2022).

- Borah et al. [2021] S. Borah, B. Sarma, M. Kewming, G. J. Milburn, and J. Twamley, Measurement-based feedback quantum control with deep reinforcement learning for a double-well nonlinear potential, Phys. Rev. Lett. 127, 190403 (2021).

- Ding et al. [2021b] Y. Ding, X. Chen, R. Magdalena-Benedicto, and J. D. Martín-Guerrero, Quantum stream learning, preprint arXiv:2112.06628 (2021b).

- Givan and Parr [2001] B. Givan and R. Parr, An introduction to markov decision processes, Purdue University (2001).

- Konda and Tsitsiklis [1999] V. Konda and J. Tsitsiklis, Actor-critic algorithms, in Proceedings of the Neural Information Processing Systems, Vol. 12, edited by S. Solla, T. Leen, and K. Müller (MIT Press, Cambridge, 1999).

- Fujimoto et al. [2018] S. Fujimoto, H. Hoof, and D. Meger, Addressing function approximation error in actor-critic methods, in Int. Conf. Mach. Learn. (PMLR, New York, 2018) pp. 1587–1596.

Appendix A Partially Observable Markov Decision Process (POMDP)

The Markov Decision Process (MDP) is a sequential decision process for a fully observable, stochastic environment with a Markovian transition model and additive rewards [42]. By partially observable we mean that the agent only receives an observation through non-complete set of measurements. POMDP is often defined as a -tuple , where , are state space, action space, transition function, immediate reward, observation space and conditional observation probabilities, respectively. In MDP and POMDP, the agent takes an action after the current observation at each time step . Then the environment reaches the next state with the probability , and the agent receives the next observation with the probability . The agent needs to choose actions in order to maximize the expected discounted reward , where is the immediate reward function at the current time step and is the discount factor that describes the preference of the agent for current rewards over future rewards.

Appendix B Reinforcement Learning (RL) and Actor-Critic Framework

RL is an effective method to generate an optimal policy for choosing the proper actions at each time step . In our algorithm, we utilize the deterministic policy by implementing . In order to learn an optimal policy, the common practice is to learn an action-value function which is defined as follows

| (31) |

Here is often called the Q-value of the action . Such a function indicates the expected reward starting from the observation with action and policy .

Actor-Critic [43] is a commonly-used framework of RL, in which the policy and the action-value function are called actor and critic, respectively. Both the actor and the critic are implemented as neural networks. TD3 [44] is a state-of-the-art actor-critic framework which can address the problem of overestimating the Q-value. Specifically, TD3 employs two critics and , which uses the minimum of the predicted optimal future return in state to bootstrap the Q-value of the current observation and action as illustrated in Fig. 4. The parameters of the critic are updated as follows

| (32) |

The target Q-value is determined by

| (33) |

and the parameters of the actor are optimized as follows

| (34) |

in which the function could be either of the two critics and .