Robust Multimodal 3D Object Detection

via Modality-Agnostic Decoding and Proximity-based Modality Ensemble

Abstract

Recent advancements in 3D object detection have benefited from multi-modal information from the multi-view cameras and LiDAR sensors. However, the inherent disparities between the modalities pose substantial challenges. We observe that existing multi-modal 3D object detection methods heavily rely on the LiDAR sensor, treating the camera as an auxiliary modality for augmenting semantic details. This often leads to not only underutilization of camera data but also significant performance degradation in scenarios where LiDAR data is unavailable. Additionally, existing fusion methods overlook the detrimental impact of sensor noise induced by environmental changes, on detection performance. In this paper, we propose MEFormer to address the LiDAR over-reliance problem by harnessing critical information for 3D object detection from every available modality while concurrently safeguarding against corrupted signals during the fusion process. Specifically, we introduce Modality Agnostic Decoding (MOAD) that extracts geometric and semantic features with a shared transformer decoder regardless of input modalities and provides promising improvement with a single modality as well as multi-modality. Additionally, our Proximity-based Modality Ensemble (PME) module adaptively utilizes the strengths of each modality depending on the environment while mitigating the effects of a noisy sensor. Our MEFormer achieves state-of-the-art performance of 73.9% NDS and 71.5% mAP in the nuScenes validation set. Extensive analyses validate that our MEFormer improves robustness against challenging conditions such as sensor malfunctions or environmental changes. The source code is available at https://github.com/hanchaa/MEFormer

1 Introduction

Multi-sensor fusion, which utilizes information from diverse sensors such as LiDAR and multi-view cameras, has recently become mainstream in 3D object detection [36, 35, 16, 13, 39]. Point clouds from the LiDAR sensor provide accurate geometric information of the 3D space and images from the multi-view camera sensors provide rich semantic information. The effective fusion of these two modalities leads to state-of-the-art performance in 3D object detection by compensating for insufficient information of each modality.

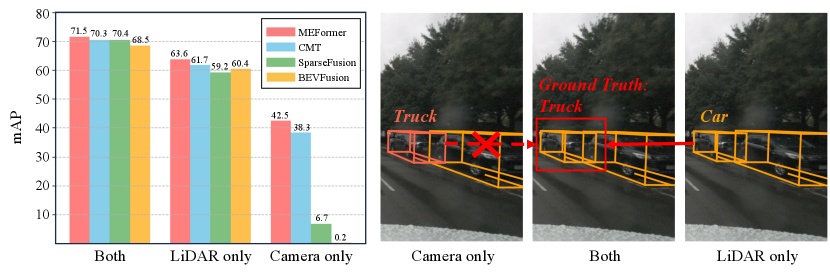

However, as discussed in Yu et al. [42], previous frameworks [1, 28, 10, 27] have LiDAR reliance problem that primarily relies on LiDAR modality and treats camera modality as an extra modality for improving semantic information, even though recent studies [14, 11, 12] show that the geometric information can also be extracted solely from camera modality. The LiDAR reliance problem makes the framework fail to extract geometric information from camera modality, which results in missing objects that can only be found by the camera e.g., distant objects. This problem is exacerbated when the LiDAR sensor is malfunctioning. In LiDAR missing scenarios during inference, although the model is trained with both modalities, it shows inferior performance to the same architecture trained only with camera modality or even completely fails to perform the detection task (see the left graph of Fig. 1). Moreover, previous works [16, 13] simply fuse the point feature and image pixel feature in the same coordinate in Bird’s-Eyes-View (BEV) space without considering the disparities between two modalities. In a challenging environment where one modality exhibits weak signals, such as at night time, prior works may suffer from a negative fusion that the defective information from a noisy modality adversely affects the correct information obtained from another modality, which results in corrupted detection performance as shown in the right side of Fig. 1.

In this paper, we introduce the Modality Ensemble transFormer, dubbed MEFormer, which effectively leverages the inherent characteristics of both LiDAR and camera modalities to tackle the LiDAR reliance and negative fusion problems. First, inspired by multi-task learning [20, 44], we propose the Modality-Agnostic Decoding (MOAD) training strategy to enhance the ability of the transformer decoder in extracting both geometric and semantic information regardless of input modalities. In addition to the multi-modal decoding branch, which takes both modalities as input, we introduce auxiliary tasks, where the transformer decoder finds objects with single-modal decoding branches that take only a single modality as input. This alleviates the LiDAR reliance problem by enabling the decoder to extract the information needed for 3D object detection from individual modalities. In addition, we propose Proximity-based Modality Ensemble (PME), which alleviates the negative fusion problem. A simple cross-attention mechanism with our proposed attention bias generates a final box prediction by integrating the box candidates from all modality decoding branches. PME adaptively aggregates the box features from each modality decoding branch depending on the environment and mitigates the noise that may occur in the multi-modal decoding branch. Extensive experiments demonstrate that MEFormer exhibits superior performance. Especially in challenging environments such as sensor malfunction, MEFormer shows robust performance compared to previous works.

Our contributions are summarized as:

-

•

We propose a novel training strategy Modality-agnostic Decoding (MOAD), which is effective in addressing the LiDAR reliance problem by better utilizing information from all modalities.

-

•

We introduce the Proximity-based Modality Ensemble (PME) module, which adaptively aggregates box predictions from three decoding branches of MOAD to prevent the negative fusion problem.

-

•

MEFormer achieves the state-of-the-art 3D object detection performance on nuScenes dataset. We also show promising performance in challenging environments.

2 Related Work

Camera-based 3D Object Detection. The field of camera-based 3D object detection has witnessed numerous recent advances in autonomous driving. Some previous studies [21, 7, 23, 32], have proposed a method to lift 2D features into 3D space by predicting pixel-wise depth from a camera. Despite its simplicity and good performance, this approach is constrained by its dependence on accurate depth prediction. Other works [12, 38, 14, 15] introduce leveraging 3D queries and employing transformer attention mechanisms to find the corresponding 2D features. In particular, PETR [14] showed efficacy in encoding 3D coordinates into image features through positional encoding, implicitly deducing 3D-2D correspondences and achieving good performance. However, relying solely on a camera sensor for 3D perception, while advantageous due to its lower cost than a LiDAR sensor, faces limitations stemming from the inherent ambiguity in predicting 3D features from the 2D image.

Multi-modal 3D Object Detection. In the domain of 3D object detection for autonomous driving, multi-modal detection methods that leverage data from both LiDAR sensors and multi-view cameras achieve state-of-the-art performance. These two sensors provide complementary information to each other, prompting numerous studies [22, 41, 27, 28, 9, 3, 4] to explore methodologies for learning through these both modality. TransFusion [1] and DeepFusion [10] introduce a transformer-based method that utilizes LiADR features as queries, and image features as keys and values. Meanwhile, BEVFusion [16, 13] shows commendable performance by lifting 2D features into a unified Bird’s Eye View (BEV) space following LSS [21], and subsequently applying a 3D detector head. DeepInteraction [39] applies iterative cross-attention by extracting queries from each modality to fully exploit modality-specific information. SparseFusion [35] learns sparse candidates acquired via modality-specific detectors and fuses these sparse candidates to generate the final outputs. CMT [36] aggregates information into a query with the assistance of modality positional embedding to generate final boxes. However, most previous studies are highly dependent on LiDAR modality, not fully exploiting the information inherent in each modality. Furthermore, the modality fusion methods often overlook the distinctions between modalities, leading to a degradation of robustness in scenarios where a specific sensor introduces noise.

Robust Multi-Modality Fusion. In real-world driving scenarios, sensor failures are prevalent and adversely affect the stability required for autonomous driving [34, 6, 43, 42, 25, 47]. Apart from a simple sensor missing, external environment noise or sensor malfunctions can severely impair robust 3D perception. While many studies [1, 16, 13, 10, 39, 35] have explored robust 3D detection through multi-sensor fusion, they primarily focus on achieving superior performance on complete multi-modal inputs. However, reliance on an ideal sensor results in significant performance degradation when a specific sensor malfunctions, diminishing the effectiveness of the fusion approach compared to utilizing a single modality. Specifically, existing fusion methods heavily rely on LiDAR modality, and the camera is used in an auxiliary role, resulting in inferior performance in the case of LiDAR corruption [42]. In this paper, we propose a framework that reduces reliance on specific modalities, mitigates the negative fusion problem, and achieves robust detection performance even in scenarios involving noise or sensor missing.

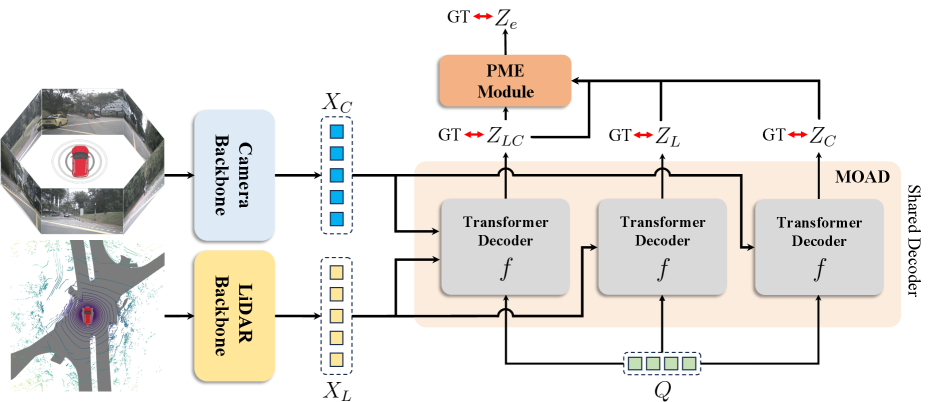

3 Method

In this section, we propose MEFormer, a robust 3D object detection framework with modality-agnostic decoding and modality ensemble module. The overall architecture is illustrated in Fig. 2. We start with a brief review of the cross-modal transformer in Sec. 3.1, and then, in Sec. 3.2, we present the modality-agnostic decoding that enables fully extracting features for 3D object detection from each modality to reduce reliance on LiDAR modality. Finally, in Sec. 3.3, we introduce a proximity-based modality ensemble module to adaptively integrate box predictions from each decoding branch while preventing negative fusion in the modality fusion process.

3.1 Preliminaries

Cross modal transformer (CMT) [36] is a recent framework that uses a transformer decoder to aggregate information from both modalities into object queries. In CMT, the modality-specific backbone first extracts modality features e.g., VoVNet [8] for camera and VoxelNet [45] for LiDAR. Then, they localize 3D bounding boxes using a transformer decoder with modality-specific position embeddings that help object queries aggregate information from both modalities simultaneously. Given the flattened LiDAR BEV features and camera image features , CMT can be formulated as:

| (1) |

where denotes a set of learnable object queries and [;] indicates the concatenation. and denote the height and width of the LiDAR BEV feature map and camera image feature map respectively, and indicates the number of cameras.

CMT is an effective framework but it has some drawbacks discussed in Sec. 1. First, when a LiDAR is missing, CMT lacks the ability to extract geometric information from the camera modality, resulting in substantial performance degradation. Second, when a specific modality shows corrupted signals, information from it may act as noise when aggregating information from both modalities simultaneously. In the following sections, we will demonstrate how to mitigate these issues.

3.2 Modality-Agnostic Decoding

For the cross-modal transformer to maximize the use of both modalities, both geometric and semantic information should be extracted from each modality without relying on a specific one. To this end, we propose a modality-agnostic decoding (MOAD) training scheme, that allows a transformer decoder to fully decode information for 3D object detection regardless of input modalities.

First, we randomly sample learnable anchor positions in the 3D space from uniform distributions and set their positional embeddings to the initial object queries following PETR [14]. Then is processed by multiple decoding branches, each using different modality combinations as an input. This can be formulated as:

| (2) | |||

| (3) | |||

| (4) |

where is a shared transformer decoder and , and denote box features from each modality decoding branch. Note that is shared across multiple decoding branches. We generate modality-specific positional embeddings and following [14, 36], and , and are used as positional embedings for queries when generating , and respectively. Then, we predict the final box prediction and classification score via modality-agnostic box head :

| (5) |

where indicates the set of modality decoding branch.

We use Hungarian matching between ground truth boxes and predicted boxes from each modality decoding branch respectively for loss computation. The loss function from each modality decoding branch can be formulated as:

| (6) |

and overall loss function of MOAD is defined as:

| (7) |

We use the focal loss for classification loss and L1 loss for box regression.

Note that applying Hungarian matching and computing loss function separately for each modality decoding branch helps fully decode information regardless of input modality. By minimizing the shared transformer decoder with the loss function and , modality backbones and transformer decoder learn to extract both geometric and semantic information from each modality without relying o3n specific modality. In addition, minimizing helps the shared transformer learn how to fuse rich information from both modalities effectively. At test time, we only use the multi-modal decoding branch for final box prediction, resulting in higher performance without additional computational cost.

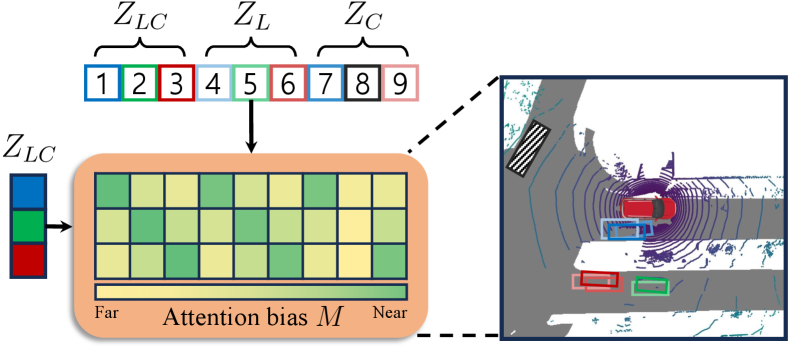

3.3 Proximity-based Modality Ensemble

As discussed in [30, 19], there are challenging environments where one modality outperforms the other, such as at night or sensor malfunction environments. In this case, aggregating information from both LiDAR and camera modalities simultaneously may suffer from negative fusion, which leads to poor box prediction as discussed in Sec. 1. To this end, we propose a novel proximity-based modality ensemble (PME) module that adaptively integrates box features from every modality decoding branch of MOAD.

Given the set of the box features from each modality decoding branch, is updated through a cross-attention layer with the whole output features including itself. To avoid the noise transfer caused by the interaction between irrelevant box features, we introduce an attention bias for the cross-attention. To obtain the attention bias, we measure the center distance between the predicted boxes from and those from , and . Then, we apply a linear transformation with a learnable scaler and a bias . Given and their corresponding center coordinates in the BEV space, the attention bias can be formulated as:

| (8) | |||

| (9) |

Then, we add attention bias to the attention logit and apply the softmax function to calculate attention scores.

Every set of the box features , and are linearly projected by modality-specific projection function , and before the cross-attention layer. To summarize, the overall architecture of the PME can be written as:

| (10) | |||

| (11) |

where is a cross-attention layer for the modality ensemble. We generate positional embeddings for queries and keys using , and and MLPs. The final box prediction and are generated by the box head which is another box head for the ensembled box features , as:

| (12) |

For proximity-based modality ensemble loss, we apply Hungarian matching between ground truth boxes and predicted boxes. The loss function of PME is defined as:

| (13) |

where and is the L1 loss and the focal loss respectively following Eq. 6.

Note that the cross-attention mechanism helps our framework adaptively select promising modalities depending on the environment when predicting the final box. MEFormer with PME shows remarkable performance in challenging environments, which will be discussed in Sec. 4.4.

4 Experiments

In this section, we present comprehensive experiments of our framework. First, we introduce the dataset, metrics, and experimental settings. Then we demonstrate the effectiveness of MEFormer through comparison with state-of-the-art methods on the benchmark dataset. Additionally, we analyze the contribution of the proposed framework through ablations and extensive experiments about various scenarios.

4.1 Experimental Setup

Datasets and Metrics. We evaluate MEFormer on the nuScenes dataset [2], a large-scale benchmark dataset for 3D object detection. It includes point clouds collected with 32-beam LiDAR and 6 multi-view images of 1600900 resolution. The dataset is composed of 1000 scenes and split into 700, 150, and 150 scenes for training, validation, and testing. We report 3D object detection performance through mAP and NuScenes Detection Score (NDS). Unlike the conventional Average Precision metric, nuScenes mAP determines the box matches by considering center distance in Bird’s Eye View (BEV) space. A prediction is considered positive if the ground truth box lies within a certain distance from the center of the prediction. This metric averages results across 10 classes with 4 distance thresholds (0.5m, 1m, 2m, 4m). NDS represents an integrated metric considering mAP and various true positive metrics, which consist of translation, scale, orientation, velocity, and attributes.

4.2 Implementation details

Model. We implement MEFormer with MMDetection3D framework [5]. We use VoVNet [8] for the camera modality backbone and use images with a resolution of 1600 640 by cropping the upper region of the image. For the LiDAR modality, VoxelNet [45] is used as a backbone. We set the detection range to [] and [ for the XY-axes and Z-axis, respectively, and the voxel size is set to . Our shared transformer decoder of MOAD has 6 cross-attention layers and PME has 1 cross-attention layer.

| Method | Modality | Validation set | Test set | ||

| NDS | mAP | NDS | mAP | ||

| BEVDet [7] | C | - | - | 48.2 | 42.2 |

| FCOS3D [31] | C | 41.5 | 34.3 | 42.8 | 35.8 |

| PETR [14] | C | 44.2 | 37.0 | 45.5 | 39.1 |

| SECOND [37] | L | 63.0 | 52.6 | 63.3 | 52.8 |

| CenterPoint [40] | L | 66.8 | 59.6 | 67.3 | 60.3 |

| TransFusion-L [1] | L | 70.1 | 65.1 | 70.2 | 65.5 |

| PointAugmenting [28] | L+C | - | - | 71.0 | 66.8 |

| FUTR3D [3] | L+C | 68.3 | 64.5 | - | - |

| UVTR [9] | L+C | 70.2 | 65.4 | 71.1 | 67.1 |

| AutoAlignV2 [4] | L+C | 71.2 | 67.1 | 72.4 | 68.4 |

| TransFusion [1] | L+C | 71.3 | 67.5 | 71.6 | 68.9 |

| BEVFusion [13] | L+C | 72.1 | 69.6 | 73.3 | 71.3 |

| BEVFusion [16] | L+C | 71.4 | 68.5 | 72.9 | 70.2 |

| DeepInteraction [39] | L+C | 72.6 | 69.9 | 73.4 | 70.8 |

| UniTR [29] | L+C | 73.3 | 70.5 | 74.5 | 70.9 |

| MetaBEV [6] | L+C | 71.5 | 68.0 | - | - |

| SparseFusion [35] | L+C | 72.8 | 70.4 | 73.8 | 72.0 |

| CMT [36] | L+C | 72.9 | 70.3 | 74.1 | 72.0 |

| MEFormer (Ours) | L+C | 73.9 | 71.5 | 74.3 | 72.2 |

Training. All experiments are conducted with a batch size of 16 on 8 A6000 GPUs. Our model is trained through two steps: 1) We first train MOAD without PME for 20 epochs with CBGS [46]. We apply GT sampling augmentation for the first 15 epochs and we do not apply for the last 5 epochs. The initial learning rate is 1.0 and the cyclic learning rate policy [24] is adapted. 2) Once the MOAD is trained, we train PME module for 6 epochs with CBGS while freezing the modality backbone and the rest of the transformer. Note that we do not apply GT sampling augmentation in the second stage. The initial learning rate is 1.0 and we adopt the cosine annealing learning rate policy with 1000 warm-up iterations [17]. AdamW [18] optimizer is adopted for optimization in both stages. For the loss weights, we set the and to 2.0 and 0.25 respectively following DETR3D [33]. We empirically set , and to 1.0 for MOAD.

4.3 Comparison to the state of the art framework

We compare our proposed model with existing baseline models on the nuScenes dataset. To evaluate the model on the test set, we submitted the detection results of MEFormer to the nuScenes test server and report the performance. Note that we did not use any test-time augmentation or ensemble strategies during the inference. As shown in Table 1, MEFormer outperforms other baseline models in terms of both mAP and NDS with a significant margin. Specifically, MEFormer attains a remarkable 73.9% NDS and 71.5% mAP on the nuScenes validation set. On the test set, our model achieves the best performance with 72.2% mAP and the second best performance with 74.3% NDS compared to the previous methods. This demonstrates that, although MEFormer is originally proposed for tackling the sensor missing or corruption scenarios, our proposed approach is effective for enhancing overall detection performance as well.

4.4 Robustness in challenging scenarios

| Method | Modality | Both | LiDAR only | Camera only | |||

| NDS | mAP | NDS | mAP | NDS | mAP | ||

| PETR [14] | C | - | - | - | - | 44.2 | 37.0 |

| CMT-C [36] | C | - | - | - | - | 46.0 | 40.6 |

| CMT-L [36] | L | - | - | 68.6 | 62.4 | - | - |

| BEVFusion [16] | L+C | 71.4 | 68.5 | 66.5 | 60.4 | 1.3 | 0.2 |

| MetaBEV [6] | L+C | 71.5 | 68.0 | 69.2 | 63.6 | 42.6 | 39.0 |

| CMT [36] | L+C | 72.9 | 70.3 | 68.1 | 61.7 | 44.7 | 38.3 |

| Ours | L+C | 73.9 | 71.5 | 69.5 | 63.6 | 48.0 | 42.5 |

| Method | Modality | LiDAR Beam Reduction | Camera Dirt Occlusion | Night | Rainy | ||||

| NDS | mAP | NDS | mAP | NDS | mAP | NDS | mAP | ||

| PETR [14] | C | - | - | 30.6 | 17.9 | 24.2 | 17.2 | 50.6 | 41.9 |

| Transfusion-L [1] | L | 49.6 | 31.8 | - | - | 43.5 | 37.5 | 69.9 | 64.0 |

| BEVFusion [16] | L+C | 55.3 | 43.2 | 68.9 | 63.7 | 45.7 | 42.2 | 72.1 | 68.1 |

| DeepInteraction [39] | L+C | 55.1 | 46.0 | 65.9 | 63.8 | 43.8 | 42.3 | 70.6 | 69.4 |

| CMT [36] | L+C | 62.2 | 54.9 | 69.2 | 63.9 | 46.3 | 42.8 | 73.7 | 70.5 |

| CMT w/ PME | L+C | 62.4 | 55.1 | 69.5 | 64.5 | 46.3 | 43.0 | 74.0 | 70.7 |

| MOAD | L+C | 62.8 | 55.0 | 69.7 | 64.3 | 46.6 | 43.1 | 74.6 | 72.2 |

| MOAD w/ PME | L+C | 63.4 | 55.9 | 69.9 | 64.6 | 46.8 | 43.7 | 74.9 | 72.2 |

4.4.1 Sensor missing

We introduce modality-agnostic decoding, a novel training strategy for leveraging a shared decoder to extract both geometric and semantic information from each modality while reducing heavy reliance on specific modalities. To validate this, Table 2 presents the performance in environments where a single modality is missing at test time. Under the absence of a camera or LiDAR sensor, our modality-agnostic decoding (w/o PME) shows strong robustness, demonstrating a significant performance gap compared to other baselines. Specifically, in the camera-only scenario, CMT shows 28.2% performance degradation in NDS while ours shows 25.9%. Note that BEVFusion is completely impaired if only cameras are available, which means BEVFusion fails to extract geometric information. Additionally, the comparison between CMT-C, CMT-L, and CMT shows that when one modality is missing, the framework trained with both LiDAR and camera modalities exhibits a performance degradation compared to those trained with a single modality. This means CMT does not fully utilize both geometric and semantic information from each modality, instead, it heavily relies on a specific modality. However, our framework shows the best performance by 69.5% NDS and 63.6% mAP in the LiDAR-only scenarios and 48.0% NDS and 42.5% mAP in the camera-only scenarios. Through the application of modality-agnostic decoding and the parameter-sharing mechanism in the transformer decoder, our framework demonstrates better robustness in both LiDAR-only and camera-only scenarios while mitigating the reliance on either modality.

4.4.2 Challenging scenarios

We propose modality-agnostic decoding to address the LiDAR reliance problem and effectively extract the information for 3D object detection regardless of input modalities. However, potential drawbacks arise when aggregating both LiDAR features and camera features into a single object query, as the modalities from different domains may suffer from negative fusion when they are fused. To prove that the proposed proximity-based modality ensemble module is effective to address this problem, we evaluate the MEFormer in LiDAR or camera corruption scenarios. As presented in Table 3, our framework shows the best performance in all scenarios. Specifically, compared to MOAD, PME improves 0.9% mAP in the beam reduction scenario and 0.3% mAP in the camera dirt occlusion scenario.

Also, performance comparison in challenging environments is presented in Table 3, and MEFormer outperforms other frameworks for all challenging environments. Especially on rainy days where LiDAR shows noisy signals due to the refraction of the laser by raindrops, MEFormer shows a large performance gap (+1.2% NDS and +1.7% mAP) compared to CMT. This result validates that our framework fully leverages the camera modality in scenarios where LiDAR struggles. In addition, compared to MOAD, PME improves mAP by 0.6% at night, where the camera modality shows noisy signals due to dark images. This performance gap validates that PME adaptively exploits desirable modalities depending on the environment, avoiding negative fusion.

| Method | Modality | Near | Middle | Far | |||

| NDS | mAP | NDS | mAP | NDS | mAP | ||

| PETR [14] | C | 53.8 | 53.1 | 40.2 | 31.8 | 25.7 | 14.5 |

| TransFusion-L [1] | L | 77.3 | 77.5 | 67.9 | 61.5 | 47.5 | 34.3 |

| BEVFusion [16] | L+C | 78.0 | 79.2 | 69.3 | 64.1 | 49.8 | 38.9 |

| DeepInteraction [39] | L+C | 75.3 | 78.6 | 67.9 | 65.4 | 48.4 | 40.8 |

| CMT [36] | L+C | 79.4 | 81.0 | 70.9 | 65.8 | 52.6 | 42.5 |

| CMT w/ PME | L+C | 79.6 | 81.1 | 71.1 | 66.1 | 52.9 | 42.9 |

| MOAD | L+C | 80.4 | 82.3 | 71.7 | 66.8 | 53.4 | 43.6 |

| MOAD w/ PME | L+C | 80.4 | 82.4 | 72.0 | 67.1 | 53.3 | 43.6 |

4.4.3 Detection range

LiDAR sensor struggles to collect enough points of the objects that are located far away from the ego car. This often leads to the failure to detect distant objects, which can be alleviated by utilizing the camera modality that has dense signals. We show performance comparison across the various detection ranges to validate that MEFormer effectively utilizes the camera modality. As shown in Table 4, MEFormer outperforms previous frameworks for distant objects. Specifically, introducing MOAD achieves 1.1% mAP improvement compared to CMT, which proves that MOAD helps extract geometric information from the camera modality. In addition, PME improves the detection performance of objects located at middle and far distances compared to near distances. This validates that PME addresses the negative fusion of noisy LiDAR information.

5 Analysis

| MOAD | PME | NDS | mAP | |

| (a) | 72.9 | 70.3 | ||

| (b) | ✓ | 73.1 | 70.5 | |

| (c) | ✓ | 73.7 | 71.3 | |

| (d) | ✓ | ✓ | 73.9 | 71.5 |

5.1 Ablation studies

We present ablation studies of the proposed training strategy and module in Table 5. All experiments are conducted on nuScenes validation set. First of all, as shown in (b), adapting PME improves detection performance by 0.2% NDS and mAP compared to (a) which is identical to CMT. Note that inputs and for the PME in (b) are generated by a transformer decoder trained with only the multi-modal decoding branch since our modality-agnostic decoding is not yet applied. This shows that PME alone helps improve the detection performance. Next, (c) shows applying modality-agnostic decoding enhances the performance by 0.8% NDS and 1.0% mAP. Note that only a multi-modal decoding branch is used for the box prediction in (c) during inference time and there is no additional computation compared to (a). This empirical evidence verifies that reducing reliance on a LiDAR sensor improves the detection performance in overall environments. In (d), we extend our methodology to include both modality-agnostic decoding and proximity-based modality ensemble. Applying both MOAD and PME shows 1.0% NDS and 1.2% mAP performance gain compared to (a), resulting in state-of-the-art detection performance. Performance comparison between (c) and (d) in challenging environments is discussed in Section 4.4.2 and shows a larger performance gap compared to that in the overall environments, which means PME is more effective as the environment becomes more challenging.

| Method | FPS | NDS | mAP |

| DeepInteractioion | 1.5 | 72.6 | 69.9 |

| SparseFusion | 2.3 | 72.8 | 70.4 |

| CMT | 3.4 | 72.9 | 70.3 |

| Ours | 3.1 | 73.9 | 71.5 |

5.2 Inference speed

We compare the inference speed of our framework with previous frameworks and the result is shown in Table 6.

MEFormer shows 1.0% NDS and 1.2% mAP performance improvement with only a 0.3 FPS reduction in inference speed. Note that all results are measured on a single NVIDIA RTX 3090 GPU and voxelization time is included following [36].

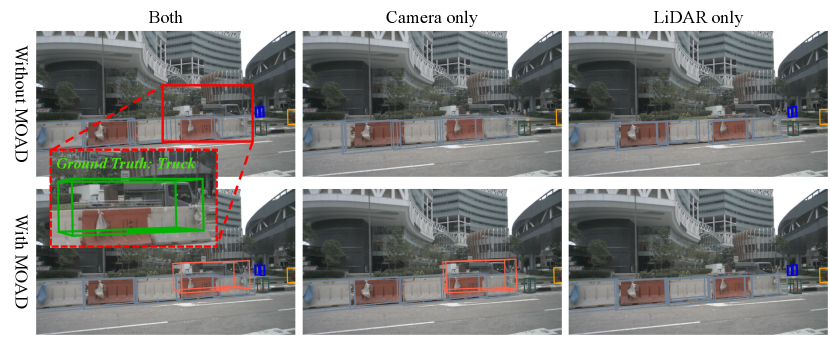

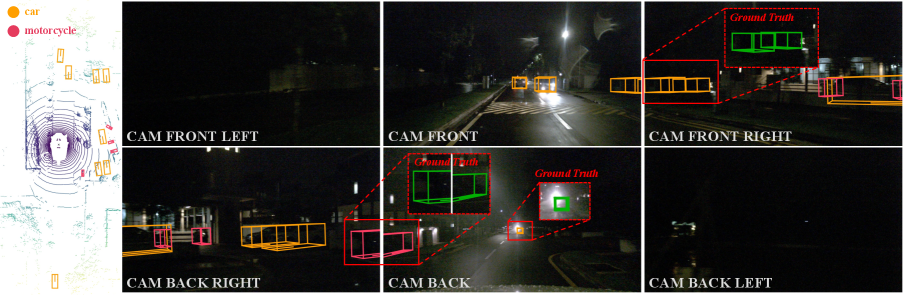

5.3 Qualitative results

In this section, we validate the effectiveness of our framework with qualitative results. First, Figure 4 shows that the transformer decoder trained with MOAD utilizes the camera to successfully localize the truck that LiDAR fails to detect, resulting in bounding boxes in the multi-modal decoding branch as well. However, without MOAD, all decoding branches fail to detect the same truck. This result validates that MOAD reduces the LiDAR reliance problem in the modality fusion process and helps the framework utilize geometric information in camera modality.

Figure 5 shows qualitative results of MEFormer in the dark environment, where the cameras show extremely corrupted signals. In the front right camera, our framework successfully detects two cars that are hard to recognize with the camera. Additionally, in the back view camera, our framework also detected the car in which a camera shows corrupted signals due to the car’s headlight. Qualitative results validate that our framework shows competitive detection performance in challenging environments.

6 Conclusion

In this paper, we present MEFormer, an effective framework to fully leverage the LiDAR sensor and camera sensors, addressing the LiDAR reliance problem. MEFormer is trained to extract both geometric and semantic information from each modality using the modality-agnostic decoding training strategy, resulting in promising results in various LiDAR malfunction scenarios as well as overall environments. In addition, the proximity-based modality ensemble module shows another performance improvement by preventing negative fusion in challenging environments. Extensive experiments validate that MEFormer achieves state-of-the-art performance in various scenarios. We hope that MEFormer can inspire further research in the field of robust multi-modal 3D object detection.

References

- Bai et al. [2022] Xuyang Bai, Zeyu Hu, Xinge Zhu, Qingqiu Huang, Yilun Chen, Hongbo Fu, and Chiew-Lan Tai. Transfusion: Robust lidar-camera fusion for 3d object detection with transformers. In CVPR, 2022.

- Caesar et al. [2020] Holger Caesar, Varun Bankiti, Alex H Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, and Oscar Beijbom. nuscenes: A multimodal dataset for autonomous driving. In CVPR, 2020.

- Chen et al. [2023] Xuanyao Chen, Tianyuan Zhang, Yue Wang, Yilun Wang, and Hang Zhao. Futr3d: A unified sensor fusion framework for 3d detection. In CVPR, 2023.

- Chen et al. [2022] Zehui Chen, Zhenyu Li, Shiquan Zhang, Liangji Fang, Qinhong Jiang, and Feng Zhao. Autoalignv2: Deformable feature aggregation for dynamic multi-modal 3d object detection. In ECCV, 2022.

- Contributors [2020] MMDetection3D Contributors. MMDetection3D: OpenMMLab next-generation platform for general 3D object detection. https://github.com/open-mmlab/mmdetection3d, 2020.

- Ge et al. [2023] Chongjian Ge, Junsong Chen, Enze Xie, Zhongdao Wang, Lanqing Hong, Huchuan Lu, Zhenguo Li, and Ping Luo. Metabev: Solving sensor failures for 3d detection and map segmentation. In ICCV, 2023.

- Huang et al. [2021] Junjie Huang, Guan Huang, Zheng Zhu, Ye Yun, and Dalong Du. Bevdet: High-performance multi-camera 3d object detection in bird-eye-view. arXiv preprint arXiv:2112.11790, 2021.

- Lee and Park [2020] Youngwan Lee and Jongyoul Park. Centermask: Real-time anchor-free instance segmentation. In CVPR, 2020.

- Li et al. [2022a] Yanwei Li, Yilun Chen, Xiaojuan Qi, Zeming Li, Jian Sun, and Jiaya Jia. Unifying voxel-based representation with transformer for 3d object detection. In NeurIPS, 2022a.

- Li et al. [2022b] Yingwei Li, Adams Wei Yu, Tianjian Meng, Ben Caine, Jiquan Ngiam, Daiyi Peng, Junyang Shen, Yifeng Lu, Denny Zhou, Quoc V. Le, Alan Yuille, and Mingxing Tan. Deepfusion: Lidar-camera deep fusion for multi-modal 3d object detection. In CVPR, 2022b.

- Li et al. [2023] Yinhao Li, Zheng Ge, Guanyi Yu, Jinrong Yang, Zengran Wang, Yukang Shi, Jianjian Sun, and Zeming Li. Bevdepth: Acquisition of reliable depth for multi-view 3d object detection. In AAAI, 2023.

- Li et al. [2022c] Zhiqi Li, Wenhai Wang, Hongyang Li, Enze Xie, Chonghao Sima, Tong Lu, Yu Qiao, and Jifeng Dai. Bevformer: Learning bird’s-eye-view representation from multi-camera images via spatiotemporal transformers. In ECCV, 2022c.

- Liang et al. [2022] Tingting Liang, Hongwei Xie, Kaicheng Yu, Zhongyu Xia, Zhiwei Lin, Yongtao Wang, Tao Tang, Bing Wang, and Zhi Tang. Bevfusion: A simple and robust lidar-camera fusion framework. In NeurIPS, 2022.

- Liu et al. [2022] Yingfei Liu, Tiancai Wang, Xiangyu Zhang, and Jian Sun. Petr: Position embedding transformation for multi-view 3d object detection. In ECCV, 2022.

- Liu et al. [2023a] Yingfei Liu, Junjie Yan, Fan Jia, Shuailin Li, Aqi Gao, Tiancai Wang, and Xiangyu Zhang. Petrv2: A unified framework for 3d perception from multi-camera images. In ICCV, 2023a.

- Liu et al. [2023b] Zhijian Liu, Haotian Tang, Alexander Amini, Xinyu Yang, Huizi Mao, Daniela L Rus, and Song Han. Bevfusion: Multi-task multi-sensor fusion with unified bird’s-eye view representation. In ICRA, 2023b.

- Loshchilov and Hutter [2017] Ilya Loshchilov and Frank Hutter. SGDR: stochastic gradient descent with warm restarts. In ICLR, 2017.

- Loshchilov and Hutter [2019] Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. In ICLR, 2019.

- Mao et al. [2023] Jiageng Mao, Shaoshuai Shi, Xiaogang Wang, and Hongsheng Li. 3d object detection for autonomous driving: A comprehensive survey. IJCV, 2023.

- Nekrasov et al. [2019] Vladimir Nekrasov, Thanuja Dharmasiri, Andrew Spek, Tom Drummond, Chunhua Shen, and Ian D. Reid. Real-time joint semantic segmentation and depth estimation using asymmetric annotations. In ICRA, 2019.

- Philion and Fidler [2020] Jonah Philion and Sanja Fidler. Lift, splat, shoot: Encoding images from arbitrary camera rigs by implicitly unprojecting to 3d. In ECCV, 2020.

- Qi et al. [2018] Charles R Qi, Wei Liu, Chenxia Wu, Hao Su, and Leonidas J Guibas. Frustum pointnets for 3d object detection from rgb-d data. In CVPR, 2018.

- Reading et al. [2021] Cody Reading, Ali Harakeh, Julia Chae, and Steven L Waslander. Categorical depth distribution network for monocular 3d object detection. In CVPR, 2021.

- Smith [2017] Leslie N. Smith. Cyclical learning rates for training neural networks. In WACV, 2017.

- Song et al. [2024] Ziying Song, Lin Liu, Feiyang Jia, Yadan Luo, Guoxin Zhang, Lei Yang, Li Wang, and Caiyan Jia. Robustness-aware 3d object detection in autonomous driving: A review and outlook. arXiv preprint arXiv:2401.06542, 2024.

- Team [2020] OpenPCDet Development Team. Openpcdet: An open-source toolbox for 3d object detection from point clouds. https://github.com/open-mmlab/OpenPCDet, 2020.

- Vora et al. [2020] Sourabh Vora, Alex H Lang, Bassam Helou, and Oscar Beijbom. Pointpainting: Sequential fusion for 3d object detection. In CVPR, 2020.

- Wang et al. [2021a] Chunwei Wang, Chao Ma, Ming Zhu, and Xiaokang Yang. Pointaugmenting: Cross-modal augmentation for 3d object detection. In CVPR, 2021a.

- Wang et al. [2023a] Haiyang Wang, Hao Tang, Shaoshuai Shi, Aoxue Li, Zhenguo Li, Bernt Schiele, and Liwei Wang. Unitr: A unified and efficient multi-modal transformer for bird’s-eye-view representation. In ICCV, 2023a.

- Wang et al. [2023b] Ke Wang, Tianqiang Zhou, Xingcan Li, and Fan Ren. Performance and challenges of 3d object detection methods in complex scenes for autonomous driving. IEEE Trans. Intell. Veh., 2023b.

- Wang et al. [2021b] Tai Wang, Xinge Zhu, Jiangmiao Pang, and Dahua Lin. Fcos3d: Fully convolutional one-stage monocular 3d object detection. In ICCV, 2021b.

- Wang et al. [2019] Yan Wang, Wei-Lun Chao, Divyansh Garg, Bharath Hariharan, Mark Campbell, and Kilian Q Weinberger. Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object detection for autonomous driving. In CVPR, 2019.

- Wang et al. [2021c] Yue Wang, Vitor Guizilini, Tianyuan Zhang, Yilun Wang, Hang Zhao, and Justin Solomon. DETR3D: 3d object detection from multi-view images via 3d-to-2d queries. In CoRL, 2021c.

- Xie et al. [2023a] Shaoyuan Xie, Lingdong Kong, Wenwei Zhang, Jiawei Ren, Liang Pan, Kai Chen, and Ziwei Liu. Robobev: Towards robust bird’s eye view perception under corruptions. arXiv preprint arXiv:2304.06719, 2023a.

- Xie et al. [2023b] Yichen Xie, Chenfeng Xu, Marie-Julie Rakotosaona, Patrick Rim, Federico Tombari, Kurt Keutzer, Masayoshi Tomizuka, and Wei Zhan. Sparsefusion: Fusing multi-modal sparse representations for multi-sensor 3d object detection. In ICCV, 2023b.

- Yan et al. [2023] Junjie Yan, Yingfei Liu, Jianjian Sun, Fan Jia, Shuailin Li, Tiancai Wang, and Xiangyu Zhang. Cross modal transformer: Towards fast and robust 3d object detection. In ICCV, 2023.

- Yan et al. [2018] Yan Yan, Yuxing Mao, and Bo Li. Second: Sparsely embedded convolutional detection. Sensors, 2018.

- Yang et al. [2023] Chenyu Yang, Yuntao Chen, Hao Tian, Chenxin Tao, Xizhou Zhu, Zhaoxiang Zhang, Gao Huang, Hongyang Li, Yu Qiao, Lewei Lu, et al. Bevformer v2: Adapting modern image backbones to bird’s-eye-view recognition via perspective supervision. In CVPR, 2023.

- Yang et al. [2022] Zeyu Yang, Jiaqi Chen, Zhenwei Miao, Wei Li, Xiatian Zhu, and Li Zhang. Deepinteraction: 3d object detection via modality interaction. In NeurIPS, 2022.

- Yin et al. [2021a] Tianwei Yin, Xingyi Zhou, and Philipp Krähenbühl. Center-based 3d object detection and tracking. In CVPR, 2021a.

- Yin et al. [2021b] Tianwei Yin, Xingyi Zhou, and Philipp Krähenbühl. Multimodal virtual point 3d detection. In NeurIPS, 2021b.

- Yu et al. [2023] Kaicheng Yu, Tang Tao, Hongwei Xie, Zhiwei Lin, Tingting Liang, Bing Wang, Peng Chen, Dayang Hao, Yongtao Wang, and Xiaodan Liang. Benchmarking the robustness of lidar-camera fusion for 3d object detection. In CVPRW, 2023.

- Zhang et al. [2019] Wenwei Zhang, Hui Zhou, Shuyang Sun, Zhe Wang, Jianping Shi, and Chen Change Loy. Robust multi-modality multi-object tracking. In ICCV, 2019.

- Zhang and Yang [2022] Yu Zhang and Qiang Yang. A survey on multi-task learning. IEEE Trans. Knowl. Data Eng., 2022.

- Zhou and Tuzel [2018] Yin Zhou and Oncel Tuzel. Voxelnet: End-to-end learning for point cloud based 3d object detection. In CVPR, 2018.

- Zhu et al. [2019] Benjin Zhu, Zhengkai Jiang, Xiangxin Zhou, Zeming Li, and Gang Yu. Class-balanced grouping and sampling for point cloud 3d object detection. arXiv preprint arXiv:1908.09492, 2019.

- Zhu et al. [2023] Zijian Zhu, Yichi Zhang, Hai Chen, Yinpeng Dong, Shu Zhao, Wenbo Ding, Jiachen Zhong, and Shibao Zheng. Understanding the robustness of 3d object detection with bird’s-eye-view representations in autonomous driving. In CVPR, 2023.