Robust High-Dimensional Regression with Coefficient Thresholding and its Application to Imaging Data Analysis

This Version: January 2021)

Abstract

It is of importance to develop statistical techniques to analyze high-dimensional data in the presence of both complex dependence and possible outliers in real-world applications such as imaging data analyses. We propose a new robust high-dimensional regression with coefficient thresholding, in which an efficient nonconvex estimation procedure is proposed through a thresholding function and the robust Huber loss. The proposed regularization method accounts for complex dependence structures in predictors and is robust against outliers in outcomes. Theoretically, we analyze rigorously the landscape of the population and empirical risk functions for the proposed method. The fine landscape enables us to establish both statistical consistency and computational convergence under the high-dimensional setting. Finite-sample properties of the proposed method are examined by extensive simulation studies. An illustration of real-world application concerns a scalar-on-image regression analysis for an association of psychiatric disorder measured by the general factor of psychopathology with features extracted from the task functional magnetic resonance imaging data in the Adolescent Brain Cognitive Development study.

Keywords— Landscape analysis; Nonconvex optimization; Thresholding function; Scalar-on-image regression.

1 Introduction

Regression analysis of high-dimensional data has been extensively studied in a number of research fields over the last three decades or so. To overcome the high-dimensionality, statistical researchers have proposed a variety of regularization methods to perform variable selection and parameter estimation simultaneously. Among these, the regularization enjoys the oracle risk inequality (Barron et al., 1999) but it is impractical due to its NP-hard computational complexity. In contrast, the regularization (Tibshirani, 1996) provides an effective convex relaxation of the regularization and achieves variable selection consistency under the so-called irrepresentable condition (Zhao & Yu, 2006; Zou, 2006; Wainwright, 2009). The adaptive regularization (Zou, 2006) and the folded concave regularization (Fan & Li, 2001; Zhang, 2010) relax the irrepresentable condition and improve the estimation and variable selection performance. The folded concave penalized estimation can be implemented through a sequence of the adaptive penalized estimations and achieves the strong oracle property (Zou & Li, 2008; Fan et al., 2014).

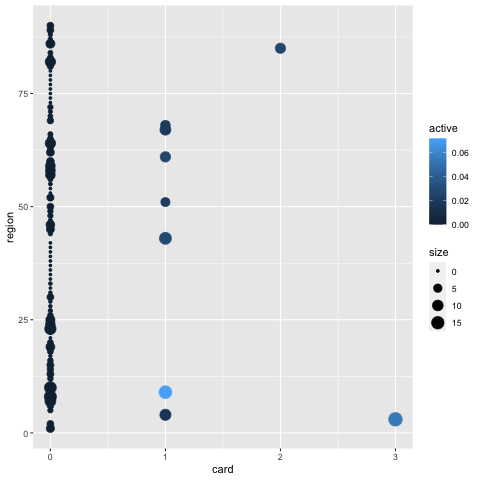

Despite these important advances, existing methods, including the (adaptive) regularization and folded concave regularization, do not work well when predictors are strongly correlated, which is the case especially in scalar-on-image regression analysis (Wang et al., 2017; Kang et al., 2018; He et al., 2018). This paper is motivated by the needs of analyzing the -back working memory task fMRI data in the Adolescent Brain Cognitive Development (ABCD) study (Casey et al., 2018). The task-invoked fMRI imaging measures the blood oxygen level signal that is linked to personal neural activities when performing a specific task. The -back task is a commonly used approach to making assessment in psychology and cognitive neuroscience with a focus on working memory. One question of interest is to understand the association between the risk of developing psychiatry disorder and features related to functional brain activity. We use the 2-back versus 0-back contrast map statistics derived from the -back task fMRI data as imaging predictors. We aim at identifying important imaging biomarkers that are strongly associated with the general factor of psychopathology (GFP) or “p-factor”. GFP is a psychiatric disorder outcome used to evaluate the overall mental health of a subject. In this application, it is expected that the irrepresentable condition of the regularization can be easily violated by strong dependence among high dimensional imaging predictors from fMRI data. To illustrate the presence of strong dependence among imaging predictors, in Figure 1, we plot the largest absolute value of correlation coefficients and the number of correlation coefficients that are or between brain regions. Obviously, there exists strong dependence among imaging predictors, so that existing methods may not have a satisfactory performance in the scalar-on-image analysis. See the simulation study in Section 4 for more details.

To effectively address potential technical challenges in the presence of such strongly correlated predictors, we consider a new approach based on the thresholding function technique. The rationale behind our idea is rooted in attractive performances given by various recently developed thresholding methods, including the hard-thresholding property of the regularization shown in Fan & Lv (2013). They showed that the global minimizer in the thresholded parameter space enjoys the variable selection consistency. Thus, with proper thresholding of coefficients, it is possible to significantly relax the irrepresentable condition while to address the strong dependence in the scalar-on-image regression analysis. Recently, manifested by the potential power of the thresholding strategy, Kang et al. (2018) studied a new class of Bayesian nonparametric models based on the soft-thresholded Gaussian prior, and Sun et al. (2019) proposed a two-stage hard thresholding regression analysis that applies a hard thresholding function on the initial -penalized estimator. To achieve the strong oracle property (Fan et al., 2014), Sun et al. (2019) required the initial solution is reasonably close to the true solution in aspect of norm with high probability.

Robustness against outliers occurring from heavy-tailed errors is of great importance in the scalar-on-image analysis. Due to various limitations of used instruments and quality control in data preprocessing, fMRI data often involves many potential outliers(Poldrack, 2012), compromising the stability and reliability of standard regression analyses. fMRI indirectly measures neural activity by assessing blood-oxgen-level-dependent signals and its signal-to-noise ratio is often low(Lindquist et al., 2008). Moreover, the complexity of fMRI techniques limits the capacity of unifying fMRI data preprocessing procedures (Bennett & Miller, 2010; Brown & Behrmann, 2017) to identify and remove outliers effectively. Standard regression analysis with contaminated data may lead to a high rate of false positives in inference, as shown in many empirical studies (Eklund et al., 2012, 2016). It is loudly advocated that potential outliers should be taken into account in the study of brain functional connectivity using fMRI data (Rosenberg et al., 2016). In the lens of robustness, many works have been proposed to study the high dimensional robust regression problem. El Karoui et al. (2013) studied the consistency of regression with a robust loss function such as the least absolute deviation (LAD). In a high-dimensional robust regression, Loh (2017) showed that the use of a robust loss can help achieve the optimal rate of regression coefficient estimation with independent zero-mean error terms. In addition, Loh (2018) showed that by calibrating with a scale estimator in the Huber loss, the regularized robust regression estimator can be further improved.

In the current literature of the high dimensional scalar-on-image regression, Goldsmith et al. (2014) introduced a single-site Gibbs sampler that incorporates spatial information in a Bayesian regression framework to perform the scalar-on-image regression. Li et al. (2015) introduced a joint Ising and Dirichlet process prior to develop a Bayesian stochastic search variable selection. Wang et al. (2017) proposed a generalized regression model in which the image is assumed to belong to the space of bounded total variation incorporating the piece-wise smooth nature of fMRI data. Motivated by these works, in this paper we first introduce a new integrated robust regression model with coefficient thresholding and then propose a penalized estimation procedure with provable theoretical guarantees, where the noise distribution is not restricted to be sub-Gaussian. Specifically, we propose to use a smooth thresholding function to approximate the discrete hard thresholding function and tackle the strong dependence of predictors together with the use of the smoothed Huber loss (Charbonnier et al., 1997) to achieve desirable robust estimation. We design a customized composite gradient descent algorithm to efficiently solve the nonconvex and nonsmooth optimization problem. The proposed coefficient thresholding method is capable of incorporating intrinsic group structures of high-dimensional imaging predictors and dealing with their strong spatial and functional dependencies. Moreover, the proposed method effectively improves robustness and reliability.

The proposed regression with the coefficient thresholding method results in a nonconvex objective function in optimization. In the current literature, it becomes an increasingly important research topic to obtain the statistical and computational guarantees for nonconvex optimization methods. The local linear approximation (LLA) approach (Zou & Li, 2008; Fan et al., 2014, 2018) and the Wirtinger flow method (Candes et al., 2015; Cai et al., 2016) directly have enabled to analyze the computed local solution. The restricted strong convexity (RSC) condition (Negahban et al., 2012; Negahban & Wainwright, 2012; Loh & Wainwright, 2013, 2017) and the null consistency condition (Zhang & Zhang, 2012) were used to prove the uniqueness of the sparse local solution. However, it still remains non-trivial to study theoretical properties of the proposed robust regression with coefficient thresholding. The nonconvex optimization cannot be directly solved by the LLA approach, and the RSC condition does not hold. Alternatively, following the seminal paper by Mei et al. (2018), we carefully study the landscape of the proposed method. We prove that the proposed nonconvex loss function has a fine landscape with high probability and also establish the uniform convergence of the directional gradient and restricted Hessian of the empirical risk function to their population counterparts. Thus, under some mild conditions, we can establish key statistical and computational guarantees. Let be the sample size, be the dimension of predictors, and the size of the support set of true parameters. Specifically, we prove that, with high probability, (i) any stationary solution achieves the oracle inequality under the norm when ; (ii) the proposed nonconvex estimation procedure has a unique stationary solution that is also the global solution when ; and (iii) the proposed composite gradient descent algorithm attains the desired stationary solution. We shall point out that both statistical and computational guarantees of the proposed method do not require a specific type of initial solutions.

The rest of this paper is organized as follows. Section 2 first revisits the irrepresentable condition and then proposes the robust regression with coefficient thresholding and nonconvex estimation. Section 3 studies theoretical properties of the proposed method, including both statistical guarantees and computational guarantees. The statistical guarantees include the landscape analysis of the nonconvex loss function and asymptotic properties of the proposed estimators, while the computational guarantees concern the convergence of the proposed algorithm. Simulation studies are presented in Section 4 and the real application is demonstrated in Section 5. Section 6 includes a few concluding remarks. All the remaining technical details are given in the supplementary file.

2 Methodology

We begin with revisiting the irrepresentable condition and its relaxations in Subsection 2.1. After that, Subsection 2.2 proposes a new high-dimensional robust regression with coefficient thresholding.

2.1 The Irrepresentable Condition and its Relaxations

In the high-dimensional linear regression is an -dimensional response vector, is an deterministic design matrix, is a -dimensional true regression coefficient vector and is an error vector with mean zero. We wish to recover the true sparse vector , whose support satisfies that its cardinality . We use as the shorthand for the support set .

The irrepresentable condition is known to be sufficient and almost necessary for the variable selection consistency of the penalization (Zhao & Yu, 2006; Zou, 2006; Wainwright, 2009). Specifically, the design matrix should satisfy that

for some incoherence constant , This condition requires that the variables in the true support are weakly correlated with other variables that are not in the true support.

There are several versions of relaxation of the irrepresentable condition in the current literature. Zou (2006) used the adaptive weights ’s in the penalization and relaxed the irrepresentable condition as:

where denotes the Hadamard (or componentwise) product of two vectors. The folded concave penalization (Fan & Li, 2001; Zhang, 2010) also relaxed the irrepresentable condition. Let be the folded concave penalty function. Given the local linear approximation and the current solution , the folded concave penalization relaxed this condition as:

where is the subgradient of the function . Both the adaptive penalization and folded concave penalization utilized the differential penalty functions to relax the restrictive irrepresentable condition. The procedure of adaptive lasso and solving folded concave penalized problem using local linear approximation (LLA) both require a good initialization. Their dependence on the initial solution is inevitably affected by the irrepresentable condition. As a promising alternative, we consider the assignment of adaptive weights on the design matrix , which down-weights the unimportant variables. Consider the ideal adaptive weight function , where goes to when and goes to , otherwise. We propose the following nonconvex estimation procedure:

Let . The irrepresentable condition is now relaxed in this paper as follows:

Given the ideal adaptive weight function , the proposed approach further relaxes the irrepresentable condition in comparison to those considered by existing methods. Unlike existing methods, we propose an integrated nonconvex estimation procedure without depending on initial solutions.

2.2 The Proposed Method

The weight function plays an important role in relaxing the irrepresentable condition. If we knew the oracle threshold , the hard thresholding function would be the ideal choice for the weight function . However, the discontinuity of is challenging for associated optimization. To overcome, we consider a smooth approximation of given by

where . Since as when , we have as when . Figure 2 illustrates the smooth approximation of to when is small (e.g.,

Given the above smooth thresholding function , we propose the robust regression with coefficient thresholding:

| (1) |

where following Mei et al. (2018) and Loh & Wainwright (2017) we assume that the regression coefficients are bounded in the Euclidean ball . Here, to tackle possible outliers, we choose to be a differentiable and possibly nonconvex robust loss function such as the pseudo Huber loss (Charbonnier et al., 1997) or Tukey’s biweight loss. For the ease of presentation, throughout this paper, we use the pseudo Huber loss of the following form:

| (2) |

Note that provides a smooth approximation of the Huber loss (Huber, 1964) and bridges over the loss and the loss. Specifically, is approximately an loss when is small and an loss with slope when is large. It is worth pointing out that the utility of the pseudo Huber loss essentially adds a weight on each observation by in the following form:

where is a fitted value. Hence, outliers are down-weighted to alleviate potential estimation bias.

To deal with a large number of predictors, we propose the following penalized estimation of high-dimensional robust regression with coefficient thresholding:

| (3) |

where is a chosen penalty function such as the penalization or folded concave penalization. In the scalar-on-image analysis, often we need to incorporate some known group information into . Suppose that the coefficient vector is divided into separate subvectors . We can use the group penalty (Yuan & Lin, 2006) or sparse group penalty (Simon et al., 2013) in the penalized estimation (3). With , the sparse robust regression with coefficient thresholding and group selection can be written as follows:

| (4) |

which includes the penalization as a special example with . The penalized robust regression with coefficient thresholding (3) and (4) can be efficiently solved by a customized composite gradient descent algorithm with provable guarantees, and the details will be presented in Subsection 3.3.

REMARK 1.

Both thresholding function and robust loss work together to relax the restrictive irrepresentable condition in the presence of possible outliers. Define as the weight matrix with . The irrepresentable condition for the -penalized robust loss can be written as

where . We impose the weights on the row vectors of design matrix . Recall that the thresholding function adds the weights to the column vectors of . Now, with in (3), we add the entry-specific weight to with

Thus, the irrepresentable condition is now relaxed as follows:

where . By assigning column-wise weight, we allow strong dependence between variables in the true support and other variables; By assigning row-wise weight, we reduce the weights for outliers and enhance the robustness.

REMARK 2.

The proposed method can be considered as the simultaneous estimation of regression coefficients and adaptive weights to improve the adaptive penalization (Zou, 2006), whose weights are usually solved from the initial solution or iterated solution. Let . Since is a continuous and injective function of (say, ), there is a unique such that holds. Hence, we may write . The minimization in the proposed high-dimensional robust regression with coefficient thresholding can be rewritten as follows:

| (5) |

However, it does not fall into the folded-concave penalized estimation (Fan & Li, 2001; Fan et al., 2014). The proposed method aims to address the presence of highly correlated predictors, whereas the nonconvex penalized estimation was motivated by correcting the bias of the -penalized estimation. Also, solving (5) is extremely challenging, since the denominator can be arbitrarily small when . In comparison, the thresholding function is bounded and the non-convex optimization in (4) is computationally tractable.

REMARK 3.

The proposed method is also related to Bayesian methods (Nakajima & West, 2013a, b, 2017; Kang et al., 2018; Ni et al., 2019; Cai et al., 2019). In the scalar-on-image regression, Kang et al. (2018) proposed a soft-thresholded Gaussian process (STGP) that enjoys posterior consistency for both parameter estimation and variable selection. In this Bayesian framework, is assumed to follow an STGP, where the soft thresholding function is defined as

| (6) |

Thus the regression model can be written as follows:

where is a realization of a Gaussian process. Compared to Kang et al. (2018), our proposed method is more robust to possible outliers, and uses a very different approach to incorporate the thresholding function that down-weights unimportant variables and achieves sparsity. The proposed method also enjoys both statistical and computational guarantees as detailed in Section 3, whereas the convergence rate of the posterior computation algorithm in Kang et al. (2018) is still unclear.

3 Theoretical Properties

This section studies the theoretical properties of our proposed method. After establishing the connection of our proposed method with the thresholded parameter space in Subsection 3.1, we present the landscape analysis and asymptotic properties in Subsection 3.2, and the computational guarantee for an efficient composite gradient descent algorithm in Subsection 3.3.

3.1 Connection with the Thresholded Parameter Space

It is known that -penalization enjoys the oracle risk inequality (Barron et al., 1999). Fan & Lv (2013) proved that the global solution of -penalization, denoted by , satisfies the hard thresholding property that is either or larger than a positive threshold whose value depends on and and tuning parameter . Specifically, Fan & Lv (2013) studied the oracle properties of regularization methods over the thresholded parameter space defined as

| (7) |

Given the thresholded parameter space , we introduce the high-dimensional regression with coefficient hard-thresholding as follows:

| (8) |

This thresholded parameter space guarantees that the hard thresholding property is satisfied with proper and norm upper bounds. Suppose that global solutions to both (3) and (8) exist. It is intuitive that if is very small, an optimal solution to (3) should be “close” to that of (8). Apparently, converges to pointwisely almost everywhere as . However it is known that almost surely pointwise convergence does not guarantee the convergence of minimizers (e.g., (Rockafellar & Wets, 2009, Section 7.A)). Here we provide the following proposition to show that the global solution of the proposed method (3) converges to the minimizer of coefficient hard-thresholding in (8) with additional assumptions.

PROPOSITION 1.

Suppose that is a sequence of scale parameters in the weight function such that goes to as . Let be a global minimizer of (3) with . If

-

(i)

and

-

(ii)

For any , there exists , such that

then with arbitrary high probability, the sequence enjoys a cluster point , which is the global minimizer of the high-dimensional regression with coefficient hard-thresholding in (8).

REMARK 4.

Conditions (i) and (ii) of Proposition 1 are mild in our setting. Condition (i) assumes that the threshold level is smaller than the minimal signal level and the norm of true regression coefficients is bounded. Condition (ii) assumes the magnitude of the estimated is bounded away from the threshold level with high probability, that is, , . Note that the true regression coefficients ’s are either zero or larger than the threshold level . As long as the estimator is consistent (see Theorem 2), Condition (ii) can be satisfied.

Given Proposition 1, we can prove that the total number of falsely discovered signs of the cluster point , i.e., the cardinality , converges to zero for the folded concave penalization under the squared loss and sub-Gaussian tail conditions. Recall that the folded concave penalty function satisfies that (i) it is increasing and concave in , (ii) , and (iii) it is differentiable with for some . Define as the smallest possible positive integer such that there exists an submatrix of having a singular value less than a given positive constant . is named robust spark in (Fan & Lv, 2013) to ensure model identifiability. Note that is an upper bound on the total number of false positives and false negatives. The following proposition implies the variable selection consistency of the cluster point .

PROPOSITION 2.

Suppose we re-scale each column vector of the design matrix for each predictor to have -norm , and the error satisfies the sub-Gaussian tail condition with mean 0. and for some constant . And there exists constant such that . Let be the support of and minimal signal strength . Define as the global minimizer of the following optimization problem:

| (9) |

If the penalization parameter is chosen such that for some constant , then with probability , we have

where and are constants.

Propositions 1 and 2 indicate that the cluster point enjoys the similar properties as those of the global solution given by the -penalized methods. The proposed method mimics the -penalization to remove irrelevant predictors and improve the estimation accuracy as .

In the sequel, we will show that with high probability our proposed method has a unique global minimizer (See Theorem 2(b)). In this aspect, our proposed regression with coefficient thresholding estimator provides a practical way to approximate the global minimizer over the thresholded space.

3.2 Statistical Guarantee

After presenting technical conditions, we first provide the landscape analysis and establish the uniform convergence from empirical risk to population risk in Subsection 3.2.1 and then prove the oracle inequalities for the unique minimizer of the proposed method in Subsection 3.2.2.

Now we introduce the notation to simplify our analysis. Let be a shorthand of , and let on . Given fixed , we claim that is third continuously differentiable on its domain. After the direct calculations given in Lemma 1 of the supplementary file, we have the explicit upper and lower bounds for the derivatives of , i.e.,

where , , and . Here, and are uniform constants independent of . And represents that is semi positive definite.

For the group lasso penalty, denote by the non-zero groups and by the zero groups, respectively. Following the structure of classical group lasso, let and be the corresponding length of vector and , respectively. Let , , and , where is finite, not diverging with and .

We make the following assumptions on the distribution of predictor vector , the true parameter vector and the distribution of random error .

ASSUMPTION 1.

-

(a)

The predictor vector is -sub-Gaussian with mean zero and continuous density in , namely and for all .

-

(b)

The feature vector spans all directions in , namely for some .

-

(c)

The true coefficient vector is sparse such that and . Also, the threshold index in weight function satisfies that .

-

(d)

The random error has a symmetric distribution whose density is strictly positive and decreasing on .

Assumption 1(a) presents the technical conditions on the design matrix. The same conditions were considered in Mei et al. (2018) for binary linear classification and robust regression. The sub-Gaussian assumption is a commonly used mild condition in high-dimensional regression. Assumption 1(b) imposes the sparsity on the true parameter vector . Assumption 1(c) permits the possibly heavy tails of the error. We allow the size of the true support set to diverge at rate . Given the sparsity, it is reasonable to limit our theoretical analysis in the Euclidean ball , which can avoid unnecessary technical complications. Also, does not need to be a constant bounded away from in the asymptotic analysis. Since is continuous and injective, there exists a unique , such that and , i.e. is the thresholded version of . In the sequel, we will study the statistical convergence to the surrogate , which shares the same non-zero support with . In Theorem 2, we show that . Then given is Lipschitz with fixed . In this way, we can use as an estimator for . Assumption 1(d) allows random error with heavier tails than the standard Gaussian distribution, and it suits for many applications with outliers. For example, in our simulation, our noise is a mixture distribution with a small variance Gaussian distribution and a large variance Gaussian distribution. Define and . Assumption 1(d) can be relaxed as Assumption 1(d’) , which holds for the right skewed error or discrete error. Our theoretical results given in this paper still hold under Assumption 1(a)–(c) and 1(d’). See Remark 6 for more details.

3.2.1 The Landscape of Population Risk and Empirical Risk

We analyze the landscape of the population and empirical risk functions of the proposed methods. We can bound both gradient vector and Hessian matrix of the population risk

and then prove the uniqueness of its stationary point in the following lemma. We let be the gradient of with respect to and the Hessian matrix. Recall that . Let be the ball with the center and radius .

LEMMA 1.

(Landscape of population risk) Under Assumption 1, the population risk function has the following properties:

-

(a)

There exists an and constants , such that

(10) and for all ,

(11) -

(b)

For same in part (a), there exist constants such that

(12) -

(c)

The population risk has a unique stationary point .

REMARK 5.

Lemma 1 analyze the landscape of population risk and establishes good properties of population risk function. Indeed, we first show that out of the ball , gradient has a lower bound and thus no stationary point exists. In fact, we get a stronger result that no stationary point exists except . Secondly, inside the ball , Hessian matrix of risk function has a lower bound, meaning that is a unique minimizer.

REMARK 6.

Lemma 1 still holds under Assumption 1(a)–(c) and 1(d’). In order to establish a lower bound for the gradient of population risk, we need conditions that for all and . When we consider the pseudo Huber loss, is odd and for all ; is even and . If the exchange of expectation and limit are allowed, is always true. Thus, Lemma 1 and all the following theorems hold with the same proofs.

Next we follow Mei et al. (2018) to develop the uniform convergence results for gradient and Hessian of empirical risk, to study the landscape of the empirical risk function

LEMMA 2.

(Landscape of empirical risk) Under Assumption 1, for any arbitrarily small , there exists a constant depending on parameters , independent of and , such that, if , the following properties hold for , with probability at least :

-

(a)

There exists an and constants , such that

(13) and for all satisfying and ,

(14) -

(b)

For the same in part (a), there exist constants such that

(15) -

(c)

The empirical risk function has a unique minimizer satisfying that

It is worth pointing out that Part (c) of Lemma 2 implies the consistency of the unique minimizer of the empiricial risk function when the dimension diverges with the sample size but . In the sequel, we will establish the oracle inequality for the proposed penalized robust regression with coefficient thresholding when the dimension can be much larger than the sample size .

3.2.2 Oracle Inequality

Given the landscape analysis, we establish the oracle inequality under the ultra-high dimensional setting when is at the nearly exponential order of . Specifically, Theorem 1 shows that the sample directional gradient and restricted Hessian converges uniformly to their population counterparts.

THEOREM 1.

Under Assumption 1, for any arbitrarily small , there exist constants and that depends on such that the following properties hold:

-

(a)

The empirical directional gradient converges uniformly to population directional gradient along the direction , namely

(16) -

(b)

The empirical restricted Hessian converges uniformly to the population restricted Hessian in the set for any . That is, if and , as , we have

(17) where , and .

Theorem 2 proves the oracle inequality of the global minimizer of the proposed high-dimensional robust regression with coefficient thresholding as well as its uniqueness with high probability.

THEOREM 2.

Under Assumption 1, for any arbitrarily small , there exist constants , and that depends on but are independent of , such that as and , the following properties hold with probability at least :

-

(a)

Any stationary point of group-regularized risk minimization satisfies

(18) -

(b)

Furthermore, let . There exists a constant , for such that , the optimization problem (4) has a unique global minimizer .

3.3 Computational Guarantee

Gradient descent algorithms do not work since the objective function is not differentiable at zero. We consider the composite gradient descent algorithm (Nesterov, 2013), which is computationally efficient for solving the non-smooth and nonconvex optimization and enjoys the desired convergence property. Specifically, solving the proposed robust regression with coefficient thresholding on proposed composite gradient descent algorithm consists of two key steps at each iteration: the gradient descent step and the -ball projection step. To perform the step of gradient descent, we derive the gradient vector of the empirical risk function:

where is a diagonal matrix. Given the previous iterated solution , we need to solve the following subproblem:

It is known that this subproblem has a closed-form solution through the following group-wise soft thresholding operator:

where denotes the Hadamard product. Thus, the gradient descent step can be solved as:

Next, in the second step, we perform the following projection onto the -ball:

which is given by the following closed-form projection:

To sum up, the proposed composite gradient descent algorithm can be proceeded as Algorithm 1.

At each iteration, the subproblem can be solved easily with the closed-form solution, and the computational complexity is on the quadratic order of dimension . The algorithmic convergence rate is presented in the following theorem.

THEOREM 3.

Let be the -th iterated solution of Algorithm 1. There exist constants , and are independent of such that when we choose and , we can find one iteration that can be upper bounded by , such that there exists a subgradient we denote as :

where denotes the sub-differential of the group penalty function (Rockafellar, 2015).

REMARK 7.

Theorem 3 provides a theoretical justification of the algorithmic convergence rate. More specifically, the proposed algorithm always converges to an approximate stationary solution (a.k.a. -stationary solution) at finite sample sizes. In other words, for the solution solved after iterations, the subgradient of the objective function is bounded by under the norm at finite sample sizes. When increases, the proposed algorithm will find the stationary solution that satisfies the subgradient optimality condition as . To better understand the algorithmic convergence in practice, we also provide the convergence plots and computational cost of the proposed algorithm in simulation studies in Section C of the supplementary file. Combining Theorems 2 and 3, we may obtain the convergence guarantee with high probability. From both theoretical and practical aspects, the proposed algorithm is computationally efficient and achieves the desired computational guarantee. Observing that the nice empirical gradient structure proved in Theorem 2, in Appendix Section C, we further provide Theorem 4, which is an extension of Theorem 3. It further shows that given the solution is sparse, the composite gradient descent algorithm guarantees a linear convergence rate of , with respect to the number of iterations.

4 Simulation Studies

This section carefully examines the finite-sample performance of our proposed method in simulation studies. To this end, we compare the proposed robust estimator with coefficient thresholding (denoted by RCT) to Lasso, Adaptive Lasso (denoted by AdaLasso), SCAD and MCP penalized estimators in eight different linear regression models (Models 1–8) and with Lasso, Group Lasso (denoted by GLasso), and Sparse Group Lasso (denoted by SGL) in two Gaussian process regression models (Models 9 and 10). Models 9 and 10 mimic the scalar-on-image regression analysis. We implemented the Lasso and the Adaptive lasso estimators using R package ‘glmnet’, and chose the weight for adaptive lasso estimator based on the Lasso estimator. Group lasso is implemented using the method in (Yang, 2015). SCAD and MCP penalized estimators are implemented using R package ‘ncvreg’. And we also verify that the estimation results are consistency with R package ‘Picasso’.

We compare estimation performance based on the root-mean-square error (RMSE, that is ) and variable selection performance based on both false positive rate (FPR) and false negative rate (FNR). , and , where TN, TP, FP and FN represent the numbers of true negative, true positive, false positive and false negative respectively. Each measure is computed as the average over independent replications.

Before proceeding to simulation results, we discuss the choice of tuning parameters for the proposed method. The proposed method involves tuning over the penalization parameter , coefficient thresholding parameter , step size , and radius of the feasible region. Here, is chosen to be relatively small such that the algorithm does not encounter overflow issues. To simplify the tuning process, we set to be a small constant in our empirical studies; alternatively, can also be chosen according to an acceleration process in Nesterov (2013) to further achieve faster convergence. Also, is chosen to be relatively large to specify the appropriate feasible region, and can be specified as any arbitrary quantity that is smaller than the minimal signal strength. In addition, is chosen to be properly small to make our soft-thresholding function mimic the true thresholding function. It is important to point out that the algorithmic convergence and numerical results are generally robust to the choices of , , and . In our simulation studies, we choose , , . With these prespecified , , and , we choose the penalization parameter and using the -folded cross-validation based on prediction error. Additionally, can also be chosen as the quantile of the absolute values of non-zero coefficients of Lasso.

4.1 Linear Regression

First, we simulated data from the linear model where . We consider different correlation structures for in the following six simulation models:

Models 1–3 have autoregressive (AR) correlation structures, in which the irrepresentable condition holds for Model 1 but fails for Models 2 and 3. Models 4-6 have the compound symmetry (CS) correlation structures and the irrepresentable condition fails in all these models.

We assume a mixture model for the error, , where is set much larger than . For Models 1–3, set and or in case (a), (b) or (c), respectively. For Models 4–6, set and , or in case (a), (b) or (c), respectively. For all the models, we choose , and to create a high dimensional regime.

| FPR | FNR | loss | FPR | FNR | loss | FPR | FNR | loss | |

|---|---|---|---|---|---|---|---|---|---|

| Model (1a) | Model (2a) | Model (3a) | |||||||

| Lasso | 0.021 | 0.199 | 3.198 | 0.017 | 0.165 | 3.032 | 0.014 | 0.153 | 3.035 |

| (0.009) | (0.130) | (0.475) | (0.010) | (0.100) | (0.407) | (0.008) | (0.091) | (0.391) | |

| AdaLasso | 0.020 | 0.212 | 3.787 | 0.016 | 0.180 | 3.716 | 0.014 | 0.156 | 3.739 |

| (0.008) | (0.143) | (0.821) | (0.007) | (0.111) | (0.816) | (0.008) | (0.111) | (0.815) | |

| SCAD | 0.007 | 0.422 | 4.148 | 0.008 | 0.474 | 4.750 | 0.010 | 0.575 | 5.979 |

| (0.006) | (0.137) | (0.950) | (0.007) | (0.123) | (1.032) | (0.006) | (0.107) | (1.054) | |

| MCP | 0.003 | 0.625 | 4.709 | 0.004 | 0.654 | 5.430 | 0.003 | 0.694 | 6.201 |

| (0.002) | (0.065) | (0.537) | (0.002) | (0.060) | (0.571) | (0.002) | (0.049) | (0.536) | |

| RCT | 0.010 | 0.177 | 2.860 | 0.004 | 0.071 | 2.041 | 0.002 | 0.018 | 1.466 |

| (0.008) | (0.117) | (0.888) | (0.002) | (0.112) | (0.754) | (0.002) | (0.035) | (0.502) | |

| Model (1b) | Model (2b) | Model (3b) | |||||||

| Lasso | 0.019 | 0.243 | 3.446 | 0.017 | 0.209 | 3.319 | 0.014 | 0.187 | 3.273 |

| (0.010) | (0.140) | (0.372) | (0.008) | (0.101) | (0.406) | (0.008) | (0.087) | (0.422) | |

| AdaLasso | 0.018 | 0.256 | 4.134 | 0.016 | 0.330 | 4.027 | 0.013 | 0.199 | 4.062 |

| (0.008) | (0.130) | (0.657) | (0.008) | (0.104) | (0.687) | (0.008) | (0.107) | (0.726) | |

| SCAD | 0.008 | 0.443 | 4.233 | 0.008 | 0.477 | 4.772 | 0.009 | 0.566 | 5.726 |

| (0.005) | (0.140) | (0.998) | (0.006) | (0.156) | (1.236) | (0.007) | (0.153) | (1.393) | |

| MCP | 0.003 | 0.636 | 4.771 | 0.003 | 0.674 | 5.573 | 0.003 | 0.708 | 6.386 |

| (0.002) | (0.069) | (0.627) | (0.002) | (0.060) | (0.577) | (0.002) | (0.058) | (0.667) | |

| RCT | 0.018 | 0.242 | 3.879 | 0.010 | 0.154 | 3.331 | 0.007 | 0.084 | 2.939 |

| (0.016) | (0.163) | (0.735) | (0.009) | (0.098) | (0.691) | (0.005) | (0.127) | (0.755) | |

| Model (1c) | Model (2c) | Model (3c) | |||||||

| Lasso | 0.018 | 0.338 | 3.706 | 0.017 | 0.244 | 3.589 | 0.014 | 0.242 | 3.611 |

| (0.010) | (0.162) | (0.383) | (0.010) | (0.106) | (0.475) | (0.010) | (0.093) | (0.450) | |

| AdaLasso | 0.019 | 0.195 | 4.148 | 0.016 | 0.258 | 4.469 | 0.012 | 0.243 | 4.459 |

| (0.018) | (0.156) | (0.650) | (0.010) | (0.114) | (0.590) | (0.009) | (0.105) | (0.590) | |

| SCAD | 0.008 | 0.498 | 4.390 | 0.007 | 0.481 | 4.722 | 0.006 | 0.549 | 5.599 |

| (0.005) | (0.134) | (1.034) | (0.005) | (0.147) | (1.331) | (0.006) | (0.149) | (1.612) | |

| MCP | 0.003 | 0.678 | 4.968 | 0.003 | 0.697 | 5.624 | 0.003 | 0.732 | 6.506 |

| (0.001) | (0.069) | (0.716) | (0.002) | (0.054) | (0.709) | (0.002) | (0.047) | (0.823) | |

| RCT | 0.025 | 0.294 | 4.305 | 0.019 | 0.195 | 4.148 | 0.011 | 0.164 | 3.886 |

| (0.156) | (0.230) | (0.574) | (0.018) | (0.156) | (0.650) | (0.011) | (0.136) | (0.688) | |

| FPR | FNR | loss | FPR | FNR | loss | FPR | FNR | loss | |

|---|---|---|---|---|---|---|---|---|---|

| Model (4a) | Model (5a) | Model (6a) | |||||||

| Lasso | 0.040 | 0.337 | 4.075 | 0.041 | 0.387 | 4.183 | 0.041 | 0.374 | 4.144 |

| (0.004) | (0.135) | (0.501) | (0.003) | (0.126) | (0.458) | (0.004) | (0.135) | (0.422) | |

| AdaLasso | 0.033 | 0.369 | 4.035 | 0.033 | 0.421 | 3.726 | 0.032 | 0.443 | 4.512 |

| (0.003) | (0.138) | (0.626) | (0.003) | (0.134) | (0.578) | (0.003) | (0.135) | (0.763) | |

| SCAD | 0.021 | 0.736 | 5.849 | 0.021 | 0.736 | 5.849 | 0.020 | 0.745 | 5.711 |

| (0.007) | (0.147) | (1.238) | (0.007) | (0.147) | (1.238) | (0.009) | (0.149) | (1.151) | |

| MCP | 0.008 | 0.868 | 6.763 | 0.008 | 0.896 | 7.129 | 0.007 | 0.921 | 7.348 |

| (0.003) | (0.084) | (0.558) | (0.003) | (0.065) | (0.541) | (0.003) | (0.064) | (0.510) | |

| RCT | 0.061 | 0.215 | 3.982 | 0.062 | 0.244 | 4.023 | 0.066 | 0.253 | 4.093 |

| (0.007) | (0.089) | (0.265) | (0.009) | (0.093) | (0.283) | (0.009) | (0.098) | (0.314) | |

| Model (4b) | Model (5b) | Model (6b) | |||||||

| Lasso | 0.040 | 0.351 | 4.092 | 0.041 | 0.378 | 4.173 | 0.041 | 0.364 | 4.124 |

| (0.004) | (0.135) | (0.448) | (0.004) | (0.125) | (0.451) | (0.003) | (0.081) | (0.235) | |

| AdaLasso | 0.033 | 0.377 | 4.083 | 0.033 | 0.411 | 4.283 | 0.033 | 0.459 | 4.553 |

| (0.003) | (0.139) | (0.561) | (0.003) | (0.134) | (0.565) | (0.003) | (0.145) | (0.819) | |

| SCAD | 0.022 | 0.719 | 5.563 | 0.021 | 0.722 | 5.668 | 0.020 | 0.744 | 5.802 |

| (0.022) | (0.135) | (1.178) | (0.007) | (0.138) | (1.154) | (0.008) | (0.157) | (1.229) | |

| MCP | 0.008 | 0.868 | 6.738 | 0.007 | 0.900 | 7.142 | 0.008 | 0.900 | 7.146 |

| (0.003) | (0.079) | (0.600) | (0.003) | (0.065) | (0.614) | (0.004) | (0.080) | (0.719) | |

| RCT | 0.060 | 0.226 | 4.019 | 0.063 | 0.260 | 4.067 | 0.066 | 0.275 | 4.138 |

| (0.008) | (0.090) | (0.249) | (0.007) | (0.191) | (0.181) | (0.007) | (0.089) | (0.255) | |

| Model (4c) | Model (5c) | Model (6c) | |||||||

| Lasso | 0.040 | 0.382 | 4.173 | 0.041 | 0.418 | 4.265 | 0.040 | 0.408 | 4.324 |

| (0.003) | (0.131) | (0.385) | (0.004) | (0.133) | (0.360) | (0.003) | (0.103) | (0.352) | |

| AdaLasso | 0.034 | 0.411 | 4.264 | 0.033 | 0.446 | 4.347 | 0.032 | 0.478 | 4.633 |

| (0.002) | (0.135) | (0.473) | (0.003) | (0.127) | (0.446) | (0.003) | (0.140) | (0.549) | |

| SCAD | 0.023 | 0.704 | 5.343 | 0.021 | 0.726 | 5.688 | 0.021 | 0.727 | 5.612 |

| (0.006) | (0.148) | (1.168) | (0.007) | (0.164) | (1.415) | (0.008) | (0.179) | (1.390) | |

| MCP | 0.008 | 0.885 | 6.979 | 0.007 | 0.905 | 7.217 | 0.007 | 0.915 | 7.441 |

| (0.004) | (0.073) | (0.531) | (0.004) | (0.069) | (0.578) | (0.027) | (0.062) | (0.411) | |

| RCT | 0.061 | 0.267 | 4.147 | 0.062 | 0.290 | 4.228 | 0.064 | 0.314 | 4.275 |

| (0.007) | (0.102) | (0.239) | (0.007) | (0.173) | (0.212) | (0.008) | (0.110) | (0.228) | |

Tables 1 and 2 summarize the simulation results for Models 1–6. Several observations can be made from this table. First, in Models 1–3, as the auto correlation increases, it becomes clearer that our robust estimator with coefficient thresholding (RCT) outperforms both Lasso ,Adaptive Lasso (AdaLasso) and nonconvex penalized estimators (SCAD and MCP). Nonconvex penalized estimators do not work well on all these settings, since they tend to choose too-large penalization, and it results in a much higher false negative rate than other methods. The Lasso estimator misses many true signals due to the high correlated , leading to that adaptive lasso also cannot perform well given the Lasso initials. Especially in Model 3, when the auto correlation is high, our thresholding estimator has much smaller FPR and FNR compared to lasso-type methods. Second, in case (a), where the effects of outliers are stronger than (b) and (c), our estimator achieves a relatively better performance than other two estimators thanks to the use of the pseudo Huber loss. Third, in more challenging Models 4–6, our estimator is able to identify more true predictors and well controls false positives. In summary, the proposed estimator is more favorable in high dimensional regression setting, especially when the predictors are highly correlated, such as AR1(0.7) model.

As we introduced in previous sections, nonconvex penalization such as MCP penalized regression does not require irrepresentable condition. However, in our simulation, we found that it does not work well in our setting. We find the major reason is that it does not work not as well as our estimator when dimension is too large comparable the sample size: , in our case. Here we summarize the regression simulation settings for major nonconvex penalized regression papers. Breheny & Huang (2011) has and for linear regression setting. It has larger dimension of features under logistic regression setting, but nonconvex penalized estimator performs no better than penalized estimator in the aspect of prediction. Zhang (2010) has and , with features being generated independently, in which the irrepresentable condition is not violated. Fan et al. (2014) used a setting of and with AR1(0.5) covariance matrix, which does not violate the irrepresentable condition. Loh & Wainwright (2017) used and various different sample sizes larger than . Wang et al. (2014) used a setting of and . In comparison to these reported simulation studies, our setting of , appears to be more challenging, in which larger variances in the generative model add additional difficulty in the analysis. Therefore MCP/SCAD penalized estimators do not perform well in these settings considered in our simulation studies, which clearly demonstrate the advantage of our proposed method.

4.2 Gaussian Process Regression

We now report simulation results to mimic the scalar-on-image regression. In the simulation, we consider a two-dimensional image structure and the whole region is generated by Gaussian processes. In these models, let and , and consider the following covariance structures:

where and are two points in the rectangle . And the coefficients with nonzero effects are in a circle of the graph, with radius 0.1 for the true parameters. The values of the nonzero effects are generated by a uniform distribution on , similar to the setting given in He et al. (2018). The errors follow the same mixture model, . For Models 7–8, set , and refer to case (a), (b) and (c) with and , respectively. A typical realization of for Model 7 is illustrated in Figure 3(a).

In this section, we also evaluate the soft-threholded Gaussian Process(STGP) estimator(Kang et al., 2018), which is a state-of-art scalar-on-image regression method. STGP assumes a spatial smootheness condition, which is satisfied by Model 7-10. The original STGP estimator requires knowledge of whether any two predictors are adjacent. Such information may be unavailable or cannot be implemented in many cases. For example, when the number of voxels are too large to be fully processed, we may have to preselect a subset of voxels or use certain proper dimension reduction techniques to overcome related computational difficulties. Given that all other methods do not need such adjacency information, to fairly compare STGP with other methods, we consider two STGP estimators in our comparison. That is, STGP represents the original STGP estimator; STGP(no-info) represents STGP estimator without using adjacency information.

| FPR | FNR | loss | FPR | FNR | loss | |

|---|---|---|---|---|---|---|

| Model (7a) | Model (8a) | |||||

| Lasso | 0.002 | 0.814 | 7.083 | 0.001 | 0.784 | 6.071 |

| (0.002) | (0.031) | (1.561) | (0.001) | (0.068) | (1.305) | |

| AdaLasso | 0.002 | 0.820 | 12.527 | 0.001 | 0.784 | 0.196 |

| (0.031) | (0.031) | (0.041) | (0.068) | (0.068) | (0.306) | |

| MCP | 0.007 | 0.918 | 7.526 | 0.007 | 0.918 | 7.532 |

| (0.003) | (0.060) | (0.622) | (0.003) | (0.060) | (0.655) | |

| STGP(no-info) | 0.001 | 0.435 | 2.729 | 0.002 | 0.461 | 2.584 |

| (0.002) | (0.161) | (0.335) | (0.006) | (0.185) | (0.621) | |

| RCT | 0.025 | 0.018 | 2.302 | 0.027 | 0.196 | 3.038 |

| (0.001) | (0.041) | (0.342) | (0.014) | (0.306) | (1.083) | |

| Model (7b) | Model (8b) | |||||

| Lasso | 0.002 | 0.825 | 7.328 | 0.001 | 0.805 | 6.639 |

| (0.002) | (0.051) | (1.719) | (0.001) | (0.053) | (0.429) | |

| AdaLasso | 0.003 | 0.815 | 12.995 | 0.001 | 0.807 | 10.936 |

| (0.003) | (0.095) | (4.783) | (0.001) | (0.062) | (6.530) | |

| MCP | 0.007 | 0.918 | 7.525 | 0.007 | 0.918 | 7.532 |

| (0.003) | (0.060) | (0.619) | (0.007) | (0.060) | (0.654) | |

| STGP(no-info) | 0.001 | 0.460 | 2.904 | 0.001 | 0.485 | 2.600 |

| (0.003) | (0.171) | (0.635) | (0.006) | (0.192) | (0.650) | |

| RCT | 0.030 | 0.053 | 2.520 | 0.030 | 0.172 | 2.949 |

| (0.007) | (0.033) | (0.219) | (0.013) | (0.265) | (0.828) | |

| Model (7c) | Model (8c) | |||||

| Lasso | 0.002 | 0.854 | 7.228 | 0.041 | 0.418 | 4.265 |

| (0.001) | (0.033) | (1.222) | (0.006) | (0.050) | (0.360) | |

| AdaLasso | 0.001 | 0.853 | 10.690 | 0.033 | 0.446 | 4.347 |

| (0.001) | (0.033) | (2.728) | (0.003) | (0.127) | (0.446) | |

| MCP | 0.007 | 0.918 | 7.524 | 0.007 | 0.918 | 7.535 |

| (0.007) | (0.060) | (0.612) | (0.003) | (0.069) | (0.675) | |

| STGP(no-info) | 0.001 | 0.484 | 2.753 | 0.003 | 0.476 | 2.561 |

| (0.003) | (0.210) | (0.610) | (0.008) | (0.226) | (0.577) | |

| RCT | 0.034 | 0.016 | 2.761 | 0.045 | 0.303 | 3.284 |

| (0.008) | (0.105) | (0.298) | (0.016) | (0.320) | (1.215) | |

As shown in Table 3, Lasso, Adaptive Lasso, SCAD and MCP fail to identify most of the true predictors and have a very high FNR, while our proposed estimator is consistently the best among all these models. It also indicates that the thresholding function helps us deal with these very complicated covariance structures of predictors. RCT also outperforms STGP(no-info), and has compatible performance with original STGP estimator, despite our estimator are not restricted with adjacency information.

We consider two more cases when the group lasso penalty is necessary to be applied. In Models 9–10, we partition the whole image space into 25 sub-regions with equal numbers of predictors in each region. The covariance structure inside each region is the same as Models 7–8. Correlations between different regions are 0.9. Then we randomly assign two regions with each non-zero regions of radius 0.13, which makes around 1/3 of the points within the selected region has non-zero effects. We assign all non-zero effects as 2. The covariance structures can be summarized as:

where is the mean for region , and , . For the noise term, we still set and as case (a),(b) and (c), respectively, and . For these two models, we compare performances of the Lasso, the group Lasso (GLasso), the sparse Group Lasso (SGL), STGP and the proposed estimator (RCT) in Table 4. As a key difference from Models and , Models and impose the group structure on both and . Figure 3(b) shows an illustration of the simulated imaging predictor in Models 7 and 9.

| FPR | FNR | R-FPR | R-FNR | loss | FPR | FNR | R-FPR | R-FNR | loss | |

|---|---|---|---|---|---|---|---|---|---|---|

| Model (9a) | Model (10a) | |||||||||

| Lasso | 0.019 | 0.270 | 0.101 | 0 | 25.607 | 0.028 | 0.411 | 0.140 | 0 | 33.091 |

| (0.004) | (0.072) | (0.091) | (0) | (3.235) | (0.006) | (0.100) | (0.083) | (0) | (3.380) | |

| GLasso | 0.220 | 0.378 | 0.232 | 0 | 16.101 | 0.215 | 0.403 | 0.232 | 0 | 16.128 |

| (0.055) | (0.151) | (0.094) | (0) | (0.626) | (0.054) | (0.141) | (0.094) | (0) | (0.621) | |

| SGL | 0.115 | 0.010 | 0.135 | 0 | 12.507 | 0.126 | 0.012 | 0.126 | 0 | 13.366 |

| (0.035) | (0.022) | (0.060) | (0) | (0.198) | (0.037) | (0.030) | (0.063) | (0) | (0.523) | |

| STGP | 0.063 | 0 | 0.109 | 0 | 12.540 | 0.061 | 0 | 0.110 | 0 | 12.642 |

| (0.010) | (0) | (0.060) | (0) | (0.281) | (0.007) | (0) | (0.060) | (0) | (0.354) | |

| RCT | 0.059 | 0 | 0.087 | 0 | 13.114 | 0.064 | 0 | 0.111 | 0 | 13.505 |

| (0.003) | (0) | (0.087) | (0) | (0.198) | (0.007) | (0) | (0.091) | (0) | (0.247) | |

| Model (9b) | Model (10b) | |||||||||

| Lasso | 0.019 | 0.347 | 0.166 | 0 | 27.557 | 0.028 | 0.415 | 0.145 | 0 | 32.957 |

| (0.004) | (0.078) | (0.088) | (0) | (3.128) | (0.006) | (0) | (0.096) | (0) | (0.273) | |

| GLasso | 0.223 | 0.372 | 0.232 | 0 | 16.084 | 0.214 | 0.405 | 0.232 | 0 | 16.119 |

| (0.053) | (0.143) | (0.094) | (0) | (0.618) | (0.056) | (0.140) | (0.094) | (0) | (0.619) | |

| SGL | 0.130 | 0.012 | 0.170 | 0 | 12.656 | 0.128 | 0.020 | 0.165 | 0 | 13.353 |

| (0.032) | (0.026) | (0.060) | (0) | (0.603) | (0.037) | (0.046) | (0.091) | (0) | (0.615) | |

| STGP | 0.061 | 0 | 0.114 | 0 | 12.520 | 0.062 | 0 | 0.113 | 0 | 12.536 |

| (0.007) | (0) | (0.062) | (0) | (0.378) | (0.007) | (0) | (0.039) | (0) | (0.173) | |

| RCT | 0.066 | 0 | 0.161 | 0 | 13.269 | 0.065 | 0 | 0.120 | 0 | 13.670 |

| (0.006) | (0) | (0.100) | (0) | (0.281) | (0.006) | (0) | (0.096) | (0) | (0.273) | |

| Model (9c) | Model (10c) | |||||||||

| Lasso | 0.019 | 0.394 | 0.180 | 0 | 27.949 | 0.027 | 0.427 | 0.151 | 0 | 32.553 |

| (0.004) | (0.075) | (0.102) | (0) | (3.328) | (0.005) | (0.098) | (0.098) | (0) | (3.517) | |

| GLasso | 0.223 | 0.354 | 0.232 | 0 | 16.064 | 0.211 | 0.407 | 0.232 | 0 | 16.114 |

| (0.058) | (0.143) | (0.094) | (0) | (0.619) | (0.054) | (0.137) | (0.094) | (0) | (0.634) | |

| SGL | 0.128 | 0.013 | 0.165 | 0 | 12.869 | 0.135 | 0.016 | 0.213 | 0 | 13.566 |

| (0.033) | (0.020) | (0.123) | (0) | (0.700) | (0.040) | (0.027) | (0.170) | (0) | (0.848) | |

| STGP | 0.059 | 0 | 0.098 | 0 | 12.565 | 0.126 | 0.012 | 0.126 | 0 | 13.366 |

| (0.006) | (0) | (0.064) | (0) | (0.362) | (0.037) | (0.030) | (0.063) | (0) | (0.523) | |

| RCT | 0.067 | 0 | 0.157 | 0 | 13.581 | 0.059 | 0 | 0.088 | 0 | 12.655 |

| (0.006) | (0) | (0.092) | (0) | (0.260) | (0.007) | (0) | (0.066) | (0) | (0.324) | |

In Table 4, we include the region level false positive rate (Region FPR) and region level false negative rate (region FNR) to measure the variable selection accuracy within each region. They are computed based on whether there is at least one variable in the region is selected. Compared with the group penalty, the proposed method identified almost all the correct groups with zero false negatives and achieved a more satisfying FPR and the loss. The proposed method is less likely to over-select either predictors or regions than the sparse group Lasso. It implies that the thresholding function effectively achieved a lower FPR. Again, RCT has compatible performance with original STGP estimator, despite our estimator are not restricted with adjacency information.

5 Application to Scalar-on-Image Analysis

This section applies the proposed method to analyze the 2-back versus 0-back contrast maps derived from the -back task fMRI imaging data in the Adolescent Brain Cognitive Development (ABCD) study (Casey et al., 2018). Our goal is to identify the important imaging features from the contrast maps that are strongly associated with the risk of psychairtric disorder, measured by the general factor of psychopathology (GFP) or “p-factor”. After the standard fMRI prepossessing steps, all the images are registered into the 2 mm standard Montreal Neurological Institute (MNI) space consisting of 160,990 voxels in the 90 Automated Anatomical Labeling (AAL) brain regions. With the missing values being removed, the data used in our analysis consists of 2,070 subjects. To reduce the dimension of the imaging data, we partition 90 AAL regions into 2,518 sub-regions with each region consisting of an average of 64 voxels. We refer to each subregion as a super-voxel. For each subject, we compute the average intensity values of the voxels within each super-voxel as its intensity. We consider those 2,518 super-voxel-wise intensity values as the potential imaging predictors.

There are several challenging issues in the scalar-on-image regression analysis of this dataset. First, the correlations between super-voxels across the 90 AAL regions can be very high and the correlation patterns are complex. In fact, there are 151,724 voxel pairs across these regions having a correlation larger than 0.8 (or less than ), and 9,038 voxel pairs with a correlation larger than 0.9 (or less than ). Figure 1 visualizes the region-wise correlation structures, where panel (a) shows the highest correlations between regions; and panel (b) counts the voxel pairs that have a correlation higher than 0.8 (or less than ) in each corresponding region pair. Given the imaging predictors having such high and complicated covariance structures, the classical lasso or the group lasso method may fail to perform variable selection satisfactorily. In contrast, the proposed model with coefficient thresholding is developed to resolve this issue since it does not require the strong conditions on the design matrix. Second, the AAL brain atlas provides useful information on the brain structure and function that may be related to the risk of psychiatry disorder. It is of interest to integrate the AAL region partition as grouping information of imaging predictors to improve the accuracy of imaging feature selection. Third, the outcome variable “p-factor” has a right skewed marginal distribution (see Figure 4), thus its measurements are more likely to contain outliers. The existing non-robust scalar-on-image regression methods may produce inaccurate results. All the aforementioned challenging issues motivate the needs of developing our robust regression with coefficient thresholding and group penalty.

In our analysis, we adjust confounding effects by including a few predictors in the model: family size, gender, race, highest parents education, household marital status and household income level. Given the intrinsic group structure, we compare the performance of the proposed method and the STGP in this data analysis. As we mentioned, the fMRI data analysis generally suffers from the reliability issue due to its complex data structure and low signal-to-noise ratio (Bennett & Miller, 2010; Brown & Behrmann, 2017; Eklund et al., 2012, 2016). To evaluate the variable selection stability for both methods, we consider a bootstrap approach with 100 replications. In each replication, we sample observations with replacement, and fit the bootstrap samples using the best set of tuning parameters chosen by a five-fold cross-validation. Then we obtain the frequency of each super-voxel being selected over 100 replications as a measure of the selection stability, which can be used to fairly compare the regions that can be consistently selected against randomness, and thus ensure the reliability of the scientific findings in our analysis.

RCT and STGP respectively select 124.5 and 245.3 super-voxels per replication on average. Figure 5 displays the bootstrap selection results, where the -axis represents the maximum selection frequency of super-voxels in each region. The circle size is proportional to the number of super-voxels with the corresponding selection frequency being larger than 0.6. The color represents the proportion of super-voxels being selected in each region. Despite a smaller number of super-voxels being selected in each bootstrap run, RCT consistently selects super-voxels in several important brain regions over bootstrap samples, while STGP identifies a less number of brain regions that contain selected super-voxels.

| Selected frequency | RCT | STGP |

|---|---|---|

| 0.6-0.7 | Callcarine_L, Occipital_Mid_L, | Frontal_Sup_Midial_R, Temporal_Mid_L |

| Parietal_Inf_L | ||

| 0.7-0.8 | Temporal_Mid_L | SupraMarginal_R |

| 0.8-0.9 | Frontal_Mid_Orb_L, Precuneus_L | N/A |

| >0.9 | Front_Sup_L | N/A |

Table 5 summarizes the comparisons of selected regions from RCT and STGP by varying different thresholds of selection frequency from 0.6 to 0.9. Compared with STGP, RCT selects more stable regions for each level of selection frequency, indicating that our method produces more reliable selection results. In particular, containing at least one super-voxels with more than 60% selection frequency, seven and three regions are respectively identified by RCT and STGP. Among those regions, only one common region, i.e. the left temporal gyrus (Temporal_Mid_L), is detected by both methods, where RCT has a higher selection frequency (0.79) than STGP (0.69). The existing functional neuroimaging studies have indicated that the middle temporal gyrus is involved in language and semantic memory processing (Cabeza & Nyberg, 2000), and it is also related to mental diseases such as chronic schizophrenia (Onitsuka et al., 2004).

Among other selected regions, with more than 90% selection frequency, RCT consistently selects super-voxels in the left superior frontal gyrus (Frontal_Sup_L), while the selection frequency by STGP is below 60%. Superior frontal gyrus is known to be strongly related with working memory (Boisgueheneuc et al., 2006) which plays a critical role in attending to and analyzing incoming information. Deficits in working memory are associated with many cognitive and mental health challenges, such as anxiety and stress (Lukasik et al., 2019), which can be captured by the “p-factor”. The strong relationship between p-factor and working memory has been discovered by exisitng studies (Huang-Pollock et al., 2017).

In addition, RCT also identifies five more regions than STGP: precuneus, the left middle frontal gyrus (Frontal_Mid_Orb_L), the left alcarine fissure and surrounding cortex (Calcarine_L), the middle occipital gyrus (Occipital_Mid_L) and the left inferior parietal gyri (Parietal_Inf_L). Percuneus is well studied as a core of mind (Cavanna & Trimble, 2006), and it is highly related to posttraumatic stress disorder(PTSD) and other mental health issue (Geuze et al., 2007). Middle frontal gyrus is part of limbic system and known to be highly related to emotion (Sprooten et al., 2017). Inferior parietal lobule has been involved in the perception of emotions in facial stimuli, and interpretation of sensory information (Radua et al., 2010). Calcarine fissure is related to vision. Middle occipital gyrus is primarily responsible for object recognition. It would be interesting to further investigate how the brain activity in these regions influence on the p-factor.

To further demonstrate the proposed method providing more reliable scientific findings in comparison to STGP, we evaluate the prediction performance of the two methods by using the train-test data cross-validation. We randomly split the data into two parts with as the training data for model fitting and as the test data for computing the prediction error. We repeat this procedure for 50 times. The mean absolute prediction error of RCT is 0.464 with standard error 0.004; STGP has a mean absolute prediction error of 0.480 with standard error 0.038. We conclude that our proposed method improves the prediction performance of the p-factor using working memory contrast maps in the ABCD study, comparing with the state-of-the-art scalar-on-image regression method.

6 Conclusion

In this paper, we propose a novel high-dimensional robust regression with coefficient thresholding in the presence of complex dependencies among predictors and potential outliers. The proposed method uses the power of thresholding functions and the robust Huber loss to build an efficient nonconvex estimation procedure. We carefully analyze the landscape of the nonconvex loss function for the proposed method, which enables us to establish both statistical and computational consistency. We demonstrate the effectiveness and usefulness of the proposed method in simulation studies and a real application to imaging data analysis. In the future, it is interesting to investigate how to incorporate the spatial-temporal information of the imaging data into our proposed method. It is also important to study the statistical consistency of the near-stationary solution from the proposed gradient descent based algorithm under more general conditions.

References

- (1)

- Barron et al. (1999) Barron, A., Birgé, L. & Massart, P. (1999), ‘Risk bounds for model selection via penalization’, Probability Theory and Related Fields 113(3), 301–413.

- Bennett & Miller (2010) Bennett, C. M. & Miller, M. B. (2010), ‘How reliable are the results from functional magnetic resonance imaging?’, Annals of the New York Academy of Sciences 1191(1), 133–155.

- Boisgueheneuc et al. (2006) Boisgueheneuc, F. d., Levy, R., Volle, E., Seassau, M., Duffau, H., Kinkingnehun, S., Samson, Y., Zhang, S. & Dubois, B. (2006), ‘Functions of the left superior frontal gyrus in humans: a lesion study’, Brain 129(12), 3315–3328.

- Breheny & Huang (2011) Breheny, P. & Huang, J. (2011), ‘Coordinate descent algorithms for nonconvex penalized regression, with applications to biological feature selection’, The annals of applied statistics 5(1), 232.

- Brown & Behrmann (2017) Brown, E. N. & Behrmann, M. (2017), ‘Controversy in statistical analysis of functional magnetic resonance imaging data’, Proceedings of the National Academy of Sciences 114(17), E3368–E3369.

- Cabeza & Nyberg (2000) Cabeza, R. & Nyberg, L. (2000), ‘Imaging cognition ii: An empirical review of 275 pet and fmri studies’, Journal of cognitive neuroscience 12(1), 1–47.

- Cai et al. (2019) Cai, Q., Kang, J. & Yu, T. (2019), ‘Bayesian network marker selection via the thresholded graph laplacian gaussian prior’, Bayesian Analysis In Press.

- Cai et al. (2016) Cai, T. T., Li, X. & Ma, Z. (2016), ‘Optimal rates of convergence for noisy sparse phase retrieval via thresholded wirtinger flow’, The Annals of Statistics 44(5), 2221–2251.

- Candes et al. (2015) Candes, E. J., Li, X. & Soltanolkotabi, M. (2015), ‘Phase retrieval via wirtinger flow: Theory and algorithms’, IEEE Transactions on Information Theory 61(4), 1985–2007.

- Casey et al. (2018) Casey, B., Cannonier, T., Conley, M. I., Cohen, A. O., Barch, D. M., Heitzeg, M. M., Soules, M. E., Teslovich, T., Dellarco, D. V., Garavan, H. et al. (2018), ‘The adolescent brain cognitive development (abcd) study: imaging acquisition across 21 sites’, Developmental cognitive neuroscience 32, 43–54.

- Cavanna & Trimble (2006) Cavanna, A. E. & Trimble, M. R. (2006), ‘The precuneus: a review of its functional anatomy and behavioural correlates’, Brain 129(3), 564–583.

- Charbonnier et al. (1997) Charbonnier, P., Blanc-Féraud, L., Aubert, G. & Barlaud, M. (1997), ‘Deterministic edge-preserving regularization in computed imaging’, IEEE Transactions on Image Processing 6(2), 298–311.

- Eklund et al. (2012) Eklund, A., Andersson, M., Josephson, C., Johannesson, M. & Knutsson, H. (2012), ‘Does parametric fmri analysis with spm yield valid results? -— an empirical study of 1484 rest datasets’, NeuroImage 61(3), 565–578.

- Eklund et al. (2016) Eklund, A., Nichols, T. E. & Knutsson, H. (2016), ‘Cluster failure: Why fmri inferences for spatial extent have inflated false-positive rates’, Proceedings of the national academy of sciences 113(28), 7900–7905.

- El Karoui et al. (2013) El Karoui, N., Bean, D., Bickel, P. J., Lim, C. & Yu, B. (2013), ‘On robust regression with high-dimensional predictors’, Proceedings of the National Academy of Sciences 110(36), 14557–14562.

- Fan & Li (2001) Fan, J. & Li, R. (2001), ‘Variable selection via nonconcave penalized likelihood and its oracle properties’, Journal of the American Statistical Association 96(456), 1348–1360.

- Fan et al. (2018) Fan, J., Liu, H., Sun, Q. & Zhang, T. (2018), ‘I-lamm for sparse learning: Simultaneous control of algorithmic complexity and statistical error’, The Annals of Statistics 46(2), 814–841.

- Fan et al. (2014) Fan, J., Xue, L. & Zou, H. (2014), ‘Strong oracle optimality of folded concave penalized estimation’, The Annals of Statistics 42(3), 819–849.

- Fan & Lv (2013) Fan, Y. & Lv, J. (2013), ‘Asymptotic equivalence of regularization methods in thresholded parameter space’, Journal of the American Statistical Association 108(503), 1044–1061.

- Geuze et al. (2007) Geuze, E., Vermetten, E., de Kloet, C. S. & Westenberg, H. G. (2007), ‘Precuneal activity during encoding in veterans with posttraumatic stress disorder’, Progress in brain research 167, 293–297.

- Goldsmith et al. (2014) Goldsmith, J., Huang, L. & Crainiceanu, C. M. (2014), ‘Smooth scalar-on-image regression via spatial bayesian variable selection’, Journal of Computational and Graphical Statistics 23(1), 46–64.

- He et al. (2018) He, K., Xu, H. & Kang, J. (2018), ‘A selective overview of feature screening methods with applications to neuroimaging data’, Wiley Interdisciplinary Reviews: Computational Statistics p. e1454.

- Huang-Pollock et al. (2017) Huang-Pollock, C., Shapiro, Z., Galloway-Long, H. & Weigard, A. (2017), ‘Is poor working memory a transdiagnostic risk factor for psychopathology?’, Journal of abnormal child psychology 45(8), 1477–1490.

- Huber (1964) Huber, P. J. (1964), ‘Robust estimation of a location parameter’, The Annals of Mathematical Statistics 53, 73–101.

- Kang et al. (2018) Kang, J., Reich, B. J. & Staicu, A.-M. (2018), ‘Scalar-on-image regression via the soft-thresholded gaussian process’, Biometrika 105(1), 165–184.

- Li et al. (2015) Li, F., Zhang, T., Wang, Q., Gonzalez, M. Z., Maresh, E. L. & Coan, J. A. (2015), ‘Spatial bayesian variable selection and grouping for high-dimensional scalar-on-image regression’, The Annals of Applied Statistics 9(2), 687–713.

- Lindquist et al. (2008) Lindquist, M. A. et al. (2008), ‘The statistical analysis of fmri data’, Statistical science 23(4), 439–464.

- Loh (2017) Loh, P.-L. (2017), ‘Statistical consistency and asymptotic normality for high-dimensional robust -estimators’, The Annals of Statistics 45(2), 866–896.

- Loh (2018) Loh, P.-L. (2018), ‘Scale calibration for high-dimensional robust regression’, arXiv preprint arXiv:1811.02096 .

- Loh & Wainwright (2013) Loh, P.-L. & Wainwright, M. J. (2013), Regularized m-estimators with nonconvexity: Statistical and algorithmic theory for local optima, in ‘Advances in Neural Information Processing Systems’, pp. 476–484.

- Loh & Wainwright (2017) Loh, P.-L. & Wainwright, M. J. (2017), ‘Support recovery without incoherence: A case for nonconvex regularization’, The Annals of Statistics 45(6), 2455–2482.

- Lukasik et al. (2019) Lukasik, K. M., Waris, O., Soveri, A., Lehtonen, M. & Laine, M. (2019), ‘The relationship of anxiety and stress with working memory performance in a large non-depressed sample’, Frontiers in psychology 10, 4.

- Mei et al. (2018) Mei, S., Bai, Y. & Montanari, A. (2018), ‘The landscape of empirical risk for nonconvex losses’, The Annals of Statistics 46(6A), 2747–2774.

- Nakajima & West (2013a) Nakajima, J. & West, M. (2013a), ‘Bayesian analysis of latent threshold dynamic models’, Journal of Business & Economic Statistics 31(2), 151–164.

- Nakajima & West (2013b) Nakajima, J. & West, M. (2013b), ‘Bayesian dynamic factor models: Latent threshold approach’, Journal of Financial Econometrics 11, 116–153.

- Nakajima & West (2017) Nakajima, J. & West, M. (2017), ‘Dynamics & sparsity in latent threshold factor models: A study in multivariate eeg signal processing’, Brazilian Journal of Probability and Statistics 31(4), 701–731.

- Negahban et al. (2012) Negahban, S. N., Ravikumar, P., Wainwright, M. J., Yu, B. et al. (2012), ‘A unified framework for high-dimensional analysis of -estimators with decomposable regularizers’, Statistical Science 27(4), 538–557.

- Negahban & Wainwright (2012) Negahban, S. & Wainwright, M. J. (2012), ‘Restricted strong convexity and weighted matrix completion: Optimal bounds with noise’, Journal of Machine Learning Research 13(May), 1665–1697.

- Nesterov (2013) Nesterov, Y. (2013), ‘Gradient methods for minimizing composite functions’, Mathematical Programming 140(1), 125–161.

- Ni et al. (2019) Ni, Y., Stingo, F. C. & Baladandayuthapani, V. (2019), ‘Bayesian graphical regression’, Journal of the American Statistical Association 114, 184–197.

- Onitsuka et al. (2004) Onitsuka, T., Shenton, M. E., Salisbury, D. F., Dickey, C. C., Kasai, K., Toner, S. K., Frumin, M., Kikinis, R., Jolesz, F. A. & McCarley, R. W. (2004), ‘Middle and inferior temporal gyrus gray matter volume abnormalities in chronic schizophrenia: an mri study’, American Journal of Psychiatry 161(9), 1603–1611.

- Poldrack (2012) Poldrack, R. A. (2012), ‘The future of fmri in cognitive neuroscience’, Neuroimage 62(2), 1216–1220.

- Radua et al. (2010) Radua, J., Phillips, M. L., Russell, T., Lawrence, N., Marshall, N., Kalidindi, S., El-Hage, W., McDonald, C., Giampietro, V., Brammer, M. J. et al. (2010), ‘Neural response to specific components of fearful faces in healthy and schizophrenic adults’, Neuroimage 49(1), 939–946.

- Rockafellar (2015) Rockafellar, R. T. (2015), Convex Analysis, Princeton University Press.

- Rockafellar & Wets (2009) Rockafellar, R. T. & Wets, R. J.-B. (2009), Variational analysis, Vol. 317, Springer Science & Business Media.

- Rosenberg et al. (2016) Rosenberg, M. D., Finn, E. S., Scheinost, D., Papademetris, X., Shen, X., Constable, R. T. & Chun, M. M. (2016), ‘A neuromarker of sustained attention from whole-brain functional connectivity’, Nature Neuroscience 19(1), 165.

- Simon et al. (2013) Simon, N., Friedman, J., Hastie, T. & Tibshirani, R. (2013), ‘A sparse-group lasso’, Journal of Computational and Graphical Statistics 22(2), 231–245.

- Sprooten et al. (2017) Sprooten, E., Rasgon, A., Goodman, M., Carlin, A., Leibu, E., Lee, W. H. & Frangou, S. (2017), ‘Addressing reverse inference in psychiatric neuroimaging: Meta-analyses of task-related brain activation in common mental disorders’, Human brain mapping 38(4), 1846–1864.

- Sun et al. (2019) Sun, Q., Jiang, B., Zhu, H. & Ibrahim, J. G. (2019), ‘Hard thresholding regression’, Scandinavian Journal of Statistics 46(1), 314–328.

- Tibshirani (1996) Tibshirani, R. (1996), ‘Regression shrinkage and selection via the lasso’, Journal of the Royal Statistical Society: Series B (Methodological) 58(1), 267–288.

- Wainwright (2009) Wainwright, M. J. (2009), ‘Sharp thresholds for high-dimensional and noisy sparsity recovery using {}-constrained quadratic programming (lasso)’, IEEE Transactions on Information Theory 55(5), 2183–2202.

- Wang et al. (2017) Wang, X., Zhu, H. & Initiative, A. D. N. (2017), ‘Generalized scalar-on-image regression models via total variation’, Journal of the American Statistical Association 112(519), 1156–1168.

- Wang et al. (2014) Wang, Z., Liu, H. & Zhang, T. (2014), ‘Optimal computational and statistical rates of convergence for sparse nonconvex learning problems’, Annals of statistics 42(6), 2164.

- Yang (2015) Yang, Y. (2015), ‘A unified algorithm for fitting penalized models with high dimensional data’.

- Yuan & Lin (2006) Yuan, M. & Lin, Y. (2006), ‘Model selection and estimation in regression with grouped variables’, Journal of the Royal Statistical Society: Series B (Statistical Methodology) 68(1), 49–67.

- Zhang (2010) Zhang, C.-H. (2010), ‘Nearly unbiased variable selection under minimax concave penalty’, The Annals of Statistics 38(2), 894–942.

- Zhang & Zhang (2012) Zhang, C.-H. & Zhang, T. (2012), ‘A general theory of concave regularization for high-dimensional sparse estimation problems’, Statistical Science 27(4), 576–593.

- Zhao & Yu (2006) Zhao, P. & Yu, B. (2006), ‘On model selection consistency of lasso’, Journal of Machine Learning Research 7(Nov), 2541–2563.

- Zou (2006) Zou, H. (2006), ‘The adaptive lasso and its oracle properties’, Journal of the American Statistical Association 101(476), 1418–1429.

- Zou & Li (2008) Zou, H. & Li, R. (2008), ‘One-step sparse estimates in nonconcave penalized likelihood models’, The Annals of Statistics 36(4), 1509–1533.