Robust Fitting of Mixture Models using Weighted Complete Estimating Equations

Shonosuke Sugasawa1 and Genya Kobayashi2

1Center for Spatial Information Science, The University of Tokyo

2Graduate School of Social Science, Chiba University

Abstract

Mixture modeling, which considers the potential heterogeneity in data, is widely adopted for classification and clustering problems. Mixture models can be estimated using the Expectation-Maximization algorithm, which works with the complete estimating equations conditioned by the latent membership variables of the cluster assignment based on the hierarchical expression of mixture models. However, when the mixture components have light tails such as a normal distribution, the mixture model can be sensitive to outliers. This study proposes a method of weighted complete estimating equations (WCE) for the robust fitting of mixture models. Our WCE introduces weights to complete estimating equations such that the weights can automatically downweight the outliers. The weights are constructed similarly to the density power divergence for mixture models, but in our WCE, they depend only on the component distributions and not on the whole mixture. A novel expectation-estimating-equation (EEE) algorithm is also developed to solve the WCE. For illustrative purposes, a multivariate Gaussian mixture, a mixture of experts, and a multivariate skew normal mixture are considered, and how our EEE algorithm can be implemented for these specific models is described. The numerical performance of the proposed robust estimation method was examined using simulated and real datasets.

Key words: Clustering; Divergence; EEE algorithm; Mixture of experts; Skew normal mixture

Introduction

Mixture modeling (McLachlan and Peel, 2000) is very popular for distribution estimation, regression, and model-based clustering by taking account of potential subgroup structures of data. Typically, such mixture models are fitted by the maximum likelihood method using the well-known Expectation-Maximization (EM) algorithm. However, data often contain outliers and the maximum likelihood method can be highly affected by them. The presence of outliers would result in the biased and inefficient statistical inference of the parameters of interest and would thus make recovering the underlying clustering structure of the data very difficult. A typical remedy for this problem is to replace the normal component distributions with heavy-tailed components. For example, Bagnato et al. (2017), Forbes and Wraith (2014), Peel and McLachlan (2004), Punzo and Tortora (2021), Sun et al. (2010), and Zhang and Liang (2010) used symmetric component distributions, Basso et al. (2010), Lee and McLachlan (2014), Lin (2010), Morris et al. (2019), and Wang et al. (2009) used skewed distributions, and Galimberti and Soffritti (2014), Ingrassia et al. (2014), Mazza and Punzo (2020), Punzo et al. (2017), Song et al. (2014), Yao et al. (2014), and Zarei et al. (2019) considered robustifying the mixture of regressions. However, this approach cannot distinguish non-outliers from outliers straightforwardly because it fits a mixture model to all the observations, including outliers. Apart from using heavy-tailed distributions, a class of generalized likelihood is an effective alternative tool for the robust fitting of statistical models. In the context of mixture modeling, Fujisawa and Eguchi (2006) employed density power divergence (Basu et al., 1998) for robust estimation of Gaussian mixture models. However, a direct application of the divergence may suffer from computational problems because the objective functions may contain intractable integrals. For example, the objective function under the density power divergence includes the integral , where is the density function with the parameter and is the tuning parameter. When is the density function of a mixture model, the integral becomes intractable. Therefore, these approaches are not necessarily appealing in practice, despite their desirable robustness properties.

In this paper, we develop a new approach to robust mixture modeling by newly introducing the idea of the weighted complete estimating equations (WCE). Complete estimating equations appear in the EM algorithm and are conditioned on the latent membership variables of the cluster assignment. Our WCE introduces weights to the complete estimating equations such that the contribution of outliers to the complete estimating equations is automatically downweighted. The weight is defined based on the assumed component distributions. By conditioning the membership variables in WCE, only the weighted estimating equations for each component distribution must be considered separately instead of directly using the weighted estimating equations for a mixture model. Since the derived WCE depends on the unknown latent membership variables, these latent variables are augmented via their posterior expectations to solve the WCE, which provides an expectation-estimating-equation (EEE) algorithm (Elashoff and Ryan, 2004). The proposed EEE algorithm is general and can be applied to various mixture models. The proposed WCE method is then applied to three types of mixture models: a multivariate Gaussian mixture, a mixture of experts (Jacobs et al., 1991), and a multivariate skew-normal mixture (Lin et al., 2007; Lin, 2009). For the multivariate Gaussian mixture, the updating steps of the proposed EEE algorithm are obtained in closed form without requiring either numerical integration or optimization steps. A similar algorithm can be derived for a mixture of experts when the component distributions are Gaussian. Moreover, for the multivariate skew normal mixture, by introducing additional latent variables based on the stochastic representation of the skew normal distribution, a novel EEE algorithm can be obtained as a slight extension of the proposed general EEE algorithm in which all the updating steps proceed analytically.

The proposed framework of the weighted complete estimating equations unifies several existing approaches regarding the choice of the weight function, such as hard trimming (e.g. Garcia-Escudero et al., 2008), soft trimming (e.g. Campbell, 1984; Farcomeni and Greco, 2015; Greco and Agostinelli, 2020) and their hybrid (e.g. Farcomeni and Punzo, 2020). Among several designs for the weight function, this study adopts the density weight, which leads to theoretically valid and computationally tractable robust estimation procedures not only for the Gaussian mixture but also for a variety of mixture models.

As a related work, Greco and Agostinelli (2020) employed a similar idea using the weighted likelihood for the robust fitting of the multivariate Gaussian mixture. They constructed weights by first obtaining a kernel density estimate for the Mahalanobis distance concerning the component parameters and then computing the Pearson residuals. Since their method requires knowledge of the distribution of the quadratic form of the residuals, their approach cannot be directly extended to other mixture models. On the other hand, the proposed method can be applied to various mixture models, as in the proposed weight design, and the weights are automatically determined by the component-wise density. It should be noted that introducing the density weight in estimating equations is closely related to the density power divergence (Basu et al., 1998) in which the density power divergence induces the density-weighted likelihood equations. However, the novelty of the proposed approach is that the density weight is considered within the framework of complete estimating equations rather than likelihood equations, which leads to tractable robust estimation algorithms for a variety of mixture models.

The remainder of this paper is organized as follows. In Section 2, we first describe the proposed WCE method for a general mixture model and derive a general EEE algorithm for solving the WCE. Then, the general algorithm is applied to the specific mixture models, a multivariate Gaussian mixture (Section 3), a mixture of experts (Section 4), and a multivariate skew normal mixture (Section 5). In Section 6, the proposed method is demonstrated for a multivariate Gaussian mixture and a skew normal mixture through the simulation studies. Section 7 illustrates practical advantages of the proposed method using real data. Finally, conclusions and discussions are presented in Section 8. The R code for implementing the proposed method is available at the GitHub repository (https://github.com/sshonosuke/RobMixture).

Weighted Estimating Equations for Mixture Modeling

Weighted complete estimating equations

Let be the random variables on . We consider the following mixture model with probability density function :

| (1) |

where is the set of model parameters in the th component, is the vector of grouping or prior membership probabilities, and is the collection of all model parameters. To fit model (1), we introduce the latent membership variable defined as for ; therefore, the conditional distribution of given is . For notional simplicity, we define , which is the indicator function for the condition . The complete estimating equations for given are given as follows:

for .

To robustify the estimating equations against outliers, we introduce the component-specific weight for and which controls the contribution from the th observation. We then propose the following weighted complete estimating equations (WCE):

| (2) |

for , where is the weight function which may depend on the tuning parameter and

Note that and are necessary to make WCE (2) unbiased. That is, the expectations of the estimating equations in (2) with respect to the joint distribution of and are zero; otherwise, asymptotic properties such as consistency and asymptotic normality of the resulting estimator may not be guaranteed. In the unbiased WCE, we consider the specific form of the weight function based on the component density given by

for . The weight is small if is an outlier, that is, is located far in the tail of the distribution of the th component . The weighted estimating equations are reduced to the original complete estimating equation when . It should be noted that the proposed weight design is not a direct application of the density power divergence of Basu et al. (1998) to the mixture model (1), which would have used the whole mixture density (1) as the weight in the estimating equations.

EEE algorithm

Since the proposed WCE (2) includes the latent variable , WCE should be imputed with the conditional expectation of given . Starting from some initial values, we propose an EEE algorithm that updates the estimates in the th iteration as follows:

-

-

E-step: Compute the posterior probability:

(3) -

-

EE-step: Update the membership probabilities ’s as

(4) and the component-specific parameters by solving the estimating equations:

where .

Note that the updating process (4) can be obtained by solving the imputed version of the second equation in (2). To apply the general algorithm above to a particular mixture model such as a multivariate Gaussian mixture, the only requirement is to calculate the bias correction terms and . As shown in Section 3, the bias correction terms are quite simple under a Gaussian distribution. Moreover, the algorithm can be easily modified to a case in which the distribution of each component admits a hierarchical or stochastic representation. For instance, the multivariate skew normal distribution has a hierarchical representation based on the multivariate normal distribution, which allows us to derive tractable weighted complete estimating equations to carry out the proposed robust EEE algorithm, as demonstrated in Section 5.

Regarding the setting of the starting values, we can use the estimation results of some existing robust methods. The detailed initialization strategy for each mixture model is discussed in Sections 3-5. We also note that the algorithm may converge to a not necessarily suitable solution. To avoid this problem, initial points are randomly prepared to produce solutions obtained from the EEE algorithm. Among the solutions, the best solution is chosen such that it minimizes the trimmed BIC criterion given in Section 2.4. In our implementation, we simply set .

The augmented function used in the EE-step can be regarded as a bivariate with the constraint , where is a collection of the augmented estimating equations given by

for and . The EEE algorithm updates the estimate of by solving in the th iteration. It is assumed that the component distribution is continuous and differentiable with respect to . Since the density weight inherits these properties, bivariate function is continuous with respect to and . Hence, if the sequence of estimators from the EEE algorithm converges, it will converge to the solution of the augmented equation . From Lemma 1 in Tang and Qu (2016), the sequence of estimators that solve converges to the solution of in the neighborhood of if , where and .

Classification and outlier detection

The observations are classified into clusters using the posterior probability (3). We adopt the standard method of classifying an observation into a cluster with the maximum posterior probability. It should be noted that the classification is applied to all observations, both non-outliers and outliers.

Given the cluster assignment, outlier detection is performed based on a fitted robust model. Although distance-based outlier detection rules (e.g. Cerioli and Farcomeni, 2011; Greco and Agostinelli, 2020) can be adopted under Gaussian mixture models, such approaches are not necessarily applicable to other general mixture models that we are concerned with. Here, we propose an alternative outlier detection method that uses the component density (or probability mass) function . Suppose the th observation is classified into the th cluster. We define as the value of the density function of under the th cluster. Our strategy computes the probability where is a random variable with a density . Under a general , this probability is not analytically available, but we can approximately compute using the Monte Carlo approximation given by

where is the number of Monte Carlo samples and is the th Monte Carlo sample generated from the distribution . Note that a smaller value of means that the th observation is more likely to be an outlier. Then, our outlier detection strategy declares the th observation as an outlier when for some fixed . Note that this approach may result in both type I error (a non-outlier wrongly flagged as an outlier) and type II error (a genuine outlier is not flagged), and larger leads to the larger likelihood of the type I error. We here consider following the popular choices of the thresholding probability values in the distance-based outlier detection.

Selection of the tuning parameters

In practice, the number of components is unknown and must be chosen reasonably. When the data contains outliers, they should not affect selecting ; otherwise the selected can be different from the true . Here, we utilize the results of the outlier detection discussed in the previous section. Let be the index set of observations flagged as non-outliers under thresholding level . We then propose the trimmed BIC criterion as follows:

| (5) |

where and are the cardinalities of and , respectively. If (no outliers are detected), then the criterion is reduced to the standard BIC criterion. The optimal value is selected as the minimizer of this criterion.

Regarding the choice of , we first note that the proposed EEE algorithm reduces to the standard EM algorithm when . With a larger , the observations with the small component densities are more strongly downweighted by the density weights . Thus, the proposed EEE algorithm becomes more robust against outliers. However, when there are no outliers, using a large value of may result in a loss of efficiency by unnecessarily downweighting non-outliers. Specifically, the use of greater than may lead to considerable efficiency loss and numerical instability, where this phenomenon is partly demonstrated under the simple models in Basu et al. (1998). Hence, we suggest using a small positive value for . Specifically, we recommend using or as in our numerical studies in Sections 6 and 7. Another practical idea is to monitor the trimmed BIC (5) for several values of and as demonstrated in Section 7.2.

Asymptotic variance-covariance matrix

Here, the asymptotic variance-covariance matrix of the estimator is considered. Let denote the complete estimating functions for the th observation given in (2) and let denote the estimating equations in which the unobserved (or ) is imputed with its conditional expectation. Furthermore, let denote the estimator which is the solution of . Note that the augmented estimating equations are unbiased since the complete estimating equations (2) are unbiased. Then, under some regularity conditions, the asymptotic distribution of is where the asymptotic variance-covariance matrix is given by

This can be consistently estimated by replacing with and with . In practice, it is difficult to obtain an analytical expression for the derivative of . Therefore, the derivative is numerically computed by using the outputs of the EEE algorithm. Let denote the final estimate of the EEE algorithm. Then the derivative for evaluated at can be numerically approximated by , where is obtained by replacing the in with .

Robust Gaussian mixture

The Gaussian mixture models are the most famous and widely adopted mixture models for model-based clustering, even though the performance can be severely affected by outliers due to the light tails of the Gaussian (normal) distribution. Here, a new approach to the robust fitting of the Gaussian mixture model using the proposed weighted complete estimating equations is presented. Let denote the -dimensional normal density . It holds that

and , where is a -dimensional vector of and

Then, the weighted complete estimating equations for and are given by

for , where . Hence, starting from some initial values , the proposed EEE algorithm repeats the following two steps in the th iteration:

-

-

E-step: The standard E-step is left unchanged as

- -

Note that all the formulas in the above steps are obtained in closed form. This is one of the attractive features of the proposed method, as it does not require any computationally intensive methods such as Monte Carlo integration.

To choose reasonable starting values , we employ existing robust estimation methods of Gaussian mixture models or clustering, such as trimmed robust clustering (TCL; Garcia-Escudero et al., 2008) and improper maximum likelihood (IML; Coretto and Hennig, 2016).

To stabilize the estimation of the variance-covariance matrix , it would be beneficial to impose the following eigen-ratio constraint:

| (6) |

where denotes the th eigenvalue of the covariance matrix in the th component and is a fixed constant. When , a spherical structure is imposed on , and a more flexible structure is allowed under a large value of . To reflect the eigen-ratio constraint in the EEE algorithm, the eigenvalues of can be simply replaced with the truncated version where if , if and if , where is an unknown constant that depends on . The procedure of Fritz et al. (2013) is employed in this study. Note that if is set to a small value, the constraint for is too severe such that the solution of the weighted estimating equation might not exist.

Robust mixture of experts

A mixture of experts model (Jacobs et al., 1991; McLachlan and Peel, 2000) is a useful tool for modelling nonlinear regression relationships. Models that include a simple mixture of normal regression models and their robust versions have been proposed in the literature (e.g. Bai et al., 2012; Song et al., 2014). In addition, the robust versions of a mixture of experts based on the non-normal distributions were considered by Nguyen and McLachlan (2016), and Chamroukhi (2016). The general model is described as

where is a vector of covariates including an intercept term and is the mixing proportion such that . For the identifiability of the parameters, is assumed to be an empty set, since is completely determined by . A typical form of the continuous response variables adopts and . Let be the set of the unknown parameters, , and . Compared to the standard mixture model (1), the mixing proportion is a function of parameterized by .

As before, the latent variable such that for is introduced. Then, the complete weighted estimating equations are given by

where

Starting from some initial values , the proposed EEE algorithm repeats the following two steps in the th iteration:

-

-

E-step: The standard E-step is left unchanged as

-

-

EE-step: Update and by solving the following equations:

(7)

When the mixture components are the normal linear regression models given by with , the bias correction terms and can be analytically obtained as

The first equation in (7) can be solved analytically, and the closed-form updating steps similar to those in Section 3 can be obtained. On the other hand, the second equation is a function of and cannot be solved analytically. Note that the solution corresponds to the maximizer of the weighted log-likelihood function of the multinomial distribution given by

since its first-order partial derivatives with respect to are reduced to the second equation in (7). Thus, the updating step for can be readily carried out.

In setting the initial values of the EEE algorithm, we first randomly split the data into groups and apply some existing robust methods to estimate for , which can be adopted for the initial values of .

For example, when is the normal linear regression as adopted in Section 7.1, we employ an M-estimator available in the rlm function in R package ‘MASS’ (Venables and

Ripley, 2002).

For the initial values of , we suggest simply using .

Robust skew normal mixture

We next consider the use of the -dimensional skew normal distribution (Azzalini and Valle, 1996) for the th component. The mixture model based on skew normal distributions is more flexible than a multivariate Gaussian mixture, especially when the cluster-specific distributions are not symmetric but skewed. Several works have been conducted on the maximum likelihood estimation of a skew normal mixture (Lin et al., 2007; Lin, 2009). Despite the flexibility in terms of skewness, because the skew normal distribution still has light tails as the normal distribution, the skew normal mixture is also sensitive to outliers. Alternative mixture models using heavy-tailed and skewed distributions have also been proposed in the literature (e.g. Lee and McLachlan, 2017; Morris et al., 2019). Here, we consider the robust fitting of the skew normal mixture within the proposed framework.

We first note that the direct application of the skew normal distribution to the proposed EEE algorithm would be computationally intensive since the bias correction term cannot be obtained analytically, and a Monte Carlo approximation would be required in each iteration. Instead, we provide a novel algorithm that exploits the stochastic representation of the multivariate skew normal distribution. The resulting algorithm is a slight extension of the proposed algorithm in Section 2. Früwirth-Schnatter and Pyne (2010) provided the following hierarchical representation for given :

| (8) |

where is the vector of location parameters, is the vector of the skewness parameters, and is the covariance matrix. Here, denotes the truncated normal distribution on the positive real line with mean and variance parameters and , respectively, and its the density function is given by

where is the distribution function of the standard normal distribution. By defining

where . The probability density function of the multivariate skew normal distribution of Azzalini and Valle (1996) is given by

| (9) |

From (8), the conditional distribution of given both and is normal, namely, , so that the bias correction terms under given and can be easily obtained in the same way as in the case of the Gaussian mixture models. Therefore, we consider the following complete estimating equations for the parameters conditional on both and :

where and have the same forms as in the Gaussian mixture case.

The proposed EEE algorithm for the robust fitting of the skew normal mixture is obtained after some modification to the normal case. Since the complete estimating equations contain the additional latent variables , some additional steps are included to impute the moments of in the equations. The moments are those of the conditional posterior distribution of , given , which is , where

We define the following quantities:

| (10) |

Note that we have for and

Since the conditional distribution of given is , we have

and it follows from Lin et al. (2007) that

These expressions are applied to the analytical updating steps in E-step. Starting from some initial values of the parameters, , the proposed EEE algorithm iteratively updates the parameters in the th iteration as follows:

Note that the form of under the skew normal mixture is the same as that in the case of the normal mixture, because it holds that and the conditional distribution of given is the multivariate normal with the variance-covariance matrix . In addition, note that all the steps in the above algorithm are obtained in closed forms by successfully exploiting the stochastic representation of the skew normal distribution in the complete weighted complete estimating equations. In our EEE algorithm, we impose the eigen-ratio constraint considered in Section 3 to avoid an ill-posed problem.

The following strategy is employed to set the initial values of the algorithm. First, an existing robust algorithm for fitting Gaussian mixture models is applied to obtain the cluster assignment and mean vectors of the clusters. We then set as the estimated mean vector and set to be the vector of marginal sample skewness of the observations in the th cluster. For and , we set and .

Simulation study

Gaussian mixture

The performance of the proposed method for the robust fitting of a Gaussian mixture is evaluated along with existing methods through simulation studies. First, the performance in terms of the estimation accuracy of the unknown model parameters is examined. We set the underlying true distribution to be a -dimensional Gaussian mixture model with components, where the parameters in each Gaussian distribution are given by

for some and with

controls the separation of the three clusters. The following two scenarios, and , correspond to overlapping and well-separated clusters, respectively. In this study, the sample size is set to , and the three cases of the dimension, , are considered (the results under are provided in the Supplementary Material). The true mixing probabilities are fixed at , and . Outliers are generated from the uniform distribution on where and is the collection of points where the minimum Mahalanobis distance to the th cluster mean is smaller than , namely,

Let denote the proportion of outliers such that observations are drawn from the true mixture distribution, whereas the remaining observations are generated as outliers. We adopt the following three cases: . Setting means that there are no outliers in the data. Therefore, the efficiency loss of the robust methods against standard non-robust methods can be assessed.

For each of the 1000 simulated datasets, the proposed weighted complete estimating equation method is applied with two fixed values of , , and . Hereafter, they will be denoted as WCE1 and WCE2, respectively. For comparison, the standard (non-robust) maximum likelihood method for fitting the Gaussian mixture (GM) using the EM algorithm is also implemented. For the existing robust contenders, we consider the trimmed robust clustering (TCL; Garcia-Escudero et al., 2008), improper maximum likelihood (IML; Coretto and Hennig, 2016) and contaminated Gaussian mixture (CGM; Punzo and McNicholas, 2016), which are available in R packages ‘tclust’ (Fritz et al., 2012), ‘otrimle’ (Coretto and Hennig, 2019) and ‘ContaminatedMixt’ (Punzo et al., 2018), respectively. The trimming level in TCL is set to a default value of . In CGM, the fully structured variance-covariance is used. The same eigen-ratio constraint is imposed for all the methods other than CGM by setting in condition (6), noting that the true eigen-ratios are for the first and third clusters and for the second cluster. In WCE1 and WCE2, is employed for outlier detection.

The estimation performance of the methods is evaluated based on the squared error given by , and . We also computed the following integrated squared error to check the overall estimation accuracy:

| (11) |

where the integral is approximated by Monte Carlo integration by generating 3000 random numbers uniformly distributed on . For measuring the classification accuracy, we calculated the classification error defined as , where and are the estimated and true cluster assignments, respectively, and is the set of non-outliers. The above quantities were averaged over 1000 simulated detests, which gives the mean squared error (MSE) of the model parameters, the mean integrated squared errors (MISE) of the density function and the mean classification error (MCE) of the cluster assignment.

Tables 1-3 present the MSE, MISE and MCE of the competing models under and , respectively. Firstly, the performance of the standard GM is reasonable only in the cases where the clusters are well-separated (), and there are no outliers (). When the clusters are overlapping (), and the data does not contain any outliers, the estimation accuracy of GM deteriorates as the dimensionality increases. In fact, in the case of , the robust methods, including the proposed method, performed better than GM. In the presence of the outliers, , the estimation accuracy in terms of MSE and MISE of the robust methods appears comparable. For the classification accuracy, the order of the performance of the robust methods appears to be case dependent, and they perform comparably overall. For example, in the cases where the clusters are well-separated with and , IML and CGM tend to result in smaller MCE than the proposed method. On the other hand, when and the clusters are overlapping, the proposed WCE2 resulted in the smallest MCE.

The performance of outlier detection is also evaluated. We let be an indicator of outliers such that denotes that is an outlier. The false discovery rate (FDR) and power (PW) are given by and , where denotes the set of outliers. Table 4 presents the PDR and PW values averaged over the 1000 simulated datasets 4. The table shows that the proposed method performs well in the low-dimensional cases () with achieving a relatively low FDR and high power compared with the contenders. It is also shown that, as the dimensionality increases, the FDR for the proposed method increases while maintaining high power.

Next, we consider the situation where the number of outliers is close to the minimum size of the clusters. Specifically, we set and the true mixing probability as . Using the same data generating process as in the case of , MSE, MISE, and MCE of the six methods are evaluated based on the 1000 simulated datasets. The result are reported in Table 5. In this simulation setting, TCL appears to have produced the best result with the smallest MSE, MISE, and MCE. The table shows that some errors under IML and CGM resulted in very large magnitudes.

Finally, we examine the performance in selecting the number of components. The same simulation scenarios with are considered. For the simulated datasets, the optimal from is selected based on the trimmed BIC criterion (5). Since the trimmed BIC can be applied to a variety of robust methods that provide parameter estimates and detect outliers, the proposed methods, as well as the three existing robust methods (TCL, IML, and CGM) are considered in this study. The same settings are used for the tuning parameters as in the previous simulation. In all the scenarios, is used for WCE1 and WCE2. In addition, the performance is also assessed with to check the sensitivity with respect to in the most challenging situations where . Based on the 300 simulated datasets, the selection probabilities for each are computed. The results are presented in Table 6. The table shows that when the data contains outliers, the standard GM method tends to select a larger number of components than the truth since the outliers are recognized as a new cluster. On the other hand, the proposed methods (WCE1 and WCE2) are highly accurate in selecting the true number of components even in the presence of outliers. Among the existing robust methods, TCL shows comparable performance with the proposed methods, whereas IML and CGM perform rather poorly.

Recalling that rather advantageous settings are used for the contenders, we can conclude that the overall estimation performance of the proposed method is comparable with the existing methods. The results of this study indicate that the proposed method is also a promising approach for the robust estimation of mixture models.

| MSE | MSE | MSE | MSE | MSE | MSE | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| MISE | MCE | MISE | MCE | |||||||

| () | () | () | () | () | () | () | () | |||

| WCE1 | 7.27 | 0.37 | 1.85 | 3.22 | 4.55 | 5.16 | 0.23 | 1.33 | 2.60 | 1.12 |

| WCE2 | 7.49 | 0.40 | 1.88 | 3.36 | 4.60 | 5.43 | 0.25 | 1.34 | 2.73 | 1.17 |

| GM | 7.09 | 0.34 | 1.82 | 3.09 | 3.91 | 4.92 | 0.22 | 1.33 | 2.49 | 0.24 |

| TCL | 9.04 | 0.82 | 2.62 | 9.35 | 8.69 | 6.01 | 0.60 | 1.65 | 6.55 | 5.03 |

| IML | 7.64 | 0.61 | 2.37 | 9.44 | 7.18 | 5.23 | 0.41 | 1.60 | 6.60 | 2.47 |

| CGM | 7.15 | 0.47 | 1.84 | 5.07 | 3.91 | 4.94 | 0.33 | 1.33 | 4.06 | 0.24 |

| WCE1 | 8.90 | 0.91 | 2.15 | 3.77 | 4.54 | 5.66 | 0.42 | 1.34 | 2.98 | 0.90 |

| WCE2 | 8.28 | 0.57 | 2.00 | 3.59 | 4.56 | 5.77 | 0.34 | 1.35 | 2.91 | 1.02 |

| GM | 47.63 | 17.28 | 10.01 | 13.68 | 6.28 | 13.13 | 6.00 | 1.39 | 11.25 | 0.28 |

| TCL | 8.47 | 0.56 | 2.24 | 6.09 | 5.93 | 5.76 | 0.33 | 1.49 | 3.91 | 2.16 |

| IML | 8.73 | 0.64 | 2.22 | 6.62 | 6.04 | 5.74 | 0.45 | 1.62 | 5.45 | 2.14 |

| CGM | 16.17 | 2.77 | 3.71 | 5.13 | 4.41 | 5.69 | 0.48 | 1.40 | 3.60 | 0.26 |

| WCE1 | 17.13 | 6.72 | 4.23 | 6.37 | 4.99 | 6.40 | 1.54 | 1.46 | 4.45 | 0.74 |

| WCE2 | 9.77 | 1.36 | 2.33 | 4.12 | 4.63 | 6.02 | 0.60 | 1.45 | 3.36 | 0.90 |

| GM | 164.50 | 52.31 | 32.37 | 24.63 | 10.97 | 32.90 | 23.56 | 1.81 | 20.84 | 0.32 |

| TCL | 9.73 | 0.69 | 2.15 | 4.30 | 4.13 | 6.24 | 0.47 | 1.51 | 3.22 | 0.35 |

| IML | 9.35 | 0.80 | 2.52 | 7.91 | 6.86 | 6.12 | 0.58 | 1.82 | 7.33 | 3.20 |

| CGM | 58.41 | 15.18 | 14.10 | 8.77 | 6.23 | 6.41 | 1.08 | 1.67 | 4.99 | 0.26 |

| MSE | MSE | MSE | MSE | MSE | MSE | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| MISE | MCE | MISE | MCE | |||||||

| () | () | () | () | () | () | () | () | |||

| WCE1 | 1.66 | 2.93 | 2.41 | 1.80 | 6.00 | 1.19 | 2.92 | 1.43 | 1.74 | 2.53 |

| WCE2 | 1.74 | 2.82 | 2.46 | 1.79 | 6.01 | 1.33 | 2.77 | 1.47 | 1.72 | 2.49 |

| GM | 2.23 | 3.58 | 4.19 | 1.97 | 4.90 | 1.07 | 3.70 | 1.34 | 1.93 | 0.32 |

| TCL | 1.64 | 1.31 | 2.27 | 2.21 | 8.98 | 1.21 | 1.01 | 1.58 | 1.69 | 5.14 |

| IML | 2.46 | 1.38 | 3.49 | 1.23 | 5.31 | 1.07 | 0.77 | 1.39 | 0.94 | 0.81 |

| CGM | 1.42 | 1.00 | 1.87 | 1.21 | 4.19 | 1.06 | 0.79 | 1.34 | 1.07 | 0.26 |

| WCE1 | 1.77 | 3.10 | 2.88 | 1.73 | 5.91 | 1.23 | 2.91 | 1.50 | 1.64 | 2.24 |

| WCE2 | 1.78 | 2.91 | 2.54 | 1.76 | 5.91 | 1.37 | 2.82 | 1.55 | 1.69 | 2.35 |

| GM | 15.22 | 26.80 | 40.38 | 2.82 | 12.64 | 2.18 | 10.19 | 1.52 | 2.11 | 0.44 |

| TCL | 1.65 | 1.20 | 2.35 | 1.57 | 6.30 | 1.15 | 0.85 | 1.47 | 1.14 | 2.26 |

| IML | 3.70 | 3.02 | 5.98 | 1.35 | 6.00 | 1.12 | 0.87 | 1.42 | 0.99 | 0.96 |

| CGM | 5.61 | 13.12 | 9.81 | 1.47 | 6.20 | 1.18 | 1.68 | 1.46 | 0.98 | 0.28 |

| WCE1 | 2.18 | 4.02 | 4.59 | 1.70 | 6.05 | 1.27 | 2.96 | 1.54 | 1.56 | 1.93 |

| WCE2 | 1.88 | 3.09 | 2.98 | 1.74 | 5.89 | 1.39 | 2.84 | 1.60 | 1.63 | 2.13 |

| GM | 30.16 | 59.38 | 66.20 | 3.69 | 17.15 | 5.18 | 32.89 | 2.24 | 3.26 | 0.57 |

| TCL | 2.12 | 2.91 | 2.88 | 1.31 | 4.87 | 1.30 | 1.63 | 1.46 | 0.97 | 0.29 |

| IML | 3.01 | 2.23 | 3.51 | 1.30 | 5.69 | 1.17 | 1.01 | 1.52 | 1.05 | 1.00 |

| CGM | 15.61 | 62.26 | 33.93 | 2.51 | 10.26 | 1.45 | 3.96 | 1.83 | 1.23 | 0.28 |

| MSE | MSE | MSE | MSE | MSE | MSE | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| MISE | MCE | MISE | MCE | |||||||

| () | () | () | () | () | () | () | () | |||

| WCE1 | 0.86 | 6.51 | 25.39 | 8.52 | 10.40 | 0.24 | 4.46 | 1.63 | 7.06 | 2.99 |

| WCE2 | 0.60 | 5.73 | 15.32 | 9.80 | 8.90 | 0.29 | 4.52 | 1.75 | 7.63 | 3.08 |

| GM | 1.29 | 8.26 | 43.01 | 8.60 | 11.56 | 0.20 | 5.29 | 1.39 | 7.42 | 0.37 |

| TCL | 1.99 | 11.86 | 24.72 | 19.24 | 19.99 | 0.22 | 2.66 | 1.60 | 11.18 | 5.23 |

| IML | 2.04 | 10.79 | 20.15 | 9.45 | 14.06 | 0.20 | 2.36 | 1.39 | 5.95 | 0.55 |

| CGM | 0.44 | 3.88 | 7.51 | 7.50 | 5.96 | 0.20 | 2.34 | 1.38 | 5.94 | 0.30 |

| WCE1 | 0.83 | 6.84 | 26.74 | 8.80 | 10.52 | 0.25 | 4.56 | 1.68 | 7.94 | 2.89 |

| WCE2 | 0.60 | 5.89 | 14.83 | 9.62 | 8.95 | 0.30 | 4.65 | 1.83 | 8.58 | 2.98 |

| GM | 3.10 | 36.02 | 95.08 | 10.81 | 21.60 | 0.39 | 15.96 | 1.94 | 9.32 | 0.73 |

| TCL | 2.02 | 12.64 | 26.26 | 16.46 | 17.76 | 0.22 | 2.57 | 1.53 | 8.34 | 2.34 |

| IML | 2.01 | 14.29 | 19.64 | 10.49 | 13.91 | 0.22 | 2.61 | 1.50 | 6.63 | 0.61 |

| CGM | 1.13 | 23.54 | 25.64 | 10.54 | 10.01 | 0.23 | 5.87 | 1.54 | 6.86 | 0.34 |

| WCE1 | 1.49 | 11.88 | 65.37 | 11.79 | 15.68 | 0.26 | 4.64 | 1.72 | 7.85 | 2.81 |

| WCE2 | 0.53 | 5.91 | 14.05 | 17.53 | 8.43 | 0.31 | 4.75 | 1.87 | 7.70 | 2.88 |

| GM | 3.54 | 55.76 | 92.74 | 7.92 | 19.89 | 0.87 | 37.46 | 4.88 | 11.25 | 1.48 |

| TCL | 3.12 | 26.48 | 42.54 | 13.23 | 20.68 | 0.25 | 5.05 | 1.43 | 6.90 | 0.45 |

| IML | 1.25 | 10.40 | 21.28 | 11.08 | 11.36 | 0.23 | 2.84 | 1.66 | 6.89 | 0.70 |

| CGM | 1.59 | 34.55 | 43.64 | 9.75 | 12.72 | 0.34 | 15.13 | 2.17 | 7.80 | 0.44 |

| FDR | PW | FDR | PW | FDR | PW | |

|---|---|---|---|---|---|---|

| () | ||||||

| WCE1 | 13.8 | 89.8 | 31.0 | 93.6 | 40.0 | 93.9 |

| WCE2 | 15.6 | 91.5 | 32.4 | 93.8 | 41.7 | 93.8 |

| TCL | 40.0 | 93.8 | 39.9 | 93.8 | 39.9 | 93.8 |

| IML | 16.4 | 85.4 | 10.0 | 87.5 | 4.7 | 84.1 |

| CGM | 10.5 | 78.5 | 7.2 | 68.0 | 6.6 | 72.1 |

| () | ||||||

| WCE1 | 6.0 | 81.9 | 16.2 | 96.1 | 21.2 | 96.8 |

| WCE2 | 7.3 | 91.0 | 18.2 | 96.7 | 25.0 | 96.8 |

| TCL | 1.2 | 79.7 | 0.3 | 80.4 | 0.0 | 80.6 |

| IML | 16.4 | 91.5 | 8.0 | 94.9 | 2.4 | 94.3 |

| CGM | 9.9 | 72.3 | 9.4 | 49.0 | 7.3 | 78.5 |

| () | ||||||

| WCE1 | 18.3 | 89.8 | 38.4 | 93.9 | 44.7 | 93.9 |

| WCE2 | 20.8 | 91.2 | 39.6 | 93.9 | 45.4 | 94.0 |

| TCL | 40.0 | 93.7 | 39.9 | 93.9 | 39.9 | 93.9 |

| IML | 18.3 | 81.7 | 9.6 | 89.5 | 5.8 | 91.9 |

| CGM | 13.8 | 85.0 | 10.5 | 90.8 | 7.2 | 89.2 |

| () | ||||||

| WCE1 | 8.4 | 86.7 | 20.7 | 96.8 | 27.8 | 96.9 |

| WCE2 | 10.3 | 91.7 | 22.6 | 96.8 | 28.2 | 96.8 |

| TCL | 2.1 | 79.0 | 0.3 | 80.4 | 0.1 | 80.6 |

| IML | 17.3 | 89.7 | 6.3 | 94.7 | 4.2 | 96.1 |

| CGM | 16.0 | 92.1 | 12.0 | 93.4 | 8.4 | 91.0 |

| MSE | MSE | MSE | MSE | MSE | MSE | |||||

|---|---|---|---|---|---|---|---|---|---|---|

| MISE | MCE | MISE | MCE | |||||||

| () | () | () | () | () | () | |||||

| WCE1 | 3.29 | 60.50 | 22.79 | 5.58 | 5.22 | 0.68 | 45.93 | 2.69 | 4.62 | 0.86 |

| WCE2 | 1.70 | 41.50 | 12.05 | 4.50 | 4.79 | 0.26 | 23.61 | 1.88 | 3.63 | 0.94 |

| GM | 9.90 | 82.05 | 66.44 | 22.17 | 13.33 | 3.63 | 83.48 | 6.62 | 13.41 | 0.80 |

| TCL | 0.29 | 3.58 | 1.78 | 4.03 | 3.57 | 0.16 | 2.10 | 1.24 | 3.07 | 0.35 |

| IML | 15.78 | 8.47 | 110.44 | 40.32 | 27.60 | 57.51 | 8.45 | 194.53 | 59.72 | 40.14 |

| CGM | 9.09 | 236.34 | 54.47 | 19.76 | 11.30 | 5.04 | 311.11 | 10.26 | 5.62 | 1.25 |

| 2 | 3 | 4 | 5 | 6 | 2 | 3 | 4 | 5 | 6 | ||

| WCE1 | 0.0 | 92.3 | 7.7 | 0.0 | 0.0 | 0.0 | 97.0 | 3.0 | 0.0 | 0.0 | |

| WCE2 | 0.0 | 92.3 | 7.7 | 0.0 | 0.0 | 0.0 | 96.0 | 4.0 | 0.0 | 0.0 | |

| GM | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | 0.0 | 97.7 | 2.3 | 0.0 | 0.0 | |

| TCL | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | |

| IML | 23.1 | 53.8 | 23.1 | 0.0 | 0.0 | 68.0 | 25.3 | 3.0 | 3.0 | 0.7 | |

| CGM | 0.0 | 84.6 | 15.4 | 0.0 | 0.0 | 0.0 | 71.0 | 20.7 | 5.0 | 3.3 | |

| WCE1 | 0.0 | 98.7 | 1.3 | 0.0 | 0.0 | 0.0 | 98.3 | 1.7 | 0.0 | 0.0 | |

| WCE2 | 0.0 | 98.7 | 1.3 | 0.0 | 0.0 | 0.0 | 94.9 | 5.1 | 0.0 | 0.0 | |

| GM | 0.3 | 92.7 | 7.0 | 0.0 | 0.0 | 0.0 | 97.5 | 2.5 | 0.0 | 0.0 | |

| TCL | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | |

| IML | 14.3 | 63.3 | 15.7 | 4.0 | 2.7 | 61.9 | 23.7 | 7.6 | 5.1 | 1.7 | |

| CGM | 8.3 | 78.0 | 7.3 | 4.3 | 2.0 | 0.0 | 68.6 | 19.5 | 8.5 | 3.4 | |

| WCE1 | 2.0 | 93.3 | 4.3 | 0.3 | 0.0 | 0.0 | 97.0 | 3.0 | 0.0 | 0.0 | |

| WCE2 | 0.0 | 93.7 | 6.3 | 0.0 | 0.0 | 0.0 | 96.0 | 4.0 | 0.0 | 0.0 | |

| WCE1 () | 3.0 | 92.7 | 3.7 | 0.3 | 0.3 | 0.0 | 99.7 | 0.3 | 0.0 | 0.0 | |

| WCE2 () | 0.0 | 96.7 | 3.3 | 0.0 | 0.0 | 0.0 | 99.0 | 1.0 | 0.0 | 0.0 | |

| WCE1 () | 4.7 | 86.3 | 7.3 | 1.3 | 0.3 | 0.0 | 98.0 | 2.0 | 0.0 | 0.0 | |

| WCE2 () | 1.0 | 93.0 | 5.7 | 0.3 | 0.0 | 0.0 | 95.3 | 4.7 | 0.0 | 0.0 | |

| GM | 14.0 | 66.3 | 18.7 | 1.0 | 0.0 | 0.0 | 81.7 | 17.3 | 1.0 | 0.0 | |

| TCL | 0.0 | 96.0 | 4.0 | 0.0 | 0.0 | 0.0 | 96.0 | 4.0 | 0.0 | 0.0 | |

| IML | 8.0 | 59.7 | 21.0 | 8.7 | 2.7 | 56.7 | 31.0 | 6.3 | 4.0 | 2.0 | |

| CGM | 43.3 | 33.0 | 14.7 | 7.0 | 2.0 | 2.7 | 74.7 | 16.0 | 4.7 | 2.0 | |

Skew normal mixture

The performance of the proposed methods for the skew normal mixture is examined using simulated data along with some existing methods. We consider a -dimensional mixture of skew-normal distributions with components where the mixture components have the location parameter vectors , skewness parameter vectors and scale matrices, and given by with and , in the parametrization given in (8). The two cases for , , and are considered, corresponding to the cases of the overlapping and well-separated clusters, respectively. The mixing proportions are set to and . The sample size is set to , and the results for are provided in the Supplementary Material. The proportion of outliers is set to , and the outliers are generated in the same way as in Section 6.1.

First, we show some results based on a single simulated dataset with . The proposed weighted complete equation (WCE) method is applied to fit the skew normal mixture with . The standard skew normal mixture (SNM) is estimated using the maximum likelihood method with the EM algorithm of Lin et al. (2007). Figure 1 presents the three contours of the estimated and true densities. The figure shows that the proposed WCE method provides reasonable estimates of the true density by adequately suppressing the influence of outliers through density weights. On the other hand, SMN produces inaccurate estimates. In particular, it overestimates the variability of the true data-generating process by treating the outliers as non-outliers. It is also observed that the inaccuracy of the SNM becomes more profound as the proportion of outliers increases.

Next, the performance of the proposed method and the existing methods are quantitatively compared. The two settings for the proposed WCE method with and are considered, denoted as WCE1 and WCE2, respectively. As contenders, we consider SNM and the skew- mixture (Lee and McLachlan, 2016) of the R package ‘EMMIXcskew’ (Lee and McLachlan, 2017) denoted by STM. Note that STM is also robust against outliers because of the heavy tails of the skew- distribution, but the interpretations of the skewness parameters and scale matrices are different from those for WCE and SNM. Thus, the comparison with STM is made only with respect to the mean (location) parameters, mixing proportions, and classification accuracy. We considered two cases of , and , but the computing time for STM under is too long to include STM as a contender in our study, so the results of STM are reported only for . To evaluate this estimation performance, we employ the squared errors: , , and . Based on 1000 replications, the mean squared error (MSE) for the model parameters is obtained. We also computed the mean integrated squared error (MISE) of the density estimation (11) and the mean classification error in the same way as in Section 6.1. The table shows that the standard SNM model is severely affected by the outliers resulting in a large MSE, as in the case of the normal mixture. In the present setting, MCE for SNM in the case of overlapped clusters is particularly large. Comparing the proposed method and STM for , it can be seen that the proposed method resulted in a smaller MSE for and than STM, while MCE appears somewhat comparable. The table shows that the proposed method also seems to work reasonably well in the skew normal mixture case and suggests that our method is a useful tool applicable to a wide range of mixture models.

| MSE | MISE | MCE | ||||

|---|---|---|---|---|---|---|

| () | (%) | |||||

| () | ||||||

| WCE1 | 1.22 | 8.59 | 2.15 | 0.48 | 0.66 | 2.94 |

| WCE2 | 1.43 | 10.97 | 2.76 | 0.43 | 0.61 | 2.83 |

| SNM | 25.48 | 29.00 | 38.70 | 21.61 | 2.42 | 24.47 |

| STM | 5.62 | - | - | 1.93 | - | 4.12 |

| () | ||||||

| WCE1 | 1.29 | 10.64 | 2.33 | 0.38 | 0.72 | 3.66 |

| WCE2 | 1.70 | 12.75 | 3.30 | 0.32 | 0.57 | 3.62 |

| SNM | 46.21 | 61.28 | 75.54 | 39.67 | 3.22 | 47.80 |

| STM | 7.87 | - | - | 3.88 | - | 7.02 |

| () | ||||||

| WCE1 | 2.11 | 16.40 | 4.54 | 1.60 | 1.06 | 6.36 |

| WCE2 | 2.17 | 15.08 | 4.10 | 0.48 | 0.63 | 4.51 |

| SNM | 45.16 | 80.68 | 76.50 | 36.66 | 3.62 | 49.63 |

| STM | 11.20 | - | - | 7.82 | - | 14.83 |

| () | ||||||

| WCE1 | 0.69 | 2.14 | 1.21 | 0.10 | 0.31 | 0.66 |

| WCE2 | 0.71 | 2.47 | 1.33 | 0.10 | 0.32 | 0.65 |

| SNM | 1.19 | 8.98 | 2.28 | 0.12 | 2.11 | 0.18 |

| STM | 5.95 | - | - | 0.87 | - | 0.91 |

| () | ||||||

| WCE1 | 0.69 | 2.48 | 1.19 | 0.09 | 0.40 | 1.40 |

| WCE2 | 0.86 | 3.22 | 1.59 | 0.09 | 0.38 | 1.32 |

| SNM | 1.25 | 25.51 | 2.85 | 0.22 | 3.28 | 0.33 |

| STM | 7.85 | - | - | 0.89 | - | 1.07 |

| () | ||||||

| WCE1 | 0.86 | 3.24 | 1.47 | 0.11 | 0.58 | 2.28 |

| WCE2 | 1.12 | 4.09 | 2.01 | 0.10 | 0.48 | 2.03 |

| SNM | 1.28 | 44.31 | 3.21 | 0.46 | 3.94 | 0.47 |

| STM | 8.94 | - | - | 1.34 | - | 1.47 |

| MSE | MISE | MCE | ||||

|---|---|---|---|---|---|---|

| () | (%) | |||||

| () | ||||||

| WCE1 | 1.74 | 0.39 | 4.16 | 0.31 | 0.29 | 2.55 |

| WCE2 | 1.83 | 0.40 | 4.28 | 0.32 | 0.32 | 2.65 |

| SNM | 2.22 | 6.66 | 5.40 | 1.48 | 1.23 | 4.12 |

| () | ||||||

| WCE1 | 0.51 | 0.37 | 1.08 | 0.16 | 0.26 | 2.11 |

| WCE2 | 0.56 | 0.38 | 1.11 | 0.16 | 0.28 | 2.10 |

| SNM | 1.08 | 20.72 | 2.57 | 0.81 | 1.85 | 3.02 |

| () | ||||||

| WCE1 | 0.28 | 0.35 | 0.46 | 0.13 | 0.29 | 2.24 |

| WCE2 | 0.31 | 0.37 | 0.46 | 0.13 | 0.29 | 2.23 |

| SNM | 1.72 | 36.35 | 3.49 | 1.20 | 2.15 | 3.07 |

| () | ||||||

| WCE1 | 0.30 | 0.38 | 0.39 | 0.09 | 0.27 | 0.17 |

| WCE2 | 0.34 | 0.38 | 0.44 | 0.10 | 0.28 | 0.17 |

| SNM | 0.44 | 6.51 | 0.80 | 0.11 | 1.15 | 0.19 |

| () | ||||||

| WCE1 | 0.26 | 0.36 | 0.36 | 0.11 | 0.26 | 0.36 |

| WCE2 | 0.29 | 0.38 | 0.38 | 0.11 | 0.28 | 0.35 |

| SNM | 1.52 | 23.78 | 3.24 | 0.25 | 1.93 | 0.38 |

| () | ||||||

| WCE1 | 0.23 | 0.35 | 0.34 | 0.10 | 0.28 | 0.58 |

| WCE2 | 0.30 | 0.37 | 0.41 | 0.10 | 0.29 | 0.55 |

| SNM | 3.06 | 40.12 | 6.80 | 0.52 | 2.21 | 0.53 |

Real data example

Tone perception data

The proposed robust mixture of experts modeling is applied to a famous tone perception dataset (Cohen, 1984). In the tone perception experiment, a pure fundamental tone added with electronically generated overtones determined by a stretching ratio was played to a trained musician. The data consists of pairs of “stretch ratio” variable (denoted by ) and “tuned” variable (denoted by ) and the conditional distribution of given is of interest. Following Chamroukhi (2016), we consider the two-component mixture of experts model given by

where . The unknown parameters are estimated based on two approaches: the standard maximum likelihood method via the EM algorithm denoted by MOE and the proposed WCE method with denoted by RMOE. Based on the outlier detection rule in Section 2.3, we detected 13 outliers for . The estimated regression lines, detected outliers, and classification results are shown in the upper panels of Figure 2. The figure shows that the estimated regression lines based on the two methods are slightly different, but both methods successfully capture the grouped structure of the dataset. It is also found that the estimate of is almost equal to , thus the mixing probability is homogeneous.

Next, we investigated the sensitivity of the model against outliers by artificially adding the 10 identical pairs to the original dataset as outliers, as done in Chamroukhi (2016). The estimated regression lines obtained from the two methods are shown in the lower panels of Figure 2. The figure clearly shows that MOE is very sensitive to outliers. In contrast, the estimates of the proposed RMOE for the original and contaminated datasets are quite similar, indicating the desirable robustness properties of the proposed method. Different values of are also tested, but the results are not sensitive to . We also note that the result of the proposed method in Figure 2 is almost identical to that reported by Chamroukhi (2016), where the -distribution was used as a robust alternative. Finally, using the outlier detection rule, we identified 23 outliers, including 10 artificial outliers for any .

Swiss banknote data

The proposed WCE method is applied to the famous Swiss banknote dataset (Flury and Riedwyl, 1988). The data consists of six measurements () made on the 100 genuine and 100 counterfeit old Swiss 1000 franc bills. We apply the skew normal mixture model to the data using the proposed WCE and the standard EM algorithm.

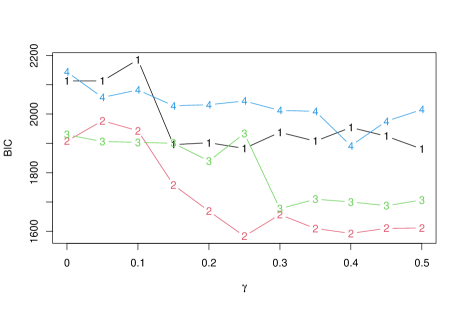

We first select the number of clusters . To this end, we computed the trimmed BIC criterion (5) for and in which we set (threshold probability for outliers) and (eigen-ratio constraint). Note that we also carried out the same procedure with and , but the results were almost identical. The results shown in Figure 3 indicate that is a reasonable choice for the number of clusters, which is consistent with the true number of labels. We also note that under the non-robust method (), the BIC values of and are almost identical.

Using in the proposed WCE method, we fitted the skew normal mixture models with . The robust estimates and standards errors of the skewness parameter are listed in Table 9. The table shows significant skewness in the two measurements (bottom and top), so the skew-normal mixture models would be more desirable than Gaussian mixture models for this dataset. By carrying out outlier detection with , we identified 33 outliers, including 14 genuine and 19 counterfeit bills. The outliers and resulting cluster assignments are shown in Figure 4. The figure shows that the skew normal mixture can flexibly capture the cluster-wise distributions of the six measurements while successfully suppressing the influence of the outliers.

Finally, we assessed the classification accuracy of the proposed WCE method and standard (non-robust) EM algorithm. Based on the fitted results, the cluster assignment for all observations including outliers is obtained as the maximizer of the posterior probability. The estimated assignments with the true labels (”genuine” or ”counterfeit”) are then compared. The proposed method was able to classify all bills correctly, that is, there was no misclassification, whereas the standard EM algorithm misclassified 18 bills. This result is consistent with that of the simulation study and thus strongly suggests using the robust mixture model to correctly classify the observations to the clusters.

| Cluster 1 | Cluster 2 | |||

|---|---|---|---|---|

| Estimate | SE | Estimate | SE | |

| Length | -0.02 | 0.17 | -0.11 | 0.55 |

| Left | -0.09 | 0.05 | 0.09 | 0.26 |

| Right | -0.05 | 0.18 | 0.01 | 0.15 |

| Bottom | 0.82 | 0.08 | 1.33 | 0.24 |

| Top | -0.95 | 0.07 | -1.00 | 0.23 |

| Diagonal | 0.14 | 0.11 | -0.05 | 0.16 |

Concluding remarks

In this paper, we have introduced a new strategy for robust mixture modeling based on the weighted complete estimating equations. We then developed an EEE algorithm that iteratively solves the proposed estimating equations. The advantage of the proposed method is its computational feasibility because the proposed EEE algorithm admits relatively simple iterations. Applications of the proposed method to the three mixture models (multivariate Gaussian mixture, mixture of experts, and multivariate skew normal mixture) were considered, and feasible EEE algorithms to estimate these models were derived. In particular, for the skew normal mixture, we have slightly extended the proposed method and derived a novel EEE algorithm in which all parameters are updated in closed forms. The desirable performance of the proposed method is confirmed through a simulation study and real data examples.

In this work, we only considered the unstructured variance-covariance (scale) matrix of the Gaussian mixture and skew normal mixture in Sections 3 and 5. However, it might be useful to consider a structured scale matrix characterized by some parameters as done in R package ‘Mclust’ (Scrucca et al., 2016) or selecting a suitable structure of the scale matrix via an information criterion (e.g. Cerioli et al., 2018). Furthermore, while is selected by monitoring the trimmed BIC defined in (5) in this paper, it might be possible to employ a selection criterion for robust divergence, suggested by, for example, (Basak et al., 2021) and Sugasawa and Yonekura (2021). Incorporating such approaches into the proposed method will be a valuable future study.

As briefly mentioned in Section 1, the proposed weighted complete estimating equations are closely related to the density power divergence (Basu et al., 1998), since the derivative of the density power divergence of the component-wise distribution given the latent membership variables leads to the weighted complete estimating equations for the component-wise parameters. Hence, other types of divergence can also be adopted to devise alternative estimating equations. However, there is no guarantee that a feasible EEE algorithm, as developed in the present study, can be obtained for other divergences. An investigation of the usage of another divergence and its comparisons exceeds the scope of this study and is thus left for future research.

Acknowledgements

We would like to thank the three reviewers and the coordinating editor for their valuable comments and suggestions, which have significantly improved the paper. This work was supported by the Japan Society for the Promotion of Science (KAKENHI) Grant Numbers 18K12754, 18K12757, 20H00080, 21K01421, and 21H00699.

References

- Azzalini and Valle (1996) Azzalini, A. and A. D. Valle (1996). The multivariate skew normal distribution. Biometrika 83, 715–725.

- Bagnato et al. (2017) Bagnato, L., A. Punzo, and M. G. Zoia (2017). The multivariate leptokurtic-normal distribution and its application in model-based clustering. Canadian Journal of Statistics 45(1), 95–119.

- Bai et al. (2012) Bai, X., W. Yao, and J. E. Boyer (2012). Robust fitting of mixture regression models. Computational Statistics & Data Analysis 56, 2347–2359.

- Basak et al. (2021) Basak, S., A. Basu, and M. Jones (2021). On the ‘optimal’density power divergence tuning parameter. Journal of Applied Statistics 48(3), 536–556.

- Basso et al. (2010) Basso, R. M., V. H. Lachos, C. R. B. Cabral, and P. Ghosh (2010). Robust mixture modeling based on scale mixtures of skew-normal distributions. Computational Statistics & Data Analysis 54(12), 2926–2941.

- Basu et al. (1998) Basu, A., G. I. R, N. L. Hjort, and M. C. Jones (1998). Robust and efficient estimation by minimizing a density power divergence. Biometrika 85, 549–559.

- Campbell (1984) Campbell, N. (1984). Mixture models and atypical values. Mathematical Geology 16, 465–467.

- Cerioli and Farcomeni (2011) Cerioli, A. and A. Farcomeni (2011). Error rates for multivariate outlier detection. Computational Statistics & Data Analysis 55, 544–553.

- Cerioli et al. (2018) Cerioli, A., L. A. Garcia-Escudero, A. Mayo-Iscar, and M. Riani (2018). Finding the number of normal groups in model-based clustering via constrained likelihoods. Journal of Computational and Graphical Statistics 27, 404–416.

- Chamroukhi (2016) Chamroukhi, F. (2016). Robust mixture of experts modeling using the t distribution. Neural Networks 79, 20–36.

- Cohen (1984) Cohen, E. A. (1984). Some effects of inharmonic partials on interval perception. Music Perception 1, 323–349.

- Coretto and Hennig (2016) Coretto, P. and C. Hennig (2016). Robust improper maximum likelihood: Tuning, computation, and a comparison with other methods for robust gaussian clustering. Journal of the American Statistical Association 516, 1648–1659.

- Coretto and Hennig (2019) Coretto, P. and C. Hennig (2019). otrimle: Robust model-based clustering R package version 1.3 (https://CRAN.R-project.org/package=otrimle).

- Elashoff and Ryan (2004) Elashoff, M. and L. Ryan (2004). An em algorithm for estimating equations. Journal of Computational and Graphical Statistics 13, 48–65.

- Farcomeni and Greco (2015) Farcomeni, A. and L. Greco (2015). S-estimation of hidden markov models. Computational Statics 30, 57–80.

- Farcomeni and Punzo (2020) Farcomeni, A. and A. Punzo (2020). Robust model-based clustering with mild and gross outliers. Test 29(4), 989–1007.

- Flury and Riedwyl (1988) Flury, B. and H. Riedwyl (1988). Multivariate Statistics, a Practical Approach. Cambridge University Press.

- Forbes and Wraith (2014) Forbes, F. and D. Wraith (2014). A new family of multivariate heavy-tailed distributions with variable marginal amounts of tailweight: application to robust clustering. Statistics and computing 24(6), 971–984.

- Fritz et al. (2012) Fritz, H., L. Garcia-Escudero, and A. Mayo-Iscar (2012). tclust : An r package for a trimming approach to cluster analysis. Journal of Statistical Software 47, 1–26.

- Fritz et al. (2013) Fritz, H., L. Garcia-Escudero, and A. Mayo-Iscar (2013). A fast algorithm for robust constrained clustering. Computational Statistics and Data Analysis 61, 124–136.

- Früwirth-Schnatter and Pyne (2010) Früwirth-Schnatter, S. and S. Pyne (2010). Bayesian inference for finite mixtures of univariate and multivariate skew-normal and skew-t distributions. Biostatistics 11, 317–3360.

- Fujisawa and Eguchi (2006) Fujisawa, H. and S. Eguchi (2006). Robust estimation in the normal mixture model. Journal of Statistical Planning and Inference 136, 3989–4011.

- Galimberti and Soffritti (2014) Galimberti, G. and G. Soffritti (2014). A multivariate linear regression analysis using finite mixtures of t distributions. Computational Statistics & Data Analysis 71, 138–150.

- Garcia-Escudero et al. (2008) Garcia-Escudero, L., A. Gordaliza, C. Matran, and A. Mayo-Iscar (2008). A general trimming approach to robust cluster analysis. The Annals of Statistics 36, 1324–1345.

- Greco and Agostinelli (2020) Greco, L. and C. Agostinelli (2020). Weighted likelihood mixture modeling and model-based clustering. Statistics and Computing 30, 255–277.

- Ingrassia et al. (2014) Ingrassia, S., S. C. Minotti, and A. Punzo (2014). Model-based clustering via linear cluster-weighted models. Computational Statistics & Data Analysis 71, 159–182.

- Jacobs et al. (1991) Jacobs, R., M. Jordan, S. Nowlan, and G. Hinton (1991). Adaptive mixtures of local experts. Neural Computation 3, 79–87.

- Lee and McLachlan (2016) Lee, S. and G. McLachlan (2016). Finite mixtures of canonical fundamental skew t-distributions: the unification of the restricted and unrestricted skew t-mixture models. Statistics and Computing 26, 573–589.

- Lee and McLachlan (2017) Lee, S. and G. McLachlan (2017). Emmixcskew: An R package for the fitting of a mixture of canonical fundamental skew t-distributions. Journal of Statistical Software 83, 1–32.

- Lee and McLachlan (2014) Lee, S. and G. J. McLachlan (2014). Finite mixtures of multivariate skew t-distributions: some recent and new results. Statistics and Computing 24(2), 181–202.

- Lin (2009) Lin, T. (2009). Maximum likelihood estimation for multivariate skew normal mixture models. Journal of Multivariate Analysis 100, 257–265.

- Lin et al. (2007) Lin, T., J. Lee, and Y. Yen (2007). Finite mixture modelling using the skew normal distribution. Statistica Sinica 17, 909–927.

- Lin (2010) Lin, T.-I. (2010). Robust mixture modeling using multivariate skew t distributions. Statistics and Computing 20(3), 343–356.

- Mazza and Punzo (2020) Mazza, A. and A. Punzo (2020). Mixtures of multivariate contaminated normal regression models. Statistical Papers 61(2), 787–822.

- McLachlan and Peel (2000) McLachlan, G. and D. Peel (2000). Finite mixture models. Wiley, New York.

- Morris et al. (2019) Morris, K., A. Punzo, P. D. McNicholas, and R. P. Browne (2019). Asymmetric clusters and outliers: Mixtures of multivariate contaminated shifted asymmetric laplace distributions. Computational Statistics & Data Analysis 132, 145–166.

- Nguyen and McLachlan (2016) Nguyen, H. D. and G. J. McLachlan (2016). Laplace mixture of linear experts. Computational Statistics & Data Analysis 93, 177–191.

- Peel and McLachlan (2004) Peel, D. and G. J. McLachlan (2004). Robust mixture modelling using the distribution. Statistics and Computing 10, 339–348.

- Punzo et al. (2018) Punzo, A., A. Mazza, and P. D. McNicholas (2018). ContaminatedMixt: An R package for fitting parsimonious mixtures of multivariate contaminated normal distributions. Journal of Statistical Software 85, 1–25.

- Punzo et al. (2017) Punzo, A., P. McNicholas, et al. (2017). Robust clustering in regression analysis via the contaminated gaussian cluster-weighted model. Journal of Classification 34(2), 249–293.

- Punzo and McNicholas (2016) Punzo, A. and P. D. McNicholas (2016). Parsimonious mixtures of multivariate contaminated normal distributions. Biometrical Journal 58, 1506–1537.

- Punzo and Tortora (2021) Punzo, A. and C. Tortora (2021). Multiple scaled contaminated normal distribution and its application in clustering. Statistical Modelling 21(4), 332–358.

- Scrucca et al. (2016) Scrucca, L., M. Fop, T. B. Murphy, and A. E. Raftery (2016). mclust 5: clustering, classification and density estimation using Gaussian finite mixture models. The R Journal 8, 289–317.

- Song et al. (2014) Song, W., W. Yao, and Y. Xing (2014). Robust mixture regression model fitting by laplace distribution. Computational Statistics & Data Analysis 71, 128–137.

- Sugasawa and Yonekura (2021) Sugasawa, S. and S. Yonekura (2021). On selection criteria for the tuning parameter in robust divergence. Entropy 23(9), 1147.

- Sun et al. (2010) Sun, J., A. Kabán, and J. M. Garibaldi (2010). Robust mixture clustering using pearson type vii distribution. Pattern Recognition Letters 31(16), 2447–2454.

- Tang and Qu (2016) Tang, X. and A. Qu (2016). Mixture modeling for longitudinal data. Journal of Computational and Graphical Statistics 25, 1117–1137.

- Venables and Ripley (2002) Venables, W. N. and B. D. Ripley (2002). Modern Applied Statistics with S (Fourth ed.). New York: Springer.

- Wang et al. (2009) Wang, K., S.-K. Ng, and G. J. McLachlan (2009). Multivariate skew t mixture models: applications to fluorescence-activated cell sorting data. In 2009 Digital Image Computing: Techniques and Applications, pp. 526–531. IEEE.

- Yao et al. (2014) Yao, W., Y. Wei, and C. Yu (2014). Robust mixture regression using the t-distribution. Computational Statistics & Data Analysis 71, 116–127.

- Zarei et al. (2019) Zarei, S., A. Mohammadpour, S. Ingrassia, and A. Punzo (2019). On the use of the sub-gaussian -stable distribution in the cluster-weighted model. Iranian Journal of Science and Technology, Transactions A: Science 43(3), 1059–1069.

- Zhang and Liang (2010) Zhang, J. and F. Liang (2010). Robust clustering using exponential power mixtures. Biometrics 66(4), 1078–1086.