Laboratory for Nuclear Science, MIT, Cambridge, MA, USA

Robust and Provably Monotonic Networks

Abstract

The Lipschitz constant of the map between the input and output space represented by a neural network is a natural metric for assessing the robustness of the model. We present a new method to constrain the Lipschitz constant of dense deep learning models that can also be generalized to other architectures. The method relies on a simple weight normalization scheme during training that ensures the Lipschitz constant of every layer is below an upper limit specified by the analyst. A simple monotonic residual connection can then be used to make the model monotonic in any subset of its inputs, which is useful in scenarios where domain knowledge dictates such dependence. Examples can be found in algorithmic fairness requirements or, as presented here, in the classification of the decays of subatomic particles produced at the CERN Large Hadron Collider. Our normalization is minimally constraining and allows the underlying architecture to maintain higher expressiveness compared to other techniques which aim to either control the Lipschitz constant of the model or ensure its monotonicity. We show how the algorithm was used to train a powerful, robust, and interpretable discriminator for heavy-flavor-quark decays, which has been adopted for use as the primary data-selection algorithm in the LHCb real-time data-processing system in the current LHC data-taking period known as Run 3. In addition, our algorithm has also achieved state-of-the-art performance on benchmarks in medicine, finance, and other applications.

1 Introduction

The sensor arrays of the LHC experiments produce over 100 TB/s of data, more than a zettabyte per year. After drastic data-reduction performed by custom-built read-out electronics, the annual data volumes are still exabytes, which cannot be stored indefinitely. Therefore, each experiment processes the data in real-time and decides whether each proton-proton collision event should remain persistent or be discarded permanently, referred to as triggering in particle physics. Trigger classification algorithms must be designed to minimize the impact of effects like experimental instabilities that occur during data taking—and deficiencies in simulated training samples. (If we knew all of the physics required to produce perfect training samples, there would be no point in performing the experiment.) The need for increasingly complex discriminators for the LHCb trigger system LHCb-DP-2012-004 ; LHCb-DP-2019-001 calls for the use of expressive models which are both robust and interpretable. Here we present an architecture based on a novel weight normalization technique that achieves both of these requirements.

Robustness

A natural way of ensuring the robustness of a model is to constrain the Lipschitz constant of the function it represents, defined such that for every pair of points on the graph of the function, the absolute value of the slope of the line connecting them is not greater than the Lipschitz constant. To this end, we developed a new architecture whose Lipschitz constant is constrained by design using a novel layer-wise normalization which allows the architecture to be more expressive than the current state-of-the-art with more stable and faster training.

Interpretability

An important inductive bias in particle detection at the LHC is the idea that particular collision events are more interesting if they are outliers, e.g., possible evidence of a particle produced with a longer-than-expected (given known physics) lifetime would definitely warrant further detailed study. The problem is that outliers are often caused by experimental artifacts or imperfections, which are included and labeled as background in training; whereas the set of all possible interesting outliers is not possible to construct a priori, thus not included in the training process. This problem is immediately solved if outliers are better is implemented directly using an expressive monotonic architecture. Some work was done in this regard liu2020certified ; you2017deepLattice ; nips93monotonic but most implementations are either not expressive enough or provide no guarantees. We present Monotonic Lipschitz Networks which overcome both of these problems by building an architecture that is monotonic in any subset of the inputs by design, while keeping the constraints minimal such that it still offers significantly better expressiveness compared to current methods.

2 Monotonic Lipschitz Networks

The goal is to develop a neural network architecture representing a scalar-valued function

| (1) |

that is provably monotonic in any subset of inputs and whose gradient (with respect to its inputs) has a constrained magnitude in any particular direction. In an experimental setting, this latter property is a measure of robustness to small changes in experimental conditions or to small deficiencies in the training samples.

Constraints with respect to a particular metric will be denoted as Lipp. We start with a model that is Lip1 with Lipschitz constant if (we show below how to train such a model)

| (2) |

The choice of 1-norm is crucial because it allows a well defined maximum directional derivative for each input regardless of the gradient direction. This has the convenient side effect that we can tune the robustness requirement for each input individually. Note that rescaling the inputs allows for directional dependence.

2.1 Enforcing Monotonicity

Assuming we have trained a model that satisfies (2), we can make an architecture with built-in monotonicity by adding a term that is linear (or has gradient ) in each direction in which we want to be monotonic:

| (3) |

where denotes the set of indices of the input features for which we would like to be monotonic. This residual connection enforces monotonicity:

| (4) |

Note that the construction presented here only works with Lip1 constraints as Lipp≠1 functions introduce dependencies between the partial derivatives. In addition, we stress that monotonicity is defined via partial derivatives. The value of is guaranteed to increase when is increased while keeping all constant. It is therefore advisable to look out for ill defined edge cases. For instance, let in the training data and define . This is incompatible with the architecture and produces unwanted results unless for both and (otherwise the problem is ill posed).

To the best of our knowledge, the only use of residual connections in the literature when trying to learn monotonic functions is in the context of invertible ResNets behrmann2019invertible . Instead, the state-of-the-art approach for learning monotonic functions involves penalizing negative gradients in the loss, then certifying the final model is monotonic, rather than enforcing it in the architecture (e.g. in liu2020certified ).

2.2 Enforcing Lipschitz Constraints

Ideally, the construction should be a universal approximator of Lip1 functions. Here, we discuss possible architectures for this task.

Lip1 constrained models

Fully connected networks can be Lipschitz bounded by constraining the matrix norm of all weights p_norm_paper ; spectral2018 . Given the fully connected network with activation

| (5) |

where is the weight matrix of layer , satisfies Eq. (2) if

| (6) |

and has a Lipschitz constant less than or equal to 1. There are multiple ways to enforce Eq. (6). Two possibilities that involve scaling by the operator norm of the weight matrix p_norm_paper are

| (7) |

In our studies thus far, the latter variant seems to train slightly better. However, in some cases it might be useful to use the former to avoid the scale imbalance between the neural network’s output and the residual connection used to induce monotonicity.

In order to satisfy Eq. (6), it is not necessary to divide the entire matrix by its 1-norm. It is sufficient to ensure that the absolute sum over each column is constrained:

| (8) |

This novel normalization scheme tends to give even better training results in practice. While Eq. (8) is not suitable as a general-purpose scheme, e.g. it would not work in convolutional networks, its performance in training in our analysis motivates further study of this approach in future work.

The constraints in Eqs. (7) and (8) can be applied in different ways. For example, one could normalize the weights directly before each call such that the induced gradients are propagated through the network like in spectral2018 . While one could come up with toy examples for which propagating the gradients in this way hurts training, it appears that this approach is what usually is implemented for spectral norm spectral2018 in PyTorch and TensorFlow. Alternatively, the constraint could be applied by projecting any infeasible parameter values back into the set of feasible matrices after each gradient update as in Algorithm 2 of p_norm_paper . Algorithm 1 summarizes our approach.

Preserving expressive power

Some Lipschitz network architectures (e.g. spectral2018 ) tend to overconstrain the model in the sense that these architectures cannot fit all functions -Lip1 due to gradient attenuation. For many problems this is a rather theoretical issue. However, it becomes a practical problem for the monotonic architecture since it often works on the edges of its constraints, for instance when partial derivatives close to zero are required. The authors of huster2018 showed that ReLU networks are unable to fit the function if the layers are norm-constrained with . The reason lies in fact that ReLU, and most other commonly used activations, do not have unit gradient with respect to the inputs over their entire domain.

While element-wise activations like ReLU cannot have unit gradient over the whole domain without being exactly linear, the authors of sorting2019 explore activations that introduce nonlinearities by reordering elements of the input vector. They propose the following activation function:

| (9) |

which sorts its inputs in chunks (groups) of a fixed size. This operation has gradient with respect to every input and gives architectures constrained with Eq. (6) increased expressive power. In addition, we have found that using this activation function also results in achieving sufficient expressiveness with a small number of weights, making the networks ideal for use in resource-constrained applications.

3 Example Applications to Simple Models

Before applying our new architecture to real-time data-processing at the LHC, we first demonstrate that it behaves as expected on some simple toy problems.

3.1 Robustness to Outliers

We will demonstrate the robustness that arises from the Lipschitz constraint by making a simple toy regression model to fit to data sampled from a 1-dimensional function with one particularly noisy data point. The underlying model that we sample from here has the form

| (10) |

where is Gaussian noise with unit variance for one data point and otherwise. While this toy problem will explicitly show that the Lipschitz network is more robust against outliers than an unconstrained network due to its bounded gradient, it also serves as a proxy for any scenario with deficiencies in the training data. N.b., due to its bounded gradient a Lipschitz network is also more robust against adversarial attacks and data corruption than an unconstrained model.

Figure 1 shows that the unconstrained model overfits the data as expected, whereas applying our approach from Sec. 2 does not. The Lipschitz model effectively ignores the outlier, since there is no way to accommodate that data point while respecting its built-in gradient bound. In addition, we see that the Lipschitz constraint enforces much smoother functions over the full range—the degree of this smoothness determined by us via the chosen Lipschitz constant.

3.2 Monotonic Dependence

To demonstrate monotonicity, we will make a simple toy regression model to fit to data sampled from the following 1-dimensional function:

| (11) |

where is a Gaussian noise term whose variance is linearly increasing in . In this toy model, we will assume that our prior knowledge tells us that the function we are trying to fit must be monotonic, despite the non-monotonic behavior observed due to the noise. This situation is ubiquitous in real-world applications of AI/ML, but is especially prevalent in the sciences (see, e.g., Sec. 4).

First, we train standard (unconstrained) neural networks on several different samples drawn from Eq. (11). Here, we also consider two generic situations where the training data are missing: one that requires extrapolation beyond the region covered by the training data, and another that requires interpolation between two occupied regions. Figure 2 shows that the unconstrained models overfit the data as expected, resulting in non-monotonic behavior. Furthermore, when extrapolating or interpolating into regions where training data were absent, the unconstrained models exhibit highly undesirable and in some cases unpredictable behavior. (This problem is exacerbated in higher dimensions and sparser data.) In the case of extrapolation, the behavior of the unconstrained model is largely driven by the noise in the last one or two data points. The interpolation scenario is less predictable.

While the overfitting observed here could be reduced by employing some form of strong regularization, such an approach would not (in general) lead to monotonic behavior, nor would it formally bound the gradient. Applying our approach from Sec. 2 does both. Figure 2 demonstrates that our method always produces a monotonic function, even in the extrapolation scenario where the slope of the noise terms in the last few data points is strongly suggestive of non-monotonic behavior. In addition, the Lipschitz constraint produces much smoother models than in the unconstrained case. Therefore, we conclude that the monotonicity and Lipschitz constraints do act as strong regularization against fitting random non-monotonic noise as expected.

3.3 Expressiveness

GroupSort weight-constrained neural networks can describe arbitrarily complex decision boundaries in classification problems provided the proper objective function is used in training (the usual cross entropy and MSE losses may be sub-optimal for Lipschitz models in some scenarios manyfaces , see Sec. 5). Here we will directly regress on a synthetic boundary to emulate a classification problem. The boundary is the perimeter of circle with oscillating radius and is given by

| (12) |

where and are chosen to be and , respectively. Figure 3 shows an example where this complicated decision boundary is learned by a Lipschitz network (as defined in Sec. 2) trained on the boundary while achieving zero loss, demonstrating the expressiveness that is possible to obtain in these models.

4 Example Application: The LHCb inclusive heavy-flavor Run 3 trigger

The architecture presented in Sec. 2 has been developed with a specific purpose in mind: The classification of the decays of heavy-flavor particles produced at the Large Hadron Collider, which are bound states that contain a beauty or charm quark that live long enough to travel an observable distance before decaying. The dataset used here is built from simulated proton-proton () collisions in the LHCb detector. Charged particles that survive long enough to traverse the entire detector before decaying are reconstructed and combined pairwise into decay-vertex (DV) candidates.

The task concerns discriminating between DV candidates corresponding to the decays of heavy-flavor particles versus all other sources of DVs. The signatures of a heavy-flavor DV are substantial separation from the collision point, due to the relatively long heavy-flavor particle lifetimes, and sizable transverse momenta, , of the component particles, due to the large heavy-flavor particle masses. There are three main sources of background DVs. The first involves DVs formed from particles that originated directly from the collision, but where the location of the DV is measured to have non-zero displacement due to resolution effects. These DVs will typically have small displacements and small . The second source of background DVs arises due to particles produced in the collision interacting with the LHCb detector material, creating new particles at a point in space far from the collision point. Such DVs will have even larger displacement than the signal, but again have smaller . The third source involves at least one fake particle, i.e. a particle inferred from detector information that did not actually exist in the event. Since the simplest path through the detector (a straight line) corresponds to the highest possible momentum, DVs involving fake particles can have large .

In the first decision-making stage of the LHCb trigger, a pre-selection is applied to reject most background DVs, followed by a classifier based on the following four DV features: , the scalar sum of the of the two particles that formed the DV; , the smaller of the two increases observed when attempting to instead include each component particle into the -collision vertex fit, which is large when the DV is far from the collision point; the quality of the DV vertex fit; and the spatial distance between the DV and -collision locations, relative to their resolutions. N.b., the threshold required on the classifier response when run in real time during data taking is fixed by the maximum output bandwidth allowed from the first trigger stage.

Unfortunately, extremely large values of both displacement and momentum are more common for backgrounds than for heavy-flavor signals. For the former, this is easily visualized by considering a simplified problem using only the two most-powerful inputs, and . Figure 4 (left) shows that an unconstrained neural network learns to reject DVs with increasing larger displacements, corresponding to the lower right corner in the figure. Figure 5 (left) shows that this leads to a dependence of the signal efficiency on the lifetime of the decaying heavy-flavor particle. Larger lifetimes are disfavored since few heavy-flavor particles live more than . While rejecting DVs with the largest displacements does maximize the integrated signal efficiency in the training sample, this is undesirable because in many cases studying the longest-lived heavy-flavor particles is of more interest than simply collecting the largest decay sample integrated over lifetime (see, e.g., HFLAV:2022pwe ). Furthermore, many proposed explanations of dark matter and other types of new physics predict the existence of new particles with similar properties to heavy-flavor particles, but with longer lifetimes RF6 ; Graham:2021ggy . This classifier would reject these particles because it is unaware of our inductive bias that highly displaced DVs are worth selecting in the trigger and studying in more detail later.

Since the LHCb community is generally interested in studying highly displaced DVs for many physics reasons, we want to ensure that a larger displacement corresponds to a more signal-like response. The same goes for DVs with higher . Enforcing a monotonic response in both features is thus a desirable property, especially because it also ensures the desired behaviour for data points that are outside the boundaries of the training data. Multiple methods to enforce monotonic behavior in BDTs already exist auguste2020better , and Figs. 4 (middle) and 5 (middle) show that this approach works here. However, the jagged decision boundary can cause problems, e.g., when measuring the heavy-flavor spectrum. Specifically, the jagged BDT decision boundary can lead to sharp changes in the selection efficiency. If there is not perfect alignment of where these changes occur with where the interval boundaries of the spectrum are defined, then correcting for the efficiency can be challenging. Figure 4 (right) shows that our novel approach, outlined in Sec. 2, successfully produces a smooth and monotonic response, and Fig. 5 (right) shows that this provides the monotonic lifetime dependence we wanted in the efficiency.

Not only does our architecture guarantee a monotonic response in whatever features the analyst wants, it is guaranteed to be robust with respect to small changes to the inputs as governed by the constrained Lipschitz constant. Because calibration and resolution effects play a role in obtaining the features during detector operation, robustness is a necessary requirement for any classification performed online. Downstream analyses of these data depend on their stability. Figure 6 shows that the cost in terms of signal efficiency loss of enforcing monotonicity and robustness is small, even under the unrealistic assumption that the training data were, in fact, perfect. Therefore, the actual cost is likely negligible, while the benefits of the guarantees provided is hard to quantify but immediately obvious to the LHCb collaboration. Our algorithm runs in the LHCb trigger software stack and has been chosen to replace Refs. BBDT ; LHCb-PROC-2015-018 as the primary trigger-selection algorithm used by LHCb in Run 3. Due to its guaranteed robustness—and excellent expressiveness even for small networks—this architecture is being explored for other uses within the LHCb trigger system for Run 3, since robustness and monotonicity are ubiquitous inductive biases in experimental particle physics.

Experiment details

The default LHCb model shown here is a 4-input, 3-layer (width 20) network with GroupSort activation (here, all outputs are sorted), , constrained using Eq. (8). Inference times in the fully GPU-based LHCb trigger application Aaij:2019zbu are 4 times faster than the Run 3 trigger BDT that was the baseline algorithm before ours was chosen to replace it (the BDT baseline was based on the model used during data taking in Run 2 BBDT ; LHCb-PROC-2015-018 ). We performed runs with different seeds but the differences were negligible, at the level of .

For the unconstrained network, we use the same architecture but without the linear term and without the weight constraints during training. The depth and width are the same as used for the monotonic Lipschitz network.

The BDT is a LightGBM lightgbm gradient boosted classifier with 1000 base trees and a maximum 25 leaves per tree. Monotonicity is enforced there via the built-in monotone_constraints keyword.

Code for the monotonic network implementation of the architecture developed here can be found at https://github.com/niklasnolte/MonotoneNorm.

5 Limitations and Potential Improvements

We are working on improving the architecture as follows. First, common initialization techniques are not optimal for weight-normed networks arpit2019initialize . Simple modifications to weight initialization could likely improve convergence significantly. Second, it appears from empirical investigation that the networks described in Eq. (6) with GroupSort activation could be universal approximators, as we have yet to find a function that could not be approximated well enough with a deep enough network. A proof for universality is still required and could be developed in the future. Note that universal approximation is indeed proven for a similar architecture that only differs slightly in the normalization scheme, see sorting2019 . Neither of these limitations has any practical impact on the example applications discussed in the previous sections.

In many scenarios, Lipschitz-constrained architectures are considered inferior to unconstrained architectures because of their inability to offer competitive performance on standard benchmarks. This low performance is partly due to the fact that standard losses (such as cross-entropy) are not an adequate proxy of the metric of interest (accuracy) for the Lipschitz-constrained models. At a fundamental level, for any maximally accurate unconstrained classifier with Lipschitz constant , there exists a Lipschitz 1 classifier that replicates the former’s decision boundary, namely, . In the following, we will demonstrate a basic toy setting in which a maximally accurate Lipschitz classifier exists but cannot be obtained using standard losses.

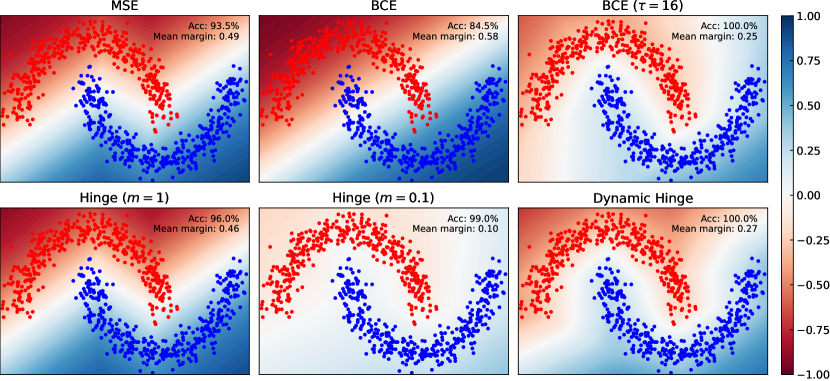

To understand the effect of the choice of objective function, we train a Lipschitz-constrained model to separate the two-moons dataset as shown in Fig. 7. This example is special in that the two samples do not overlap and can be completely separated by a Lipschitz-bounded function; however, that function cannot return the true label values for any data points due to the Lipschitz bound. Therefore, a loss function that penalizes any difference of the model output to the true label now faces a misalignment of the optimization target and the actual goal: While the classification goal is to have high accuracy, i.e. correct output sign, the optimization target is to minimize deviations of the output from the true label. This misalignment becomes irrelevant for a function with unbounded Lipschitz constant. We will show below that for examples such as this there is an important dependence on the objective used and its hyperparameters.

First, we note that losses with exponential tails (in the sense that they require large weights to reach zero) are in general not suitable for maximizing accuracy. In practice, this can be remedied in cross-entropy by increasing the temperature. Note that cross-entropy with temperature is defined as

| (13) |

where is the usual binary cross-entropy loss on targets and predictions . Following PyTorch conventions, are logits which will be normalized before computing the negative log-likelihood.

An accurate classification boundary comes at the expense of reduced margins when classes have small separation. A maximally robust accurate classifier will, however, have optimal margins if trained using the appropriate objective. In the case of separable data (i.e. when classes have disjoint support), the maximally robust accurate Lipschitz classifier is the signed-distance function (SDF) manyfaces defined, in the binary case as

| (14) |

where and are the sets of points for which has label and , respectively, and is the boundary between classes defined as . For a closed set , the distance to is defined as .

A naive objective minimized by the SDF is the hinge loss with margin given by . Because we do not have access to the true decision boundary a priori, as a proxy, we use the following objective:

| (15) |

where and is defined as

| (16) |

While this objective produces the highest margins for an accurate classifier, as depicted in Fig. 7, it may encounter scalability issues when applied to higher-dimensional problems due to the unavoidable spareness of the training data. There are many possible alternative approaches that could resolve this issue, though this remains an open problem. For lower-dimensional problems with overlapping datasets—as studied in the various examples above and the most common scenario in scientific applications—this non-optimal loss issue does not appear to be relevant.

Another factor that restricts the perceived expressiveness of Lipschitz architectures is the lack of access to standard techniques that improve convergence in unconstrained networks. For example, batch norm cannot be directly used with Lipschitz architectures. If the variance is too small, it may exceed the Lipschitz bound, and if it is too large, it can reduce the effective Lipschitz constant substantially.

6 Summary & Discussion

The Lipschitz constant of the map between the input and output space represented by a neural network is a natural metric for assessing the robustness of the model. We developed a new method to constrain the Lipschitz constant of dense deep learning models that can also be generalized to other architectures. Our method relies on a simple weight normalization scheme during training that ensures the Lipschitz constant of every layer is below an upper limit specified by the analyst. A simple monotonic residual connection can then be used to make the model monotonic in any subset of its inputs, which is useful in scenarios where domain knowledge dictates such dependence.

Our implementation of Lipschitz constrained networks is minimally constraining compared to other weight-normed models. This allows the underlying architecture to be more expressive and easier to train while maintaining explicit robustness guarantees. We showed how the algorithm was used to train a powerful, robust, and interpretable discriminator for heavy-flavor decays in the LHCb trigger system. Furthermore, thanks to the expressive capacity of the architecture, we were able to shrink the number of model parameters to meet the memory and latency requirements of the LHCb trigger, which allows for faster event selection. This translates to higher sensitivity to the elusive physics phenomena we aim to observe. Our algorithm has been adopted for use as the primary data-selection algorithm in the LHCb trigger in the current LHC data-taking period known as Run 3.

Given that the desire for robustness and interpretability and benevolent out-of-distribution behavior is ubiquitous when performing experiments, we expect that our architecture could have wide-ranging applications in science. In addition, our architecture could also be used in various applications in which robustness is required such as safety-critical environments and those which need protection against adversarial attacks. Monotonicity is a desirable property in various applications where fairness and safety are a concern. There are many scenarios in which models which are not monotonic are unacceptable. For example, in Ref. ICLR-Mono we showed that our algorithm achieves state-of-the-art performance on benchmarks in medicine, finance, and other applications with monotonic inductive biases. In addition, in Ref. NEEMo we presented a new and interesting direction for the architecture developed here: Estimation of the Wasserstein metric (Earth Mover’s Distance) in optimal transport by employing the Kantorovich-Rubinstein duality to enable its use in geometric fitting applications. Therefore, we expect that our algorithm will have far-reaching impact well beyond experimental physics.

Acknowledgement

The authors would like to thank the LHCb computing and simulation teams for their support and for producing the simulated LHCb samples used to benchmark the performance of RTA software. This work was supported by NSF grants PHY-2019786 (The NSF AI Institute for Artificial Intelligence and Fundamental Interactions, http://iaifi.org/) and OAC-2004645.

References

- (1) R. Aaij et al., The LHCb trigger and its performance in 2011, JINST 8 (2013) P04022 LHCb-DP-2012-004, [1211.3055].

- (2) R. Aaij et al., Performance of the LHCb trigger and full real-time reconstruction in Run 2 of the LHC, JINST 14 (2019) P04013 [1812.10790].

- (3) X. Liu, X. Han, N. Zhang and Q. Liu, Certified monotonic neural networks, 2020.

- (4) S. You, D. Ding, K. Canini, J. Pfeifer and M. Gupta, Deep lattice networks and partial monotonic functions, 2017.

- (5) J. Sill, Monotonic networks, in Advances in Neural Information Processing Systems, M. Jordan, M. Kearns and S. Solla, eds., vol. 10, MIT Press, 1998, https://proceedings.neurips.cc/paper/1997/file/83adc9225e4deb67d7ce42d58fe5157c-Paper.pdf.

- (6) J. Behrmann, W. Grathwohl, R.T.Q. Chen, D. Duvenaud and J.-H. Jacobsen, Invertible residual networks, 2019.

- (7) H. Gouk, E. Frank, B. Pfahringer and M.J. Cree, Regularisation of neural networks by enforcing lipschitz continuity, 2020.

- (8) T. Miyato, T. Kataoka, M. Koyama and Y. Yoshida, Spectral Normalization for Generative Adversarial Networks, arXiv e-prints (2018) arXiv:1802.05957 [1802.05957].

- (9) T. Huster, C.-Y.J. Chiang and R. Chadha, Limitations of the lipschitz constant as a defense against adversarial examples, 2018.

- (10) C. Anil, J. Lucas and R. Grosse, Sorting out Lipschitz function approximation, in Proceedings of the 36th International Conference on Machine Learning, K. Chaudhuri and R. Salakhutdinov, eds., vol. 97 of Proceedings of Machine Learning Research, pp. 291–301, PMLR, 09–15 Jun, 2019, http://proceedings.mlr.press/v97/anil19a.html.

- (11) L. Béthune, T. Boissin, M. Serrurier, F. Mamalet, C. Friedrich and A. González-Sanz, Pay attention to your loss: understanding misconceptions about 1-lipschitz neural networks, 2021. 10.48550/ARXIV.2104.05097.

- (12) HFLAV collaboration, Averages of -hadron, -hadron, and -lepton properties as of 2021, 2206.07501.

- (13) S. Gori, M. Williams et al., Dark Matter Production at Intensity-Frontier Experiments, in 2021 Snowmass Summer Study [2209.04671].

- (14) M. Graham, C. Hearty and M. Williams, Searches for Dark Photons at Accelerators, Ann. Rev. Nucl. Part. Sci. 71 (2021) 37 [2104.10280].

- (15) C. Auguste, S. Malory and I. Smirnov, A better method to enforce monotonic constraints in regression and classification trees, 2020.

- (16) V.V. Gligorov and M. Williams, Efficient, reliable and fast high-level triggering using a bonsai boosted decision tree, JINST 8 (2013) P02013 [1210.6861].

- (17) T. Likhomanenko et al., LHCb topological trigger reoptimization, J. Phys. Conf. Ser. 664 (2015) 082025.

- (18) R. Aaij et al., Allen: A high level trigger on GPUs for LHCb, Comput. Softw. Big Sci. 4 (2020) 7 [1912.09161].

- (19) G. Ke, Q. Meng, T. Finely, T. Wang, W. Chen, W. Ma et al., Lightgbm: A highly efficient gradient boosting decision tree, in Advances in Neural Information Processing Systems 30 (NIP 2017), December, 2017, https://www.microsoft.com/en-us/research/publication/lightgbm-a-highly-efficient-gradient-boosting-decision-tree/.

- (20) D. Arpit, V. Campos and Y. Bengio, How to initialize your network? robust initialization for weightnorm & resnets, 2019.

- (21) O. Kitouni, N. Nolte and M. Williams, Expressive Monotonic Neural Networks, in International Conference on Learning Representations (ICLR 2023), 2023.

- (22) O. Kitouni, N. Nolte and M. Williams, Finding NEEMo: Geometric Fitting using Neural Estimation of the Energy Mover’s Distance, in Advances in Neural Information Processing Systems (NeurIPS 2022), Machine Learning and the Physical Sciences, 2022 [2209.15624].