RLEKF: An Optimizer for Deep Potential with Ab Initio Accuracy

Abstract

It is imperative to accelerate the training of neural network force field such as Deep Potential, which usually requires thousands of images based on first-principles calculation and a couple of days to generate an accurate potential energy surface. To this end, we propose a novel optimizer named reorganized layer extended Kalman filtering (RLEKF), an optimized version of global extended Kalman filtering (GEKF) with a strategy of splitting big and gathering small layers to overcome the computational cost of GEKF. This strategy provides an approximation of the dense weights error covariance matrix with a sparse diagonal block matrix for GEKF. We implement both RLEKF and the baseline Adam in our Dynamics package and numerical experiments are performed on 13 unbiased datasets. Overall, RLEKF converges faster with slightly better accuracy. For example, a test on a typical system, bulk copper, shows that RLEKF converges faster by both the number of training epochs (11.67) and wall-clock time (1.19). Besides, we theoretically prove that the updates of weights converge and thus are against the gradient exploding problem. Experimental results verify that RLEKF is not sensitive to the initialization of weights. The RLEKF sheds light on other AI-for-science applications where training a large neural network (with tons of thousands parameters) is a bottleneck.

Introduction

Ab initio molecular dynamics (AIMD) has been the method of choice in modeling physical phenomena from a microscopic scale, for example, water (Rahman and Stillinger 1971; Stillinger and Rahman 1974), alloy (Wang et al. 2009), nanotube (Raty, Gygi, and Galli 2005), and even protein (Karplus and Kuriyan 2005). However, the cubic scaling of the first-principles methods (Meier, Laino, and Curioni 2014) has hindered both spatial and temporal scales of AIMD packages within thousands of atoms and picoseconds on modern supercomputers. To overcome the “scaling wall” of AIMD, two types of machine-learned MD (MLMD) methods are adopted. The first one is based on classical ML methods. In 1992, Ercolessi and Adam first introduced ML for describing potentials with an accuracy comparable to that obtained by ab initio methods (Ercolessi and Adams 1992, 1994), and to date, methods like ACE (Drautz 2019; Lysogorskiy et al. 2021), SNAP (Thompson et al. 2015), and GPR (Bartók et al. 2010) are developed and widely used in physical problems such as copper and silicon, tantalum, bulk crystals. The second approach is based on neural network (NN) and was first introduced in 2007 by Belher and Parrinello (Behler and Parrinello 2007). The neural network MD (NNMD) method approximates both atomic energy () and force () with a local neighboring environment, and the atomic potential energy surface is trained through tons of data generated from first-principles calculations. One current state-of-the-art is the Deep Potential (DP) model, which combines large NN and physical symmetries (translation, rotation, and permutation invariance) for accurately describing the high-dimensional configuration space of interatomic potential. Although the corresponding package DeePMD-kit can reach 10 billion atoms when scaling to the top supercomputers (Jia et al. 2020; Guo et al. 2022) in model inference , training procedure of an individual model can still take from hours to days and is the bottleneck.

The two most commonly used training methods are Adam (Kingma and Ba 2014) and scholastic gradient descent (SGD) (Saad 1998) in NNMD packages due to their integration in NN framework such as TensorFlow and PyTorch. For example, many NNMD packages such as HDNNP (Behler 2014), SIMPLE-NN (Lee et al. 2019) adopt Adam in the training of interatomic potential. Yet these optimizers have a slow convergence rate in searching for the optimal solution on the landscape and can take up to hundreds of epochs in training one NNMD model with thousands of training data. Moreover, SGD suffers from gradient exploding without proper control of the learning rate.

Global extended Kalman filtering (Chui, Chen et al. 2017) (GEKF) is a good choice in both convergence and robustness. For example, RuNNer (Behler 2011) adopts GEKF as an optimizer in its training of a simple three-layer fully connected NN with 1000 parameters or so. As shown in (Singraber et al. 2019), RuNNer can achieve 0.69 meV/atom in Energy RMSE and 35.5 meV/ in Force RMSE for HO physical system. However, since the error covariance matrix is updated globally (Fig. 2), GEKF can be computationally expensive when NNs with tens of thousands of parameters are applied.

Our main contribution is a reorganized layer extended Kalman filtering (RLEKF) method, which approximates the dense weights error covariance matrix of GEKF with a sparse diagonal block matrix to reduce the computational cost. Technically, these layers are reorganized by splitting big and gathering adjacent small layers. To have a fair comparison, both RLEKF and Adam methods are implemented in our NNMD package Dynamics. Compared to the Adam method, our testing results show that RLEKF can reach the same or higher accuracy for both force (better than Adam in 7 out 13 testing cases) and energy (better than Adam in 11 out of 13 testing cases). For a typical copper system, the time-to-solution of RLEKF can be and faster in terms of the number of epochs and wall-clock time, respectively. Especially, RLEKF can significantly reduce the number of epochs to 2-3 for reaching a reasonable accuracy ( the RMSE of the best accuracy possible). We theoretically prove the weights updating convergence and therefore this protects RLEKF from gradient exploding. Our work also sheds light on other NN-based applications where training NNs with a relatively large number of parameters is a bottleneck.

Related Work

NNMD Packages. Fully connected NNs are the most widely used in NNMD packages. For example, HDNNP (Behler and Parrinello 2007; Behler 2017), which is a three-layered fully-connected NN is introduced in RuNNer (Behler 2011). Other packages such as BIM-NN (Yao et al. 2017), Simple-NN (Lee et al. 2019), CabanaMD-NNP (Desai, Reeve, and Belak 2022), SPONGE (Huang et al. 2022), DeepMD-kit (Wang and Weinan 2018) are also implemented via fully-connected NN. Many physical phenomena such as a diagram of water and CuS (Singraber et al. 2019), chemical molecule (Yao et al. 2017), SiO (Lee et al. 2019), organic molecules (CH, CH) (Lysogorskiy et al. 2021) are studied with the packages above.

Recent progress of NNMD packages implemented with graph NN (GNN) is gaining momentum. DimeNet++ (Gasteiger, Groß, and Günnemann 2020; Gasteiger et al. 2020), NequIP (Batzner et al. 2022), has shown great potential in describing organic molecules (QM7 and QM9 dataset) at high accuracy. We remark that NNMD packages also differ in employing physical symmetries for NN inputs (“features”), which are not discussed due to it is out of the scope of this paper.

Training Methods in NNMD Packages. Nearly all NNMD packages mentioned above use Adam and SGD as the training procedure for their ease of use in NN frameworks. One challenge of these methods is their time-consuming model training. For example, it usually takes more than 100 epochs to systematically train an individual DP model in the DeePMD-kit software.

As an alternative, Kalman filtering (Kalman et al. 1960; Welch, Bishop et al. 1995) (KF) aims to estimate states of a linear process theoretically based on a state-measurement model with Gaussian noise by an estimator optimal in the sense of minimizing the mean square error of predicted states with the noisy measurement as input. It is widely used in autonomous, navigation, and interactive computer graphics due to its fast convergence and noise filtering. However, the formulation of KF confines itself to a linear optimization problem. Extended Kalman filtering (Smith, Schmidt, and McGee 1962) (EKF) is introduced to solve nonlinear optimization problems through Taylor expansion, and then the linearized problem is solved by KF. In the implementation, EKF has many variants such as NDEKF (Murtuza and Chorian 1994), ONDEKF, LDEKF, and FDEKF. Note that the performance of EKF and its variants are compared in Ref. (Heimes 1998).

We remark that in training an NNMD model with more than tens of thousands of parameters, such as the DP mentioned in this paper, both Adam and EKF (and its currently known variants) are either not effective or not efficient.

Problem Setup and Formulation

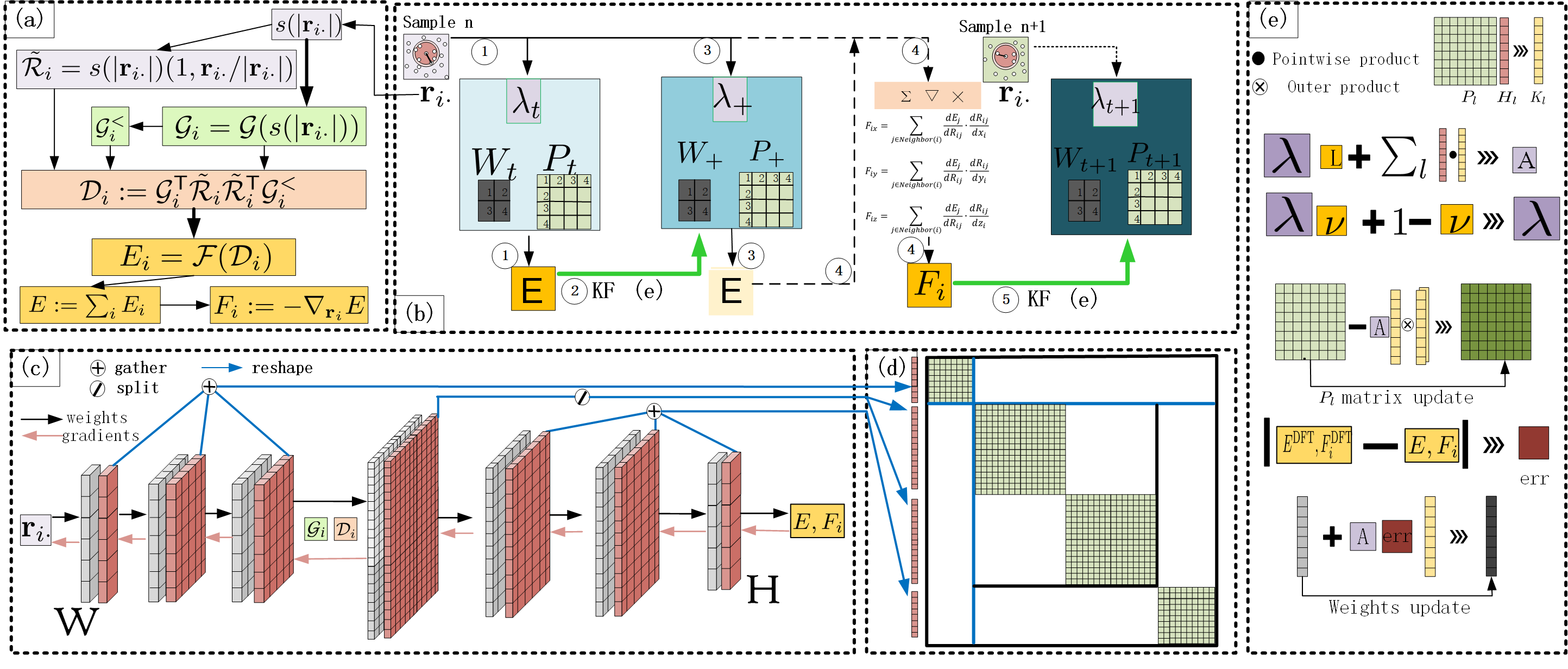

DP. The key steps of the DP training are shown in Fig. 1(a). For each atom , the physical symmetries such as translational, rotational, and permutational invariances are integrated into the descriptor through a three-layer fully connected network named embedding net. Then is trained via a three-layered fitting net.

-

1.

Every snapshot of the molecule system consists of each atom’s 3D Cartesian coordinates which is then translated into neighbor list of atom , as the input of DP NN. Then, we gather it into its smooth version , , where when , when , decaying smoothly between the two thresholds, is the maximum length of all neighbor lists, is the neighbor index of atom .

-

2.

Define the embedding net , , where is called symmetry order, , , , and and the functions and are element-wise.

-

3.

timing yields the so-called descriptor , i.e. is the several columns of and .

-

4.

The fitting net is , where is reshaped into a vector of form , , , , , , , .

-

5.

The output , .

EKF with Memory Factor for NNs. For neural networks, the model of interest

| (1) |

is formulated in stochastic language as an EKF problem targeting on , where is the vector of all trainable parameters in the network , are pairs of feature and label, can also be seen as measurements of EKF, are noise terms subject to normal distribution with mean and variances correspondingly, and . With fixed and bounded , will be approximated well by its linearization at , just omitting a term .

| (2) | ||||

If set and rewrite (2) the following KF problem

| (3) | |||||

| (4) |

At the beginning of training, the estimator is far away from , so less attention should be paid to those data fed to the network at an earlier stage of training than those at later stage. Through timing a factor and , where and , the last problem enjoys the better variant as below

| (5) |

where is called memory factor. The greater is, the more weight, or say attention, is paid to previous data. According to basic KF theory (Haykin and Haykin 2001) , we obtain

Finally, we recover the estimator of via that of divided by the factor , define , find , and then get our weights updating strategy

| Systems | Structure | Time Step (fs) | # Snapshots | Energy(trn) | Energy(tst) | Force(trn) | Force(tst) |

|---|---|---|---|---|---|---|---|

| Cu | FCC | 1 | 1646 | 0.250/0.451 | 0.327/0.442 | 40.6/39.7 | 45.2/44.4 |

| Si | DC | 2.5-3.5 | 3000 | 0.148/0.165 | 0.186/0.181 | 22.3/21.9 | 24.1/23.4 |

| C | Graphene | 2-3.5 | 4000 | 0.0856/0.133 | 0.267/0.278 | 24.2/27.6 | 34.7/35.5 |

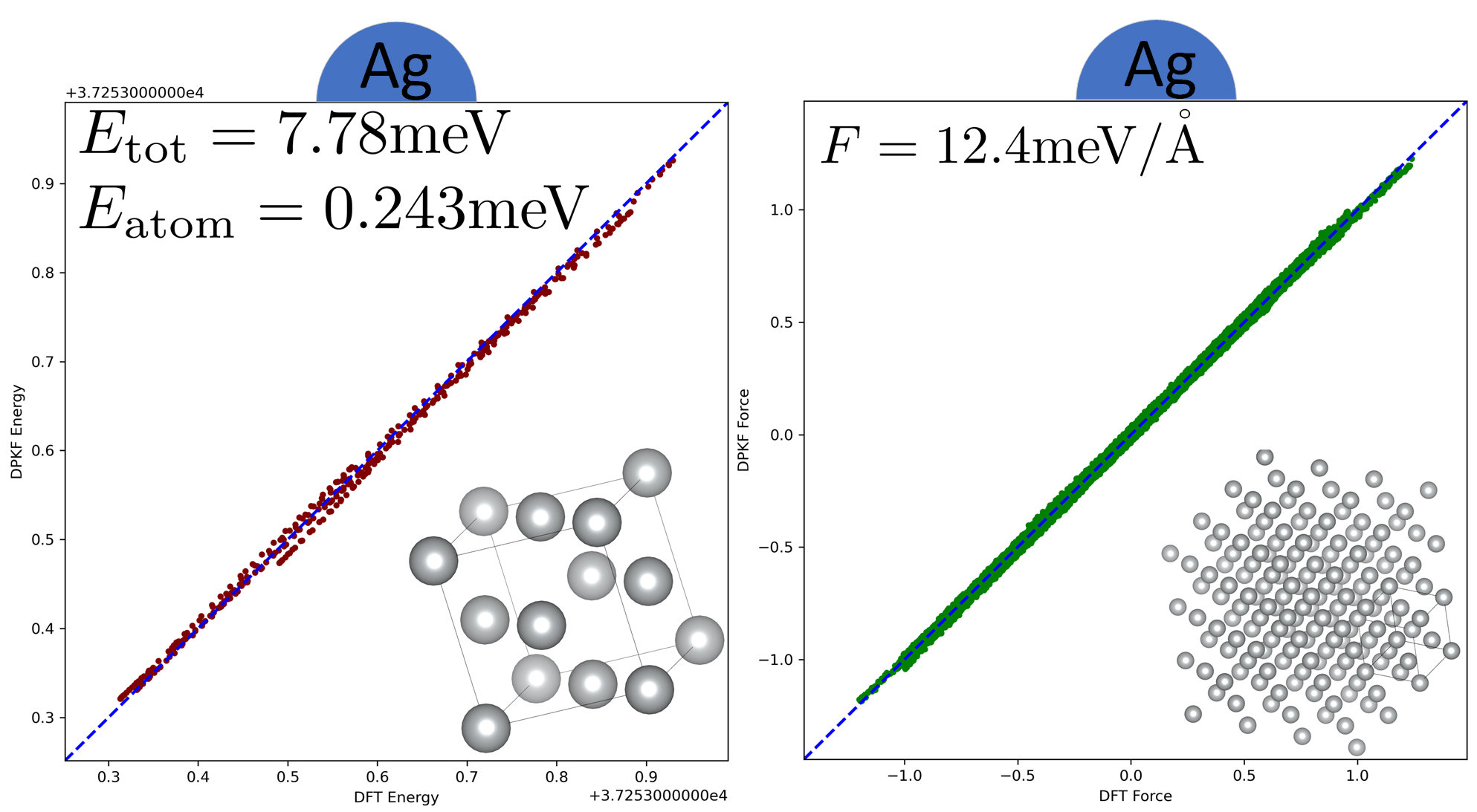

| Ag | FCC | 2.5-3 | 2015 | 0.142/0.159 | 0.243/0.265 | 11.3/11.0 | 12.4/12.5 |

| Mg | HCP | 0.5-2 | 4000 | 0.111/0.160 | 0.169/0.189 | 13.0/14.8 | 16.1/17.1 |

| NaCl | FCC | 2-3.5 | 3193 | 0.0403/0.0631 | 0.0435/0.0514 | 6.06/5.78 | 6.69/6.47 |

| Li | BCC,FCC,HCP | 0.5 | 2494 | 0.0482/0.126 | 0.312/0.332 | 22.9/14.0 | 24.8/26.4 |

| Al | FCC | 2-3.5 | 4000 | 0.359/0.534 | 0.473/1.24 | 54.1/56.3 | 58.6/55.8 |

| MgAlCu | Alloy | 3 | 2530 | 0.165/0.227 | 0.218/0.233 | 44.1/37.6 | 45.2/42.6 |

| H2O | Liquid | 0.5 | 4000 | 0.297/0.584 | 0.545/1.00 | 60.8/20.6 | 68.1/87.6 |

| S | S8 | 3-5 | 7000 | 0.210/0.401 | 0.628/0.628 | 51.5/45.9 | 60.4/58.5 |

| CuO | FCC | 3 | 1000 | 2.203/2.47 | 2.04/3.78 | 424/414 | 442/438 |

| Cu+C | SA | 3-3.2 | 2000 | 0.458/1.27 | 1.07/2.52 | 176/143 | 190/195 |

Method

In this section, we introduce RLEKF and its splitting strategy (Fig. 1) and then compare RLEKF with GEKF.

The Workflow of Training with RLEKF. The overview of DP NN with RLEKF is shown in Fig. 1, and the corresponding training procedures are detailed in Alg. 1. Specifically, Alg. 1 and Fig. 1.b show the training of RLEKF for both energy (Alg. 1, line 2 & 3 ) and force (Alg. 1, line 4 & 5 ). The forward prediction(shown in Alg. 1 and Fig. 1.c), backward propagation (shown in Alg. 2 and Fig. 1.c), and updating of weights based on Kalman filtering with forgetting factor are shown in Alg. 2 (line 8 to line 14 and Fig. 1.e). Alg. 1 shows the iterative update of with (also shown in Fig. 1.b). After the initialization of , , and , the energy process starts and tries to fit the predicted energy to the energy label of a sample, and then the force process updates weights under the supervision of the samples’ force label. In the force process for each image, we randomly choose atoms and concatenate their network-predicted force vectors as an effective predicted force , which is then used to update weights. This process (i.e. line 4 & 5 of Alg. 1) is repeated times in a loop. Here, we recommend a relatively universal setting . When these two kinds of alternative weights update in the direction of minimizing the trace of for this sample, the same process for the next one repeats until time step . The algorithm RLEKF in line 3 & 5 of Alg. 1 unfolds below.

RLEKF. Alg. 2 shows the layerwise weights-updating strategy of RLEKF, which is an optimized version of EKF in training the DP model. From line 1 to line 7, we transform any vector into a number, which enjoys two advantages. The first is it averages all the updating information in and transforms a vector into a number which avoids an impregnable problem, solving inversion of overwhelmingly many big matrices introduced later. The second is once Alg. 2 invoked we only need to calculate the gradient for one time. More specifically, from line 1 to line 7, we just want to adjust the gradient of with respect to weights in the direction of ”decreasing the difference between and ”. In order to keep strictly symmetric if is, the definition of Kalman gain in Alg. 2 is a little different from traditional Kalman filtering. In addition, is usually a matrix, but in line 8 it degenerates into a number, which heavily reduces the computational complexity, where is the number of blocks in and take as a suitable hyperparameter. RLEKF independently updates weights and of different layers by KF theory and its splitting strategy introduced in the next subsection. Finally, all updated parameters are collected for the prediction of the next turn (Fig. 1.e, Alg. 2 from line 8 to line 16, where is forgetting rate, a hyperparameter describing the varying rate of and split is a function for obtaining the -th part after splitting).

The Splitting Strategy of RLEKF. According to the splitting strategy of RLEKF, GEKF can be approximated by RLEKF with a reduction in weights error covariance matrix considering the efficiency and stability of a large-scale application. Unlike GEKF, the weights error covariance matrix of whichever time step, up to a scalar factor, is a block diagonal matrix, whose shape is , where and are the number of weights of the th block and that of the whole NN respectively. This means some weights error covariances are forced into and thus is no longer the real weights error covariance matrix at most an approximation, but fortunately there are indeed such good enough approximations that they carry most of the ”big values” and information in these diagonal blocks, on which concentrates. As shown in Fig. 2, GEKF concerns correlations between all the parameters. However, it is computationally expensive when adapted to large NNs. As a seemingly good choice, SEKF is a load balanced approximation version of GEKF, but the heavy correlations between parameters in a layer are ignored inappropriately. Overcoming the drawback of SEKF, LEKF can fully consider the internal correlation between parameters in the same layer, whereas unbearable computation still confronts us if the layer contains tens of thousands of parameters and decoupling every small neighboring layer deviates from the load balance idea. Here, we induce two heuristic principles for choosing a suitable strategy:

-

1.

Put weights with high correlation (in the same layer) in the same block if possible.

-

2.

The block must not be too large since that burdens computers with much computation (splitting the layers if the number of parameters is larger than the threshold ) or too small since that wastes computational power and loses massive information (gathering the near neighboring small layers). The threshold aims at splitting the matrix as evenly as possible for load balance and linear computational complexity.

Therefore, following these principles, we induce the splitting strategy of RLEKF (Fig. 1.d) and reorganize the weights in different layers, named parameter parts, into several more appropriate layers. Trainable parameters could be decomposed into a series of the parts of size . Starting from the first part of size , we execute the commands below repeatedly until :

-

1.

For a given part with weights, we split them into layers of size and a new part of size .

-

2.

Then consider subsequent parts with weights, gather them together into a layer of size and then set , if and or .

Empirically, Fig. 2 validates the efficiency of RLEKF, comparably or even more accurate than GEKF.

Theoretical Analysis

In this section, we prove the convergence of weights updating and the stability of the training process (avoid gradient exploding). For simplicity, we analyze GEFK case which has the same asymptotic feature as RLEKF (Witkoskie and Doren 2005).

Theorem 1

In EKF problem (5), setting , assuming components of are independent and subject to identical distribution with mean 0 and variance , we have

with the probability arbitrarily close to 1 when , which means the convergence of weights updating and thus the algorithm avoidance of gradient exploding.

The following is a brief proof of Theorem 1. Based on basic KF theory, we obtain

and

by using Woodbury matrix identity, where , weights error covariance matrix . In the following experiments, we assume components of are independent and subject to identical distribution with mean 0 and variance . So, the covariance matrix of is and . Hence

Using -Pochhammer symbol, we find

exists. Therefore, of order . According to the law of large numbers, we get

i.e.

Hence,

if is large enough. Further, is bounded with a probability

arbitrarily close to 1, where Markov’s inequality is used, if initialization is close enough to some local minimum of the landscape for Taylor approximation (1). Therefore,

with the probability arbitrarily close to 1.

Experiments and Results

Bulk systems are challenging AIMD tasks due to their extensiveness (periodic boundary condition) and complexity (many different phases and atomic components). Our experiment is conducted on several representative problematic bulk systems. They are simple metal (Cu, Ag, Mg, Al, Li), alloy (MgAlCu), nonmetal (S, C), semiconductor(Si), simple compound (H2O), electrolyte (NaCl), and some challenging systems (CuO, CuC). The effectiveness of RLEKF is shown by a comparison with the SOTA benchmark Adam in terms of the RMSEs of predicted total energy of the whole system , and forces , where correspond to different directions of Cartesian coordinate system and is the index of atom (Tab. 1).

Experiment Setting. Set , , , consistent with DeePMD-kit (Wang and Weinan 2018). The network configuration is [1, 25, 25, 25] (embedding net), [400, 50, 50, 50,1] (fitting net).

Data Description. We choose 13 unbiased and representative datasets of the aforementioned systems with certain specific structures (second column of Tab. 1). For each dataset, snapshots are yielded based on solving ab initio molecular trajectories via PWmat (Jia et al. 2013). During this process, to enlarge the sampling span of configuration space, we fast generate a long sequence of the snapshot by small time step (the third column of Tab. 1) and choose one for every fixed number in the temperature 300K.

Main Results. Both RLEKF and Adam yield good results except for CuO and CuC systems (Tab. 1). From an accuracy perspective, RLEKF reaches a higher energy accuracy in 12 cases except for Si, little less accurate than Adam. As for force, RLEKF is more accurate than Adam in 7 cases while the reminder achieves a very comparable precision. Furthermore, Fig. 3 shows how far the fitting results of RLEKF deviate from those of DFT (ground truth). From a speed perspective, generally, RLEKF converges to a reasonable RMSE much faster than Adam in most of the 13 cases in terms of energy (Fig. 4). We take bulk copper as an example to demonstrate how to understand information in Fig. 4. The lower Energy and Force RMSE between Adam and RLEKF is 0.327 meV and 44.4 meV/. Therefore, the 1.2 energy baseline is 0.3271.2=0.392 meV. It is unreachable for Adam, which is denoted as 1000 in (Fig. 5). The 1.2 force baseline is 44.41.2=52.6meV/. Adam spends 35 epochs to reach the baseline while RLEKF’s only costs 3 epochs (Fig. 5). Then, the force speed ratio is 35/3=11.67 according to epoch. For each sample, Adam updates weights for 1 time and RLEKF updates for 7 times (1 time in the energy process and 6 times in the force process). The iteration speed ratio is 35/(37)=1.66. On tesla V100, the time cost of each epoch is 60s (Adam) and 587s (RLEKF). Thus, the wall-clock time speed ratio is 3560/(3587)=1.19.

Robustness Analysis: Distribution of Hyperparameter of Weights Initialization. RLEKF is very stable as an optimizer, which can keep NNs from gradients exploding and consequently endow them with very loose initialization constraints almost up to none as shown in Fig. 6.

Computational Complexity. RLEKF also benefits from computing through reducing the computation compared to GEKF. There are 3 computational intensive parts in Alg. 2, calculating (line 8), (line 10), and (line 11). Due to the even splitting strategy, the order of float operation for each block is , and the number of the block is of order . Hence the total computational complexity of RLEKF is of order .

Conclusions

We proposed an optimizer RLEKF on DP NN and tested RLEKF on several typical bulk systems (simple metals, insulators, and semiconductors) of diverse structure (FCC, BCC, HCP). RLEKF defeated SOTA benchmark Adam by 11-1 (7-6) in precision and 12-1 (7-6) in wall-clock time speed in terms of energy (force). Besides, the convergence of weights updating is proved theoretically and RLEKF presents robustness on weights initialization. To sum up, RLEKF is an accurate, efficient, and stable optimizer, which paves another path to training general large NNs.

Acknowledgments

This work is supported by the following funding: National Key Research and Development Program of China (2021YFB0300600), National Science Foundation of China (T2125013, 62032023, 61972377), CAS Project for Young Scientists in Basic Research (YSBR-005) and Network Information Project of Chinese Academy of Sciences (CASWX2021SF-0103), the Key Research Program of the Chinese Academy of Sciences grant No. ZDBS-SSW-WHC002, Soft Science Research Project of Guangdong Province (No. 2017B030301013), and Huawei Technologies Co., Ltd.. We thank Dr. Haibo Li for helpful discussions.

References

- Bartók et al. (2010) Bartók, A. P.; Payne, M. C.; Kondor, R.; and Csányi, G. 2010. Gaussian approximation potentials: The accuracy of quantum mechanics, without the electrons. Physical review letters, 104(13): 136403.

- Batzner et al. (2022) Batzner, S.; Musaelian, A.; Sun, L.; Geiger, M.; Mailoa, J. P.; Kornbluth, M.; Molinari, N.; Smidt, T. E.; and Kozinsky, B. 2022. E(3)-equivariant graph neural networks for data-efficient and accurate interatomic potentials. Nature Communications, 13(1): 2453.

- Behler (2011) Behler, J. 2011. Atom-centered symmetry functions for constructing high-dimensional neural network potentials. The Journal of Chemical Physics, 134(7): 074106.

- Behler (2014) Behler, J. 2014. Representing potential energy surfaces by high-dimensional neural network potentials. Journal of Physics: Condensed Matter, 26(18): 183001.

- Behler (2017) Behler, J. 2017. First principles neural network potentials for reactive simulations of large molecular and condensed systems. Angewandte Chemie International Edition, 56(42): 12828–12840.

- Behler and Parrinello (2007) Behler, J.; and Parrinello, M. 2007. Generalized Neural-Network Representation of High-Dimensional Potential-Energy Surfaces. Phys. Rev. Lett., 98: 146401.

- Chui, Chen et al. (2017) Chui, C. K.; Chen, G.; et al. 2017. Kalman filtering. Springer.

- Desai, Reeve, and Belak (2022) Desai, S.; Reeve, S. T.; and Belak, J. F. 2022. Implementing a neural network interatomic model with performance portability for emerging exascale architectures. Computer Physics Communications, 270: 108156.

- Drautz (2019) Drautz, R. 2019. Atomic cluster expansion for accurate and transferable interatomic potentials. Phys. Rev. B, 99: 014104.

- Ercolessi and Adams (1992) Ercolessi, F.; and Adams, J. B. 1992. Interatomic potentials from first-principles calculations. MRS Online Proceedings Library (OPL), 291.

- Ercolessi and Adams (1994) Ercolessi, F.; and Adams, J. B. 1994. Interatomic potentials from first-principles calculations: the force-matching method. EPL (Europhysics Letters), 26(8): 583.

- Gasteiger et al. (2020) Gasteiger, J.; Giri, S.; Margraf, J. T.; and Günnemann, S. 2020. Fast and Uncertainty-Aware Directional Message Passing for Non-Equilibrium Molecules. In Machine Learning for Molecules Workshop, NeurIPS.

- Gasteiger, Groß, and Günnemann (2020) Gasteiger, J.; Groß, J.; and Günnemann, S. 2020. Directional Message Passing for Molecular Graphs. In International Conference on Learning Representations (ICLR).

- Guo et al. (2022) Guo, Z.; Lu, D.; Yan, Y.; Hu, S.; Liu, R.; Tan, G.; Sun, N.; Jiang, W.; Liu, L.; Chen, Y.; Zhang, L.; Chen, M.; Wang, H.; and Jia, W. 2022. Extending the Limit of Molecular Dynamics with Ab Initio Accuracy to 10 Billion Atoms. In Proceedings of the 27th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP ’22, 205–218. New York, NY, USA: Association for Computing Machinery. ISBN 9781450392044.

- Haykin and Haykin (2001) Haykin, S. S.; and Haykin, S. S. 2001. Kalman filtering and neural networks, volume 284. Wiley Online Library.

- Heimes (1998) Heimes, F. 1998. Extended Kalman filter neural network training: experimental results and algorithm improvements. In SMC’98 Conference Proceedings. 1998 IEEE International Conference on Systems, Man, and Cybernetics (Cat. No.98CH36218), volume 2, 1639–1644 vol.2.

- Huang et al. (2022) Huang, Y.-P.; Xia, Y.; Yang, L.; Wei, J.; Yang, Y. I.; and Gao, Y. Q. 2022. SPONGE: A GPU-Accelerated Molecular Dynamics Package with Enhanced Sampling and AI-Driven Algorithms. Chinese Journal of Chemistry, 40(1): 160–168.

- Jia et al. (2013) Jia, W.; Fu, J.; Cao, Z.; Wang, L.; Chi, X.; Gao, W.; and Wang, L.-W. 2013. Fast plane wave density functional theory molecular dynamics calculations on multi-GPU machines. Journal of Computational Physics, 251: 102–115.

- Jia et al. (2020) Jia, W.; Wang, H.; Chen, M.; Lu, D.; Lin, L.; Car, R.; Weinan, E.; and Zhang, L. 2020. Pushing the Limit of Molecular Dynamics with Ab Initio Accuracy to 100 Million Atoms with Machine Learning. In SC20: International Conference for High Performance Computing, Networking, Storage and Analysis, 1–14.

- Kalman et al. (1960) Kalman, R. E.; et al. 1960. Contributions to the theory of optimal control. Bol. soc. mat. mexicana, 5(2): 102–119.

- Karplus and Kuriyan (2005) Karplus, M.; and Kuriyan, J. 2005. Molecular dynamics and protein function. Proceedings of the National Academy of Sciences, 102(19): 6679–6685.

- Kingma and Ba (2014) Kingma, D. P.; and Ba, J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

- Lee et al. (2019) Lee, K.; Yoo, D.; Jeong, W.; and Han, S. 2019. SIMPLE-NN: An efficient package for training and executing neural-network interatomic potentials. Computer Physics Communications, 242: 95–103.

- Lysogorskiy et al. (2021) Lysogorskiy, Y.; Oord, C. v. d.; Bochkarev, A.; Menon, S.; Rinaldi, M.; Hammerschmidt, T.; Mrovec, M.; Thompson, A.; Csányi, G.; Ortner, C.; and Drautz, R. 2021. Performant implementation of the atomic cluster expansion (PACE) and application to copper and silicon. npj Computational Materials, 7(1): 97.

- Meier, Laino, and Curioni (2014) Meier, K.; Laino, T.; and Curioni, A. 2014. Solid-state electrolytes: revealing the mechanisms of Li-ion conduction in tetragonal and cubic LLZO by first-principles calculations. The Journal of Physical Chemistry C, 118(13): 6668–6679.

- Murtuza and Chorian (1994) Murtuza, S.; and Chorian, S. 1994. Node decoupled extended Kalman filter based learning algorithm for neural networks. In Proceedings of 1994 9th IEEE International Symposium on Intelligent Control, 364–369.

- Rahman and Stillinger (1971) Rahman, A.; and Stillinger, F. H. 1971. Molecular dynamics study of liquid water. The Journal of Chemical Physics, 55(7): 3336–3359.

- Raty, Gygi, and Galli (2005) Raty, J.-Y.; Gygi, F.; and Galli, G. 2005. Growth of carbon nanotubes on metal nanoparticles: a microscopic mechanism from ab initio molecular dynamics simulations. Physical review letters, 95(9): 096103.

- Saad (1998) Saad, D. 1998. Online algorithms and stochastic approximations. Online Learning, 5(3): 6.

- Singraber et al. (2019) Singraber, A.; Morawietz, T.; Behler, J.; and Dellago, C. 2019. Parallel multistream training of high-dimensional neural network potentials. Journal of chemical theory and computation, 15(5): 3075–3092.

- Smith, Schmidt, and McGee (1962) Smith, G. L.; Schmidt, S. F.; and McGee, L. A. 1962. Application of statistical filter theory to the optimal estimation of position and velocity on board a circumlunar vehicle. National Aeronautics and Space Administration.

- Stillinger and Rahman (1974) Stillinger, F. H.; and Rahman, A. 1974. Improved simulation of liquid water by molecular dynamics. The Journal of Chemical Physics, 60(4): 1545–1557.

- Thompson et al. (2015) Thompson, A.; Swiler, L.; Trott, C.; Foiles, S.; and Tucker, G. 2015. Spectral neighbor analysis method for automated generation of quantum-accurate interatomic potentials. Journal of Computational Physics, 285: 316–330.

- Wang and Weinan (2018) Wang, L. H. J., H.; Zhang; and Weinan, E. . 2018. DeePMD-kit: A deep learning package for many-body potential energy representation and molecular dynamics. Computer Physics Communications, 228: 178–184.

- Wang et al. (2009) Wang, S.; Kramer, M.; Xu, M.; Wu, S.; Hao, S.; Sordelet, D.; Ho, K.; and Wang, C. 2009. Experimental and ab initio molecular dynamics simulation studies of liquid Al 60 Cu 40 alloy. Physical Review B, 79(14): 144205.

- Welch, Bishop et al. (1995) Welch, G.; Bishop, G.; et al. 1995. An introduction to the Kalman filter.

- Witkoskie and Doren (2005) Witkoskie, J. B.; and Doren, D. J. 2005. Neural Network Models of Potential Energy Surfaces: Prototypical Examples. Journal of Chemical Theory and Computation, 1(1): 14–23. PMID: 26641111.

- Yao et al. (2017) Yao, K.; Herr, J. E.; Brown, S. N.; and Parkhill, J. 2017. Intrinsic Bond Energies from a Bonds-in-Molecules Neural Network. The Journal of Physical Chemistry Letters, 8(12): 2689–2694. PMID: 28573865.