Revisiting Pretraining for Semi-Supervised Learning in the Low-Label Regime

Abstract

Semi-supervised learning (SSL) addresses the lack of labeled data by exploiting large unlabeled data through pseudolabeling. However, in the extremely low-label regime, pseudo labels could be incorrect, a.k.a. the confirmation bias, and the pseudo labels will in turn harm the network training. Recent studies combined finetuning (FT) from pretrained weights with SSL to mitigate the challenges and claimed superior results in the low-label regime. In this work, we first show that the better pretrained weights brought in by FT account for the state-of-the-art performance, and importantly that they are universally helpful to off-the-shelf semi-supervised learners. We further argue that direct finetuning from pretrained weights is suboptimal due to covariate shift and propose a contrastive target pretraining step to adapt model weights towards target dataset. We carried out extensive experiments on both classification and segmentation tasks by doing target pretraining then followed by semi-supervised finetuning. The promising results validate the efficacy of target pretraining for SSL, in particular in the low-label regime.

Index Terms:

Semi-Supervised Learning, Transfer Learning, Self-Supervised Learning, Contrastive LearningI Introduction

Deep neural networks trained on large collections of labeled data have achieved unprecedented performance on many computer vision tasks. Unfortunately, acquiring these large amounts of labeled data is expensive and laborious. One effective strategy to mitigate this dependence on labels is exploiting unlabeled data for training, as done in semi-supervised learning (SSL).

Semi-supervised learning tackles the challenge of limited labeled data by exploiting a large amount of unlabeled data. Recent consistency-based SSL methods obtain pseudo-labels for unlabeled data through ensembles of predictions [1], network parameters [2] or weaker augmentations [3]. A student network is further trained on both labeled data and unlabeled data with pseudo-labels. Since these methods are directly trained on a specific task (dataset), we refer to this overall approach as task-specific SSL [4]. Task-specific SSL has demonstrated very competitive performance on simpler datasets like CIFAR-10 [5]. However, the performance of task-specific SSL in more challenging settings, for instance, fine-grained classification [6], the extremely low-label regime [6] and segmentation [7], is still far from satisfactory. This is partly because when few labels are available, there is simply not enough supervision to generate accurate pseudo-labels, thus misleading the training of student network [8].

In contrast to task-specific SSL, the finetuning (FT) approach involves pretraining a backbone network on a separate, large dataset in either supervised [9] or unsupervised [4, 10, 11, 12] manner, and then finetuning the network on the potentially smaller target dataset. For example, it is common practice in computer vision to start with a model pretrained on ImageNet [13], and then finetune it on the downstream task of interest. The FT paradigm has been successfully used across wide range of computer vision tasks, including classification [14], segmentation [15], detection [16] etc.

The advantages of SSL and FT are often orthogonal as the former exploits unlabeled data to generate pseudo labeled data while the latter provides better initial model parameters. Therefore, synergistically combining FT with SSL, termed as finetuning + SSL, to enjoy the benefits of both strategies, has been explored by recent works. Among these attempts, SimCLR v2 [17] starts with ImageNet pretrained weights and then employs a supervised loss to finetune on a small labeled target dataset followed by distillation on unlabeled data. This initial attempt of finetuning + SSL in a non-trivial way demonstrated superior performance, compared with task-specific SSL methods, mainly in the low-label regime with very large network capacity. Along this direction, Self-Tuning [6] further proposed to combine cross-entropy loss with a multi-positive pair contrastive loss during finetuning. It was claimed that by incorporating this additional contrastive loss with pseudo-labels, confirmation bias [8] and model shift [18] issues can be mitigated, and Self-Tuning is reported to outperform state-of-the-art semi-supervised learners.

The reported large performance improvement of finetuning + SSL [17, 6] over task-specific SSL [2, 19, 20, 3], inspired us to explore the following questions: 1) What is the key ingredient that enables the success of finetuning + SSL? 2) Does it generalize to task-specific SSL? 3) Is task-specific SSL still the most effective SSL approach? To answer these questions, we first make a critical observation that one major issue with the comparison of finetuning + SSL and task-specific SSL is the initialization of model weights. In particular, SimCLRv2 [17] and Self-Tuning [6] employed model weights pretrained on ImageNet while task-specific SSL methods are often trained with randomly initialized weights. Therefore, we hypothesize that the better initial weights provided by pretraining on the separate large dataset is largely responsible for the superior performance reported by finetuning + SSL. It is thus reasonable to believe, if the same initial weights are available to task-specific SSL methods, a similar performance boost could be expected. To test this hypothesis, we carry out empirical studies by comparing state-of-the-art task-specific SSL methods both with random initial weights and pretrained weights. As shown in Fig. 1, with FixMatch we observe a clear performance gap between random initialized weights “CIFAR-10/CIFAR-100—Rand” and ImageNet pretrained weights “CIFAR-10/CIFAR-100—ImgNet”. These observations suggest that the benefits of good initial weights also apply to task-specific SSL methods. With further empirical results in Sect. IV-C, we reveal that under fair comparison, task-specific SSL finetuning still outperforms state-of-the-art finetuning based methods by a large margin.

Based on the insights above, we further notice that pretraining is often carried out on a large pretrain dataset, e.g. ImageNet, while SSL finetuning is often on a smaller target dataset, e.g. CIFAR-10, CIFAR-100, PascalVOC. There is an inevitable distribution mismatch between pretrain and target dataset, commonly referred to as covariate shift [21]. The backbone network optimized for pretrain data distribution is subject to covariate shift and therefore will not be optimal for direct reuse on finetuning tasks. To bridge the data distribution gap, we introduce an additional target pretraining step before semi-supervised finetuning. Target pretraining is realized by doing contrastive training on both labeled and unlabeled data in the target dataset. With this additional step, model parameters will adapt to target data distribution and eventually improve finetuning performance. In particular, we find this target pretraining step is most effective when labeled data is sparse, reflecting that confirmation bias can be mitigated by a better initial weights.

We validate the effectiveness of target pretraining on both semi-supervised image classification and segmentation tasks. As augmentation is particularly important for segmentation tasks, we further introduce a differentiable geometric augmentation module to enhance the representation learning ability for segmentation tasks. We summarize the contributions of this work as follows:

-

•

We reveal that good initial weights through pretraining on large dataset is universally helpful to both finetuning based and task-specific semi-supervised learning approaches.

-

•

Realizing the data distribution shift between pretrain and target datasets, we propose a target pretraining step to adapt pretrained weights to target dataset before semi-supervised finetuning.

-

•

We demonstrate on both classification and semantic segmentation tasls that the proposed target pretraining strategy can substantially improve finetuning performance at low-label regime.

II Related Work

II-A Semi-Supervised Learning

The success of many computer vision tasks depends on training deep neural networks with large amount of labeled data which is expensive to acquire. Semi-supervised learning (SSL) aims to address learning with small labeled data and large unlabeled data [1, 2, 22, 20, 23, 3].

Task-Specific SSL is a major SSL paradigm which mainly produces pseudo-labels for unlabeled data to utilize them in training. In [1] pseudo-labels are produced as moving average of network predictions. To further exploit the ensemble of networks for stable and efficient pseudo-label prediction, Mean Teacher [2] proposed to utilize moving average of network parameters as teacher network which supervises a student network. More recently, data augmentation has been increasingly investigated under SSL. MixMatch [22] mixes up multiple images and their labels for stronger regularization for SSL. UDA [20] argues that advanced data augmentation methods play a crucial role in semi-supervised learning. More recently, FixMatch [3] achieved significantly higher performance by simultaneously exploiting strong and weak augmentations for consistency matching. Other than classification task, SSL has been adapted to semantic segmentation tasks [7, 24, 25] mainly adopting a Mean Teacher like architecture. Specialised data augmentation strategy is mainly accountable for the improvement in segmentation tasks.

Finetuning + SSL is an emerging approach towards SSL [17, 6, 26] by combining transfer learning with semi-supervised finetuning. An initial attempt [17] in this direction pretrains the backbone network on ImageNet [5] via contrastive learning [4]. The network is further finetuned on limited labeled data, and then distilled on unlabeled data. Empirical study suggests the superiority of pretraining plus finetuning over task-specific SSL with small labeled data and large network capacity. Self-Tuning [6] further extended the framework by using ImageNet supervisely pretrained weights and finetune on target datasets by combining cross-entropy loss with contrastive loss. A significant improvement over task-specific SSL is observed on fine-grained classification tasks. In this work, observing the performance gap between finetuning + SSL and task-specific SSL, we suspect the initial weights are accountable and further reveal that improving initial weights by target pretraining are universally helpful to both paradigms.

II-B Domain Adaptation

Transfer learning aims to reuse the data or model from source task/domain to target task/domain [27]. One of the key challenges in transfer learning is the domain shift between source and target datasets and domain adaptation aims to tackle this issue. When both source and target data are available during model training, UDA [28] introduced a discriminator to align the distribution between source and target domains. Nevertheless, the assumption of access to target domain data during model training restricts the application and recently source-free domain adaptation, a.k.a. test time training, emerges as a solution which no longer requires the access to labeled data during adaptation [29, 30, 31] at the cost of modifying the learning objective during training. To avoid altering training objective, which could be very expensive for large-scale pretraining tasks, Tent [32] adopted a self-training like approach to adapt only the affine transformation parameters for batchnorm layers by minimizing an entropy loss. Despite requiring no changes to training objective, Tent requires the prediction task to be consistent between source and target domains. In contrast to source-free domain adaptation and test time training, our target pretrain does not modify training objective in the source domain nor assume the prediction task to be identical between the source and target domains. A recent related work addressed finetuning on target domain in an unsupervised manner [33]. To avoid large deviations from pretrained model parameters they simultaneously finetune on both target and source domain data. Therefore, the adaptation still requires access to source domain data.

II-C Contrastive Learning

Constrative learning [4, 10, 11, 12] is particularly effective in pretraining backbone network on large unlabeled dataset by enforcing the image-wise features subject to two augmentation to be consistent. As such the network is able to attend to the discriminative areas for feature extraction. To focus on dense prediction downstream tasks, e.g. segmentation, detection, etc., contrasting pixel-wise features was proposed [34, 35] to train the backbone network to generate local features invariant to augmentations. Contrastive learning has been demonstrated to be friendly to data-efficient learning [12] as the feature representation becomes more amenable for training classifiers after pretraining. However, using contrastive learning to adapt pretrained weights to heterogeneous target dataset is yet explored and in this work we employ contrastive learning as a target pretraining approach and demonstrate effectiveness when label-data on target dataset is sparse.

III Methodology

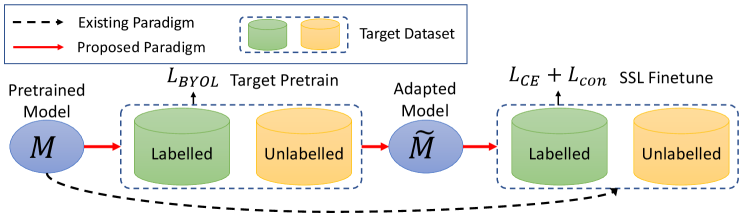

We first provide a overview of the proposed target pretraining strategy in Fig. 2. An initial model weights pretrained on large dataset, e.g. ImageNet, is assumed to be available. In addition, we have a target dataset consisting of labeled training samples and unlabeled samples . A key step is to adapt pretrained model weights by doing target pretraining on the target dataset. In the rest of this section, we first briefly review the contrastive learning approach. To further improve contrastive target pretraining for dense prediction tasks, we include a differentiable affine transformation [36] to improve the contrastive learning efficacy.

III-A Preliminary on Contrastive Learning

We use to denote a backbone network, parameterized by , which takes input image and produces a feature embedding . We further denote a projection head, parameterized by , as which outputs a lower dimension embedding. Contrastive learning is formulated as minimizing a contrastive loss defined over two augmentations of the same input image. Formally, the InfoNCE loss [37] is adopted as contrastive loss as in Eq. (1).

| (1) |

In this formulation, the positive pair is two different augmentations of the same input image, and negative pairs are constructed as different images and defines a cosine similarity between feature encodings of pair . It is identified in [4] that a large batchsize is essential to contrastive learning performance which is computationally expensive. Instead of maintaining both positive and negative pairs, BYOL [10] relies on similarity of features between a target network and an online network with target network being a parameter moving average of online network; the output of target network is used as a target for the online network to match against. Because of the slowly updating target network, trivial solution is avoided. BYOL optimizes the cosine distance, as in Eq. 2, between the representation subject to two augmentations as below, where and are respectively the features after projection head with two augmentations and and is a predictor.

| (2) |

Dense Contrastive Pretraining: BYOL is carried out at instance-level thus a global average pooling layer is appended at the end of backbone network to obtain a single feature vector for each image. For dense prediction tasks, e.g. segmentation, contrastive pretraining is carried out with spatial awareness. PixPro [34] proposed a dense contrative learning approach where global average pooling layer is removed and pixel-wise features are used for contrasting instead of image-wise features adopted in BYOL.

III-B Target Pretraining for SSL

Contrastive Target Pretraining: In a regular transfer learning setting, we first assume a backbone network, parameterized by , is pretrained on a large dataset. After pretraining, the model comes with parameter weights . Then, is used to initialize the backbone network for the downstream/target task, e.g. finetuning on a smaller dataset or for semantic segmentation. As discussed, we believe directly re-using as initial weights for finetuning is sub-optimal. Instead, we propose to apply constrative target pretraining on the target dataset. Specifically, we adopt BYOL contrastive loss with a regularization as below,

|

|

(3) |

We add a L2 weight regularization to avoid target pretrained weights to deviate too much from the initial weights. For a gradient-based optimization, the initial value for is . We find that by simply training enough iterations on target dataset, better weights can be obtained for semi-supervised finetuning. We denote the weights after -epoch target pretraining as .

Semi-Supervised Finetuning After target pretraining, the backbone weights are now better for finetuning on target dataset. For simplicity, we denote the classifier as . Semi-supervised finetuning is then defined as optimizing the semi-supervised loss as,

| (4) |

The consistency loss can be instantiated as off-the-shelf semi-supervised learners, e.g. FixMatch [3].

III-C Affine Augmentation for Segmentation

One of the most critical ingredients in contrastive training is data augmentation. An investigation into effective data augmentation was carried out in [4] and many contrastive approaches follow the augmentation policies [10, 34]. For a dense prediction task, [34] developed a contrastive learning paradigm by comparing pixel-wise features and it turns out to be effective for detection and segmentation tasks. Therefore, we follow the dense contrastive learning strategy when target pretraining is applied to segmentation tasks. However, we believe the data augmentation strategies that work well for classification task may not be the best for segmentation.

CNNs are known to translation equivariant, therefore cropping and weak resizing as augmentation is not stronger enough to force the network learn useful information on unlabeled data. Under such weak geometric transformations, the network will simply output feature maps subject to the same transformation. Therefore, we propose to include a differentiable affine transformation [36] to achieve more diverse geometric poses in augmented data.

An affine transformation involves a combination of scaling, rotation, shearing and translation. Each time one affine transformation is constructed by randomly chosing each component with chance and drawing parameters from a predefined distribution. For input image, we apply the affine transformation after all non-differentiable data augmentation operations. As with STN [36], we use bilinear interpolation to estimate the pixel intensity after transformation. This transformation is differentiable w.r.t. input image and so does its inversed one.

We denote the transformation applied to image as . To enable computing pixel-wise contrastive loss, we further apply the inverse transformation to the output of both online and target networks as . With the inverse transformation, predictions on two arbitrarily augmented images are directly comparable at pixel-level.

IV Experiments

In this section, we introduce the datasets, experiment settings and then provide benchmarking on semi-supervised finetuning with different initial weights. Finally, we provide hypothesis on the effectiveness of target pretraining from an adaptation perspective with empirical evidence.

IV-A Datasets

We demonstrate the effectiveness of proposed contrastive target pretraining strategy on four datasets. For classification tasks, we evaluate on CIFAR-10 [5], CIFAR-100 [5] and SVHN [38]. We further evaluate on additional semantic segmentation datasets, Pascal VOC 2012 [39], CityScapes [40] and ISIC2017 [41]. CIFAR-10 is a image classification datasets with 50,000 training images and 10,000 testing images evenly categorized into 10 classes. For semi-supervised learning setting, we evaluate on 40, 250 and 1000 labeled data. CIFAR-100 extended from CIFAR-10 with 10 times more categories and the same amount of training/testing samples. Therefore, the categories are more fine-grained than CIFAR-10. For semi-supervised learning setting, we evaluate on 250 and 1000 labeled data. SVHN is a street view house numbers recognition dataset with all numbers cropped and registered. There are 73257 digits for training, 26032 digits for testing respectively. For semi-supervised learning setting, we evaluate on 40 labeled data. For CIFAR-10, CIFAR-100 and SVHN, we follow the standard data splits for semi-supervised evaluation as in [3]. Pascal VOC 2012 has been widely adopted for semantic segmentation tasks. The dataset consists of 1464 training images and 1449 validation images. Following the practice in [7, 34] we augment the training set with additional training images, resulting in 10582 training images in total. For semi-supervised learning, we evaluate on , and of the labeled data. CityScapes was collected from cars diving in urban environment with aim to segment objects commonly seen on the street. It has 2975 training and 500 validation samples, with 19 semantic classes. We evaluate on 100 and 372 labeled data. ISIC2017 is a medical image segmentation dataset detecting skin lesions. It has 2000 training images and features a significant difference from the source ImageNet dataset. We evaluate SSL at 10, 20 and 50 labeled data.

IV-B Experiment Settings

Backbone Network: We adopt Resnet18 and Resnet50 [42] for classification and segmentation tasks respectively, as the weights pretrained on ImageNet is publicly available.

Data Augmentation: Since backbone network is pretrained on ImageNet with input size , for target pretrain and finetuning, we first apply random cropping and then resize cropped region to . We further apply the same augmentations in BYOL [10] for target pretraining on classification tasks and with additional affine transformation for segmentation tasks.

Contrastive Target Pretraining: We adopt BYOL [10] and PixPro [34] for contrastive target pretraining for classification and segmentation respectively. We reduce the learning rate 10 times from the default ImageNet experiments in respective methods and further pretrain 300 epochs. For BYOL, we use the following hyperparameters, batchsize , base learning rate , momentum and total epochs . For PixPro, we adopt the following hyperparameters, batchsize , base learning rate , momentum and total epochs . The LARS optimizer is adopted for both pretraining. Weight regularization strength is fixed as .

Semi-Supervised Finetuning: In classification task we use FixMatch [3] as the semi-supervised learner, we stick to the original hyperparameters for CIFAR-10, CIFAR-100 and SVHN reported in [3]. The following ones are adopted, the confidence threshold , the unlabeled loss weight , the unlabeled to labeled data ratio in a minibatch , the batchsize and the learning rate . SGD optimizer is adopted with momentum .

For segmentation tasks, we adopt CutMix [7] as the semi-supervised leaner. We adopt the following hyperparameters, the initial learning rate , batchsize / for PascalVOC and CityScapes respectively, iterations per epoch and the number of total training epochs . The Adam optimizer is adopted for both datasets. We randomly crop out a and region for training on PascalVOC and CityScapes respectively. For CityScapes, we downsample the image to to allow more efficient training.

IV-C Contrastive Target Pretraining

IV-C1 Classification Tasks.

We first evaluate fully supervised baseline, Mean Teacher (MT) [2] and virtual adversarial training (VAT) [19] as reference methods. We further evaluate two state-of-the-art SSL approaches, Self-Tuning [6] and FixMatch [3] with different initial weights. As shown in Tab. I, we use “Pretrain” to refer to weights pretrained on large pretraining dataset, “Trg. Pretr.” indicates contrastive target pretraining and “SSL” indicates the semi-supervised learner. We make the following observations from this table.

i) Target pretraining with BYOL is very effective regardless the initial weights being ImageNet supervised pretrained or from scratch. For example, if naive supervised finetuning is adopted, target pretraining improves and respectively from random initialized weights and ImageNet weights at CIFAR-10 with 40 labeled samples. The improvement is maintained with SelfTuning and FixMatch as semi-supervised learner on all datasets and labeling budgets.

ii) As expected, the gap diminishes when more labeled data becomes available and SOTA semi-supervised learner is adopted for finetuning. For example, on CIFAR-10 with 250 and 1000 labeled samples with FixMatch finetuing, the improvement is negligible. This is due to more labeled data can mitigate the confirmation bias during semi-supervised learning.

iii) Self-Tuning (ST) [6], proposed to combine cross-entropy loss with a contrastive loss during finetuning. ST was originally claimed to be superior to FixMatch at low-label regime [6]. However, through our extensive comparison, we conclude that the advantage of ST is mainly due to a better initial weights. For example, with the best initial weights (IN Sup+BYOL), ST is still far behind FixMatch on all datasets. This implies that developing more effective pseudo-labeling algorithm for SSL is still one of the most effective way to exploit unlabeled data. Nevertheless, a good initial weights are equally important, combining finetuning with semi-supervised learning should receive more attention for more data-efficient SSL.

| CIFAR-10 | CIFAR-100 | SVHN | ||||||

| Pretrain | Trg. Pre. | SSL | #40 | #250 | #1000 | #400 | #2500 | #40 |

| - | - | MT [2] | 27.34 | 47.29 | 65.48 | 10.36 | 37.49 | 27.73 |

| - | - | VAT [19] | 31.81 | 57.02 | 75.61 | 12.85 | 32.32 | 15.21 |

| - | - | - | 18.41 | 29.76 | 44.96 | 8.67 | 21.69 | 10.47 |

| - | BYOL | - | 49.44 (+31.03) | 66.33 (+36.57) | 75.15 (+30.19) | 15.18 (+6.51) | 35.20 (+13.51) | 18.25 (+7.78) |

| IN Sup | - | - | 49.69 | 77.38 | 85.56 | 30.85 | 57.36 | 16.92 |

| IN Sup | BYOL | - | 65.13 (+15.44) | 82.07 (+4.69) | 87.64 (+2.08) | 34.63 (+3.78) | 58.65 (1.29) | 35.82 (+18.90) |

| - | - | SelfTuning [6] | 27.89 | 45.84 | 61.06 | 9.17 | 23.72 | 13.49 |

| - | BYOL | SelfTuning | 49.76 (+21.87) | 73.70 (+27.86) | 82.11 (+21.05) | 19.05 (+9.88) | 41.40 (+17.68) | 22.23 (+8.74) |

| IN Sup | - | SelfTuning | 48.83 | 82.56 | 89.86 | 35.56 | 62.86 | 45.84 |

| IN Sup | BYOL | SelfTuning | 62.57 (+13.74) | 84.71 (+2.15) | 90.83 (+0.97) | 38.36 (+2.80) | 67.31 (4.45) | 46.95 (+1.11) |

| - | - | FixMatch [3] | 60.75 | 91.32 | 93.01 | 29.85 | 59.12 | 32.76 |

| - | BYOL | FixMatch | 75.51 (+14.76) | 91.55 (+0.23) | 94.19 (+1.18) | 40.14 (+10.29) | 63.34 (+4.22) | 36.94 (+4.18) |

| IN Sup | - | FixMatch | 75.28 | 94.22 | 95.82 | 44.34 | 71.81 | 79.89 |

| IN Sup | BYOL | FixMatch | 90.06 (+14.78) | 94.99 (+0.77) | 95.48 (-0.34) | 46.23 (+1.89) | 72.19 (+0.38) | 92.97 (+13.08) |

IV-C2 Segmentation Tasks.

We further present semi-supervised finetuning results on semantic segmentation task in Tab. II. We compare three state-of-the-art semi-supervised segmentation approaches, CutMix [7], ClassMix [24] and CLMB [25]. We make the following observations from the segmentation experiments.

i) Contrastive target pretraining demonstrates consistent effectiveness on semantic segmentation task. The improvement is particularly large for CutMix at low-label regime, e.g. Pascal VOC and CityScapes #100 labeled data. This again implies the bottleneck of from-scratch semi-supervised finetuning is prone to confirmation bias when labeled data is small. As a result, better initial weights is required

ii) It is also surprising to see with the target pretraining step, the final results of CutMix can sometimes match or slightly outperform its counterpart with ImageNet pretrained weights. It is worth noting that no labels are required for PixPro to pretrain ImageNet, thus a substantial amount of labeling efforts is saved under this setting.

| PascalVOC | CityScapes | ||||||

| Pretrain | Trg.Pre. | SSL | 1% | 2% | 5% | #100 | #372 |

| IN Sup | - | CutMix [7] | 53.79 | 64.81 | 66.48 | 57.89 | 65.64 |

| IN Sup | - | ClassMix [24] | 40.64 | 52.84 | 59.82 | - | - |

| IN Sup | - | CLMB [25] | - | 63.40 | 69.10 | 64.90 | 70.00 |

| IN PixPro | - | - | 38.20 | 49.95 | 60.35 | 52.99 | 63.05 |

| IN PixPro | PixPro | - | 41.35 | 52.97 | 63.11 | 53.26 | 63.26 |

| IN PixPro | - | CutMix | 49.58 | 61.63 | 67.61 | 58.30 | 68.21 |

| IN PixPro | PixPro | CutMix | 52.20 | 61.25 | 68.59 | 59.20 | 68.02 |

IV-C3 Transferring to Medical Image Segmentation

We further evaluate target pretraining on target datasets with a large domain gap by performing an experiment transferring a model pretrained on ImageNet to a skin lesion segmentation dataset, ISIC2017 [43]. We follow the settings proposed in CutMix and consider labeling budgets of 10, 20 and 50 labeled samples. We use 1000 epochs of target pretraining implemented on all 2000 training examples of ISIC2017. Results are shown in Tab. III – the consistent improvement in the low-label regime further validates the effectiveness of target pretraining.

| #Labeled | Pretrain | Trg. Pre. | mIoU |

|---|---|---|---|

| 10 | IN Sup | - | 73.80 |

| IN Sup | BYOL | 77.74 | |

| 20 | IN Sup | - | 82.93 |

| IN Sup | BYOL | 82.96 | |

| 50 | IN Sup | - | 85.99 (74.57) |

| IN Sup | BYOL | 86.02 |

IV-D Ablation Study

In this section, we carry out ablation study to verify the importance of weight regularization and affine transformation as augmentation. We carry out ablation on PascalVOC segmentation task. As shown in Tab. IV, we observe both weight regularization and affine transformation as augmentation improve the generalization of further pretrained model weights.

| PascalVOC | ||||

|---|---|---|---|---|

| Weight Reg. | Affine Aug | 1% | 2% | 5% |

| - | - | 38.20 | 49.95 | 60.35 |

| ✓ | - | 40.32 | 52.00 | 62.39 |

| ✓ | ✓ | 41.35 | 52.97 | 63.11 |

We perform additional evaluations on alternative contrastive learning and semi-supervised learning methods on CIFAR-10 with 40 labeled samples. We perform target pretraining with MoCo v2 [44] in addition to BYOL on CIFAR-10 with ImageNet pretrained weights and SSL finetuning with UDA [28] and MeanTeacher in addition to FixMatch. From Tab. V, we observe consistent improvements with alternative contrastive learning methods and we observe the advantage of target pretraining with additional SSL methods as well.

IV-E Further Insights into Target Pretraining

We discuss in this part the hypotheses of why this simple target pretraining is so effective. Some early works managed to explain deep neural network pretraining from providing a regularization effect on model parameters [45]. For example, pretraining will initialize the parameters in the basin of attraction of a good local optimum. Therefore, the following supervised finetuning will be easier to generalize. Another recent empirical study [9] argued that when there is enough labeled data, training from scratch is comparable to finetuning from pretrained weights. However, a breakdown point was observed in the low labelling regime, indicating good initial weights is necessary when labeled data is low.

In contrast to the previous attempts to explain, we would like to analyze from a covariate shift point of view. W.l.o.g, we describe a general supervised pretraining and finetuning example. The conclusion also applies to contrastive pretrain and semi-supervised finetuning. For simplicity, we denote an additional unlabelled pretraining dataset as . The objective of supervised pretraining can be seen as minimizing the following negative log likelihood, where we can decompose the conditional probability into the backbone and classifier .

|

|

(5) |

The objective of finetuning can be written as below.

| (6) |

The data distribution between pretraining and finetuning stages are often not identical thus subject to a covariate shift, i.e. . It is reasonable to believe the optimal backbone networks subject to pretraining and finetuning objectives respectively are not identical either. As a result, directly re-using for the supervised finetuning task would be sub-optimal. Further pretrain the backbone on the whole target dataset and the backbone parameters are initialized by the pretrained parameters, i.e. , as below.

| (7) |

Compared with direct finetuning from , target pretrained weights is first updated on the whole target dataset . With covariate shift being eliminated, will move towards the optimal weights for target task. Eventually, as initial weights for supervised finetuning can ease the difficulty in optimization for finetuning. To validate the above hypotheses we carry out the following empirical studies.

IV-E1 Target Pretraining as Adaptation

In this experiment, we validate the hypothesis of target pretraining as an adaptation of weights pretrained on generic dataset to specific target dataset. We keep all the target pretraining protocols the same with Sect. IV-C, an ImageNet pretrained weights are taken as initial weights, target pretraining is carried out on three classification datasets respectively. A cross-dataset finetuning experiment is carried out by transferring the target pretrained weights across CIFAR-10, CIFAR-100 and SVHN, with results presented in Tab. VI. We make the following observations. First, transferring weights target pretrained on CIFAR-10/100 to finetuning on SVHN yields moderate improvement from without target pretraining (), while they are still behind target pretrained on SVHN itself. Due to the large distribution gap between CIFAR-10/100 (natural object images) and SVHN (digit images), this result suggests target pretraining is essentially adapting weights to target data distribution. Moreover, transferring SVHN target pretrained weights to CIFAR10/100 produces much inferior results than even without target pretraining and transferring between CIFAR-10 and CIFAR-100 gives a relatively smaller drop from within dataset pretraining. Both of the above two observations indicate target pretraining must be carried out on the distribution of target dataset which again validates the adaptation hypothesis.

| Finetune | ||||

|---|---|---|---|---|

| Pretrain | Trg. Pre. | CIFAR-10 | CIFAR-100 | SVHN |

| IN Sup | - | 49.69 | 30.85 | 16.92 |

| IN Sup | CIFAR-10 | 65.13 | 29.51 | 19.71 |

| IN Sup | CIFAR-100 | 49.46 | 34.63 | 20.06 |

| IN Sup | SVHN | 24.91 | 12.12 | 35.82 |

IV-E2 Target Pretraining Improves Class Discovery

We further investigate how target pretraining helps adapt to better initial weights by evaluating unsupervised class discovery (clustering). As an alternative approach towards unsupervised feature learning, clustering has been employed to generate pseudo-labels for representation learning [46, 47]. Therefore, if better clustering results are identified it means the features are potentially more useful for finetuning. Specifically, we first denote the cluster indices as and the ground-truth classification label as , where is the number is samples and is the number of classes/clusters. For both and , there is only a single element with 1 at each row. Since clustering is invariant to the permutation of cluster index the assignment of cluster index to class label is unknown. To calculate clustering accuracy, we first find the following permutation of cluster indices,

| (8) |

The following problem is formulated to find the best permutation that maximizes the accuracy, where is an elementwise product.

| (9) |

The above problem can be solved by Hungarian algorithm where the cost matrix is . We eventually report the performance as the classification described above. With results presented in Tab. VII, we observe consistently better clustering results for target pretrained features suggesting the effectiveness of target pretraining as good initial weights for representation learning.

| Pretrain | Trg. Pretr. | Cifar-10 | Cifar-100 | SVHN |

|---|---|---|---|---|

| IN Sup | - | 46.31/45.66 | 6.40/6.74 | 12.96/12.64 |

| IN Sup | BYOL | 47.17/48.02 | 7.91/7.44 | 15.96/16.23 |

IV-E3 TSNE Visualization.

We further visualize feature representations after target pretraining on target dataset to draw qualitative insight into its effect. Specifically, we collect 500 randomly selected testing samples from CIFAR-10 with features as the output of last layer in backbone after global average pooling. We compared two set of features, the first is collected from ResNet18 with ImageNet pretrained weights and another is from ImageNet pretrained weights plus target pretraining. We concatenate both sets of features and project them into 2D via TSNE [48] for visualization. As shown in Fig. 5, we see a noticeable clustering structure after target pretraining on target data, demonstrating the effectiveness of target pretraining.

IV-E4 Impact of Target Pretraining Steps.

We study the impact of target pretraining w.r.t. the number of labeled data and steps of target pretraining. Specifically, we evaluate supervised finetuning performance on CIFAR-10 with 250, 1000, 4000 and all labeled data. The initial weights for finetuning is evaluated from 0 to 250 epochs. The results are illustrated in Fig. 3. First, target pretraining is effective after at least 200 epochs training, suggesting enough training iterations is required for a good adaptation to target dataset. Second, the improvement brought by target pretraining is consistently better at lower-labeling regime. This aligns with many previous investigations into the impact of pretraining [9].

IV-E5 Comparing Convergence

As discussed, having good initialization allows faster finetuning with better results after convergence. In this section, we compare the convergence between with and without contrastive target pretraining on CIFAR-10 #40 labeled data, CIFAR-100 #400 labeled data and SVHN #40 labeled data. Specifically, we evaluate both supervised finetuning and semi-supervised finetuning with different initial weights and visualize the results in Fig. 4. In Fig. 4 (left), we first show the test accuracy curves on three classification datasets over 200 training epochs. Initial weights are randomly initialized before target pretraining. For all three datasets, it is very clear that with target pretraining (dashed line), the converged accuracy is substantially higher. In Fig. 4 (middle), with ImageNet supervised pretrained weights, target pretraining (dashed line) demonstrates superior performance with significant margin on CIFAR-10 and SVHN. Finally, in Fig. 4 (right), we compare the convergence of FixMatch semi-supervised finetuning with different initial weights. There is a very clear margin for CIFAR-10 and SVHN when target pretraining is included while the margin is smaller for CIFAR-100 probably because more labeled data (#400 labeled) is available in CIFAR-100 experiment. We also notice that all methods have converged within 200 epochs, thus making this comparison fair. All these observations again validate that good initial weights play an important role in SSL finetuning at low-label regime and target pretraining is an effective way to produce better initial weights.

V Conclusion

Motivated by the recent studies into combining transfer learning with semi-supervised learning, we first revealed that a good initial weights are actually accountable for the substantial improvement on semi-supervised finetuning. We demonstrate that with the same initial weights, semi-supervised learning based on pseudo-labeling is still a better option. We further discover that due to the covariate shift between pretrain and target datasets, direct finetuning from weights pretrained on separate large dataset is not optimal. A contrastive target pretraining step is proposed to adapt the weights to target dataset. We demonstrate on multiple classification and segmentation datasets that semi-supervised finetuning can benefit substantially from the adapted weights at low-label regime. This study encourages people to rethink the relation between transfer learning and semi-supervised learning and contrastive learning could be a good way to synergistically combine both.

References

- [1] L. Samuli and A. Timo, “Temporal ensembling for semi-supervised learning,” in International Conference on Learning Representations, 2017.

- [2] A. Tarvainen and H. Valpola, “Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results,” in Advances in neural information processing systems, 2017.

- [3] K. Sohn, D. Berthelot, C.-L. Li, Z. Zhang, N. Carlini, E. D. Cubuk, A. Kurakin, H. Zhang, and C. Raffel, “Fixmatch: Simplifying semi-supervised learning with consistency and confidence,” in Advances in neural information processing systems, 2020.

- [4] T. Chen, S. Kornblith, M. Norouzi, and G. Hinton, “A simple framework for contrastive learning of visual representations,” in International conference on machine learning, 2020.

- [5] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” 2009.

- [6] X. Wang, J. Gao, M. Long, and J. Wang, “Self-tuning for data-efficient deep learning,” in International Conference on Machine Learning, 2021.

- [7] G. French, T. Aila, S. Laine, M. Mackiewicz, and G. Finlayson, “Semi-supervised semantic segmentation needs strong, high-dimensional perturbations,” 2019.

- [8] E. Arazo, D. Ortego, P. Albert, N. E. O’Connor, and K. McGuinness, “Pseudo-labeling and confirmation bias in deep semi-supervised learning,” in International Joint Conference on Neural Networks, 2020.

- [9] K. He, R. Girshick, and P. Dollár, “Rethinking imagenet pre-training,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019.

- [10] J.-B. Grill, F. Strub, F. Altché, C. Tallec, P. H. Richemond, E. Buchatskaya, C. Doersch, B. A. Pires, Z. D. Guo, M. G. Azar et al., “Bootstrap your own latent: A new approach to self-supervised learning,” in Advances in neural information processing systems, 2020.

- [11] K. He, H. Fan, Y. Wu, S. Xie, and R. Girshick, “Momentum contrast for unsupervised visual representation learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [12] O. Henaff, “Data-efficient image recognition with contrastive predictive coding,” in International Conference on Machine Learning, 2020.

- [13] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein et al., “Imagenet large scale visual recognition challenge,” International Journal of Computer Vision, 2015.

- [14] L. Shao, F. Zhu, and X. Li, “Transfer learning for visual categorization: A survey,” IEEE transactions on neural networks and learning systems, 2014.

- [15] L.-C. Chen, Y. Zhu, G. Papandreou, F. Schroff, and H. Adam, “Encoder-decoder with atrous separable convolution for semantic image segmentation,” in Proceedings of the European conference on computer vision, 2018.

- [16] S. Ren, K. He, R. Girshick, and J. Sun, “Faster r-cnn: Towards real-time object detection with region proposal networks,” Advances in neural information processing systems, 2015.

- [17] T. Chen, S. Kornblith, K. Swersky, M. Norouzi, and G. Hinton, “Big self-supervised models are strong semi-supervised learners,” in Advances in neural information processing systems, 2020.

- [18] K. You, Z. Kou, M. Long, and J. Wang, “Co-tuning for transfer learning,” Advances in Neural Information Processing Systems, 2020.

- [19] T. Miyato, S.-i. Maeda, M. Koyama, and S. Ishii, “Virtual adversarial training: a regularization method for supervised and semi-supervised learning,” IEEE transactions on pattern analysis and machine intelligence, 2018.

- [20] Q. Xie, Z. Dai, E. Hovy, M.-T. Luong, and Q. V. Le, “Unsupervised data augmentation for consistency training,” in Advances in neural information processing systems, 2020.

- [21] M. Wang and W. Deng, “Deep visual domain adaptation: A survey,” Neurocomputing, 2018.

- [22] D. Berthelot, N. Carlini, I. Goodfellow, N. Papernot, A. Oliver, and C. Raffel, “Mixmatch: A holistic approach to semi-supervised learning,” in Advances in neural information processing systems, 2019.

- [23] D. Berthelot, N. Carlini, E. D. Cubuk, A. Kurakin, K. Sohn, H. Zhang, and C. Raffel, “Remixmatch: Semi-supervised learning with distribution alignment and augmentation anchoring,” in International Conference on Learning Representations, 2020.

- [24] V. Olsson, W. Tranheden, J. Pinto, and L. Svensson, “Classmix: Segmentation-based data augmentation for semi-supervised learning,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2021.

- [25] I. Alonso, A. Sabater, D. Ferstl, L. Montesano, and A. C. Murillo, “Semi-supervised semantic segmentation with pixel-level contrastive learning from a class-wise memory bank,” 2021.

- [26] J.-C. Su, Z. Cheng, and S. Maji, “A realistic evaluation of semi-supervised learning for fine-grained classification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021.

- [27] S. J. Pan and Q. Yang, “A survey on transfer learning,” IEEE Transactions on knowledge and data engineering, 2009.

- [28] Y. Ganin and V. Lempitsky, “Unsupervised domain adaptation by backpropagation,” in International conference on machine learning. PMLR, 2015.

- [29] J. N. Kundu, N. Venkat, R. V. Babu et al., “Universal source-free domain adaptation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020.

- [30] Y. Sun, X. Wang, Z. Liu, J. Miller, A. Efros, and M. Hardt, “Test-time training with self-supervision for generalization under distribution shifts,” in International Conference on Machine Learning, 2020.

- [31] Y. Liu, P. Kothari, B. van Delft, B. Bellot-Gurlet, T. Mordan, and A. Alahi, “Ttt++: When does self-supervised test-time training fail or thrive?” in Advances in Neural Information Processing Systems, 2021.

- [32] D. Wang, E. Shelhamer, S. Liu, B. Olshausen, and T. Darrell, “Tent: Fully test-time adaptation by entropy minimization,” in International Conference on Learning Representations, 2021.

- [33] S. Li, D. Chen, Y. Chen, L. Yuan, L. Zhang, Q. Chu, B. Liu, and N. Yu, “Unsupervised finetuning,” arXiv preprint arXiv:2110.09510, 2021.

- [34] Z. Xie, Y. Lin, Z. Zhang, Y. Cao, S. Lin, and H. Hu, “Propagate yourself: Exploring pixel-level consistency for unsupervised visual representation learning,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021.

- [35] X. Wang, R. Zhang, C. Shen, T. Kong, and L. Li, “Dense contrastive learning for self-supervised visual pre-training,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021.

- [36] M. Jaderberg, K. Simonyan, A. Zisserman et al., “Spatial transformer networks,” Advances in neural information processing systems, 2015.

- [37] A. v. d. Oord, Y. Li, and O. Vinyals, “Representation learning with contrastive predictive coding,” arXiv preprint arXiv:1807.03748, 2018.

- [38] Y. Netzer, T. Wang, A. Coates, A. Bissacco, B. Wu, and A. Y. Ng, “Reading digits in natural images with unsupervised feature learning,” in NIPS Workshop on Deep Learning and Unsupervised Feature Learning, 2011.

- [39] M. Everingham, L. Van Gool, C. K. I. Williams, J. Winn, and A. Zisserman, “The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results,” http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html.

- [40] M. Cordts, M. Omran, S. Ramos, T. Rehfeld, M. Enzweiler, R. Benenson, U. Franke, S. Roth, and B. Schiele, “The cityscapes dataset for semantic urban scene understanding,” in Proc. of the IEEE Conference on Computer Vision and Pattern Recognition, 2016.

- [41] C. Xue, Q. Dou, X. Shi, H. Chen, and P.-A. Heng, “Robust learning at noisy labeled medical images: Applied to skin lesion classification,” in 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), 2019.

- [42] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016.

- [43] N. C. Codella, D. Gutman, M. E. Celebi, B. Helba, M. A. Marchetti, S. W. Dusza, A. Kalloo, K. Liopyris, N. Mishra, H. Kittler et al., “Skin lesion analysis toward melanoma detection: A challenge at the 2017 international symposium on biomedical imaging (isbi), hosted by the international skin imaging collaboration (isic),” in 2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018). IEEE, 2018, pp. 168–172.

- [44] X. Chen, H. Fan, R. Girshick, and K. He, “Improved baselines with momentum contrastive learning,” arXiv preprint arXiv:2003.04297, 2020.

- [45] D. Erhan, A. Courville, Y. Bengio, and P. Vincent, “Why does unsupervised pre-training help deep learning?” in Proceedings of the thirteenth international conference on artificial intelligence and statistics, 2010.

- [46] M. Caron, P. Bojanowski, A. Joulin, and M. Douze, “Deep clustering for unsupervised learning of visual features,” in European Conference on Computer Vision, 2018.

- [47] M. Caron, I. Misra, J. Mairal, P. Goyal, P. Bojanowski, and A. Joulin, “Unsupervised learning of visual features by contrasting cluster assignments,” 2020.

- [48] L. Van der Maaten and G. Hinton, “Visualizing data using t-sne.” Journal of machine learning research, vol. 9, no. 11, 2008.