Reversible Quantization Index Modulation for Static Deep Neural Network Watermarking

Abstract

Static deep neural network (DNN) watermarking techniques typically employ irreversible methods to embed watermarks into the DNN model weights. However, this approach causes permanent damage to the watermarked model and fails to meet the requirements of integrity authentication. Reversible data hiding (RDH) methods offer a potential solution, but existing approaches suffer from weaknesses in terms of usability, capacity, and fidelity, hindering their practical adoption. In this paper, we propose a novel RDH-based static DNN watermarking scheme using quantization index modulation (QIM). Our scheme incorporates a novel approach based on a one-dimensional quantizer for watermark embedding. Furthermore, we design two schemes to address the challenges of integrity protection and legitimate authentication for DNNs. Through simulation results on training loss and classification accuracy, we demonstrate the feasibility and effectiveness of our proposed schemes, highlighting their superior adaptability compared to existing methods.

Index Terms:

deep neural network (DNN), watermarking, reversible data hiding (RDH).1 Introduction

Deep neural networks (DNNs) have gained significant popularity due to their remarkable performance and have found applications in various fields [1, 2, 3, 4, 5, 6, 7]. However, the increasing use of deep learning-based systems also poses a risk of unauthorized usage or modification of DNN models without proper attribution to the original authors. To address this concern, watermarking techniques for DNNs have emerged as an important step in protecting the intellectual property embedded in these models [8]. Watermarking provides an additional layer of security that allows the original authors to prove ownership of their models, safeguard them from unauthorized access and use, track their provenance, ensure integrity, facilitate versioning, and identify malicious models [9].

Deep neural network (DNN) watermarking techniques can be broadly categorized into static and dynamic watermarking approaches [10, 11], depending on where the watermark can be read from. In static watermarking methods (e.g., [12, 13, 14, 15]), the watermark can be directly extracted from the network weights. During the training phase, these weights are determined and typically represented in floating-point formats, which differ from the popular unsigned-integer format commonly used for images. Static watermarking techniques aim to embed the watermark directly into the weights of the DNN, ensuring that the ownership and integrity of the model can be verified by examining these weight values. Static watermarking is particularly relevant in scenarios where the protection of the model’s weights and ownership verification are of utmost importance. On the other hand, dynamic watermarking techniques (e.g., [16, 17]) rely on the modification of the network’s behavior when provided with specific inputs, resulting in a visible watermark in the model’s output. By carefully designing the input signals or modifying the network’s architecture, dynamic watermarking allows for the extraction of the watermark through the observation of specific output patterns. Dynamic watermarking techniques offer a more flexible approach by embedding the watermark in the network’s behavior rather than its weights. This enables the watermark to be extracted from the model’s output, making it suitable for applications where the focus is on detecting unauthorized usage or tracking the dissemination of the model.

An intriguing research direction is the development of reversible watermarking schemes for DNNs. Reversible watermarking is a type of digital watermarking that enables content owners to protect their digital data without causing any permanent modifications [18, 19]. It allows embedded information to be retrieved from the host object without any data loss or damage. Reversible watermarking algorithms have been successfully applied to the unsigned integer format commonly used in images, including techniques such as difference expansion (DE) [20], prediction-error expansion (PEE) [21, 22], and histogram shifting (HS) [23]. Considering that the weights in DNNs can be treated as conventional multimedia objects, reversible watermarking of DNNs can be seen as an extension of static DNN watermarking, where reversible watermarks are embedded within the weights. However, existing approaches, such as the HS-based method proposed by Guan et al. [24] for watermarking convolutional neural networks (CNNs), face challenges when dealing with floating-point weights and suffer from degradation when the host exhibits a uniform or uniform-like distribution.

Motivated by these challenges, we propose a novel reversible watermarking scheme specifically tailored for floating-point weights in DNNs. Our contributions, along with their highlights, are summarized as follows:

-

•

First, we design a simple yet efficient reversible watermarking algorithm, named reversible quantization index modulation (R-QIM), which improves upon the widely used quantization index modulation (QIM) [25, 26, 27, 28]. R-QIM allows for reversible embedding of watermarks in floating-point or real-valued objects, resembling a lattice quantizer that maps input values from a large continuous set to a countable smaller set with a finite number of elements. While QIM is naturally lossy, we leverage the availability of the cover object during the watermark embedding process to add a scaled version of the difference vector back to the quantized output values, enabling reversibility.

-

•

Second, we demonstrate how R-QIM can be deployed in DNN watermarking to achieve integrity protection and legitimacy authentication. For integrity protection, our scheme allows the owner or a trusted third-party institution to verify the occurrence of data tampering, regardless of noiseless or known noisy channel conditions. This addresses the limitations of existing schemes that are unavailable in noisy channel transmission. For legitimacy authentication, our proposed scheme provides an effective means to differentiate between legal and illegal use of target DNNs. This added layer of protection helps deter attackers and facilitates the identification of individuals responsible for unauthorized use. Additionally, it provides assurance that a given DNN is authentic, ensuring the integrity of the produced data.

-

•

Third, we provide theoretical justifications and conduct numerical simulations to showcase the advantages of R-QIM. We analyze the signal-to-watermark ratio (SWR) of R-QIM, which measures capacity and fidelity, and compare the training loss and classification accuracy of R-QIM with the HS-based method [24] by analyzing the weights of multi-layer perceptron (MLP) and visual geometry group (VGG) models.

The remainder of the paper is organized as follows. Section 2 introduces DNN watermarking models and existing algorithms. Sections 3 and 4 present R-QIM along with theoretical analyses and its applications in DNN watermarking. Section 5 provides simulation results, and Section 6 concludes the paper.

2 Preliminaries

2.1 Reversible DNN Watermarking Basics

Reversible deep neural network (DNN) watermarking involves the embedding of a watermark into the weights of a DNN model in a manner that allows for its extraction without any permanent modifications or loss of information. This reversible embedding process is analogous to static DNN watermarking, where the watermark is embedded directly into the network weights during the training phase [12, 10]. However, reversible watermarking techniques ensure that the original weights can be perfectly recovered after the watermark is extracted.

The mathematical model for reversible DNN watermarking can be described as follows. Let denote the set of all weights in a trained DNN model. During watermark embedding, specific weights from are selected based on a location sequence guided by a clue or key , resulting in a cover sequence . The information sequence is then embedded into using a carefully designed embedding function , resulting in the watermarked sequence .

To ensure correct extraction and recovery of the watermark, the following triplet of operations is applied:

| (1) |

where represents the embedding function that embeds the information sequence into the cover sequence to produce the watermarked sequence . and denote the extraction and recovery functions, respectively. represents the additive noise present in the received watermarked sequence .

While reversible DNN watermarking shares similarities with reversible image watermarking, there are notable differences in terms of the cover format, robustness, and fidelity requirements. Table I summarizes the key differences between reversible image watermarking and reversible DNN watermarking. Reversible DNN watermarking operates on floating-point weights, which differ from the unsigned integers typically used in reversible image watermarking. The fidelity requirement in reversible DNN watermarking pertains to the effectiveness of the host network after watermark embedding, rather than the visual quality of the host signal as in image watermarking. Additionally, reversible DNN watermarking should have the capacity to embed a large amount of data or information into the network weights. Security is crucial to prevent unauthorized parties from accessing, reading, or modifying the watermark. Lastly, efficiency is important to ensure faster embedding and extraction processes for DNN watermarking algorithms.

By understanding the unique characteristics and requirements of reversible DNN watermarking, we can develop tailored algorithms and techniques that enable the embedding, extraction, and recovery of watermarks while preserving the integrity and effectiveness of the DNN models.

| Features | Reversible image watermarking | Reversible DNN watermarking |

| Format of covers | Unsigned integers | Floating-point numbers |

| Fidelity | Higher quality of the host signal after watermark embedding | Higher effectiveness of the host network after watermark embedding |

| Capacity | Ability to embed a watermark with a massive amount of data/information | |

| Security | Ability to remain secret from unauthorized parties accessing, reading, and modifying the watermark | |

| Efficiency | Higher speed for the embedding and extraction process of the watermarking algorithm | |

2.2 Existing Methods

2.2.1 HS

HS (Histogram Shifting) is a reversible watermarking algorithm originally developed for images, but it has been adapted for use in CNNs [24]. The method consists of three main parts: host sequence construction, data preprocessing, and the watermarking algorithm.

In the host sequence construction, a host matrix is constructed from a convolutional layer in the CNN. This step is not directly relevant to this paper and will not be discussed further. In the data preprocessing step, each weight is defined as follows:

| (2) |

Here, represents the total length of digits for the weight. To meet the requirements of an integer host, the consecutive non-zero digit pairs in , corresponding to the minimum entropy, are chosen as the significant digit pairs to construct the host sequence. These chosen pairs are then adjusted by adding an adjustable integer parameter to ensure they fall within the appropriate range of .

For the watermarking algorithm, HS [23] scheme is employed as the embedding and extraction strategy. The 1-bit HS embedding process for the watermark can be described as follows:

| (3) |

The histogram shifting operation in this 1-bit embedding process is depicted in Fig. 1(a), where the bins greater than are shifted to the right by a fixed to create a vacant bin for embedding. The watermark with a uniform distribution is then embedded into the bin equal to using HS. This divides the entire cover into three regions, as depicted in Fig. 1(c): region i for covers smaller than , region ii for covers equal to , and region iii for covers larger than . The mapping rule for changes depending on the bit, as shown in Fig. 1(b).

Using the same process of host sequence construction and data preprocessing, the extraction process can be described as follows:

| (4) |

and the recovery process as:

| (5) |

2.2.2 QIM

QIM (Quantization Index Modulation) is a widely used method for non-reversible watermarking [25, 26, 27, 28]. Its rationale can be explained using the example shown in Fig. 2(a). The circle and cross positions in Fig. 2(a) represent two sets, and , arranged alternately. Given a host or cover sample and a one-bit message , the watermarked value is obtained by moving to the nearest point in when , and to the nearest point in when .

Let be a quantization function with as the step-size parameter. The embedding process can be described as follows:

| (6) |

where , , , and .

Assuming that the transmitted has been contaminated by an additive noise term , the received signal is given by . A minimum distance decoder is used to extract the watermark as follows:

| (7) |

If , the estimated value is correct.

In terms of embedding distortion, as shown in Fig. 3(a), the maximum error caused by embedding is . If the quantization errors are uniformly distributed over , the mean-squared embedding distortion is given by . Considering the capacity, QIM achieves an approximate rate of 1 bpps (bit per sample), which means each sample of the host cover can carry 1 bit of watermark information.

3 THE PROPOSED METHOD

In this section, we introduce a QIM-based RDH (Reversible Data Hiding) algorithm called reversible QIM (R-QIM) and highlight its advantages compared to the method proposed in [24].

3.1 R-QIM

We observe that there exists a quantization error between the cover vector and its quantized watermarked vector , given by:

| (8) |

If we only use as the watermarked vector, the information about is lost. However, QIM has a certain error tolerance capability. If we consider as ”beneficial noise” and add it back to , we can maintain the information about the cover , making the scheme reversible. The challenge lies in properly scaling the ”beneficial noise” to meet specific requirements. First, the scaled should be small enough to stay within the correct decoding region. Second, the scaled should not be too small to avoid exceeding the representation accuracy of numbers.

The method that incorporates these ideas is called R-QIM. Its embedding operation is defined as:

| (9) |

where represents a scaling factor that satisfies , is an encrypted quantizer defined as:

| (10) |

In Eq. (10), denotes the same used in conventional QIM, and represents a dithering component for secrecy. R-QIM can be considered a fast version of the lattice-based method proposed in [29].

The parameters and are typically treated as secret keys in a watermarking scheme. By setting and , we can achieve a 1-bit embedding example of R-QIM as depicted in Fig. 2(b). In this case, the watermarked covers are distributed in the green and red zones around the circle and cross positions, rather than on the positions themselves.

For the receiver, the estimated watermark can be extracted from the received signal using the following equation:

| (11) |

If the noise term is small enough to satisfy the condition:

| (12) |

the correct extraction is achieved, whether in a noiseless or noisy channel.

To estimate the original weight from the received signal , we use the following equation:

| (13) |

The correct restoration occurs if and only if such that . In the presence of noise, the estimation error is given by:

| (14) |

By setting and , the embedding distortion is depicted in Fig. 3(b), with a maximum error of . If the quantization errors are uniformly distributed over , the mean-squared embedding distortion is:

| (15) |

Since (as shown in Fig. 2), each bit of the watermark can be embedded into a host sample with any characteristic and distribution. These features make R-QIM capable of accommodating a watermark of almost the same maximum length as the number of host samples.

3.2 Discussions

In this section, we compare the R-QIM algorithm with the HS algorithm proposed in [24] and discuss their respective advantages in terms of usability, capacity, and imperceptibility.

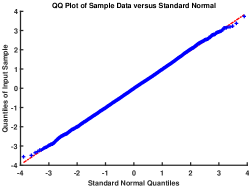

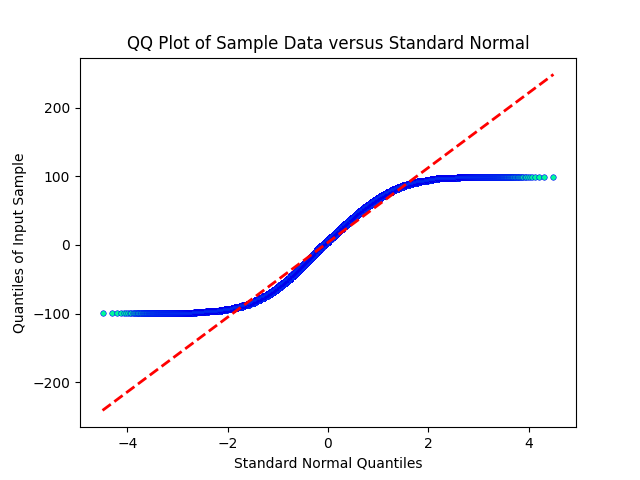

First, let’s consider usability. The HS algorithm is not suitable for RDH-based static DNN watermarking due to two main reasons. Firstly, it mismatches the host of uniform distribution, which makes the watermarked sequence exhibit obvious statistical characteristics. This vulnerability makes the algorithm defenseless against passive attacks. Secondly, the low capacity of the HS algorithm becomes even worse when applied to uniformly distributed hosts. Therefore, HS is not feasible for static DNN watermarking, as the data preprocessing operation makes the host sequence uniform rather than normally distributed. We conducted experiments to verify this by preprocessing different randomly generated data of normal distribution, testing them multiple times for skewness, kurtosis, and Kolmogorov-Smirnov (K-S) tests, and plotting the Quantile-Quantile (Q-Q) plot for one of the test results. The results (Fig. 4) clearly show that the preprocessed data becomes flatter and deviates from a normal distribution according to the K-S test. Figures 4(d), (e), and (f) demonstrate that the preprocessed data follows a uniform distribution. Thus, HS [24] lacks practical usability, while R-QIM is feasible for data admitting any distribution.

Next, let’s analyze the theoretical advantages of R-QIM in terms of capacity and imperceptibility compared to HS. To evaluate the embedding capacity, we consider a host sequence of length and analyze the maximum available watermark length for both R-QIM and HS. In the HS algorithm, the watermark is only embedded into the bin where , and the other bins do not contain any information about the watermarks. Recall that Regions i, ii and iii are shown in Fig. 1(c). The maximum length of the available watermark in HS can be calculated as:

| (16) |

On the other hand, in R-QIM, the entire host sequence can be used to embed the watermark, resulting in . It is evident that for host sequences of the same length, R-QIM has a higher embedding capacity.

In terms of embedding distortion or imperceptibility, we define the signal-to-watermark ratio (SWR) as a measure. The SWR is defined as:

| (17) |

where and represent the power of the host and the additive watermark, respectively. A smaller value of indicates a higher SWR, which implies better imperceptibility. To analyze the embedding distortion fairly, we assume the same capacity and host distribution for both HS in [24] and R-QIM, corresponding to embedding the watermark into the host which follows a Gaussian distribution.

Regarding the embedding distortion, we have the following result:

Theorem 1.

R-QIM achieves a larger SWR than HS when .

Proof.

According to Theorem 1, when considering a fixed setting with in HS [24], it can be observed that R-QIM achieves lower embedding distortion and better fidelity, based on the aforementioned assumption. Furthermore, it indicates that the fidelity of R-QIM can be controlled. When , by adjusting the parameters, we can obtain flexible fidelity performance, whether it is better or worse than HS. In the subsequent scheme design, we will demonstrate the benefits of this feature.

4 Applications of R-QIM in Static DNN Watermarking

In this section, we explore the application of R-QIM in static deep neural network (DNN) watermarking. We propose a scheme that includes several algorithms to facilitate the embedding, extraction, and restoration processes. Furthermore, we outline the concrete steps for functions such as integrity protection and infringement identification, which can be realized using the proposed scheme. The schematics of the two applications are depicted in Figure 5. For the sake of simplicity, we refer to the owner of the DNNs as ”Alice,” the legal user as ”Bob,” the illegal user as ”Mallory,” and the trusted third-party institution as ”Institution.”

4.1 Wrapping up R-QIM

To address security concerns, R-QIM requires additional measures. The watermarking, extracting, and restoring processes based on R-QIM are presented through pseudo-codes in Mark (Algorithm 1), Extract (Algorithm 2), and Restore (Algorithm 3). In these algorithms, certain parameters such as and are set using a pseudo-random number generator (PRNG), while others like the step size and scaling factor are determined by the owner.

Mark (Algorithm 1) takes as input the trained model , the watermark m, and the aforementioned parameters. It outputs the watermarked model along with side information, including the watermark information and the secret key . By selecting a sequence with the clue and extracting relevant information ( and ) from m using the function, each bit of the watermark is embedded into using the R-QIM embedding equation (9) via the function. The watermark information combines and , while includes , , and . To maintain the security properties of the embedded watermark, the owner of the DNN model should keep , , and confidential.

Extract (Algorithm 2) performs the watermark extraction from the watermarked model generated by Mark. With the assistance of the watermark information and the secret key held by the owner, an estimated sequence is created using the R-QIM extraction equation (11) via the function, following the same selection process as in Mark. Then, utilizing the watermark information in the codebook, Extract outputs an estimated watermark derived from . Notably, since watermark extraction requires the assistance of the secret key rather than the scaling

factor , which relates to the security of DNN model recovery, Extract should be performed by the DNN model owner or a trusted third-party institution, ensuring the non-disclosure of the scaling factor .

Restore (Algorithm 3) takes the watermarked model as input and restores it to its original form using the watermark information , the secret key , and the scaling factor as side information. After the same selection process as Mark, each sample is recovered to one by one using the R-QIM recovery equation (13) via the function. Since the correct restoration relies on the noise term , we can detect tampering in the watermarked model under a noiseless channel or a known noisy channel, making the watermarking process reversible for protecting the integrity of the watermarked model. Furthermore, as the restored model no longer contains the watermark, the effectiveness of the restoration process can be evaluated by verifying the absence of the watermark in the DNN model.

Input: Trained Model , Watermark , Scaling Factor , Dithering Vector , Embedding Clue , Step Size

Output: Watermarked Model , Watermark Information , Secret Key

Input: Watermarked Model , Watermark Information , Secret Key

Output: Extracted Watermark

Input: Watermarked Model , Watermark Information , Secret Key , Scaling Factor

Output: Recovered Model

4.2 Integrity Protection

To enable reversibility in DNN watermarks, Guan et al. [24] introduced the concept of integrity protection for DNN models. They proposed a scheme that verifies whether a DNN model has been tampered with by comparing the bit differences in weights between the restored and original models. Building on this idea, we present an integrity protection scheme that employs R-QIM [24], as depicted in Figure 5 (a).

In this scheme, Alice embeds her watermarks into a commercialized DNN model using the Mark algorithm [24], resulting in a watermarked DNN model . During transmission, Mallory illegally intercepts , modifies its weights, and profits from sharing the tampered model. To identify tampering, we define two types of operations for noiseless and noisy channels.

-

•

Noiseless channel: In this scenario, where correct recovery is guaranteed, the tampered DNN model is restored using the Restore algorithm [24] to obtain an estimated model. Notably, the Restore function can meet the requirements of perfect recovery after watermark extraction since Equation (13) [24] contains , which can be regarded as watermark extraction. The weights of the restored model are then compared to the original model using a difference function , which calculates a difference ratio . Due to the sensitivity of the recovery process, even minor changes to would lead to differences in the weights of the restored model. This characteristic allows for integrity assessment, where (or ) indicates that has (not) been tampered with.

-

•

Noisy channel: In this scenario, tampering of DNN models can be identified when the noise term is sufficiently small. By leveraging Equation (14) [24], the difference between the restored and original models can be theoretically measured, allowing for a comparison that excludes the interference of the noise term . Theoretical differences between the restored and original models are computed using Equation (14) [24], and a difference ratio is obtained. When (or ), it indicates that has (not) been tampered with.

4.3 Infringement Identification

In addition to integrity protection, reversible DNN watermarking can be utilized for infringement identification of suspicious DNN models. When the watermark is removed during the recovery operation, no watermark remains in the restored model. This enables the distinction between a legal user holding the restored model and an illegal user holding the watermarked model. Based on this concept, we propose a novel scheme for infringement identification of DNN models, where a user receives a secret key for recovery after legalization and obtains a restored model. The proposed scheme for legitimate authentication is illustrated in Figure 5 (b). For simplicity, we refer to the owner, legal user, illegal user, and trusted third-party institution as ”Alice,” ”Bob,” ”Mallory,” and ”Institution,” respectively.

In our proposed scheme, Alice sells her commercialized DNN model through an online/offline platform and utilizes our scheme for marking the ownership of . After embedding a watermark into using the Mark algorithm [24], the resulting watermarked model is sent to the platform, serving as an exhibit or trial product to promote Alice’s model. As the embedding process occurs after model training, the fidelity of is intentionally lower, ensuring that its disclosure does not harm Alice’s rights.

When Bob expresses interest in the product, Alice shares , , and with him. Bob can then recover to its original form using the Restore algorithm [24]. The recovered model is identical to the original model, maximizing its effectiveness, and the watermark is completely removed, making it undetectable in the recovered model. If Mallory illegally steals the DNN model and shares it on public platforms, Alice can report the incident to the Institution for arbitration. To authenticate the legitimacy of the suspicious model held by Mallory, Alice or the Institution can extract the estimated watermark from the suspect model using the Extract algorithm [24]. Then, can be compared to Alice’s watermark using , which outputs a difference ratio for detecting the presence of the embedded watermark. When , the watermark is considered detected, and Mallory is identified as an illegal user.

To avoid infringing on Alice’s rights, the DNN watermarking scheme for infringement identification should exhibit lower fidelity, ensuring that the effectiveness of the watermarked model is no better than the original one. Thanks to Theorem 1 [24], R-QIM offers greater distortion than HS [24] by setting and an appropriate , making it more suitable for the infringement identification scenario.

5 SIMULATIONS

To evaluate the effectiveness of the proposed R-QIM scheme, the simulations are divided into three parts: i) Usability of the HS method in [24]. ii) Comparison between R-QIM and HS in terms of capacity and fidelity. iii) Impact of R-QIM parameters.

The experimental setups for these simulations are summarized as follows:

Datasets and Models: The datasets chosen for training the models are MNIST [30] and CIFAR10 [31]. The MNIST dataset consists of 60,000 training and 10,000 testing gray-scale images of handwritten digits, each with a size of 2828 pixels and divided into 10 classes. The CIFAR10 dataset contains 50,000 training and 10,000 testing color images of various objects, with a size of 3232 pixels. Two combinations of models and datasets are used: the Multi-layer Perceptron (MLP) model trained on MNIST (referred to as Group A) and the Visual Geometry Group (VGG) model trained on CIFAR10 (referred to as Group B).

Parameters: The watermark is generated by converting a piece of text into a bit stream. The step size is set to , and the scaling factor is chosen as with a dithering value of .

Indicators: The fidelity of the watermarked model is evaluated using training loss and classification accuracy. The training loss measures the damage caused by watermark embedding, while the classification accuracy reflects the effectiveness of the watermarked network. Lower training loss indicates less impact from watermark embedding, and higher classification accuracy indicates greater effectiveness. To detect the existence of copyright information and perform tampering detection, the bit error rate (BER) is employed. BER is calculated as the ratio of the number of differing bits between the original and estimated message to the total number of bits. In copyright protection, if BER is not larger than 10%, it indicates the presence of information; otherwise, it does not. For tampering detection, a model is considered untampered if BER equals 0.

5.1 Usability test

| Metric | MLP ( length) | VGG ( length) | ||

| Original | Preprocessed | Original | Preprocessed | |

| Skewness | 0.0426 | -0.1059 | 34.8544 | -0.0028 |

| Kurtosis | 2.8642 | 1.8324 | 1692.39 | 1.8276 |

| in K-S test |

|

|

|

|

| in J-B test |

|

|

|

|

| Host length | MLP ( length) | VGG ( length) | ||

| R-QIM | HS[24] | R-QIM | HS[24] | |

| % | 39732 | 377 | 40000 | 396 |

| % | 79463 | 811 | 80000 | 760 |

| % | 119194 | 1187 | 120000 | 1177 |

| % | 158925 | 1565 | 160000 | 1604 |

| % | 198656 | 1969 | 200000 | 1982 |

In Section 3.2, we theoretically identified potential weaknesses of the HS method. To support our claims, we conducted a numerical analysis of the weights of DNN models.

Figure 6 presents the Q-Q plot comparing the original and preprocessed weights of the MLP and VGG models against normal and uniform distributions. We observe that the original weights of both models exhibit a distribution closer to the normal distribution, while the preprocessed weights clearly follow the uniform distribution as integers.

Furthermore, we performed an analysis of skewness, kurtosis, K-S test, and Jarque-Bera (J-B) test results for the original and preprocessed weights of the MLP and VGG models. The summarized analysis is presented in Table II. The results indicate that:

i) Data preprocessing flattens the distribution of the weights, resulting in lower kurtosis values for the preprocessed weights.

ii) Neither the original nor the preprocessed weights of the MLP and VGG models pass the K-S and J-B tests.

These experiments demonstrate that realistic DNN weights do not follow a normal distribution. However, this does not undermine the validity of the data preprocessing method proposed in [24] for transforming data from a normal to a uniform distribution. Nevertheless, it highlights the limited usability of the [24] method.

5.2 Capacity and fidelity comparisons

In order to assess the adaptability and superiority of the two proposed schemes in terms of capacity and fidelity, we conducted several experiments to compare R-QIM with HS in two specific applications. The first application focuses on integrity protection, which requires a watermarking method with a high embedding capacity and minimal embedding damage. Therefore, we compared the maximum available capacity, classification accuracy, and training loss between our proposed scheme and the method proposed by [24] with a fixed step size .

The results for the maximum available capacity are presented in Table III, revealing a significant difference between R-QIM and HS. Regardless of whether it is group A or B, the table clearly indicates that R-QIM exhibits a higher embedding capability compared to the benchmark method, which aligns with our theoretical analysis.

Regarding fidelity, we compared the training loss and classification accuracy of the watermarked models embedded at different epochs using R-QIM and HS. The corresponding results are depicted in Fig. 7. It can be observed that R-QIM consistently outperforms the benchmark method in both metrics for both group A and B, with the difference being more pronounced in group B. Importantly, these observations support our assumption that lower embedding distortion leads to better fidelity of the watermarked model.

For infringement identification, according to Theorem 1, R-QIM can achieve a more noticeable decline in fidelity compared to HS by setting . To verify this, we examined the classification accuracy and training loss of MLP and VGG models with different values of and , as shown in Fig. 8. Based on these observations, we conclude the following: i) As and increase, the loss of the watermarked model increases while the accuracy decreases, which aligns with our expectation regarding the relationship between distortion and fidelity. ii) When in R-QIM, it outperforms HS in terms of both loss and accuracy for groups A and B. However, when and , HS performs better than R-QIM. This finding supports Theorem 1 and demonstrates the flexible performance of R-QIM, which determines its applicability in infringement identification.

5.3 Performance of R-QIM Recovery

To assess the performance of R-QIM recovery, we conducted several simulations focusing on the accuracy of the recovered values and the presence of watermarks. In these experiments, the watermarks were converted to uniform data consisting of 4264 bits.

In the first experiment, we aimed to compare the performance difference in implementing reversible operations. We trained two combinations from scratch twice for 60 epochs and embedded the watermark at epoch 30 using the proposed scheme. In the first run (denoted by the green line), a reversible operation was applied immediately after the embedding process, while in the second run (denoted by the red line), no reversible operation was applied. Fig. 9 presents notable observations from this experiment: i) After watermark embedding, the model’s accuracy sharply degraded due to the parameter modification. However, with the implementation of the proposed reversible operation, the reduced accuracy immediately restored. This demonstrates the effectiveness of the reversible operation in offsetting the damages caused by watermark embedding. ii) Without the reversible operation, when the reduced accuracy reached a plateau, the accuracy of both groups dropped below that of the reversible operation applied case. This indicates incomplete compensation in subsequent training for the watermarked model without reversible operation, while the compensation is complete with the reversible operation. iii) We observed that group A was more severely affected than group B after watermark embedding, and it reached a slower plateau in subsequent training, suggesting a higher effectiveness of the reversible operation in group A.

To analyze the specific effects of the various processes in the proposed method, we compared the values of the original, watermarked, and recovered weights for the two combinations in Fig. 10. In this figure, the points representing the original and recovered cover (represented by a horizontal line and a vertical bar, respectively) coincide at each index of the sample, indicating correct recovery. Additionally, the distribution of watermarked weights (represented by crosses) in Fig. 10 illustrates the impact on the weights caused by watermark embedding.

Finally, as the infringement identification function relies on determining whether the restored DNN model contains a watermark, we compared the bit error rate (BER) metric of the watermark with and without the reversible operation, as depicted in Fig. 11. The BER value of the watermarked model was 0.0005, whereas it increased to 0.43 after applying the reversible operation. This confirms that the reversible operation can effectively remove the watermark embedded in the host model, thereby demonstrating the validity of the legitimacy authentication scheme.

6 CONCLUSION

In this paper, we have proposed a novel static deep neural network (DNN) watermarking scheme called Reversible QIM (R-QIM). The R-QIM scheme offers higher capacity and fidelity compared to existing methods, and it overcomes the weaknesses associated with the usability of host data under various distributions. We have also introduced two R-QIM-based schemes for integrity protection and infringement identification of DNNs. The integrity protection scheme enables the verification of watermarked DNNs’ integrity by comparing the restored model with the original model. In infringement identification, the presence of watermarks in the watermarked model can determine the legality of the current user. Theoretical analyses and numerical simulations have demonstrated the superior performance of R-QIM compared to the method proposed in [24]. R-QIM exhibits greater flexibility in fidelity performance, higher embedding capacity, and adaptability to weights with arbitrary distributions.

In conclusion, the R-QIM scheme presents a significant advancement in DNN watermarking, offering enhanced capacity, fidelity, and applicability in various scenarios. This scheme holds promise for effective integrity protection and infringement identification of DNN models in practical applications.

References

- [1] G. Montavon, W. Samek, and K.-R. Müller, “Methods for interpreting and understanding deep neural networks,” Digital signal processing, vol. 73, pp. 1–15, 2018.

- [2] V. Sze, Y.-H. Chen, T.-J. Yang, and J. S. Emer, “Efficient processing of deep neural networks: A tutorial and survey,” Proceedings of the IEEE, vol. 105, no. 12, pp. 2295–2329, 2017.

- [3] C. Szegedy, A. Toshev, and D. Erhan, “Deep neural networks for object detection,” Advances in neural information processing systems, vol. 26, 2013.

- [4] W. Samek, G. Montavon, S. Lapuschkin, C. J. Anders, and K.-R. Müller, “Explaining deep neural networks and beyond: A review of methods and applications,” Proceedings of the IEEE, vol. 109, no. 3, pp. 247–278, 2021.

- [5] A. Radhakrishnan, M. Belkin, and C. Uhler, “Wide and deep neural networks achieve consistency for classification,” Proceedings of the National Academy of Sciences, vol. 120, no. 14, p. e2208779120, 2023.

- [6] W. Tang, B. Li, M. Barni, J. Li, and J. Huang, “An automatic cost learning framework for image steganography using deep reinforcement learning,” IEEE Trans. Inf. Forensics Secur., vol. 16, pp. 952–967, 2021.

- [7] S. Lou, J. Deng, and S. Lyu, “Chaotic signal denoising based on simplified convolutional denoising auto-encoder,” Chaos, Solitons & Fractals, vol. 161, p. 112333, 2022.

- [8] M. Barni, F. Pérez-González, and B. Tondi, “DNN watermarking: Four challenges and a funeral,” in IH&MMSec ’21: ACM Workshop on Information Hiding and Multimedia Security, Virtual Event, Belgium, June, 22-25, 2021, pp. 189–196, 2021.

- [9] F. Regazzoni, P. Palmieri, F. Smailbegovic, R. Cammarota, and I. Polian, “Protecting artificial intelligence ips: a survey of watermarking and fingerprinting for machine learning,” CAAI Transactions on Intelligence Technology, vol. 6, no. 2, pp. 180–191, 2021.

- [10] Y. Li, H. Wang, and M. Barni, “A survey of deep neural network watermarking techniques,” Neurocomputing, vol. 461, pp. 171–193, 2021.

- [11] J. Zhang, Z. Gu, J. Jang, H. Wu, M. P. Stoecklin, H. Huang, and I. Molloy, “Protecting intellectual property of deep neural networks with watermarking,” in Proceedings of the 2018 on Asia Conference on Computer and Communications Security, pp. 159–172, 2018.

- [12] Y. Uchida, Y. Nagai, S. Sakazawa, and S. Satoh, “Embedding watermarks into deep neural networks,” in Proceedings of the 2017 ACM on international conference on multimedia retrieval, pp. 269–277, 2017.

- [13] M. Kuribayashi, T. Tanaka, S. Suzuki, T. Yasui, and N. Funabiki, “White-box watermarking scheme for fully-connected layers in fine-tuning model,” in Proceedings of the 2021 ACM Workshop on Information Hiding and Multimedia Security, pp. 165–170, 2021.

- [14] Y. Li, B. Tondi, and M. Barni, “Spread-transform dither modulation watermarking of deep neural network,” Journal of Information Security and Applications, vol. 63, p. 103004, 2021.

- [15] G. Pagnotta, D. Hitaj, B. Hitaj, F. Perez-Cruz, and L. V. Mancini, “Tattooed: A robust deep neural network watermarking scheme based on spread-spectrum channel coding,” arXiv preprint arXiv:2202.06091, 2022.

- [16] Y. Li, L. Abady, H. Wang, and M. Barni, “A feature-map-based large-payload DNN watermarking algorithm,” in Digital Forensics and Watermarking - 20th International Workshop, IWDW 2021, Beijing, China, November 20-22, 2021, Revised Selected Papers, pp. 135–148, 2021.

- [17] J. Fei, Z. Xia, B. Tondi, and M. Barni, “Supervised GAN watermarking for intellectual property protection,” in IEEE International Workshop on Information Forensics and Security, WIFS 2022, Shanghai, China, December 12-16, 2022, pp. 1–6, 2022.

- [18] Y. Shi, X. Li, X. Zhang, H. Wu, and B. Ma, “Reversible data hiding: Advances in the past two decades,” IEEE Access, vol. 4, pp. 3210–3237, 2016.

- [19] G. Hua, J. Huang, Y. Q. Shi, J. Goh, and V. L. L. Thing, “Twenty years of digital audio watermarking - a comprehensive review,” Signal Process., vol. 128, pp. 222–242, 2016.

- [20] J. Tian, “Reversible data embedding using a difference expansion,” IEEE Trans. Circuits Syst. Video Technol., vol. 13, no. 8, pp. 890–896, 2003.

- [21] D. M. Thodi and J. J. Rodríguez, “Prediction-error based reversible watermarking,” in Proceedings of the 2004 International Conference on Image Processing, ICIP 2004, Singapore, October 24-27, 2004, pp. 1549–1552, 2004.

- [22] X. Wu and W. Sun, “High-capacity reversible data hiding in encrypted images by prediction error,” Signal Process., vol. 104, pp. 387–400, 2014.

- [23] Z. Ni, Y. Shi, N. Ansari, and W. Su, “Reversible data hiding,” IEEE Trans. Circuits Syst. Video Technol., vol. 16, no. 3, pp. 354–362, 2006.

- [24] X. Guan, H. Feng, W. Zhang, H. Zhou, J. Zhang, and N. Yu, “Reversible watermarking in deep convolutional neural networks for integrity authentication,” in Proceedings of the 28th ACM International Conference on Multimedia, oct 2020.

- [25] B. Chen and G. W. Wornell, “Quantization index modulation: A class of provably good methods for digital watermarking and information embedding,” IEEE Trans. Inf. Theory, vol. 47, no. 4, pp. 1423–1443, 2001.

- [26] P. Moulin and R. Koetter, “Data-hiding codes,” Proc. IEEE, vol. 93, no. 12, pp. 2083–2126, 2005.

- [27] S. Lyu, “Optimized dithering for quantization index modulation,” in ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1–5, 2023.

- [28] B. Feng, W. Lu, W. Sun, J. Huang, and Y.-Q. Shi, “Robust image watermarking based on tucker decomposition and adaptive-lattice quantization index modulation,” Signal Processing: Image Communication, vol. 41, pp. 1–14, 2016.

- [29] J. Qin, S. Lyu, J. Deng, X. Liang, S. Xiang, and H. Chen, “A lattice-based embedding method for reversible audio watermarking,” arXiv preprint arXiv:2209.07066, 2022.

- [30] Y. LeCun and C. Cortes. ”mnist handwritten digit database”. [Online]. Available: http://yann.lecun.com/exdb/mnist/

- [31] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” Master’s thesis, University of Tront, 2009.