Rethinking Noise Synthesis and Modeling in Raw Denoising

Abstract

The lack of large-scale real raw image denoising dataset gives rise to challenges on synthesizing realistic raw image noise for training denoising models. However, the real raw image noise is contributed by many noise sources and varies greatly among different sensors. Existing methods are unable to model all noise sources accurately, and building a noise model for each sensor is also laborious. In this paper, we introduce a new perspective to synthesize noise by directly sampling from the sensor’s real noise. It inherently generates accurate raw image noise for different camera sensors. Two efficient and generic techniques: pattern-aligned patch sampling and high-bit reconstruction help accurate synthesis of spatial-correlated noise and high-bit noise respectively. We conduct systematic experiments on SIDD and ELD datasets. The results show that (1) our method outperforms existing methods and demonstrates wide generalization on different sensors and lighting conditions. (2) Recent conclusions derived from DNN-based noise modeling methods are actually based on inaccurate noise parameters. The DNN-based methods still cannot outperform physics-based statistical methods. The code will be available at https://github.com/zhangyi-3/noise-synthesis.

1 Introduction

Learning-based methods have made great progress on raw image denoising in recent years. However, collecting a large-scale real raw image dataset is time and manpower consuming. Therefore, most learning-based methods on raw image denoising are trained with synthetic datasets, which model and apply synthesis noise to clean images to create noisy inputs. As a result, their denoising performances in real-world scenarios are significantly dependent on the discrepancy between the synthetic noise and actual noise in real raw images.

Existing methods for synthesizing raw image noise generally conducts the following two steps: (1) building a noise model and optimizing the parameters by fitting the real noise distribution, and (2) generating the synthetic noise randomly from the noise model. According to the different types of noise models, they can be divided into two categories: physics-based statistical methods and Deep Neural Network (DNN)-based methods.

DNN-based methods [30, 1, 7] learn to model noise distribution from real datasets with deep generative networks (e.g., GAN, Flow). Although the deep models have powerful representation capability, it is particularly hard for them to generate accurate values for each pixel in dense-prediction tasks. For example, in image generation, how to generate realistic small details with less artifacts is a relatively challenging problem. While all DNN-based methods claim superior performances on SIDD dataset compared with the physics-based noise models, we found that those conclusions are built on the uncalibrated noise profiles of the SIDD dataset (noise profiles for the Poisson-Gaussian distribution).

Physics-based statistical methods [29, 14] are more promising compared with the DNN-based methods since they follow the physical process of the specific camera sensor and model various noise sources step by step according to the physical process from photos to digital numbers. However, there also exist obvious limitations for physics-based methods. First, it is impossible to accurately extract and model all kinds of noise sources in the camera since every electronic component of the camera can be a noise source. Second, in most cases, modeling a noise source is based on inaccurate distributions since the natural distributions for most noise sources are unknown. Third, the real noise distributions vary dramatically in different cameras or lighting conditions (e.g., low-light), which makes physics-based methods laborious. All those limitations hinder physics-based methods to achieve accurate noise modeling.

In this paper, we propose a new perspective for synthesizing realistic image noise. Specifically, instead of building the noise model first and then generating synthetic noise from the noise model step by step, we synthesize the noise by sampling directly from the real noise distribution.

We first describe the general raw image formation based on the physical process and decompose the raw image noise into signal-dependent and signal-independent components. For the signal-dependent noise, we only consider the photon shot noise, which follows the Poisson distribution strictly due to the quantum nature of light. Other signal-dependent noise (e.g., Photo Response Non-Uniformity) only have marginal impact111Several previous papers and experiments show the percentage of the mean signal value of PRNU is mostly less than 3% (e.g. Figure 2 in [15] and Figure 7 in [25].) due to the advances of sensor design and manufacture. We synthesize the signal-dependent noise by sampling from real Poisson distribution with the calibrated total gain parameters. For the complicated signal-independent noise, we use the target sensor to capture dark frames with the corresponding exposure and ISO settings in a purely dark room so that all signal-independent noise are preserved and saved into a dark-frame database. We synthesize the signal-independent noise by directly sampling pixel values from the dark-frame database.

Even though the naive implementation that samples pixel values from 10-bit dark frames is based on the real noise, we empirically find that it cannot work well over all camera sensors and exposure ratios due to the missing of spatial-correlated noise and high-bit information. We therefore propose two techniques to tackle the issues: First, we use pattern-aligned patch sampling for signal-independent noise to keep the spatial-correlated and pattern-correlated noise (e.g., row noise, fixed pattern noise). Second, we reconstruct the accurate continuous distribution for the quantized dark frames by using a set of bell-shaped distributions and restore the signal-independent noise to higher bits.

To demonstrate the effectiveness and generalization of our method, we systematically compare it with both DNN-based and statistical methods on SIDD [2] and SID [8] datasets. Our method outperforms all existing methods and shows consistent improvements on various camera sensors and lighting conditions.

Moreover, we find that the noise profiles of popular raw image datasets [2, 8] are quite inaccurate, which have been used widely in previous works. After calibrating the noise profile carefully for the statistical methods, we observe that recent DNN-based methods actually underperform statistical methods with a clear gap, which is opposite to recent works.

Our contributions are summarized as follows:

-

•

We propose an efficient and generic perspective to synthesize noise for raw image denoising.

-

•

We propose raw pattern-aligned patch and high-bit reconstruction to synthesize realistic raw image noise.

-

•

We systematically study the statistical methods and DNN-based methods for noise modeling.

-

•

The proposed method outperforms existing statistical and DNN-based methods on raw image datasets and demonstrates great generalization on different camera sensors and lighting conditions.

2 Related Work

Image denoising has been studied for many years. In this section, we only review the related works for image noise modeling. The methods have been categorized into two types: physical-based and DNN-based.

2.1 Physics-based statistical noise modeling

The Gaussian noise model is most widely used when we evaluate the denoising methods [31, 24, 10, 22, 6]. But its generalization in real-world denoising is relatively poor. For real-world raw image noise, the Poisson-Gaussian (P-G) distribution [14, 13] is one of the typical noise models. It models the shot and read noise by Poisson and Gaussian distribution. A widely used alternative of P-G distribution is to approximate the Poisson distribution to the Gaussian distribution [5, 26]. The overall noise model turns to a heteroscedastic Gaussian model with zero mean and signal-dependent variance. But recent work [29] shows that using the accurate P-G noise model improves the denoising results over using the heteroscedastic Gaussian model.

The noise modeling for extreme low-light environments is also important for low-light raw image and video denoising. Recent works [28, 29] build the noise model for the low-light condition by analyzing the sensor processing pipeline and modeling noise sources with the assumed distributions. But, as studied in the electronic imaging community [16, 23, 15, 11], the camera sensor processing pipeline is relatively complicated, which makes it impossible to extract and model all noise sources.

2.2 DNN-based noise modeling

Another research direction focuses using Deep Neural Network (DNN) to model the real noise distribution. Early methods [9, 21] use GAN to generate the noise distribution but only show limited performance improvements. More recent works demonstrate their effectiveness on the SIDD dataset [2]. Noise FLow [1] following the sensor processing pipeline adopts a flow-based generative model to generate raw image noise. Camera-aware GAN [7] learns a camera-aware model that regards the synthetic noisy images by P-G noise model as the generator input. All of them claim superior performance compared with the physics-based methods. But we found the in-camera noise profiles for the physics-based methods are inaccurate. After careful calibration, the physics-based methods outperform the DNN-based methods.

3 Method

3.1 Raw Image Formation

To analyze the noise sources in raw images, we first describe the raw image formation of the Complementary Metal-Oxide-Semiconductor (CMOS) sensors, which is the dominant sensor of both Digital Single Lens Reflex Cameras (DSLRs) and smartphones. For a raw image produced by a typical CMOS sensor, we model the process222Since the saturation effect can be implemented exactly by the clipping operation, we didn’t include it in our noise modeling. from incident photons to digital values as

| (1) |

where is the digital values saved in raw images, is the corresponding quantization noise when transforming the analog signals to digital values, is the number of incident photons of the real scene, and is the signal-dependent photon shot noise. and are analog gain and digital gain respectively. and denote the summation of the other noises produced before the analog gain and digital gain.We will then explain all noise in Eq. (1) in detail.

Due to the the quantum nature of light and the uncertainty of the collected photons, the number of incident photon numbers for all pixels follow the Poisson distribution strictly:

| (2) |

where denotes the Poisson distribution that regards the photon number of real scenes as the expectation.

Before saving the raw signal to fixed-bit raw images (e.g., 10- or 14-bit), the Analog-to-Digital Converters (ADC) quantizes the analog signals with the fixed quantization step:

| (3) | ||||

where is the value in the position , is the number of bits, is the quantization step, is the quantization step number, is the quantization noise following the uniform distribution .

Since the gain factor in the physical processes affects the noise distribution significantly, we divide the sensor processing into stages according to the position of gain factors. and represent the summation of the other noise before the analog gain and the digital gain respectively. Their components are complicated and vary greatly on different sensors. Generally, for the noise , it includes the noise introduced by the dark current (dark current shot noise, and fixed pattern noise) [3], reset noise [23], flicker noise [4], etc. For the noise , it includes thermal noise, column fixed pattern noise [27], and so on.

3.2 Noise synthesis for signal-dependent and signal-independent noise

Through the above discussed raw image formation, we can see that the noise distribution in raw images is quite complicated and highly relies on the sensor processing pipeline. As a result, the difficulty of building the noise model lies in two aspects. First, since every semiconductor and operation in the circuit can be a noise source and we do not know what is the most appropriate distribution for most of them, it is impossible to isolate and model each noise accurately for all noise sources. Second, different sensor processing pipelines and different lighting conditions also affect the actual noise distribution significantly. Building and calibrating the noise model for each case is laborious, especially when we aim to synthesize accurate noise for a wide range of devices and lighting conditions.

Our key insight is that we can decompose the raw images’ noise into signal-dependent noise and signal-independent noise. Signal-dependent noise can be mostly modeled as Poisson distribution and its synthesis can be easily achieved by sampling from the distribution. Signal-independent noise can be synthesized via sampling from an actual noise database rather than building a mathematical noise model and synthesizing noise from the model. In this way, no matter what the sensor processing pipeline and lighting conditions are, the noise can be synthesized much more accurately.

Specifically, we analyze the image formation and decompose the raw image noise in Eq. (1) into signal-dependent and signal-independent components:

| (4) |

Signal-dependent noise.

For the signal-dependent noise, we only consider the photo shot noise in the raw image formation since other signal-dependent noise sources only have very limited impact (less than 3% according to [15, 18]). Since the incident photon numbers follow the Poisson distribution strictly as described in Eq. (2), we can accurately synthesize the signal-dependent noise as

| (5) | |||

| (6) |

where is the clean image obtained by removing all noise in Eq. (4), and is the sampled signal-dependent noise (photon shot noise). To synthesize the photon shot noise, we invert the digital numbers to the photon numbers by dividing the total gain . Then, we sample the signal-dependent noise from the Poisson distribution. The total gain can be estimated by the linear curve between the mean and variance of flat-field frames [19].

Signal-independent noise.

For the signal-independent noise, we capture dark frames in the dark room to construct the dark-frame database, and the synthetic noise is sampled directly from the dark-frame database. In each shooting of the dark frame, all signal-dependent noise naturally disappears as we block all incident light. Formally, it can be formulated by removing the signal-dependent component in Eq. (4):

| (7) | |||

| (8) |

where is the dark frame, and is the synthetic signal-independent noise. All actual signal-independent noise is accumulated and saved in the shooting of the dark frames. As a result, the synthetic signal-independent noise is most accurate and contains all kinds of noise types that cannot be modeled explicitly before.

Moreover, it is convenient and feasible to sample actual signal-independent noise from dark-frame databases of different devices with various exposure and ISO settings. For a popular camera sensor, each raw image contains more than 1M pixels, which indicates more than 1M noise values are sampled from the actual noise distribution at each shoot. We can easily obtain sufficient signal-independent noise samples to form the signal-dependent noise database.

By synthesizing the signal-dependent and -independent components separately, for a given clean image , we can generate the synthetic noisy image via Eq. (4).

| (9) |

where is the synthetic noisy image.

| Model | PSNR / SSIM | PSNR / SSIM | PSNR / SSIM |

|---|---|---|---|

| P-G [14] | 53.79 / 0.997 | 46.96 / 0.926 | 43.84 / 0.856 |

| P-G + LB | 53.59 / 0.997 | 46.35 / 0.944 | 42.98 / 0.872 |

| ELD [29] | 53.43 / 0.997 | 47.77 / 0.970 | 45.46 / 0.940 |

| ELD + LB | 53.41 / 0.997 | 45.74 / 0.920 | 42.35 / 0.847 |

| Pixel-wise | 53.90 / 0.997 | 45.71 / 0.917 | 42.57 / 0.846 |

| Patch | 53.93 / 0.997 | 45.83 / 0.918 | 42.70 / 0.846 |

| PAP | 53.91 / 0.997 | 47.13 / 0.958 | 44.36 / 0.906 |

| PAP + HB | 53.92 / 0.997 | 47.90 / 0.973 | 45.47 / 0.940 |

3.3 Treating signal-independent noise separately does not work well

We first try a naive implementation by treating each dark-frame pixel value as an individual signal-independent noise sample and save them separately in the noise database. We use SonyA7S2 for this experiment and evaluate on the ELD dataset.

Specifically, for the signal-dependent noise, we synthesize it as described in Eq. (6). For synthesizing the signal-independent noise, 10 dark frames are captured under no-light condition for each ISO setting with the default exposure time to construct the dark-frame database. The noise values are saved equally and signal-independent noise is synthesized by sampling pixels randomly from the dark-frame database. The results are shown in Table 1. Benefited from the noise sampling from the actual noise database, even the naive implementation outperforms the state-of-the-art ELD noise model [29] in normal exposure ratio (ratio ), which is the exposure ratio we commonly used in daily life. But for more challenging extreme low-light environments (ratio , ), we found directly pixel-wise sampling for the signal-independent noise cannot match the performances of statistical methods. We found that it is due to two issues:

- 1.

-

2.

The signal-independent noise is the dominant noise in low-light conditions, but the dark frames have been quantized into lower bits (10- or 14-bit images), which destroy the natural signal-independent noise distributions significantly.

3.4 Pattern-aligned patch sampling and high-bit reconstruction

To tackle the two problems in naive signal-independent noise sampling, we propose two techniques to better synthesize such noise: pattern-aligned patch sampling and high-bit reconstruction.

pattern-aligned patch sampling.

The spatially-correlated noise becomes significant under low-light conditions. To preserve the spatially-correlated noise, we use patch sampling, which synthesizes the signal-independent noise by cropping patches from the constructed dark-frame database. In this way, the spatially-correlated noise is preserved in the dark-frame patches. Moreover, we observe that the raw Bayer pattern also affects the noise distribution, we further propose pattern-aligned patch sampling (PAP), which ensure that dark-frame patches have the exactly aligned Bayer pattern as the clean image to be corrupted. Table 1 shows the results of using pixel-wise sampling, patch sampling and pattern-aligned patch sampling (PAP) for synthesizing signal-independent noise. The proposed pattern-aligned patch sampling helps to improve the denoising performance under the extreme low-light condition.

The effect of high-bit synthetic noise.

In extreme low-light conditions, the signal-independent noise becomes the dominant noise, which requires accurate and high-bit synthetic noise. To show that, in Table 1, we quantize the signal-independent component of the physics-based methods to 14-bit (the same as the dark frames), which is denoted as “LB”. The physics-based methods show an obvious performance drop when using lower-bit synthetic noise, especially for low-light conditions (e.g., ). Therefore, high-bit signal-independent noise plays a crucial role in noise synthesis for low-light environments.

High-bit reconstruction.

Theoretically, we can dump dark frames before the quantization operation in the sensor processing pipeline or save high-bit dark frames (e.g. 32-bit) to preserve actual signal-independent noise. However, most CMOS sensor manufacturers do not allow researchers to have access to such raw data. Therefore, we propose a high-bit reconstruction method to inverse the quantization first and synthesize high-bit values to refine the noise patches sampled by the pattern-aligned patch sampling.

Figure 1(a) shows a toy example illustrating the quantization effect for the continuous analog signal distribution of camera sensors. Figure 1(b) shows the details within each quantization step. The quantization process maps a set of continuous values in to a single value , which makes our collected dark frames have lower bits. To reconstruct the high-bit dark frames, we first estimate the continuous distribution of the low-bit noise sampled from the dark frames. Then, as shown in Figure 1(b), according to the estimated continuous distribution, we sample high-bit value (32-bit) within the quantization step for each low-bit discrete value , and use the sampled high-bit values to replace the in the dark frames. The sample probability of high-bit values is obtained by normalizing the estimated continuous distribution within the quantization step. In this way, the dark frames are reconstructed to higher bits while keeping the characteristics of the real noise.

We use statistical models to estimate the continuous distribution of quantized noise in the dark-frame database. Instead of modeling it with a single distribution (e.g., Gaussian [14], Tukey Lambda [29]), we construct a continuous distribution set which contains 5 bell-shaped distributions, including Student’s t, Weibull, Tukey lambda, Gaussian, and Gamma distribution, for better modeling noise of different sensors. We use the distribution set to fit the noise in the dark frames and identify the distribution that can best describe the noise distribution.

4 Experiments

We systematically compare existing DNN-based methods, physics-based statistical methods, and our method for noise synthesis on SIDD mobile dataset [2] and ELD DSLR dataset [29], and use the synthesized images for training denoising networks. We also demonstrate the effectiveness and generalization of our method over different camera sensors and lighting conditions.

4.1 Implementation details

Evaluation datasets.

We follow previous works [29, 1, 7] and select two representative datasets to systematically evaluate our methods and compare with existing DNN-based and physics-based methods.

-

1.

SIDD [2] is a mobile dataset, which is collected by five different smartphones under three lighting conditions (low-light, normal and high exposure). We use three recent smartphones (iPhone 7, Google Pixel, Samsung Galaxy S6 Edge) in our experiments. Most recent DNN-based methods [1, 7, 30] have demonstrated their effectiveness on SIDD dataset.

-

2.

ELD [29] is a recent DSLR dataset, which is captured over a wide range exposure ratio (). We evaluate our method on two popular cameras (SonyA7S2 and NikonD850) over all exposure ratios.

| \addstackgap[.5] Camera | Index | DNN-based | Physics-based | Real-noise-based | |||||

|---|---|---|---|---|---|---|---|---|---|

|

Noise FLow

[1] |

Camera-Aware

GAN [7] |

In-Camera

P-G |

Calibrated

P-G |

ELD [29] | Paired data | Pixel-wise | PAP + HB | ||

| S6 | PSNR | 44.68 | 45.00 | 34.64 | 45.38 | 45.39 | 44.35 | 45.33 | 45.62 |

| SSIM | 0.973 | 0.975 | 0.720 | 0.976 | 0.977 | 0.971 | 0.976 | 0.978 | |

| IP | PSNR | 48.29 | 55.48 | 44.58 | 56.71 | 56.73 | 55.64 | 56.76 | 56.79 |

| SSIM | 0.983 | 0.994 | 0.960 | 0.996 | 0.996 | 0.995 | 0.996 | 0.996 | |

| GP | PSNR | 45.15 | 45.96 | 36.65 | 46.48 | 46.43 | 44.58 | 46.52 | 46.56 |

| SSIM | 0.962 | 0.975 | 0.776 | 0.976 | 0.977 | 0.955 | 0.977 | 0.978 | |

Compared methods.

-

1.

Poisson-Gaussian (P-G) distribution [14] is the most typical noise model for raw image denoising. We use the accurate P-G noise model for comparison instead of approximating the Poisson distribution to the Gaussian distribution.

-

2.

ELD noise model [29] is the recently developed method for low-light environments. We have reproduced the results in ELD with the authors’ help and modified several lines of code to synthesize noise for a wider ratio range ().

- 3.

Training and testing.

In all experiments, we adopt the U-shape net in SID [8] as our backbone for training denoising models with synthesized noisy images. For both SIDD and ELD dataset, we pack raw images to four channels and randomly sample non-overlapped patches with rotation and flipping augmentation. We follow the common training setting: loss function, Adam optimizer with default hyper-parameters, training from scratch, batch size 1, and initial learning rate of . The models are trained for 30k iterations and the learning rate halves twice at iterations 15k and 25k. During the training, we sample the ISO uniformly from the set . For each ISO, we capture 10 dark frames to construct the dark-frame database and use the proposed pattern-aligned patch sampling with high-bit reconstruction to synthesize realistic signal-independent noise. The synthesis of the signal-dependent noise follows Eq. (6).

For the SIDD dataset, three scenes (02, 03 and 05) are kept for testing. For evaluating on ELD dataset, we train models on SID Sony dataset and use the official testing code for all cameras and exposure ratios.

| Camera | Ratio | Index | DNN-based | Physics-based | Real-noise-based | |||

|---|---|---|---|---|---|---|---|---|

| Noise Flow [1] | P-G [14] | ELD [29] | Paired data [8] | Pixel-wise | PAP + HB | |||

| SonyA7S2 | PSNR | 41.20 | 53.79 | 53.43 | NA | 53.90 | 53.92 | |

| SSIM | 0.842 | 0.997 | 0.997 | NA | 0.997 | 0.997 | ||

| PSNR | 40.79 | 51.79 | 51.70 | NA | 51.73 | 52.14 | ||

| SSIM | 0.836 | 0.992 | 0.993 | NA | 0.992 | 0.994 | ||

| PSNR | 38.68 | 46.96 | 47.77 | 46.73 | 45.71 | 47.90 | ||

| SSIM | 0.793 | 0.926 | 0.970 | 0.970 | 0.917 | 0.973 | ||

| PSNR | 36.30 | 42.84 | 45.46 | 44.57 | 42.57 | 45.47 | ||

| SSIM | 0.713 | 0.856 | 0.940 | 0.935 | 0.846 | 0.940 | ||

| NikonD850 | PSNR | 39.20 | 48.84 | 48.60 | NA | 48.57 | 49.15 | |

| SSIM | 0.831 | 0.990 | 0.989 | NA | 0.990 | 0.991 | ||

| PSNR | 37.97 | 47.01 | 46.81 | NA | 46.87 | 47.34 | ||

| SSIM | 0.806 | 0.979 | 0.979 | NA | 0.981 | 0.984 | ||

| PSNR | 32.87 | 41.61 | 42.08 | 41.13 | 41.83 | 42.56 | ||

| SSIM | 0.694 | 0.877 | 0.910 | 0.930 | 0.920 | 0.925 | ||

| PSNR | 30.40 | 39.19 | 39.99 | 39.36 | 39.43 | 40.35 | ||

| SSIM | 0.583 | 0.825 | 0.877 | 0.909 | 0.879 | 0.889 | ||

4.2 SIDD dataset

Parameters calibration.

The in-camera noise profile for Poisson-Gaussian noise model can be obtained directly from the meta information of DNG file [17]. They have been used widely in existing works. However, we found that the in-camera noise profiles are not calibrated seriously.

We collect the three recent smartphones used in SIDD dataset and estimate parameters for the P-G noise model as described in the original paper [14]. As shown in Figure 2, except the parameters of Google Pixel, all other in-camera noise parameters show large differences compared with our calibrated parameters. In Table 2, we use the in-camera and calibrated noise parameters to synthesize noise for denoising model training and keep all other settings the same. The results show that using the calibrated parameters performs much better than using the in-camera parameters. This indicates that the in-camera noise parameters are not accurate.

We also calibrate the noise parameters for the ELD noise models. It uses the Tukey Lambda (TL) distribution family [20] to fit the read noise distribution and use the Probability Plot Correlation Coefficient (PPCC) [12] test to find the best shape parameter. We found that, for the mobile sensors, the estimated shape parameter is quite close to 0.14, which is an approximate Gaussian distribution in the TL distribution family. As a result, ELD noise model reduces to the P-G noise model with additional row noise and quantization noise.

Denoising results.

We train a denoising model for each noise synthesis method with the same training setting. The denoising results on SIDD dataset are summarized in Table 2.

Same as reported in previous works, the in-camera P-G noise mode cannot outperform DNN-based methods, especially for GAN-based models. However, unluckily, all previous works are built on inaccurate noise profiles, since the re-calibrated P-G noise model shows large PSNR improvements on the SIDD dataset. It even works much better than all recent DNN-based methods in our experiments. As described before, the TL distribution in the ELD noise model reduces to the Gaussian distribution. Therefore, the results of the ELD noise model on all three cameras are quite similar to the P-G noise model.

Our method consistently outperforms both DNN-based and statistical methods over all three mobile cameras. Since the SIDD dataset is captured under the normal exposure ratio (), the naive pixel-wise sampling can produce comparable results as the well-calibrated P-G noise models. And , using the high-bit reconstruction also shows consistent improvements.

4.3 ELD dataset

Parameters calibration.

The noise profiles are not provided in both SonyA7S2 and NikonD850 cameras. Similar to the experiment on SIDD dataset, we use the same model of cameras and calibrate noise profiles for statistical methods (P-G and ELD noise models).

Denoising results.

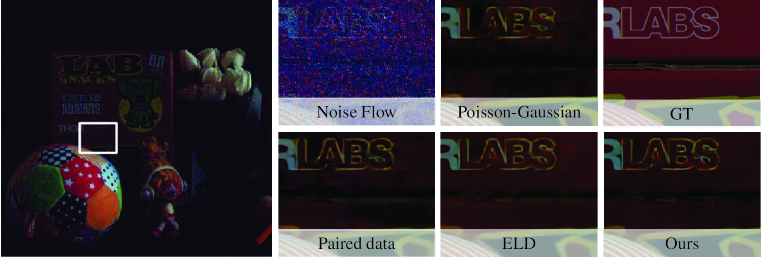

Table 3 shows the denoising performances over four exposure ratios of the denoising model trained with data from different noise synthesis methods. The DNN-based method shows poor generalization on the extreme low-light datasets. It suffers from severe color distortion as shown in Figure 3.

For the physics-based methods, the ELD noise model outperforms the P-G noise model in extreme low-light environments (). But for the normal lighting conditions (), the P-G noise model shows better performances. The results for exposure ratio 1 and 10 are not available (NA) since there are no corresponding training pairs for ratio 1 and 10 in the SID dataset.

Our methods (denoted by PAP + HB) consistently outperforms all existing noise synthesis methods over different cameras and exposure ratios. Table 3 shows that the high-bit reconstruction plays an important role in our method, especially in extreme low-light environments. Some qualitative results are shown in Figure 3. The denoising model trained with our synthetic noise can recover more textures and vivid colors compared with existing methods.

Since our method can synthesize all kinds of signal-independent noise, it helps the denoising model remove the fixed pattern noise successfully even though we do not model it explicitly. The model trained with the real noise (paired data) can also remove it. But physics-based methods can only synthesize the noise that they are able to model. As a result, the denoising model trained with them cannot deal with the unmodeled noise.

5 Conclusions

In this paper, we propose a new perspective for raw image noise synthesis. The synthetic noise from the presented method is accurate inherently since it benefits from the actual noise. Two techniques, pattern-aligned patch sampling and high-bit reconstruction, for the signal-independent noise enhance the synthesis performance and generalization in the low-light environments. Our method is both generic and efficient. On both SIDD and ELD datasets, our method outperforms recent DNN-based methods and physics-based methods over different cameras and lighting conditions. Moreover, through systematic comparisons, we found existing comparisons of DNN-based methods and physics-based methods are built on inaccurate noise parameters. After careful noise parameters calibration, DNN-based methods surprisingly underperform the physics-based methods with a clear gap, which is opposite to the recent advances.

6 Acknowledgement

This work is supported in part by the General Research Fund through the Research Grants Council of Hong Kong under Grants (Nos. 14204021, 14208417, 14207319, 14202217, 14203118, 14208619, ), in part by Research Impact Fund Grant No. R5001-18, in part by CUHK Strategic Fund.

References

- [1] Abdelrahman Abdelhamed, Marcus A Brubaker, and Michael S Brown. Noise Flow: Noise Modeling with Conditional Normalizing Flows . In International Conference on Computer Vision (ICCV), 2019.

- [2] Abdelrahman Abdelhamed, Stephen Lin, and Michael S. Brown. A high-quality denoising dataset for smartphone cameras. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [3] Richard L Baer. A model for dark current characterization and simulation. In Sensors, Cameras, and Systems for Scientific/Industrial Applications VII, 2006.

- [4] JA Barnes and DW Allan. A statistical model of flicker noise. Proceedings of the IEEE, 1966.

- [5] Tim Brooks, Ben Mildenhall, Tianfan Xue, Jiawen Chen, Dillon Sharlet, and Jonathan T Barron. Unprocessing images for learned raw denoising. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019.

- [6] Antoni Buades, Bartomeu Coll, and Jean - Michel Morel. Non-local means denoising. Image Process. Line, 1, 2011.

- [7] Ke-Chi Chang, Ren Wang, Hung-Jin Lin, Yu-Lun Liu, Chia-Ping Chen, Yu-Lin Chang, and Hwann-Tzong Chen. Learning camera-aware noise models. In European Conference on Computer Vision (ECCV), 2020.

- [8] Chen Chen, Qifeng Chen, Jia Xu, and Vladlen Koltun. Learning to see in the dark. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2018.

- [9] Jingwen Chen, Jiawei Chen, Hongyang Chao, and Ming Yang. Image blind denoising with generative adversarial network based noise modeling. In CVPR , 2018.

- [10] Kostadin Dabov, Alessandro Foi, Vladimir Katkovnik, and Karen O. Egiazarian. Image denoising by sparse 3-d transform-domain collaborative filtering. IEEE Trans. Image Processing, 16(8):2080–2095, 2007.

- [11] Abbas El Gamal and Helmy Eltoukhy. Cmos image sensors. IEEE Circuits and Devices Magazine, 2005.

- [12] James J Filliben. The probability plot correlation coefficient test for normality. Technometrics, 17(1):111–117, 1975.

- [13] Alessandro Foi. Clipped noisy images: Heteroskedastic modeling and practical denoising. Signal Process., 89(12):2609–2629, 2009.

- [14] Alessandro Foi, Mejdi Trimeche, Vladimir Katkovnik, and Karen O. Egiazarian. Practical poissonian-gaussian noise modeling and fitting for single-image raw-data. IEEE Trans. Image Process., 17(10):1737–1754, 2008.

- [15] Ryan D Gow, David Renshaw, Keith Findlater, Lindsay Grant, Stuart J McLeod, John Hart, and Robert L Nicol. A comprehensive tool for modeling cmos image-sensor-noise performance. IEEE Transactions on Electron Devices, 54(6):1321–1329, 2007.

- [16] Glenn Healey and Raghava Kondepudy. Radiometric CCD camera calibration and noise estimation. IEEE Trans. Pattern Anal. Mach. Intell., 16(3):267–276, 1994.

- [17] ADOBE SYSTEMS INCORPORATED. Digital negative (dng) specification. %****␣arxiv_noise_synthesis.bbl␣Line␣100␣****https://www.adobe.com/content/dam/acom/en/products/photoshop/pdfs/dng_spec_1.4.0.0.pdf, 2012.

- [18] James R Janesick, Tom Elliott, Stewart Collins, Morley M Blouke, and Jack Freeman. Scientific charge-coupled devices. Optical Engineering, 26(8):268692, 1987.

- [19] James R Janesick, Kenneth P Klaasen, and Tom Elliott. Charge-coupled-device charge-collection efficiency and the photon-transfer technique. Optical engineering, 26(10):261072, 1987.

- [20] Brian L Joiner and Joan R Rosenblatt. Some properties of the range in samples from tukey’s symmetric lambda distributions. Journal of the American Statistical Association, 66(334):394–399, 1971.

- [21] Dong - Wook Kim, Jae Ryun Chung, and Seung - Won Jung. GRDN: grouped residual dense network for real image denoising and gan-based real-world noise modeling. In CVPR Workshops, 2019.

- [22] Ymir M ̈a kinen, Lucio Azzari, and Alessandro Foi. Exact transform-domain noise variance for collaborative filtering of stationary correlated noise. In ICIP , pages 185–189. IEEE , 2019.

- [23] Mikhail V. Konnik and James Welsh. High-level numerical simulations of noise in CCD and CMOS photosensors: review and tutorial. CoRR, abs/1412.4031, 2014.

- [24] Jaakko Lehtinen, Jacob Munkberg, Jon Hasselgren, Samuli Laine, Tero Karras, Miika Aittala, and Timo Aila. Noise2noise: Learning image restoration without clean data. In ICML , volume 80 of Proceedings of Machine Learning Research, pages 2971–2980. PMLR , 2018.

- [25] Emil Martinec. Noise, dynamic range and bit depth in digital slrs. http://theory.uchicago.edu/~ejm/pix/20d/tests/noise/, 2008.

- [26] Ben Mildenhall, Jonathan T Barron, Jiawen Chen, Dillon Sharlet, Ren Ng, and Robert Carroll. Burst denoising with kernel prediction networks. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

- [27] Martijn F Snoeij, Albert JP Theuwissen, Kofi AA Makinwa, and Johan H Huijsing. A cmos imager with column-level adc using dynamic column fixed-pattern noise reduction. IEEE Journal of Solid-State Circuits, 2006.

- [28] Wei Wang, Xin Chen, Cheng Yang, Xiang Li, Xuemei Hu, and Tao Yue. Enhancing low light videos by exploring high sensitivity camera noise. In ICCV , pages 4110–4118. IEEE , 2019.

- [29] Kaixuan Wei, Ying Fu, Jiaolong Yang, and Hua Huang. A physics-based noise formation model for extreme low-light raw denoising. In IEEE Conference on Computer Vision and Pattern Recognition, 2020.

- [30] Zongsheng Yue, Qian Zhao, Lei Zhang, and Deyu Meng. Dual adversarial network: Toward real-world noise removal and noise generation. In Proceedings of the European Conference on Computer Vision (ECCV), August 2020.

- [31] Kai Zhang, Wangmeng Zuo, Yunjin Chen, Deyu Meng, and Lei Zhang. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Transactions on Image Processing, 26(7):3142–3155, 2017.

7 Details for noise parameters calibration

Here, we provide more details on noise parameters calibration for three smartphone cameras in the SIDD dataset. Following the DNG document [17], we estimate two noise parameters for each sensor under the typical Poisson-Gaussian distribution. Specifically, denotes the total gain factor inner the camera and is the variance (Gaussian distribution) for the signal independent noise. For , we first capture a series of flat-field frames by adjusting the uniform illumination and use the Photo Transfer method [19] to fit the linear relationship between the mean and variance of the flat-field frames. For , we capture black frames for each ISO settings and remove their black levels. The variance parameter can be estimated under the Gaussian distribution.

8 More qualitative results.

In fig. 4, we show more qualitative results from the ELD dataset. Our method can produce more textures than other methods. And, the color around the dark areas also can be preserved well.