Rethinking Image Skip Connections in StyleGAN2

Abstract

Various models based on StyleGAN have gained significant traction in the field of image synthesis, attributed to their robust training stability and superior performances. Within the StyleGAN framework, the adoption of image skip connection is favored over the traditional residual connection. However, this preference is just based on empirical observations; there has not been any in-depth mathematical analysis on it yet. To rectify this situation, this brief aims to elucidate the mathematical meaning of the image skip connection and introduce a groundbreaking methodology, termed the image squeeze connection, which significantly improves the quality of image synthesis. Specifically, we analyze the image skip connection technique to reveal its problem and introduce the proposed method which not only effectively boosts the GAN performance but also reduces the required number of network parameters. Extensive experiments on various datasets demonstrate that the proposed method consistently enhances the performance of state-of-the-art models based on StyleGAN. We believe that our findings represent a vital advancement in the field of image synthesis, suggesting a novel direction for future research and applications.

Index Terms:

Generative adversarial network, image synthesis, StyleGAN, image skip connection

I Introduction

Generative adversarial networks (GANs) [1] have shown impressive outcomes in various computer vision tasks including image synthesis [2, 3, 4, 5, 6], image-to-image translation [7, 8, 9], and image inpainting [10, 11]. In general, the GAN framework comprises two distinct networks: the generator (G) and the discriminator (D). G is tasked with producing data that mimics the distribution of real data, aiming to deceive D. Conversely, D concentrates on distinguishing the synthesized samples from the real ones. Ideally, the process would culminate in an optimal solution where G can perfectly mimic the real data distribution, making it nearly impossible for D to discern the source of the images [1].

However, the competition between G and D is not fully balanced. Specifically, D, by evaluating the difference between real and synthesized samples, assumes a dual role in this adversarial framework: as both competitor and arbiter. This configuration allows D to dominate the game and becomes a primary cause of instability in GAN training. To alleviate this problem, some papers [12, 13, 14, 15, 16, 17, 18] introduced regularization or normalization techniques that prevent the discriminator from producing sharp gradients. Among regularization approaches [13, 16, 17], gradient penalty-based methods have become particularly popular. These techniques, which integrate the gradient norm as a penalty term into the adversarial loss function, have been extensively adopted across various applications for their effectiveness. Normalization techniques [12, 15, 18] have also received significant attention in research. Among these, spectral normalization (SN) is the most widely recognized method. Since SN enforces the Lipschitz constraint on the discriminator by constraining the spectral norm of each layer, it plays a crucial role in stabilizing the training process. These normalization and regularization methods have been extensively adopted owing to their critical role in improving the stability of GAN training.

On the other hand, explorations into architectural enhancements for GANs have led to significant developments in performance as well as training stability [19, 20, 21, 22, 5]. Miyato et al. [20] proposed a novel conditional projection approach, a technique that integrates conditional information into D directly. This approach has significantly improved the performance of conditional GANs (cGANs) through a straightforward method. Zhang et al. [22] proposed a novel self-attention module that guides G and D on where to focus, whereas Yeo et al. [5] introduced a cascading rejection module that iteratively computes the adversarial loss using varied feature vectors. Li et al. [23] proposed a dynamic weighted GAN, called DW-GAN, which synthesizes the images following the color tone of the target image. These techniques offer the advantage of significantly enhancing the image synthesis performance of GANs through simple methods.

Recently, StyleGAN [24] has emerged as the baseline model in the GAN-based image synthesis domain. This method is celebrated for its ability to produce images of exceptional quality and for effectively mitigating the issue of training instability. Unlike the previous studies [12, 13, 14, 15, 16, 17, 18], StyleGAN allows G to control the synthesis process of the image at different levels of detail through the use of Style parameters. In detail, Style parameters are extracted from a latent space and serve to modulate the mean and variance within the framework of adaptive instance normalization (AdaIN) [25], enabling control over characteristics of the generated images. To control Style parameters more effectively, a variety of models such as StyleGAN2 [26], StyleGAN3 [27], and StyleGAN-XL [28] have been developed, all of which are capable of producing high-quality images.

Among the StyleGAN variations, StyleGAN2 [26] is widely adopted as the baseline model in recent research papers [2, 3, 4, 29, 30, 31, 32]. The authors of [26] conducted a variety of studies, including innovative ways to utilize Style parameters, new regularization techniques, and an analysis of issues with the progressive growing technique. Among these diverse research efforts, we focus on the use of image skip connections as an alternative to the progressive growing technique. In [26], the performance of G was evaluated with residual connections and with image skip connections in place of the progressive growing technique. Through numerous experiments, the authors showed that applying the image skip connection to G is more effective than applying the residual connection. However, these findings are based on empirical evidence rather than a mathematical analysis of the image skip connection; the conclusions drawn do not stem from a theoretical examination of its underlying principles.

To the best of our knowledge, however, there has been no prior research that has pointed out this issue. To address this issue, this brief first analyzes the mathematical meaning of the image skip connection and discusses the reasons for its superior performance compared to the residual connection. Then, we introduce a novel skip connection approach, called the image squeeze connection, which consistently surpasses the performance of the image skip connection in cutting-edge models derived from StyleGAN2. Furthermore, we show that the proposed method achieves superior performance despite requiring fewer network parameters than the original G in StyleGAN2. To validate the superiority of the proposed method, this brief presents comprehensive experimental findings across a variety of datasets such as CIFAR-10 [33], FFHQ [24], subsets of LSUN [34], and AFHQ [35]. Moreover, a series of ablation studies were conducted to highlight the robust generalization capabilities of the proposed method. Quantitative evaluations show that the proposed method improves key metrics, including the Inception score (IS) [36], Fréchet Inception Distance (FID) [37], and Precision/Recall [38, 39]. The proposed method can also be seamlessly integrated into existing setups while reducing the number of network parameters by approximately 12.1%. Our contribution can be summarized as follows:

- We propose a novel approach, called image squeeze connection, which is simple but consistently improves the performance of various methods based on StyleGAN2.

- Experimental results on several datasets show that our method works better than previous approaches, achieving higher scores in key performance measures.

- The proposed method simplifies and streamlines the baseline model by reducing hardware costs by approximately 12.1%.

II Preliminaries

II-A Generative Adversarial Networks

GANs [1] consist of two neural networks, the generator (G) and the discriminator (D), which are trained simultaneously through a competitive process. The role of G is to generate data that is indistinguishable from real data, while the job of D is to distinguish between the fake data synthesized by G and actual data. This adversarial process is akin to a forger (G) trying to create a counterfeit painting while an art critic (D) tries to spot the fake. In theory, this competition drives G to produce fake data that looks real. The traditional objective functions of G and D are defined as follows:

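$$\mathcal{L}_{G} = \mathbb{E}_{z \sim P_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right], \tag{1}$$

$$\mathcal{L}_{D} = -\mathbb{E}_{x \sim P_{data}(x)}\left[\log D(x)\right] - \mathbb{E}_{z \sim P_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right], \tag{2}$$

where $P_{data}(x)$ and $P_{z}(z)$ denote the real data distribution and the latent prior, respectively.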

However, a notable challenge inherent in the original objective functions is the instability during the training phase. This instability often manifests as difficulty in convergence, leading to sub-optimal generation of data and sometimes causing the training process to fully fail. To address these issues, many papers [40, 13, 15, 41, 26] have been actively proposing and evaluating alternative objective functions that can offer more stable training dynamics.

In the current landscape of GAN research and application, a particularly effective approach has gained prominence, known as the non-saturating logistic loss with regularization [41, 26, 24, 27]. This method enhances the training stability and the quality of the generated images by carefully balancing the learning process of G and D. The non-saturating logistic loss ensures that the gradients of G do not vanish or explode, maintaining a steady pressure on the generator to improve. Concurrently, the regularization adds a penalty based on the first-order gradient of the D for real data, encouraging smoother decision boundaries and further stabilizing the training dynamics. The non-saturating logistic loss with regularization is defined as below:

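$$\mathcal{L}_{D} = -\mathbb{E}_{x \sim P_{data}(x)}\left[\log D(x)\right] - \mathbb{E}_{z \sim P_{z}(z)}\left[\log\left(1 - D(G(z))\right)\right] + \frac{\gamma}{2}\,\mathbb{E}_{x \sim P_{data}(x)}\left[\lVert \nabla_{x} D(x) \rVert_{2}^{2}\right], \tag{3}$$

$$\mathcal{L}_{G} = -\mathbb{E}_{z \sim P_{z}(z)}\left[\log D(G(z))\right], \tag{4}$$

where $\gamma$ is a hyper-parameter that constrains the gradient magnitude of the discriminator. By using this function, many GAN-based image synthesis methods have achieved more reliable and higher-quality results.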
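As a minimal sketch of the non-saturating logistic loss with first-order gradient regularization described above, the snippet below evaluates both losses for a toy linear discriminator. It works on raw discriminator logits, using the identity -log(sigmoid(t)) = softplus(-t). The names `d_loss`, `g_loss`, and `gamma`, as well as the toy discriminator, are illustrative assumptions and are not taken from any official implementation.

```python
import math

def softplus(t):
    # Numerically stable log(1 + exp(t)).
    return max(t, 0.0) + math.log1p(math.exp(-abs(t)))

def mean(values):
    return sum(values) / len(values)

def d_loss(real_logits, fake_logits, real_grad_sq_norms, gamma):
    # Logistic loss for D plus the penalty (gamma / 2) * E[||grad_x D(x)||^2]
    # evaluated on real samples only.
    logistic = (mean([softplus(-r) for r in real_logits])
                + mean([softplus(f) for f in fake_logits]))
    penalty = 0.5 * gamma * mean(real_grad_sq_norms)
    return logistic + penalty

def g_loss(fake_logits):
    # Non-saturating generator loss: -log(sigmoid(D(G(z)))).
    return mean([softplus(-f) for f in fake_logits])

# Toy linear discriminator D(x) = w . x (hypothetical); its gradient
# with respect to x is simply w, so the squared gradient norm is ||w||^2.
w = [0.5, -1.0, 2.0]

def discriminate(x):
    return sum(wi * xi for wi, xi in zip(w, x))

real = [[1.0, 0.0, 1.0], [0.5, -0.5, 1.5]]
fake = [[-1.0, 1.0, 0.0], [0.0, 0.5, -0.5]]
grad_sq = [sum(wi * wi for wi in w)] * len(real)

loss_d = d_loss([discriminate(x) for x in real],
                [discriminate(x) for x in fake], grad_sq, gamma=1.0)
loss_g = g_loss([discriminate(x) for x in fake])
print(loss_d, loss_g)
```

In a real training loop the gradient norm would come from automatic differentiation rather than a closed form; the linear discriminator is used here only so that the penalty can be checked by hand.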

II-B Revisit StyleGAN2

StyleGAN2 [26] is an advanced version of the original StyleGAN architecture, which represents a significant advancement in the field of GANs. StyleGAN2 introduces several key improvements over its predecessor to enhance image quality and address specific issues observed in StyleGAN-generated images, such as unnatural artifacts and inconsistencies. One of the notable changes in StyleGAN2 is the redesign of the normalization method that maintains stylistic control while reducing artifacts. In detail, the original StyleGAN used a feature called AdaIN (Adaptive Instance Normalization) at each layer of G to inject style information. However, this led to certain artifacts and issues related to style control. StyleGAN2 introduces a revised approach to normalization, called weight demodulation. This technique replaces the previous modulation mechanism and ensures that the scaling of the convolutional weights does not introduce undesired artifacts in the generated images. Weight demodulation helps in producing cleaner and more coherent textures, significantly improving image quality.
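The weight demodulation step can be illustrated with a short sketch. It follows the formulation of [26] as we understand it: each input channel of the convolution weight is scaled by its style value (modulation), and every output channel is then rescaled to unit L2 norm (demodulation). The helper names, the nested-list weight layout, and the example values are assumptions made for illustration.

```python
import math

def modulate(weight, style):
    # weight[j][i][k]: output channel j, input channel i, spatial tap k.
    # style[i] scales every tap that reads from input channel i.
    return [[[style[i] * tap for tap in in_ch]
             for i, in_ch in enumerate(out_ch)]
            for out_ch in weight]

def demodulate(weight, eps=1e-8):
    # Rescale each output channel so that its weights have unit L2 norm.
    out = []
    for out_ch in weight:
        norm = math.sqrt(sum(tap * tap for in_ch in out_ch for tap in in_ch) + eps)
        out.append([[tap / norm for tap in in_ch] for in_ch in out_ch])
    return out

# Toy 3x3 convolution with 3 input and 2 output channels (9 taps per pair).
weight = [[[0.1 * (j + 1) * (i + 1) + 0.01 * k for k in range(9)]
           for i in range(3)]
          for j in range(2)]
style = [1.5, 0.5, 2.0]

demodulated = demodulate(modulate(weight, style))
norms = [math.sqrt(sum(t * t for in_ch in out_ch for t in in_ch))
         for out_ch in demodulated]
print(norms)  # each value is close to 1
```

Because the normalization is folded into the weights rather than applied to the activations, the statistics of the output features stay controlled without an explicit instance normalization layer.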

Another improvement concerns the progressive growing technique used in StyleGAN. Instead of progressively increasing the resolution during training, StyleGAN2 modifies the network architecture and training process to maintain a fixed resolution, which helps reduce training instability and eliminates phase artifacts. Specifically, the authors conducted extensive experiments to evaluate the effectiveness of different strategies that improve the information flow within the network, such as residual connections [42, 12, 13], image skip connections [43, 44], and hierarchical methods [45, 46]. Based on the experimental results, they concluded that integrating G with image skip connections and D with residual connections leads to effective performance. Conclusions drawn from extensive experiments are reliable, but we believe that it is also necessary to consider the theoretical reasons why image skip connections yield good results. Therefore, in this brief, we first analyze the mathematical meaning of image skip connections and then propose a new image skip connection method that shows impressive performance.

III Proposed Method

III-A Analysis of the image skip connection

As shown in Fig. 1, StyleGAN2 generates the output image by aggregating the images derived from the intermediate layers. While this appears to be a coarse-to-fine technique where images are created from intermediate layers and then progressively added together, this is not the case. The reason is as follows: unlike the convolution layer that conducts both modulation and demodulation (yellow box in Fig. 1), the toRGB layer performs only modulation. In other words, since demodulation is not performed, the operation of the toRGB layer is equivalent to first modulating the feature and then applying a traditional convolution layer without an activation function. Therefore, we can express the toRGB layer with the following formula:

$$I_{j}(x, y) = W_{j}\,\tilde{F}_{j}(x, y), \quad W_{j} \in \mathbb{R}^{3 \times C_{j}}, \tag{5}$$

where $\tilde{F}_{j}$ indicates the j-th intermediate feature modulated by scaling each channel with the given Style, and $I_{j}$ and $W_{j}$ are the j-th intermediate image and the weight matrix of the convolution, respectively. In addition, x and y represent the pixel coordinates of the feature map, while $C_{j}$ denotes the channel dimension of $\tilde{F}_{j}$. Note that there is no non-linearity in Eq. 5 since the modulation and the convolution at each pixel are linear operations.

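Using Eq. 5, we can express in mathematical terms how the output image is formed. For simplicity, let us assume that the output image has 8×8 resolution. Then, the output image can be formulated as

$$I_{out} = U(I_{1}) + I_{2}, \tag{6}$$

where $U(\cdot)$ indicates the up-sampling function such as nearest neighbor and bilinear interpolation methods, and $I_{1}$ and $I_{2}$ are intermediate images having 4×4 and 8×8 resolution, respectively. Because the up-sampling acts on each channel independently and linearly, it commutes with the per-pixel linear map of the toRGB layer, i.e. $U(W_{1}\tilde{F}_{1}) = W_{1}U(\tilde{F}_{1})$. Using Eq. 5, we can therefore rewrite Eq. 6 as

$$I_{out}(x, y) = W_{1}\,U(\tilde{F}_{1})(x, y) + W_{2}\,\tilde{F}_{2}(x, y) = \begin{bmatrix} W_{1} & W_{2} \end{bmatrix} \begin{bmatrix} U(\tilde{F}_{1})(x, y) \\ \tilde{F}_{2}(x, y) \end{bmatrix} = W\,\tilde{F}(x, y), \tag{7}$$

where $W$ is an integrated matrix formed by the combination of $W_{1}$ and $W_{2}$, and $\tilde{F}$ indicates a combined feature formed by concatenating $U(\tilde{F}_{1})$ and $\tilde{F}_{2}$ along the channel dimension. That means the existing image skip connection technique is mathematically identical to concatenating the modulated intermediate features, i.e. $U(\tilde{F}_{1})$ and $\tilde{F}_{2}$, in the channel direction, followed by a 1x1 convolution operation.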
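The equivalence stated above can be checked numerically. The following sketch, written in plain Python for a toy 4×4 and 8×8 pair, compares the image skip connection (toRGB at each resolution, up-sample, add) with a single 1x1 convolution applied to the channel-wise concatenation of the modulated features. The channel counts, the random features, and all helper names are illustrative assumptions; the bias term of the toRGB layer is omitted, and nearest-neighbor up-sampling is assumed.

```python
import random

def to_rgb(feat, weight):
    # Per-pixel linear map (1x1 convolution, no bias, no activation).
    # feat is H x W x C, weight is 3 x C, the result is H x W x 3.
    return [[[sum(w * v for w, v in zip(w_row, pixel)) for w_row in weight]
             for pixel in row] for row in feat]

def upsample_nearest(grid, factor=2):
    # Nearest-neighbor up-sampling of an H x W x C grid.
    out = []
    for row in grid:
        wide = [pixel for pixel in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out

def add_images(a, b):
    return [[[x + y for x, y in zip(pa, pb)] for pa, pb in zip(ra, rb)]
            for ra, rb in zip(a, b)]

def concat_channels(a, b):
    return [[pa + pb for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]

def random_grid(rng, h, w, c):
    return [[[rng.gauss(0.0, 1.0) for _ in range(c)] for _ in range(w)]
            for _ in range(h)]

rng = random.Random(0)
c1, c2 = 6, 5  # toy channel counts for the 4x4 and 8x8 features
feat_4 = random_grid(rng, 4, 4, c1)
feat_8 = random_grid(rng, 8, 8, c2)
w_4 = [[rng.gauss(0.0, 1.0) for _ in range(c1)] for _ in range(3)]
w_8 = [[rng.gauss(0.0, 1.0) for _ in range(c2)] for _ in range(3)]

# Image skip connection: toRGB at each resolution, up-sample, then add.
skip_image = add_images(upsample_nearest(to_rgb(feat_4, w_4)), to_rgb(feat_8, w_8))

# Equivalent form: concatenate features and weights, then one 1x1 convolution.
w_cat = [r4 + r8 for r4, r8 in zip(w_4, w_8)]
feat_cat = concat_channels(upsample_nearest(feat_4), feat_8)
concat_image = to_rgb(feat_cat, w_cat)

max_diff = max(abs(x - y)
               for ra, rb in zip(skip_image, concat_image)
               for pa, pb in zip(ra, rb)
               for x, y in zip(pa, pb))
print(max_diff)  # only floating-point rounding error remains
```

The same argument extends to more than two resolutions by induction, since each additional level adds one more block to the concatenated weight matrix and feature.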

Based on this analysis, we point out that the conventional image skip connection method may encounter problems when generating high-resolution images. For instance, when producing images with a resolution of 256×256, image skip connections are executed from a resolution of 4×4 up to 256×256. As mentioned earlier, the image skip connection technique is mathematically equivalent to concatenating the intermediate features of every resolution and applying a 1x1 convolution, so the dimension of the concatenated features grows with the output resolution. In essence, generating a 256×256 image is equivalent to projecting modulated features with 2,496 dimensions onto a three-dimensional image using a single linear operation, i.e. a convolution layer without an activation function. However, this method faces challenges in achieving optimal performance due to the difficulty of directly projecting high-dimensional features into a lower-dimensional space.
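The figure of 2,496 dimensions can be reproduced with a short calculation. The sketch below assumes a 256×256 output and a channel schedule of the form min(channel_base / resolution, channel_max) with `channel_base=16384` and `channel_max=512`; these values are assumptions chosen because they reproduce the stated total, and the function names are hypothetical.

```python
def channels_at(resolution, channel_base=16384, channel_max=512):
    # Assumed channel schedule: wider layers at low resolution, capped at channel_max.
    return min(channel_base // resolution, channel_max)

def skip_feature_dims(output_resolution, start_resolution=4):
    # Channel count of the feature fed to the toRGB layer at each resolution.
    dims = {}
    res = start_resolution
    while res <= output_resolution:
        dims[res] = channels_at(res)
        res *= 2
    return dims

dims = skip_feature_dims(256)
total = sum(dims.values())
print(dims)
print(total)  # dimension of the concatenated feature projected to 3 channels
```

Under these assumptions the seven toRGB layers contribute 512, 512, 512, 512, 256, 128, and 64 channels, so a single linear map has to compress 2,496 channels into 3.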

III-B Image Squeeze Connection

To alleviate this problem, we propose a method that is simple but surprisingly effective. Fig. 2 illustrates the overall framework of the proposed method, which replaces the two convolution layers present in each resolution block of StyleGAN2 (the two convolution layers of StyleGAN2 are depicted in Fig. 1). Specifically, we first produce a feature by using a convolution layer with an up-sampling technique. Then, this up-sampled feature is transformed into a squeezed feature, whose channel dimension is reduced by a factor of r, through a 3x3 convolution operation (i.e. the squeeze process), where r is a user parameter that represents the channel-squeeze ratio. Here, the squeezed feature is used to generate an intermediate image via the toRGB layer; this reduces the dimension of the features that are concatenated to generate the output image (recall Eq. 7). Subsequently, through another 3x3 convolution operation, we produce an excited feature, which has the same channel dimension as the up-sampled feature (i.e. the excitation process). We acknowledge that there might be an information loss owing to the squeeze and excitation process. To mitigate this issue, we produce the output feature by first concatenating intermediate features, as illustrated in Fig. 2, and then applying a 1x1 convolution operation. The effectiveness of this process is validated in Section IV-D. Note that all convolution operations, except the toRGB layer, incorporate both modulation and demodulation processes.

These simple structural changes also help in reducing the number of network parameters. In the case of the original StyleGAN2, each resolution requires 18c² convolution weight parameters, whereas the proposed method needs (10 + 18/r)c² parameters (assuming the number of input and output channels is c, and excluding the number of Style parameters). Thus, if the user parameter r is greater than 2.25, it is possible to reduce the number of network parameters, leading to improvements in memory efficiency. For instance, setting r to 8 can reduce the convolution weight parameters by approximately 32%. Furthermore, our framework is straightforward and can be easily integrated into existing StyleGAN2-based models. In the following section, we demonstrate the effectiveness of our method in unconditional image generation across various datasets.
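The break-even ratio and the 32% figure can be verified with a few lines of arithmetic. The sketch below assumes that the baseline block uses 18c² convolution weights (two 3x3 convolutions) and that the proposed block uses (10 + 18/r)c² weights; the latter expression is inferred from the stated threshold of 2.25 and the 32% reduction at r = 8, and should be read as an assumption rather than the exact accounting. The function names are hypothetical.

```python
from fractions import Fraction

def baseline_params(c):
    # Two 3x3 convolutions with c input and c output channels.
    return 2 * 9 * c * c

def squeeze_params(c, r):
    # Assumed weight count of the proposed block: (10 + 18 / r) * c^2.
    return (10 + Fraction(18, r)) * c * c

def reduction(r, c=512):
    # Relative saving in convolution weights with respect to the baseline.
    return 1 - squeeze_params(c, r) / baseline_params(c)

# Break-even point: 10 + 18 / r = 18, i.e. r = 18 / 8.
break_even = Fraction(18, 8)

print(float(break_even))
for r in (4, 8, 16):
    print(r, round(float(reduction(r)) * 100, 1))
```

Note that the saving depends only on r and not on c, which is why a single squeeze ratio yields the same relative reduction at every resolution block.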

Table I: Hyperparameter settings used in the experiments.

| Parameter | FFHQ / LSUN / AFHQ | CIFAR-10 |
|---|---|---|
| Resolution | 256×256 | 32×32 |
| Training length | 25M | 100M |
| Minibatch size | 64 | 64 |
| Minibatch stddev | 8 | 32 |
| Dataset x-flips | ✓ / - / ✓ | ✓ |
| ADA [47] | - / - / ✓ | ✓ |
| LR (×10⁻³) | 2.5 | 2.5 |
| Reg. weight | 1 | 0.01 |
| G moving average | 20k | 500k |
| Mapping depth | 8 | 2 |
| Style mixing regularization | ✓ | ✓ |
| Path length regularization | ✓ | ✓ |
| ResNet D | ✓ | ✓ |

IV Experiments

IV-A Implementation Details

We assess the effectiveness of the proposed method across multiple datasets, including CIFAR-10 [33], FFHQ [24], multiple LSUN [34] categories such as cat, horse, and church, and AFHQ [35]. CIFAR-10 comprises 50,000 small color images distributed across 10 classes, while FFHQ encompasses 70,000 high-quality facial images. AFHQ contains approximately 5,000 images for each of the cat, dog, and wild animal face categories. For the LSUN church, horse, and cat datasets, we used 200,000 images each [2]. Consistent with prior research, we applied horizontal flips to the CIFAR-10, FFHQ, and AFHQ datasets. All images were resized to 256×256, except CIFAR-10 images, which were maintained at 32×32. The proposed method has a single user parameter r that determines the channel squeeze ratio. In our experiments, we empirically set r to 8, except for the ablation studies.

To prove the superior performance and generalizability of the proposed method, we executed comparative experiments against various baseline models, including StyleGAN2 [26], ADA [47], GGDR [2], and GLeaD [3]. StyleGAN2 is extensively recognized in this domain for its robust performance and stable generator training. Both GGDR and GLeaD are evolved iterations of StyleGAN2, highlighting significant performance improvements in recent years. ADA applies data augmentation strategies and is particularly effective in scenarios involving datasets with limited numbers of images such as CIFAR-10 and AFHQ.

In our experiments, we systematically substituted the generator block with our proposed method across all baseline models. We maintained consistency in hyperparameters, such as learning rate and regularization weights, following the previous papers. We conducted the experiments using the official source code of each baseline model (https://github.com/NVlabs/stylegan2-ada-pytorch, https://github.com/naver-ai/GGDR, https://github.com/EzioBy/glead). Detailed parameter settings can be found in Table I.

Table II: FID and IS scores on the CIFAR-10 dataset.

| CIFAR-10 | FID | IS |
|---|---|---|
| DDPM [48] | | |
| NCSN++ [49] | 2.2 | 9.89 |
| WDiff [50] | 4.01 | - |
| ProGAN [51] | 15.52 | |
| AutoGAN [52] | 12.42 | |
| StyleGAN2 [26] | | |
| FSMR [53] | 2.90 | 9.68 |
| ADA [47] | | |
| GGDR [2] | | |
| ADA [47] + Ours | 2.50 | 9.94 |
| GGDR [2] + Ours | 1.98 | 10.13 |

IV-B Evaluation Metric

In this study, we utilized widely recognized metrics, namely the Fréchet Inception Distance (FID) [37] and Precision/Recall [38, 39], to evaluate the realism of the generated images. The FID assesses the Wasserstein-2 distance between the feature distributions of real and generated images, derived from the pre-trained Inception model [54]. FID is defined as follows:

$$\mathrm{FID} = \lVert \mu_{r} - \mu_{g} \rVert_{2}^{2} + \mathrm{Tr}\left(\Sigma_{r} + \Sigma_{g} - 2\left(\Sigma_{r}\Sigma_{g}\right)^{1/2}\right), \tag{8}$$

where $(\mu_{r}, \Sigma_{r})$ and $(\mu_{g}, \Sigma_{g})$ are the mean and covariance of the feature distributions of real and generated images, respectively. A lower FID score indicates that the generated images have better quality. Precision measures the proportion of generated images resembling those in the training set, while Recall indicates the proportion of training images that the model can replicate. For CIFAR-10, we also use Inception Score (IS) [36] following the previous papers [2, 47]. IS can be expressed as

$$\mathrm{IS} = \exp\left(\mathbb{E}_{x \sim p_{g}}\left[D_{KL}\left(p(l \mid x)\,\Vert\,p(l)\right)\right]\right), \tag{9}$$

where l is the class label predicted by the Inception model [54] trained on the ImageNet dataset [55], and $p(l \mid x)$ and $p(l)$ represent the conditional class distribution and the marginal class distribution, respectively. Unlike the FID, the higher the IS score, the better the quality of the generated image. For our analysis, we generated 50,000 samples and calculated these metrics accordingly.
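To make the two metrics concrete, the sketch below computes both on toy inputs. For FID it assumes diagonal covariance matrices, so that the matrix square root reduces to an element-wise square root; a full implementation would use dense covariances of Inception features. For IS it takes a list of per-sample class probability vectors. The function names and the toy inputs are illustrative assumptions.

```python
import math

def fid_diagonal(mu_r, var_r, mu_g, var_g):
    # Frechet distance between two Gaussians with diagonal covariances:
    # ||mu_r - mu_g||^2 + sum(var_r + var_g - 2 * sqrt(var_r * var_g)).
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    cov_term = sum(vr + vg - 2.0 * math.sqrt(vr * vg)
                   for vr, vg in zip(var_r, var_g))
    return mean_term + cov_term

def inception_score(class_probs):
    # class_probs[n][j] is p(l = j | x_n); the marginal p(l) is their average.
    n = len(class_probs)
    k = len(class_probs[0])
    marginal = [sum(p[j] for p in class_probs) / n for j in range(k)]
    kl_sum = 0.0
    for p in class_probs:
        # Terms with p(l | x) = 0 contribute nothing to the KL divergence.
        kl_sum += sum(pj * math.log(pj / marginal[j])
                      for j, pj in enumerate(p) if pj > 0.0)
    return math.exp(kl_sum / n)

# Identical statistics give a distance of zero.
print(fid_diagonal([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0]))

# Confident and evenly spread predictions over 4 classes reach the maximum score.
one_hot = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
print(inception_score(one_hot))
```

The toy cases show the direction of each metric: FID shrinks toward zero as the two feature distributions match, whereas IS grows when individual predictions are confident and the predicted classes are diverse.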

Table III: FID, Precision (P), and Recall (R) scores on the FFHQ and LSUN datasets.

| Method | FFHQ FID | FFHQ P | FFHQ R | LSUN Church FID | LSUN Church P | LSUN Church R | LSUN Horse FID | LSUN Horse P | LSUN Horse R | LSUN Cat FID | LSUN Cat P | LSUN Cat R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UT [56] | 6.11 | 0.73 | 0.48 | 4.07 | 0.71 | 0.45 | - | - | - | - | - | - |
| Polarity [57] | - | - | - | 3.92 | 0.61 | 0.39 | - | - | - | 6.39 | 0.64 | 0.32 |
| StyleGAN2 [26] | 3.71 | 0.69 | 0.44 | 3.97 | 0.59 | 0.39 | 3.62 | 0.63 | 0.36 | 7.98 | 0.60 | 0.27 |
| StyleGAN2 [26] + Ours | 3.37 | 0.69 | 0.48 | 3.86 | 0.59 | 0.46 | 2.95 | 0.62 | 0.42 | 7.28 | 0.59 | 0.30 |
| GGDR [2] | 3.14 | 0.69 | 0.50 | 3.15 | 0.61 | 0.46 | 2.50 | 0.64 | 0.43 | 5.28 | 0.58 | 0.38 |
| GGDR [2] + Ours | 2.90 | 0.68 | 0.52 | 2.61 | 0.59 | 0.52 | 2.45 | 0.60 | 0.48 | 5.03 | 0.58 | 0.39 |
| GLeaD [3] | 3.24 | 0.69 | 0.47 | 2.82 | 0.62 | 0.43 | - | - | - | - | - | - |
| GLeaD [3] + Ours | 2.69 | 0.68 | 0.53 | 2.29 | 0.59 | 0.53 | 2.41 | 0.61 | 0.48 | 5.43 | 0.56 | 0.40 |

IV-C Experimental Results

To evaluate the performance enhancement brought by our method, we first conducted experiments on the CIFAR-10 dataset. We compare the performance of our method not only with the leading GAN-based models [51, 52, 26, 53, 47, 2] but also with the latest diffusion-based models [48, 49, 50]. Table II shows the FID and IS scores of our method and the comparison methods on the CIFAR-10 dataset. Specifically, we incorporated our method into ADA [47], recognized as a benchmark model for unconditional image generation that is particularly effective on small datasets. As shown in Table II, the proposed method successfully improves the ADA performance in terms of both FID and IS scores. Moreover, we applied our proposed method to GGDR [2], which already outperforms ADA. As demonstrated by our experimental results, the proposed method not only significantly boosts the GGDR performance but also achieves state-of-the-art performance. Fig. 3 shows the samples of our method on the CIFAR-10 dataset.

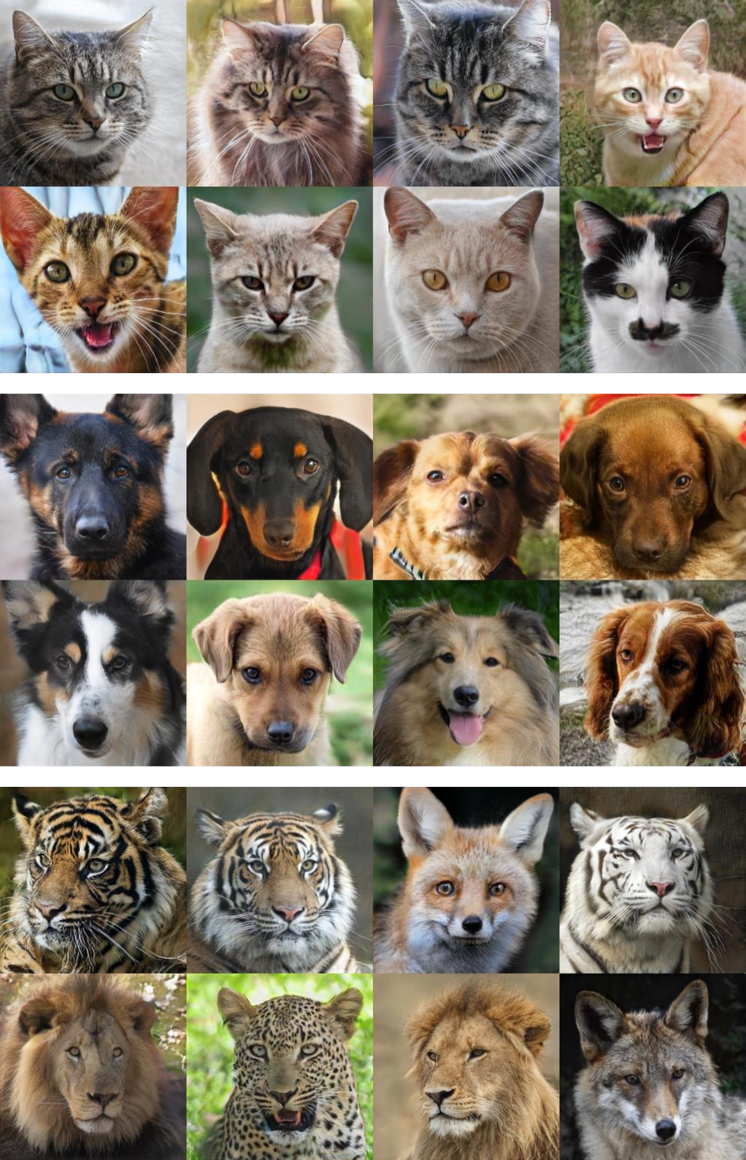

To demonstrate the enhanced capability of the proposed method in generating high-resolution images, we conducted experiments using multiple datasets such as FFHQ, LSUN-Church, LSUN-Horse, and LSUN-Cat. We set the StyleGAN2, GGDR, and GLeaD [3] methods as our baseline models following the previous papers [2, 3]. Table III presents the FID and Precision/Recall scores for both the proposed method and the comparative methods. Specifically, we first assess the performance enhancement brought by the proposed method, using StyleGAN2 as the baseline model. As shown in Table III, the proposed method not only outperforms prior works such as UT [56] and Polarity [57] in terms of FID on the FFHQ and LSUN-Church datasets but also enhances the performance of StyleGAN2 on every dataset. In particular, the proposed method improves the Recall by a large margin compared to StyleGAN2, which indicates that the proposed method generates more diverse images and is less prone to mode collapse. Moreover, we proceeded with experiments using GGDR and GLeaD, which enhance the performance of StyleGAN2, as our baseline models. As depicted in Table III, the proposed method improves performance across all datasets, and notably, in the case of the GLeaD baseline, both FID and Recall metrics exhibit significant enhancements. Therefore, these experimental results confirm that the proposed method consistently boosts performance, regardless of the baseline. The samples of the generated images are presented in Fig. 4. As shown in Fig. 4, the proposed method produces high-quality synthetic images that appear remarkably real.

To show the robustness of the proposed method on small datasets, we conducted experiments using the AFHQ dataset [35] with ADA [47] as the baseline model. Table IV presents the experimental results. Since the existing paper [53] only compared FID scores, this brief also focuses solely on FID comparisons. As shown in Table IV, the proposed method improves the FID scores of the baseline by a large margin; that is, the proposed method successfully enhances the performance of the baseline model even on datasets with a smaller number of images. These results indicate that the proposed method is not only effective but also highly adaptable to various types of data, which in turn enhances its utility and relevance in various applications. Fig. 5 shows samples of our method on the AFHQ datasets.

IV-D Ablation Studies

The proposed method includes a user-defined variable r that determines the degree of channel compression. While compressing the channels can reduce the number of network parameters, it may also decrease performance. Thus, selecting an appropriate r value is crucial. To determine the optimal value, we conducted ablation studies on the FFHQ and LSUN church datasets. We use StyleGAN2 as the baseline model. As summarized in Table V, as the r value increases, the number of parameters in G decreases. However, there is a corresponding decline in performance in terms of the FID metric as the number of G parameters decreases. For instance, at r = 16, the FID on the LSUN church dataset is worse than that of the baseline model. Conversely, r = 4 and r = 8 not only show superior performance compared to the baseline model but also offer the added benefit of a reduced parameter count. In terms of the Recall metric, the proposed method consistently outperforms the baseline model, irrespective of the r value. We chose r = 8 as it significantly reduces the parameters of the network while still maintaining respectable performance.

Table IV: FID scores on the AFHQ dataset.

| Method | Dog | Cat | Wild |
|---|---|---|---|
| StyleGAN2 [26] | 19.65 | 8.37 | 4.17 |
| DiffAug [58] | 16.92 | 6.39 | 4.39 |
| ADA [47] | 13.56 | 6.64 | 3.74 |
| ADA [47] + FSMR [53] | 11.76 | 5.71 | 3.24 |
| ADA [47] + Ours | 9.68 | 4.38 | 2.63 |

As mentioned in Section III-B, there is a need to validate the necessity of the feature blending process (FBP), which involves the concatenation of features followed by a convolution. To this end, we build a modified structure without the feature blending process (as shown in Fig. 6(a)) and evaluate its performance. Table VI summarizes the experimental results. The method without the feature blending process shows lower performance compared to the proposed method, yet it still exhibits comparable or superior performance relative to the baseline model. These results indicate that the main contribution to the performance improvement stems from the image squeeze connection rather than the feature blending process. We believe that these results indirectly highlight the limitations of the existing image skip connection method and the superiority of our approach.

Table V: Ablation study on the channel-squeeze ratio r.

| Method | G Params. | FFHQ FID | FFHQ R | LSUN Church FID | LSUN Church R |
|---|---|---|---|---|---|
| StyleGAN2 | 24.80M | 3.71 | 0.44 | 3.97 | 0.39 |
| + Ours (r = 4) | 24.25M | 3.29 | 0.49 | 3.71 | 0.46 |
| + Ours (r = 8) | 21.80M | 3.37 | 0.48 | 3.86 | 0.46 |
| + Ours (r = 16) | 20.56M | 3.44 | 0.49 | 4.25 | 0.45 |

Furthermore, we also measured the performance variations according to the placement of the toRGB layer (Figs. 6(b) and 6(c)). Specifically, Fig. 6(b) illustrates the use of the toRGB layer before the squeeze process, while Fig. 6(c) depicts its application after the excitation process. It is important to note that both variants feed high-dimensional features to the toRGB layer. In other words, similar to the traditional image skip connection, high-dimensional intermediate features are concatenated through the toRGB layer. As shown in Table VII, both cases exhibit lower performance compared to the proposed method. Additionally, in most cases they also show worse FID scores than the baseline model. These results indicate that the performance improvement in the proposed method is not merely due to the presence of the squeeze and excitation processes. Instead, they suggest that feeding low-dimensional features to the toRGB layer enhances performance. Based on these experimental results, we consider the proposed method a solution that addresses the issues with the traditional image skip connection and significantly aids in improving the performance of StyleGAN2-based models.

V Conclusion

In this brief, we identify and mathematically analyze the issue with the image skip connection in StyleGAN2, detailing the reasons behind the problem. Inspired by our observations, we propose a new skip connection method, called image squeeze connection, aimed at enhancing the image synthesis performance. The proposed technique not only shows superior performance compared to strong baseline models but also reduces the number of network parameters. Furthermore, due to its simplicity, the proposed method can be easily applied to existing baseline models. We demonstrated the superiority of the proposed method through comprehensive experiments conducted on various baseline models built on StyleGAN2. Moreover, owing to the excellence of StyleGAN2, many models based on it continue to be developed. Therefore, we anticipate that our proposed method could be widely adopted in the relevant fields.

Table VI: Ablation study on the feature blending process (FBP).

| Method | G Params. | FFHQ FID | FFHQ R | LSUN Church FID | LSUN Church R |
|---|---|---|---|---|---|
| StyleGAN2 | 24.80M | 3.71 | 0.44 | 3.97 | 0.39 |
| + Ours | 21.80M | 3.37 | 0.48 | 3.86 | 0.46 |
| + Ours w/o FBP | 21.75M | 3.40 | 0.46 | 4.05 | 0.43 |

Table VII: Ablation study on the placement of the toRGB layer.

| Method | FFHQ FID | FFHQ R | LSUN Church FID | LSUN Church R |
|---|---|---|---|---|
| StyleGAN2 | 3.71 | 0.44 | 3.97 | 0.39 |
| + Ours | 3.37 | 0.48 | 3.86 | 0.46 |
| + Ablation1 (Fig. 6(b)) | 3.91 | 0.48 | 4.11 | 0.45 |
| + Ablation2 (Fig. 6(c)) | 3.48 | 0.48 | 4.20 | 0.42 |

References

- [1] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [2] G. Lee, H. Kim, J. Kim, S. Kim, J.-W. Ha, and Y. Choi, “Generator knows what discriminator should learn in unconditional gans,” in European Conference on Computer Vision. Springer, 2022, pp. 406–422.

- [3] Q. Bai, C. Yang, Y. Xu, X. Liu, Y. Yang, and Y. Shen, “Glead: Improving gans with a generator-leading task,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 12 094–12 104.

- [4] S. Park and Y.-G. Shin, “A novel generator with auxiliary branch for improving gan performance,” IEEE Transactions on Neural Networks and Learning Systems, 2024.

- [5] Y.-J. Yeo, Y.-G. Shin, S. Park, and S.-J. Ko, “Simple yet effective way for improving the performance of gan,” IEEE Transactions on Neural Networks and Learning Systems, vol. 33, no. 4, pp. 1811–1818, 2021.

- [6] M.-C. Sagong, Y.-J. Yeo, Y.-G. Shin, and S.-J. Ko, “Conditional convolution projecting latent vectors on condition-specific space,” IEEE Transactions on Neural Networks and Learning Systems, 2022.

- [7] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp. 1125–1134.

- [8] Y. Choi, M. Choi, M. Kim, J.-W. Ha, S. Kim, and J. Choo, “Stargan: Unified generative adversarial networks for multi-domain image-to-image translation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 8789–8797.

- [9] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2223–2232.

- [10] M.-c. Sagong, Y.-g. Shin, S.-w. Kim, S. Park, and S.-j. Ko, “Pepsi: Fast image inpainting with parallel decoding network,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019, pp. 11 360–11 368.

- [11] Y.-G. Shin, M.-C. Sagong, Y.-J. Yeo, S.-W. Kim, and S.-J. Ko, “Pepsi++: fast and lightweight network for image inpainting,” IEEE Transactions on Neural Networks and Learning Systems, 2020.

- [12] T. Miyato, T. Kataoka, M. Koyama, and Y. Yoshida, “Spectral normalization for generative adversarial networks,” arXiv preprint arXiv:1802.05957, 2018.

- [13] I. Gulrajani, F. Ahmed, M. Arjovsky, V. Dumoulin, and A. C. Courville, “Improved training of wasserstein gans,” in Advances in neural information processing systems, 2017, pp. 5767–5777.

- [14] H. Zhang, Z. Zhang, A. Odena, and H. Lee, “Consistency regularization for generative adversarial networks,” arXiv preprint arXiv:1910.12027, 2019.

- [15] M. Arjovsky, S. Chintala, and L. Bottou, “Wasserstein gan,” arXiv preprint arXiv:1701.07875, 2017.

- [16] B. Wu, S. Zhao, C. Chen, H. Xu, L. Wang, X. Zhang, G. Sun, and J. Zhou, “Generalization in generative adversarial networks: A novel perspective from privacy protection,” arXiv preprint arXiv:1908.07882, 2019.

- [17] X. Wei, B. Gong, Z. Liu, W. Lu, and L. Wang, “Improving the improved training of wasserstein gans: A consistency term and its dual effect,” arXiv preprint arXiv:1803.01541, 2018.

- [18] K. Kurach, M. Lučić, X. Zhai, M. Michalski, and S. Gelly, “A large-scale study on regularization and normalization in gans,” in International Conference on Machine Learning. PMLR, 2019, pp. 3581–3590.

- [19] S. Park and Y.-G. Shin, “Generative residual block for image generation,” Applied Intelligence, pp. 1–10, 2021.

- [20] T. Miyato and M. Koyama, “cgans with projection discriminator,” arXiv preprint arXiv:1802.05637, 2018.

- [21] S. Park and Y.-G. Shin, “Generative convolution layer for image generation,” arXiv preprint arXiv:2111.15171, 2021.

- [22] H. Zhang, I. Goodfellow, D. Metaxas, and A. Odena, “Self-attention generative adversarial networks,” in International conference on machine learning. PMLR, 2019, pp. 7354–7363.

- [23] W. Li, C. Gu, J. Chen, C. Ma, X. Zhang, B. Chen, and P. Chen, “Dw-gan: Toward high-fidelity color-tones of gan-generated images with dynamic weights,” IEEE Transactions on Neural Networks and Learning Systems, 2023.

- [24] T. Karras, S. Laine, and T. Aila, “A style-based generator architecture for generative adversarial networks,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 4401–4410.

- [25] X. Huang and S. Belongie, “Arbitrary style transfer in real-time with adaptive instance normalization,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 1501–1510.

- [26] T. Karras, S. Laine, M. Aittala, J. Hellsten, J. Lehtinen, and T. Aila, “Analyzing and improving the image quality of stylegan,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 8110–8119.

- [27] T. Karras, M. Aittala, S. Laine, E. Härkönen, J. Hellsten, J. Lehtinen, and T. Aila, “Alias-free generative adversarial networks,” Advances in Neural Information Processing Systems, vol. 34, pp. 852–863, 2021.

- [28] A. Sauer, K. Schwarz, and A. Geiger, “Stylegan-xl: Scaling stylegan to large diverse datasets,” in ACM SIGGRAPH 2022 conference proceedings, 2022, pp. 1–10.

- [29] A. Sauer, T. Karras, S. Laine, A. Geiger, and T. Aila, “Stylegan-t: Unlocking the power of gans for fast large-scale text-to-image synthesis,” arXiv preprint arXiv:2301.09515, 2023.

- [30] Y. Wang, L. Jiang, and C. C. Loy, “Styleinv: A temporal style modulated inversion network for unconditional video generation,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 22 851–22 861.

- [31] A. B. Yildirim, H. Pehlivan, B. B. Bilecen, and A. Dundar, “Diverse inpainting and editing with gan inversion,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 23 120–23 130.

- [32] X. Zhang, J. Zhang, R. Chacko, H. Xu, G. Song, Y. Yang, and J. Feng, “Getavatar: Generative textured meshes for animatable human avatars,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 2273–2282.

- [33] A. Krizhevsky, G. Hinton et al., “Learning multiple layers of features from tiny images,” 2009.

- [34] F. Yu, Y. Zhang, S. Song, A. Seff, and J. Xiao, “Lsun: Construction of a large-scale image dataset using deep learning with humans in the loop,” arXiv preprint arXiv:1506.03365, 2015.

- [35] Y. Choi, Y. Uh, J. Yoo, and J.-W. Ha, “Stargan v2: Diverse image synthesis for multiple domains,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 8188–8197.

- [36] T. Salimans, I. Goodfellow, W. Zaremba, V. Cheung, A. Radford, and X. Chen, “Improved techniques for training gans,” Advances in neural information processing systems, vol. 29, 2016.

- [37] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs trained by a two time-scale update rule converge to a local Nash equilibrium,” in Advances in Neural Information Processing Systems, 2017, pp. 6626–6637.

- [38] T. Kynkäänniemi, T. Karras, S. Laine, J. Lehtinen, and T. Aila, “Improved precision and recall metric for assessing generative models,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- [39] M. S. Sajjadi, O. Bachem, M. Lucic, O. Bousquet, and S. Gelly, “Assessing generative models via precision and recall,” Advances in neural information processing systems, vol. 31, 2018.

- [40] X. Mao, Q. Li, H. Xie, R. Y. Lau, Z. Wang, and S. Paul Smolley, “Least squares generative adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 2794–2802.

- [41] L. Mescheder, A. Geiger, and S. Nowozin, “Which training methods for gans do actually converge?” in International conference on machine learning. PMLR, 2018, pp. 3481–3490.

- [42] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [43] A. Karnewar and O. Wang, “Msg-gan: Multi-scale gradients for generative adversarial networks,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 7799–7808.

- [44] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18. Springer, 2015, pp. 234–241.

- [45] H. Zhang, T. Xu, H. Li, S. Zhang, X. Wang, X. Huang, and D. N. Metaxas, “Stackgan: Text to photo-realistic image synthesis with stacked generative adversarial networks,” in Proceedings of the IEEE international conference on computer vision, 2017, pp. 5907–5915.

- [46] ——, “Stackgan++: Realistic image synthesis with stacked generative adversarial networks,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 8, pp. 1947–1962, 2018.

- [47] T. Karras, M. Aittala, J. Hellsten, S. Laine, J. Lehtinen, and T. Aila, “Training generative adversarial networks with limited data,” Advances in neural information processing systems, vol. 33, pp. 12 104–12 114, 2020.

- [48] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” Advances in neural information processing systems, vol. 33, pp. 6840–6851, 2020.

- [49] Y. Song, J. Sohl-Dickstein, D. P. Kingma, A. Kumar, S. Ermon, and B. Poole, “Score-based generative modeling through stochastic differential equations,” arXiv preprint arXiv:2011.13456, 2020.

- [50] H. Phung, Q. Dao, and A. Tran, “Wavelet diffusion models are fast and scalable image generators,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 10 199–10 208.

- [51] T. Karras, T. Aila, S. Laine, and J. Lehtinen, “Progressive growing of gans for improved quality, stability, and variation,” arXiv preprint arXiv:1710.10196, 2017.

- [52] X. Gong, S. Chang, Y. Jiang, and Z. Wang, “Autogan: Neural architecture search for generative adversarial networks,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 3224–3234.

- [53] J. Kim, Y. Choi, and Y. Uh, “Feature statistics mixing regularization for generative adversarial networks,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 11 294–11 303.

- [54] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 2818–2826.

- [55] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE conference on computer vision and pattern recognition. Ieee, 2009, pp. 248–255.

- [56] S. Bond-Taylor, P. Hessey, H. Sasaki, T. P. Breckon, and C. G. Willcocks, “Unleashing transformers: Parallel token prediction with discrete absorbing diffusion for fast high-resolution image generation from vector-quantized codes,” in European Conference on Computer Vision. Springer, 2022, pp. 170–188.

- [57] A. I. Humayun, R. Balestriero, and R. Baraniuk, “Polarity sampling: Quality and diversity control of pre-trained generative networks via singular values,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 10 641–10 650.

- [58] S. Zhao, Z. Liu, J. Lin, J.-Y. Zhu, and S. Han, “Differentiable augmentation for data-efficient gan training,” Advances in neural information processing systems, vol. 33, pp. 7559–7570, 2020.