Restructuring, Pruning, and Adjustment of Deep Models for Parallel Distributed Inference

Abstract

Using multiple nodes and parallel computing algorithms has become a principal tool to improve training and execution times of deep neural networks as well as effective collective intelligence in sensor networks. In this paper, we consider the parallel implementation of an already-trained deep model on multiple processing nodes (a.k.a. workers) where the deep model is divided into several parallel sub-models, each of which is executed by a worker. Since latency due to synchronization and data transfer among workers negatively impacts the performance of the parallel implementation, it is desirable to have minimum interdependency among parallel sub-models. To achieve this goal, we propose to rearrange the neurons in the neural network and partition them (without changing the general topology of the neural network), such that the interdependency among sub-models is minimized under the computations and communications constraints of the workers. We propose RePurpose, a layer-wise model restructuring and pruning technique that guarantees the performance of the overall parallelized model. To efficiently apply RePurpose, we propose an approach based on optimization and the Munkres assignment algorithm. We show that, compared to the existing methods, RePurpose significantly improves the efficiency of the distributed inference via parallel implementation, both in terms of communication and computational complexity.

1 Introduction

In recent years, the size and complexity of deep neural networks has been increased significantly in terms of model’s structure and number of parameters. Consequently, real-time implementation and inference in many machine learning (ML) problems has become a challenging task. Although the execution time of deep neural networks can be improved significantly by the application of parallel computing algorithms and using multiple processing units (such as GPU’s or clusters of computing nodes), it generally requires synchronization and data exchange among processing units to some extent. This is mainly due to the fact that in parallel computations, each processing unit performs a portion of the computations, its inputs generally depend on the outputs from other units, and the results of computations should be aggregated to yield the desired output. These co-dependencies can lead to significant delays in computations. Moreover, in some real-world scenarios, such as sensor networks, the inference is done on the data observed by the entire network, i.e., each node in the network only observes part of the data. However, transferring all data to a central powerful node to aggregate and perform the ML task is undesirable due to the sheer amount of data to be collected, limited computational power, privacy concerns, or even availability of such a node. Hence, it is more favorable to develop a distributed equivalence of a deep model for deploying over the processors/sensor network.

In the aforementioned applications, straightforward parallel computing algorithms cannot be arbitrarily scaled up for deep models with complex connectivity structures. The majority of past works on distributed/parallel execution of deep neural networks are concerned with algorithmic aspects of the parallel implementation of the neural network (e.g., Zinkevich et al. (2010); Chung et al. (2014); De Grazia et al. (2012)). However, here, we focus on the structure of deep models and how we can modify it for efficient parallel distributed implementation.

In recent years, there has been an increasing interest in compressing, pruning, or modifying the structure of deep models to reduce their computational or storage costs, while keeping the accuracy or performance of the modified model acceptable. The majority of these approaches can be classified into three categories:

- •

-

•

Using Structured Parameters to reduce the size of deep model or its processing time. Examples include using circulant matrices Cheng et al. (2015) or Adaptive Fastfood transform Yang et al. (2015) for fully connected layers, and separable filters Rigamonti et al. (2013) or low-rank tensor decomposition Tai et al. (2016) for convolutional layers.

-

•

Pruning Parameters has been used extensively to reduce the complexity of the model as well as over-parametrization. or regularization Louizos et al. (2018), and group-sparsity Zhou et al. (2016); Wen et al. (2016) have been successfully used to promote sparsity of the parameters during training. Model pruning algorithms such as Optimal Brain Damage Cun et al. (1990), Optimal Brain Surgeon Hassibi et al. (1993), hard-thresholding the parameters Han et al. (2015), and similar works Castellano et al. (1997); Leung et al. (2001), mainly focus on removing the insignificant edges or neurons, by considering the magnitude of the weights or their approximate Hessian matrix as a measure of importance. More recently, Aghasi, et al. Aghasi et al. (2017, 2020) proposed Net-Trim, a convex optimization technique to prune the parameters of the deep model by analyzing the signals in the neural network.

Although it is possible to design deep models according to the capability and constraints of the processing system, following such an approach requires training a new deep model for every target hardware which is infeasible or demanding in many ML problems. Further, imposing a possibly unnecessary structure in advance during training a deep model would likely be limiting in terms of model performance and accuracy. It will be also an undesirable approach for parallel implementation since a model specifically designed for optimum implementation on a target platform or architecture may be far from optimum on other platforms (e.g., GPUs with different compute capabilities, or CPU vs GPU vs sensor network). Hence optimizing and fixing the structure for one particular parallel distributed setting in advance would limit the optimal deployment on other platforms. As a result, we assume that a complex deep model has already been trained with minimum or no hardware-specific constraints on its parameters or structure. Our goal would be readjusting the model via restructuring the layers and manipulating the parameters of the neural network without changing its general topology for more efficient parallel implementation.

As an example, consider the simple neural network in Fig. 1(a). Simply partitioning the model into two sub-models (as depicted by a dashed line in the Fig. 1(a)) imposes lots of communication between the two partitions. However, by rearranging the neurons properly, the co-dependency (and hence required communications) between the two sub-models (the red edges in Fig. 1(b)) is reduced substantially. It is worth mentioning that there are approximately different partitioning to distribute computations of a neural network’s layer with neurons over workers. Hence, enumerating all such possibilities and choosing a good one is infeasible specially for large networks. In this paper, we propose a systematic approach to perform such partitioning and parameter adjustment to ensure efficient implementation of the modified model while keeping its accuracy close to the original model.

Notations

| Number of workers | |

| Number of neurons | |

| Number of layers | |

| Weight matrix | |

| Bias | |

| Mask matrix |

Bold lowercase letters represent vectors and the -th element of the vector is denoted as . Matrices are denoted by bold capital letters such as , with the -th element represented by or . is the Hadamard (element-wise) product of and . is the Frobenius norm of , and are the and norms of , respectively. is a vector or matrix of all ones, whose size would be clear from the context.

2 Problem Statement and our Approach

Consider the problem of parallel distributed implementation of a trained deep neural network over processing units (hereafter referred to as workers), where the deep model is divided into sub-models, each of which is executed by a worker. As managing the synchronization and data transfer among workers degrades the efficiency of the parallel implementation (e.g., higher latency), it is crucial to reduce the communication among workers. The communication is needed between the workers when the input of a neuron in a sub-model is from the output of a neuron belonging to a different sub-model which resides in another worker. These co-dependencies can lead to significant delays in computation.

For the sake of simplicity in presentations and analysis, here, we mainly focus on feedforward deep models, specifically fully-connected layers. Note that the convolution layer can be represented as a special case of a fully connected layer. 111Recall that the convolution can be represented as for a circulant matrix constructed from . For more details and the extensions of our approach to other complex architectures, please refer to the supplementary document.

Consider an arbitrary neural network with layers and parameters , where is the parameters of the -th layer. Let be the input signal to the -th layer. Then, the output of the layer (input to the next layer) would be given by

| (1) |

where is the activation function.

To analyze the bottlenecks, consider an arbitrary layer with input , and parameters and (Fig. 2). Hence, would be the input signal to the neurons of the layer. Suppose that and are subsets of the signals that are processed by the -th worker. Without loss of generality, we assume that the neurons are ordered such that the -th block of consecutive neurons belongs to the -th sub-model, i.e., . By partitioning and accordingly, we observe that

| (2) |

Note that the first term can be computed at the -th worker independent of the others, whereas computing the second term requires synchronization and communication from the other workers. Hence, to reduce the dependency among workers and the communication cost, we consider minimizing the number of non-zero elements in , for .

By defining an appropriate binary mask (Fig. 2 (right)), the connections between sub-models can be determined by the non-zero elements of . In general, if and are the number of input and output neurons assigned to the -th worker, then is an anti-diagonal block matrix, given by

Remark 1.

Note that the bias does not contribute to the communication between workers and can be safely ignored in computing the cost. Further, can be viewed as the number of edges between sub-models, and be used as an approximation to the latency caused by the communication and synchronization among workers. Similarly, by defining an appropriate binary mask , we can find the edges from worker to from the non-zero entries of . Depending on the communication protocol among workers, the number of non-zero edges, number of non-zero rows, or number of non-zero columns of can be interpreted as a measure of latency due to the communication from worker to . For the sake of simplicity, in this work, we consider as a measure of total communication latency. However, the extensions of our proposed approach to other cases is straightforward.

To reduce the communication, one may attempt to reduce the number of cross-edges among sub-models. However, as we observed in our experiments, generally there are many important connections between neurons from different sub-models, and removing those connections can severely affect the performance of the neural network. Hence, it is important to have such neurons in the same sub-model. On the other hand, the problem of neuron assignment to the workers is combinatorial and discrete with complexity for a layer with neurons and workers. Hence, enumerating all possibilities or using ordinary optimization techniques as well as genetic algorithms or simulated annealing would fail due to the complex nature of interactions among neurons in a deep neural network. Based on the above observations, we devise RePurpose, a layer-wise neural network restructuring and pruning for efficient parallel implementation. The gist of the idea is as follows;

The neurons of the input layer are assigned to the sub-models based on each worker’s computational power and/or structure of the input data. For example, in a sensor network, it is dictated by the input of each sensor. Next, we restructure and adjust the neural network, sequentially one layer at a time. For the -th layer, the assignments of the neurons in layer are assumed to be fixed and known from the previous steps. The neurons in layer are rearranged and assigned to each sub-model, and the parameters of the layer are pruned and fine-tuned, such that () the performance of the modified neural network is close to the original one, and () the communication between the sub-models (measured by the number of edges connecting neurons from different sub-models) is minimized.

3 RePurpose: Restructuring and Pruning Deep Models

Consider the -th layer of neural network and assume that the neurons in the previous layers have already been partitioned and rearranged, i.e., the input of the layer is partitioned as , where is computed at the -th worker. Let and be the signals and parameters of the -th layer in the original model. RePurpose rearranges the neurons such that the -th block of neurons are being assigned to the -th worker (Fig. 3). Note that the rearrangement of the neurons can be captured via a permutation matrix . Hence, if we use the same weights, the effect of neuron-rearrangement can be formulated as and , and the number of cross-edges between workers would be . To further reduce the communication between workers, RePurpose not only rearranges the neurons, but it also prunes and adjusts . Hence, the optimization problem for RePurpose is formulated as

| (3) |

where is a parameter controlling the closeness of the parameters. Directly solving (3) is infeasible as it is (mixed-)discrete, non-convex, and there are different permutation matrices. In the following, we propose an alternative and efficient approach to solve (3).

Recall that if neuron is assigned to worker , the signal at that neuron can be rewritten as , where is the -th column of , and is the -th block of corresponding to . Hence, the communication cost from other workers to worker would be . By incorporating an additional optional cost to encourage the total sparsity of the parameters, , the cost of assigning neuron to worker would be

| (4) |

where and control the trade-off between the error, sparsity, and cross-communication.

Lemma 1.

The solution of (4) is given by element-wise hard-thresholding , i.e.,

| (5) |

where or , depending on whether neuron from the previous layer has been assigned to the -th worker or not.

Restructuring and neuron assignment can be interpreted as selecting elements from the cost matrix , whose -th element is given by (4), such that () from row , elements are selected, i.e., neurons are assigned to worker , () from each column, only one element is selected, i.e., each neuron can be assigned to only one worker, and () the sum of selected elements is minimized, i.e., the total cost of neuron assignment and parameter adjustment is minimum.

Algorithm 1 summarizes the proposed solution, where Munkres() uses the Munkres assignment algorithm Kuhn (1955); Munkres (1957) to find the (row-column) index pairs that minimizes the total sum cost .

Theorem 2.

Algorithm 1 finds the optimum solution of

| (6) |

with time complexity , where is the number of layer’s neurons (number of columns of ).

Note that by setting , (6) would be the Lagrangian of (3) and choosing appropriate value for can lead to the desired error bound . Finally, it is worth mentioning that the bias term does not contribute to the communication cost and is given by .

Remark 2.

In model pruning and compression, it is common to retrain the modified model to fine-tune the parameters and improve the accuracy of the model. This extra post-processing is generally referred to as post-training phase or fine-tuning. The same principle can be applied to our proposed algorithm.

4 Experiments

To evaluate the performance of the RePurpose algorithm, we consider different neural network architectures and compare the accuracy, communication and wall-clock times w.r.t. naive implementation where the input data is communicated to all nodes in the network, so they all have the entire input data, baseline which is parallel implementation of the deep model without any modification to the parameters or structures, and sparse implementation which sparsifies the parameters to reduce cross-edges between the workers without re-arranging the neurons. We evaluate the accuracy-communication trade-off in different sensor networks, as well as the reduction in total computation time (wall-clock time) in Edge and Data Center platforms.

4.1 Sensor Network

Setup 1. Figure 6(a) shows a 2 sensors network, sensor observes location of a target object and each sensor’s task is to determine whether the object is in the blue or green region. A simple neural network (Fig. 6(b)) is trained at a central node to perform the task with accuracy . In the naive approach, the sensors exchange their observations (’s) and run the inference (NN) independently. Hence, the NN is executed twice throughout the network at the cost of higher computational complexity. Alternatively, we can apply RePurpose to efficiently distribute the inference over the sensors. We applied RePurpose with , (Fig. 6(c)), and (Fig. 6(d)). As a result, the number of cross-worker communications reduced significantly to , and for , and , and for for layers 1, 2, and 3, respectively. Specifically, with only communicated values, the computational complexity at each sensor is reduced by almost a factor of compared to the naive implementation. However, the accuracy of the distributed parallel model, prior to the post-training phase, is reduced to . By retraining the modified model for few iterations (and imposing the structural constraints found through RePurpose), the accuracy of the fine-tuned model becomes .

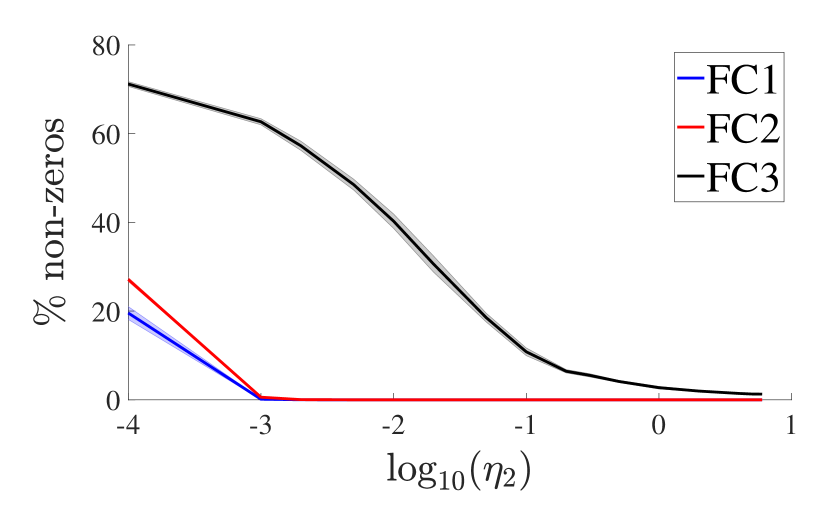

Setup 2. Next, we consider a network of sensors where each sensor observes an image of a digit (from MNIST dataset) and the goal is finding the rounded average . We adapted a Lenet-5 like structure LeCun et al. (1998) for the neural network which is trained in a central server (Fig. 7), and repeated the experiments several times. Note that one might attempt to classify the digits at each individual sensor and then share the value with other nodes to compute the average. However, in addition to the increased computational complexity at each individual node, it is worth mentioning that if the accuracy of digit recognition is , close to , then the final accuracy in computing the average would be approximately . For example, for a network with nodes and , the final accuracy would be less than . We applied the RePurpose algorithm on the trained model for distributed inference over the sensor network with different communication (cross-worker edges) constraints. Fig. 6 compares the results of RePurpose with the baseline and direct sparsification, in a network with sensors.

Setup 3. Next, we consider sensors (cameras) that observe a scene and detect whether an specific object exists or not. For this purpose, we used a Resnet-like neural network He et al. (2016) over CIFAR10 and the objective is detecting the presence of a ”dog” in any of the images (Fig. 8). Fig. 6 shows the results of RePurpose, the baseline, and direct sparsification, in a network with sensors.

As seen from figures 4(a) and 5(a), RePurpose significantly outperforms sparsification and although its accuracy is dropped for large , with 1 or 10 epochs of post-training for MNIST and CIFAR10, respectively, (”FT RePurpose” in the figures) it achieves almost the same accuracy as the original model, while direct sparsification fails to provide good accuracy. Moreover, interestingly, RePurpose sparsifies the cross-edges between workers significantly for the hidden layers. The restructured model can achieve the same performance as the original model by using less than of the cross-edges (i.e., between to connections out of more than edges between workers). Finally, figures 4(c) and 5(c) compare the accuracy vs the cross-communication between workers. Clearly, direct sparsification performs well only when there are enough number of cross-edges between the workers, while the accuracy of the model obtained by RePurpose does not change for a vast sparsity range.

Finally, it is worth mentioning that in the naive approach to inference over the sensor network, each node has to transmit its observations to other nodes, hence the communication between any two pair of nodes would be or values for Setups 2 and 3, respectively. However, RePurpose can achieve the same accuracy with less than 200 total communicated values across the entire network.

4.2 System Evaluations

| Name | Node Compute | Node Memory | Network Bandwidth | Number of Nodes |

|---|---|---|---|---|

| Datacenter | 125 TOPS | 4GB | 150 GB/s (NVLink) | 1-32 |

| Edge | 0.5 TOPS | 1GB | 100 MB/s (Ethernet) | 1-32 |

Methodology- We evaluate RePurpose on two distributed accelerator platforms, described in Tbl. LABEL:table:system_config, simulated using ASTRA-sim Rashidi et al. (2020). ASTRA-sim is an open-source distributed Deep Learning platform simulator that models cycle-level communication behavior in details for any partitioning strategy across multiple interconnected accelerator nodes. ASTRA-sim takes the compute cycles for each layer of the model as an external input, and manages communication scheduling similar to communication libraries like NVIDIA NCCL NVIDIA (2018). We obtained compute cycles for the Datacenter configuration from a NVIDIA V100 GPU implementation, and for the Edge configuration (e.g., sensor network) from a separate DNN accelerator simulator Samajdar et al. (2020).

We tried to stress the aforementioned platforms under various sized problems to show the efficiency of RePurpose. In all models, we assumed a stack of 5 layers with same number of neurons. In our notation, refers to the number of neurons per layer (or matrix dimensions). For the datacenter system, varies from to , while for edge system the variation is from to . We also assumed strict ordering between current communication and computation of next layer, meaning that each node begins computation of each layer only when it has all inputs available.

We picked 4 different flavors of RePurpose with 50%, 75%, 90% and 99% sparsity factor named as RP-50, RP-75, RP-90, and RP-99, respectively. In addition, we changed the number of worker nodes from 1 to 32 for both system configurations.

Results- Fig. 9 shows the total amount of data that each node needs to send out for one input sample for . Clearly, specification has the linear effect on the amount of communicating data. On the other hand, partitioning across more nodes also increases the total communicating data. But the increase in rate diminishes as the number of nodes increases, converges to 2X more data compared to the case of 2 nodes.

To further investigate the effect of RePurpose in reducing the computation and communication times, Fig. 11 shows the simulation results of the communication and computation breakdown for the baseline system and RePurpose for . As seen from Fig. 10(a), in a datacenter system, on average and across different number of nodes, RP-50, RP-75, RP-90 and RP-99 achieve 1.7, 2.76, 4.77 and 10.47 speed-up in computations, respectively. The average improvement for communication ratio is 1.2, 1.45, 1.74 and 1.75, respectively. The reason for lower improvements of communication time is that due to NVLink’s high bandwidth. For , network communication time is mostly network latency limited. Hence, reduction in input size does not correspond to linear reduction in communication time.

Fig. 10(b) shows the similar results but for edge system. Here, due to much lower network bandwidth, the effect of communication is more considerable. On average applying RP-50, RP-75, RP-90 and RP-99 improve computation times by 1.7, 2.77, 4.78 and 11.01, respectively. This value for communication is 1.2, 1.38, 1.82 and 3.04 respectively. As the number of nodes grow, the communication gap between the baseline and RePurpose decreases. This is mostly because of the congestion in the network (e.g. switch) that decreases the effect of benefits gained by RePurpose.

Fig. 11 shows how communication, computation and total times change as the the number of neurons grows. For each network size, computation and communication times are averaged across different sparsity factors and node counts. For datacenter system (Fig10(c)), computation is the dominant factor. This is expected since the computation grows as while communication increases as . Since the network band-width is very high in datacenter, the effect of communication is negligible. In general, the total time ratio increase from 1.01 in to 2.06 in . On the other hand, communication remains a considerable factor in the edge systems (Fig. 10(d)) due to: () low network bandwidth, and () lower dimensions of workloads on edge systems. The total time improvement for edge system is for and it increases to for .

5 Conclusion

In this paper, we considered the problem of efficient parallel distributed inference of an already trained deep model over a cluster of processing units or a sensor network. Required communication and synchronization among processing units or network nodes (i.e., workers) can adversely affect the computation time. Moreover, in the wireless sensor networks, it may significantly increase the power consumption due to the transmission of large amount of data. We claimed that traditional approaches to prune or compress the deep models fail to consider the constraints imposed in such distributed inference systems. To overcome the shortcomings of the existing methods, we devised RePurpose, a framework to restructure the deep model by rearranging the neurons, optimum assignment of neurons to the workers, and then pruning the parameters, such that the dependency among workers is reduced. We showed that RePurpose can significantly reduce the number of cross-communication between workers and improve the computation time significantly, while the performance loss of the modified model is remained negligible.

References

- Aghasi et al. [2017] Alireza Aghasi, Afshin Abdi, Nam Nguyen, and Justin Romberg. Net-Trim: Convex Pruning of Deep Neural Networks with Performance Guarantee. In I Guyon, U V Luxburg, S Bengio, H Wallach, R Fergus, S Vishwanathan, and R Garnett, editors, Advances in Neural Information Processing Systems 30, pages 3180–3189. Curran Associates, Inc., 2017.

- Aghasi et al. [2020] Alireza Aghasi, Afshin Abdi, and Justin Romberg. Fast convex pruning of deep neural networks. SIAM Journal on Mathematics of Data Science, 2(1):158–188, 2020.

- Castellano et al. [1997] Giovanna Castellano, Anna Maria Fanelli, and Marcello Pelillo. An iterative pruning algorithm for feedforward neural networks. IEEE Transactions on Neural Networks, 8(3):519–531, may 1997. ISSN 1045-9227. doi: 10.1109/72.572092.

- Cheng et al. [2015] Yu Cheng, X Yu Felix, Rogerio S Feris, Sanjiv Kumar, Alok Choudhary, and Shih-Fu Chang. Fast neural networks with circulant projections. arXiv preprint arXiv:1502.03436, 2015.

- Chung et al. [2014] I-Hsin Hsin Chung, Tara N. Sainath, Bhuvana Ramabhadran, Michael Picheny, John Gunnels, Vernon Austel, Upendra Chauhari, and Brian Kingsbury. Parallel Deep Neural Network Training for Big Data on Blue Gene/Q. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, volume 28 of SC ’14, pages 745–753, Piscataway, NJ, USA, 2014. IEEE Press. ISBN 978-1-4799-5500-8. doi: 10.1109/TPDS.2016.2626289.

- Cun et al. [1990] Yann Le Cun, John S Denker, Sara A Sola, T Bell Laboratories, and Sara A Solla. Optimal Brain Damage. In Advances in Neural Information Processing Systems 2, pages 598–605, San Francisco, CA, USA, 1990. Morgan Kaufmann Publishers Inc. ISBN 1-55860-100-7.

- De Grazia et al. [2012] Michele De Filippo De Grazia, Ivilin Stoianov, and Marco Zorzi. Parallelization of deep networks. Proceedings of 2012 European Symposium on Artificial NN, Computational Intelligence and Machine Learning, pages 621–626, 2012.

- Han et al. [2015] Song Han, Jeff Pool, John Tran, and William J. Dally. Learning both Weights and Connections for Efficient Neural Networks. CoRR, abs/1506.02626:1–9, 2015. URL http://arxiv.org/abs/1506.02626.

- Hassibi et al. [1993] Babak Hassibi, David G Stork, Sand Hill Road, and Menlo Park. Second Order Derivatives for Network Pruning: Optimal Brain Surgeon. In Advances in Neural Information Processing Systems 5, [NIPS Conference], pages 164–171, San Francisco, CA, USA, 1993. Morgan Kaufmann Publishers Inc. ISBN 1-55860-274-7. URL http://dl.acm.org/citation.cfm?id=645753.668069.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Hinton et al. [2015] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the Knowledge in a Neural Network. ArXiv e-prints, pages 1–9, mar 2015. URL http://arxiv.org/abs/1503.02531.

- Jonker and Volgenant [1987] Roy Jonker and Anton Volgenant. A shortest augmenting path algorithm for dense and sparse linear assignment problems. Computing, 38(4):325–340, 1987.

- Kuhn [1955] H. W. Kuhn. The hungarian method for the assignment problem. Naval Research Logistics Quarterly, 2(1-2):83–97, mar 1955. doi: 10.1002/nav.3800020109.

- LeCun et al. [1998] Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 86(11):2278–2324, 1998.

- Leung et al. [2001] Chi-Sing Sing Leung, Kwok-Wo Wo Wong, Pui-Fai Fai Sum, and Lai-Wan Wan Chan. A pruning method for the recursive least squared algorithm. Neural Networks, 14(2):147–174, 2001. ISSN 0893-6080. doi: http://dx.doi.org/10.1016/S0893-6080(00)00093-9.

- Louizos et al. [2018] Christos Louizos, Max Welling, and Diederik P. Kingma. Learning Sparse Neural Networks through Regularization. In ICLR, pages 1–13, 2018.

- Munkres [1957] James Munkres. Algorithms for the assignment and transportation problems. Journal of the Society for Industrial and Applied Mathematics, 5(1):32–38, mar 1957. doi: 10.1137/0105003.

- NVIDIA [2018] NVIDIA. Nvidia collective communications library (nccl), 2018. URL https://developer.nvidia.com/nccl.

- Rashidi et al. [2020] Saeed Rashidi, Srinivas Sridharan, Sudarshan Srinivasan, and Tushar Krishna. ASTRA-SIM: Enabling SW/HW Co-Design Exploration for Distributed DL Training Platforms. In IEEE International Symposium on Performance Analysis of Systems and Software, ISPASS 2020, 2020.

- Rigamonti et al. [2013] Roberto Rigamonti, Amos Sironi, Vincent Lepetit, and Pascal Fua. Learning separable filters. In 2013 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, jun 2013. doi: 10.1109/cvpr.2013.355.

- Romero et al. [2015] Adriana Romero, Nicolas Ballas, Samira Ebrahimi Kahou, Antoine Chassang, Carlo Gatta, and Yoshua Bengio. Fitnets: Hints for thin deep nets. In ICLR, 2015.

- Samajdar et al. [2020] Ananda Samajdar, Jan Moritz Joseph, Yuhao Zhu, Paul Whatmough, Matthew Mattina, and Tushar Krishna. A systematic methodology for characterizing scalability of dnn accelerators using scale-sim. In 2020 IEEE International Symposium on Performance Analysis of Systems and Software, 2020.

- Tai et al. [2016] Cheng Tai, Tong Xiao, Yi Zhang, Xiaogang Wang, et al. Convolutional neural networks with low-rank regularization. In ICLR, 2016.

- Tomizawa [1971] N. Tomizawa. On some techniques useful for solution of transportation network problems. Networks, 1(2):173–194, 1971.

- Wen et al. [2016] Wei Wen, Chunpeng Wu, Yandan Wang, Yiran Chen, and Hai Li. Learning structured sparsity in deep neural networks. In Advances in Neural Information Processing Systems, pages 2074–2082, 2016. ISBN 1878-3686 (Electronic). doi: 10.1016/j.ccr.2008.06.009.

- Yang et al. [2015] Zichao Yang, Marcin Moczulski, Misha Denil, Nando De Freitas, Alex Smola, Le Song, Ziyu Wang, Nando de Freitas, Alex Smola, Le Song, and Ziyu Wang. Deep Fried Convnets. In The IEEE International Conference on Computer Vision (ICCV), pages 1476–1483, dec 2015. ISBN 9781467383912. doi: 10.1109/ICCV.2015.173.

- Zagoruyko and Komodakis [2017] Sergey Zagoruyko and Nikos Komodakis. Paying more attention to attention: Improving the performance of convolutional neural networks via attention transfer. In ICLR, 2017.

- Zhou et al. [2016] Hao Zhou, Jose M Alvarez, and Fatih Porikli. Less is more: Towards compact cnns. In European Conference on Computer Vision, pages 662–677. Springer, 2016.

- Zinkevich et al. [2010] Martin A Zinkevich, Alex J Smola, Markus Weimer, Lihong Li, and Alex J Smola. Parallelized Stochastic Gradient Descent. In J D Lafferty, C K I Williams, J Shawe-Taylor, R S Zemel, and A Culotta, editors, Advances in Neural Information Processing Systems 23, pages 2595–2603. Curran Associates, Inc., 2010.

Appendix A Complexity of Naive Direct Partitioning

Consider distributing processing of a layer of a deep neural network with neurons over workers. Without assuming any constraint on the number of neurons per worker, there are possible assignments for each neuron, hence, the total possible neuron assignments to the workers would be .

Now, assume that exactly neurons have to be assigned to the -th worker, where . Clearly, there are

possible neuron assignment to the workers. To have a relatively balanced neuron assignment (i.e., no worker or a small subset of workers has to process almost all signals), we assume that , where , i.e., there exists such that . Using Stirling’s approximation for factorial, , and noting that , , we have

Therefore, the direct approach to find good neuron assignment for parallel distributed inference requires evaluation of different assignments, which for large number of neurons or number of workers becomes prohibitive.

Appendix B Application of RePurpose in Deep Neural Networks

Recall that at the core of the RePurpose algorithm is solving the optimization problem and finding the cost of assigning neuron to worker , given by

| (7) |

where and control the trade-off between the error, sparsity, and cross-communication.

The basic RePurpose function and its application to a deep neural network with weights and biases are summarized in Algorithms 1 and 2, respectively. In Alg. 2, is the number of neurons in layer being assigned to worker , and is the (modified) element-wise hard-thresholding operator, defined as

| (8) |

Input: , , , , Output: , , for layers do Algorithm 2 Applying RePurpose to Deep Neural Networks

Recall that when applying RePurpose to layers of a neural network, permuting neurons of layer with matrix changes the signal of that layer by and affects the weight matrix of that layer by . As a result, and to have the same signal at the next layer, , the weight matrix of layer should be modified as . Line 2 of Alg. 2 accounts for these adjustments.

Appendix C Performance Guarantee of RePurpose

Consider an arbitrary neural network with layers and parameters , where is the parameters of the -th layer. Let be the input signal to the -th layer. Then, the output of the layer (input to the next layer) would be given by

| (9) |

where is the activation function.

To analyze the performance of the modified neural network, assume that the original neural network has the following properties:

-

A1.

The activation functions are -Lipschitz, i.e., for all , .

-

A2.

The Frobenius norms of the weights of the neural network are bounded, i.e., for some constant , , for all layers .

-

A3.

The signals in the neural networks are bounded, i.e., there exists a constant such that for input signal , and forward signals (outputs of the hidden layers), for .

Moreover, suppose that the parameters and at each call of the RePurpose are adjusted such that the solution of Lagrangian formulation (6), given by RePurpose, is also the solution of the following constrained optimization problem

| (10) |

Hence, by Alg. 2 and the cascade application of RePurpose, the modified weight matrix of the -th layer of neural network satisfies . For the simplicity in notations, let .

Theorem 3.

For an input data , let and be the outputs of the original and RePurposed neural network, respectively. If is the permutation of the final output neurons in the RePurposed neural network, then under assumptions A1-3,

| (11) |

Especially, if the parameters of the neural network are normalized such that , then

Proof.

Let and be the signals in the original and modified neural network, corresponding to the input . Note that and the input to both networks are the same, . Let and be the permutation matrix and parameters of the modified neural network, found via (3). Therefore, using , for any arbitrary layer ,

where is because for arbitrary permutation and vector , is because is -Lipschitz, is due to the fact that , is from for arbitrary and , and is by assumption A2 and (3). Therefore,

| (12) |

Since , (12) implies that

| (13) |

Specifically, for the output signals, and , it implies that

∎

Therefore, if the hyperparameters of RePurpose are chosen carefully, we can ensure that the output of the modified neural network is close to the original model (after accounting for the possible rearrangement of the neurons of the output layer).

Appendix D Proofs of the Main Results

D.1 Proof of Lemma 1

Lemma.

The solution of

| (14) |

is given by element-wise hard-thresholding , i.e.,

| (15) |

where or , depending on whether neuron is in or not.

Proof.

Let be the indexes in the -th block. Therefore, consists of elements of that are not in the set , and

where if is true and is otherwise. Therefore, the minimization in (14) can be cast as separate minimizations over scalars . For example, if , there are two possibilities for ,

Hence, the solution would be

Similarly,

∎

D.2 Proof of Theorem 2

Theorem.

Algorithm 1 finds the optimum solution of

| (16) |

with time complexity , where is the number of layer’s neurons (number of columns of ).

Proof.

First, we note that for any permutation matrix , , , and . Therefore, by defining , the optimization (6) can be rewritten as

On the other hand, recall that , and hence if is from the -th sub-block, i.e., it corresponds to the -th worker, the inner minimization would be

| (17) |

By repeating the -th row of matrix whose elements are defined as (17) to construct the new matrix , we will have . Therefore,

As a result, selecting the best neuron assignment boils down to choosing elements from such that from each row or column only one element is selected and the sum of the selected values is minimum. This problem can be solved efficiently in polynomial time using the Hungarian algorithm. Tomizawa [1971], Jonker and Volgenant [1987] solve the assignment algorithm with time complexity. Since the complexity of creating is at most , the total complexity of Algorithm 1 would be . ∎

Appendix E Reduction in Computational Complexity

One major benefit of applying RePurpose, as demonstrated in simulations, is the reduction in the computational complexity. For the sake of simplicity, assume that there are workers. Recall that the computations at worker is given as . By the application of RePurpose to the weight matrix , the off-diagonal blocks, and , become sparse. Let be the indexes of the columns of which are non-zero, and define to be the restriction of to those non-zero columns. Similarly, define to be the restriction of to the indexes given by . Therefore, can be more efficiently calculated as . If is an matrix, the computational complexity and the communication requirement of the cross-term in the original calculation would be and , respectively. RePurpose reduces these complexities to and . As shown in simulations, the set can be extremely small, making the computational complexity of the cross-term negligible. For example, in applying the proposed technique to an matrix to distributed its computations over workers, if the number of cross dependencies are reduced by a factor of , then the computational complexity of matrix multiplication would be reduced to per worker, almost times reduction from in naive parallel implementation.

Appendix F Extension of RePurpose to Convolutional Layers

Consider a convolutional layer whose input consists of channels of -dimensional tensors and its output has channels. Let be the kernel. For the sake of simplicity in notations, we ignore strides and dilation in convolution operator. Hence, the output would be

where is the input -dimensional tensor with channels and is the output tensor.

Note that due to the nature of the convolution operator, it is not possible to rearrange the neurons within each channel (e.g., changing locations of pixels in images). However, we propose to change the order of the channels. Note that the convolution can be rewritten as

where is the kernel connecting input channel to output channel , is the -th channel of the input tensor, and is the -th output channel. Now, similar to (4), we can define the cost of assigning channel to the -th worker as follows:

| (18) |

where is the set of input channels located at the -th worker, and if is true, and is , otherwise. Note that for the convolutional layers, we treat the individual filters as a whole, and the entire channel filter may be set to zero, not the individual coefficients. The solution of (18) is given by hard-thresholding,

| (19) |

where if and , otherwise.

With the new assignment cost, RePurpose for convolutional layers is simply given as in Alg. 1.