Resource-Aware Discretization of Accelerated Optimization Flows

Abstract

This paper tackles the problem of discretizing accelerated optimization flows while retaining their convergence properties. Inspired by the success of resource-aware control in developing efficient closed-loop feedback implementations on digital systems, we view the last sampled state of the system as the resource to be aware of. The resulting variable-stepsize discrete-time algorithms retain by design the desired decrease of the Lyapunov certificate of their continuous-time counterparts. Our algorithm design employs various concepts and techniques from resource-aware control that, in the present context, have interesting parallelisms with the discrete-time implementation of optimization algorithms. These include derivative- and performance-based triggers to monitor the evolution of the Lyapunov function as a way of determining the algorithm stepsize, exploiting sampled information to enhance algorithm performance, and employing high-order holds using more accurate integrators of the original dynamics. Throughout the paper, we illustrate our approach on a newly introduced continuous-time dynamics termed heavy-ball dynamics with displaced gradient, but the ideas proposed here have broad applicability to other globally asymptotically stable flows endowed with a Lyapunov certificate.

I Introduction

A recent body of research seeks to understand the acceleration phenomena of first-order discrete optimization methods by means of models that evolve in continuous time. Roughly speaking, the idea is to study the behavior of ordinary differential equations (ODEs) which arise as continuous limits of discrete-time accelerated algorithms. The basic premise is that the availability of the powerful tools of the continuous realm, such as differential calculus, Lie derivatives, and Lyapunov stability theory, can be then brought to bear to analyze and explain the accelerated behavior of these flows, providing insight into their discrete counterparts. Fully closing the circle to provide a complete description of the acceleration phenomenon requires solving the outstanding open question of how to discretize the continuous flows while retaining their accelerated convergence properties. However, the discretization of accelerated flows has proven to be a challenging task, where retaining acceleration seems to depend largely on the particular ODE and the discretization method employed. This paper develops a resource-aware approach to the discretization of accelerated optimization flows.

Literature Review

The acceleration phenomenon goes back to the seminal paper [1] introducing the so-called heavy-ball method, which employed momentum terms to speed up the convergence of the classical gradient descent method. The heavy-ball method achieves optimal convergence rate in a neighborhood of the minimizer for arbitrary convex functions and global optimal convergence rate for quadratic objective functions. Later on, the work [2] proposed the Nesterov’s accelerated gradient method and, employing the technique of estimating sequences, showed that it converges globally with optimal convergence rate for convex and strongly-convex smooth functions. The algebraic nature of the technique of estimating sequences does not fully explain the mechanisms behind the acceleration phenomenon, and this has motivated many approaches in the literature to provide fundamental understanding and insights. These include coupling dynamics [3], dissipativity theory [4], integral quadratic constraints [5, 6], and geometric arguments [7].

Of specific relevance to this paper is a recent line of research initiated by [8] that seeks to understand the acceleration phenomenon in first-order optimization methods by means of models that evolve in continuous time. [8] introduced a second-order ODE as the continuous limit of Nesterov’s accelerated gradient method and characterized its accelerated convergence properties using Lyapunov stability analysis. The ODE approach to acceleration now includes the use of Hamiltonian dynamical systems [9, 10], inertial systems with Hessian-driven damping [11], and high-resolution ODEs [12, 13]. This body of research is also reminiscient of the classical dynamical systems approach to algorithms in optimization, see [14, 15]. The question of how to discretize the continuous flows while maintaining their accelerated convergence rates has also attracted significant attention, motivated by the ultimate goal of fully understanding the acceleration phenomenon and taking advantage of it to design better optimization algorithms. Interestingly, discretizations of these ODEs do not necessarily lead to acceleration [16]. In fact, explicit discretization schemes, like forward Euler, can even become numerically unstable after a few iterations [17]. Most of the discretization approaches found in the literature are based on the study of well-known integrators, including symplectic integrators [9, 18], Runge-Kutta integrators [19] or modifications of Nesterov’s three sequences [17, 18, 20]. Our previous work [21] instead developed a variable-stepsize discretization using zero-order holds and state-triggers based on the derivative of the Lyapunov function of the original continuous flow. Here, we provide a comprehensive approach based on powerful tools from resource-aware control, including performance-based triggering and state holds that more effectively use sampled information. Other recent approaches to the acceleration phenomena and the synthesis of optimization algorithms using control-theoretic notions and techniques include [22], which employs hybrid systems to design a continuous-time dynamics with a feedback regulator of the viscosity of the heavy-ball ODE to guarantee arbitrarily fast exponential convergence, and [23], which introduced an algorithm which alternates between two (one fast when far from the minimizer but unstable, and another slower but stable around the minimizer) continuous heavy-ball dynamics.

Statement of Contributions

This paper develops a resource-aware control framework to the discretization of accelerated optimization flows that fully exploits their dynamical properties. Our approach relies on the key observation that resource-aware control provides a principled way to go from continuous-time control design to real-time implementation with stability and performance guarantees by opportunistically prescribing when certain resource should be employed. In our treatment, the resource to be aware of is the last sampled state of the system, and hence what we seek to maximize is the stepsize of the resulting discrete-time algorithm. Our first contribution is the introduction of a second-order differential equation which we term heavy-ball dynamics with displaced gradient. This dynamics generalizes the continuous-time heavy-ball dynamics analyzed in the literature by evaluating the gradient of the objective function taking into account the second-order nature of the flow. We establish that the proposed dynamics retains the same convergence properties as the original one while providing additional flexibility in the form of a design parameter.

Our second contribution uses trigger design concepts from resource-aware control to synthesize criteria that determine the variable stepsize of the discrete-time implementation of the heavy-ball dynamics with displaced gradient. We refer to these criteria as event- or self-triggered, depending on whether the stepsize is implicitly or explicitly defined. We employ derivative- and performance-based triggering to ensure the algorithm retains the desired decrease of the Lyapunov function of the continuous flow. In doing so, we face the challenge that the evaluation of this function requires knowledge of the unknown optimizer of the objective function. To circumvect this hurdle, we derive bounds on the evolution of the Lyapunov function that can be evaluated without knowledge of the optimizer. We characterize the convergence properties of the resulting discrete-time algorithms, establishing the existence of a minimum inter-event time and performance guarantees with regards to the objective function.

Our last two contributions provide ways of exploiting the sampled information to enhance the algorithm performance. Our third contribution provides an adaptive implementation of the algorithms that adaptively adjusts the value of the gradient displacement parameter depending on the region of the space to which the state belongs. Our fourth and last contribution builds on the fact that the continuous-time heavy-ball dynamics can be decomposed as the sum of a second-order linear dynamics with a nonlinear forcing term corresponding to the gradient of the objective function. Building on this observation, we provide a more accurate hold for the resource-aware implementation by using the samples only on the nonlinear term, and integrating exactly the resulting linear system with constant forcing. We establish the existence of a minimum inter-event time and characterize the performance with regards to the objective function of the resulting high-order-hold algorithm. Finally, we illustrate the proposed optimization algorithms in simulation, comparing them against the heavy-ball and Nesterov’s accelerated gradient methods and showing superior performance to other discretization methods proposed in the literature.

II Preliminaries

This section presents basic notation and preliminaries.

II-A Notation

We denote by and the sets of real and positive real numbers, resp. All vectors are column vectors. We denote their scalar product by . We use to denote the -norm in Euclidean space. Given , a continuously differentiable function is -strongly convex if for , . Given and a function between two normed spaces and (), is -Lipschitz if for , . The functions we consider here are continuously differentiable, -strongly convex and have -Lipschitz continuous gradient. We refer to the set of functions with all these properties by . A function is positive definite relative to if and for .

II-B Resource-Aware Control

Our work builds on ideas from resource-aware control to develop discretizations of continuous-time accelerated flows. Here, we provide a brief exposition of its basic elements and refer to [24, 25] for further details.

Given a controlled dynamical system , with and , assume we are given a stabilizing continuous state-feedback so that the closed-loop system has as a globally asymptotically stable equilibrium point. Assume also that a Lyapunov function is available as a certificate of the globally stabilizing nature of the controller. Here, we assume this takes the form

| (1) |

for all . Although exponential decay of along the system trajectories is not necessary, we restrict our attention to this case as it arises naturally in our treatment.

Suppose we are given the task of implementing the controller signal over a digital platform, meaning that the actuator cannot be continuously updated as prescribed by the specification . In such case, one is forced to discretize the control action along the execution of the dynamics, while making sure that stability is still preserved. A simple-to-implement approach is to update the control action periodically, i.e., fix , sample the state as and implement

This approach requires to be small enough to ensure that remains a Lyapunov function and, consequently, the system remains stable. By contrast, in resource-aware control, one employs the information generated by the system along its trajectory to update the control action in an opportunistic fashion. Specifically, we seek to determine in a state-dependent fashion a sequence of times , not necessarily uniformly spaced, such that remains a globally asymptotically stable equilibrium for the system

| (2) |

The main idea to accomplish this is to let the state sampling be guided by the principle of maintaining the same type of exponential decay (1) along the new dynamics. To do this, one defines triggers to ensure that this decay is never violated by prescribing a new state sampling. Formally, one sets and , where the stepsize is defined by

| (3) |

We refer to the criteria as event-triggering or self-triggering depending on whether the evaluation of the function requires monitoring of the state along the trajectory of (2) (ET) or just knowledge of its initial condition (ST). The more stringent requirements to implement event-triggering lead to larger stepsizes versus the more conservative ones characteristic of self-triggering. In order for the state sampling to be implementable in practice, the inter-event times must be uniformly lower bounded by a positive minimum inter-event time, abbreviated MIET. In particular, the existence of a MIET rules out the existence of Zeno behavior, i.e., the possibility of an infinite number of triggers in a finite amount of time.

Depending on how the evolution of the function is examined, we describe two types of triggering conditions111In both cases, for a given , we let denote the solution of with initial condition .: {LaTeXdescription}

In this case, is defined as an upper bound of the expression . This definition ensures that (1) is maintained along (2);

In this case, is defined as an upper bound of the expression . Note that this definition ensures that the integral version of (1) is maintained along (2). In general, the performance-based trigger gives rise to stepsizes that are at least as large as the ones determined by the derivative-based approach, cf. [26]. This is because the latter prescribes an update as soon as the exponential decay is about to be violated, and therefore, does not take into account the fact that the Lyapunov function might have been decreasing at a faster rate since the last update. Instead, the performance-based approach reasons over the accumulated decay of the Lyapunov function since the last update, potentially yielding longer inter-sampling times.

A final point worth mentioning is that, in the event-triggered control literature, the notion of resource to be aware of can be many different things, beyond the actuator described above, including the sensor, sensor-controller communication, communication with other agents, etc. This richness opens the way to explore more elaborate uses of the sampled information beyond the zero-order hold in (2), something that we also leverage later in our presentation.

III Problem Statement

Our motivation here is to show that principled approaches to discretization can retain the accelerated convergence properties of continuous-time dynamics, fill the gap between the continuous and discrete viewpoints on optimization algorithms, and lead to the construction of new ones. Throughout the paper, we focus on the continuous-time version of the celebrated heavy-ball method [1]. Let be a function in and let be its unique minimizer. The heavy-ball method is known to have an optimal convergence rate in a neighborhood of the minimizer. For its continuous-time counterpart, consider the following -dependent family of second-order differential equations, with , proposed in [12],

| (4a) | ||||

| (4b) | ||||

We refer to this dynamics as . The following result characterizes the convergence properties of (4) to .

Theorem III.1 ([12]).

Let be

| (5) |

which is positive definite relative to . Then along the dynamics (4) and, as a consequence, is globally asymptotically stable. Moreover, for , the exponential decrease of implies

| (6) |

This result, along with analogous results [12] for the Nesterov’s accelerated gradient descent, serves as an inspiration to build Lyapunov functions that help to explain the accelerated convergence rate of the discrete-time methods.

Inspired by the success of resource-aware control in developing efficient closed-loop feedback implementations on digital systems, here we present a discretization approach to accelerated optimization flows using resource-aware control. At the basis of the approach taken here is the observation that the convergence rate (6) of the continuous flow is a direct consequence of the Lyapunov nature of the function (III.1). In fact, the integration of along the system trajectories yields

Since , we deduce

The characterization of the convergence rate via the decay of the Lyapunov function is indeed common among accelerated optimization flows. This observation motivates the resource-aware approach to discretization pursued here, where the resource that we aim to use efficiently is the sampling of the state itself. By doing so, the ultimate goal is to give rise to large stepsizes that take maximum advantage of the decay of the Lyapunov function (and consequently of the accelerated nature) of the continuous-time dynamics in the resulting discrete-time implementation.

IV Resource-Aware Discretization of Accelerated Optimization Flows

In this section we propose a discretization of accelerated optimization flows using state-dependent triggering and analyze the properties of the resulting discrete-time algorithm. For convenience, we use the shorthand notation . In following with the exposition in Section II-B, we start by considering the zero-order hold implementation , of the heavy-ball dynamics (4),

| (7a) | ||||

| (7b) | ||||

Note that the solution trajectory takes the form , which in discrete-time terminology corresponds to a forward-Euler discretization of (4). Component-wise, we have

As we pointed out in Section II-B, the use of sampled information opens the way to more elaborated constructions than the zero-order hold in (7). As an example, given the second-order nature of the heavy-ball dynamics, it would seem reasonable to leverage the (position, velocity) nature of the pair (meaning that, at position , the system is moving with velocity ) by employing the modified zero-order hold

| (8a) | ||||

| (8b) | ||||

where . Note that the trajectory of (8) corresponds to the forward-Euler discretization of the continuous-time dynamics

| (9) |

We refer to this as the heavy-ball dynamics with displaced gradient and denote it by (note that (8) and (9) with recover (7) and (4), respectively). In order to pursue the resource-aware approach laid out in Section II-B with the modified zero-order hold in (8), we need to characterize the asymptotic convergence properties of the heavy-ball dynamics with displaced gradient, which we tackle next.

Remark IV.1.

(Connection between the use of sampled information and high-resolution-ODEs). A number of works [27, 28, 29] have explored formulations of Nesterov’s accelerated that employ displaced-gradient-like terms similar to the one used above. Here, we make this connection explicit. Given Nesterov’s algorithm

the work [12] obtains the following limiting high-resolution ODE

| (10) |

Interestingly, considering instead the evolution of the -variable and applying similar arguments to the ones in [12], one instead obtains

| (11) |

which corresponds to the continuous heavy-ball dynamics in (4) evaluated with a displaced gradient, i.e., (9). Even further, if we Taylor expand the last term in (11) as

and disregard the term, we recover (10). This shows that (11) is just (10) with extra higher-order terms in , and provides evidence of the role of gradient displacement in enlarging the modeling capabilities of high-resolution ODEs.

IV-A Asymptotic Convergence of Heavy-Ball Dynamics with Displaced Gradient

In this section, we study the asymptotic convergence of heavy-ball dynamics with displaced gradient. Interestingly, for sufficiently small, this dynamics enjoys the same convergence properties as the dynamics (4), as the following result shows.

Theorem IV.2.

(Global asymptotic stability of heavy-ball dynamics with displaced gradient). Let be

where, for brevity, , and define

| (12) |

Then, for , along the dynamics (9) and, as a consequence, is globally asymptotically stable. Moreover, for , the exponential decrease of implies (6) holds along the trajectories of .

Proof.

Note that

where in the second equality, we have added and subtracted . Observe that “Term I” corresponds to and is therefore negative by Theorem III.1. From [21], this term can be bounded as

| Term I | |||

Let us study the other two terms. By strong convexity, we have , and therefore

| Term II |

Regarding Term III, one can use the -Lipschitzness of and strong convexity to obtain

| Term III |

Now, using the notation in the statement, we can write

| (13) | ||||

If , then the RHS of (13) is negative for any . If , the RHS of (13) is negative if and only if

The RHS of this equation corresponds to , with the function defined in (A.3). From Lemma A.1, as long as , this function is lower bounded by

where is defined in (A.4). This exactly corresponds to (12), concluding the result. ∎

Remark IV.3.

(Adaptive displacement along the trajectories of heavy-ball dynamics with displaced gradient). From the proof of Theorem IV.2, one can observe that if is such that and , for , then one can upper bound the LHS of (13) by

If , any makes this expression negative. If instead , then must satisfy

| (14) |

This argument shows that over the region , any satisfying (14) ensures that , and hence the desired exponential decrease of the Lyapunov function. This observation opens the way to modify the value of the parameter adaptively along the execution of the heavy-ball dynamics with displaced gradient, depending on the region of state space visited by its trajectories.

IV-B Triggered Design of Variable-Stepsize Algorithms

In this section we propose a discretization of the continuous heavy-ball dynamics based on resource-aware control. To do so, we employ the approaches to trigger design described in Section II-B on the dynamics , whose forward-Euler discretization corresponds to the modified zero-order hold (8) of the heavy-ball dynamics.

Our starting point is the characterization of the asymptotic convergence properties of developed in Section IV-A. The trigger design necessitates of bounding the evolution of the Lyapunov function in (III.1) for the continuous-time heavy-ball dynamics with displaced gradient along its zero-order hold implementation. However, this task presents the challenge that the definition of involves the minimizer of the optimization problem itself, which is unknown (in fact, finding it is the ultimate objective of the discrete-time algorithm we seek to design). In order to synthesize computable triggers, this raises the issue of bounding the evolution of as accurately as possible while avoiding any requirement on the knowledge of . The following result, whose proof is presented in Appendix A, addresses this point.

Proposition IV.4.

(Upper bound for derivative-based triggering with zero-order hold). Let and define

where

Let be the trajectory of the zero-order hold dynamics , . Then, for ,

The importance of Proposition IV.4 stems from the fact that the triggering conditions defined by , , can be evaluated without knowledge of the optimizer . We build on this result next to establish an upper bound for the performance-based triggering condition.

Proposition IV.5.

(Upper bound for performance-based triggering with zero-order hold). Let and

for . Let be the trajectory of the zero-order hold dynamics , . Then, for ,

Proof.

We rewrite , and note that

Note that the integrand corresponds to the derivative-based criterion bounded in Proposition IV.4. Therefore,

for , and the result follows. ∎

Propositions IV.4 and IV.5 provide us with the tools to determine the stepsize according to the derivative- and performance-based triggering criteria, respectively. For convenience, and following the notation in (3), we define the stepsizes

| (15a) | ||||

| (15b) | ||||

for . Observe that, as long as and , we have for and, as a consequence, . The ET/ST terminology is justified by the following observation: in the ET case, the equation defining the stepsize is in general implicit in . Instead, in the ST case, the equation defining the stepsize is explicit in . Equipped with this notation, we define the variable-stepsize algorithm described in Algorithm 1, which consists of following the dynamics (8) until the exponential decay of the Lyapunov function is violated as estimated by the derivative-based () or the performance-based () triggering condition. When this happens, the algorithm re-samples the state before continue flowing along (8).

IV-C Convergence Analysis of Displaced-Gradient Algorithm

Here we characterize the convergence properties of the derivative- and performance-based implementations of the Displaced-Gradient Algorithm. In each case, we show that algorithm is implementable (i.e., it admits a MIET) and inherits the convergence rate from the continuous-time dynamics. The following result deals with the derivative-based implementation of Algorithm 1.

Theorem IV.6.

(Convergence of derivative-based implementation of Displaced-Gradient Algorithm). Let be

and define

| (16) |

with . Then, for , , and , the variable-stepsize strategy in Algorithm 1 has the following properties

-

(i)

the stepsize is uniformly lower bounded by the positive constant , where

(17) , , and

-

(ii)

for all and .

As a consequence, .

Proof.

Regarding fact (i), we prove the result for the -case, as the -case follows from . We start by upper bounding by a negative quadratic function of and as follows,

Using the -Lipschitzness of the gradient and Young’s inequality, we can easily upper bound

| (a) | |||

| (b) | |||

| (c) | |||

| (d) | |||

Note that, with the definition of the constants in the statement, we can write

Therefore, for , we have

and hence . Similarly, introducing

one can show that

Thus, from (15a), we have

| (18) | ||||

Using now [21, supplementary material, Lemma 1], we deduce

where

With these elements in place and referring to (18), we have

We observe now that is monotonically decreasing and lower bounded. So, if is upper bounded, then is lower bounded by a positive constant. Taking gives the bound of the stepsize. Finally, the algorithm design together with Proposition IV.4 ensure fact (ii) throughout its evolution. ∎

It is worth noticing that the derivative-based implementation of the Displaced-Gradient Algorithm generalizes the algorithm proposed in our previous work [21] (in fact, the strategy proposed there corresponds to the choice ). The next result characterizes the convergence properties of the performance-based implementation of Algorithm 1.

Theorem IV.7.

(Convergence of performance-based implementation of Displaced-Gradient Algorithm). For , , and , the variable-stepsize strategy in Algorithm 1 has the following properties

-

(i)

the stepsize is uniformly lower bounded by the positive constant ;

-

(ii)

for all and .

As a consequence, .

Proof.

To show (i), notice that it is sufficient to prove that is uniformly lower bounded away from zero. This is because of the definition of stepsize in (15b) and the fact that for all and all . For an arbitrary fixed , note that is strictly negative in the interval given the definition of stepsize in (15a). Consequently, the function is strictly negative over . From the definition of , it then follows that . The result now follows by noting that is uniformly lower bounded away from zero by a positive constant, cf. Theorem IV.6(i).

The proof of Theorem IV.7 brings up an interesting geometric interpretation of the relationship between the stepsizes determined according to the derivative- and performance-based approaches. In fact, since

we observe that is precisely the (positive) critical point of . Therefore, is the smallest nonzero root of , whereas is the time where achieves its smallest value, and consequently is furthest away from zero. This confirms the fact that the performance-based approach obtains larger stepsizes than the derivative-based approach.

V Exploiting Sampled Information to Enhance Algorithm Performance

Here we describe two different refinements of the implementations proposed in Section IV to further enhance their performance. Both of them are based on further exploiting the sampled information about the system. The first refinement, cf. Section V-A, looks at the possibility of adapting the value of the gradient displacement as the algorithm is executed. The second refinement, cf. Section V-B, develops a high-order hold that more accurately approximates the evolution of the continuous-time heavy-ball dynamics with displaced gradient.

V-A Adaptive Gradient Displacement

The derivative- and performance-based triggered implementations in Section IV-B both employ a constant value of the parameter . Here, motivated by the observation made in Remark IV.3, we develop triggered implementations that adaptively adjust the value of the gradient displacement depending on the region of the space to which the state belongs. Rather than relying on the condition (14), which would require partitioning the state space based on bounds on and , we seek to compute on the fly a value of the parameter that ensures the exponential decrease of the Lyapunov function at the current state. Formally, the strategy is stated in Algorithm 2.

Proposition V.1.

(Convergence of Adaptive Displaced-Gradient Algorithm). For , , and , the variable-stepsize strategy in Algorithm 2 has the following properties:

-

(i)

it is executable (i.e., at each iteration, the parameter is determined in a finite number of steps);

-

(ii)

the stepsize is uniformly lower bounded by ;

-

(iii)

it satisfies , for .

Proof.

Notice first that the function defined in (17) is continuous and therefore attains its minimum over a compact set. At each iteration, Algorithm 2 first ensures that , decreasing if this is not the case. We know this process is guaranteed as soon as (cf. proof of Theorem IV.6) and hence only takes a finite number of steps. Once , the stepsize could be computed to guarantee the desired decrease of the Lyapunov function . The algorithm next checks if the stepsize is lower bounded by . If that is not the case, then the algorithm reduces and re-checks if . With this process and in a finite number of steps, the algorithm eventually either computes a stepsize lower bounded by with or decreases enough to make , for which we know that the stepsize is already lower bounded by . These arguments establish facts (i) and (ii) at the same time. Finally, fact (iii) is a consequence of the prescribed decreased of the Lyapunov function along the algorithm execution. ∎

V-B Discretization via High-Order Hold

The modified zero-order hold based on employing displaced gradients developed in Section IV is an example of the possibilities enabled by more elaborate uses of sampled information. In this section, we propose another such use based on the observation that the continuous-time heavy-ball dynamics can be decomposed as the sum of a linear term and a nonlinear term. Specifically, we have

Note that the first term in this decomposition is linear, whereas the other one contains the potentially nonlinear gradient term that complicates finding a closed-form solution. Keeping this in mind when considering a discrete-time implementation, it would seem reasonable to perform a zero-order hold only on the nonlinear term while exactly integrating the resulting differential equation. Formally, a zero-order hold at of the nonlinear term above yields a system of the form

| (19) |

with , and where

Equation (19) is an in-homogeneous linear dynamical system, which is integrable by the method of variation of constants [30]. Its solution is given by , or equivalently,

| (20a) | ||||

| (20b) | ||||

We refer to this trajectory as a high-order-hold integrator. In order to develop a discrete-time algorithm based on this type of integrator, the next result provides a bound of the evolution of the Lyapunov function along the high-order-hold integrator trajectories. The proof is presented in Appendix A.

Proposition V.2.

(Upper bound for derivative-based triggering with high-order hold). Let and define

where

and

Let be the high-order-hold integrator trajectory (20) from . Then, for ,

Analogously to what we did in Section IV-B, we build on this result to establish an upper bound for the performance-based triggering condition with the high-order-hold integrator.

Proposition V.3.

(Upper bound for performance-based triggering with high-order hold). Let and

| (21) |

for . Let be the high-order-hold integrator trajectory (20) from . Then, for ,

Using Proposition V.2, the proof of this result is analogous to that of Proposition IV.5, and we omit it for space reasons. Propositions V.2 and V.3 are all we need to fully specify the variable-stepsize algorithm based on high-order-hold integrators. Formally, we set

| (22) |

for and . With this in place, we design Algorithm 3, which is a higher-order counterpart to Algorithm 2, and whose convergence properties are characterized in the following result.

Proposition V.4.

(Convergence of Adaptive High-Order-Hold Algorithm). For , and , there exists such that for , the variable-stepsize strategy in Algorithm 3 has the following properties:

-

(i)

it is executable (i.e., at each iteration, the parameter is determined in a finite number of steps);

-

(ii)

the stepsize is uniformly lower bounded by ;

-

(iii)

it satisfies , for .

We omit the proof of this result, which is analogous to that of Proposition V.1, with lengthier computations.

VI Simulations

Here we illustrate the performance of the methods resulting from the proposed resource-aware discretization approach to accelerated optimization flows. Specifically, we simulate in two examples the performance-based implementation of the Displaced Gradient algorithm (denoted DG) and the derivative- and performance-based implementations of the High-Order-Hold (HOH and HOH respectively) algorithms. We compare these algorithms against the Nesterov’s accelerated gradient and the heavy-ball methods, as they still exhibit similar or superior performance to the discretization approaches proposed in the literature, cf. Section I.

Optimization of Ill-Conditioned Quadratic Objective Function

Consider the optimization of the objective function defined by . Note that and . We use and initialize the velocity according to (4b). For DG, HOH, and HOH, we set and implement the event-triggered approach (at each iteration, we employ a numerical zero-finding routine to explicitly determine the stepsizes , , and , respectively).

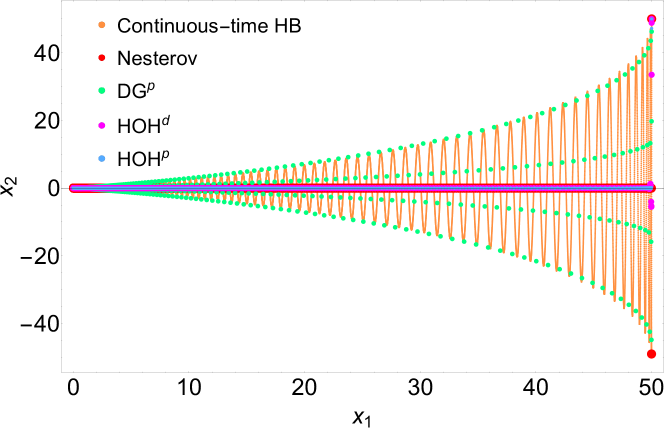

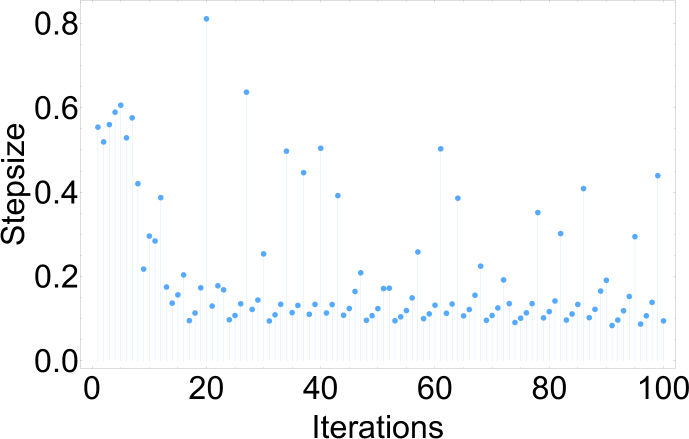

Figure 1(a) illustrates how the stepsize of HOH changes during the first iterations. After the tuning of the stepsize during the first iterations, it becomes quite steady (likely due to the simplicity of quadratic functions) until the trajectory approaches the minimizer. After iterations, the algorithm stepsize becomes almost equal to the optimal stepsize.

Figure 1(b) compares the performance of DG, HOH, and HOH against the continuous heavy-ball method and the discrete Nesterov method for strongly convex functions. The DG algorithm takes large stepsizes following the evolution of the continuous heavy-ball along the straight lines . Meanwhile, the higher-order nature of the hold employed by HOH and HOH makes them able to leap over the oscillations, yielding a state evolution similar to Nesterov’s method. Figure 2 shows the evolution of the objective and Lyapunov functions. We observe that after some initial iterations, HOH outperforms Nesterov’s method. Eventually, also DG catches up to Nesterov’s method.

Logarithmic Regression

Consider the optimization of the regularized logistic regression cost function defined by , where the points are generated randomly using a uniform distribution in the interval , and the points are generated similarly with quantized values. This objective function is -strongly convex and one can also compute the value . We use and , and initialize the velocity according to (4b). Figure 3(a) and (b) show the evolution of the stepsize along HOH, which changes as a function of the state looking to satisfy the desired decay of the Lyapunov function.

Figure 4 shows the evolution of the objective and Lyapunov functions. We observe how HOH and HOH outperform Nesterov’s method, although eventually the heavy-ball algorithm performs the best. The Lyapunov function decreases at a much faster rate along HOH and HOH than along DG.

VII Conclusions

We have introduced a resource-aware control framework to the discretization of accelerated optimization flows that specifically takes advantage of their dynamical properties. We have exploited fundamental concepts from opportunistic state-triggering related to the various ways of encoding the notion of valid Lyapunov certificates, the use of sampled-data information, and the construction of state estimators and holders to synthesize variable-stepsize optimization algorithms that retain by design the convergence properties of their continuous-time counterparts. We believe these results open the way to a number of exciting research directions. Among them, we highlight the design of adaptive learning schemes to refine the use of sampled data and optimize the algorithm performance with regards to the objective function, the characterization of accelerated convergence rates, employing tools and insights from hybrid systems for analysis and design, enriching the proposed designs by incorporating re-start schemes as triggering conditions to avoid overshooting and oscillations, and developing distributed implementations for network optimization problems.

References

- [1] B. T. Polyak, “Some methods of speeding up the convergence of iterative methods,” USSR Computational Mathematics and Mathematical Physics, vol. 4, no. 5, pp. 1–17, 1964.

- [2] Y. E. Nesterov, “A method of solving a convex programming problem with convergence rate ,” Soviet Mathematics Doklady, vol. 27, no. 2, pp. 372–376, 1983.

- [3] Z. Allen-Zhu and L. Orecchia, “Linear Coupling: An Ultimate Unification of Gradient and Mirror Descent,” in 8th Innovations in Theoretical Computer Science Conference (ITCS 2017), Dagstuhl, Germany, 2017, pp. 1–22.

- [4] B. Hu and L. Lessard, “Dissipativity theory for Nesterov’s accelerated method,” in International Conference on Machine Learning, International Convention Centre, Sydney, Australia, August 2017, pp. 1549–1557.

- [5] L. Lessard, B. Recht, and A. Packard, “Analysis and design of optimization algorithms via integral quadratic constraints,” SIAM Journal on Optimization, vol. 26, no. 1, pp. 57–95, 2016.

- [6] B. V. Scoy, R. A. Freeman, and K. M. Lynch, “The fastest known globally convergent first-order method for minimizing strongly convex functions,” IEEE Control Systems Letters, vol. 2, no. 1, pp. 49–54, 2018.

- [7] S. Bubeck, Y. Lee, and M. Singh, “A geometric alternative to Nesterov’s accelerated gradient descent,” arXiv preprint arXiv:1506.08187, 2015.

- [8] W. Su, S. Boyd, and E. J. Candès, “A differential equation for modeling Nesterov’s accelerated gradient method: theory and insights,” Journal of Machine Learning Research, vol. 17, pp. 1–43, 2016.

- [9] M. Betancourt, M. Jordan, and A. C. Wilson, “On symplectic optimization,” arXiv preprint arXiv: 1802.03653, 2018.

- [10] C. J. Maddison, D. Paulin, Y. W. Teh, B. O’Donoghue, and A. Doucet, “Hamiltonian descent methods,” arXiv preprint arXiv:1809.05042, 2018.

- [11] H. Attouch, Z. Chbani, J. Fadili, and H. Riahi, “First-order optimization algorithms via inertial systems with Hessian driven damping,” arXiv preprint arXiv:1907.10536, 2019.

- [12] B. Shi, S. S. Du, M. I. Jordan, and W. J. Su, “Understanding the acceleration phenomenon via high-resolution differential equations,” arXiv preprint arXiv:1810.08907, 2018.

- [13] B. Sun, J. George, and S. Kia, “High-resolution modeling of the fastest first-order optimization method for strongly convex functions,” arXiv preprint arXiv:2008.11199, 2020.

- [14] R. W. Brockett, “Dynamical systems that sort lists, diagonalize matrices, and solve linear programming problems,” Linear Algebra and its Applications, vol. 146, pp. 79–91, 1991.

- [15] U. Helmke and J. B. Moore, Optimization and Dynamical Systems. Springer, 1994.

- [16] B. Shi, S. S. Du, M. I. Jordan, and W. J. Su, “Acceleration via symplectic discretization of high-resolution differential equations,” arXiv preprint arXiv:1902.03694, 2019.

- [17] A. Wibisono, A. C. Wilson, and M. I. Jordan, “A variational perspective on accelerated methods in optimization,” Proceedings of the National Academy of Sciences, vol. 113, no. 47, pp. E7351–E7358, 2016.

- [18] A. Wilson, L. Mackey, and A. Wibisono, “Accelerating rescaled gradient descent: Fast optimization of smooth functions,” in Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché Buc, E. Fox, and R. Garnett, Eds., vol. 31. Curran Associates, Inc., 2019, pp. 13 555–13 565.

- [19] J. Zhang, A. Mokhtari, S. Sra, and A. Jadbabaie, “Direct Runge-Kutta discretization achieves acceleration,” in Advances in Neural Information Processing Systems, S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, Eds., vol. 31. Curran Associates, Inc., 2018, pp. 3900–3909.

- [20] A. C. Wilson, B. Recht, and M. I. Jordan, “A Lyapunov analysis of momentum methods in optimization,” arXiv preprint arXiv:1611.02635, 2018.

- [21] M. Vaquero and J. Cortés, “Convergence-rate-matching discretization of accelerated optimization flows through opportunistic state-triggered control,” in Advances in Neural Information Processing Systems, H. Wallach, H. Larochelle, A. Beygelzimer, F. d’Alché Buc, E. Fox, and R. Garnett, Eds. Curran Associates, Inc., 2019, vol. 32, pp. 9767–9776.

- [22] A. S. Kolarijani, P. M. Esfahani, and T. Keviczky, “Fast gradient-based methods with exponential rate: a hybrid control framework,” in International Conference on Machine Learning, July 2018, pp. 2728–2736.

- [23] D. Hustig-Schultz and R. G. Sanfelice, “A robust hybrid Heavy-Ball algorithm for optimization with high performance,” in American Control Conference, July 2019, pp. 151–156.

- [24] W. P. M. H. Heemels, K. H. Johansson, and P. Tabuada, “An introduction to event-triggered and self-triggered control,” in IEEE Conf. on Decision and Control, Maui, HI, 2012, pp. 3270–3285.

- [25] C. Nowzari, E. Garcia, and J. Cortés, “Event-triggered control and communication of networked systems for multi-agent consensus,” Automatica, vol. 105, pp. 1–27, 2019.

- [26] P. Ong and J. Cortés, “Event-triggered control design with performance barrier,” in IEEE Conf. on Decision and Control, Miami Beach, FL, Dec. 2018, pp. 951–956.

- [27] M. Laborde and A. Oberman, “A Lyapunov analysis for accelerated gradient methods: From deterministic to stochastic case,” in AISTATS, vol. 108, Online, 2020, pp. 602–612.

- [28] M. Muehlebach and M. Jordan, “A dynamical systems perspective on Nesterov acceleration,” in International Conference on Machine Learning, vol. 97, Long Beach, California, USA, 2019, pp. 4656–4662.

- [29] I. Sutskever, J. Martens, G. Dahl, and G. Hinton, “On the importance of initialization and momentum in deep learning,” in International Conference on Machine Learning, vol. 28, Atlanta, Georgia, USA, 2013, pp. 1139–1147.

- [30] L. Perko, Differential Equations and Dynamical Systems, 3rd ed., ser. Texts in Applied Mathematics. New York: Springer, 2000, vol. 7.

- [31] S. Lang, Real and Functional Analysis, 3rd ed. New York: Springer, 1993.

Appendix A

Throughout the appendix, we make use of a number of basic facts that we gather here for convenience,

| (A.1a) | ||||

| (A.1b) | ||||

| (A.1c) | ||||

| (A.1d) | ||||

| (A.1e) | ||||

We also resort at various points to the expression of the gradient of ,

| (A.2) |

The following result is used in the proof of Theorem IV.2.

Lemma A.1.

For , the function

| (A.3) |

is positively lower bounded on .

Proof.

The derivative of is

The solutions to are then given by

| (A.4) |

Note that , is negative on , and positive on . Therefore the minimum value over is achieved at , and corresponds to . ∎

Proof of Proposition IV.4.

We break out as follows

and bound each term separately.

. From the definition (III.1) of and the fact that , we have

Using this bound, we obtain

Writing as and using strong convexity, we can upper bound in the last summand by the expression

Substituting this bound above and re-grouping terms,

Observe that

| (a) | |||

| (b) | |||

where, in the expression of (a), we have expressed as and, in the expression of (b), we have expanded the square and used the Cauchy-Schwartz inequality [31]. Finally, resorting to (A.1), we obtain

. Using (A.2) we have

Therefore, reads

and hence

The RHS of the last expression is precisely . Using the -Lipschitzness of , one can see that .

. From (III.1),

Expanding the squares in the second and third summands, and simplifying, we obtain

Note that

where in the inequality we have used (A.1d) with and . Using this in the equation above, one identifies the expression of . Finally, applying (A.1c), one can show that , concluding the proof. ∎

Proof of Proposition V.2.

For convenience, let

where . We next provide a bound for the expression

Next, we bound each term separately.

. Since , this term is exactly the same as Term I + II + III in the proof of Proposition IV.4, and hence the bound obtained there is valid.

. Using (A.2), we have

from where we obtain . Now, using , the -Lipschitzness of , and the Cauchy-Schwartz inequality, we have

Using (20), the triangle inequality, and , we can write

| (A.5a) | ||||

| (A.5b) | ||||

Substituting into the bound for above, we obtain

as claimed.

. Using (A.2), we have

Taking the absolute value and using the Cauchy-Schwartz inequality in conjunction with (A.5), we obtain the expression corresponding to .

. From (III.1),

Expanding the third summand (using and ) as , we obtain after simplification

| (A.6) | |||

Using (20), we have

where we have used (A.1d) to derive the inequality. Substituting this bound into (A.6), we obtain . To obtain the -expressions, we bound each remaining term separately as follows. Note that

where we have used (A.5a) to obtain the last inequality. Next,

where we have used (A.5b) to obtain the last inequality. Using , we bound

where we have used (A.5). Finally,

Employing these bounds in the expression of , we obtain , as claimed. ∎

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/876cea68-1c0b-4d21-8f49-abac6b6cf5ef/photo-MV.jpg) |

Miguel Vaquero was born in Galicia, Spain. He received his Licenciatura and Master’s degree in mathematics from the Universidad de Santiago de Compostela, Spain and the Ph.D. degree in mathematics from Instituto de Ciencias Matemáticas (ICMAT), Spain in . He was then a postdoctoral scholar working on the ERC project “Invariant Submanifolds in Dynamical Systems and PDE” also at ICMAT. Since October 2017, he has been a postdoctoral scholar at the Department of Mechanical and Aerospace Engineering of UC San Diego. He will start in as an Assistant Professor at the School of Human Sciences and Technology of IE University, Madrid, Spain. His interests include optimization, dynamical systems, control theory, machine learning, and geometric mechanics. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/876cea68-1c0b-4d21-8f49-abac6b6cf5ef/photo-Pol.jpg) |

Pol Mestres was born in Catalonia, Spain in 1997. He is currently a student of the Bachelor’s Degree in Mathematics and the Bachelor’s Degree in Engineering Physics at Universitat Politècnica de Catalunya, Barcelona, Spain. He was a visiting scholar at University of California, San Diego, CA, USA, from September 2019 to March 2020. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/876cea68-1c0b-4d21-8f49-abac6b6cf5ef/photo-JC.jpg) |

Jorge Cortés (M’02, SM’06, F’14) received the Licenciatura degree in mathematics from Universidad de Zaragoza, Zaragoza, Spain, in 1997, and the Ph.D. degree in engineering mathematics from Universidad Carlos III de Madrid, Madrid, Spain, in 2001. He held postdoctoral positions with the University of Twente, Twente, The Netherlands, and the University of Illinois at Urbana-Champaign, Urbana, IL, USA. He was an Assistant Professor with the Department of Applied Mathematics and Statistics, University of California, Santa Cruz, CA, USA, from 2004 to 2007. He is currently a Professor in the Department of Mechanical and Aerospace Engineering, University of California, San Diego, CA, USA. He is the author of Geometric, Control and Numerical Aspects of Nonholonomic Systems (Springer-Verlag, 2002) and co-author (together with F. Bullo and S. Martínez) of Distributed Control of Robotic Networks (Princeton University Press, 2009). He is a Fellow of IEEE and SIAM. At the IEEE Control Systems Society, he has been a Distinguished Lecturer (2010-2014), and is currently its Director of Operations and an elected member (2018-2020) of its Board of Governors. His current research interests include distributed control and optimization, network science, resource-aware control, nonsmooth analysis, reasoning and decision making under uncertainty, network neuroscience, and multi-agent coordination in robotic, power, and transportation networks. |