2021

[1]\fnmXiaoYan \surLiu

1]\orgdivFaculty of Information Engineering and Automation, \orgnameKunming University of Science and Technology, \orgaddress\cityKunming, \postcode650504, \countryChina

Research on Parallel SVM Algorithm Based on Cascade SVM

Abstract

Cascade SVM (CSVM) can group datasets and train subsets in parallel, which greatly reduces the training time and memory consumption. However, the model accuracy obtained by using this method has some errors compared with direct training. In order to reduce the error, we analyze the causes of error in grouping training, and summarize the grouping without error under ideal conditions. A Balanced Cascade SVM (BCSVM) algorithm is proposed, which balances the sample proportion in the subset after grouping to ensure that the sample proportion in the subset is the same as the original dataset. At the same time, it proves that the accuracy of the model obtained by BCSVM algorithm is higher than that of CSVM. Finally, two common datasets are used for experimental verification, and the results show that the accuracy error obtained by using BCSVM algorithm is reduced from 1% of CSVM to 0.1%, which is reduced by an order of magnitude.

keywords:

parallel computing,support vector machine,chunking,balance subset1 Introduction

Support Vector Machines (SVM) vapnik1995 is a very powerful tool in the field of classification and regression, but its calculation and storage requirements grow exponentially with the increase of training samples. The core of support vector machine is a Quadratic Programming (QP) problem. A key factor limiting the application of SVM in large sample problems is that the super-large Quadratic Programming problems induced by SVM training cannot be solved by standard Quadratic Programming methods. SMO algorithm (Sequential Minimal Optimization (SMO)SMO is a large-scale SVM training algorithm. Its advantage is that each step of Optimization only deals with two samples, and it can be solved quickly by analytical methods. Therefore, it is faster than other algorithms in computing large sample sets. However, when the number of samples increases further, the increase of its computational complexity is still much faster than the increase of the number of samples, so it is difficult to meet the actual demand.

Since support vector machines only rely on support vectors in the training sample set to make decisions, non-support vectors have no effect and are just useless information. Removing them does not affect the training results at all. It is the processing of such useless information that occupies the main time of the algorithm, so one of the ideas to speed up SVM training is ”chunking”. The so-called block is to divide the original sample set into many subsets, and only one subset is optimized by the SMO algorithm in each step. The feature that non-support vectors can be removed after training is used to accelerate the training speed.

Wu Xiang et al. wuxiang improved the idea of block algorithm and proposed the block SMO algorithm. The improved algorithm has a very obvious advantage in speed for the large sample problem with a small support vector set. Graf et al. cascadeSVM proposed the cascade support vector machine, which is also an algorithm based on the idea of partitioning. In this algorithm, the data sets are randomly grouped, the subsets after grouping are trained in parallel, and the support vectors obtained by training are combined in pairs. After the combination, the training is repeated to obtain a final training result. Finally, whether the final result is regrouped is determined according to the conditions. In recent years, the cascade support vector machine algorithm has been combined with distributed computing for training, making full use of the advantages of distributed computing that can flexibly schedule multiple computing nodes, greatly reducing the training time. For example, Zhang Pengxiangmapreduce , Liu Zeshan spark , Niehadoop , Bai Yusxin flink and others have implemented corresponding parallel training algorithms based on cascade support vector machines on MapReduce, Spark, Hadoop and Flink platforms respectively. However, because the data set is randomly segmented based on the cascaded support vector machine, there is a certain probability that the real global support vector of the whole sample set will be removed during the training of the subset, and the final model will inevitably have certain errors compared with the direct training.

This paper analyzes the situation of global support vector reservation, proposes Balanced Cascade Support Vector Machine, tries to use BCSVM algorithm to reduce the error between CSVM and direct training, and proves mathematically that BCSVM algorithm can preserve global support vector with higher probability. The organization of this paper is as follows: Chapter 2 briefly introduces support vector machines and cascade support vector machines; Chapter 3 presents BCSVM and explains it in detail in pseudocode. Chapter 4 proves the validity of BCSVM in a probabilistic way. Chapter 5 further verifies the utility of BCSVM through experiments. The last chapter summarizes the article.

2 Preliminaries

2.1 Support Vector Machines

Support vector machine is a supervised learning model that can be used for classification and is one of the most famous machine learning algorithmsengineering2019 . The principle is to find the classification hyperplane with the maximum edge distance in the feature space to determine the classification of new data.

Given a binary data set with data points , which contains data points and the corresponding label , we call the label samples positive samples, and the others negative samples. All of the samples in the data set are a dimensional vector, which is . Support vector machines are all about finding a dimensional hyperplane to distinguish between two categories in a sample. However, in Euclidean space, real-world data tends to be linearly indivisible. Therefore, in practice, in order to make the two categories distinguishable by hyperplane, data is often mapped to a higher dimensional space . This method is also called kernel trick, which was first proposed by Aizerman et al.aizerman1964 , and then applied to support vector machines by Boser et al.boser1992

2.2 Cascade SVM

The architecture of cascaded support vector machines is shown in Figure 1. Firstly, the algorithm divides the whole dataset into several subsets, trains each subset in parallel in the first layer, and combines the obtained support vectors in pairs as the input of the next layer. In this architecture, one SVM does not need to process the entire training set. If the first layers can filter out most of the non-support vectors, the last layer needs to process very few samples.

The advantage of this algorithm is that each subset only needs to process part of the data, and the training among each subset is independent, which can perform parallel computing well. Therefore, compared with the direct training of the whole data set, cascaded support vector machine can greatly accelerate the training speed. However, since the grouping method is random grouping, there is a high probability of losing the global support vector. That is, there is still a certain gap between the model finally obtained and the model directly trained. And because it adopts binary cascade, there are multiple merge behaviors. If most of the merged support vectors are global support vectors, only a small number of samples can be removed after the merged training, and the training efficiency will be very low. To solve this problem, The SP-SVM proposed by Liu Zeshan et alspark modified the cascade mode in the distributed cluster environment. They integrated the support vectors obtained from each layer of training into a training set, and then conducted group training on the integrated training set. This cascading mode effectively solves the problem of low utilization of cluster resources in a distributed cluster environment. The grouped parallel SVM algorithm is especially suitable for data sets with large number of samples and small support vectors. In this case, the training time and memory consumption show obvious advantages, because most samples can be filtered each time. However, its disadvantages are also obvious. In the case that the support vector occupies the majority of the data set, each segmentation basically cannot filter the sample, and in extreme cases, its training speed will even be slower than direct training.

3 Balance Cascade SVM

Balanced Cascaded Support Vector Machine adopts the idea of group cascade in cascaded support vector machine, combined with SP-SVM in the cascade of each layer of training results, and modified the group mode of cascaded support vector machine on this basis.

The difference between the balanced cascaded support vector machine and the cascaded support vector machine lies in the grouping method. The grouping method adopted by the cascaded support vector machine is random grouping, while the balanced cascaded support vector machine balances the sample proportion in the subset after grouping, that is, the sample proportion in each subset is the same as the original data set. Its pseudocode is shown in algorithm 1. Where classifyNum is the group number of each layer, for example, the number group [4,1] indicates that the first layer is divided into four subsets, and the second layer only has one subset. It should be noted that the last element in classifyNum must is 1, so as to obtain a unique training model. The algorithm firstly separates the training set according to the type of samples, and then divides all kinds of samples into each subset in a balanced way. After that, we train the subsets in parallel and integrate the support vectors, and then train the support vectors in groups, and finally get a unique model.

Compared with CSVM, BCSVM only modified the grouping method of subsets, and the time complexity of the original SMO algorithm was between and , while the time complexity after grouping was ( was the number of samples, was the number of groups), so there was no essential change in its complexity. However, in the application, each subset only needs to process the data in the subset, so the actual training time can be greatly reduced. Generally, in order to speed up the training speed, the program will store the kernel function matrix in memory, so its space complexity is . In the same way as the time complexity, the space complexity does not change substantially after grouping, only changing to . However, most SVM training tools adopt certain strategies to limit memory occupation, for example, LibSVMLIBSVM adopts LRU(Least Recently Used) algorithm to control memory occupation.

4 Prove

Through the research and analysis of SVM training process, there are the following situations in which global support vector is not removed in grouping training:

1. There is no noise in the dataset. Noise is linearly indivisible data. If the data does not exist, then the dataset is linearly separable. Suppose represents the entire data set, represents the support vector, represents any sample other than the support vector, and represents the geometric distance between the sample and the detached hyperplane. There are clearly

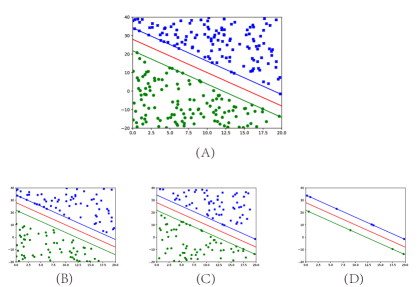

In other words, if the global support vector exists, the global support vector must be the sample closest to the separated hyperplane, that is, it must not be discarded. At the same time, it is shown that if the whole dataset is linearly separable, the optimal training result can be obtained as long as any subset of grouping contains multiple types of samples. As shown in Figure 2, the data set in A is linearly separable, BC shows the training result of randomly dividing the data set in A into two subsets, and D is the retraining of the support vector obtained by summarizing BC training. It can be seen that after BC filtering, d only needs to train fewer samples, and the training results obtained in D are completely consistent with direct training of A. Meanwhile, it was also noted that the training results of BC were consistent with those of A. This happens only when BC contains global support vectors. At the beginning, it is not clear that those samples belong to global support vectors, so the support vectors obtained by BC training should be trained again to get a final result D.

2.Support vector and noise exist simultaneously. Taking the positive sample as an example, when the global positive sample support vector, positive noise (misclassified positive sample) and negative noise (misclassified negative sample) exist at the same time in the subset, the global positive sample support vector will have great probability retention. If the negative noise does not exist, the global positive sample support vector is likely to be lost. See Figure 3. Figure C represents the training results of the linear non-fractional data set, and the samples between the uppermost and bottommost lines are noise points. The dataset in AB is a subset of the dataset in C. In Figure A, the global positive sample support vector and positive noise exist simultaneously, but there is no negative sample. After training, the global positive sample support vector is lost. Figure B also contains global positive sample support vector, positive noise point and negative noise point, and most of the global positive sample support vector is retained after training.

Only in these two cases, the global support vector has a great probability of retention, and the other grouping methods have a great probability of losing the global support vector. In this paper, the samples in the data set are divided into support vector, noise point and ordinary sample. Before the training, only all positive samples and all negative samples are known, and other information about the samples is not known. If grouping is regarded as sampling of samples, both CSVM and BCSVM have the same sampling types of global support vector reservation. The difference lies in that BCSVM has fewer sampling methods than CSVM, so the probability of global support vector reservation of BCSVM is higher than that of CSVM. Taking the positive sample global support vector retention probability in dichotomies as an example, the proof process is as follows, and the specific significance of symbols is shown in Table 1.

| symbol | mean |

| pSv | positive support vector |

| nSv | negative support vector |

| pN | positive noisy |

| nN | negative noisy |

| pDS | common positive data set |

| nDS | common negative data set |

| pT | all positive samples=pSv+pDS+pN |

| nT | all negative samples=nSv+nDS+nN |

| T | all samples=pT+nT |

| m | num of chunking |

| \botrule |

The possible sampling outcomes for case 1 are

Where represents samples randomly selected from the positive sample support vector, and represents the number of samples in each subset.

The possible sampling outcomes for case 2 are

In other words, positive sample support vector, positive noise and negative noise exist simultaneously in the subset. In the case of random grouping, the sampling result of the subset is . Therefore, in the case of random grouping, the probability of retaining the global support vector of positive samples is:

Each subset in the BCSVM contains the same proportion of samples. So the number of positive samples in each subset, the number of negative samples , and obviously . The possible sampling result in this case is , that is, sample from all positive samples and negative sample from all negative samples. Therefore, in the case of uniform grouping, the probability of a positive sample global support vector not being removed is

because of , so obviously that

That is, BCSVM has a higher probability of retaining the global support vector than CSVM. A brief example is shown in Figure 4. ABC adopts the same data set, AB adopts CSVM and BCSVM for training respectively, and the third picture of AB shows the final training results. It can be seen that the training results obtained by using BCSVM are closer to those obtained by direct training without grouping.

5 Experiment

The training sets used in this experiment are two standard datasets, a9a111The a9a dataset contains 213 feature values,32562 training samples and 16281 test samples. https://www.csie.ntu.edu.tw/ cjlin/libsvmtools/datasets/binary.html#a9a and ijcnn1222The ijcnn1 dataset contains 22 feature values,49990 training samples and 91701 test samples. https://www.csie.ntu.edu.tw/ cjlin/libsvmtools/datasets/binary.html#ijcnn1, both of which are dichotomous samples. The training tool for SVM uses LibSVM. Hardware configuration: 16GB RAM, 8-core 1.8GHz base speed processor.

After the data set is read into the memory, a ’shuffle’ operation is performed to ensure that the original sample order of the data set will not interfere with the experiment. During the experiment, both CSVM and BCSVM divided the first data set into 8 parts, and then integrated the obtained support vector into a training set by combining the data cascade mode in SP-SVM. Finally, the set was trained to obtain the final result. The operation of CSVM is to select A sample from the data set after shuffling from the front to the back as A subset. The operation of BCSVM is to first divide the data set into a positive sample data set and negative sample data set, and select B and C samples respectively from the positive and negative sample data set to form A subset. Both data sets are trained by gaussian kernel function, and the same parameter combination is used in different grouping methods.

Figure 5 evaluates the prediction accuracy of direct training, CSVM and BCSVM in different data sets, from which it can be seen that the three different algorithms affect the prediction accuracy. The results obtained by using the same set of parameters are obviously the highest in direct training, and the accuracy of BCSVM is higher than that of CSVM. Direct training without grouping can retain all support vectors in this parameter combination, so the accuracy of both BCSVM and CSVM is obviously smaller than that. BCSVM has a higher probability of retaining a global support vector than CSVM, so the prediction accuracy of model obtained by BCSVM is higher than that of CSVM.

6 Conclusion

In this paper, BCSVM is proposed based on CSVM and SP-SVM data cascade mode. Compared with CSVM, BCSVM retains all its characteristics, and at the same time, BCSVM can retain the global support vector with a greater probability during training. Therefore, the prediction accuracy rate of model obtained by BCSVM is higher than that of CSVM. Due to the relationship between test set and training set, it may also occur that the model accuracy obtained by BCSVM is higher than that of CSVM when the test set is used for accuracy prediction.

Due to the randomness of molecular data set partitioning, results obtained by BCSVM are more likely to be better than those obtained by CSVM, and results obtained by CSVM are less likely to be better than those obtained by BCSVM. Moreover, BCSVM only reduces the error between CSVM and direct training, but does not completely solve the error problem caused by group training. At the same time, the paper does not give the specific value of error reduction. Therefore, the following work focuses on the performance optimization of group training. The model obtained by group training is the same as the model obtained by direct training, so as to solve the problem that the training time of SVM is too long and there will be errors in grouping.

References

- \bibcommenthead

- (1) Vapnik, V.: The Nature of Statistical Learning Theory. Springer, New York (1999)

- (2) Platt, J.C.: Advances in kernel methods. chapter fast training of support vector machines using sequential minimal optimization. MIT Press, Cambridge, MA, USA 3, 185–208 (1999)

- (3) Xiang, W., Li, T., et al.: Research on increasing the computation speed of huge-scale svm training. Pattern Recognition and Artificial Intelligence 16, 46–49 (2003)

- (4) Graf, H., Cosatto, E., Bottou, L., Dourdanovic, I., Vapnik, V.: Parallel support vector machines: The cascade svm. Advances in neural information processing systems 17, 521–528 (2004)

- (5) Peng-xiang, Z., Li-min, L., Zhi-qiang, M.: Research of parallel svm algorithm based on mapreduce. Computer Applications and Software 32, 172–176 (2015)

- (6) Ze-shen, L., Zhi-song, P.: Research on parallel svm algorithm based on spark. Computer Science 43, 238–242 (2016)

- (7) Nie, W., Fan, B., Kong, X., Ma, Q.: Optimization of multi kernel parallel support vector machine based on hadoop. In: 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), pp. 1602–1606 (2016). IEEE

- (8) Yu-xin, B., Xiao-yan, L.: Application research of parallel svm algorithm on flink platform. Journal of Chinese Computer Systems 42, 1003–1007 (2021)

- (9) Sadrfaridpour, E., Razzaghi, T., Safro, I.: Engineering fast multilevel support vector machines. Machine Learning 108(11), 1879–1917 (2019)

- (10) Aizerman, M.A.: Theoretical foundations of the potential function method in pattern recognition learning. Automation and remote control 25, 821–837 (1964)

- (11) Boser, B.E., Guyon, I.M., Vapnik, V.N.: A training algorithm for optimal margin classifiers. In: Proceedings of the Fifth Annual Workshop on Computational Learning Theory, pp. 144–152 (1992)

- (12) Chang, C.-C., Lin, C.-J.: Libsvm: A library for support vector machines. ACM transactions on intelligent systems and technology (TIST) 2(3), 1–27 (2011)