Relative Pose Estimation for Stereo Rolling Shutter Cameras

Abstract

In this paper, we present a novel linear algorithm to estimate the 6 DoF relative pose from consecutive frames of stereo rolling shutter (RS) cameras. Our method is derived under the assumption that the stereo cameras undergo constant-velocity motion around the center of the baseline, and it requires 9 pairs of correspondences on each of the left and right consecutive frames. The stereo RS images enable the recovery of depth maps with the semi-global matching (SGM) algorithm. With the estimated camera motion and depth map, we can correct the RS images to obtain undistorted images without any scene structure assumption. Experiments on both simulated points and synthetic RS images demonstrate the effectiveness of our algorithm in relative pose estimation.

Index Terms— Stereo rolling shutter, relative pose, semi-global matching, rolling shutter correction.

1 Introduction

With the advantages of low cost and simple design, rolling shutter (RS) CMOS cameras [1] are increasingly used in real-world computer vision applications. Unlike their global shutter (GS) counterparts, which expose all pixels simultaneously, RS cameras capture the pixels of each row (or column) at consecutive times. Therefore, when there is relative motion between the scene and the camera during acquisition, the captured image shows rolling shutter effects such as skew or wobble, and the strength of these effects varies with the camera motion and the object motion.

Currently, most geometric algorithms in computer vision are designed under the perspective camera model (i.e. the GS camera model). However, this model fails to handle RS images because of the significant difference between RS and GS cameras. Therefore, geometric algorithms for RS cameras have attracted increasing attention. Meingast et al. [1] introduced the geometric model for RS cameras. Along this direction, many algorithms have been proposed to solve the corresponding multi-view geometric problems. Purkait et al. [2] performed pose estimation under the Manhattan world assumption. Saurer et al. [3] proposed an algorithm based on plane sweeping to recover the depth map and 3D scene for multi-view RS images. Fraundorfer et al. [4] fused information from external sensors such as an IMU to estimate the relative motion, while Lee et al. [5] simplified the pose estimation problem by using a gyroscope. Dai et al. [6] derived a 20-point linear algorithm and a 44-point linear algorithm under different RS models based on generalized epipolar geometry.

It should be noted that all of these works are designed to solve the relative pose of a monocular RS camera. In practice, stereo cameras provide extra benefits over their monocular counterparts, i.e., more accurate motion estimation, depth estimation and the availability of global scale. Existing relative pose estimation methods for stereo cameras such as [7] are all designed for GS cameras. To the best of our knowledge, no previous attempt has been reported on solving the 6 DoF relative pose problem with stereo RS cameras. Motivated by relative pose estimation for a monocular camera [6], we exploit the epipolar geometry to solve the relative pose from consecutive RS frames and present the first relative pose estimation algorithm for stereo RS cameras, as shown in Fig.1. Specifically, we derive a linear solver for the 6 DoF relative pose from consecutive stereo images under a continuous motion model. Solving for the pose requires 9 pairs of correspondences from each of the left and right cameras. The pose of each scanline on each frame can then be computed under the assumption of a constant velocity motion model.

The estimated 6 DoF camera motion also enables the correction of the RS effects, which generally requires the underlying scene structure and the camera motion between scanlines or between views. Existing work with monocular cameras depends on strong assumptions to estimate the scene structure. Lao et al. [8] used at least 4 curves to estimate the camera’s rotation speed to eliminate the RS effect. Zhuang et al. [9] exploited optical flow between successive RS frames, proposing an 8-point algorithm for constant-velocity motion and a 9-point algorithm for constant-acceleration motion, and thereby achieved SfM as well as image rectification. Vasu et al. [10] observed that the RS distortion effect is depth-dependent, and proposed an algorithm that can recover the 3D scene from a set of RS distorted images.

Under our stereo RS camera configuration, we are able to estimate the scene structure without such assumptions. Specifically, we show that, for stereo RS images, the semi-global matching (SGM) algorithm, although designed for stereo GS images, can also accurately estimate the depth map. The depth is then used in the RS image correction to obtain a smoothly corrected image. Experimental results on both simulated points and synthetic RS images demonstrate the effectiveness of our relative pose algorithm and its application in RS image correction.

2 Method

In this section, we establish the model of stereo RS cameras under consecutive frames and derive the linear solver.

2.1 Rolling shutter camera model

First of all, we illustrate the differences between the GS model and the RS model in terms of image formation. GS cameras expose all pixels simultaneously, so the projection can be expressed as $\lambda\mathbf{x} = \mathbf{K}(\mathbf{R}\mathbf{X} + \mathbf{t})$, where $\mathbf{R}$ and $\mathbf{t}$ represent the rotation and translation of the camera in the world coordinate frame respectively, $\mathbf{K}$ denotes the intrinsic calibration matrix, and $\mathbf{X}$ is the 3D point that is projected to $\mathbf{x}$ in the image plane with depth value $\lambda$.

For an RS camera, each scanline corresponds to a different pose when the camera moves during image acquisition. We assume that the camera scans row by row, so both $\mathbf{R}$ and $\mathbf{t}$ can be described as functions of the image row $v$. The projection process under the RS camera model is expressed as:

$$\lambda\mathbf{x} = \mathbf{K}\left(\mathbf{R}(v)\mathbf{X} + \mathbf{t}(v)\right) \tag{1}$$
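To make the row dependence in Eq.(1) concrete, the following is a minimal sketch rather than the implementation used in the experiments. It assumes a constant-velocity motion with a first-order rotation, and it finds the image row by fixed-point iteration, since the row is both an input of the pose and an output of the projection. The names `project_gs`, `project_rs`, `omega` and `vel` are hypothetical.

```python
import numpy as np


def skew(w):
    """Cross-product matrix [w]_x such that skew(w) @ a == np.cross(w, a)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def project_gs(K, R, t, X):
    """Global shutter projection: a single pose (R, t) for the whole image."""
    x = K @ (R @ X + t)
    return x[:2] / x[2]


def project_rs(K, omega, vel, X, n_rows, n_iter=20):
    """Rolling shutter projection with a pose that depends on the image row.

    Assumption: the pose of row v is R(v) = I + [s * omega]_x and
    t(v) = s * vel with s = v / n_rows, i.e. omega and vel are the rotation
    vector and translation accumulated over one full readout.
    """
    v = 0.0  # start from the pose of the first row
    for _ in range(n_iter):
        s = v / n_rows
        R = np.eye(3) + skew(s * omega)
        uv = project_gs(K, R, s * vel, X)
        v = uv[1]  # the projected row selects the pose of the next iteration
    return uv
```

With zero motion, `project_rs` reduces to `project_gs` with the identity pose. For small inter-row motion the iteration typically converges in a few steps.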

Next, we derive our linear solver of the stereo RS cameras under consecutive frames.

2.2 Stereo RS algorithm under consecutive images

As illustrated in Fig.1(b), we use the continuous camera motion model in this paper. For clarity, we denote the left and right camera at the first moment and the second moment as , , , respectively.

We suppose that stereo cameras undergo constant velocity motion among consecutive frames. As shown in Fig.2, we define the total readout time of one frame as and the delay time between consecutive frames as . The stereo cameras are rigidly connected through the baseline, so and of the left and right cameras are identical. and represent the rotation and translation velocities of the first row on , , and . Furthermore, we assume that the stereo cameras move around the center of the baseline, so we set pose of the center of baseline as , and the length of baseline is set as . Therefore, the pose of first row on can be represented as , and the pose of first row on is .

Suppose that the relative motions between consecutive frames are and , the rotation and translation velocities of each row on each frame can be computed through linear interpolation. The pose of row on is represented as:

| (2) |

where , is the readout time ratio, is the total number of rows per frame. Similar to , the pose of row on is expressed as:

| (3) |

Same as before, the pose of row on and pose of row on are:

| (4) |

and

| (5) |
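The linear interpolation over rows can be sketched as follows. This is an illustration under stated assumptions, not the exact expressions of Eq.(2) to Eq.(5): the readout time ratio is taken as the readout time divided by the frame period, the motion is expressed relative to the first row of the first frame, and the half-baseline offset of each camera is left out. The function names are hypothetical.

```python
def row_time(row, n_rows, gamma, frame=0):
    """Capture time of a row, in units of the frame period.

    frame is 0 for the first frame and 1 for the second; gamma is the
    readout time ratio (assumed here: readout time / frame period).
    """
    if not 0 <= row < n_rows:
        raise ValueError("row index out of range")
    return frame + gamma * row / n_rows


def row_motion(row, n_rows, gamma, rot, trans, frame=0):
    """Linearly interpolated motion of a row relative to row 0 of frame 0.

    rot and trans are the rotation vector and translation accumulated over
    one frame period (constant velocity assumed).
    """
    s = row_time(row, n_rows, gamma, frame)
    return [s * r for r in rot], [s * t for t in trans]
```

For example, with 480 rows and a readout time ratio of 1, row 240 of the first frame receives half of the inter-frame motion, while row 0 of the second frame receives all of it.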

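Assuming that the relative rotation between consecutive frames is small, the mapping between the rotation matrix $\mathbf{R}$ and the rotation vector $\mathbf{r}$ can be approximated to first order as $\mathbf{R} \approx \mathbf{I} + [\mathbf{r}]_\times$, where $[\cdot]_\times$ denotes the skew-symmetric cross-product matrix. Therefore, we can develop the essential matrix between and as: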

| (6a) | |||

| (6b) | |||

| (6c) | |||

Consequently, we can reorganize the formulation to get:

| (7) | |||

where ,, . Similarly, we can also sort out the essential matrix between and as:

| (8) | |||

where ,, . The epipolar constraints are expressed as:

| (9a) | |||

| (9b) | |||
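The effect of the small-rotation approximation on an epipolar constraint can be checked numerically. The sketch below is a generic two-view illustration, not the row-dependent essential matrices derived above: it assumes the convention X2 = R X1 + t with essential matrix [t]x R, and the motion values are hypothetical.

```python
import numpy as np


def skew(w):
    """Cross-product matrix [w]_x such that skew(w) @ a == np.cross(w, a)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def rodrigues(r):
    """Exact rotation matrix of the rotation vector r (Rodrigues' formula)."""
    theta = np.linalg.norm(r)
    if theta < 1e-12:
        return np.eye(3)
    S = skew(r)
    return (np.eye(3) + (np.sin(theta) / theta) * S
            + ((1.0 - np.cos(theta)) / theta ** 2) * (S @ S))


def essential(R, t):
    """Essential matrix for the convention X2 = R @ X1 + t."""
    return skew(t) @ R


# Hypothetical small inter-frame motion and one 3D point.
r = np.array([0.01, -0.02, 0.015])
t = np.array([0.10, 0.02, -0.05])
X1 = np.array([0.4, -0.3, 6.0])

R_exact = rodrigues(r)
R_approx = np.eye(3) + skew(r)           # first-order approximation

X2 = R_exact @ X1 + t
x1, x2 = X1 / X1[2], X2 / X2[2]          # normalized image coordinates

res_exact = x2 @ essential(R_exact, t) @ x1
res_approx = x2 @ essential(R_approx, t) @ x1
print(f"residual with exact R:       {res_exact:.2e}")
print(f"residual with approximate R: {res_approx:.2e}")
```

The exact rotation satisfies the constraint up to rounding, while the first-order rotation leaves a residual of second order in the rotation angle, which is why the approximation is only appropriate for small inter-frame rotations.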

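By rewriting Eq.(9a) and (9b) as linear equations in the unknown motion parameters, we obtain $\mathbf{A}_1\mathbf{e} = \mathbf{0}$ and $\mathbf{A}_2\mathbf{e} = \mathbf{0}$ respectively. Combining them yields a single linear equation:

$$\mathbf{A}\mathbf{e} = \mathbf{0} \tag{12}$$

where $\mathbf{A} = [\mathbf{A}_1^T, \mathbf{A}_2^T]^T$, $\mathbf{e}$ is the vector of unknowns, each entry of which is composed of the rotation and translation parameters, and $\mathbf{A}_1$ and $\mathbf{A}_2$ are matrices consisting of known parameters and the coordinates of the corresponding points. In Eq.(12), $\mathbf{e}$ has 27 unknowns. However, we notice that $\mathbf{e}$ contains higher-order terms; these terms do not affect the results, so we omit them. The vector $\mathbf{e}$ then contains 19 parameters. Since $\mathbf{e}$ is determined only up to scale, 18 equations suffice, so a linear 18-point solver exists, i.e. 9 pairs of correspondences between the consecutive left frames and 9 pairs of correspondences between the consecutive right frames. The right singular vector corresponding to the smallest singular value of $\mathbf{A}$ is the solution of Eq.(12). Finally, the rotation and translation can be extracted from $\mathbf{e}$.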
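The singular-vector step for the stacked linear system above can be sketched as follows. As a stand-in for the RS constraints, the example uses the classical two-view GS epipolar constraint, so the unknown vector has 9 entries instead of 19; the data, the motion values and the function names are hypothetical.

```python
import numpy as np


def null_vector(A):
    """Unit vector e minimizing ||A @ e||: the right singular vector of A
    that belongs to its smallest singular value."""
    _, _, vt = np.linalg.svd(A)
    return vt[-1]


def epipolar_rows(x1, x2):
    """Stack one row per correspondence so that row @ E.ravel() == x2^T E x1."""
    return np.stack([np.kron(b, a) for a, b in zip(x1, x2)])


# Hypothetical noise-free data: 12 points seen from two GS views.
rng = np.random.default_rng(0)
X1 = rng.uniform([-1.0, -1.0, 4.0], [1.0, 1.0, 8.0], size=(12, 3))
c, s = np.cos(0.05), np.sin(0.05)
R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
t = np.array([0.2, 0.05, 0.1])
X2 = X1 @ R.T + t

x1 = X1 / X1[:, 2:]                      # normalized image coordinates
x2 = X2 / X2[:, 2:]

e = null_vector(epipolar_rows(x1, x2))
E_est = e.reshape(3, 3)                  # defined up to scale and sign
```

Because the system is homogeneous, the solution is recovered only up to scale and sign, which is consistent with needing one equation fewer than the number of unknowns. With noisy correspondences the same call returns the least-squares solution under a unit-norm constraint.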

2.3 Rolling Shutter Correction

In this section, we employ the Semi-Global Matching (SGM) algorithm [11] to estimate the depth map from the stereo image pair. Combined with the camera pose of each row obtained in the previous section, this depth map allows the RS images to be corrected. First, we use the pose and depth of each pixel in the RS image to back-project each pixel into 3D space, and then reproject the 3D points to the image plane corresponding to the pose of the first row as follows:

| (13a) | |||

| (13b) | |||

We find that, for stereo RS images, a relatively accurate depth map can be obtained by the SGM algorithm, and this depth can be applied in the correction to obtain a smooth undistorted image. Note that the stereo RS cameras enable both 6 DoF camera motion estimation and accurate depth map estimation, which together improve the RS correction.
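The back-projection and reprojection of a single pixel can be sketched as below. This is a minimal illustration under assumptions, not the exact form of Eq.(13a) and (13b): the first-row pose is taken as the identity, the row pose maps first-row coordinates to the coordinates of the camera at the pixel's row, and the depth is the z-coordinate in the row's camera frame. The name `correct_pixel` is hypothetical.

```python
import numpy as np


def correct_pixel(K, uv, depth, R_row, t_row):
    """Map one RS pixel to the image that the first-row pose would observe.

    Assumption: X_row = R_row @ X_0 + t_row, where X_0 is the point in the
    first-row camera frame and X_row is the same point in the frame of the
    camera at the pixel's row. depth is the z-coordinate of X_row.
    """
    ray = np.linalg.inv(K) @ np.array([uv[0], uv[1], 1.0])
    X_row = depth * ray                  # back-project into the row's frame
    X_0 = R_row.T @ (X_row - t_row)      # express the point in the first-row frame
    x = K @ X_0                          # reproject with the first-row pose
    return x[:2] / x[2]
```

Applying the function to every pixel, with the depth taken from the SGM depth map and the pose from the row of that pixel, yields a forward-warped image; pixels without a valid depth cannot be moved and need separate handling.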

3 Experiments

In this section, we evaluate our algorithm on simulated points and synthetic RS images. Note that and are used to measure the translation and rotation errors, respectively.
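As a hedged illustration of how such errors can be computed, the sketch below implements two commonly used angular measures: the geodesic angle between two rotation matrices and the angle between two translation directions. These definitions and the function names are assumptions and may differ from the measures used in the experiments.

```python
import numpy as np


def rotation_error_deg(R_gt, R_est):
    """Geodesic angle (degrees) of the residual rotation R_gt^T @ R_est."""
    cos_angle = (np.trace(R_gt.T @ R_est) - 1.0) / 2.0
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))


def translation_error_deg(t_gt, t_est):
    """Angle (degrees) between the ground-truth and estimated translations."""
    cos_angle = np.dot(t_gt, t_est) / (np.linalg.norm(t_gt) * np.linalg.norm(t_est))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))
```

The clipping guards against cosine values that fall slightly outside [-1, 1] because of rounding. Since a stereo rig provides metric scale, a norm-based translation error would be an equally reasonable choice.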

3.1 Simulation Experiments

To verify the effectiveness of our proposed algorithm, we simulate matching points that satisfy the RS projection model. In the simulation experiments, we set the size of the image as with an 810 focal length. Then, we give the value of relative translation and relative rotation . The baseline length and the readout time ratio are also set. When all parameters are known, the correspondences between and and correspondences between and can be generated. To ensure reliability, each reported value is the mean of 300 repetitions. Moreover, in order to check the robustness and practicability of the algorithm, we do not use RANSAC or nonlinear optimization in the simulation experiments, and we compare our results with those of the GS algorithm [12].

Accuracy versus noise level. First, we add different levels of random Gaussian noise to the image coordinates of the correspondences to evaluate the robustness of our algorithm. Statistical results are shown in Fig.3, illustrating that although the estimation error increases with the noise level, the overall increase is small.

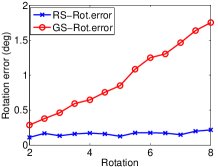

Accuracy versus RS velocity. Then, we test how the RS velocity affects the accuracy of our algorithm. For a constant Gaussian noise level of , we test the performance under increasing rotation and increasing translation respectively, as shown in the second and third columns of Fig.3. It can be observed that, as the velocity increases, our RS algorithm consistently achieves higher accuracy than the GS algorithm in both translation and rotation estimation, and its results remain more stable.

Accuracy versus readout time ratio. To observe how the readout time ratio affects the estimation result, we vary the readout time ratio from 0.2 to 1 according to [13]. Meanwhile, the value of and are kept constant with a fixed Gaussian noise level of . Results are shown in the last column of Fig.3. The estimation errors of both the GS algorithm and the RS algorithm increase with the readout time ratio, but the GS algorithm yields higher errors. This can be explained by the RS effect becoming more evident as the readout time ratio increases.

Accuracy versus baseline length. Finally, we investigate how the baseline length affects performance. We keep the value of and fixed, then increase the baseline from 0.1 to 1. As illustrated in the first column of Fig.3, the baseline length does not have a significant impact on the results.

3.2 Experiments on synthetic RS images

To demonstrate the qualitative results of our relative pose solver, we generate RS images with the simulator and 3D models used in [9]. We first set the ground truth of all parameters such as , and , then we calculate the pose of each row on each frame in Fig.2. According to these poses, the simulator generates GS images, and the RS images are synthesized from these GS images by extracting the corresponding row of pixels from the corresponding GS image. In the experiments, we set the image resolution as with a 1384.6 focal length, the magnitude of is 0.3, and the magnitude of is fixed to . Both translation and rotation include components along the x, y, and z axes. We then use SIFT [14] to extract feature points and RANSAC to reduce the effect of noise on pose estimation. Our experimental results are reported in Fig.4. Fig.4(a) shows the original RS image. Fig.4(c) and (f) show the ground-truth depth of the RS image and the error between the ground-truth depth and the depth estimated by the SGM algorithm. The left part of the depth error map is set to 0 because the SGM algorithm cannot estimate the depth of the leftmost area of the left image. Fig.4(b) shows the image corrected with our method and the depth estimated by the SGM algorithm. In Fig.4(d), we overlay the original RS image and the ground-truth GS image rendered at the pose of the first row; the red area shows the distortion. We then overlay the corrected image and the GS image, as shown in Fig.4(e). Comparing Fig.4(d) and Fig.4(e), we find that the rectified RS image almost coincides with the GS image, except for the border area where the SGM algorithm cannot estimate the depth and a small area where the depth error exceeds 2m. The experiments demonstrate that our proposed algorithm estimates the motion well, and that the SGM algorithm can be applied to stereo RS images to obtain depth values accurate enough for RS image correction.
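The row-extraction step used to synthesize an RS image from GS renderings can be sketched as follows. It assumes that one GS image has been rendered for the pose of every row; the name `synthesize_rs` and the array layout are hypothetical and do not describe the simulator of [9].

```python
import numpy as np


def synthesize_rs(gs_frames):
    """Compose one RS image by taking row v from the GS image rendered at
    the pose of row v.

    gs_frames has shape (n_rows, n_rows, width) or (n_rows, n_rows, width,
    channels); gs_frames[v] is the GS rendering for the pose of row v.
    """
    gs_frames = np.asarray(gs_frames)
    n_rows = gs_frames.shape[1]
    if gs_frames.shape[0] != n_rows:
        raise ValueError("expected one GS frame per image row")
    rows = np.arange(n_rows)
    return gs_frames[rows, rows]         # result[v] == gs_frames[v][v]
```

Rendering one GS image per row is costly for large images; rendering at a coarser set of poses and selecting the nearest one per row would be a cheaper approximation.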

4 Conclusion

In this paper, we have proposed a novel linear relative pose solver for stereo rolling shutter cameras under consecutive frames, using 9 pairs of correspondences for each of the left and right cameras. We showed that, for RS stereo images, the traditional SGM algorithm can be used to estimate the depth map with accurate results. Experimental results on synthetic RS images show that the RS image can be corrected effectively using our proposed relative pose solver and the depth estimated by the SGM algorithm.

Acknowledgements: This research was supported in part by the National Natural Science Foundation of China under Grants 61871325, 61420106007, and 61671387 and the National Key Research and Development Program of China under Grant 2018AAA0102803.

References

- [1] Marci Meingast, Christopher Geyer, and Shankar Sastry, “Geometric models of rolling-shutter cameras,” arXiv preprint cs/0503076, 2005.

- [2] Pulak Purkait, Christopher Zach, and Ales Leonardis, “Rolling shutter correction in Manhattan world,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 882–890.

- [3] Olivier Saurer, Kevin Koser, Jean-Yves Bouguet, and Marc Pollefeys, “Rolling shutter stereo,” in Proceedings of the IEEE International Conference on Computer Vision, 2013, pp. 465–472.

- [4] Friedrich Fraundorfer, Petri Tanskanen, and Marc Pollefeys, “A minimal case solution to the calibrated relative pose problem for the case of two known orientation angles,” in Proceedings of the European Conference on Computer Vision. Springer, 2010, pp. 269–282.

- [5] Chang-Ryeol Lee, Ju Hong Yoon, Min-Gyu Park, and Kuk-Jin Yoon, “Gyroscope-aided relative pose estimation for rolling shutter cameras,” arXiv preprint arXiv:1904.06770, 2019.

- [6] Yuchao Dai, Hongdong Li, and Laurent Kneip, “Rolling shutter camera relative pose: Generalized epipolar geometry,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 4132–4140.

- [7] Alexander Vakhitov, Victor Lempitsky, and Yinqiang Zheng, “Stereo relative pose from line and point feature triplets,” in Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 648–663.

- [8] Yizhen Lao and Omar Ait-Aider, “A robust method for strong rolling shutter effects correction using lines with automatic feature selection,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4795–4803.

- [9] Bingbing Zhuang, Loong-Fah Cheong, and Gim Hee Lee, “Rolling-shutter-aware differential SFM and image rectification,” in Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 948–956.

- [10] Subeesh Vasu, Mahesh MR Mohan, and AN Rajagopalan, “Occlusion-aware rolling shutter rectification of 3D scenes,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 636–645.

- [11] Heiko Hirschmuller, “Stereo processing by semiglobal matching and mutual information,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, no. 2, pp. 328–341, 2007.

- [12] Richard Hartley, “In defense of the eight-point algorithm,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 19, no. 6, pp. 580–593, 1997.

- [13] Sunghoon Im, Hyowon Ha, Gyeongmin Choe, Hae-Gon Jeon, Kyungdon Joo, and In So Kweon, “High quality structure from small motion for rolling shutter cameras,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 837–845.

- [14] David G Lowe, “Distinctive image features from scale-invariant keypoints,” International journal of computer vision, vol. 60, no. 2, pp. 91–110, 2004.